1. Introduction

In computer science, efficient algorithms for optimizing applications ranging from robot control [1] and logistics applications [2] to healthcare management [3] have always evoked great interest. The general aim of an optimization problem is to obtain a solution with a maximum or minimum value. The quality of a solution can often be measured as a fitness value computed by a function, where the search space is too large for a deterministic algorithm to find the best solution within a given amount of time [4]. Optimization algorithms are usually either deterministic, of which there are many in operations research, or non-deterministic, which iteratively and stochastically refine a solution using heuristics. For example, in data mining, heuristics-based search algorithms optimize the data clustering efficiency [5] and improve the classification accuracy by feature selection [6]. In the clustering case, different candidate formations of clusters are tried until one is found to be the most ideal in terms of the highest similarity among the data in the same cluster. In the classification case, the feature subset that is most relevant to the prediction target is selected using heuristic means. What these two cases have in common is that the optimization problem is a combinatorial search in nature. The number of candidate solutions in the search space is enormous, which contributes to the NP-hardness of the problem. The search algorithms that are guided by stochastic heuristics are known as meta-heuristics, which literally means a tier of logic controlling the heuristic functions. In this paper, we focus on devising a new meta-heuristic that is parameter-free, based on the semi-swarming type of search algorithms. The swarming kind of search algorithms are contemporary population-based algorithms where search agents form a swarm that moves according to some nature-inspired or biologically-inspired social behavioural patterns. For example, Particle Swarm Optimization (PSO) is the most developed population-based metaheuristic algorithm, in which the search agents swarm as a single group during the search operation. Each search particle in PSO has its own velocity, and the particles influence one another; collectively, the agents, which are known as particles, move as one large swarm. There are other types of metaheuristics that mimic animal or insect behaviours, such as the ant colony algorithm [7] and the firefly algorithm [8], and some new nature-inspired methods like the water wave algorithm. These algorithms do not always have the agents glued together, moving as one swarm. Instead, the agents move independently, and sometimes, they are scattered. In contrast to PSO, these algorithms are known as loosely-packed or semi-swarm bio-inspired algorithms. They have certain advantages in some optimization scenarios. Some well-known semi-swarm algorithms are the Bat Algorithm (BA) [9], the polar bear algorithm [10], the ant lion algorithm, as well as the wolf search algorithm. These algorithms usually embrace search methods that explore the search space both in breadth and in depth and mimic swarm movement patterns of animals, insects or even plants found in nature. Their performance in heuristic optimization has been shown to be on par with that of many classical methods, including the tight swarm or full swarm algorithms.

However, as optimization problems can be very different from case to case, it is imperative for nature-inspired swarm intelligence algorithms to be adaptive to different situations. The traditional way to solve this kind of problem is to adjust the control parameters manually. This may involve massive trial-and-error to adapt the model behaviour to changing patterns. Once the situation changes, the model may need to be reconfigured for optimal performance. That is why self-adaptive approaches have become more and more attractive for many researchers in recent years.

Inspired by the preying behaviour of a wolf pack, a contemporary heuristic optimization called the Wolf Search Algorithm (WSA) [

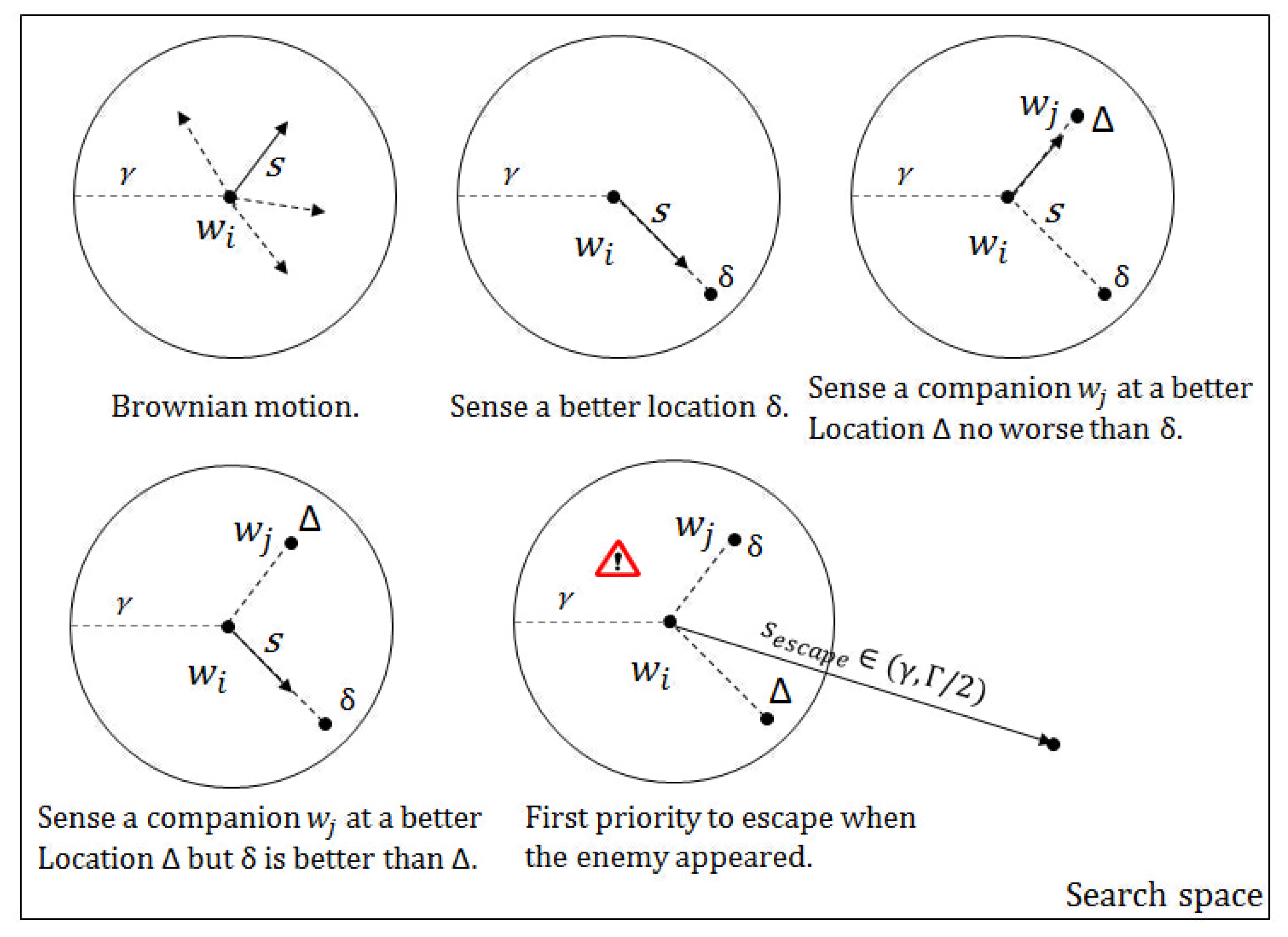

11] was proposed. In the wolf swarm, each wolf can not only search for food individually, but they can also merge with their peers when the latter are in a better situation. By this action model, the search can become more efficient as compared to the other single-leader swarms. By mimicking the hunting patterns of a wolf pack, the wolf in WSA as a search agent can find solutions independently, as well as merge with its peers within its visual range. Sometimes, wolves in WSA are simulated to encounter human hunters from whom they will escape to a position far beyond their current one. The human hunters always pose a natural threat to wolves. In the optimization process, this enemy of wolves triggers the search to stay out of local optima and tries out other parts of the search space in the hope of finding better solutions by the algorithm design. As shown in

Figure 1,

is the current search agent (the wolf) and

is its peer in its visual range

.

and

are locations in the search agent’s visual range;

S is the step size of its movement, and

is the search space for the objective function. The basic movement of an individual search agent is guided by Brownian motion. In this figure, In most of the metaheuristic algorithms, two of the most popular search methods are Levy search and Brownian search. Levy search is good for exploration [

12], and Brownian is efficient for exploiting the optimal solution [

13]. In WSA, both search methods were considered. The Brownian motion is used as the basic movement, and the Levy search is for pack movement.

As a typical swarm intelligence heuristic optimization algorithm, WSA shares a common structure and also a common drawback with other algorithms, namely the heavy dependence of its efficacy on the chosen parameter values. It is hardly possible to guess the most suitable parameter values for the best algorithm performance. These values are either taken from some suggested defaults or manually adjusted. In Figure 1 [14], the parameters’ values remain unchanged during the search operation in the original version of WSA. Quite often, the performance and efficacy of the algorithms for different problems, applications or experiments differ greatly when different parameter values are used. Since there is no golden rule on how the model parameters should be set, and the models are sensitive to the parameter values used, users can only guess the values or find them by trial-and-error. In summary, given that the nature of the swarm search is dynamic, the parameters should be made self-adaptive to the dynamic nature of the problem. Some parameter values may yield the maximum performance at one time, while other values may prove better in the next moment.

In order to solve this problem, the Self-Adaptive Wolf Search Algorithm (SAWSA) modifies WSA with a combination of techniques, such as a random selection method and a core-guided (or global best-guided) method integrated with the Differential Evolution (DE) crossover function as the parameter-updating mechanism [14]. This SAWSA is based on randomization, which clearly is not the best option for the rule-based WSA. Compared with the other swarm intelligence algorithms, the most valuable advantage of the original WSA is its stability. However, even though the average results of the published SAWSA are better than those of the WSA, the stability is weakened. To generate a better schema of the algorithm, the implicit relations between the parameters and the performance should be studied. In this paper, we try to find a way to stabilise the performance and generate a self-adaption-guided part for the algorithm. Furthermore, the coding structure is modified. The new algorithm is denoted as the Gaussian-Guided Self-Adaptive Wolf Search Algorithm (GSAWSA).

The contributions of this paper are summarized as follows. Firstly, the self-adaptive parameter range for WSA is carefully improved. Secondly, the parameter updater is no longer embedded in the main algorithm, and it evolves as an independent updater in this new version. These two changes yield different and better optimization performance than the previous version of SAWSA [14]. To verify the performance of the new model once the changes have been made, the experiments are redesigned with settings that enhance the clarity of the result display. The novelty in this paper is the Gaussian-guided parameter updater method. It is a method based on information entropy theory. To improve the performance of SAWSA further, we propose this novel idea by treating the search agent behaviour as chaotic behaviour, so the algorithm can be perceived as a chaotic system. Using the chaotic stability theory, we can use chaotic maps to guide the operation of the system. Additionally, the entropy value can be used as a measurement of the stability and the inner information communication. In our paper, the feasibility of this new method is analysed, and a suitable map for WSA is found to be a Gaussian map. The advantage of using a Gaussian map as a new method is observed via an extensive simulation experiment.

We verify the efficacy of the considered methods with fourteen typical benchmark functions and compare the performance of GSAWSA with the original WSA, the original bat algorithm and the Hybrid Self-Adaptive Bat Algorithm (HSABA) [15]. The self-adaptiveness is powered by Differential Evolution (DE) in SABA. The concept is based on Particle Swarm Optimization (PSO), which moves in some sort of mixed random order and swarming patterns. PSO is one of the classical swarm search algorithms that often shows superior performance with standard benchmark functions. From our investigations, it is supposed that the parameter control by some entropy function in GSAWSA would possibly offer further improvement. The self-adaptive method is a totally hands-free approach that lets the search evolve itself. Parameter control is a guiding approach that steers the course of parameter changes during runtime.

The remainder of the paper is structured as follows: The original wolf search algorithm and the published SAWSA [14] are briefly introduced in Section 2. The chaos system entropy analysis and Gaussian-guided parameter control method are discussed in Section 3, followed by Section 4, which presents the comparison experiments of both the self-adaptive method and the parameter control method with several optional DE functions. The paper ends after presenting concluding remarks in Section 5.

2. Related Works and Background

Researchers have extended and improved metaheuristic optimization algorithms to a large extent during the past few decades. The classic algorithms have become more and more mature. Many new and efficient algorithms were invented, like those presented in the Introduction. They have been tested and shown to be suitable for real case studies. Researchers from other areas have started to use the metaheuristic algorithms in their studies, as well, because of their ease of use. Lately, self-adaptive methods have become popular, and many works in this direction have been published. The purpose of the self-adaptive methods for metaheuristic algorithms is fitting the same algorithm to different problems by self-tuning the parameter values. In the population-based optimization algorithms, two common parameters are very easy to handle: the search agents’ population and the number of search iterations. These two parameters have an almost linear effect on the performance, so the user can choose them according to the expected level of accuracy and the available calculation resources. However, the other parameters are very different from one another in nature. For the strongly collective swarm algorithms, one typical research direction is the self-adaptive methods for PSO, the idea being to introduce a check and repair operation to every iterative generation of the search [16]. The idea is suitable for the loosely-packed swarm algorithms, as well. The self-adaptive firefly algorithm [17] and the hybrid self-adaptive bat algorithm [15] have both shown that the parameter control method makes a great contribution to the search performance. In this paper, as we want to introduce a new parameter control method for WSA, we will first introduce the original WSA and some related self-adaptive methods. Then, this method could potentially be applied to the other swarm algorithms, as well.

2.1. The Original Wolf Search Algorithm

The WSA is a relatively young, but efficient member of the family of swarm intelligence algorithms. The logic of the WSA search agents is inspired by the hunting behaviour of wolf packs. When preying, wolves use both cooperation and individual work [11]. The wolf applies both a local search and a global communication at the same time. To transfer the wolf swarm preying behaviour into a computing method, some basic rules of the WSA are formulated as below.

Each wolf search agent has a specific visual range, defined by Equation (1). This equation involves the position of the current search agent, the position of a nearby search agent within the visual range and the order of the hyper space.

The result of the objective function, which is the fitness value produced from benchmark functions, is used as the measurement of the local position of each search agent (wolf). The search agent can move towards a better location by communication with other agents. Two situations can be found here. One is that the wolf can sense a neighbour with a better location in its visual range. Then, the wolf will move directly towards it. Another situation is that the wolf cannot sense any better peers. Then, the wolf will try to find a better location using a random Brownian movement.
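The two movement cases described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the visual range test is assumed to use a Minkowski distance whose order plays the role of "the order of the hyper space", the approach towards a better peer is simplified to a straight move of at most one step length, and the names `minkowski_distance`, `move_wolf`, `visual_range` and `step` are hypothetical, not taken from the original WSA code.

```python
import numpy as np

def minkowski_distance(a, b, order=2.0):
    """Minkowski distance of the given order between two positions (assumed metric)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.sum(np.abs(a - b) ** order) ** (1.0 / order))

def move_wolf(i, positions, fitness, visual_range, step, rng, order=2.0):
    """Return a candidate new position for wolf i (minimization is assumed)."""
    x = positions[i]
    # Peers that are inside the visual range and strictly better than wolf i.
    better = [
        j for j in range(len(positions))
        if j != i
        and minkowski_distance(x, positions[j], order) <= visual_range
        and fitness[j] < fitness[i]
    ]
    if better:
        # Case 1: approach the best visible peer, moving at most `step`.
        target = positions[min(better, key=lambda j: fitness[j])]
        direction = target - x
        dist = np.linalg.norm(direction)
        if dist == 0.0:
            return x.copy()
        return x + direction * min(1.0, step / dist)
    # Case 2: no better peer in sight, so take a random Brownian (Gaussian) step.
    return x + step * rng.standard_normal(x.shape)
```

In a full search loop, the candidate position would only replace the current one if its fitness is better, as described in the text.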

To avoid local optima, an escape strategy is introduced to the WSA. An enemy is randomly generated in the search space. If a wolf search agent senses the presence of an enemy, it will jump from its current position to a new position very far away. The escape function requires a user input parameter and generates a new location for an escaped wolf; it is given in Equation (2) [14]. The function depends on a measure of the search space range, which is based on the given upper and lower bounds of the variables, and on the escape probability. The behaviour control parameters are listed in Table 1.

An example of the wolves’ preying behaviour is illustrated in

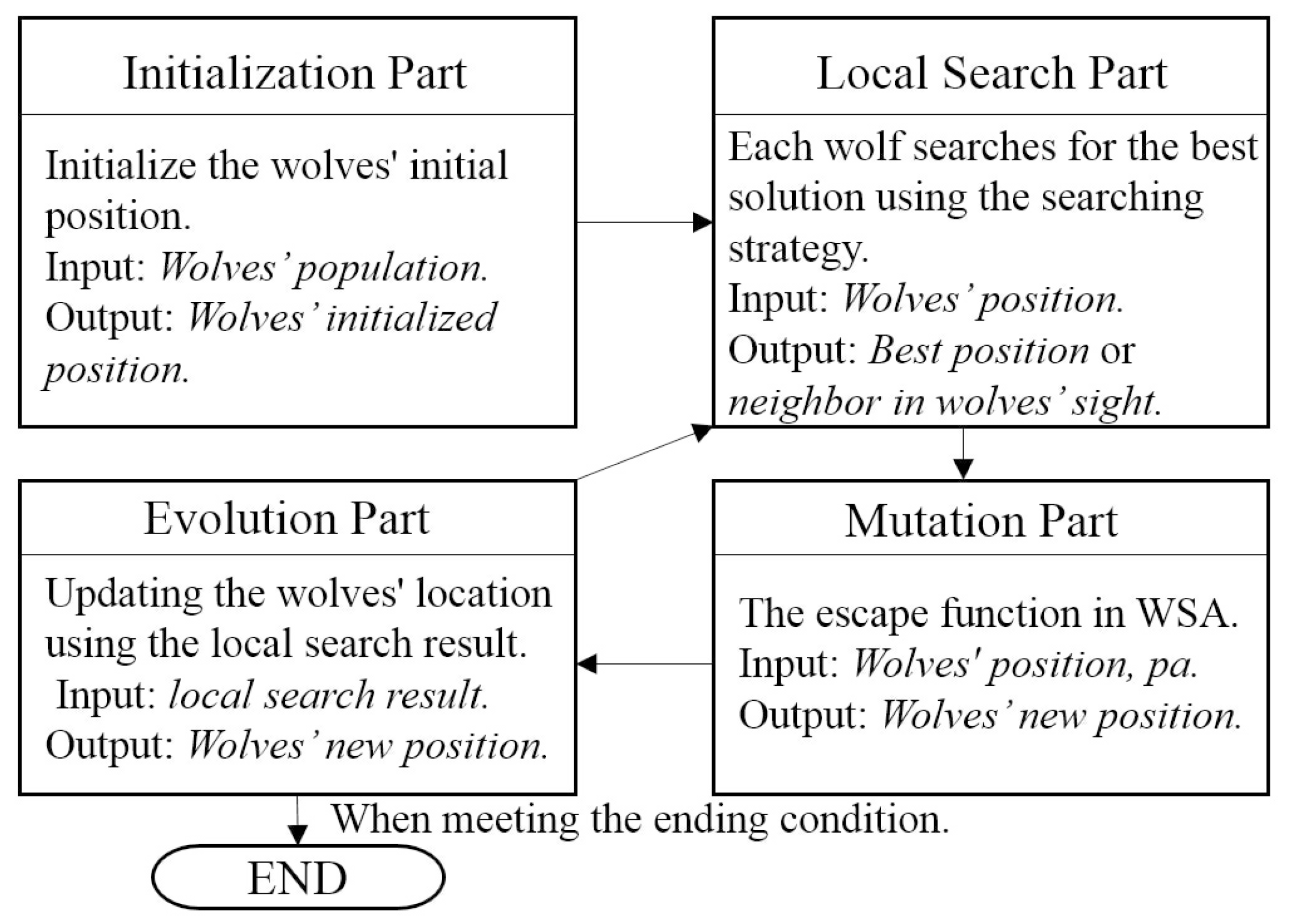

Figure 1. The original WSA consists of four main parts, which are shown as the four blocks in

Figure 2.

The initialization process is important for the original WSA. This is when all the parameters are set to some values. Once the parameters are set, the algorithm proceeds to search for solutions iteratively according to the parameter values, which are fixed throughout the runtime. For all iterative metaheuristics, the parameters control how the iterative search proceeds, such as how the solution evolves, how new candidate solutions are discovered and how fitter new solutions replace the old ones according to some rules coded in the algorithm. For the evolution part, the wolves’ location update function can be summarized by Equation (3).

2.2. The Self-Adaptive Wolf Search Algorithm

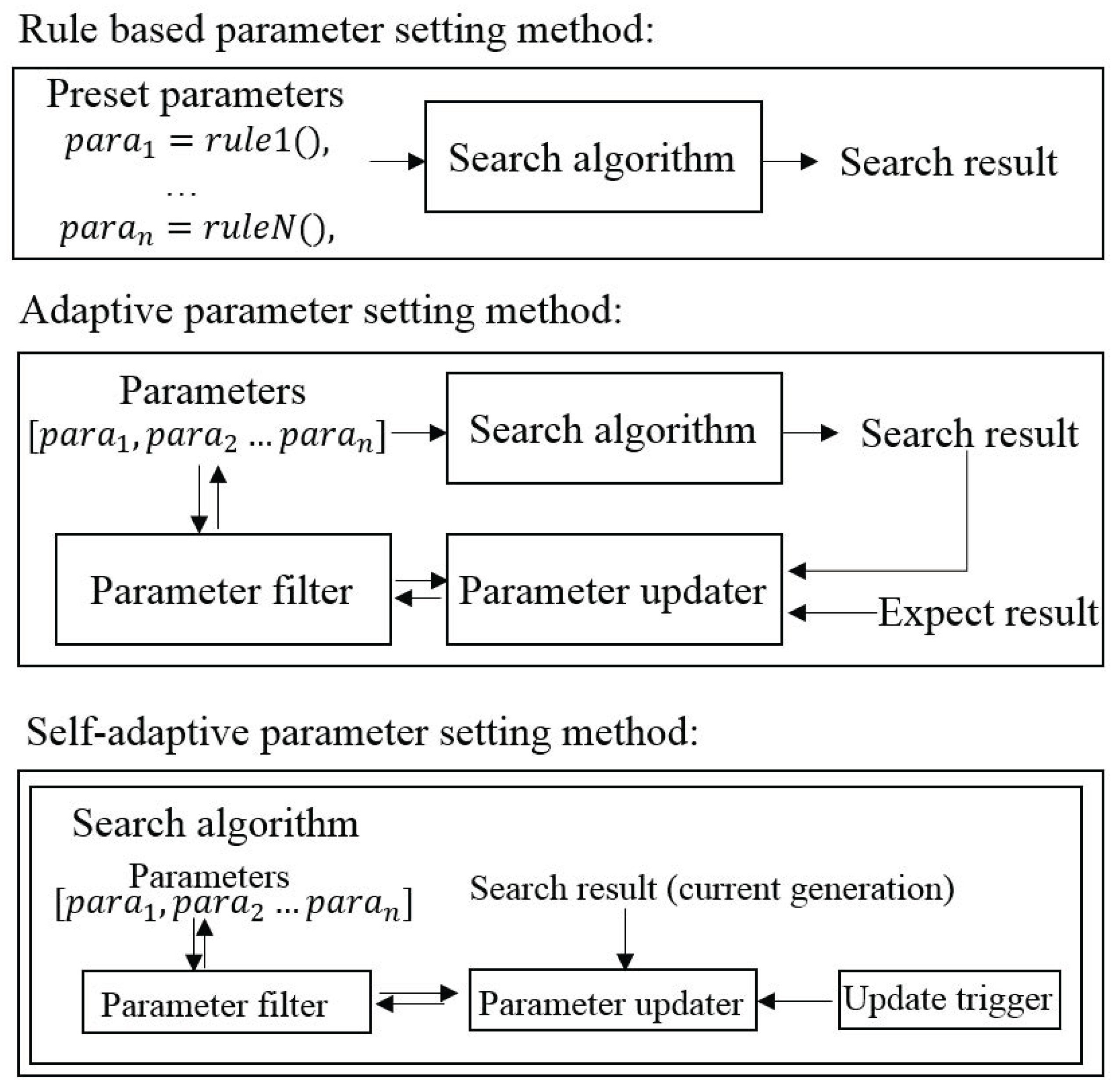

Parameter training is very important for the performance. Apart from the approach based on preset static parameter values, three parameter control methods are commonly used. The first is the rule-based parameter setting method. The second is the feedback adaptive method, where the parameters are made adaptive to the feedback from the result of the search algorithm [18]. The third is the free self-adaptive method, where the parameters can be freely changed during the algorithm run time [15]. The adaptive and the self-adaptive methods can be considered more user friendly, as users do not need to know how to set the parameters or the rules by themselves. Of these two methods, the self-adaptive one has turned out to be more popular in research, because the search agents’ situation and the local fitness landscape can differ dynamically from generation to generation during the algorithm run. These three methods are conceptually shown in Figure 3.

In 2014, the original bat algorithm was hybridized with a differential evolution strategy, called the DE strategy; the result was published in [19] and is known as the Hybrid Self-Adaptive Bat Algorithm (HSABA). The working logic of HSABA is briefly listed as follows:

Execute the local search using the DE strategy. A self-adaptation rate, r, should be present, which states the ratio of self-adaptive bats to the whole population of bats. In [19], a ratio of 1:10 was used for the number of self-adaptive bats.

Choose four virtual bats randomly from the population, each of which was initialized with a new position.

Apply the DE strategy to improve the candidate solution.

The WSA was designed for solving complex problems, and its advantage in efficiency becomes more apparent when the search space dimension grows. Unlike HSABA, our SAWSA takes the calculation cost into account in the evolution. In contrast to SABA, the SAWSA uses the DE functions instead of embedding the parameters into the search agents. DE functions are a kind of well-developed local search and update function with very low calculation cost.

In the SAWSA, two kinds of self-adaptive methods are used. The first one is called the core-guided one, which is related to the HSABA. During the parameter updating process, HSABA considers the current global best solution together with four selected bats using a DE function [15], which is depicted in Equation (4). In this equation, the scaling factor is a real-valued constant, which adjusts the amplification of the differential variation.
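As an illustration of a global best-guided DE update built from four selected agents, the sketch below uses the common DE/best/2 mutation. Equation (4) is not reproduced in this text, so the exact form is an assumption, and the names `de_best_2`, `population`, `best` and `scale` (the real-valued amplification constant) are hypothetical.

```python
import numpy as np

def de_best_2(population, best, scale, rng):
    """DE/best/2 mutation (assumed form): perturb the global best
    with two scaled difference vectors built from four random agents."""
    # Pick four distinct agents at random from the population.
    r1, r2, r3, r4 = rng.choice(len(population), size=4, replace=False)
    return best + scale * (
        population[r1] + population[r2] - population[r3] - population[r4]
    )
```

A larger `scale` amplifies the differential variation and therefore widens the jump around the current global best, while a value near zero keeps the candidate close to it.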

The other approach is fully random, which is therefore called the random selection DE method, and a simple description is as follows [14]:

Randomly select a sufficient number of search agents.

Apply the DE functions on the crossover mechanism.

Determine if updating the parameters is needed by looking into the current global best solution.

Load new values from some allowable range into the parameters.

The pseudocode of the published SAWSA is shown in Algorithm 1. Its notation covers the wolf population, the global fitness values of each wolf, the objective function, the number of parameters (four in the case of WSA), the control parameters, the lower and upper bounds for the parameters, the update probability of the parameters and the self-adaptation probability.

| Algorithm 1: The self-adaptive wolf search algorithm. |
| --- |
| ![Entropy 20 00037 i001]() |

3. Gaussian-Guided SAWSA Based on Information Entropy Theory

For the self-adaptive method, the parameter boundaries constitute crucial information [20]. Following the parameter definitions, extensive testing was carried out to find the possible values or ranges for the parameters. Some validated parameter boundaries for SAWSA are shown in Table 2. In the previously-proposed self-adaptive wolf search algorithm, the parameter boundaries are the only limitations of the parameter updating. Clearly, this is not good enough because the ideal parameter control should follow the same patterns such that the changing of the parameter will not affect the algorithm performance.

Just like other classic probabilistic algorithms [

21], a mathematical model of WSA is hard to analyse with formal proofs given its stochastic nature. Therefore, we use extensive experiments to gain insights from the experiences with WSA parameter control. To examine the effects of each parameter, the static step size

s, the velocity factor

, the escape frequency

and the visual range

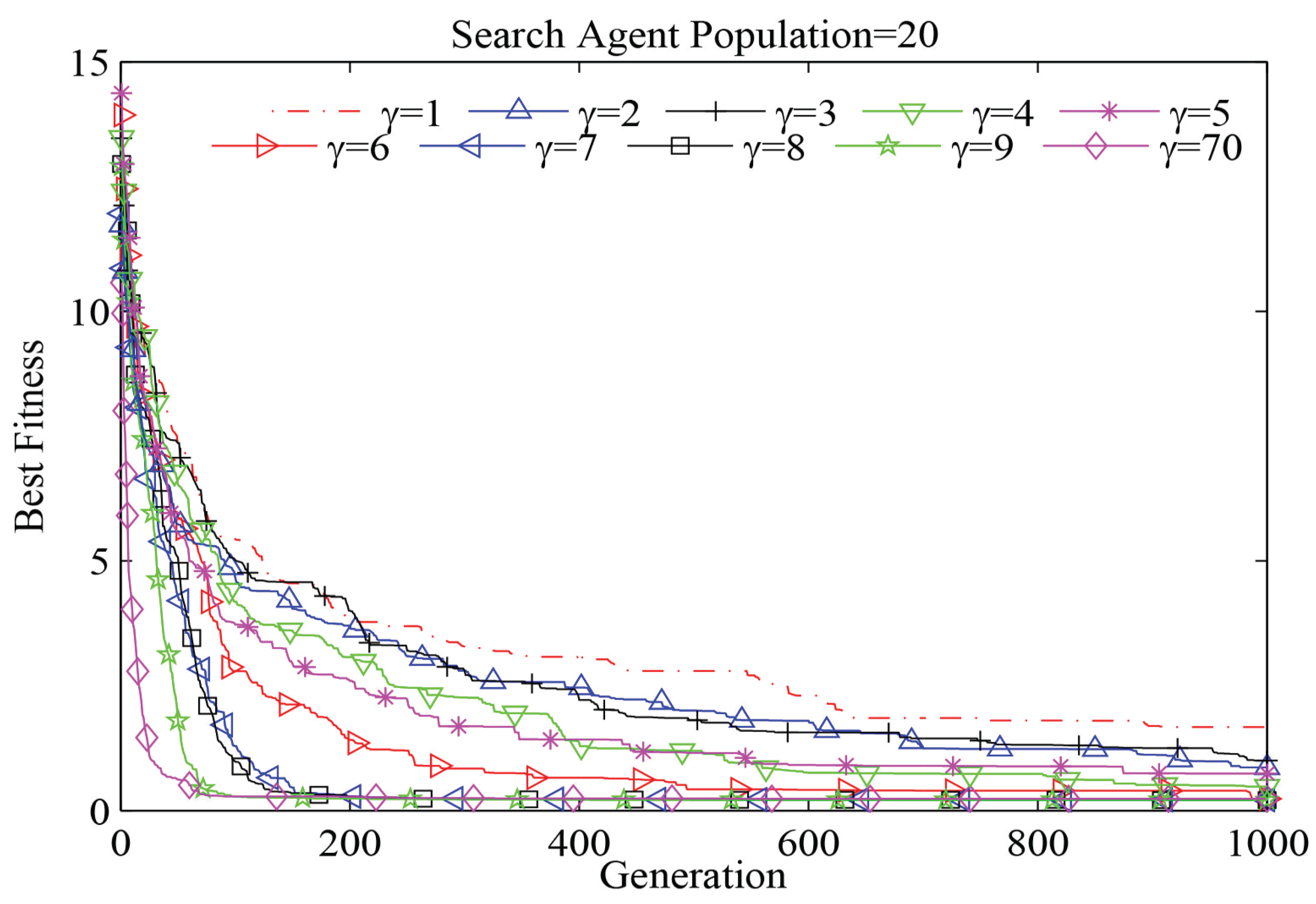

as model variables are used. The results show that already a small change of the parameter limits can obviously affect the performance. When the parameter boundaries are well defined, the performance can hardly be affected. Thereby, it provides consistent optimization performance. In

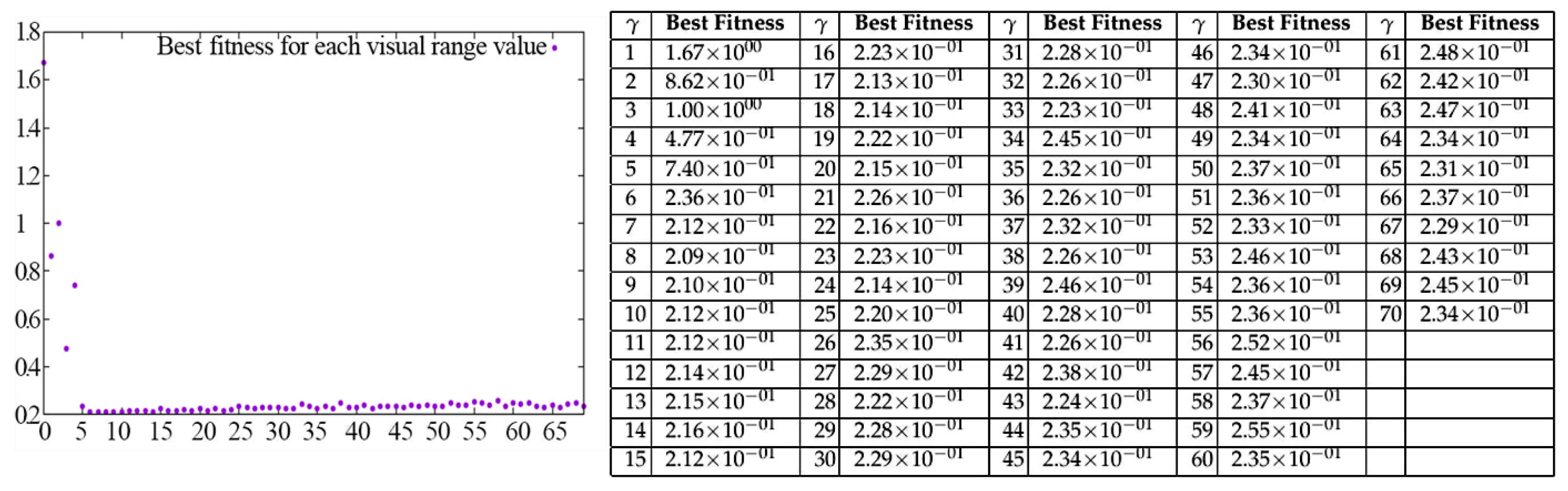

Figure 4, an example with the Ackley 1function for

is shown. Each curve in this figure is the average convergence curve of 20 individuated results (experiment repetitions). Here, the static parameters are

,

and

. The parameter

changes from one to

with a step size of one. Here, as an example, we only evaluate parameter

. The other parameters follow a similar pattern.

In Figure 4, most of the 70 curves overlap because changing the visual range does not bring much improvement. Only within a limited sub-range is the improvement obvious. It can be clearly seen that, for the Ackley 1 function, updating the visual range over its whole definitional domain is possible, but not necessary. The best solution comes from updating it within a reasonable range, where a large improvement can be obtained with the least number of attempts. By analysing the distribution of the best fitness values with the corresponding visual range values in Figure 5, we can conclude that the best improvement is obtained by approaching from the lower bound. This pattern appears in our experiments with other benchmark functions, as well. Therefore, the experiments yield a more reasonable updating domain for the visual range.

Another pattern can be seen in Figure 5, as well: the best parameter solution is always located in a small range. If we find a sufficiently suitable parameter value, the best way to update is to do so within a certain range, which means the next generated parameter should be guided by the previous one. By using this method, we can avoid unstable performance and save the time spent on random guesses. The challenge now is to choose a suitable entropy function to guide the parameter control approach.

However, as a probability-based algorithm, the current optimization state can influence the results in the next generation, while the future move is highly unpredictable. This model behaviour can be described by the theory of chaos [22], and the parameter update behaviour can be treated as typical chaotic behaviour: it resembles random updating with uncertain, unrepeatable and unpredictable behaviour in a certain described system [23]. Chaotic phenomena have been taken into account for population control, hybrid control and initialization control in metaheuristic algorithm studies [24] and in other data science topics [25]. In our study, entropy theory is used to control the parameter self-update. The reason is analysed below.

The individual parameter update behaviour is unpredictable by the updater; however, as a chaotic system, its stability can be measured. Chaos and entropy theory have been used in many schemes [26]. The entropy or entropy rate is one of the efficient measures for the stability of a chaotic system.

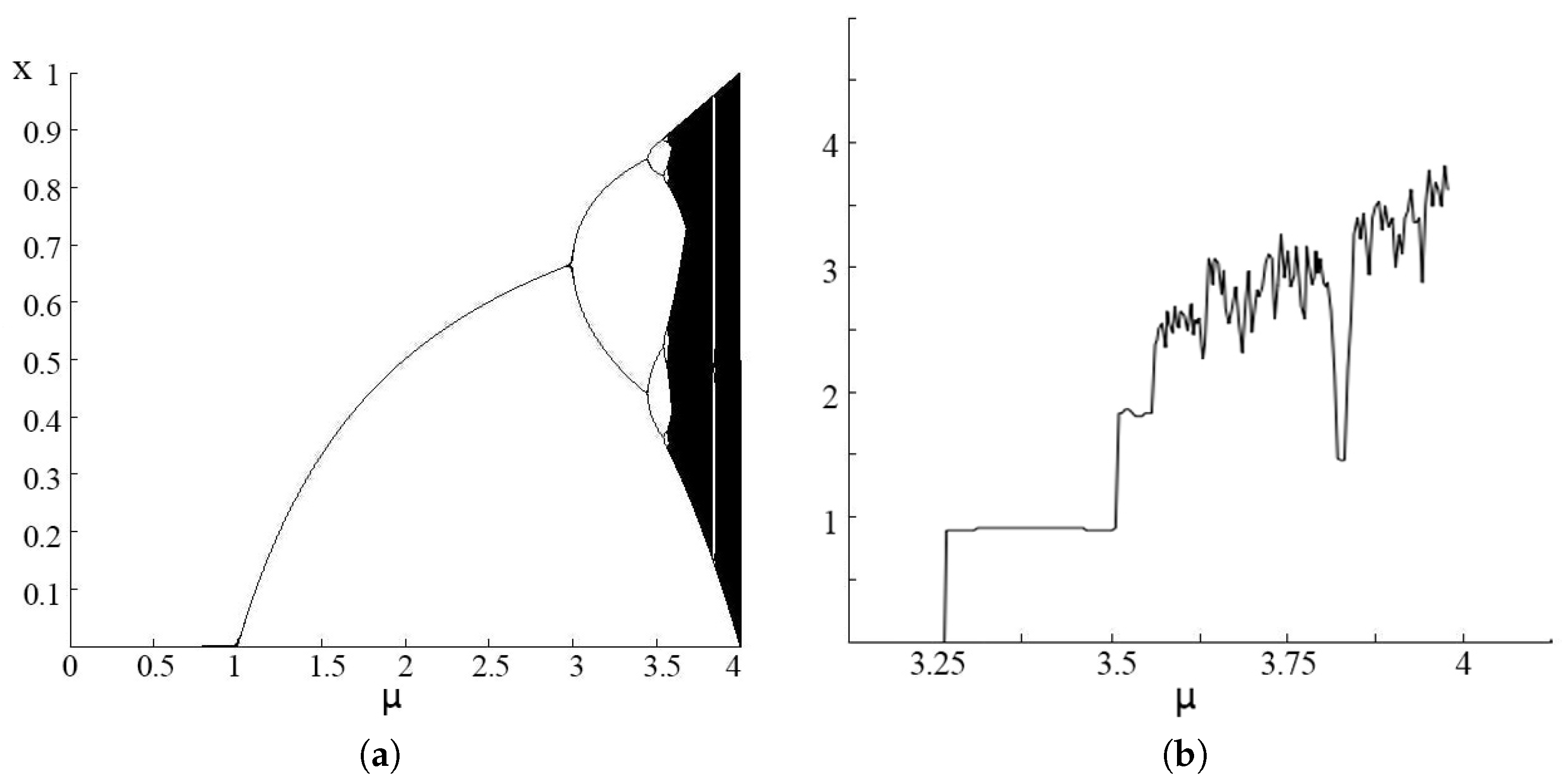

The logistic map is one of the most famous chaos maps [27]. It is a polynomial mapping of degree two, written as a recurrence relation. It is often cited as an archetypal example of how chaotic and complex a behaviour can become after evolving from some straightforward non-linear dynamical equations. In our paper, the logistic map is used as an example. The map is defined by Equation (6) [28]:

$$x_{n+1} = \mu x_{n} (1 - x_{n}) \qquad (6)$$

This map is one of the simplest and most widely-used maps in chaos research, and it is also very popular in swarm intelligence. For example, in water engineering, chaos PSO using the logistic map provides a very stable solution to design a flood hydrograph [29], and it was also used in a resource allocation problem in 2007 [30]. Equation (6) is the mathematical definition of a logistic map; it gives the possible iteration result of a non-linear problem. To analyse the stability of this map, we can calculate the entropy by using Equation (7).
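To make the link between the logistic map and an entropy measure concrete, the following sketch iterates the map and estimates the Shannon entropy of the visited values from a histogram. Equation (7) is not reproduced in this text, so the histogram-based Shannon entropy used here is an assumption, as are the names `logistic_orbit`, `shannon_entropy`, the bin count and the burn-in length.

```python
import numpy as np

def logistic_orbit(r, x0=0.2, n_iter=5000, burn_in=500):
    """Iterate x <- r * x * (1 - x) and return the values after a burn-in."""
    x = x0
    orbit = np.empty(n_iter)
    for k in range(burn_in + n_iter):
        x = r * x * (1.0 - x)
        if k >= burn_in:
            orbit[k - burn_in] = x
    return orbit

def shannon_entropy(samples, bins=50):
    """Shannon entropy (in bits) of a histogram of samples on [0, 1]."""
    counts, _ = np.histogram(samples, bins=bins, range=(0.0, 1.0))
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))
```

For a growth rate with a single stable fixed point, all samples fall into one bin and the estimated entropy is zero; in the chaotic regime the orbit spreads over many bins and the entropy grows accordingly.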

The entropy evaluation for the logistic map and the bifurcation diagram are shown in Figure 6. It is clear that the most probable iteration solutions are located near the upper or lower bound of the definition domain. Compared with the entropy value, the larger the entropy is, the larger the range of the distribution that the logistic map provides for the corresponding map parameter, which information theory interprets as larger information communication. The logistic map provides a usable way to solve the chaos iteration problem. However, it is not the best way for swarm intelligence, because many experiments have shown that the best solution is hardly ever located near the boundary. Therefore, we consider using another chaos map, which can provide the same stability with a more reasonable iteration outcome.

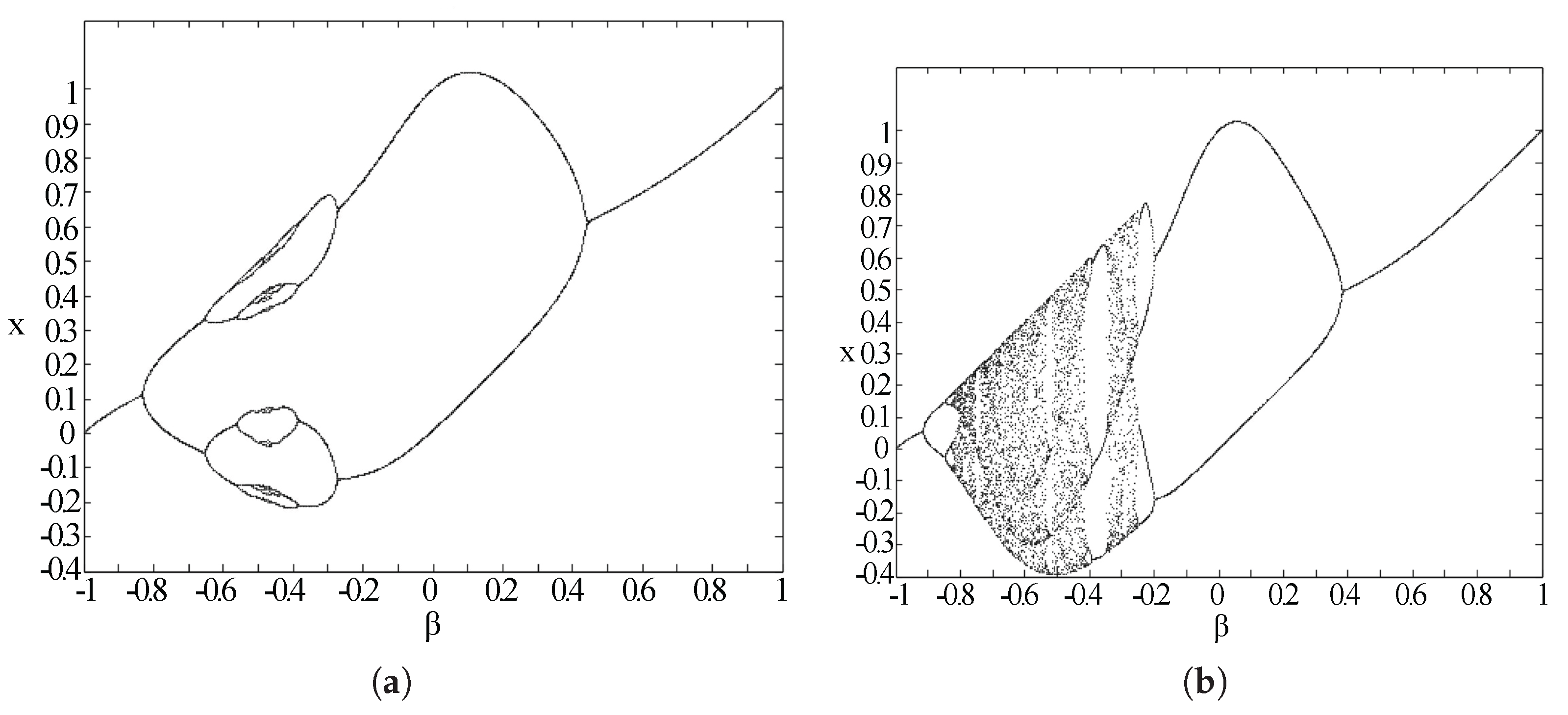

The Gauss iterated map, which is popularly known as the Gaussian map or mouse map, is another type of nonlinear one-dimensional iterative map, given by the Gaussian function [31] in mathematics:

$$f(x) = e^{-\alpha x^{2}}$$

With this function, the Gaussian map can be described as the equation:

$$x_{n+1} = e^{-\alpha x_{n}^{2}} + \beta$$

where $\alpha$ and $\beta$ are real-valued constants. The bell-shaped Gaussian function is named after Johann Carl Friedrich Gauss. The shape of the map is similar to that of the logistic map. As the Gaussian map has two parameters, it provides more control over the iteration outcome, and its definition domain can meet more needs than that of the logistic map. The next step is to choose the most stable and suitable model for use in parameter control. The goal of this step is to satisfy the stability requirement of a chaos system, while keeping a higher entropy value for more information communication in the system.

The stability of the chaotic maps is defined by the theorem below [32]:

Theorem 1. Suppose that the map $x_{n+1} = f(x_{n})$ has a fixed point at $x^{*}$. Then, the fixed point is stable if $|f'(x^{*})| < 1$, and it is unstable if $|f'(x^{*})| > 1$.

The stability analysis of the Gaussian map is shown below.

Using Theorem 1, the stability of the Gaussian map can be determined from its parameter values. From the analysis, the stability depends on only one of the two map parameters, and the Gaussian map moves to stable regions when this parameter satisfies the stability condition. Figure 7 shows the possible iterative outcomes, visualizing the effects of different parameter values. As shown in Figure 8, this map exhibits period-doubling and period-undoubling bifurcations. Considering the parameter iteration needs in our program, and based on the entropy theory, the final map parameters are fixed at values that provide both stability and enough inner information change.
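The fixed-point criterion of Theorem 1 can be checked numerically for a Gaussian map. The sketch below assumes the standard form of the map, exp(-alpha * x^2) + beta, locates a fixed point by bisection and then tests the magnitude of the derivative there. The function names and the bracketing interval are hypothetical choices for illustration, and the parameter values in the usage note are arbitrary, not the ones selected in this paper.

```python
import math

def gauss_map(x, alpha, beta):
    """One step of the Gauss iterated map (standard form, assumed here)."""
    return math.exp(-alpha * x * x) + beta

def gauss_map_derivative(x, alpha):
    """Derivative of the map with respect to x."""
    return -2.0 * alpha * x * math.exp(-alpha * x * x)

def find_fixed_point(alpha, beta, lo, hi, tol=1e-12):
    """Bisection on g(x) = f(x) - x; requires a sign change of g on [lo, hi]."""
    g = lambda x: gauss_map(x, alpha, beta) - x
    if g(lo) * g(hi) > 0:
        raise ValueError("g does not change sign on the bracket")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(lo) * g(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)

def is_stable(x_star, alpha):
    """Theorem 1: the fixed point is stable if |f'(x*)| < 1."""
    return abs(gauss_map_derivative(x_star, alpha)) < 1.0
```

For instance, with `beta = 0` and the bracket `[0, 1]`, the fixed point found for `alpha = 1.0` passes the stability test, whereas the one found for `alpha = 4.9` does not, which illustrates how the map parameters decide between convergent and oscillating behaviour.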

Using this Gaussian map, the parameter iteration is changed from the previous update rule to a Gaussian-map-guided rule, in which the two map parameters are set as above for generating modified parameter values for WSA within the specified boundaries. If this rule leads to negative values in our algorithm, we simply use the absolute value for the parameter update, as all used parameter values should be positive.
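One possible reading of such a guided update is sketched below. The exact update equations are not reproduced in this text, so everything here is an assumption made for illustration: the current parameter value is normalized to the unit interval, passed through the standard Gauss iterated map, made non-negative with the absolute value and then rescaled into the allowed range. The names `gaussian_guided_update`, `lower` and `upper` are hypothetical.

```python
import math

def gaussian_guided_update(value, alpha, beta, lower, upper):
    """One Gaussian-map-guided update of a single control parameter
    (illustrative sketch, not the paper's exact rule)."""
    span = upper - lower
    # Normalize the current value so that the map operates on [0, 1].
    x = (value - lower) / span
    # Gauss iterated map; the absolute value keeps the result non-negative.
    x_next = abs(math.exp(-alpha * x * x) + beta)
    # Clamp to the unit interval, then rescale into the parameter bounds.
    x_next = min(max(x_next, 0.0), 1.0)
    return lower + x_next * span
```

Because each new value is computed from the previous one, successive parameter values follow the map's trajectory instead of being drawn independently at random, which is the guiding idea described above.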

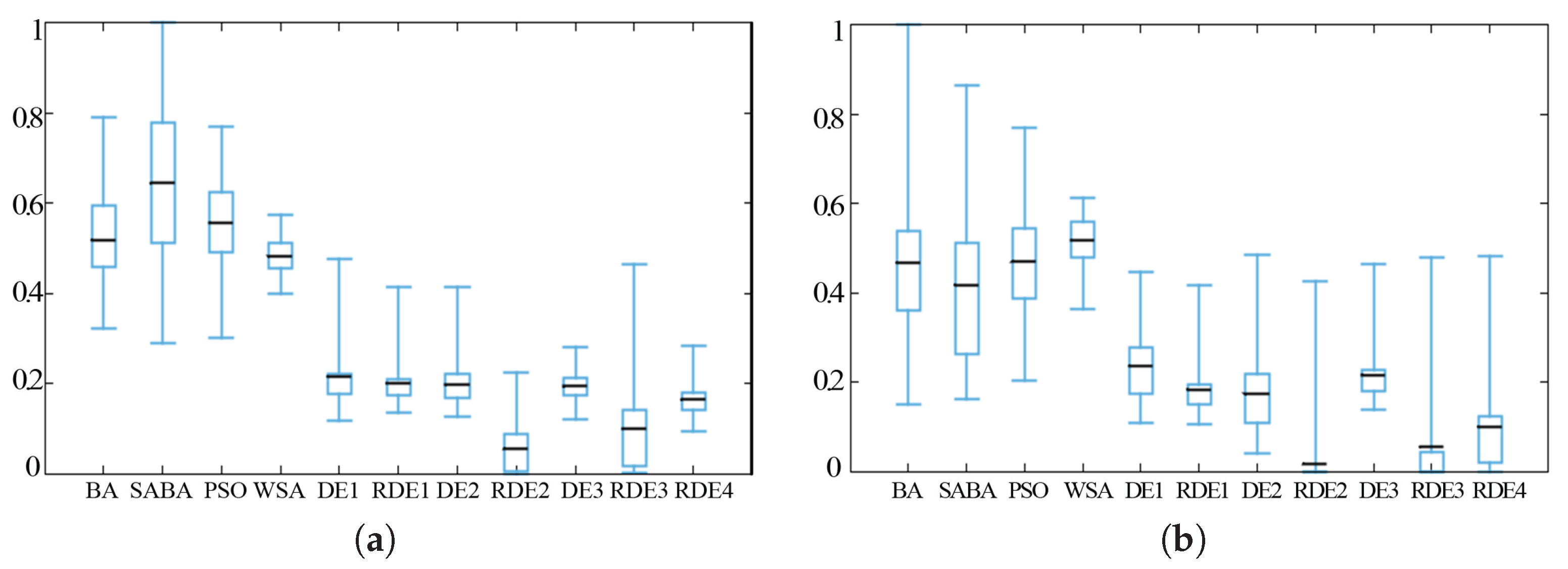

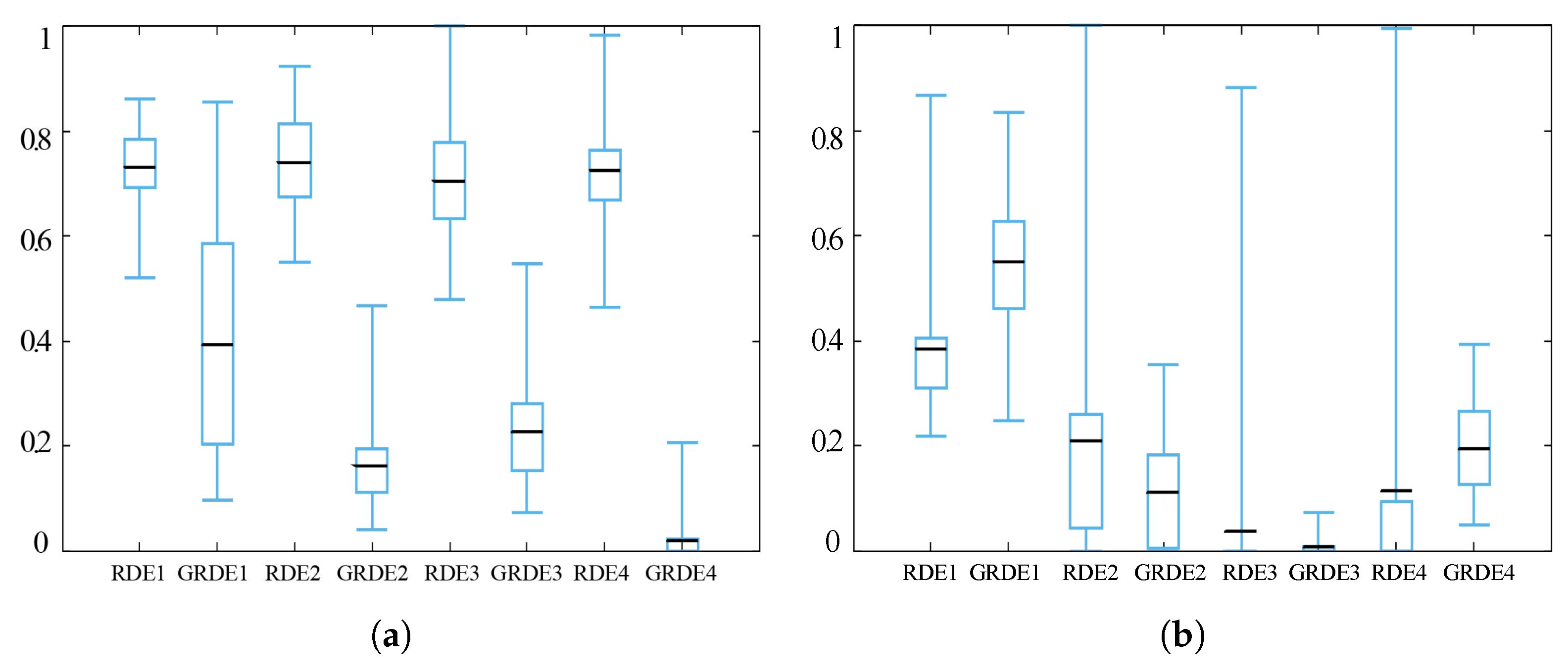

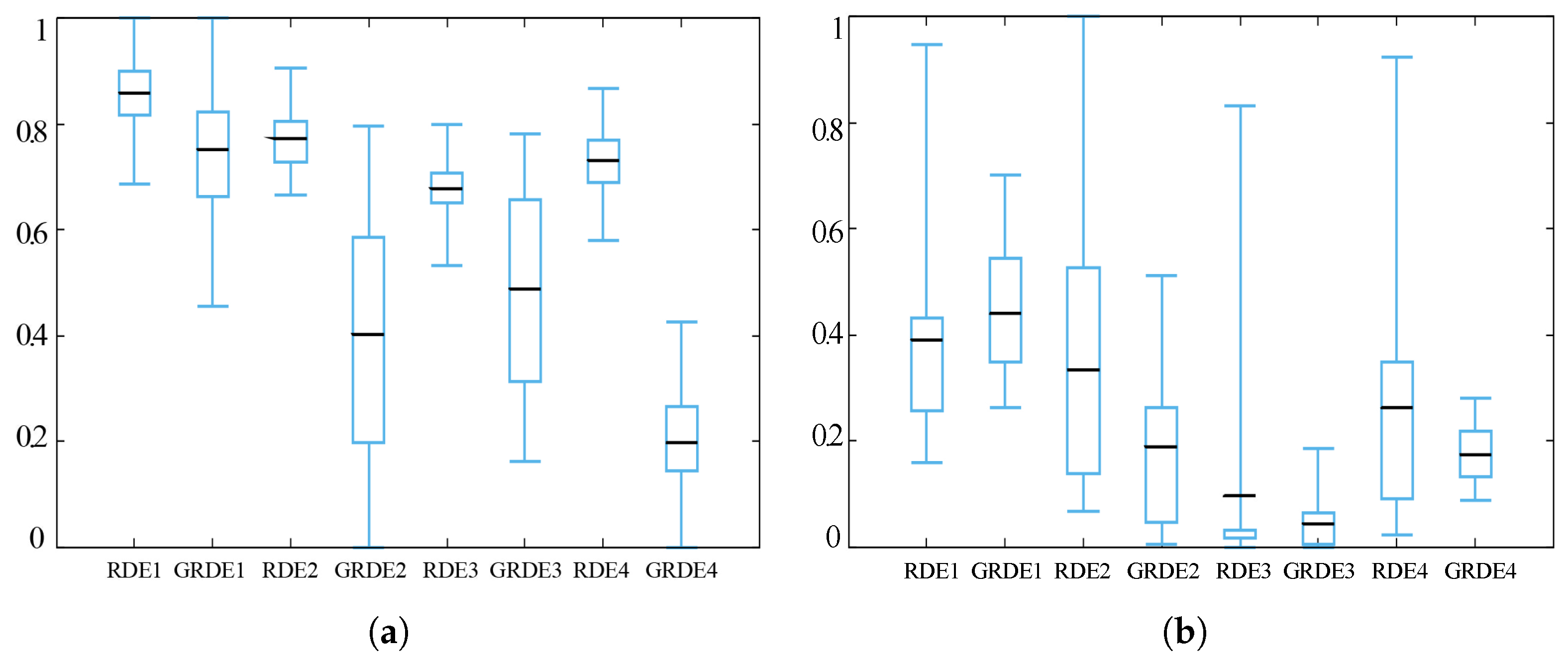

Our experiments show that this parameter control method offers more stability and better performance compared to the previously proposed SAWSA. The parameter control mechanism is added to the original random selection-based SAWSA, as it performs much better than the core-guided DE, which is strongly based on the global best agent (this experiment result is shown in the next section). With this modification, the final version of the Gaussian-guided SAWSA is generated.

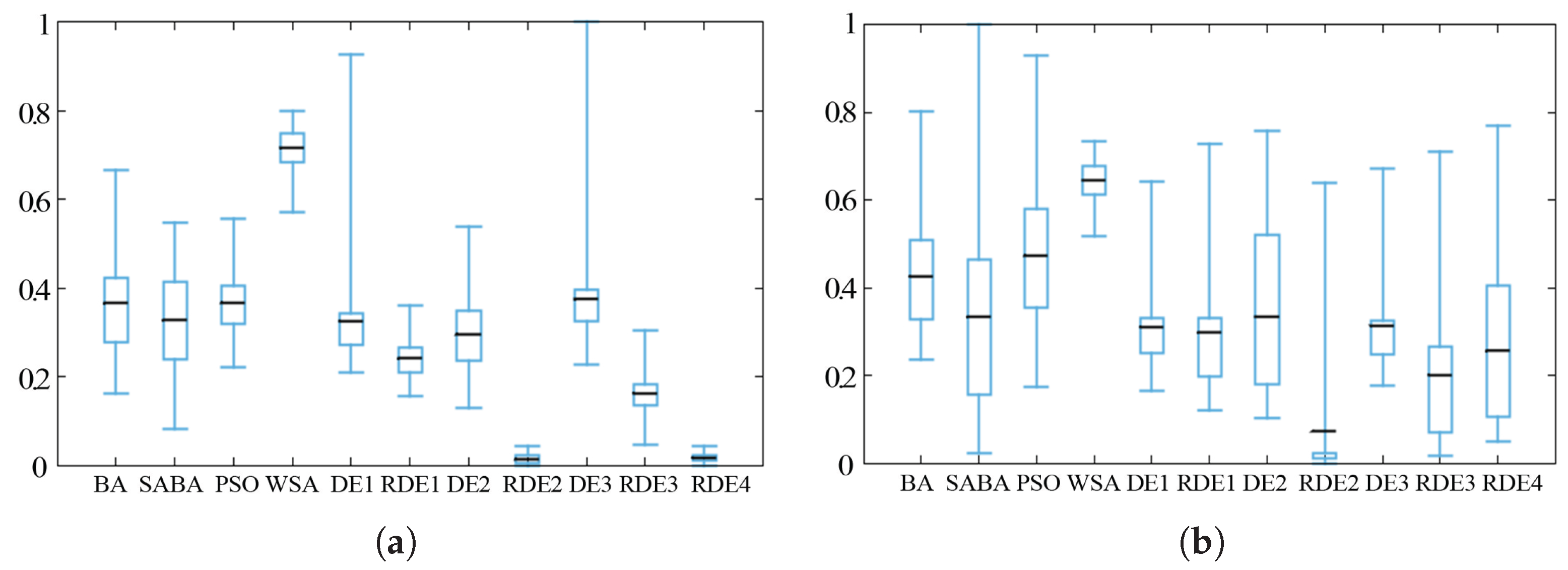

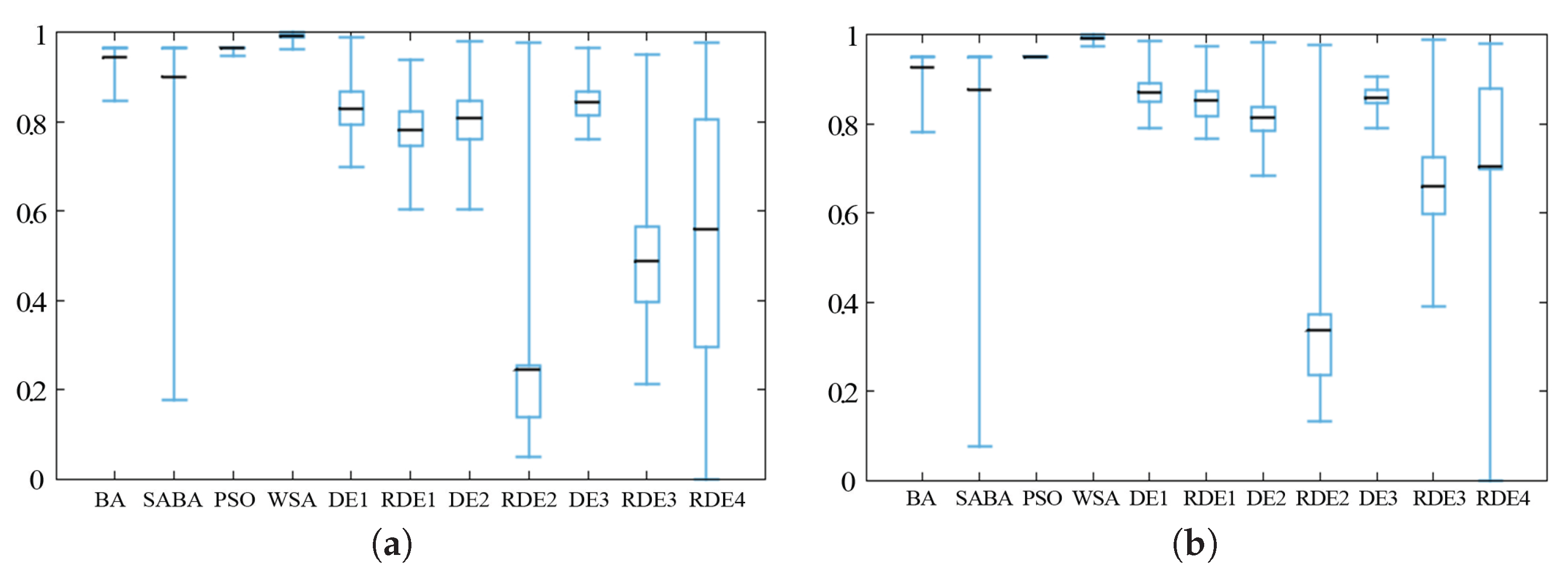

To show the advantage of the entropy-based Gaussian-guided parameter update method, which avoids the randomness, we modified all the optional DE functions into self-adaptive methods. By applying the method to all these functions, the results can show whether the improvement is caused by the adaptive function or by the guided parameter update. We mix two different solution selection methods with DE, and the combinations are shown in Table 3. The two types of self-adaptive methods are compared in this paper. The first type consists of differential evolution crossover functions in which the current global best solution is taken into consideration. This approach is supposed to increase the stability of the system because the direction of movement is calculated in relation to the location of the current global best. The calculation by the DE functions is only done at the chosen search agents, so it will not overload the system. In the second type, the reference solution is the one with the best current fitness among the chosen agents. In this way, more randomness is added to the algorithm, and wider steps are taken for more optional behaviour. So far, it has been tested and has worked well with the semi-swarm type of algorithms, such as bat and wolf. It is, however, not known whether it may become unstable when coupled with other search algorithms.

5. Conclusions

Self-adaptive methods are effective to enable parameter-free metaheuristic algorithms; they can even improve the performance because the parameters’ values are self-tuned to be optimal (hence optimal results). Based on the authors’ prior work about the hybridizing self-adaptive DE functions in the bat algorithm, a similar, but new self-adaptive algorithm was developed called SAWSA. However, due to the lack of stability control and missing knowledge of the inner connection of the parameters and performance, the SAWSA is not sufficiently satisfactory for us. In this paper, the self-adaptive method is considered from the perspective of entropy theory for metaheuristic algorithms, and we developed an improved parameter control called the Gaussian-guided self-adaptive method. After designing this method denoted as GSAWSA, we configured test experiments with fourteen standard benchmark functions. Based on the results, the following conclusions can be drawn.

Firstly, the self-adaptive approach is proven to be very efficient for metaheuristic algorithms, especially those that require much calculation cost. By using this method, the parameter training part can be removed in the real case usage. However, as the self-adaptive modification would increase the complexity of the algorithm, the calculation cost of the self-adaptive method must be taken into consideration.

Secondly, comparing all the optional self-adaptive DE methods in the experiment, the random selection type is the better choice for SAWSA. However, while the average outcome is improved, the stability is clearly decreased by introducing more randomness into the algorithm. How to balance the random effects and to improve the stability is, in general, a difficult challenge for metaheuristic algorithm studies.

Thirdly, the parameter-performance changing pattern can be considered a very important feature of metaheuristic algorithms. Normally, researchers use the performance or the situation feedback as an update reference. However, how the parameters influence the performance usually remains outside of consideration. By analysing how the parameters influence the performance, a better self-adaptive updating method could be developed and a better performance could be achieved with less computing resources.

In conclusion, a parameter-free metaheuristic model in which a Gaussian map is fused with the WSA algorithm is proposed. Its advantage is that the parameters’ values are not required to remain static; instead, the parameters tune themselves as the search operation proceeds. In our experiment results, the new model often shows an improvement in performance compared to the naive version of WSA, as well as other similar swarm algorithms. As future work, we want to investigate in depth how the Gaussian map contributes to refining the performance and preventing the search from converging prematurely to local optima. The analysis should be done together with the runtime cost, as well. It is known from the experiments reported in this paper that there is a certain overhead when the Gaussian map is fused with WSA: it extends the performance, but at the same time, the extra computation consumes additional resources. In the future, the GSAWSA should be enhanced with the capability of balancing the runtime cost and the best possible performance in terms of the solution quality obtained.