A Brief Review of Generalized Entropies

Abstract

1. Introduction

2. Generalized Entropies

- SK1

- Continuity. $F(p_1, \ldots, p_W)$ depends continuously on all variables for each W.

- SK2

- Maximality. For all W, $F(p_1, \ldots, p_W) \le F(1/W, \ldots, 1/W)$.

- SK3

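- Expansibility: For all W and $1 \le i \le W$, $F(0, p_1, \ldots, p_W) = F(p_1, \ldots, p_i, 0, p_{i+1}, \ldots, p_W) = F(p_1, \ldots, p_W)$.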

- SK4

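- Separability (or strong additivity): For all W, U, $F(p_{11}, \ldots, p_{WU}) = F(p_{1\cdot}, \ldots, p_{W\cdot}) + \sum_{i=1}^{W} p_{i\cdot}\, F(p_{i1}/p_{i\cdot}, \ldots, p_{iU}/p_{i\cdot})$, where $p_{i\cdot} = \sum_{j=1}^{U} p_{ij}$.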

- (i)

- Symmetry: $F_g(p_1, \ldots, p_W) = \sum_{i=1}^{W} g(p_i)$ is invariant under permutation of $p_1, \ldots, p_W$.

- (ii)

- $F_g$ satisfies axiom SK1 if and only if g is continuous.

- (iii)

- If $F_g$ satisfies axiom SK2, then $\sum_{i=1}^{W} g(p_i) \le W g(1/W)$ for all W and $p_1, \ldots, p_W \ge 0$ with $p_1 + \cdots + p_W = 1$.

- (iv)

- If g is concave (i.e., ∩-convex), then $F_g$ satisfies axiom SK2.

- (v)

- $F_g$ satisfies axiom SK3 if and only if $g(0) = 0$.

- (C1)

- g is continuous.

- (C2)

- g is concave.

- (C3)

- $g(0) = 0$.
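The conditions (C1)–(C3) and the Shannon–Khinchin axioms can be checked numerically on a small example. The following is a minimal sketch, assuming a sum-form functional that adds g(p_i) over the probabilities and taking g(x) = −x ln x (the BGS case) as the test function; the names `g_bgs`, `sum_form_entropy` and `sk4_sides` are hypothetical and chosen only for illustration.

```python
import math

def g_bgs(x):
    """g(x) = -x ln x, extended by g(0) = 0 so that condition (C3) holds."""
    return 0.0 if x == 0.0 else -x * math.log(x)

def sum_form_entropy(p, g=g_bgs):
    """Sum-form functional: add g(p_i) over all probabilities in p."""
    return sum(g(x) for x in p)

def sk4_sides(joint, g=g_bgs):
    """Return both sides of the separability identity (SK4) for a joint table."""
    flat = [x for row in joint for x in row]
    marginal = [sum(row) for row in joint]
    lhs = sum_form_entropy(flat, g)
    rhs = sum_form_entropy(marginal, g) + sum(
        m * sum_form_entropy([x / m for x in row], g)
        for row, m in zip(joint, marginal) if m > 0
    )
    return lhs, rhs

# Illustrative data (assumed, not taken from the article).
p = [0.5, 0.3, 0.2]
uniform = [1.0 / len(p)] * len(p)
joint = [[0.1, 0.3], [0.2, 0.4]]

# SK2: the uniform distribution maximizes the entropy.
print(sum_form_entropy(p) <= sum_form_entropy(uniform))
# SK3: adding a zero-probability outcome changes nothing because g(0) = 0.
print(math.isclose(sum_form_entropy([0.0] + p), sum_form_entropy(p)))
# SK4: both sides of the separability identity agree for the BGS choice of g.
print(math.isclose(*sk4_sides(joint)))
```

Swapping in another concave g with g(0) = 0 keeps the SK2 and SK3 checks valid, while the SK4 check is expected to fail in general, which is what singles out the BGS entropy.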

3. Examples of Generalized Entropies

3.1. Tsallis Entropy

- (T1)

- because (or ).

- (T2)

- The Tsallis entropy is (strictly) ∩-convex for $q > 0$. Figure 1 plots it for several values of q, including 1, 2 and 5. Let us mention in passing that it is ∪-convex for $q < 0$.

- (T3)

- (T4)

- (T5)

- Similar to what happens with the BGS entropy, the Tsallis entropy can be uniquely determined (up to a positive multiplicative constant) by a small number of axioms. Thus, Abe [35] characterized the Tsallis entropy by: (i) continuity; (ii) the increasing monotonicity of the entropy of the uniform distribution with respect to W; (iii) expansibility; and (iv) a property involving conditional entropies. Dos Santos [36], on the other hand, used the previous Axioms (i) and (ii), q-additivity, and a generalization of the grouping axiom (Equation (9)). Suyari [37] derived the Tsallis entropy from the first three Shannon–Khinchin axioms and a generalization of the fourth one. Perhaps the most economical characterization of the Tsallis entropy was given by Furuichi [38]; it consists of continuity, symmetry under the permutation of the probabilities, and a property called q-recursivity. As mentioned in Section 2, the Tsallis entropy was recently shown [27] to be the only composable generalized entropy of the form in Equation (10) under some technical assumptions. Further axiomatic characterizations of the Tsallis entropy can be found in [39].
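Two of the properties invoked in this subsection, the convergence to the BGS entropy as q → 1 and q-additivity on independent systems, can be illustrated numerically. The sketch below assumes the standard form of the Tsallis entropy, (1 − Σ p_i^q)/(q − 1), with the multiplicative constant set to 1; the function names and the sample distributions are hypothetical.

```python
import math

def bgs(p):
    """Boltzmann-Gibbs-Shannon entropy with natural logarithm."""
    return -sum(x * math.log(x) for x in p if x > 0)

def tsallis(p, q):
    """(1 - sum_i p_i^q) / (q - 1), with the BGS value used at q = 1."""
    if q == 1.0:
        return bgs(p)
    return (1.0 - sum(x ** q for x in p if x > 0)) / (q - 1.0)

def product(pa, pb):
    """Joint distribution of two independent systems."""
    return [a * b for a in pa for b in pb]

# Illustrative data (assumed, not taken from the article).
pa, pb, q = [0.7, 0.3], [0.5, 0.25, 0.25], 2.0

# q-additivity: T(A x B) = T(A) + T(B) + (1 - q) T(A) T(B).
joint_value = tsallis(product(pa, pb), q)
q_additive = tsallis(pa, q) + tsallis(pb, q) + (1.0 - q) * tsallis(pa, q) * tsallis(pb, q)
print(math.isclose(joint_value, q_additive))

# Convergence to the BGS entropy as q approaches 1.
print(abs(tsallis(pa, 1.0 + 1e-6) - bgs(pa)) < 1e-4)
```

For q = 1 the cross term vanishes and the relation reduces to ordinary additivity, consistent with the BGS limit.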

3.2. Rényi Entropy

- (R1)

- The Rényi entropy is additive by construction.

- (R2)

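- The Rényi entropy converges to the BGS entropy as $q \to 1$. Indeed, use L’Hôpital’s Rule to derive $\lim_{q \to 1} \frac{1}{1-q} \ln \sum_{i} p_i^q = -\sum_{i} p_i \ln p_i$.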

- (R3)

- The Rényi entropy is ∩-convex for $0 < q \le 1$ and it is neither ∩-convex nor ∪-convex for $q > 1$. Figure 2 plots it for several values of q, including 1, 2 and 5.

- (R4)

- The Rényi entropy is Lesche-unstable for all $q > 0$, $q \ne 1$ [49].

- (R5)

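- The entropies $R_q$ are monotonically decreasing with respect to the parameter q for any distribution of probabilities, i.e., $R_q \ge R_{q'}$ for $q < q'$. This property follows from the formula $\frac{dR_q}{dq} = -\frac{1}{(1-q)^2} D(\tilde{p} \,\|\, p)$, where $\tilde{p}_i = p_i^q / \sum_{j} p_j^q$, and $D(\tilde{p} \,\|\, p)$ is the Kullback–Leibler divergence of the probability distributions $\tilde{p}$ and $p$. $D(\tilde{p} \,\|\, p)$ vanishes only in the event that both probability distributions coincide, otherwise it is positive [26].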

- (R6)

- A straightforward relation between Rényi’s and Tsallis’ entropies is the following [50]: $T_q = \frac{1}{1-q}\left(e^{(1-q) R_q} - 1\right)$.
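Properties (R1), (R5) and (R6) lend themselves to a quick numerical check. The sketch below assumes the standard definition of the Rényi entropy, ln(Σ p_i^q)/(1 − q) with natural logarithm, and the Tsallis entropy with its multiplicative constant set to 1; the function names and the sample distributions are hypothetical.

```python
import math

def renyi(p, q):
    """ln(sum_i p_i^q) / (1 - q), with the BGS value used at q = 1."""
    if q == 1.0:
        return -sum(x * math.log(x) for x in p if x > 0)
    return math.log(sum(x ** q for x in p if x > 0)) / (1.0 - q)

def tsallis(p, q):
    """(1 - sum_i p_i^q) / (q - 1), for q different from 1."""
    return (1.0 - sum(x ** q for x in p if x > 0)) / (q - 1.0)

# Illustrative data (assumed, not taken from the article).
pa, pb = [0.5, 0.3, 0.2], [0.6, 0.4]
joint = [a * b for a in pa for b in pb]

# (R1) Additivity on independent systems.
print(math.isclose(renyi(joint, 2.0), renyi(pa, 2.0) + renyi(pb, 2.0)))

# (R5) Monotone decrease in q for a fixed distribution.
values = [renyi(pa, q) for q in (0.5, 1.0, 2.0, 5.0)]
print(all(x >= y for x, y in zip(values, values[1:])))

# (R6) Tsallis entropy recovered from the Rényi entropy.
q = 2.0
from_renyi = (math.exp((1.0 - q) * renyi(pa, q)) - 1.0) / (1.0 - q)
print(math.isclose(tsallis(pa, q), from_renyi))
```

The relation in (R6) is a monotone transformation for each fixed q, so both entropies are maximized by the same distributions under the same constraints.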

3.3. Graph Related Entropies

4. Hanel–Thurner Exponents

- (E1)

- (E2)

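- For the Tsallis entropy, see Equation (12), the uniform distribution gives $T_q(1/W, \ldots, 1/W) = (W^{1-q} - 1)/(1 - q)$. It follows readily that $(c, d) = (q, 0)$ if $0 < q < 1$, and $(c, d) = (1, 0)$ if $q > 1$. Hence, although $\lim_{q \to 1} T_q = S_{BGS}$, there is no parallel convergence concerning the HT exponents.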

- (E3)

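- For the Rényi entropy, $R_q(1/W, \ldots, 1/W) = \ln W$ (see Equation (15)), so $R_q(1/(\lambda W), \ldots, 1/(\lambda W)) / R_q(1/W, \ldots, 1/W) = \ln(\lambda W)/\ln W \to 1$ as $W \to \infty$ (both for $0 < q < 1$ and $q > 1$). Therefore, $c = 1$. Furthermore, $\ln(W^{1+a})/\ln W = 1 + a$ for all $W > 1$, so that $d = 1$. In sum, $(c, d) = (1, 1)$ for all q.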
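The exponents in (E2) and (E3) can be estimated numerically from the entropy of the uniform distribution on W states. The sketch below rests on an assumption about the definitions, namely the Hanel–Thurner scaling relations in their large-W form: S(λW)/S(W) → λ^(1−c) and S(W^(1+a))/(S(W) W^(a(1−c))) → (1+a)^d. The function names and the chosen values of W, λ and a are hypothetical.

```python
import math

def tsallis_uniform(W, q):
    """Tsallis entropy of the uniform distribution on W states (q != 1)."""
    return (W ** (1.0 - q) - 1.0) / (1.0 - q)

def renyi_uniform(W, q):
    """Rényi entropy of the uniform distribution: ln W for every q."""
    return math.log(W)

def estimate_c(S, W=1e300, lam=2.0):
    """Finite-W estimate of c from S(lam * W) / S(W) ~ lam ** (1 - c)."""
    return 1.0 - math.log(S(lam * W) / S(W)) / math.log(lam)

def estimate_d(S, c, W=1e100, a=1.0):
    """Finite-W estimate of d, given the limiting value of c."""
    ratio = S(W ** (1.0 + a)) / (S(W) * W ** (a * (1.0 - c)))
    return math.log(ratio) / math.log(1.0 + a)

# Tsallis entropy with q = 0.5 and q = 2, and Rényi entropy with q = 2.
c_half = estimate_c(lambda W: tsallis_uniform(W, 0.5))
c_two = estimate_c(lambda W: tsallis_uniform(W, 2.0))
c_renyi = estimate_c(lambda W: renyi_uniform(W, 2.0))

# The limiting c is passed explicitly: the finite-W estimate of c converges
# only logarithmically for the Rényi entropy, which would distort d.
d_half = estimate_d(lambda W: tsallis_uniform(W, 0.5), c=0.5)
d_two = estimate_d(lambda W: tsallis_uniform(W, 2.0), c=1.0)
d_renyi = estimate_d(lambda W: renyi_uniform(W, 2.0), c=1.0)

print(c_half, d_half)
print(c_two, d_two)
print(c_renyi, d_renyi)
```

The Rényi estimate of c approaches 1 only at a rate of order 1/ln W, so even very large W leaves a visible gap; the Tsallis estimates settle much faster.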

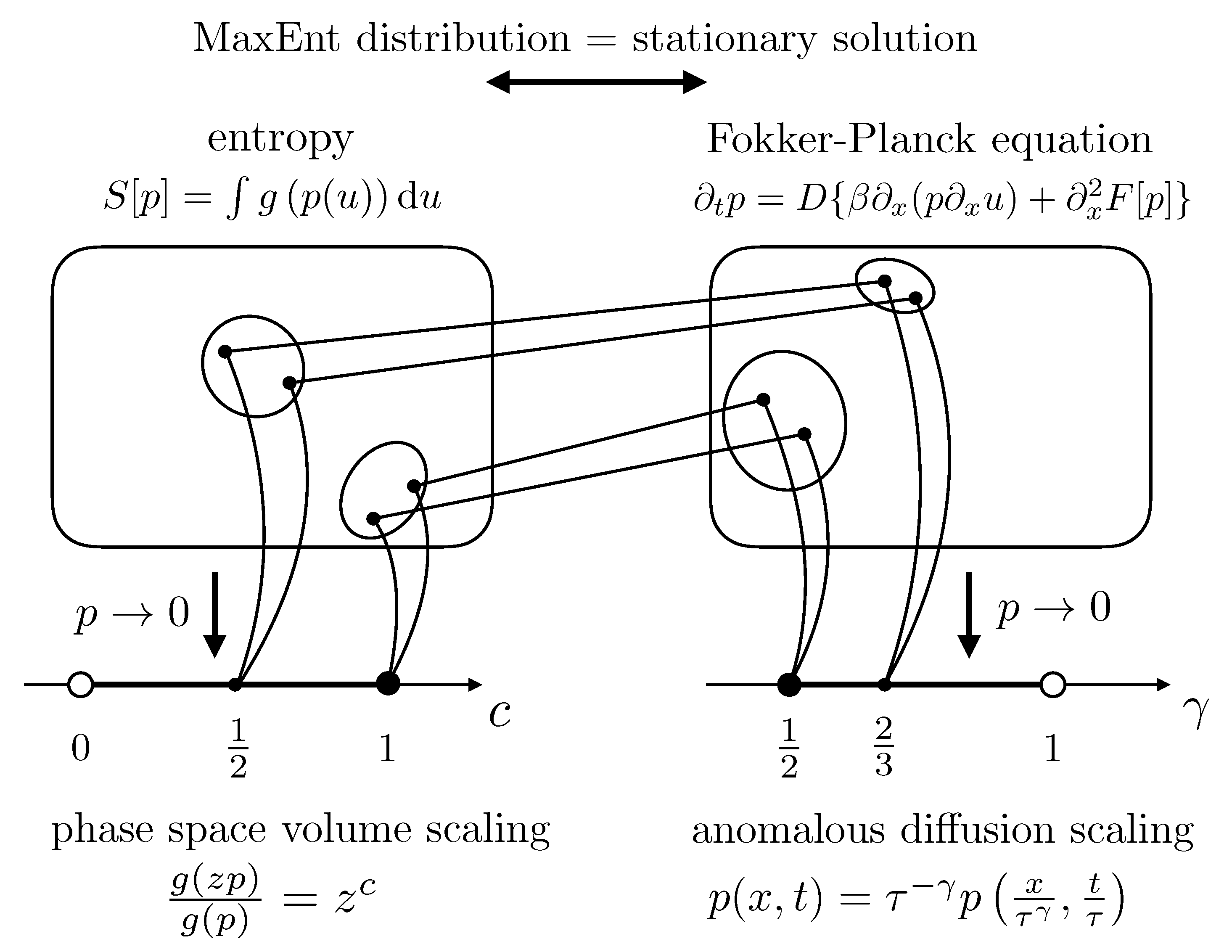

5. Asymptotic Relation between the HT Exponent c and the Diffusion Scaling Exponent

5.1. The Non-Stationary Regime

5.2. Relation between the Stationary and Non-Stationary Regime

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

Appendix C

References

- Clausius, R. The Mechanical Theory of Heat; McMillan and Co.: London, UK, 1865.

- Boltzmann, L. Weitere Studien über das Wärmegleichgewicht unter Gasmolekülen. Sitz. Ber. Akad. Wiss. Wien (II) 1872, 66, 275–370.

- Boltzmann, L. Über die Beziehung eines allgemeinen mechanischen Satzes zum zweiten Hauptsatz der Wärmetheorie. Sitz. Ber. Akad. Wiss. Wien (II) 1877, 75, 67–73.

- Gibbs, J.W. Elementary Principles in Statistical Mechanics—Developed with Especial References to the Rational Foundation of Thermodynamics; C. Scribner’s Sons: New York, NY, USA, 1902.

- Dewar, R. Information theory explanation of the fluctuation theorem, maximum entropy production and self-organized criticality in nonequilibrium stationary state. J. Phys. A Math. Gen. 2003, 36, 631–641.

- Martyushev, L.M. Entropy and entropy production: old misconceptions and new breakthroughs. Entropy 2013, 15, 1152–1170.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Wissner-Gross, A.D.; Freer, C.E. Causal entropic forces. Phys. Rev. Lett. 2013, 110, 168702.

- Mann, R.P.; Garnett, R. The entropic basis of collective behaviour. J. R. Soc. Interface 2015, 12, 20150037.

- Kolmogorov, A.N. A new metric invariant of transitive dynamical systems and Lebesgue space endomorphisms. Dokl. Acad. Sci. USSR 1958, 119, 861–864.

- Rényi, A. On measures of entropy and information. In Proceedings of the 4th Berkeley Symposium on Mathematics, Statistics and Probability; Neyman, J., Ed.; University of California Press: Berkeley, CA, USA, 1961; pp. 547–561.

- Tsallis, C. Possible generalization of Boltzmann–Gibbs statistics. J. Stat. Phys. 1988, 52, 479–487.

- Amigó, J.M.; Keller, K.; Unakafova, V. On entropy, entropy-like quantities, and applications. Disc. Cont. Dyn. Syst. B 2015, 20, 3301–3343.

- Csiszár, I. Axiomatic characterization of information measures. Entropy 2008, 10, 261–273.

- Tsallis, C. Introduction to Nonextensive Statistical Mechanics; Springer: New York, NY, USA, 2009.

- Hanel, R.; Thurner, S. A comprehensive classification of complex statistical systems and an axiomatic derivation of their entropy and distribution functions. EPL 2011, 93, 20006.

- Principe, J.C. Information Theoretic Learning: Renyi’s Entropy and Kernel Perspectives; Springer: New York, NY, USA, 2010.

- Hernández, S. Introducing Graph Entropy. Available online: http://entropicai.blogspot.com/search/label/Graph%20entropy (accessed on 22 October 2018).

- Salicrú, M.; Menéndez, M.L.; Morales, D.; Pardo, L. Asymptotic distribution of (h, ϕ)-entropies. Commun. Stat. Theory Meth. 1993, 22, 2015–2031.

- Bosyk, G.M.; Zozor, S.; Holik, F.; Portesi, M.; Lamberti, P.W. A family of generalized quantum entropies: Definition and properties. Quantum Inf. Process. 2016, 15, 3393–3420.

- Von Neumann, J. Thermodynamik quantenmechanischer Gesamtheiten. Nachrichten von der Gesellschaft der Wissenschaften zu Göttingen 1927, 1927, 273–291. (In German)

- Hein, C.A. Entropy in Operational Statistics and Quantum Logic. Found. Phys. 1979, 9, 751–786.

- Short, A.J.; Wehner, S. Entropy in general physical theories. New J. Phys. 2010, 12, 033023.

- Holik, F.; Bosyk, G.M.; Bellomo, G. Quantum information as a non-Kolmogorovian generalization of Shannon’s theory. Entropy 2015, 17, 7349–7373.

- Portesi, M.; Holik, F.; Lamberti, P.W.; Bosyk, G.M.; Bellomo, G.; Zozor, S. Generalized entropies in quantum and classical statistical theories. Eur. Phys. J. Spec. Top. 2018, 227, 335–344.

- Cover, T.M.; Thomas, J.A. Elements of Information Theory; John Wiley and Sons: Hoboken, NJ, USA, 2006.

- Enciso, A.; Tempesta, P. Uniqueness and characterization theorems for generalized entropies. J. Stat. Mech. 2017, 123101.

- Khinchin, A.I. Mathematical Foundations of Information Theory; Dover Publications: New York, NY, USA, 1957.

- Ash, R.B. Information Theory; Dover Publications: New York, NY, USA, 1990.

- MacKay, D.J. Information Theory, Inference, and Learning Algorithms; Cambridge University Press: Cambridge, UK, 2003.

- Bandt, C. A new kind of permutation entropy used to classify sleep stages from invisible EEG microstructure. Entropy 2017, 19, 197.

- Havrda, J.; Charvát, F. Quantification method of classification processes. Concept of structural α-entropy. Kybernetika 1967, 3, 30–35.

- Abe, S. Stability of Tsallis entropy and instabilities of Renyi and normalized Tsallis entropies. Phys. Rev. E 2002, 66, 046134.

- Tsallis, C.; Brigatti, E. Nonextensive statistical mechanics: A brief introduction. Contin. Mech. Thermodyn. 2004, 16, 223–235.

- Abe, S. Tsallis entropy: How unique? Contin. Mech. Thermodyn. 2004, 16, 237–244.

- Dos Santos, R.J.V. Generalization of Shannon’s theorem for Tsallis entropy. J. Math. Phys. 1997, 38, 4104–4107.

- Suyari, H. Generalization of Shannon–Khinchin axioms to nonextensive systems and the uniqueness theorem for the nonextensive entropy. IEEE Trans. Inf. Theory 2004, 50, 1783–1787.

- Furuichi, S. On uniqueness theorems for Tsallis entropy and Tsallis relative entropy. IEEE Trans. Inf. Theory 2005, 51, 3638–3645.

- Jäckle, S.; Keller, K. Tsallis entropy and generalized Shannon additivity. Axioms 2016, 6, 14.

- Hanel, R.; Thurner, S. When do generalized entropies apply? How phase space volume determines entropy. Europhys. Lett. 2011, 96, 50003.

- Plastino, A.R.; Plastino, A. Stellar polytropes and Tsallis’ entropy. Phys. Lett. A 1993, 174, 384–386.

- Alemany, P.A.; Zanette, D.H. Fractal random walks from a variational formalism for Tsallis entropies. Phys. Rev. E 1994, 49, R956–R958.

- Plastino, A.R.; Plastino, A. Non-extensive statistical mechanics and generalized Fokker–Planck equation. Physica A 1995, 222, 347–354.

- Tsallis, C.; Bukman, D.J. Anomalous diffusion in the presence of external forces: Exact time-dependent solutions and their thermostatistical basis. Phys. Rev. E 1996, 54, R2197.

- Capurro, A.; Diambra, L.; Lorenzo, D.; Macadar, O.; Martin, M.T.; Mostaccio, C.; Plastino, A.; Rofman, E.; Torres, M.E.; Velluti, J. Tsallis entropy and cortical dynamics: The analysis of EEG signals. Physica A 1998, 257, 149–155.

- Maszczyk, T.; Duch, W. Comparison of Shannon, Renyi and Tsallis entropy used in decision trees. In Proceedings of the International Conference on Artificial Intelligence and Soft Computing, Zakopane, Poland, 22–26 June 2008; Springer: Berlin, Germany, 2008; pp. 643–651.

- Gajowniczek, K.; Karpio, K.; Łukasiewicz, P.; Orłowski, A.; Zabkowski, T. Q-Entropy approach to selecting high income households. Acta Phys. Pol. A 2015, 127, 38–44.

- Gajowniczek, K.; Orłowski, A.; Zabkowski, T. Simulation study on the application of the generalized entropy concept in artificial neural networks. Entropy 2018, 20, 249.

- Lesche, B. Instabilities of Renyi entropies. J. Stat. Phys. 1982, 27, 419–422.

- Mariz, A.M. On the irreversible nature of the Tsallis and Renyi entropies. Phys. Lett. A 1992, 165, 409–411.

- Aczél, J.; Daróczy, Z. Charakterisierung der Entropien positiver Ordnung und der Shannonschen Entropie. Acta Math. Acad. Sci. Hung. 1963, 14, 95–121. (In German)

- Jizba, P.; Arimitsu, T. The world according to Rényi: Thermodynamics of multifractal systems. Ann. Phys. 2004, 312, 17–59.

- Rényi, A. On the foundations of information theory. Rev. Inst. Int. Stat. 1965, 33, 1–4.

- Campbell, L.L. A coding theorem and Rényi’s entropy. Inf. Control 1965, 8, 423–429.

- Csiszár, I. Generalized cutoff rates and Rényi information measures. IEEE Trans. Inf. Theory 1995, 41, 26–34.

- Bennett, C.; Brassard, G.; Crépeau, C.; Maurer, U. Generalized privacy amplification. IEEE Trans. Inf. Theory 1995, 41, 1915–1923.

- Kannathal, N.; Choo, M.L.; Acharya, U.R.; Sadasivan, P.K. Entropies for detection of epilepsy in EEG. Comput. Meth. Prog. Biomed. 2005, 80, 187–194.

- Contreras-Reyes, J.E.; Cortés, D.D. Bounds on Rényi and Shannon Entropies for Finite Mixtures of Multivariate Skew-Normal Distributions: Application to Swordfish (Xiphias gladius Linnaeus). Entropy 2016, 11, 382.

- Abramowitz, M.; Stegun, I.A. Handbook of Mathematical Tables; Dover Publications: New York, NY, USA, 1972.

- Hanel, R.; Thurner, S. Generalized (c, d)-entropy and aging random walks. Entropy 2013, 15, 5324–5337.

- Chavanis, P.H. Nonlinear mean field Fokker–Planck equations. Application to the chemotaxis of biological populations. Eur. Phys. J. B 2008, 62, 179–208.

- Martinez, S.; Plastino, A.R.; Plastino, A. Nonlinear Fokker–Planck equations and generalized entropies. Physica A 1998, 259, 183–192.

- Bouchaud, J.P.; Georges, A. Anomalous diffusion in disordered media: Statistical mechanisms, models and physical applications. Phys. Rep. 1990, 195, 127–293.

- Dubkov, A.A.; Spagnolo, B.; Uchaikin, V.V. Lévy flight superdiffusion: An introduction. Int. J. Bifurcat. Chaos 2008, 18, 2649–2672.

- Schwämmle, V.; Curado, E.M.F.; Nobre, F.D. A general nonlinear Fokker–Planck equation and its associated entropy. EPJ B 2007, 58, 159–165.

- Czégel, D.; Balogh, S.G.; Pollner, P.; Palla, G. Phase space volume scaling of generalized entropies and anomalous diffusion scaling governed by corresponding nonlinear Fokker–Planck equations. Sci. Rep. 2018, 8, 1883.

- Anteneodo, C.; Plastino, A.R. Maximum entropy approach to stretched exponential probability distributions. J. Phys. A Math. Gen. 1999, 32, 1089–1098.

- Kaniadakis, G. Statistical mechanics in the context of special relativity. Phys. Rev. E 2002, 66, 056125.

- Curado, E.M.; Nobre, F.D. On the stability of analytic entropic forms. Physica A 2004, 335, 94–106.

- Tsekouras, G.A.; Tsallis, C. Generalized entropy arising from a distribution of q indices. Phys. Rev. E 2005, 71, 046144.

- Shafee, F. Lambert function and a new non-extensive form of entropy. IMA J. Appl. Math. 2007, 72, 785–800.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Amigó, J.M.; Balogh, S.G.; Hernández, S. A Brief Review of Generalized Entropies. Entropy 2018, 20, 813. https://doi.org/10.3390/e20110813
