1. Introduction

The task of autonomous exploration and mapping of unknown structured environments combines the solutions to three fundamental problems in mobile robotics: (self)localisation, mapping and motion control. Localisation is the problem of determining the position of the robot within its estimated or a given map. Mapping refers to the problem of integrating robot’s ranging and/or bearing sensor measurements into a coherent representation of the environment. Motion control deals with autonomous decision making (e.g., where to move, where to “look”) for the purpose of accomplishing the mission in the most effective manner (in terms of duration and accuracy).

Algorithms for simultaneous localisation and mapping (SLAM) have been studied extensively in the last decades. While the problem is still an active research area, see [

1,

2], several state-of-the-art algorithms are already publicly available in the Robot Operating System (ROS) [

3,

4]. Integrating decision control with SLAM, thereby making the robot autonomous in modelling structured environments, proves to be a much harder problem. Heuristic approaches are reviewed and compared in [

5]. More advanced approaches are based on the information gain [

6,

7,

8,

9,

10].

Autonomous SLAM is a difficult problem because any SLAM algorithm can be seen as an inherently unstable process: unless the robot returns occasionally to already visited and mapped places (this act is referred to as a loop-closure), the pose (position and heading) estimate drifts away from the correct value, resulting in an inaccurate map. Decision making in autonomous SLAM therefore must balance two contradicting objectives, namely exploration and exploitation. Exploration constantly drives the robot towards unexplored areas in order to complete its task as quickly as possible. By exploitation we mean loop-closures: the robot occasionally must return to the already mapped area for the sake of correcting its error in the pose estimate.

Early approaches to robot exploration ignored the uncertainty in the pose estimate and focused on constantly driving the robot towards the nearest frontier, which is the group of cells of the occupancy-grid map on the boundary between the known and unexplored areas [

11]. Current state-of-the art in autonomous SLAM is cast in the context of partially-observed Markov Decision processes. The reward function is typically the expected information (defined in the information theoretic framework) resulting from taking a particular action. Entropy reduction in joint estimation of the map and the pose was proposed in a seminal paper [

6]. Assuming the uncertainty in pose and the map are independent, the joint entropy can be computed as a sum of two entropies: the entropy of the robot pose and entropy of the map. Several authors subsequently pointed out the drawback of this approach [

9,

10]: the scale of the numerical values of the two uncertainties is not comparable (the entropy of the map is much higher than the entropy of the pose). A weighted combination of the two entropies was proposed subsequently, with various approaches to balance the two entropies.

Most of the autonomous SLAM algorithms have been developed in the context of a Rao-Blackwellised particle filter (RBPF)-based SLAM. We will remain in this framework and develop an autonomous SLAM for the recently proposed RFS based occupancy-grid SLAM (RFS-OG-SLAM) [

2], which is also implemented as a RBPF. In this short note we propose a reward function based on the Rényi divergence between the prior and the posterior joint map-pose densities, with the RFS modelling of sensor measurements. This approach results in a joint map-pose uncertainty measure without a need to scale and tune their respective weights.

2. The RFS Occupancy-Grid Based SLAM

The main feature of the RFS-OG-SLAM is that sensor measurements are modelled as a RFS. This model provides a rigorous theoretical framework for imperfect detection (occasionally resulting in false and missed detections) of a ranging sensor. The previous approaches to imperfect detection were based on ad-hoc scan-matching or a design of a likelihood function as a mixture of Gaussian, truncated exponential, and a uniform distribution [

12] (Section 6.3).

Let the robot pose be a vector which consists of its position in a planar coordinate system and its heading angle . Robot motion is modelled by a Markov process specified by a (known) transitional density, denoted by . Here the subscript refers to time instant and is the robot-control input applied during the time interval .

The occupancy-grid map is represented by a vector , where the binary variable denotes the occupancy of the nth grid-cell, , with being the number of cells in the grid.

The ranging sensor on the moving robot provides the range (and azimuth) measurements of reflections from the objects within the sensor field of view. Let the measurements provided by the sensor at time be represented by a set , where is a range-azimuth measurement vector. Both the cardinality of the set and the spatial distribution of its elements are random. For a measurement , which is a true return from an occupied grid cell n (i.e., with ), we assume the likelihood function is known. The probability of detecting an object occupying cell n, of the map, is state dependent (the probability of detection is typically less than one and may depend on the range to the obstacle, but also other factors, such as the surface characteristics of the object, turbidity of air, a temporary occlusion, etc.) and is denoted as . Finally, may include false detections modelled as follows: their spatial distribution over the measurement space is denoted by and their count in each scan is Poisson distributed, with a mean value .

The problem is to formulate the Bayes filter, which sequentially computes the joint posterior probability density function (PDF) , where denotes the sequence and likewise and .

The solution is formulated using the Rao-Blackwell dimension reduction [

13] technique. Application of the chain rule decomposes the the joint posterior PDF as:

Assuming that the occupancy of one cell in the grid-map is independent of the occupancy of other cells (the standard assumption for occupancy-grid SLAM), one can approximate

in (

1) as a product of

for

. Furthermore, the update equation for the probability of occupancy of the

n-th cell, i.e.,

, can be expressed analytically [

2]:

with

The posterior PDF of the pose,

in (

1), is propagated using the standard prediction and update equations of the particle filter [

13]. However, the likelihood function used in the update of particle weights takes the form

which is equivalent to

, with

. Assuming the individual beams of the sensor are independent, we can approximate

by a product of likelihoods

for all

. Finally,

where

as the nearest grid cell to the point where, for a given pose

, range-azimuth measurement

maps to the

plane.

The RBPF propagates the posterior , approximated by a weighted set of S particles . The weights are non-negative and sum up to one. Each particle consists of a pose sample and its associated probability of occupancy vector , which represents the estimate of the map.

4. Numerical Results

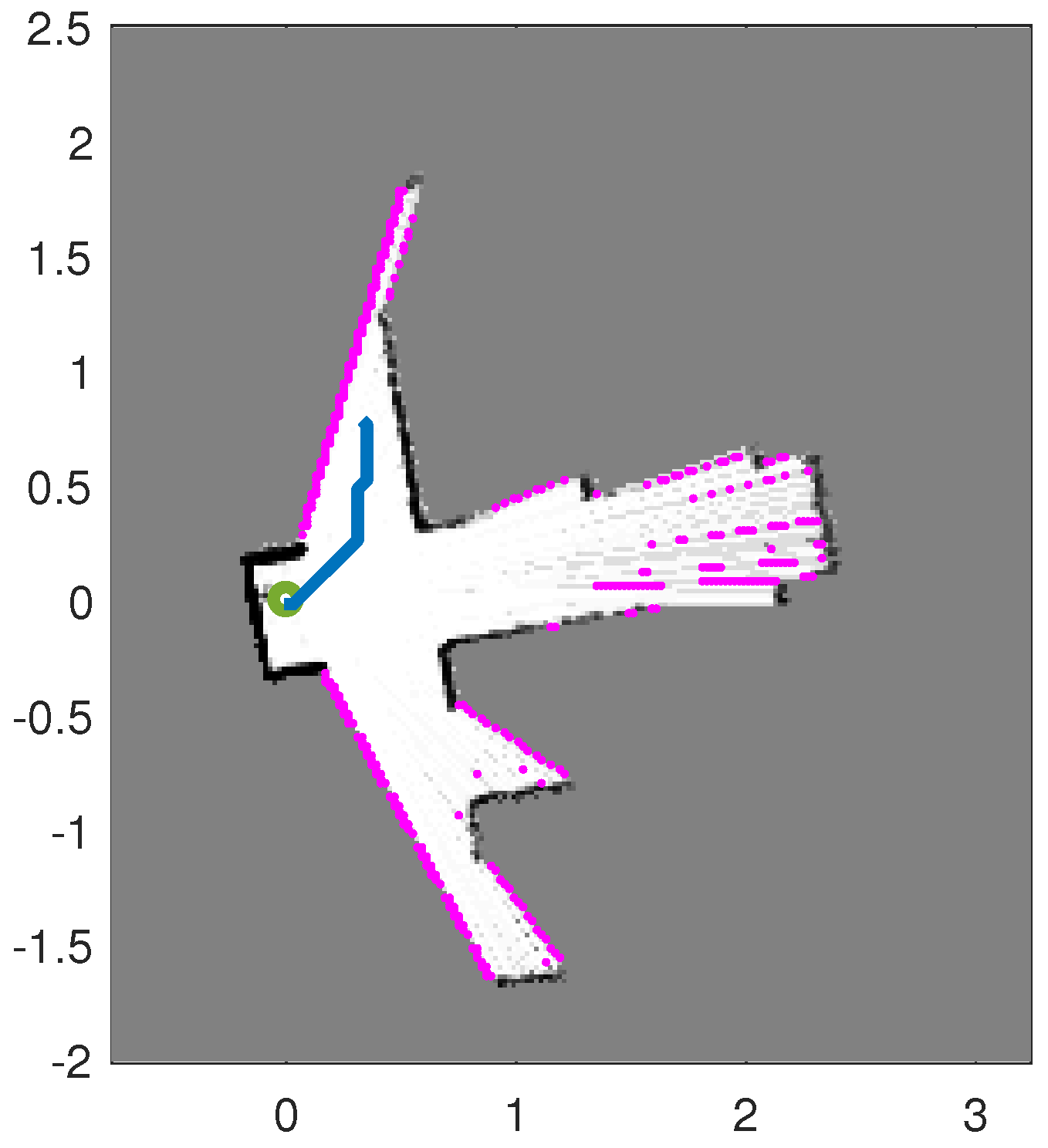

Figure 2 shows the map of the area used in simulations for testing and evaluation of the proposed path planning algorithm. The true initial robot pose (distance is measured in arbitrary units, a.u.) is

. The SLAM algorithm is initialised with particles of equal weights, zero pose vectors and the probability of occupancy for all cells set to

. The simulated ranging sensor is characterised with a coverage of 360°, angular resolution 0.8°, and

if the distance between the robot and

n-th cell is less than

a.u. False detections are modeled with

and

as a uniform distribution over the field of view with the radius

. Standard deviation is set to

a.u. for range measurements and 0.3° for angular measurements. The occupancy-grid cell size is adopted as

a.u. The number of particles

. The

parameter of the Rényi divergence is set to

. The mission is terminated when none of the clusters of frontier cells has more than 14 members.

Figure 3 displays two estimated maps created by the described autonomous RFS-OG-SLAM for the scenario shown in

Figure 2. An estimated map at time

k is reconstructed from the probability of occupancy vector

, computed as the average of particles

,

. The maps are tilted because the initial pose of the SLAM algorithm is set to a zero vector.

Figure 3 also shows the estimated paths created by the autonomous RFS-OG-SLAM (the blue lines). An estimated path is constructed from the two first components of the mean of the pose particles

,

. Duration of exploration and mapping is expressed in the number of discrete-time steps required; the maps in

Figure 3a,b required 85 and 98 time steps, respectively. The quality of an estimated map at time

k is expressed by the entropy of vector

, defined as:

Initially, at time

, map entropy is

. The entropy of the maps in

Figure 3a,b are

and

, respectively. An avi movie of a single run of the algorithm can be found in

Supplementary Material.

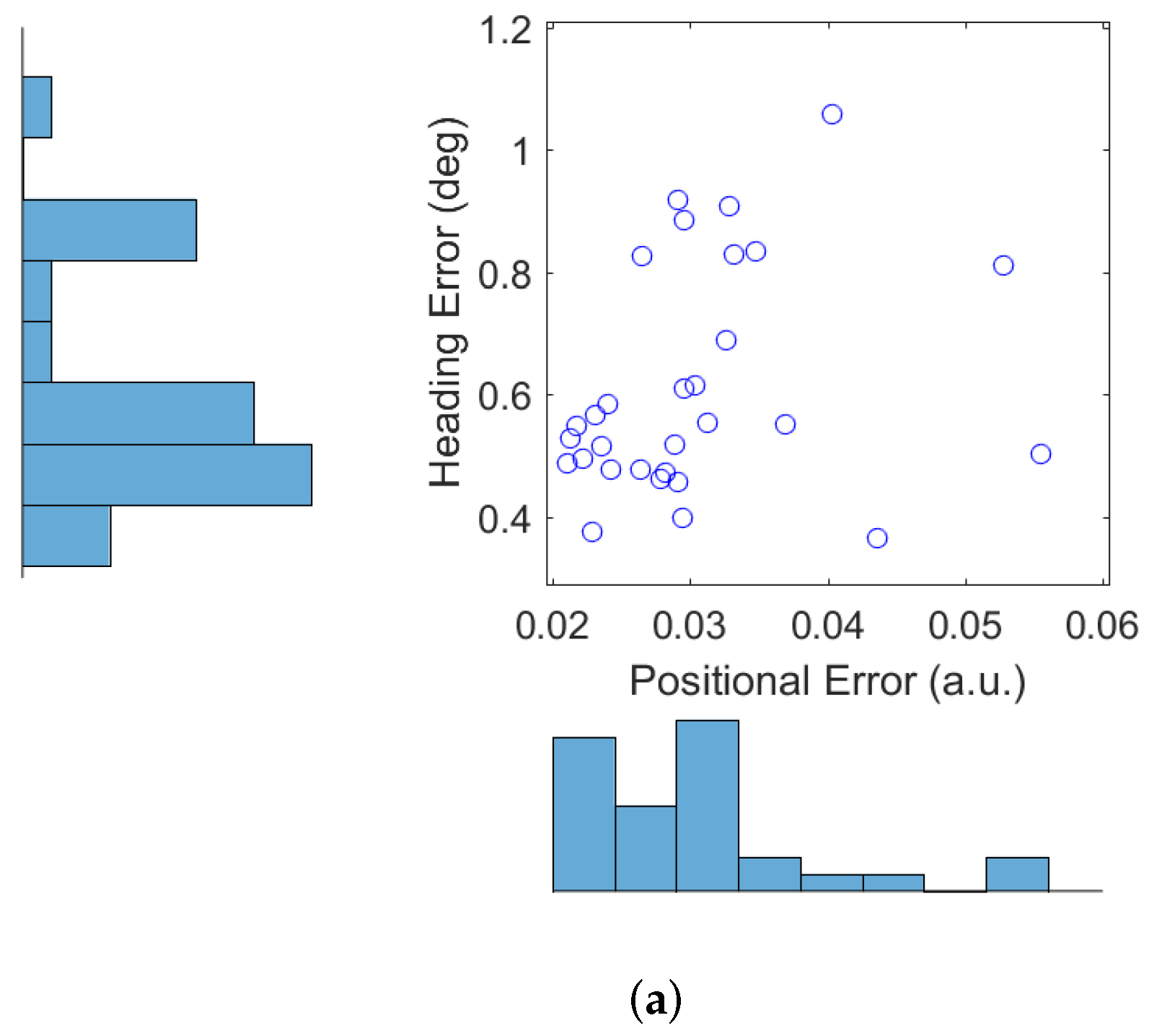

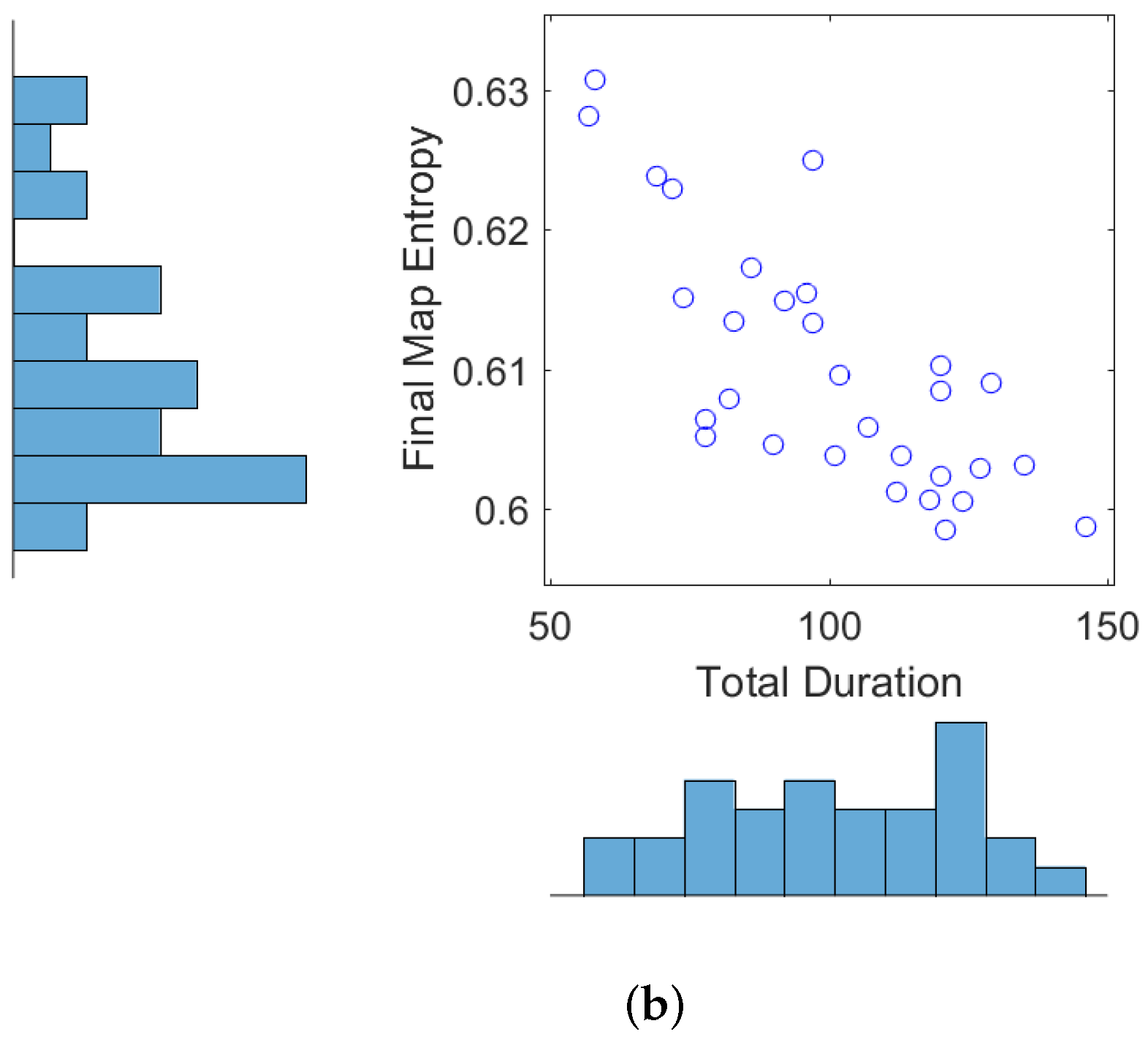

Next we show the results obtained from 30 Monte Carlo runs of the autonomous RFS-OG-SLAM, using the simulation setup described above.

Figure 4a shows the error of the robot estimated position (in a.u.) and the error of the robot estimated heading (in degrees), averaged over time and over all 30 trajectories.

Figure 4b displays the final map entropy versus the duration of the exploration and mapping mission. The average estimated map over 30 Monte Carlo runs is shown in

Figure 5a, while the variance is displayed in

Figure 5b. Variability in the performance of the autonomous RFS-OG-SLAM is due to many causes, such as the inherent randomness of particle filtering, clustering and reward computation. Overall, however, the proposed autonomous RFS-OG-SLAM performs robustly and produces quality maps without a need for human intervention.