An Integral Representation of the Logarithmic Function with Applications in Information Theory

Abstract

1. Introduction

- (a) In most of our examples, the expression we obtain is more compact, more elegant, and often more insightful than the original quantity.

- (b) The resulting definite integral can be considered a closed-form expression "for every practical purpose", since definite integrals in one or two dimensions can be calculated instantly using built-in numerical integration operations in MATLAB, Maple, Mathematica, or other mathematical software tools. This is similar to the case of expressions that include standard functions (e.g., trigonometric, logarithmic, and exponential functions), which are commonly considered to be closed-form expressions.

- (c) The integrals can also be evaluated by power series expansions of the integrand, followed by term-by-term integration.

- (d) Owing to Item (c), the asymptotic behavior in the parameters of the model can be evaluated.

- (e) In at least two of our examples, we show how to pass from an n-dimensional integral (with an arbitrarily large n) to one- or two-dimensional integrals. This passage is in the spirit of the transition from a multiletter expression to a single-letter expression.
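Item (b) can be illustrated with a minimal numerical sketch. It assumes that the representation in question is the Frullani-type identity ln x = ∫_0^∞ (e^{-u} − e^{-ux})/u du for x > 0, which is not stated in this excerpt. Taking expectations under that assumption gives E[ln X] = ∫_0^∞ (e^{-u} − E[e^{-uX}])/u du. The helper names below (`integrate_half_line`, `log_via_integral`, `expected_log_exponential`) are hypothetical, and the plain midpoint rule stands in for the built-in integrators of the tools named above.

```python
import math


def integrate_half_line(f, n=20000):
    """Approximate the integral of f over [0, inf) with a midpoint rule.

    The substitution u = t / (1 - t) maps t in [0, 1) onto u in [0, inf),
    with du = dt / (1 - t)**2. Midpoints never hit t = 0 or t = 1.
    """
    h = 1.0 / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        u = t / (1.0 - t)
        total += f(u) / (1.0 - t) ** 2
    return total * h


def log_via_integral(x):
    """ln(x) for x > 0 via the assumed integral representation."""
    return integrate_half_line(
        lambda u: (math.exp(-u) - math.exp(-u * x)) / u
    )


def expected_log_exponential():
    """E[ln X] for X ~ Exp(1), using E[exp(-u X)] = 1 / (1 + u)."""
    return integrate_half_line(
        lambda u: (math.exp(-u) - 1.0 / (1.0 + u)) / u
    )


if __name__ == "__main__":
    print(log_via_integral(2.0), math.log(2.0))
    # For X ~ Exp(1), E[ln X] equals minus the Euler-Mascheroni constant.
    print(expected_log_exponential())
```

The second function shows why the representation is convenient: the expectation of a logarithm reduces to a one-dimensional integral of the moment-generating function, which here is available in closed form.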

2. Mathematical Background

3. Applications

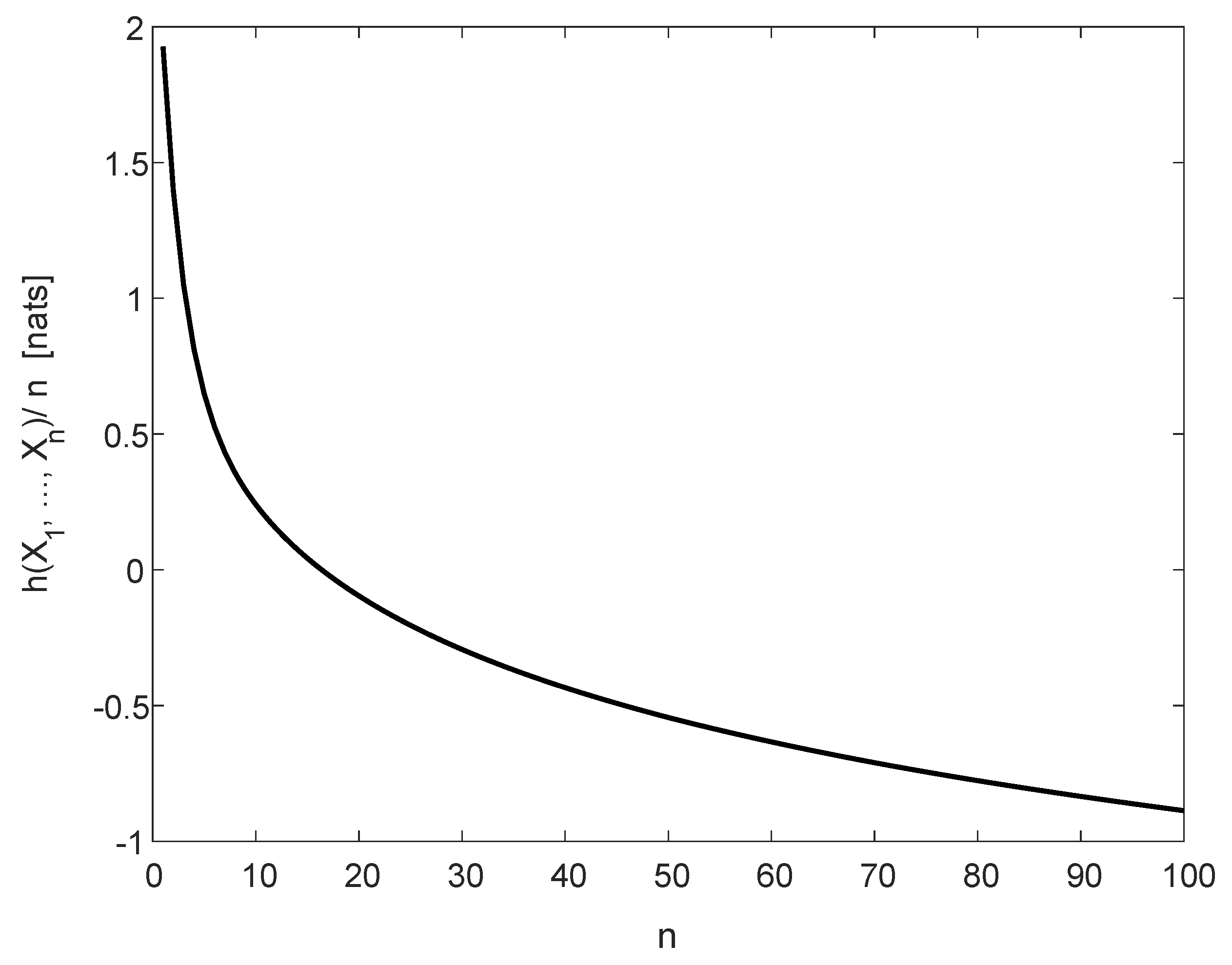

3.1. Differential Entropy for Generalized Multivariate Cauchy Densities

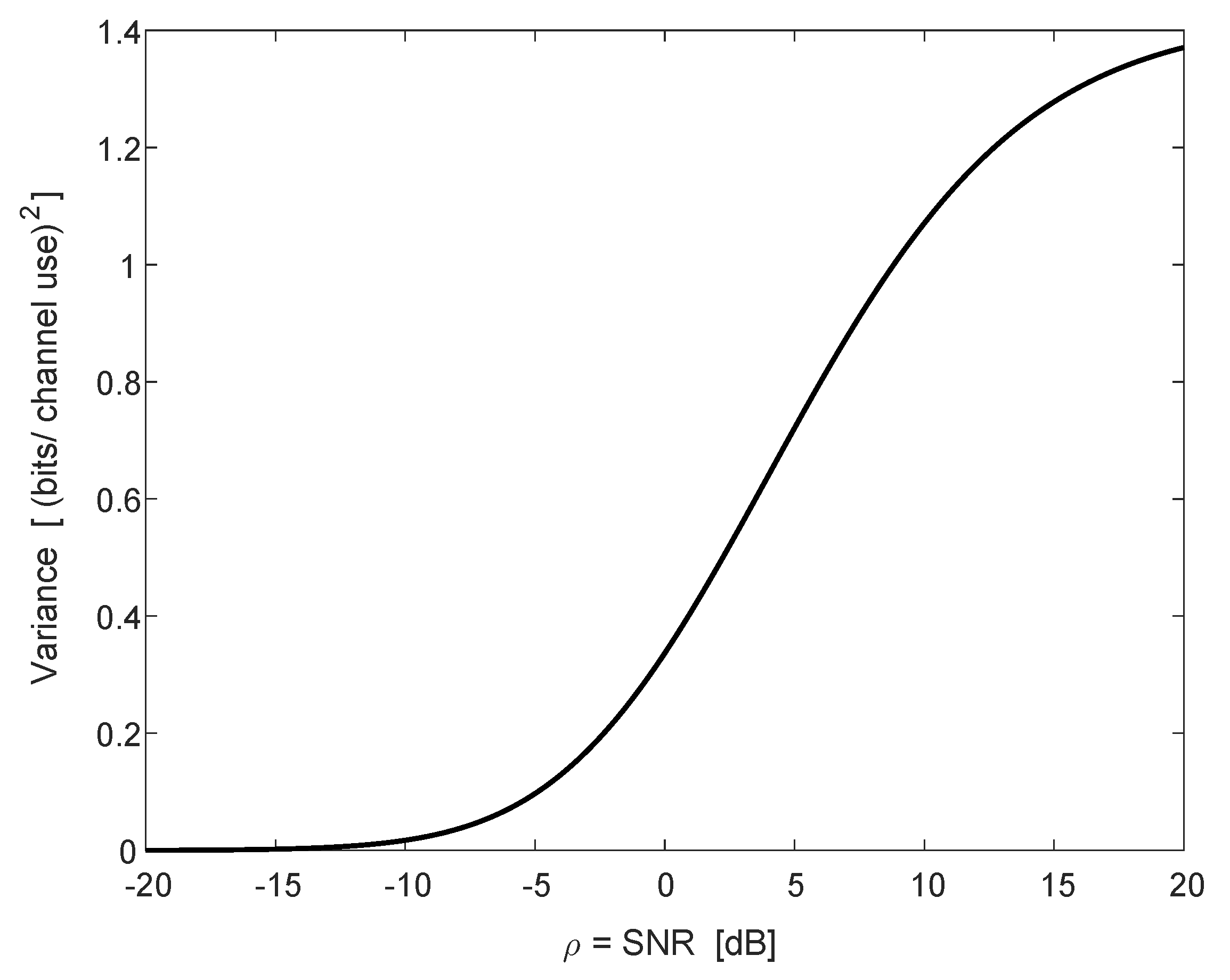

3.2. Ergodic Capacity of the Fading SIMO Channel

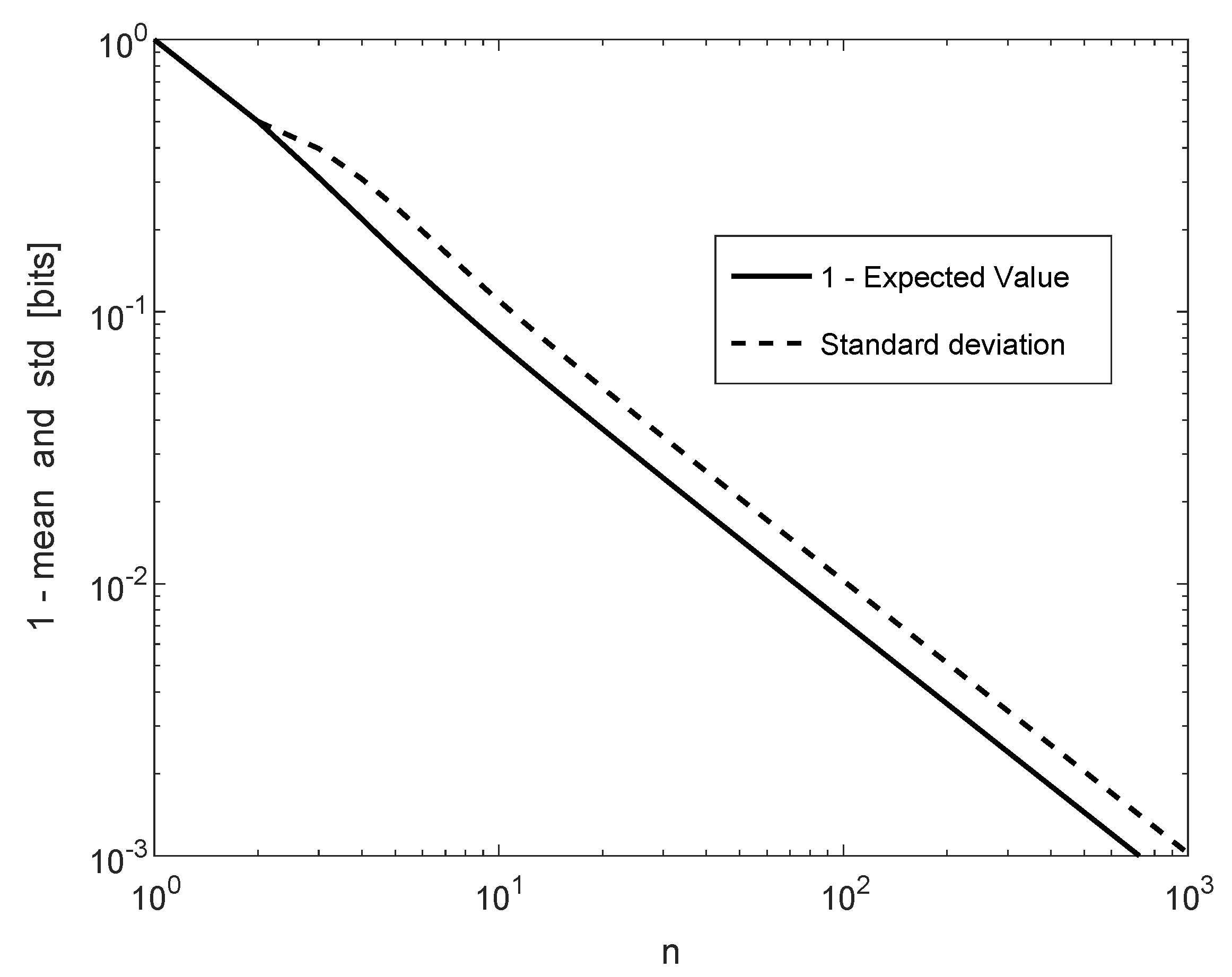

3.3. Universal Source Coding for Binary Arbitrarily Varying Sources

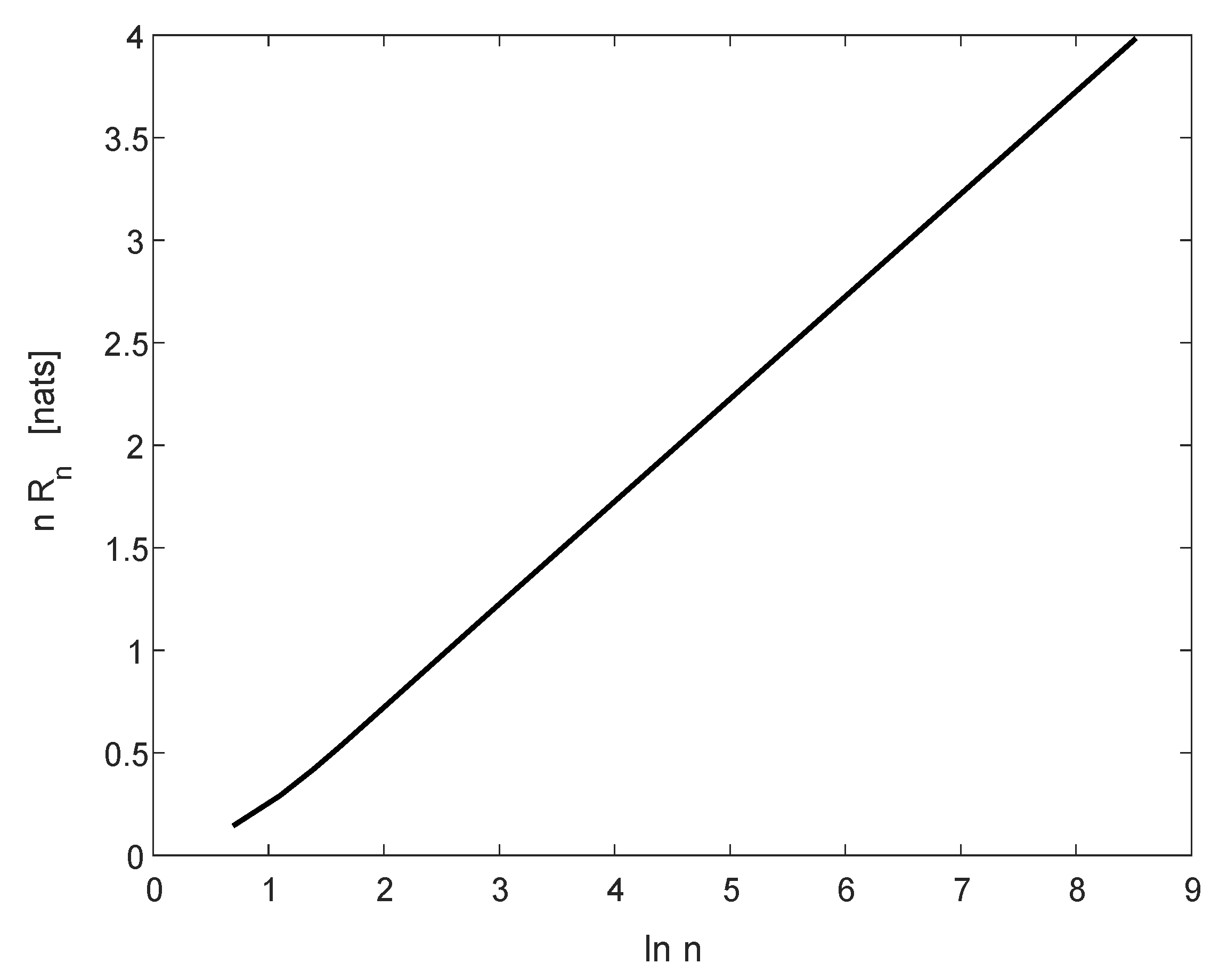

3.4. Moments of the Empirical Entropy and the Redundancy of K–T Universal Source Coding

4. Summary and Outlook

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Merhav, N.; Sason, I. An Integral Representation of the Logarithmic Function with Applications in Information Theory. Entropy 2020, 22, 51. https://doi.org/10.3390/e22010051
