1. Introduction

Compressed sensing (CS) [1] samples a signal at a rate far lower than the Nyquist rate by exploiting the signal's sparsity. The original signal can then be reconstructed from the sampled data with a suitable reconstruction method. As a new approach to signal processing, CS reduces the amount of sampled data and the required storage space, which significantly decreases hardware complexity. CS has broad application prospects in medical imaging [2], wideband spectrum sensing [3], dynamic mode decomposition [4], etc.

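CS projects a high-dimensional K-sparse signal $x \in \mathbb{R}^{N}$ into a low-dimensional space through the measurement matrix $\Phi \in \mathbb{R}^{M \times N}$ ($M < N$) and gets a set of incomplete measurements $y \in \mathbb{R}^{M}$ obeying the linear model

$$y = \Phi x. \qquad (1)$$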

Equation (1) is an underdetermined equation which usually has infinitely many solutions. However, with prior information on the signal sparsity and a condition imposed on the measurement matrix $\Phi$, the signal $x$ can be exactly reconstructed by solving the $\ell_1$ minimization problem [5]. The most commonly used condition on $\Phi$ is the restricted isometry property (RIP) [6].

Definition 1. RIP: For any K-sparse vector $x$, if there is always a constant $\delta \in (0, 1)$ that makes

$$(1 - \delta)\|x\|_2^2 \le \|\Phi x\|_2^2 \le (1 + \delta)\|x\|_2^2, \qquad (2)$$

then the measurement matrix $\Phi$ is said to satisfy the K-order RIP with $\delta$.

The minimum of all constants $\delta$ satisfying inequality (2) is referred to as the restricted isometry constant (RIC) $\delta_K$. Candès and Tao showed that exact reconstruction can be achieved from certain measurements provided that $\Phi$ satisfies the RIP.
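The two-sided bound in Definition 1 can be probed numerically. The following is a minimal sketch, not part of the original analysis: it draws random K-sparse vectors and records the largest observed deviation of $\|\Phi x\|_2^2 / \|x\|_2^2$ from 1. Because only a random subset of supports is visited, the result is merely an empirical lower bound on the RIC and cannot certify the RIP. The function name `empirical_isometry_deviation`, the Gaussian test matrix, and the sizes `M`, `N`, `K` are illustrative assumptions.

```python
import numpy as np

def empirical_isometry_deviation(Phi, K, trials=2000, seed=0):
    """Largest observed | ||Phi x||^2 / ||x||^2 - 1 | over random K-sparse x.

    This only samples supports, so it is a lower bound on the true RIC.
    """
    rng = np.random.default_rng(seed)
    N = Phi.shape[1]
    worst = 0.0
    for _ in range(trials):
        support = rng.choice(N, size=K, replace=False)  # random sparsity pattern
        x = np.zeros(N)
        x[support] = rng.standard_normal(K)             # random non-zero amplitudes
        ratio = np.sum((Phi @ x) ** 2) / np.sum(x ** 2)
        worst = max(worst, abs(ratio - 1.0))
    return worst

# Hypothetical sizes; a Gaussian matrix with variance 1/M is used as a stand-in.
rng = np.random.default_rng(1)
M, N, K = 64, 256, 4
Phi = rng.standard_normal((M, N)) / np.sqrt(M)
delta_hat = empirical_isometry_deviation(Phi, K)
print(f"empirical lower bound on the RIC: {delta_hat:.3f}")
```

A value below 1 is consistent with inequality (2) on the sampled vectors only; it says nothing about supports that were not drawn.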

Constructing a proper measurement matrix is an essential issue in CS. The measurement matrix significantly influences not only the reconstruction performance but also the complexity of the hardware implementation. Measurement matrices can be divided into random ones and deterministic ones. The Gaussian random matrix [7] and the Bernoulli random matrix [8] are frequently used because they satisfy the RIP and are uncorrelated with most sparse domains. They guarantee good reconstruction performance but pose challenges for hardware, such as the required storage space and the system design [9]. In contrast, deterministic measurement matrices need little storage space and are convenient for engineering design. Nevertheless, commonly used deterministic measurement matrices such as the deterministic polynomial matrix [10] and the Fourier matrix [11] are correlated with certain sparse domains, which restricts their practical application.

To solve these problems, the Logistic chaotic sequence is employed in [12] to construct the measurement matrix, called the Logistic chaotic measurement matrix. Owing to the inherent pseudo-random property of a chaotic system, the Logistic chaotic measurement matrix possesses the advantages of random matrices and overcomes the shortcomings of the above deterministic matrices. This kind of matrix is used for secure data collection in wireless sensor networks in [13] and for speech signal compression in [14]. In [15], the Tent chaotic sequence is employed in constructing the measurement matrix. In [16], Zhou and Jing construct the measurement matrix with a composite chaotic sequence generated by combining Logistic and Tent chaos. Compared with the Gaussian random matrix, the chaotic measurement matrices have lower hardware complexity and better reconstruction performance. However, large sample distances (at least 15 [17,18]) are required to obtain uncorrelated samples from the above chaotic sequences when constructing a chaotic measurement matrix. When the measurement matrix is large, a great deal of unused data is generated, which wastes system resources. In [19], the Chebyshev chaotic sequence is transformed into a new sequence whose elements obey a Gaussian distribution. The new sequence is employed in constructing a measurement matrix that satisfies the RIP with high probability. This method avoids sampling the chaotic sequence, but the measurement matrix does not significantly improve the reconstruction performance.
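To illustrate why a sample distance matters, here is a minimal sketch that iterates the Logistic map with system parameter 4 (the value used in Section 4) and compares a simple high-order statistic at sample distances 1 and 15. The statistic (correlation between $z_n^2$ and $z_{n+1}$), the initial value, and the sequence length are illustrative assumptions, not the test procedure of [17,18].

```python
import numpy as np

def logistic_sequence(x0, length, mu=4.0):
    """Iterate the Logistic map x_{n+1} = mu * x_n * (1 - x_n)."""
    seq = np.empty(length)
    x = x0
    for i in range(length):
        x = mu * x * (1.0 - x)
        seq[i] = x
    return seq

def second_order_corr(z):
    """Sample correlation between z_n^2 and z_{n+1} (one simple high-order statistic)."""
    return float(np.corrcoef(z[:-1] ** 2, z[1:])[0, 1])

# x0 = 0.3 and the length are hypothetical choices for illustration.
seq = logistic_sequence(x0=0.3, length=150_000)
for d in (1, 15):
    sampled = seq[::d]  # keep every d-th element, discard the rest
    print(f"d={d:2d}  kept={len(sampled):6d}  corr(z_n^2, z_n+1)={second_order_corr(sampled):+.3f}")
```

With distance 15, only one element in fifteen is kept, which is the resource cost described above; the statistic is expected to be clearly non-zero at distance 1 and close to zero at distance 15.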

In this paper, we propose a method of constructing the measurement matrix by the Chebyshev chaotic sequence. The primary contributions are twofold:

We analyze the high-order correlations among the elements sampled from the Chebyshev chaotic sequence.

We use the sampled elements to construct a measurement matrix, termed the Chebyshev chaotic measurement matrix. Based on the assumption that the elements are statistically independent, we prove that the Chebyshev chaotic measurement matrix satisfies the RIP with high probability.

The remainder of this paper is organized as follows. In Section 2, we describe the expression of the Chebyshev chaotic sequence and analyze the high-order correlations among elements sampled from the Chebyshev chaotic sequence. In Section 3, we present the construction method of the Chebyshev chaotic measurement matrix and analyze its probability of satisfying the RIP. In Section 4, simulations are carried out to verify the effectiveness of the Chebyshev chaotic matrix. Finally, the conclusion is drawn.

3. Construction of Chebyshev Chaotic Measurement Matrix and RIP Analysis

3.1. Construction of Chebyshev Chaotic Measurement Matrix

Denote as the sequence extracted from with sample distance . We use to construct a Chebyshev chaotic measurement matrix as shown in Algorithm 1.

| Algorithm 1. The method of constructing the Chebyshev chaotic measurement matrix. |

Input: the number of rows , the number of columns , order , initial value .

Output: measurement matrix |

| 1. | Determine the sample distance ;

|

| 2. | Generate the Chebyshev chaotic sequence of length ; |

| 3. | Sample with and get ; |

| 4. | Use to construct a Chebyshev chaotic measurement matrix as

|

| 5. | Return measurement matrix |

In step 4, is the normalization factor. When the order number, initial value, and sample distance are set, is determined.
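The steps of Algorithm 1 can be sketched as follows. Several details are assumptions made only for this illustration, since the corresponding symbols are not available here: the Chebyshev map is taken in its usual form $x_{n+1} = \cos(w \arccos x_n)$, the sampled elements fill the matrix column by column, and the normalization factor is taken as $\sqrt{2/M}$ (the invariant density of the Chebyshev map has variance 1/2, so this gives columns of roughly unit norm). The order 8 follows Section 4; the initial value 0.3 and sample distance `d=2` are hypothetical.

```python
import numpy as np

def chebyshev_sequence(x0, order, length):
    """Assumed map: x_{n+1} = cos(order * arccos(x_n)), with x in [-1, 1]."""
    seq = np.empty(length)
    x = x0
    for i in range(length):
        x = np.cos(order * np.arccos(x))
        seq[i] = x
    return seq

def chebyshev_measurement_matrix(M, N, order=8, x0=0.3, d=2):
    """Sketch of Algorithm 1 under the assumptions stated in the lead-in."""
    # Step 2: generate a sequence long enough to yield M*N samples at distance d.
    seq = chebyshev_sequence(x0, order, M * N * d)
    # Step 3: keep every d-th element.
    samples = seq[::d][: M * N]
    # Step 4: fill the matrix column by column and normalize (assumed factor sqrt(2/M)).
    return np.sqrt(2.0 / M) * samples.reshape((M, N), order="F")

Phi = chebyshev_measurement_matrix(M=64, N=128)
print(Phi.shape, float(np.mean(np.sum(Phi ** 2, axis=0))))
```

Because the matrix is fully determined by the order, the initial value, and the sample distance, only these few parameters need to be stored rather than all M×N entries.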

3.2. RIP Analysis

The RIP is a sufficient condition on the measurement matrix for guaranteed recovery. However, certifying the RIP is NP-hard, so we instead analyze the performance of the measurement matrix by calculating the probability that it satisfies the RIP.

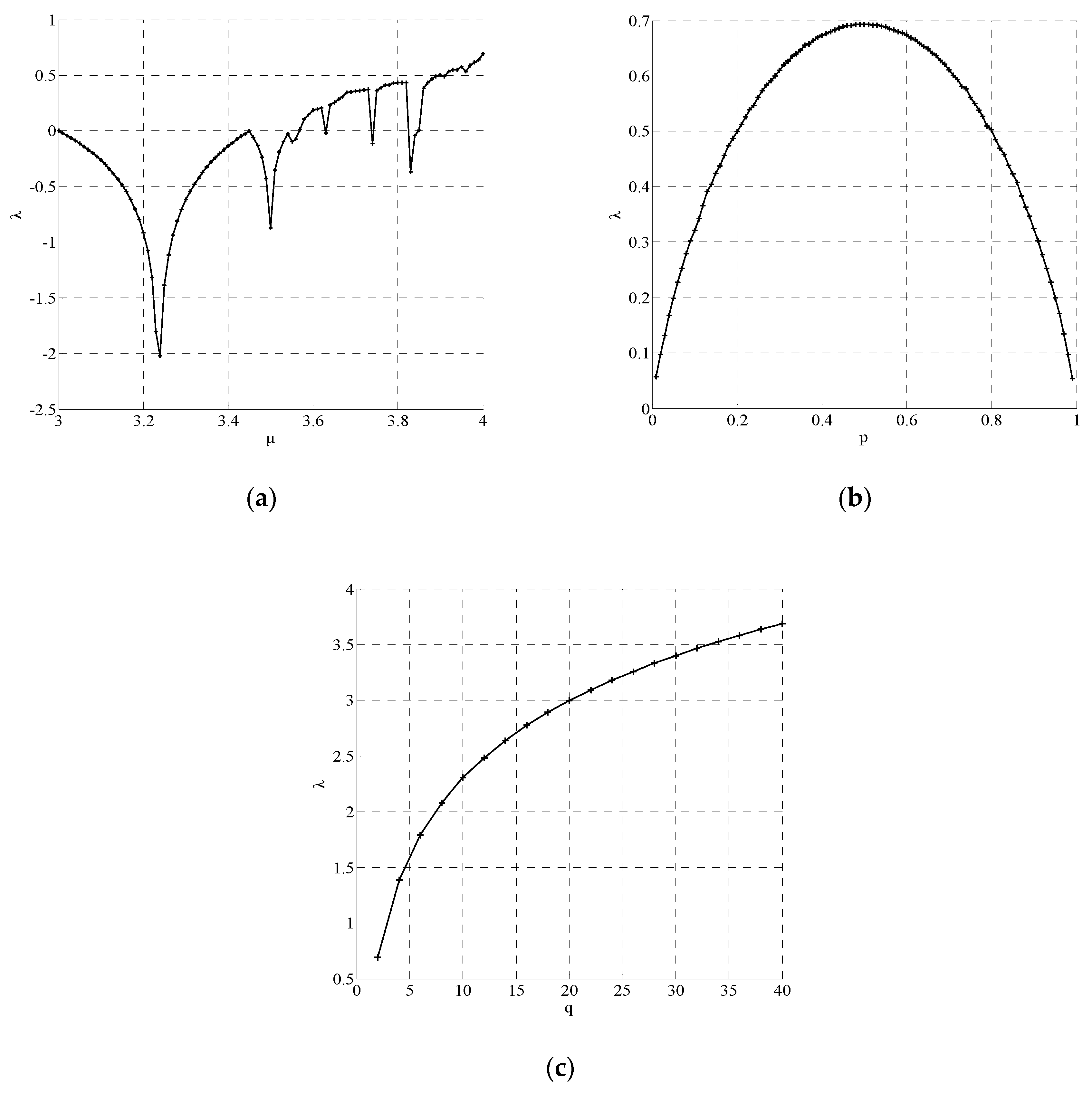

When , the adjacent elements of share the same high-order correlations with the elements sampled from the Logistic chaotic sequence with . In addition, the test procedures show that the sampled elements of the Logistic chaotic sequence are statistically independent when . Hence, we assume that the elements of are statistically independent. Based on this assumption, we calculate the minimum of the probability that our proposed measurement matrix satisfies the RIP and show that the minimum is close to 1 provided some parameters are suitably valued. The main result is shown in Theorem 2.

Theorem 2. The Chebyshev chaotic measurement matrix constructed by Algorithm 1 satisfies the K-order RIP with high probability provided.

Before proving Theorem 2, we briefly summarize some useful notations. Denote as the position of non-zero elements in and as the normalized vector composed of non-zero elements in . is a submatrix composed of that only contains columns indexed by . Rewrite as , where denotes a vector formed by multiplying the elements positioned at in the th row of by , and . Let , , and . Denote as one complementary event of the condition in inequality (2), where . is the union of all possible complementary events. The idea of proving Theorem 2 is as follows: First, calculate the probability of , which is denoted as . Then, compute the probability of , denoted as . Finally, the probability of satisfying the RIP is .

From the definition of

, we have

where

and

holds for any

. According to Markov inequality, the following inequality holds

Noting that the elements of

are statistically independent of each other, it is evident that

and

are independent of each other for

,

. With

, the inequality can be rewritten as

By expanding

into the form of a second-order Lagrange remainder,

holds, where

is the Lagrange remainder. It is straightforward to verify

. Accordingly, we have

and

To calculate the probabilities in inequalities (10) and (12), let us introduce some useful lemmas.

Lemma 1. Denote , i.i.d. as random variables having a probability distribution like Equation (4). For any real numbers , , let , then for all and , we have

Lemma 2. Let be a unit vector. For arbitrary and , we have

Lemma 3. Let and . Then, for arbitrary , we have

Recall . For arbitrary , the Taylor series expansion of is . In the same way, . Applying Lemmas 2 and 3 gives . Hence, holds.

Now, we calculate the probabilities in inequalities (10) and (12). Let

, we get

As

and

, we have

According to Boole’s inequality, we get

Let

and

, we have

Let

. Then,

satisfies the RIP with a probability of

Indeed, choosing as sufficiently small, we always have and high . For instance, let and , the probability of satisfying the K-order RIP with is no less than 95% when .

4. Results and Discussion

When a measurement matrix is applied in CS, it is expected to lead to good reconstruction performance. In this section, we examine the performance of the Chebyshev chaotic measurement matrix and compare it with the Gaussian random matrix and the well-established similar matrices in [12,15,16,19] by presenting the empirical results obtained from a series of CS reconstruction simulations. For convenience, the matrices are denoted as Propose, Gaussian, Logistic, Tent, Composite, and the matrix in [19]. The test includes the following steps. First, generate the synthetic sparse signals

and construct the measurement matrix

. Then, obtain the measurement vector

by

. Last, reconstruct the signals by approximating the solution of

.

adopted throughout this section only contains

K non-zero entries with length

. The locations and amplitudes of the peaks follow a Gaussian distribution. The proposed measurement matrix is constructed by the method shown in Algorithm 1. The orthogonal matching pursuit (OMP) algorithm [24] is selected as the reconstruction approach; it iteratively builds up an approximation of

. The system parameters in Logistic and Tent chaos are 4 and 0.5, respectively, and the order of Chebyshev chaos is 8. The sample distance is set as

in Logistic, Tent, and Composite, and

in Propose. Each experiment is performed for 1000 random sparse ensembles. The initial value

is randomly set for each ensemble and the performance is averaged over sequences with different initial values. Denote

as the reconstruction error. The reconstruction is identified as a success, namely exact reconstruction, provided

. Denote

as the percentage of successful reconstruction.
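The test procedure above can be sketched in a few lines. This is a minimal illustration under stated assumptions rather than the simulation code behind the figures: the OMP routine is a textbook version, a Gaussian matrix stands in for the matrices under comparison, the reconstruction error is taken as the relative $\ell_2$ error, and the success threshold `tol`, the sizes, and the number of trials are hypothetical.

```python
import numpy as np

def omp(Phi, y, K):
    """Textbook OMP: greedily pick K columns, refit on the support by least squares."""
    N = Phi.shape[1]
    col_norms = np.linalg.norm(Phi, axis=0)
    support, coef = [], np.zeros(0)
    residual = y.copy()
    for _ in range(K):
        scores = np.abs(Phi.T @ residual) / col_norms  # normalized correlations
        if support:
            scores[support] = 0.0                       # never pick a column twice
        support.append(int(np.argmax(scores)))
        coef, _, _, _ = np.linalg.lstsq(Phi[:, support], y, rcond=None)
        residual = y - Phi[:, support] @ coef
    x_hat = np.zeros(N)
    x_hat[support] = coef
    return x_hat

def success_rate(M, N, K, trials, tol, rng):
    """Fraction of trials whose relative error falls below tol (assumed criterion)."""
    hits = 0
    for _ in range(trials):
        Phi = rng.standard_normal((M, N)) / np.sqrt(M)  # Gaussian stand-in matrix
        x = np.zeros(N)
        x[rng.choice(N, size=K, replace=False)] = rng.standard_normal(K)
        x_hat = omp(Phi, Phi @ x, K)
        err = np.linalg.norm(x - x_hat) / np.linalg.norm(x)
        hits += int(err < tol)
    return hits / trials

rate = success_rate(M=64, N=256, K=4, trials=100, tol=1e-6,
                    rng=np.random.default_rng(0))
print(f"fraction of exact reconstructions: {rate:.2f}")
```

Replacing the Gaussian stand-in with another matrix constructor is enough to compare matrices under the same sparse ensembles.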

Case 1. Comparison of the percentage of exact reconstruction in the noiseless case.

In this case, we conduct two separate CS experiments, first by fixing and varying from 2 to 14, and second by fixing and varying from 20 to 60.

Figure 4 illustrates the change tendency of

with argument

while

in the noiseless case. The figure shows that

decreases with the increase in

K. From inequalities (20) and (21), it can be seen that the upper bound of

increases and the lower bound of

decreases when

K increases. The probability that the measurement matrix satisfies the RIP is therefore reduced, which works against exact reconstruction.

Figure 5 illustrates the change tendency of

with argument

while

in the noiseless case. As can be seen from the figure,

increases with

. According to inequalities (20) and (21),

decreases and the lower bound of

increases when

increases. The increase in the probability of the measurement matrix satisfying the RIP is beneficial to the exact reconstruction.

Figure 4 and Figure 5 reveal that the percentage of exact reconstruction of the proposed measurement matrix is significantly higher than that of Gaussian and the matrix in [19], slightly higher than that of Tent, and almost the same as that of Logistic and Composite.

Case 2. Comparison of the reconstruction error in the noisy case.

In this case, we consider the noisy model

, where

is the vector of additive Gaussian noise with zero means. We conduct the CS experiment by fixing

,

, and varying the signal-to-noise ratio (SNR) from 10 to 50. Figure 6 shows that the errors decrease as the SNR increases, and that the error of the proposed measurement matrix is smaller than that of Gaussian and the matrix in [19], slightly smaller than that of Tent, and almost the same as that of Logistic and Composite.
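For the noisy model, the noise level has to be tied to a target SNR. The sketch below shows one common way to do this, assuming the SNR is expressed in decibels and defined as the ratio of average measurement power to average noise power; both the definition and the helper name `add_noise_at_snr` are assumptions for illustration.

```python
import numpy as np

def add_noise_at_snr(y, snr_db, rng):
    """Add zero-mean Gaussian noise scaled to a target SNR (assumed to be in dB)."""
    signal_power = np.mean(y ** 2)
    noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    noise = rng.standard_normal(y.shape) * np.sqrt(noise_power)
    return y + noise, noise

rng = np.random.default_rng(0)
y = rng.standard_normal(100_000)  # stand-in for a measurement vector
for snr_db in (10, 30, 50):
    y_noisy, noise = add_noise_at_snr(y, snr_db, rng)
    measured = 10.0 * np.log10(np.mean(y ** 2) / np.mean(noise ** 2))
    print(f"target {snr_db} dB, measured {measured:.2f} dB")
```

For short measurement vectors the realized SNR fluctuates around the target, so averaging over many ensembles, as done in this section, is advisable.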

When noise is included in the measurements, the reconstruction errors increase greatly. In this situation, reconstruction algorithms with anti-noise ability can be used, such as the adaptive iterative threshold (AIT) algorithm and the entropy minimization-based matching pursuit (EMP) algorithm; see [25,26] for details.

As shown in the simulations, a good reconstruction performance is obtained when the Chebyshev chaotic matrix is applied in CS. This coincides with the theoretical result of Section 3.2 that the Chebyshev chaotic matrix satisfies the RIP with high probability. Recall from Lemma 4 that , where . It is clear that the maximums of and are achievable only if . That is to say, the lower bound of the probability that the proposed measurement matrix satisfies the RIP is no less than that of the Gaussian random matrix. Consistent with this analysis, our proposed measurement matrix outperforms the Gaussian random matrix in the simulations.

As mentioned in the Introduction, Zhu et al. [19] transformed the Chebyshev chaotic sequence into a new sequence whose elements obey a Gaussian distribution and applied the new sequence to construct the measurement matrix. In fact, this measurement matrix is similar to the Gaussian random matrix in terms of the distribution of its elements. This coincides with the simulations, in which the reconstruction performance of OMP with the matrix in [19] is similar to that of OMP with the Gaussian random matrix. Accordingly, our proposed measurement matrix outperforms the matrix in [19].

The simulations reveal that the proposed measurement matrix achieves the same reconstruction performance as Logistic, Tent, and Composite. In fact, these chaotic measurement matrices share a similar independence among the elements and a similar probability of satisfying the RIP. To construct those measurement matrices, uncorrelated samples need to be extracted from the chaotic sequences at certain sample distances. The length of the chaotic sequence is usually no less than . The larger the sample distance, the longer the chaotic sequence and the larger the resource consumption. Since the sample distance of the Chebyshev chaotic sequence is greatly reduced, the proposed measurement matrix can effectively reduce the consumption of system resources.