Figure 1.

Representations of the audio stimuli in the time domain and the corresponding spectral representations. (a) Recordings of the 1 kHz tone from the 34 microphones: time domain; (b) Recordings of the 1 kHz tone from the 34 microphones: frequency domain (magnitude of the complex spectrum); (c) Recordings of the pneumatic hammer from the 34 microphones: time domain; (d) Recordings of the pneumatic hammer from the 34 microphones: frequency domain (magnitude of the complex spectrum); (e) Recordings of the gunshot from the 34 microphones: time domain; (f) Recordings of the gunshot from the 34 microphones: frequency domain (magnitude of the complex spectrum).

Figure 2.

Time-domain representation of the recordings from phone 22 for the 1 kHz tone (1 K), pneumatic hammer (PNE), and gunshot (GUNSHOT) audio stimulus datasets. (a) 1 K; (b) PNE; (c) GUNSHOT.

Figure 3.

Overall methodology for microphone identification.

Figure 4.

Sample Entropy and Approximate Entropy as a function of the ratio between r and the standard deviation for the PNE, GUNSHOT, and 1 K datasets. (a) Sample Entropy versus the r factor, PNE dataset; (b) Approximate Entropy versus the r factor, PNE dataset; (c) Sample Entropy versus the r factor, GUNSHOT dataset; (d) Approximate Entropy versus the r factor, GUNSHOT dataset; (e) Sample Entropy versus the r factor, 1 K dataset; (f) Approximate Entropy versus the r factor, 1 K dataset.
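Both measures in Figure 4 depend on a tolerance r expressed as a multiple of the signal's standard deviation. The following is a minimal pure-Python sketch of the textbook definitions (Pincus's Approximate Entropy and Richman and Moorman's Sample Entropy, Chebyshev distance, delay 1). The function names and the `r_ratio` parameter are illustrative assumptions, not the authors' implementation, and the quadratic-time loops are only suitable for short segments.

```python
import math
from statistics import pstdev


def _chebyshev(a, b):
    """Maximum absolute difference between two equal-length templates."""
    return max(abs(p - q) for p, q in zip(a, b))


def approximate_entropy(x, m=2, r_ratio=0.2):
    """ApEn = Phi_m(r) - Phi_{m+1}(r), with r = r_ratio * std(x) (assumed convention).
    Self-matches are counted, so log() never receives zero."""
    r = r_ratio * pstdev(x)

    def phi(k):
        templates = [x[i:i + k] for i in range(len(x) - k + 1)]
        n = len(templates)
        total = 0.0
        for a in templates:
            matches = sum(1 for b in templates if _chebyshev(a, b) <= r)
            total += math.log(matches / n)
        return total / n

    return phi(m) - phi(m + 1)


def sample_entropy(x, m=2, r_ratio=0.2):
    """SampEn = -ln(A / B): B and A count template pairs of length m and m + 1
    within r (self-matches excluded), over the same N - m start indices."""
    r = r_ratio * pstdev(x)
    n = len(x)

    def count_pairs(k):
        templates = [x[i:i + k] for i in range(n - m)]
        pairs = 0
        for i in range(len(templates)):
            for j in range(i + 1, len(templates)):
                if _chebyshev(templates[i], templates[j]) <= r:
                    pairs += 1
        return pairs

    b, a = count_pairs(m), count_pairs(m + 1)
    if a == 0 or b == 0:
        return math.inf  # no matching templates: SampEn is undefined or unbounded
    return -math.log(a / b)
```

A curve like those in Figure 4 can then be traced by evaluating, for example, `[sample_entropy(x, 2, k) for k in (0.1, 0.2, 0.3, 0.4)]` on a recording segment `x`.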

Figure 5.

Optimal features for all datasets using ReliefF and Neighborhood Component Analysis (NCA) for . (a) Histogram of the ten best (highest-ranking) features selected with ReliefF and , 1 K dataset; (b) Histogram of the ten best (highest-ranking) features selected with NCA and , 1 K dataset; (c) Histogram of the ten best (highest-ranking) features selected with ReliefF and , PNE dataset; (d) Histogram of the ten best (highest-ranking) features selected with NCA and , PNE dataset; (e) Histogram of the ten best (highest-ranking) features selected with ReliefF and , GUNSHOT dataset; (f) Histogram of the ten best (highest-ranking) features selected with NCA and , GUNSHOT dataset.

Figure 6.

Comparison of the accuracy obtained with different sets of features for the 1 K, PNE, and GUNSHOT datasets for decreasing values of the Signal-to-Noise Ratio (SNR, expressed in dB). (a) Accuracy for the 1 K dataset with different sets of features; (b) Accuracy for the PNE dataset with different sets of features; (c) Accuracy for the GUNSHOT dataset with different sets of features.
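The SNR sweep requires corrupting each recording with noise at a prescribed level. The captions do not state how the noise was generated; the sketch below assumes additive white Gaussian noise whose power is derived from the measured mean power of the clean signal, so that 10·log10(P_signal/P_noise) equals the target SNR in dB. `add_noise_at_snr` and `measured_snr_db` are hypothetical helper names.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, seed=None):
    """Return signal plus white Gaussian noise (assumed noise model) scaled so that
    10*log10(P_signal / P_noise) = snr_db, with P the mean squared value."""
    rng = random.Random(seed)
    p_signal = sum(s * s for s in signal) / len(signal)
    p_noise = p_signal / 10 ** (snr_db / 10)
    sigma = math.sqrt(p_noise)
    return [s + rng.gauss(0.0, sigma) for s in signal]


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of a noisy copy with respect to its clean reference."""
    p_signal = sum(c * c for c in clean) / len(clean)
    p_noise = sum((n - c) ** 2 for c, n in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(p_signal / p_noise)
```

Because the noise power is a ratio of the measured signal power, the same function serves stimuli with very different loudness, such as the 1 kHz tone and the gunshot.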

Figure 7.

Comparison of the accuracy obtained for the PNE and GUNSHOT datasets for decreasing values of SNR expressed in dB and different values of DR. (a) PNE dataset with , and ; (b) GUNSHOT dataset with , and .

Figure 8.

Comparison of the accuracy obtained for the PNE dataset at SNR = 60 dB and SNR = 5 dB for different sets of features and different values of . (a) PNE dataset at SNR = 60 dB; (b) PNE dataset at SNR = 5 dB.

Figure 8.

Comparison of the accuracy obtained for the PNE dataset at SNR = 60 dB and SNR = 5 dB for for different set of features and for different values of . (a) PNE dataset at SNR = 60 dB; (b) PNE dataset at SNR = 5 dB.

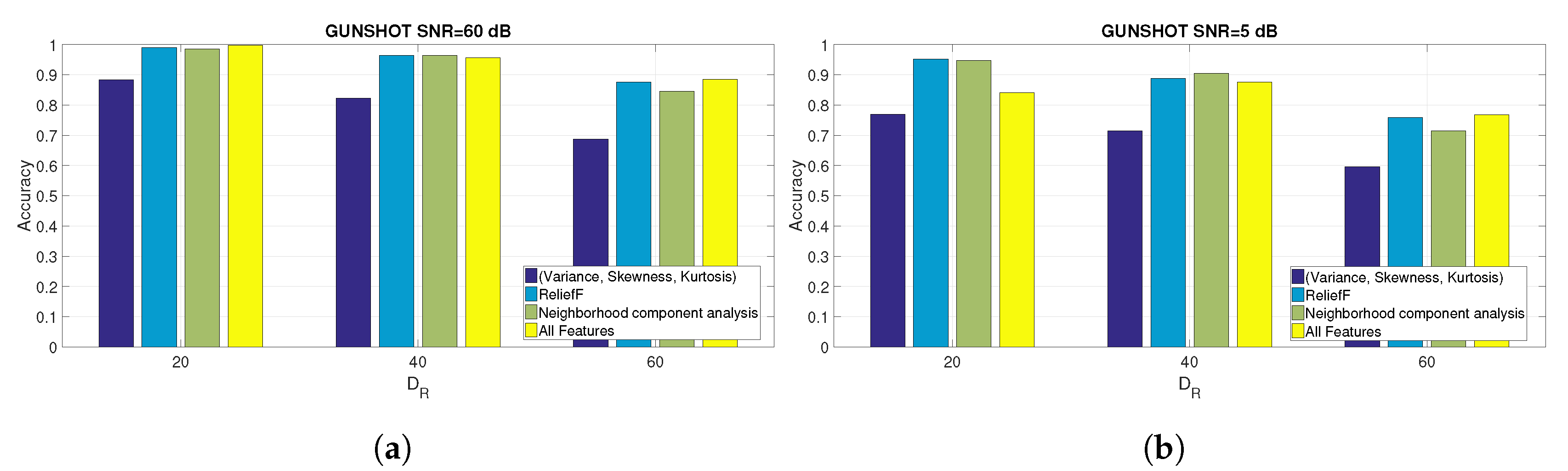

Figure 9.

Comparison of the accuracy obtained for the GUNSHOT dataset at SNR = 60 dB and SNR = 5 dB for different sets of features and different values of . (a) GUNSHOT dataset at SNR = 60 dB; (b) GUNSHOT dataset at SNR = 5 dB.

Figure 10.

Comparison of the three machine learning algorithms for the three considered datasets. (a) Accuracy of SVM, KNN, and DT on the 1 K dataset with all features for ; (b) Accuracy of SVM, KNN, and DT on the PNE dataset with all features for ; (c) Accuracy of SVM, KNN, and DT on the GUNSHOT dataset with all features for .

Figure 11.

Computing time (application of the entropy measures plus classification time) for all datasets and values of using the Support Vector Machine (SVM) algorithm and all features. (a) Application of the entropy measures plus training time; (b) Application of the entropy measures plus test time.

Figure 12.

Confusion matrices for the three datasets using all features. The y-axis represents the true classes and the x-axis the predicted classes. (a) Confusion matrix with and SNR = 10 dB, 1 K dataset; (b) Confusion matrix with and SNR = −5 dB, 1 K dataset; (c) Confusion matrix with and SNR = 10 dB, PNE dataset; (d) Confusion matrix with and SNR = −5 dB, PNE dataset; (e) Confusion matrix with and SNR = 10 dB, GUNSHOT dataset; (f) Confusion matrix with and SNR = −5 dB, GUNSHOT dataset.

Figure 13.

Confusion matrices for the three datasets with the best features selected using the ReliefF approach. The y-axis represents the true classes and the x-axis the predicted classes. (a) Confusion matrix with and SNR = 10 dB, 1 K dataset; (b) Confusion matrix with and SNR = −5 dB, 1 K dataset; (c) Confusion matrix with and SNR = 10 dB, PNE dataset; (d) Confusion matrix with and SNR = 5 dB, PNE dataset; (e) Confusion matrix with and SNR = 10 dB, GUNSHOT dataset; (f) Confusion matrix with and SNR = 5 dB, GUNSHOT dataset.

Figure 14.

Receiver Operating Characteristic (ROC) curves between different pairs of microphones with and different datasets, using the features selected with NCA. SVM is used for binary classification. (a) ROC between microphones 1 and 2 (Samsung Ace), 1 K dataset; (b) ROC between microphones 1 and 24 (Samsung Ace and HTC One X), 1 K dataset; (c) ROC between microphones 1 and 2 (Samsung Ace), PNE dataset; (d) ROC between microphones 1 and 24 (Samsung Ace and HTC One X), PNE dataset; (e) ROC between microphones 1 and 2 (Samsung Ace), GUNSHOT dataset; (f) ROC between microphones 1 and 24 (Samsung Ace and HTC One X), GUNSHOT dataset.
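Each ROC curve is obtained by sweeping a decision threshold over the scores that the binary classifier assigns to the test samples of the two microphones. A minimal sketch, assuming the scores are already available (for example, signed distances to the SVM hyperplane) and that label 1 marks the positive microphone; `roc_points` and `auc` are illustrative names, and the area is computed with the trapezoidal rule.

```python
def roc_points(scores, labels):
    """(FPR, TPR) points obtained by lowering the decision threshold over the scores.
    labels: 1 = positive microphone, 0 = the other microphone (assumed encoding)."""
    positives = sum(labels)
    negatives = len(labels) - positives
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # samples with identical scores cross the threshold together
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve with the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For two microphones of the same model (for example, 1 and 2), weaker separation would show up as a curve closer to the diagonal and an area closer to 0.5.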

Table 1.

List of the 34 mobile phones used in the experiments with the corresponding identifiers (IDs).

| Mobile Phones | IDs | Quantity |
|---|---|---|
| Samsung ACE | 1 to 23 | 23 |
| HTC One X | 24 to 26 | 3 |
| Samsung Galaxy S5 | 27 to 29 | 3 |
| Sony Xperia | 30 to 32 | 3 |
| Google Nexus | 33 to 34 | 2 |
| Total | | 34 |

Table 2.

Hyperparameters of the machine learning algorithms.

| Machine Learning Algorithm | First Hyperparameter | Second Hyperparameter |
|---|---|---|
| 1 K dataset | | |
| SVM | RBF scaling factor (range: to ) | C factor = (range: to ) |
| KNN | K = 2 (range: 1 to 10) | Distance metric = Euclidean distance (choices: Chebyshev, Euclidean, Minkowski) |
| Decision Tree | Maximum number of splits = 10 (range: 2 to 12) | Split criterion = Gini’s diversity index (choices: Gini index and cross-entropy) |
| PNE dataset | | |
| SVM | RBF scaling factor (range: to ) | C factor = (range: to ) |
| KNN | K = 1 (range: 1 to 10) | Distance metric = Euclidean distance (choices: Chebyshev, Euclidean, Minkowski) |
| Decision Tree | Maximum number of splits = 8 (range: 2 to 12) | Split criterion = Gini’s diversity index (choices: Gini index and cross-entropy) |
| GUNSHOT dataset | | |
| SVM | RBF scaling factor (range: to ) | C factor = (range: to ) |
| KNN | K = 1 (range: 1 to 10) | Distance metric = Euclidean distance (choices: Chebyshev, Euclidean, Minkowski) |
| Decision Tree | Maximum number of splits = 8 (range: 2 to 12) | Split criterion = Gini’s diversity index (choices: Gini index and cross-entropy) |
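The KNN configurations above use K = 1 or K = 2 with the Euclidean distance. As a minimal illustration (not the toolbox implementation used in the experiments), nearest-neighbour classification of entropy feature vectors reduces to a sorted distance search followed by a majority vote. The function names and the feature vectors and microphone labels in the test are made up for the example.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=1):
    """Label of query by majority vote among its k nearest training vectors (Euclidean)."""
    order = sorted(range(len(train_x)), key=lambda i: math.dist(train_x[i], query))
    votes = Counter(train_y[i] for i in order[:k])
    # most_common keeps insertion order on ties, so the nearer neighbour wins a tie
    return votes.most_common(1)[0][0]


def knn_accuracy(train_x, train_y, test_x, test_y, k=1):
    """Fraction of test vectors whose predicted label matches the true label."""
    hits = sum(knn_predict(train_x, train_y, q, k) == t for q, t in zip(test_x, test_y))
    return hits / len(test_y)
```

With K = 2, a two-way tie is resolved here in favour of the closest neighbour; other implementations may break ties differently, which is one reason small K values can behave differently across toolboxes.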

Table 3.

Combinations of m and c used for each value of to define the applied entropy measures.

| Parameters Combination |
|---|
| , , , |
| , |
| , |
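Table 3 lists combinations of m and c. Assuming, as in the usual definition of Dispersion Entropy (Rostaghi and Azami), that m is the embedding dimension and c the number of classes, the measure can be sketched in pure Python as follows. The delay of 1, the defaults m = 2 and c = 6, and the normalization by ln(c^m) are illustrative assumptions, not values recovered from the table.

```python
import math
from collections import Counter
from statistics import fmean, pstdev


def dispersion_entropy(x, m=2, c=6, delay=1, normalize=True):
    """Dispersion Entropy; the m, c and delay defaults are illustrative assumptions."""
    mu, sigma = fmean(x), pstdev(x)  # sigma must be > 0 (non-constant signal)
    # 1) the normal CDF maps every sample into (0, 1)
    y = [0.5 * (1.0 + math.erf((v - mu) / (sigma * math.sqrt(2.0)))) for v in x]
    # 2) linear mapping to integer classes 1..c (clamped at the edges);
    #    Python's round() sends exact halves to even, which only affects class boundaries
    z = [min(max(round(c * v + 0.5), 1), c) for v in y]
    # 3) count the dispersion patterns of length m
    patterns = Counter(
        tuple(z[i + j * delay] for j in range(m))
        for i in range(len(z) - (m - 1) * delay)
    )
    total = sum(patterns.values())
    h = -sum((n / total) * math.log(n / total) for n in patterns.values())
    # 4) optional normalization by ln(c^m), the largest attainable value
    return h / math.log(c ** m) if normalize else h
```

A strictly alternating signal produces only two dispersion patterns and therefore a low normalized value, whereas white noise spreads its patterns almost uniformly over the c^m possibilities and approaches 1.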

Table 4.

List of features used in the analysis with .

| Feature ID | Description of the Feature | Hyperparameter |
|---|---|---|
| 1 | Standard Deviation | None |
| 2 | Skewness | None |
| 3 | Kurtosis | None |
| 4 | Rényi Entropy | Order 2 |
| 5 | Rényi Entropy | Order 3 |
| 6 | Rényi Entropy | Order 4 |
| 7 | Shannon Entropy | None |
| 8, 24, 25, 41 | Dispersion Entropy | (), (), (), and () |
| 9, 26 | Permutation Entropy | () and () |
| 10, 27 | Approximate Entropy | () and (), r = 0.03 × standard deviation |
| 11, 28 | Approximate Entropy | () and (), r = 0.05 × standard deviation |
| 12, 29 | Approximate Entropy | () and (), r = 0.1 × standard deviation |
| 13, 30 | Approximate Entropy | () and (), r = 0.2 × standard deviation |
| 14, 31 | Approximate Entropy | () and (), r = 0.3 × standard deviation |
| 15, 32 | Approximate Entropy | () and (), r = 0.4 × standard deviation |
| 16, 33 | Sample Entropy | () and (), r = 0.1 × standard deviation |
| 17, 34 | Sample Entropy | () and (), r = 0.2 × standard deviation |
| 18, 35 | Sample Entropy | () and (), r = 0.1 × standard deviation |
| 19, 36 | Sample Entropy | () and (), r = 0.2 × standard deviation |
| 20, 37 | Fuzzy Entropy | () and (), r = 0.1 × standard deviation |
| 21, 38 | Fuzzy Entropy | () and (), r = 0.2 × standard deviation |
| 22, 39 | Fuzzy Entropy | () and (), r = 0.1 × standard deviation |
| 23, 40 | Fuzzy Entropy | () and (), r = 0.2 × standard deviation |
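Features 1 to 7 are simple descriptors of the amplitude distribution. The table does not specify how the probability distribution for the Shannon and Rényi entropies is estimated; the sketch below assumes an equal-width 64-bin amplitude histogram and base-2 logarithms, and uses population moments for the standard deviation, skewness, and (non-excess) kurtosis. All function names and the `bins` parameter are hypothetical.

```python
import math


def amplitude_probabilities(x, bins=64):
    """Non-zero bin probabilities of an equal-width amplitude histogram (bins is an assumption)."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / bins or 1.0  # guard against a constant signal
    counts = [0] * bins
    for v in x:
        counts[min(int((v - lo) / width), bins - 1)] += 1
    return [c / len(x) for c in counts if c]


def shannon_entropy(p):
    return -sum(q * math.log2(q) for q in p)


def renyi_entropy(p, alpha):
    """H_alpha = log2(sum p^alpha) / (1 - alpha), valid for alpha != 1."""
    return math.log2(sum(q ** alpha for q in p)) / (1 - alpha)


def moment_features(x):
    """Population standard deviation, skewness and (non-excess) kurtosis."""
    n = len(x)
    mu = sum(x) / n
    m2 = sum((v - mu) ** 2 for v in x) / n
    m3 = sum((v - mu) ** 3 for v in x) / n
    m4 = sum((v - mu) ** 4 for v in x) / n
    return math.sqrt(m2), m3 / m2 ** 1.5, m4 / m2 ** 2


def features_1_to_7(x, bins=64):
    """Feature IDs 1-7 in the order of the table (under the assumptions above)."""
    p = amplitude_probabilities(x, bins)
    std, skew, kurt = moment_features(x)
    return [std, skew, kurt,
            renyi_entropy(p, 2), renyi_entropy(p, 3), renyi_entropy(p, 4),
            shannon_entropy(p)]
```

Rényi entropy is non-increasing in its order, so features 4 to 6 weight the dominant histogram bins progressively more than the Shannon entropy (feature 7) does.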

Table 5.

List of features used in the analysis with and .

| Feature ID | Description of the Feature | Hyperparameter |
|---|---|---|
| 1 | Standard Deviation | None |
| 2 | Skewness | None |
| 3 | Kurtosis | None |
| 4 | Rényi Entropy | Order 2 |
| 5 | Rényi Entropy | Order 3 |
| 6 | Rényi Entropy | Order 4 |
| 7 | Shannon Entropy | None |
| 8, 24 | Dispersion Entropy | and |
| 9, 25 | Permutation Entropy | and |
| 10, 26 | Approximate Entropy | and , r = 0.03 × standard deviation |
| 11, 27 | Approximate Entropy | and , r = 0.05 × standard deviation |
| 12, 28 | Approximate Entropy | and , r = 0.1 × standard deviation |
| 13, 29 | Approximate Entropy | and , r = 0.2 × standard deviation |
| 14, 30 | Approximate Entropy | and , r = 0.3 × standard deviation |
| 15, 31 | Approximate Entropy | and , r = 0.4 × standard deviation |
| 16, 32 | Sample Entropy | and , r = 0.1 × standard deviation |
| 17, 33 | Sample Entropy | and , r = 0.2 × standard deviation |
| 18, 34 | Sample Entropy | and , r = 0.1 × standard deviation |
| 19, 35 | Sample Entropy | and , r = 0.2 × standard deviation |
| 20, 36 | Fuzzy Entropy | and , r = 0.1 × standard deviation |
| 21, 37 | Fuzzy Entropy | and , r = 0.2 × standard deviation |
| 22, 38 | Fuzzy Entropy | and , r = 0.1 × standard deviation |
| 23, 39 | Fuzzy Entropy | and , r = 0.2 × standard deviation |
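Permutation Entropy (Bandt and Pompe) appears in both feature lists with two parameter settings that are not recoverable from the tables. A minimal sketch, assuming order m = 3 and delay 1 by default and normalization by ln(m!) so that values fall in [0, 1]; the function name and defaults are illustrative.

```python
import math
from collections import Counter


def permutation_entropy(x, m=3, delay=1, normalize=True):
    """Bandt-Pompe Permutation Entropy of order m; defaults are illustrative assumptions."""
    counts = Counter()
    for i in range(len(x) - (m - 1) * delay):
        window = [x[i + j * delay] for j in range(m)]
        # ordinal pattern = argsort of the window; sorted() is stable, so ties keep their order
        counts[tuple(sorted(range(m), key=window.__getitem__))] += 1
    total = sum(counts.values())
    h = -sum((c / total) * math.log(c / total) for c in counts.values())
    return h / math.log(math.factorial(m)) if normalize else h
```

Only the ordering of the samples matters, so the measure ignores amplitude scaling, in contrast to the histogram-based Shannon and Rényi entropies.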

Table 6.

Optimal sets of features for the different datasets and different values of .

| Data Set, | Method | Optimal Feature Set |
|---|---|---|
| = 20 | | |
| 1 K | ReliefF | [4,5,6,7,12,13,14,15,31,32] |
| PNE | ReliefF | [1,2,3,5,6,7,8,13,27,29] |
| GUNSHOT | ReliefF | [1,2,4,5,6,7,12,24,26,29] |
| 1 K | NCA | [1,4,5,6,7,12,13,14,15,31,32] |
| PNE | NCA | [1,2,3,5,6,7,8,14,15,32] |
| GUNSHOT | NCA | [1,2,4,5,6,7,11,21,22,23] |
| = 40 | | |
| PNE | ReliefF | [1,2,5,7,8,9,16,17,19,35] |
| GUNSHOT | ReliefF | [1,2,5,6,7,8,14,16,35,37] |
| PNE | NCA | [1,2,5,6,7,8,9,14,30,32] |
| GUNSHOT | NCA | [1,4,5,6,7,12,13,14,29,30] |
| = 60 | | |
| PNE | ReliefF | [1,2,3,4,6,7,8,9,18,37] |
| GUNSHOT | ReliefF | [1,2,3,4,6,7,8,16,18,37] |
| PNE | NCA | [1,2,6,7,8,9,17,18,37,38] |
| GUNSHOT | NCA | [1,5,6,7,9,16,18,19,38,39] |

Table 7.

Accuracy results for different SNR values (in dB) and different sets of features. The highest values are highlighted in bold.

| Dataset and SNR Value (dB) | All Features | Selected Features with NCA (Best 10 Features) | Selected Features with ReliefF (Best 10 Features) | Baseline Statistical Features |
|---|---|---|---|---|
| | | | | |
| 1 K dB | **0.2015** | 0.1763 | 0.1768 | 0.1917 |
| 1 K dB | **0.6602** | 0.6399 | 0.6396 | 0.5943 |
| 1 K dB | **0.9816** | 0.9799 | 0.9791 | 0.9688 |
| 1 K dB | 0.9846 | **0.9851** | 0.9848 | 0.9748 |
| PNE dB | 0.371 | **0.4521** | 0.4436 | 0.3615 |
| PNE dB | 0.9863 | 0.9883 | **0.9915** | 0.9137 |
| PNE dB | 0.9971 | 0.9978 | **0.9982** | 0.9741 |
| PNE dB | 0.9974 | 0.9978 | **0.9982** | 0.9764 |
| GUNSHOT dB | 0.6274 | 0.6943 | **0.7039** | 0.5240 |
| GUNSHOT dB | 0.9696 | 0.9816 | **0.9857** | 0.8631 |
| GUNSHOT dB | 0.9761 | 0.9851 | **0.9889** | 0.8800 |
| GUNSHOT dB | 0.9762 | 0.9857 | **0.9890** | 0.8835 |
| | | | | |
| PNE dB | 0.3760 | **0.4069** | 0.3621 | 0.3818 |
| PNE dB | **0.9629** | 0.9616 | 0.9567 | 0.8409 |
| PNE dB | **0.9834** | 0.9815 | 0.9793 | 0.8826 |
| PNE dB | 0.9840 | **0.9856** | 0.9784 | 0.8821 |
| GUNSHOT dB | 0.6004 | **0.6587** | 0.6238 | 0.509 |
| GUNSHOT dB | 0.9465 | **0.9558** | 0.9531 | 0.8071 |
| GUNSHOT dB | 0.9549 | **0.9647** | 0.9632 | 0.8291 |
| GUNSHOT dB | 0.9565 | **0.9648** | 0.9637 | 0.8228 |
| | | | | |
| PNE dB | 0.3890 | 0.3818 | **0.4078** | 0.3842 |
| PNE dB | 0.9520 | 0.9397 | **0.9532** | 0.8302 |
| PNE dB | 0.9750 | 0.9655 | **0.9751** | 0.8745 |
| PNE dB | 0.9746 | 0.9668 | **0.9756** | 0.8719 |
| GUNSHOT dB | 0.4963 | 0.4731 | **0.5110** | 0.4332 |
| GUNSHOT dB | **0.8682** | 0.8190 | 0.8535 | 0.6780 |
| GUNSHOT dB | **0.8829** | 0.8466 | 0.8714 | 0.6940 |
| GUNSHOT dB | **0.8851** | 0.8451 | 0.8755 | 0.6875 |