Abstract

This paper extends the formulation of the Shannon entropy to the case of probabilistic uncertainties, which are basically established in terms of relative errors with respect to the theoretical nominal set of events. Those uncertainties may translate into globally inflated or deflated probabilistic constraints. In the first case, the global probability of all the events exceeds unity, while in the second one it lies below unity. A simple interpretation is that the whole set of events loses completeness and that some events of negative probability might be incorporated to keep the completeness of an extended set of events. The proposed formalism is flexible enough to evaluate whether or not compensatory probability events need to be introduced, depending on each particular application. In particular, this design flexibility is emphasized through an application to epidemic models under vaccination and treatment controls. Switching rules are proposed to choose through time the active model, among a predefined set of models organized in a parallel structure, that best describes the registered epidemic evolution data. The supervisory monitoring is performed in the sense that the tested accumulated entropy of the absolute error of the model versus the observed data is minimized over each supervision time interval between two consecutive switching time instants. The active model generates the (vaccination/treatment) controls to be injected into the monitored population. In this application, no compensatory event is introduced to complete the global probability to unity; instead, the estimated probabilities are re-adjusted to design the control gains.

1. Introduction

Classical entropy is a state function in Thermodynamics. The originator of the concept of entropy was the celebrated Rudolf Clausius, in the mid-nineteenth century. It is well known that reversible processes have a zero variation of entropy between equilibrium states, while irreversible processes have an increase of entropy. Reversible processes only occur under ideal theoretical modelling of isolated processes without energy losses such as, for instance, the Carnot cycle. Real processes are irreversible because the above ideal conditions are impossible to fulfil. In classical Thermodynamics, the Clausius equality establishes that the entropy variation in reversible cyclic processes is zero. That is, the entropy variation is path-independent, since entropy is a state function which takes the same value at a final state that coincides with the initial one. However, in irreversible processes the entropy variation is positive. This is why a positive variation of entropy is associated with a “disorder increase”. It has to be pointed out that, in order to interpret the non-negative variations of entropy correctly, the system under consideration has to be an isolated one. Indeed, some of the mutually interacting subsystems of an isolated system can exhibit negative variations of entropy. Later on, Boltzmann, Gibbs and Maxwell defined entropy within a statistical framework. On the other hand, Shannon entropy (named after Claude E. Shannon, 1916–2001, “the father of information theory”) is a very important tool to measure the amount of uncertainty in processes characterized in the probabilistic framework by sets of events, [1,2,3,4,5]. In some extended studies in the frameworks of physics, economics or fractional calculus, the existence of events with negative probabilities is admitted, [4,5,6].
Entropy may be interpreted as information loss [1,2,3,7,8,9] and is useful, in particular, to characterize dynamic systems from this point of view [8]. On the other hand, entropy tools have also been used either to model certain epidemics or as complementary modelling support to evaluate certain epidemic models, [10,11,12,13,14,15,16,17]. In some of these studies, the investigation of epidemic models, which are ruled by a differential system of coupled equations involving the various subpopulations, has been proposed in the framework of a patchy environment [13]. The amount of model uncertainty is evaluated in such a way that the time-derivative of the entropy is shown to be non-negative at a set of testing sampling instants. In [9], a technique to develop a formal Shannon entropy in the complex framework is proposed. In this way, the components of the overall entropy are calculated so as to determine the real and the imaginary parts of the complex Shannon entropy of a state as a natural quantum-amplitude generalization of the classical Shannon entropy.

Epidemic evolution has been typically studied through models based either on differential equations, difference equations or mixed hybrid models. Because of their structure, such models are very appropriate to study the equilibrium points, the oscillatory behaviors, the illness permanence and the vaccination and treatment controls. See, for instance, [18,19,20,21,22,23,24] and some of the references therein. More recently, entropy-based models have been proposed for epidemics. See, for instance, [14,15,16,25] and some of the references therein. In particular, entropy tools for analysis are combined with differential-type models in [16,25,26]. Control techniques are also appropriate for studying other biological problems, [27,28,29], and, in particular, for implementing decentralized control techniques in patchy environments where several nodes are interlinked, [27,29]. This generic framework can describe, for instance, several towns, each with its own health center where the controls are implemented, whose susceptible and infectious populations interact through incoming and outgoing population fluxes. This paper proposes a supervisory design tool to decrease the uncertainty between the observed data related to an infectious disease, or those given by a complex model of the disease, by means of a set of simple integrated dynamic models together with a higher-level supervisory switching algorithm. Such a hierarchical structure selects online, as the most appropriate model (the so-called “active model”), the one with the smallest error uncertainty according to an entropy description. The active model is re-updated through time in the sense that another active model can enter into operation.
In that way, the active model is used to generate the correcting sanitary actions to control the epidemic, such as, for instance, the gains of the vaccination and antiviral or antibiotic treatment controls and the corresponding control interventions.

The paper is organized as follows: Section 2 recalls some basic concepts of Shannon entropy. Those concepts are also extended to the case of possible relative probabilistic uncertainties in the defined set of events compared to the nominal set of probabilities. Section 3 states and proves mathematical results for the Shannon entropy when a nominal complete, finite and discrete set of events is possibly subject to relative errors in the probabilities associated with some or all of its events. The error-free nominal system of events is assumed to be complete. The current system of events under probabilistic errors may be either deflated or inflated, in the sense that the total probability of the whole set might be either below or above unity, respectively, so that it might be non-complete. In the case of globally inflated or deflated probability of the whole set of events, new compensatory events can be used to achieve a unity global probability. The entropy of the current system is compared to that of the nominal system via quantified worst-case results. Section 4 partially applies the results of the former sections to epidemic control in the case that the disease transmission coefficient rate is either not well known or varies through time due, for instance, to seasonality. The controls are typically of vaccination or treatment type, or appropriate mixed combinations of both of them. A finite, predefined set of running models, each described by a system of coupled differential equations, is considered. Such a discrete set covers a range of variation of the coefficient transmission rate within known lower-bound and upper-bound limits. Each model is driven by a constant disease transmission rate, and the whole set of models covers the whole range of foreseen variation of such a parameter in the real system.
Since the set of models is finite, the various values of the disease transmission rate form a discrete set within the whole range of admissible variation of the true coefficient rate. A supervisory technique of control monitoring is proposed which chooses, as the so-called active model, the one minimizing the accumulated entropy of the absolute data/model error within each supervision interval. A switching rule allows another active model to be chosen as soon as it is detected that the current active model has become more uncertain than other(s) with respect to the observed data. The active model supplies the (vaccination and/or treatment) controls to be injected into the real epidemic process. Due to the particular nature of the problem, compensatory events are not introduced for equalizing the global probability to unity. Instead, a re-adjustment of the error probabilities related to the true available data is performed to calculate the control gains provided by the active model. It can be pointed out that some parameters of the epidemic evolution, typically the coefficient transmission rate, can vary according to seasonality [30]. This justifies the use of simpler time-invariant active models to describe the epidemic evolution along time, subject to appropriate model switching. On the other hand, the existing medical tests which evaluate the proportions of healthy and infected individuals within the total population do not always yield mutually consistent worst-case estimation errors. See, for instance, [31,32]. In the case that the estimated global probability of the various subpopulations is not unity, the estimated worst-case probabilities need to be appropriately amended before an intervention. This design work is performed and discussed in Section 5 through numerical simulations, in conjunction with the above-mentioned supervision monitoring technique. Finally, conclusions end the paper.

2. Basic Entropy Preliminaries

Assume a finite system of events $E=\{E_1,E_2,\dots,E_n\}$ of respective probabilities $p_i=P(E_i)$ for $i\in\bar n=\{1,2,\dots,n\}$, with $p_i\ge 0$ and $\sum_{i=1}^{n}p_i=1$, which is complete, that is, exactly one event occurs at each trial (e.g., the appearance of 1 to 6 points when rolling a die). The Shannon entropy is defined as follows:

$$H(E)=H(p_1,p_2,\dots,p_n)=-\sum_{i=1}^{n}p_i\ln p_i,$$

with the usual convention $0\ln 0=0$. It serves as a suitable measure of the uncertainty of the above finite scheme. The name “entropy” reflects a physical analogy with parallel problems in, for instance, Thermodynamics or Statistical Physics; that physical sense does not carry over to the present context, so there is no need to go into it here. The above entropy has been defined with neperian logarithms, but any logarithm with a fixed base could be used instead with no loss of generality.
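The following is a minimal Python sketch of the definition above; it assumes nothing beyond that definition, and the function name `shannon_entropy` is illustrative. It also checks two standard facts. First, the entropy of a complete system lies between $0$ and $\ln n$. Second, changing the logarithm base only rescales $H$ by a constant factor.

```python
import math


def shannon_entropy(probs, base=math.e):
    """Return H = -sum_i p_i log_b p_i, using the convention 0 * log 0 = 0."""
    if any(p < 0 for p in probs):
        raise ValueError("negative probabilities require the complex extension")
    return -sum(p * math.log(p, base) for p in probs if p > 0)


fair_die = [1 / 6] * 6        # complete system: one face appears at each trial
certain = [0.0, 1.0, 0.0]     # degenerate system: no uncertainty at all
skewed = [0.7, 0.2, 0.1]

h_die = shannon_entropy(fair_die)           # attains the upper bound ln 6
h_certain = shannon_entropy(certain)        # attains the lower bound 0
h_skewed = shannon_entropy(skewed)          # strictly between 0 and ln 3
h_skewed_bits = shannon_entropy(skewed, 2)  # the same entropy measured in bits

# A different base only rescales the entropy by the constant factor 1 / ln(b).
assert math.isclose(h_skewed_bits, h_skewed / math.log(2))
```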

Note that $H(E)\ge 0$, with $H(E)=0$ if and only if $p_j=1$ for some arbitrary $j\in\bar n$ and $p_i=0$ for all $i\in\bar n\setminus\{j\}$; and

$$H(E)\le\ln n,$$

with $H(E)=\ln n$ if and only if $p_i=1/n$ for all $i\in\bar n$. Assume that the probabilities are uncertain, given by ; , subject to the constraints ; , where and are known, so that the current entropy (or the entropy of the current system) is uncertain and given by ; . The reference entropy for the case when the probabilities of all the events are precisely known and equal to ; is said to be the nominal entropy (or the entropy of the nominal system). Some constraints have to be fulfilled for the formulation to be coherent with the entropy bounds under probabilistic constraints on the set of involved events. The following related result holds:

Lemma 1.

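Assume a nonempty finite complete nominal system of events $E=\{E_1,\dots,E_n\}$ of respective nominal probabilities $p_i$ for $i\in\bar n$, with $\sum_{i=1}^{n}p_i=1$, and a current (or uncertain) version $E'=\{E'_1,\dots,E'_n\}$ of the system of events, of uncertain probabilities $p'_i=p_i(1+\varepsilon_i)$; $i\in\bar n$, where $\varepsilon_i$ are relative probability errors due to probabilistic uncertainties. Define the following disjoint subsets of $\bar n$:

$\bar n_0=\{i\in\bar n:\varepsilon_i=0\}$, $\bar n_+=\{i\in\bar n:\varepsilon_i>0\}$, $\bar n_-=\{i\in\bar n:\varepsilon_i<0\}$.

Then, the following constraints hold:

- (i)

- $E'$ is complete if and only if $\sum_{i\in\bar n}\varepsilon_ip_i=0$ (global probabilistic uncertainty mutual compensation)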

- (ii)

- , andor(and then)

Proof:

The proof of Property (i) follows since being complete implies that is complete if and only if .

and is complete since . Property (i) has been proved. The proof of Property (ii) follows from Property (i). To prove the first constraint, assume, on the contrary, that . Then, either and or and . Assume that and . Then, , which contradicts Property (i). Similarly, if and then , which again contradicts Property (i). Thus, , and the first constraint of Property (ii) has been proved. Now, assume that and . First, assume that and (from the first constraint of this property, already proved above). If then , which contradicts Property (i). If then and . So, if then and (then ). In the same way, interchanging the roles of and , it follows that, if then and . One concludes that either or , and Property (ii) is proved.

Note that, in Lemma 1, is the subset of probabilistically certain events of (in the sense that its elements have a known probability) and is the subset of probabilistically uncertain events of . For being nonempty, any of the sets , and , or any pair of them, may be empty. Note also that the probabilistic uncertainties have been considered with fixed values ; . □
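Property (i) of Lemma 1 can be checked numerically. The sketch below assumes that the current probabilities have the form $p_i(1+\varepsilon_i)$. It also assumes that the index sets collect the events with zero, positive and negative relative error. The helper names are hypothetical. It also illustrates a direct consequence of Property (i) when all nominal probabilities are positive: relative errors of a single sign can never compensate each other.

```python
import math


def partition_indices(eps, tol=1e-12):
    """Hypothetical helper: split event indices by the sign of the relative error eps_i."""
    n_zero = {i for i, e in enumerate(eps) if abs(e) <= tol}
    n_plus = {i for i, e in enumerate(eps) if e > tol}
    n_minus = {i for i, e in enumerate(eps) if e < -tol}
    return n_zero, n_plus, n_minus


def is_complete(p, eps, tol=1e-12):
    """For a complete nominal system, p_i(1 + eps_i) is complete iff sum_i eps_i p_i = 0."""
    return abs(sum(e * pi for e, pi in zip(eps, p))) <= tol


p = [0.5, 0.3, 0.2]                 # complete nominal system
eps = [0.1, -0.5, 0.5]              # 0.05 - 0.15 + 0.10 = 0: mutual compensation
current = [pi * (1 + e) for pi, e in zip(p, eps)]

n_zero, n_plus, n_minus = partition_indices(eps)
complete = is_complete(p, eps)

# With all p_i > 0, errors of a single sign cannot compensate each other.
eps_one_sign = [0.1, 0.0, 0.2]
complete_one_sign = is_complete(p, eps_one_sign)
```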

3. Entropy Versus Global Inflated and Deflated Probability Constraints in Incomplete Systems of Events under Probabilistic Uncertainties

An important issue to be addressed is how to deal with the case when, due to incomplete knowledge of the probabilistic uncertainties, the sum of the probabilities of the current system of events, i.e., of the uncertain individual probability values, exceeds unity, that is, $\sum_{i=1}^{n}p_i(1+\varepsilon_i)>1$, a constraint referred to as “global inflated probability”. If both the nominal individual and nominal global probability constraints, $0\le p_i\le 1$; $i\in\bar n$ and $\sum_{i=1}^{n}p_i=1$, hold, then there is a global inflated probability if and only if the probabilistic disturbances fulfill $\sum_{i=1}^{n}\varepsilon_ip_i>0$. The case of “global deflated probability”, $\sum_{i=1}^{n}p_i(1+\varepsilon_i)<1$, can be included in the same way; it implies that $\sum_{i=1}^{n}\varepsilon_ip_i<0$, provided that $\sum_{i=1}^{n}p_i=1$.

Three possible solutions to cope with this drawback, which is associated with an excess of modelled probabilistic uncertainty leading to global inflated probability, are:

(a) To incorporate a new (nonempty) event with negative probability which reduces the probabilistic uncertainty so that the global probability constraint of the extended complete system of events holds. Obviously, loses its characteristic property of being a complete system of events, and is said to be partially complete since it fulfills the global probability constraint but has one negative probability.

(b) To modify all or some of the probabilistic uncertainty relative amounts ; so that the global probability constraint of the complete system of events holds for the new amended fixed set .

(c) To consider uncertain normalized probabilities ; . In this case, there is no need to modify the individual uncertainties of the events, and the initial uncertainties are kept. Furthermore, the events keep their ordering according to the uncertainties, namely, if for then ; the modified set of events is complete and keeps the same number of events as the initial one.

Two parallel solutions to cope with global deflated probability are:

(d) To incorporate a new (nonempty) event with positive probability which decreases the probabilistic uncertainty so that the global probability constraint of the extended complete system of events holds. As before, the current system of events loses its completeness and is complete.

(e) To modify all or some of the probabilistic uncertainty relative amounts ; so that new amended fixed set agrees with the global probability constraint.
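The global inflated/deflated test and remedies (a), (c) and (d) can be illustrated numerically. This Python sketch assumes current probabilities of the form $p_i(1+\varepsilon_i)$ over a complete nominal system, and the function names are hypothetical. Remedies (b) and (e) are not shown, because the way the relative errors are amended depends on the application.

```python
import math


def global_state(p, eps, tol=1e-12):
    """Classify p_i(1 + eps_i) against a complete nominal system p.
    The excess sum_i p_i(1 + eps_i) - 1 equals sum_i eps_i p_i."""
    if not math.isclose(sum(p), 1.0, abs_tol=tol):
        raise ValueError("the nominal system must be complete")
    excess = sum(e * pi for e, pi in zip(eps, p))
    if excess > tol:
        return "inflated", excess
    if excess < -tol:
        return "deflated", excess
    return "complete", excess


def add_compensatory_event(current):
    """Remedies (a)/(d): append an event of probability 1 - sum(current),
    negative under global inflation and positive under global deflation."""
    return list(current) + [1.0 - sum(current)]


def normalize(current):
    """Remedy (c): divide each uncertain probability by the (positive) total."""
    total = sum(current)
    return [q / total for q in current]


p = [0.5, 0.3, 0.2]
eps = [0.2, 0.1, 0.3]                                # every error positive
current = [pi * (1 + e) for pi, e in zip(p, eps)]    # totals 1.19

state, excess = global_state(p, eps)                 # ("inflated", about 0.19)
extended = add_compensatory_event(current)           # last entry about -0.19
normalized = normalize(current)                      # n events again, total one
```

Normalization divides every probability by the same positive total. It therefore preserves their ordering and the number of events. The compensatory event instead enlarges the system by one entry.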

Firstly, we note that the introduction of negative probabilities invoked in the first proposed solution makes sense in certain problems. In this context, Dirac commented in a speech in 1942 that negative probabilities can make sense in certain problems, as negative money does in some financial situations. Later on, Feynman also used this concept to describe some problems of physics [4], and he also said that negative trees make no sense but negative probability can, as negative money does in some financial situations. More recently, Tenreiro-Machado has used this concept in the context of fractional calculus [6].

It is now proved in the next result that incorporating a new event of negative probability, so as to define from the system of events an extended partially complete system of events, does not alter the non-negativity of the Shannon entropy, in the sense that the entropy of the extended system of events is still non-negative. At the same time, it is proved that:

(1) If then the real part of the entropy of (which is complex because of the negative probability of the event added for completeness) is smaller than that of . The interpretation is that the excessive disorder in , due to its global inflated probability, is reduced (equivalently, “the order amount” is increased in with respect to ) when building its extended version by the contribution of the added event of negative probability. Also, both and are not complete, while is partially complete since it fulfills the global probability constraint, although not the individual ones in all the events, due to the incorporated one of negative probability.

(2) If then the above qualitative considerations on the increase/decrease of “order” or “disorder” are reversed with respect to Case (1).
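Items (1) and (2) can be explored numerically with the principal branch of the complex logarithm, for which $\ln x=\ln|x|+\mathrm{i}\pi$ when $x<0$. The following Python sketch uses a hypothetical function name and an illustrative two-event system. It adds the compensatory event of negative probability to a globally inflated system and compares real parts. In this example, the modulus of the added probability $p$ is below one. Its contribution $-p\ln|p|$ to the real part is therefore negative.

```python
import cmath
import math


def complex_entropy(probs):
    """-sum_i p_i ln p_i with the principal complex logarithm (0 ln 0 = 0).
    A negative probability p contributes -p ln|p| to the real part and -p*pi
    to the imaginary part."""
    return -sum(p * cmath.log(p) for p in probs if p != 0)


current = [0.6, 0.6]               # globally inflated: total 1.2
extra = 1.0 - sum(current)         # compensatory event of probability about -0.2
h_current = complex_entropy(current).real          # ordinary (real) entropy
h_extended = complex_entropy(current + [extra])    # complex entropy

print(f"H(current)  = {h_current:.4f}")
print(f"H(extended) = {h_extended.real:.4f} + {h_extended.imag:.4f}i")
```

Here the real part drops from about 0.613 to about 0.291 and remains non-negative, while the imaginary part equals $0.2\pi$.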

Theorem 1.

Assume a nonempty finite complete nominal system of eventsof respective nominal probabilitiesfor;and a current (or uncertain) version of the system of eventsof uncertain probabilities;. Assume that(that is, there is a global inflated probability of the current system of events). Define the extended system of eventssuch thathas a probability. Then,

- (i)

- Ifthenwithif and only if.

- (ii)

- Ifthen,

where:

are the respective entropies of and , the second one being complex.

Proof:

□

Since then is not complete. Since the nominal system of events is complete then with ; and . The entropy of is

and that of the extended system of events is . Consider two cases:

Case (a)

Since the neperian logarithm of a negative real number $x$ exists in the complex field, with principal value $\ln x=\ln|x|+\mathrm{i}\pi$ ($\mathrm{i}$ being the imaginary unit), i.e., its real part is the neperian logarithm of the modulus of $x$ and its imaginary part is $\pi$, one gets the following set of relations:

Then:

since leads to

One concludes that with if and only if .

It has to be proved now that . Assume, on the contrary, that . Since , this happens if and only if the following contradiction holds

since in the assumption of global inflated probability. Then, .

Case (b) for some . Then and then the last above identity changes the second right-hand-side term, resulting in:

It is now proved in the next result, for the case of deflated probability, that introducing an additional event of positive probability, so as to define from the system of events an extended complete system of events, does not modify the non-negativity of the Shannon entropy, in the sense that the entropy of the extended system of events is still non-negative. At the same time, it is proved that the entropy of is larger than that of . The interpretation is that the excessively low disorder in , due to its global deflated probability, is increased (equivalently, “the order amount” is decreased) when building its extended version by the contribution of the added event of positive probability. Also, is not complete while is complete.

Theorem 2.

Assume a nonempty finite complete nominal system of eventsof respective nominal probabilitiesfor;and a current (or uncertain) version of the system of eventsof uncertain probabilities;. Assume that(that is, there is a global deflated probability of the current system of events). Define the extended system of eventssuch thathas a probability. Then,, where:

are the respective entropies ofand.

Proof:

Since then is not complete. Since the nominal system of events is complete then with ; and . The entropy of is and that of is:

Since , one gets the following set of relations:

One concludes that .

Note that, since the entropy is defined as a sum of weighted logarithms of non-negative real numbers bounded by unity in the usual cases (which exclude negative probabilities), the Shannon entropy of the worst case of the probability uncertainty is not necessarily larger than or equal to the nominal one. □
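In the deflated case of Theorem 2, the entropy gain produced by the compensatory event is simply that event's own contribution $-p\ln p$. This contribution is positive for $0<p<1$. A minimal Python check on an illustrative two-event system follows; the values are chosen arbitrarily.

```python
import math


def entropy(probs):
    """Real Shannon entropy with neperian logarithms (0 ln 0 = 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


current = [0.4, 0.4]            # globally deflated: total 0.8
extra = 1.0 - sum(current)      # positive compensatory probability, about 0.2
extended = current + [extra]    # complete extended system

h_current = entropy(current)
h_extended = entropy(extended)
gain = h_extended - h_current   # equals -extra * ln(extra)
```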

Example 1.

Assume that the nominal system of two events is with , then . Assume that its probabilistic uncertainty is given by , , . Then, the entropy under the uncertain probability of is .

Example 2.

Consider the complete system of events such that ; , for some , is the probability of , and constants ; satisfying that . Note that so that there is no negative probability and neither global inflated nor global deflated probability. The Shannon entropy is the everywhere continuous real concave function:

whose maximum value is reached at a real constantif. Direct calculation yields, since,

Such ais unique for the given set, since the functionis concave in its whole definition domain, and admissible provided that. The unique solution for each given setis satisfying the constraint:

Sinceis continuous and bounded from above withand, andsuch that a realexists inand it is unique, since there is a uniquesuch that, for each given set and the maximum entropy becomes

In particular, ifwithandthen

and:

leading to so that and . Note that this is equivalent to , that is, . In particular, if then . Assume now that one uses a logarithm of any base to define the entropy, so that:

leading to:

which is satisfied under the same conditions as above, that is, if , so that again . However, if ( being the base of the neperian logarithms).

Example 3.

Consider the particular case of Example 2 forwith global deflated probability, that is,and. The system of two eventsis not complete and we extend it with a new eventof positive probabilityso that the extended system of events is complete. Now, one has for such an extended system:

which is satisfied for such that . Note that with being obtained in Example 2 for the non-deflated probability case, as expected from Theorem 2. Note also that the value of giving the maximum entropy is less than the one producing the maximum entropy in the non-deflated case of Example 2, since that one with would give the contradiction 0=1 in the above formula for and would give the contradiction in that formula.

The next result deals with the case where the probabilities are uncertain but each nominal probability is assumed to be possibly subject to the whole set of probabilistic uncertainties, so as to cover a wider class of potential probabilistic uncertainties. It is also assumed, contrary to the assumptions of Theorem 1 and Theorem 2, that the probability disturbances never increase any particular probability of the nominal system of events, since they are all constrained to the real interval . As a result, the cardinalities of the extended current and nominal systems of events are, in general, distinct. It is found that the entropy of the current system of events is not smaller than the nominal entropy.

Theorem 3.

Letandthe extended perturbed and current entropies of the sets of nonempty eventsand, respectively, of respective cardinalitiesand, withand. Then, the following properties hold:

- (i)

- , whereis the incremental entropy due to the uncertainties andif and only ifand;and some arbitrary.Ifandwith;andthen the above relations hold withif and only ifand;and some arbitrary.

- (ii)

- Assume thatandwith;and. Then,for any given integerand anysuch that.

- (iii)

- The following identity holds under the constraints of Property (ii):for any given integerand anysuch that.

Proof:

Note from the additive property of the entropy that and ; , , , equivalently, and if . Then, Property (i) follows from the non-negativity property of the entropy and the fact that if and only if and ; and some arbitrary , which implies that . The property for and ; is a particular case of the above one. Property (i) has been proved.

On the other hand, one gets from the recursive property of the entropy if and ; with , ; , and that:

for any given integer , where each equality for a right-hand side is valid for each such that , ; , implying that the sum of the first two arguments of the entropy is positive, that is, . Property (ii) has been proved. Property (iii) is a consequence of Property (ii). □
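The recursive property of the entropy is usually stated as the grouping identity $H(p_1,\dots,p_n)=H(p_1+p_2,p_3,\dots,p_n)+(p_1+p_2)\,H\!\left(\frac{p_1}{p_1+p_2},\frac{p_2}{p_1+p_2}\right)$ for $p_1+p_2>0$. This sketch assumes that this standard form is the one invoked in the proof above. A short Python check follows; the function names are hypothetical.

```python
import math


def entropy(probs):
    """Real Shannon entropy with neperian logarithms (0 ln 0 = 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def grouped_entropy(probs):
    """Right-hand side of the grouping identity, merging the first two events."""
    s = probs[0] + probs[1]
    if s <= 0:
        raise ValueError("the first two probabilities must have a positive sum")
    merged = [s] + list(probs[2:])
    return entropy(merged) + s * entropy([probs[0] / s, probs[1] / s])


probs = [0.1, 0.25, 0.4, 0.25]
lhs = entropy(probs)
rhs = grouped_entropy(probs)    # agrees with lhs up to rounding
```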

The next result deals with the case where the probabilities are uncertain but belong to a known admissibility real interval, rather than being fixed as assumed in Lemma 1. Contrary to Theorem 3, it is not assumed that the probabilistic disturbances are within , so that they can increase the corresponding nominal probabilities.

Theorem 4.

Assume a finite complete system of eventssuch that the nominal and current probabilities of the eventareand, respectively, with, such that(so that the constraintholds,and;and. Then, the following properties hold:

- (i)

- withandwhereand

- (ii)

- where:

- (iii)

- Ifandthen:

- (iv)

- Ifandthen:such that an upper-bound of the lower-boundofis:

Proof:

Note that, since ; then ; so that:

and Property (i) follows directly. On the other hand, note that:

Note that, since ; , we can use the Taylor series expansion around 1 of the above neperian logarithms to get:

so that:

so that . Since then so that and . Furthermore, (26) and (27) imply that and . The proof of Property (i) has been completed. Now, since and ; then (25) follows since:

since ; :

since ; , equivalently, ; so that:

and Property (ii) has been proved. On the other hand, Property (iii) is proved as follows:

After using the Cauchy-Schwarz inequality for summable sequences and the constraint , one gets, since and , that Property (iii) holds since:

and Property (iv) follows from Property (iii) and the fact that:

□
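The Taylor-based reasoning in the proof of Theorem 4 can be cross-checked with a related first-order estimate. Since $\partial H/\partial p_i=-(1+\ln p_i)$ and the perturbation of $p_i$ is $\varepsilon_ip_i$, one has $H(p_1(1+\varepsilon_1),\dots,p_n(1+\varepsilon_n))-H(p_1,\dots,p_n)\approx-\sum_{i}(1+\ln p_i)\,\varepsilon_ip_i$ for small $|\varepsilon_i|$. This linearization is offered only as an illustration; it is not one of the bounds stated in Theorem 4. The Python sketch below uses hypothetical names. It shows the gap between the exact change and the estimate shrinking roughly quadratically with the error size.

```python
import math


def entropy(probs):
    """Real Shannon entropy with neperian logarithms (0 ln 0 = 0)."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def first_order_change(p, eps):
    """Linear estimate of H(p_i(1 + eps_i)) - H(p_i); requires every p_i > 0."""
    return -sum((1 + math.log(pi)) * e * pi for pi, e in zip(p, eps))


def linearization_gap(p, eps):
    """Absolute difference between the exact entropy change and its linear estimate."""
    perturbed = [pi * (1 + e) for pi, e in zip(p, eps)]
    exact = entropy(perturbed) - entropy(p)
    return abs(exact - first_order_change(p, eps))


p = [0.5, 0.3, 0.2]
for scale in (1e-1, 1e-2, 1e-3):
    eps = [scale, -scale, 2 * scale]
    print(f"scale={scale:g}  gap={linearization_gap(p, eps):.2e}")
```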

4. Applications to Epidemic Systems

This section is devoted to the application of entropy tools to the modelling of epidemics. It should first be pointed out that some parameters of the epidemic evolution, typically the coefficient transmission rate, can vary according to seasonality [30]. It can also be pointed out that the existing medical tests to evaluate the proportions of healthy and infected individuals do not always yield mutually consistent error estimates. That is, the estimated worst-case errors for each of the subpopulations can have different ranges, since they are obtained with different techniques. See, for instance, [31,32], where this issue is justified on the basis of medical tests.

-To solve the first problem, one considers designing a parallel scheme of alternative time-invariant models, run by a supervisory switching law, to choose the most appropriate active time-invariant model through time. The whole set of models covers the whole range of expected variations of the model parameters through time. The objective of the parallel structure is to select the model which describes the registered data most tightly along a certain period of time.

-On the other hand, the existing error tests on the subpopulations integrating the model do not always give similar worst-case estimated errors for all the subpopulations. This justifies an adjustment of the probabilities when their sum does not equal unity. Two potential actions to overcome this drawback are: (a) the introduction of events of positive (respectively, negative) probabilities in the case of deflated (respectively, inflated) global probability; (b) the readjustment of all the individual estimated probabilities via normalization by the current sum, which is distinct from unity. In the first case, the re-adjustment is made by an algebraic sum manipulation. In the second one, it is made by readjusting all the individual probabilities via normalization. In both cases, the amended global probability is unity.

Assume an epidemic disease with an unknown, time-varying and bounded coefficient transmission rate , where and are known, which is described by the following system of first-order differential equations:

where is the state vector and is the vector containing all the parameters other than the coefficient transmission rate, such as the recovery rate, the mortality rate, the average survival rate, the average life expectancy irrespective of the illness, etc. In practice, Equation (43) can be replaced by a non-parameterized description based on the state measurements through time, where is given by experimental online data on the subpopulations which, in this case, should be discretized with a small sampling period. In this way, (43) can be either a more sophisticated mathematical model than the simplified ones provided later on, which provides data on the illness close to the real measurements, or the listed real data themselves, obtained from the disease evolution. The state vector contains the subpopulations integrated in the model, which depend on the type of model itself, such as the susceptible (S), infectious (I) and recovered (or immune) (R) in the so-called SIR models. The so-called SEIR models add the exposed subpopulation (E), i.e., those in the first infection stages with no external symptoms. The models can also contain a vaccinated subpopulation (V) and can also have several nodes or patches, describing, for instance, different environments. The patches are coupled in general, each having its own set of coupled subpopulations which interact with the remaining ones through population fluxes. The vector is the control vector. When there is only one node, there is typically either one control, namely, vaccination of the susceptible, or two controls, namely, vaccination of the susceptible and (either antiviral or antibiotic) treatment of the infectious. Those controls might be applied to each subsystem associated with one patch if there are several patches integrated in the model. The matrix function of dynamics is a real -matrix for each .
The control matrix has as many columns as controls are applied, and it typically consists of entries equal to “0”, “−1” or “+1”. An entry “0” means that no control is applied on the corresponding state component associated with one subpopulation. An entry “−1” means that the control leads to a decrease of the growth rate of a subpopulation (for instance, the vaccination effort on the susceptible). An entry “+1” means that the control leads to a compensatory increase rate of a subpopulation due to a corresponding decrease of another one. Examples are the increase in the recovered in the vaccination case, when the susceptible are decreased via vaccination, and again the recovered in the treatment case, when the infectious are decreased via treatment. For simplicity, it is assumed that is constant and there are no delays in the dynamics.
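To make the structure of the control matrix concrete, the following Python sketch uses a single-node SIR model with a vaccination control on the susceptible and a treatment control on the infectious. The following are all illustrative assumptions rather than the model (43) itself: the mass-action infection term, the linear feedback gains `k_vac` and `k_treat`, the parameter values and the explicit Euler integration. Only the sign pattern of the control matrix (0, −1, +1) follows the description above.

```python
def sir_rhs(x, beta, gamma, u):
    """Hypothetical dynamics x' = f(x, beta) + B u for x = (S, I, R).
    B has one column per control: vaccination moves S to R, treatment moves I to R."""
    s, i, r = x
    n = s + i + r
    infection = beta * s * i / n
    recovery = gamma * i
    f = [-infection, infection - recovery, recovery]
    B = [[-1, 0],   # susceptible decreased by vaccination
         [0, -1],   # infectious decreased by treatment
         [1, 1]]    # recovered increased by both controls
    return [f[k] + sum(B[k][j] * u[j] for j in range(2)) for k in range(3)]


def simulate(x0, beta, gamma, k_vac, k_treat, dt=0.1, steps=1000):
    """Explicit Euler integration under the hypothetical feedback u = (k_vac*S, k_treat*I)."""
    x = list(x0)
    trajectory = [tuple(x)]
    for _ in range(steps):
        u = [k_vac * x[0], k_treat * x[1]]
        dx = sir_rhs(x, beta, gamma, u)
        x = [xk + dt * dxk for xk, dxk in zip(x, dx)]
        trajectory.append(tuple(x))
    return trajectory


controlled = simulate((990.0, 10.0, 0.0), beta=0.3, gamma=0.1, k_vac=0.01, k_treat=0.05)
uncontrolled = simulate((990.0, 10.0, 0.0), beta=0.3, gamma=0.1, k_vac=0.0, k_treat=0.0)
peak_controlled = max(state[1] for state in controlled)
peak_uncontrolled = max(state[1] for state in uncontrolled)
```

Every column of the control matrix sums to zero, and the infection and recovery terms cancel in the sum. The total population is therefore conserved, which is a convenient sanity check for this kind of sketch.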

The proposed control architecture consists of a scheme of approximated models in a parallel arrangement, one of them being chosen by a higher-level supervisory switching scheme as the active model to select the controller gains along each current time interval.

In particular, it is proposed to run a set of approximated models of the same dimension as Equation (43) with a constant coefficient transmission rate ; being chosen as:

with ,where:

in such a way that and . Note that the approximated models (44) and (45) are parameterized by a constant coefficient transmission rate, contrary to the real model (43), whose coefficient transmission rate is time-varying. The remaining parameters are constant and possibly time-varying, respectively. Note also that the models (44) are initialized to the initial conditions. We consider a set of error events of the states of the models (44) and (45) with respect to (43), that is, ; . Each event consists of a set of events which are the errors of each of its constituent subpopulations with respect to the real system, that is, ; .
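The formula defining the constant rates of the model bank is not reproduced here. The following Python sketch therefore simply assumes uniform spacing between the known bounds, with the first and last models placed at the lower and upper limits. The helper names are hypothetical. The check confirms that every admissible rate lies within half a grid step of some model.

```python
def beta_grid(beta_min, beta_max, m):
    """Hypothetical bank of m constant rates, uniformly spaced, with the
    first equal to beta_min and the last equal to beta_max."""
    if m < 2 or beta_max <= beta_min:
        raise ValueError("need m >= 2 and beta_max > beta_min")
    step = (beta_max - beta_min) / (m - 1)
    return [beta_min + k * step for k in range(m)]


def nearest_model(beta, grid):
    """Index of the model whose constant rate is closest to beta."""
    return min(range(len(grid)), key=lambda k: abs(grid[k] - beta))


grid = beta_grid(0.2, 0.4, 5)            # approximately [0.2, 0.25, 0.3, 0.35, 0.4]
half_step = (grid[1] - grid[0]) / 2
worst = max(abs(grid[nearest_model(b, grid)] - b)
            for b in (0.2 + 0.001 * k for k in range(201)))
```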

Define the instantaneous error entropies of each error event by summing up all the component-wise contributions, that is, ; , while the corresponding accumulated continuous-time and discrete-time entropies on the time interval are defined in a natural way from the instantaneous ones, respectively, as follows:

and:

provided that so that is the set of sampling intervals on of period which is a submultiple of with and being design parameters satisfying these constraints.
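Assuming that the probabilities of the component errors are already available (the rule (49) that produces them is not reproduced here), the instantaneous entropy of an error event can be sketched as the sum of the component-wise Shannon contributions −p ln p, and the discrete-time accumulated entropy as the sum of these values over the sampling instants of the test interval. Weighting each sample by the sampling period (a Riemann-sum counterpart of the continuous-time integral), the use of the natural logarithm and the function names are assumptions of this sketch.

```python
import math


def instantaneous_entropy(probs):
    """Sum of the component-wise contributions -p ln p (with 0 ln 0 taken as 0).

    The probabilities are not renormalised, so their total may lie above
    (inflated) or below (deflated) unity.
    """
    total = 0.0
    for p in probs:
        if p < 0:
            raise ValueError("probabilities of error events must be non-negative")
        if p > 0:
            total -= p * math.log(p)
    return total


def accumulated_discrete_entropy(prob_sequence, period):
    """Accumulate instantaneous entropies over the sampling instants of a test
    interval, weighting each sample by the sampling period (an assumption)."""
    return period * sum(instantaneous_entropy(p) for p in prob_sequence)
```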

The control effort on a time interval is calculated by applying the control generated by the model whose error event has the smallest accumulated error entropy, among all the error events, over a previous test time interval; this model defines the so-called active model on . To simplify the exposition, and without loss of generality, the accumulated discrete-time entropy is the one used for testing in the sequel. Then, the following switching Algorithm 1 is proposed:

| Algorithm 1 (all the subpopulations are run by the same active model within each inter-switching interval) |

| Step 0 - Auxiliary design parameters: Define the prefixed minimum inter-sample period threshold and , being an auxiliary time interval, , used to measure the possible degradation of the current active model in operation, which anticipates an upcoming switching. |

| Step 1 - Initial control: for , with , for some arbitrary model , and make , the initial active control being and the initial running integer for the switching time instants being . |

| Step 2 - Possibly switched control:

|

| such that: - current active model: ; is the active model in the set of models which generates the control on , - next active model: , such that is the next active model to be in operation at the next switching time instant . - The switching time instants and the inter-switching time periods for depend on the set of preceding sampling instants . - The control law (48) is calculated by computing the accumulated discrete-time entropies (47) with the following probabilistic rule: ; , , |

| Step 3 - Updating the activation of the next active control and the inter-switching time interval: Do , with being the next controller switching time instant to the next active model ; re-initialize all the models to the measured data, that is, ; and go to Step 2. |
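The decision taken at each switching instant in Steps 2 and 3 can be sketched as follows: given the accumulated discrete-time error entropy of every model over the test interval, the next active model is the one with the smallest value, and all the models are then re-initialized to the measured state. Ties are broken with the nearest-index rule suggested in Remark (3) below, and any remaining tie goes to the lower index; that last choice, the exact-equality test and the function names are assumptions, and the precise inequality test of (48) is not reproduced.

```python
def next_active_model(acc_entropies, current):
    """Index of the model whose error event has the smallest accumulated entropy.

    acc_entropies[i] is the accumulated discrete-time error entropy of model i
    over the last test interval; current is the index of the active model.
    Ties are broken by the nearest index to the current active model, and then
    by the lower index (an arbitrary choice).
    """
    best = min(acc_entropies)
    candidates = [i for i, h in enumerate(acc_entropies) if h == best]
    return min(candidates, key=lambda i: (abs(i - current), i))


def reinitialize(num_models, measured_state):
    """Step 3: reset every parallel model to the measured state (independent copies)."""
    return [list(measured_state) for _ in range(num_models)]


if __name__ == "__main__":
    print(next_active_model([0.4, 0.2, 0.5], current=0))       # 1
    print(next_active_model([0.4, 0.2, 0.2, 0.9], current=3))  # 2 (tie between 1 and 2)
```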

Remarks:

(1) Note that the initial control runs on a time interval lasting at least the designed time-interval length. If some “a priori” knowledge about the adequacy of the various models to the epidemic process in the initial stage of the disease is available, it can be used to avoid the arbitrariness in the selection of the initial controller.

(2) Note also that if ; , , then the global probability of the error event cannot be inflated, although it can be deflated. If ; , , then such a global probability is neither inflated nor deflated for all time.

; , . Note also that the fact that can be time-varying, so that the probabilities have a margin for experimental design adjustment, is consistent with the problem statement of probabilities subject to possible errors considered in the theoretical sections above.

(3) Note that in the above algorithm there are zero-probability (although not impossible) events of not obtaining a unique solution, for instance, an equality, instead of the strict inequality, in the first accumulated-entropy comparison in (48) that determines the next active controller. If such a lack of uniqueness is detected, one can fix any valid solution (from the set of valid ones) to run, or simply adopt a uniqueness rule, for instance, selecting as active the model, among the valid ones, whose index is nearest to that of the last active model.

(4) Note also that the events for and each given are not mutually independent, since they consist of the solutions for all the subpopulations, each given by one first-order differential equation among the differential equations of the -model. The reason is that the first-order equations of the differential system are coupled.

(5) It can be observed that the switching time instants are also the instants at which the initial conditions are reset to the real (or more accurate) data provided by the real model (43).

(6) Using Equation (49) to calculate the probabilities, together with their companion saturation rules for the control gains, is sufficient to evaluate the entropies in Equation (48) and thus to implement the algorithm. Hence, there is no need to introduce a compensatory event of negative probability to bring the global probability to unity.
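The notions of inflated and deflated global probability used in Remarks (2) and (6) can be checked numerically. The sketch below assumes that each perturbed probability equals its nominal value multiplied by (1 + relative error), classifies the resulting set by its total, and reports the probability 1 − Σp that a compensatory event would need to restore completeness. Consistently with Remark (6), the switching algorithms do not use such an event, so it is only reported. The function names and the tolerance are illustrative.

```python
def perturbed_probabilities(nominal, relative_errors):
    """Nominal probabilities affected by relative errors: p_i * (1 + e_i)."""
    return [p * (1.0 + e) for p, e in zip(nominal, relative_errors)]


def classify_global_probability(probs, tol=1e-9):
    """Classify a set of probabilities as 'inflated', 'deflated' or 'complete'.

    Also returns 1 - sum(probs), the probability that a compensatory event
    would need so that the extended set of events sums to unity; it is
    negative for an inflated set and positive for a deflated one.
    """
    total = sum(probs)
    compensation = 1.0 - total
    if total > 1.0 + tol:
        return "inflated", compensation
    if total < 1.0 - tol:
        return "deflated", compensation
    return "complete", 0.0


if __name__ == "__main__":
    nominal = [0.5, 0.3, 0.2]
    print(classify_global_probability(perturbed_probabilities(nominal, [0.1, 0.1, 0.0])))
    print(classify_global_probability(perturbed_probabilities(nominal, [-0.2, 0.0, 0.0])))
```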

An alternative to the above switching algorithm consists of defining the error events one per subpopulation of the epidemic description (44) versus their real counterparts in (43). For instance, we can think of defining the “susceptible error event” from the first error components of all the solutions of (44) compared with the first component of (43), and proceed likewise for the remaining subpopulations. In this way, we can choose online the best simplified model (that is, the closest one to the true complex model) for each subpopulation. This can be reasonable since the approximated models are designed by choosing parameterizations of the coefficient transmission rate which sweep a region where the true one is point-wise located through time. Moreover, each whole set of error events associated with each subpopulation consists of mutually independent events since, in this case, the data of all the models, including those of the active one, are generated by different differential equations on intervals of nonzero measure. In this case, the set of events is , such that ; is associated with the -th subpopulation, instead of with ; associated with one model as stated in the former design. Then, we have the following Algorithm 2.

| Algorithm 2 (each subpopulation can be run by a different active model within each inter-switching interval) |

| Step 0 - Auxiliary design parameters: it is similar to that of Algorithm 1. |

| Step 1 - Initial control: it is similar to that of Algorithm 1. |

| Step 2 - Possibly switched control:

|

| such that is the -th controller component, and one can distinguish: - the current active model for the -th subpopulation: ; is the active model in the set of models which generates the control on , - the next active model for the -th subpopulation: , such that is the next active model to be in operation at the next switching time instant . - The switching time instants are obtained in a similar way as in Algorithm 1. - The control law (50) is calculated by computing the accumulated discrete-time entropies (47) with a probabilistic rule similar to that of Algorithm 1, with errors ; , , |

| Step 3 - Updating the activation of the next active control and the inter-switching time interval: it is similar to that of Algorithm 1. |
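Algorithm 2 differs from Algorithm 1 in that the minimization is carried out separately for each subpopulation. The minimal sketch below assumes that the accumulated entropies are stored as a matrix `acc[i][k]` (model i, subpopulation k), a layout chosen here for illustration, and breaks ties with the nearest-index rule of Remark (3), then with the lower index.

```python
def next_active_models(acc, current):
    """Per-subpopulation active models for Algorithm 2.

    acc[i][k]: accumulated error entropy of model i for subpopulation k.
    current[k]: currently active model for subpopulation k.
    Returns the list of next active model indices, one per subpopulation.
    """
    num_models = len(acc)
    result = []
    for k, cur in enumerate(current):
        column = [acc[i][k] for i in range(num_models)]
        best = min(column)
        candidates = [i for i, h in enumerate(column) if h == best]
        result.append(min(candidates, key=lambda i: (abs(i - cur), i)))
    return result


if __name__ == "__main__":
    acc = [[0.1, 0.5], [0.3, 0.2], [0.1, 0.9]]
    print(next_active_models(acc, [2, 0]))  # [2, 1]
```

Each subpopulation may thus end up with a different active model, which is the distinctive feature of this algorithm.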

Remark 6.

Regarding (50) in Algorithm 2 versus (48) in Algorithm 1, note that is the -th controller component. Assume that the epidemic model is a SEIR one such that . This means that the active vaccination control is obtained, for instance, from the active controller if the feedback vaccination control is taken proportional to the susceptible subpopulation, and the active treatment control is obtained, for instance, from the active controller if the feedback treatment control is taken proportional to the infectious subpopulation. If there are no further controls, the remaining controller components are zeroed for all time. The models for the other components could then be omitted from the whole scheme, or simply used to estimate those subpopulations, since no controls obtained from them are injected into the real population.
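The situation described in this remark can be illustrated with a hypothetical proportional feedback: the vaccination is taken proportional to the susceptible predicted by the model active for S, and the treatment proportional to the infectious predicted by the model active for I. The gains `k_v` and `k_t`, the (S, E, I, R) ordering and the purely proportional form are assumptions for illustration only; this is not the control law (50).

```python
S, E, I, R = range(4)  # assumed ordering of the SEIR state components


def seir_controls(model_states, active, k_v, k_t):
    """Vaccination and treatment computed from the component-wise active models.

    model_states[i] is the current (S, E, I, R) state of model i, and active[k]
    is the active model for subpopulation k returned by Algorithm 2. Only the
    S-active and I-active models generate controls; the E- and R-active models
    are used, at most, as estimators of those subpopulations.
    """
    vaccination = k_v * model_states[active[S]][S]  # proportional to susceptible
    treatment = k_t * model_states[active[I]][I]    # proportional to infectious
    return vaccination, treatment


if __name__ == "__main__":
    states = [[0.90, 0.01, 0.02, 0.07], [0.80, 0.05, 0.10, 0.05]]
    print(seir_controls(states, active=[1, 0, 0, 1], k_v=0.5, k_t=2.0))
```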

5. Simulation Examples

This section contains some simulation examples illustrating the application of the proposed entropy paradigm to the multi-model epidemic system discussed in Section 4. In particular, the behavior of Algorithms 1 and 2 is shown through numerical examples in open loop and in closed loop. The accurate model, taken as the one generating the actual (true) data, is the SEIR model with vaccination described in [27]:

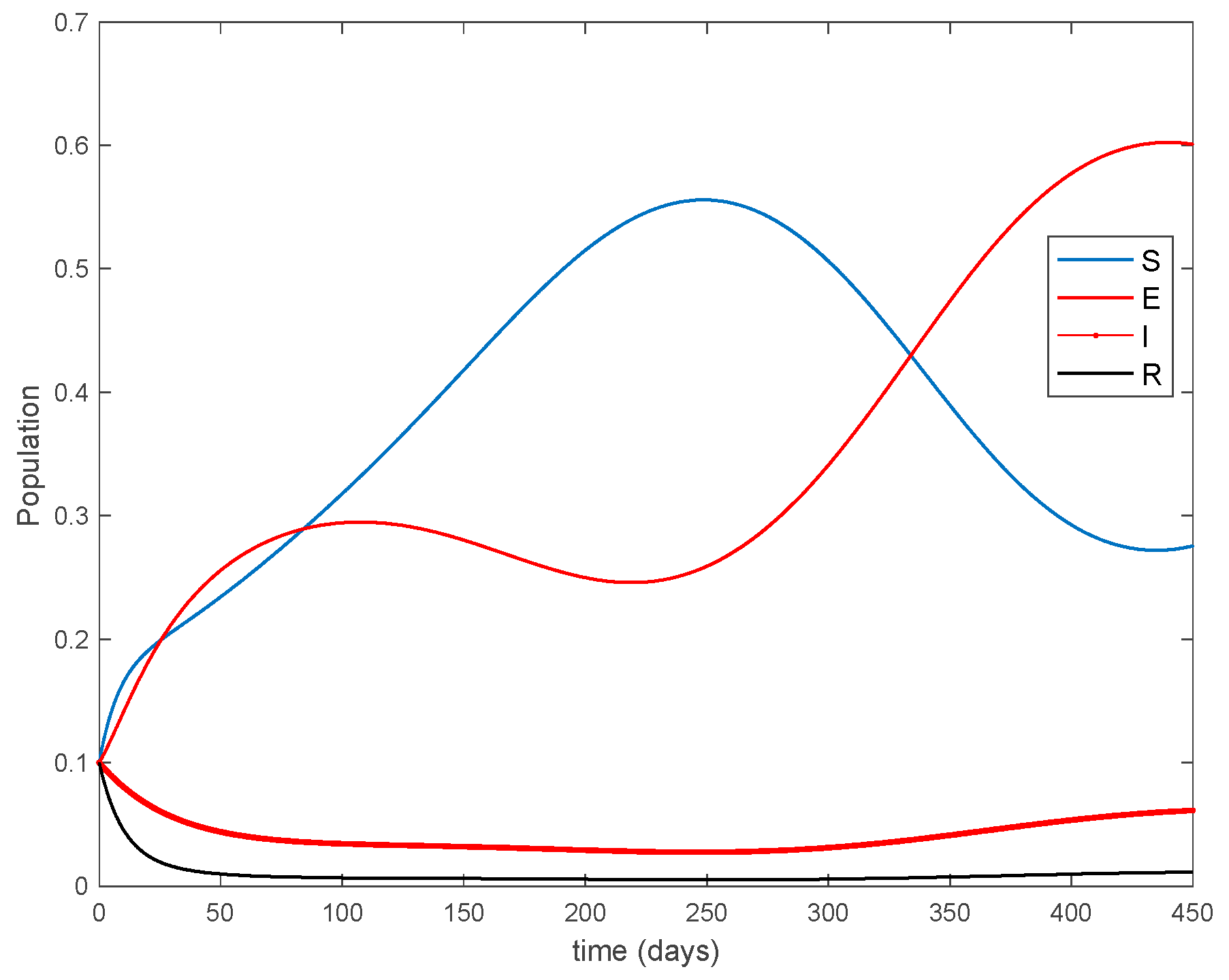

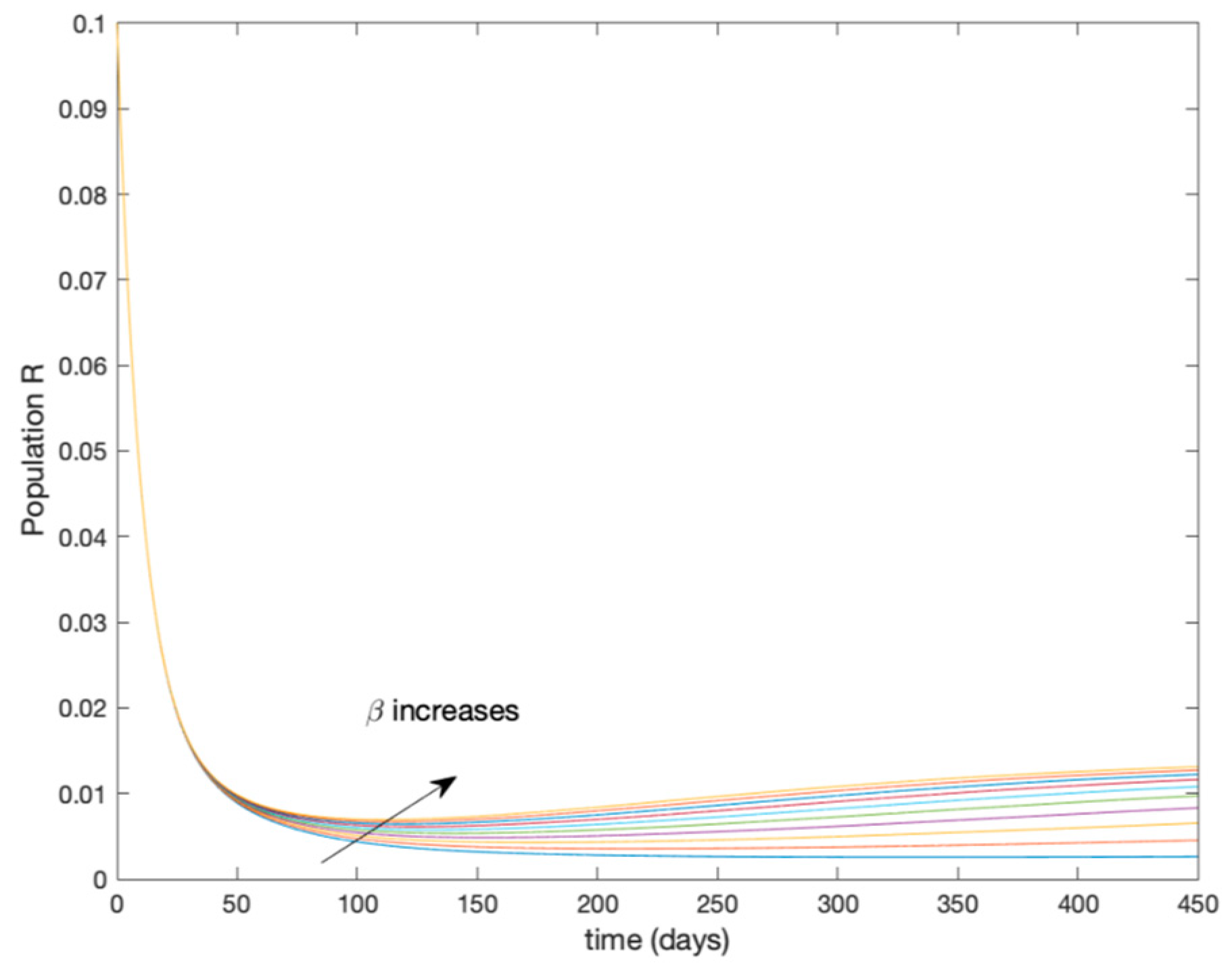

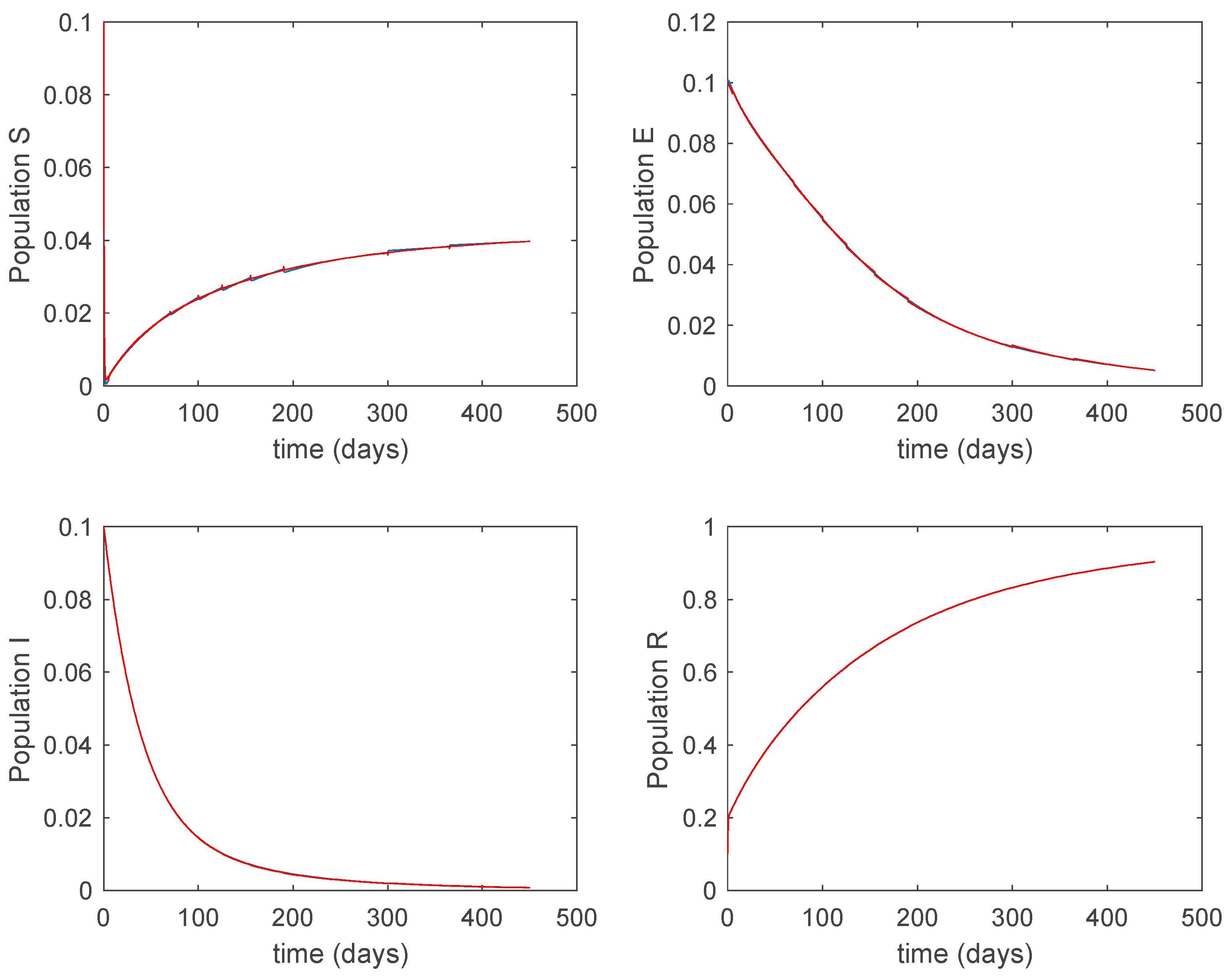

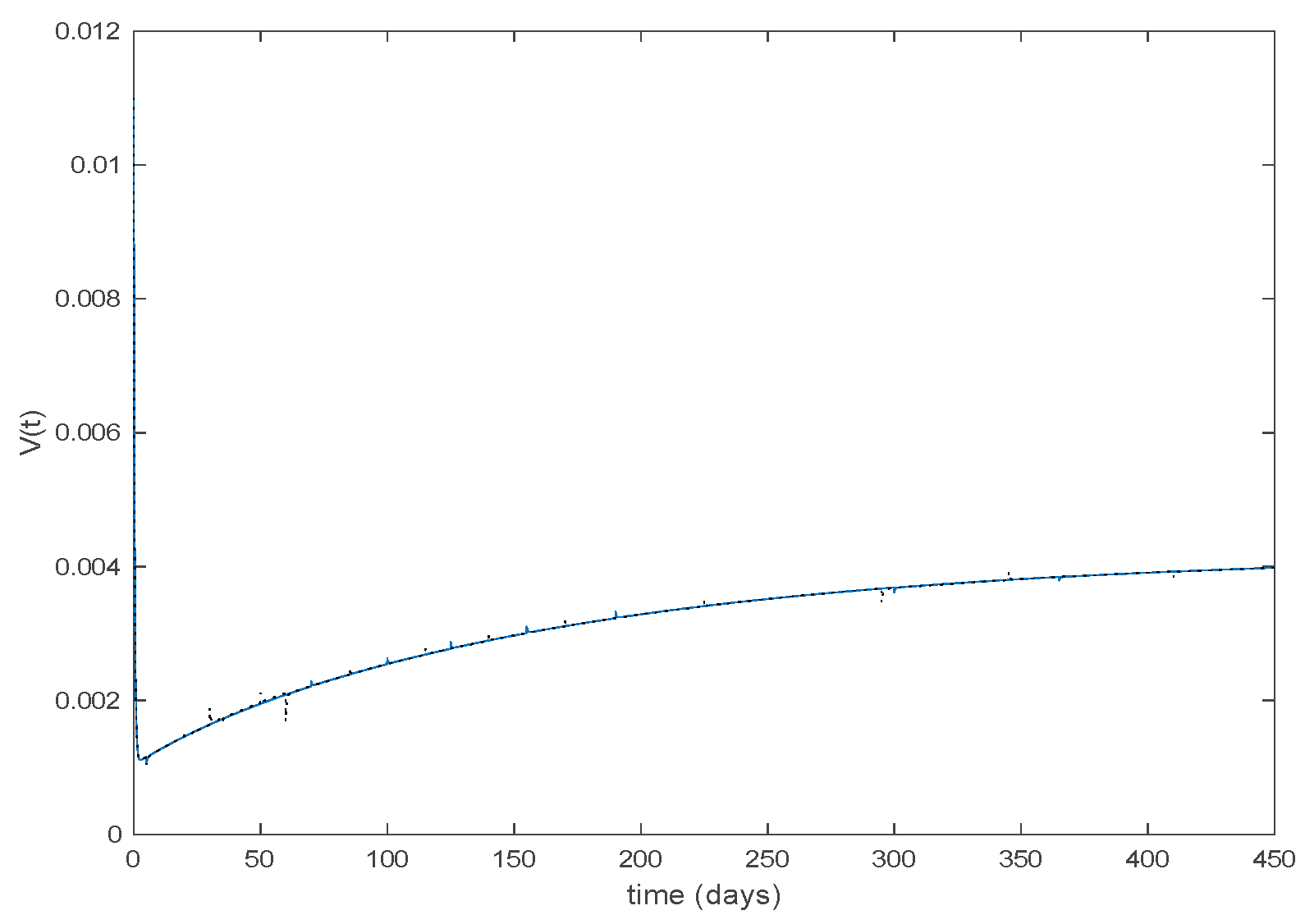

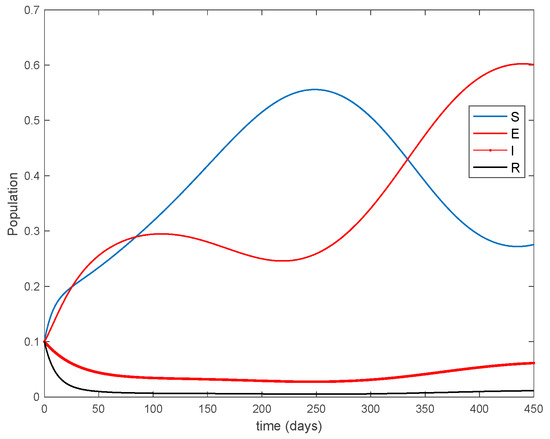

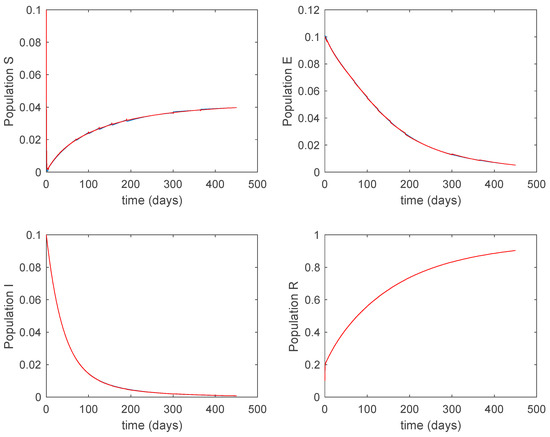

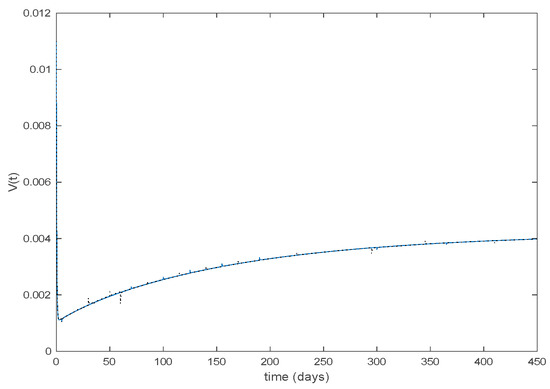

where years−1 is the growth and death rate of the population, years−1, days−1 and days−1 are the instantaneous per capita rates of leaving the exposed, infected and recovered stages, respectively, and denotes the vaccination. This model fits the structure given by (26), where acts as the control command. The initial conditions are given by . All the parameters are assumed to be constant except , the disease transmission coefficient, which describes the seasonality in the infection rate and is given by the widely used Dietz model [30], with and . The function describes annual seasonality in this example. Figure 1 shows the behavior of this system in the absence of any external action (i.e., in open loop). As can be observed in Figure 1, the disease is persistent, since the infectious do not converge asymptotically to zero. This situation is tackled in Section 5.2 by means of the vaccination function, in order to obtain a closed-loop system whose infectious tend to zero.
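Dietz's seasonal transmission coefficient is commonly written as β(t) = β0(1 + β1 cos(2πt + φ)), with t in years. Since the parameter values of this example are not reproduced here, the sketch below leaves them as inputs; the optional phase φ and the function names are assumptions. Over one period β(t) averages to β0 and ranges over [β0(1 − β1), β0(1 + β1)], which is the kind of interval that the constant rates of the parallel models are meant to sweep.

```python
import math


def dietz_beta(t_years, beta0, beta1, phase=0.0):
    """Dietz-type seasonal transmission coefficient with a one-year period.

    beta(t) = beta0 * (1 + beta1 * cos(2*pi*t + phase)), with t in years.
    """
    return beta0 * (1.0 + beta1 * math.cos(2.0 * math.pi * t_years + phase))


def dietz_bounds(beta0, beta1):
    """Minimum and maximum of beta(t) over one period (assumes 0 <= beta1 <= 1)."""
    return beta0 * (1.0 - beta1), beta0 * (1.0 + beta1)


if __name__ == "__main__":
    # Placeholder values, not the ones used in the simulation example.
    print(dietz_beta(0.0, 2.0, 0.5))  # 3.0
    print(dietz_bounds(2.0, 0.5))     # (1.0, 3.0)
```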

Figure 1.

Dynamics of the accurate seasonal epidemic model.

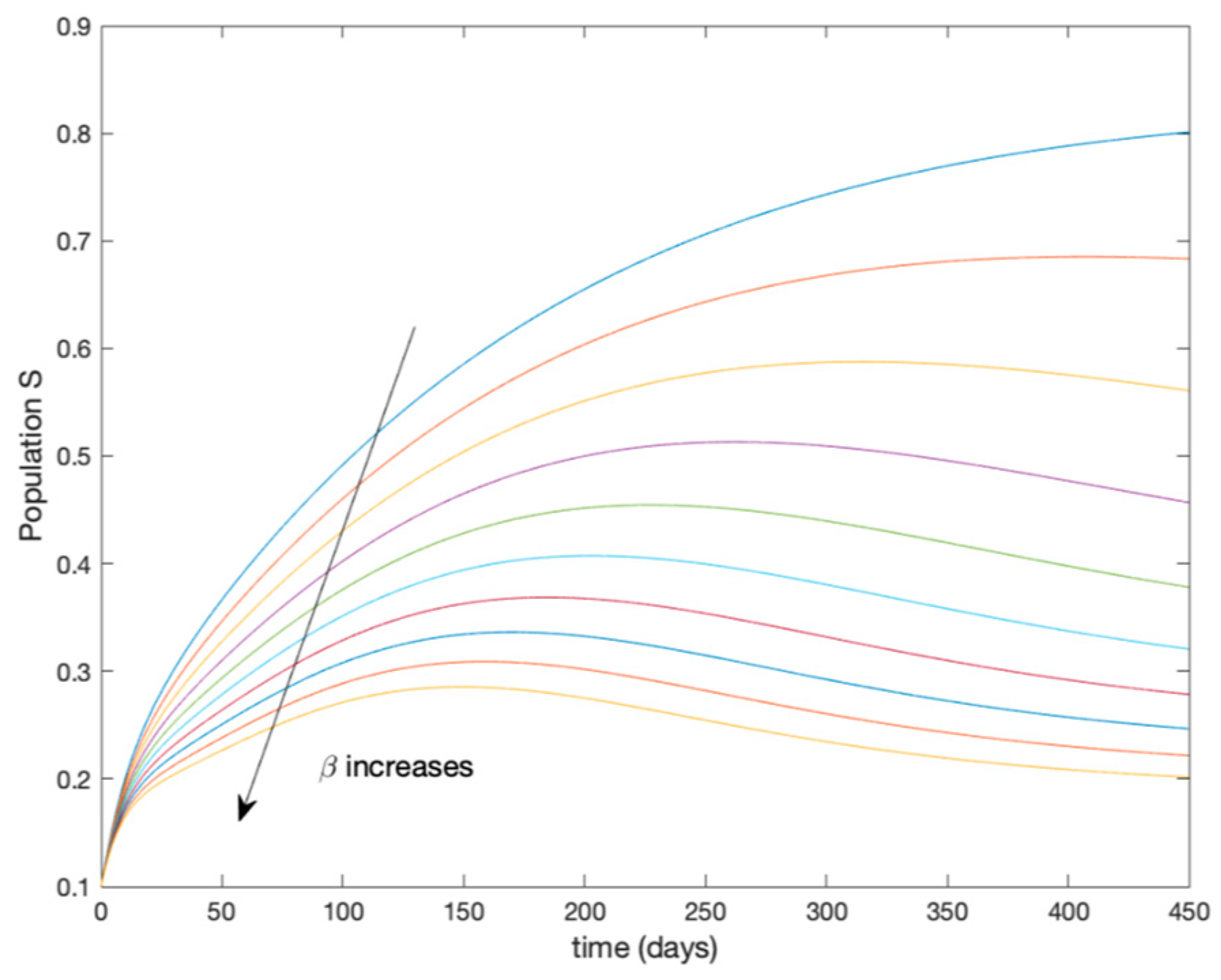

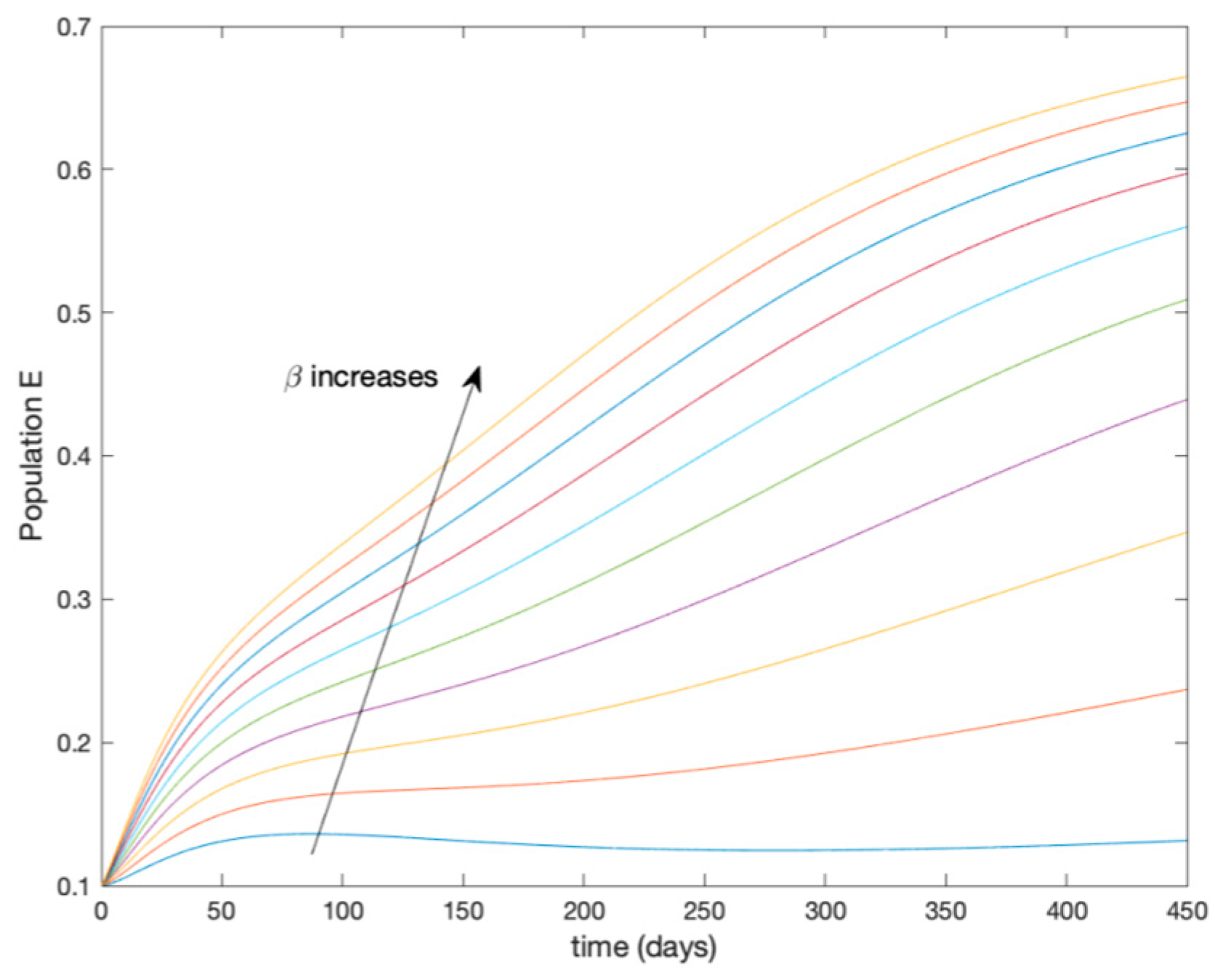

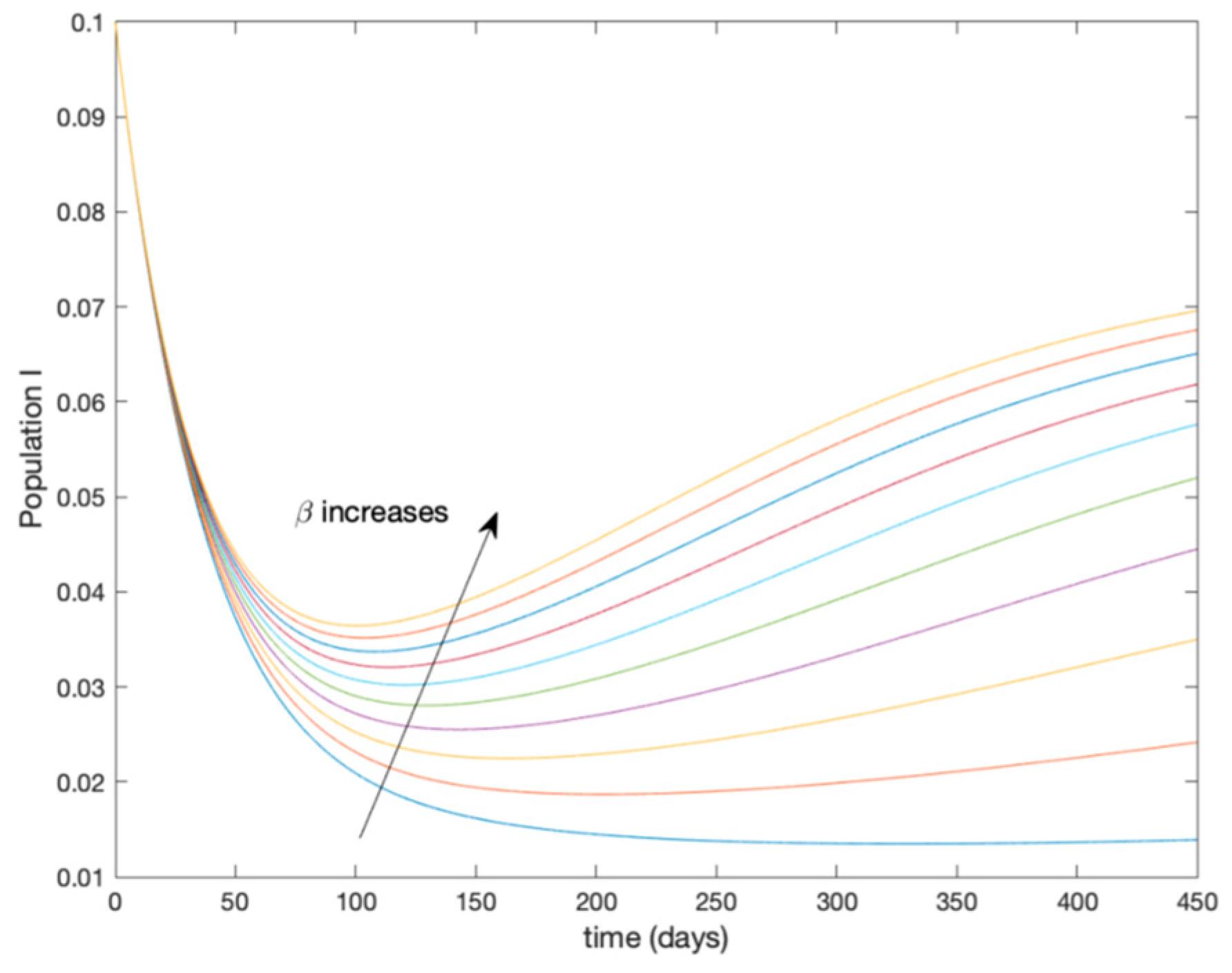

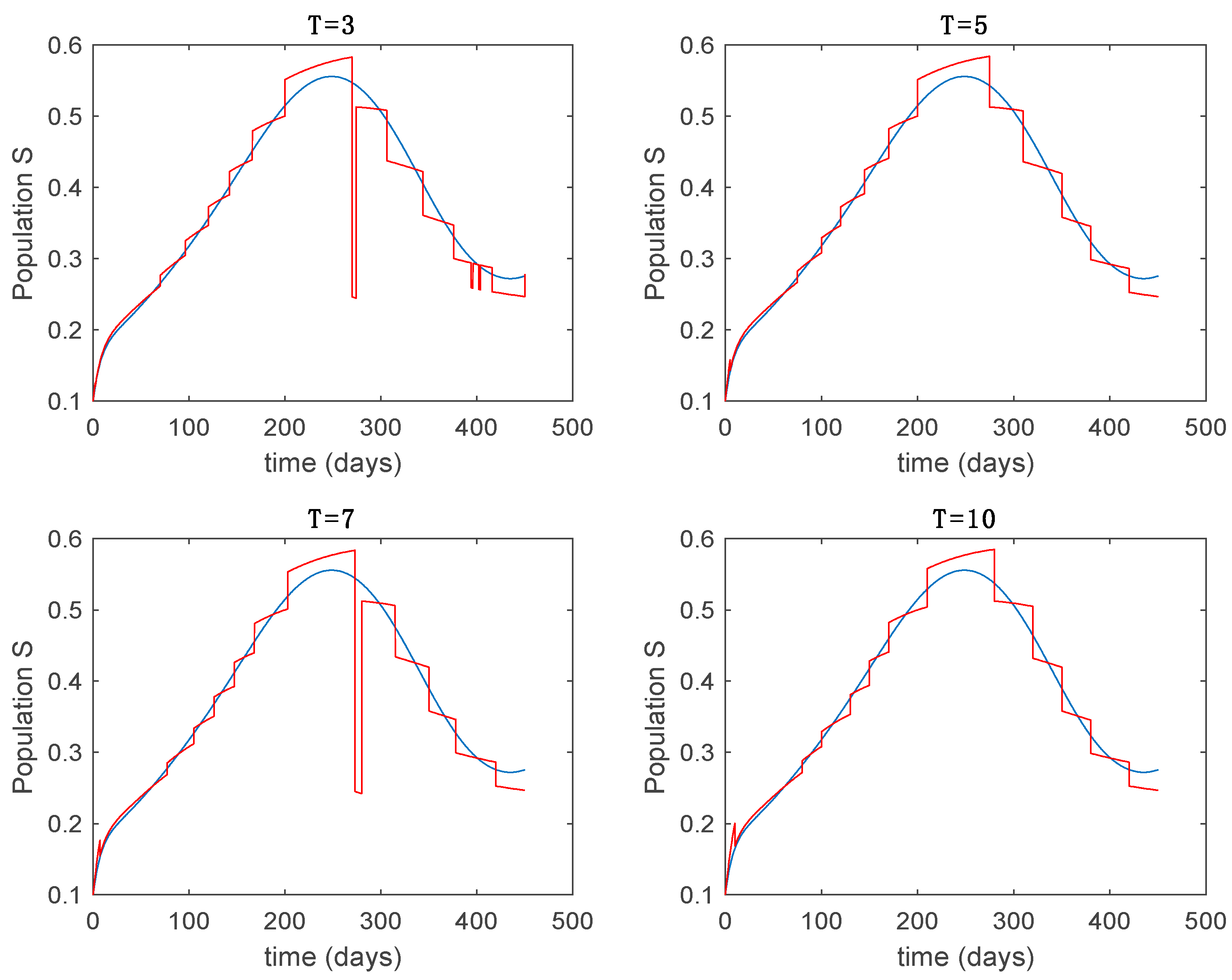

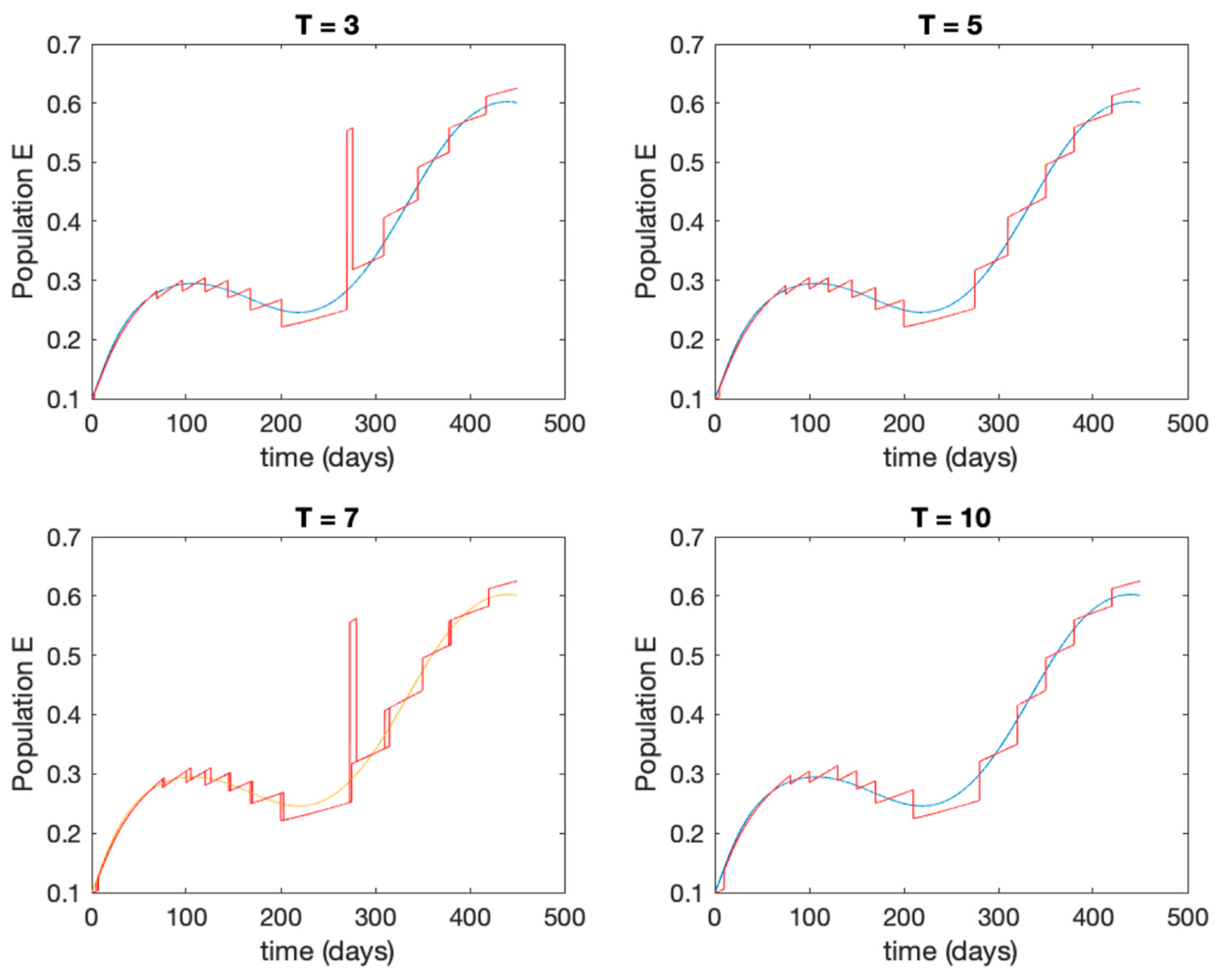

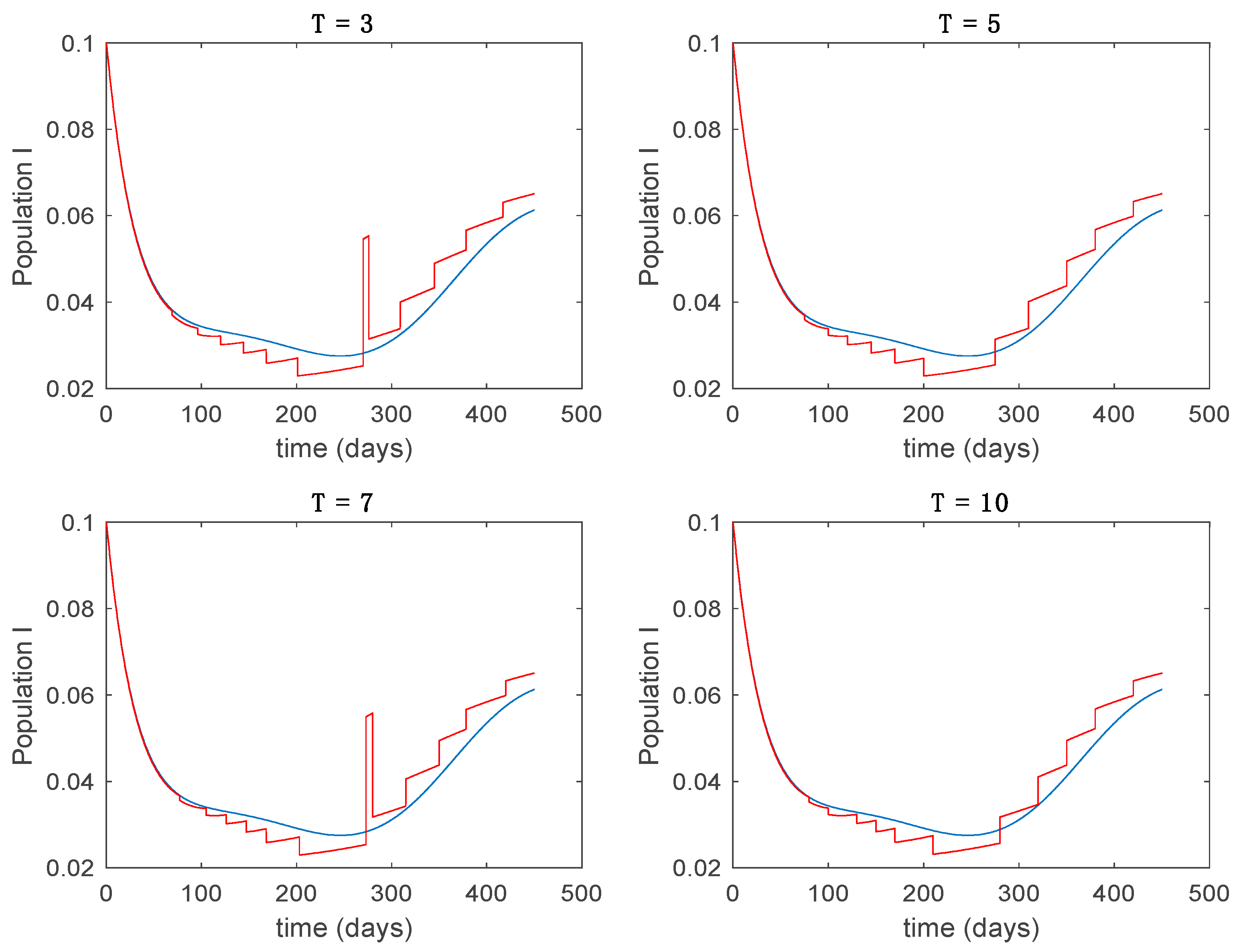

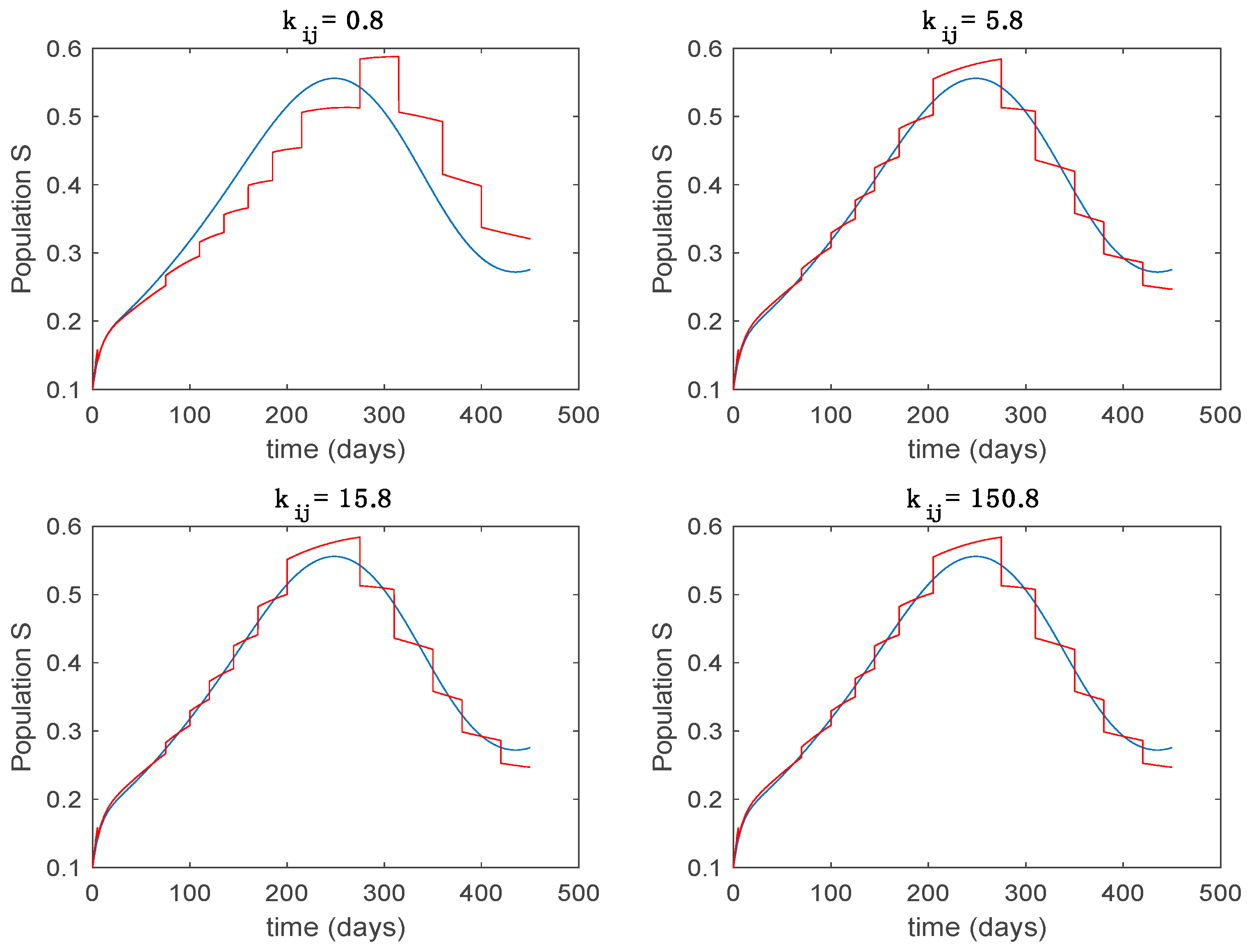

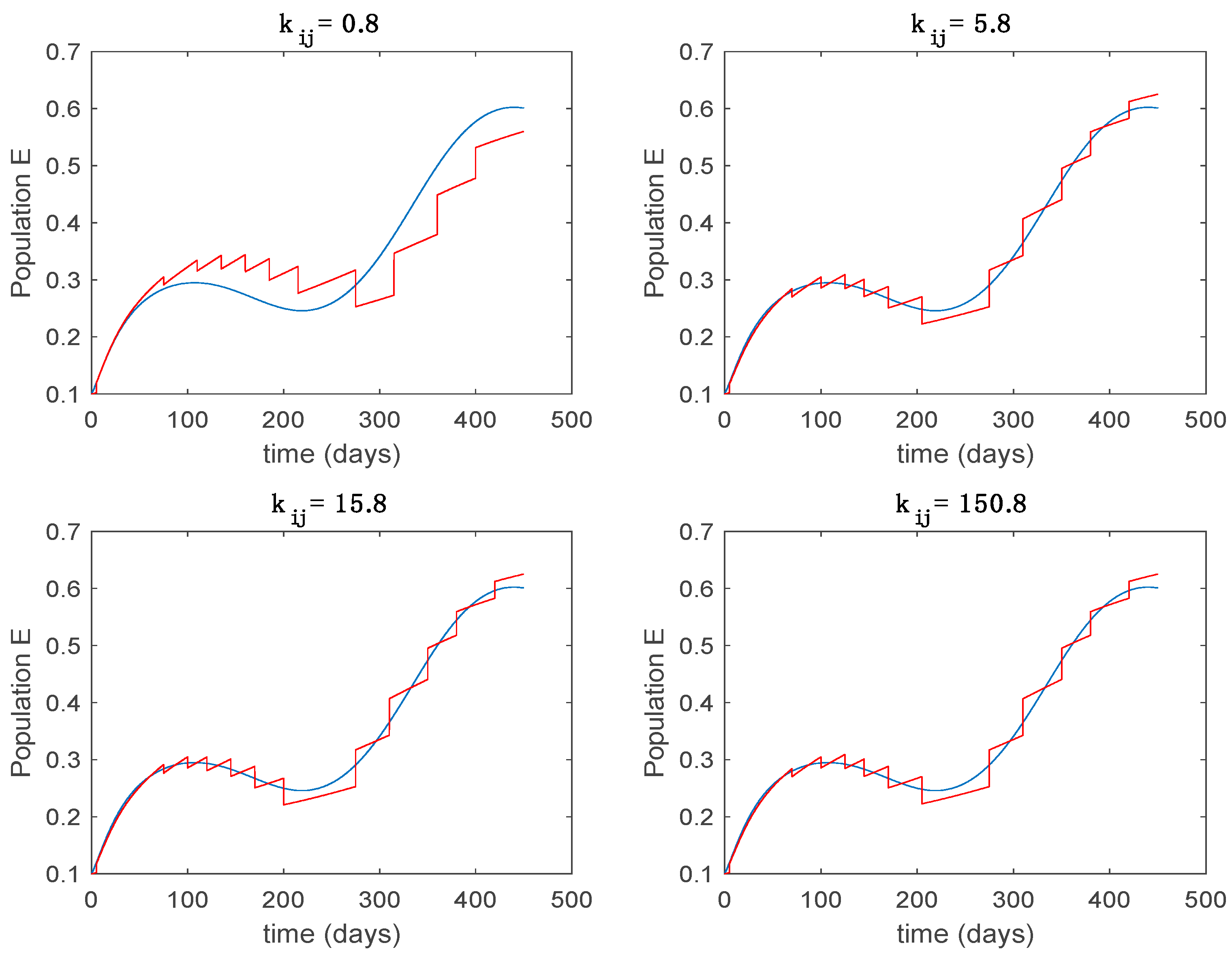

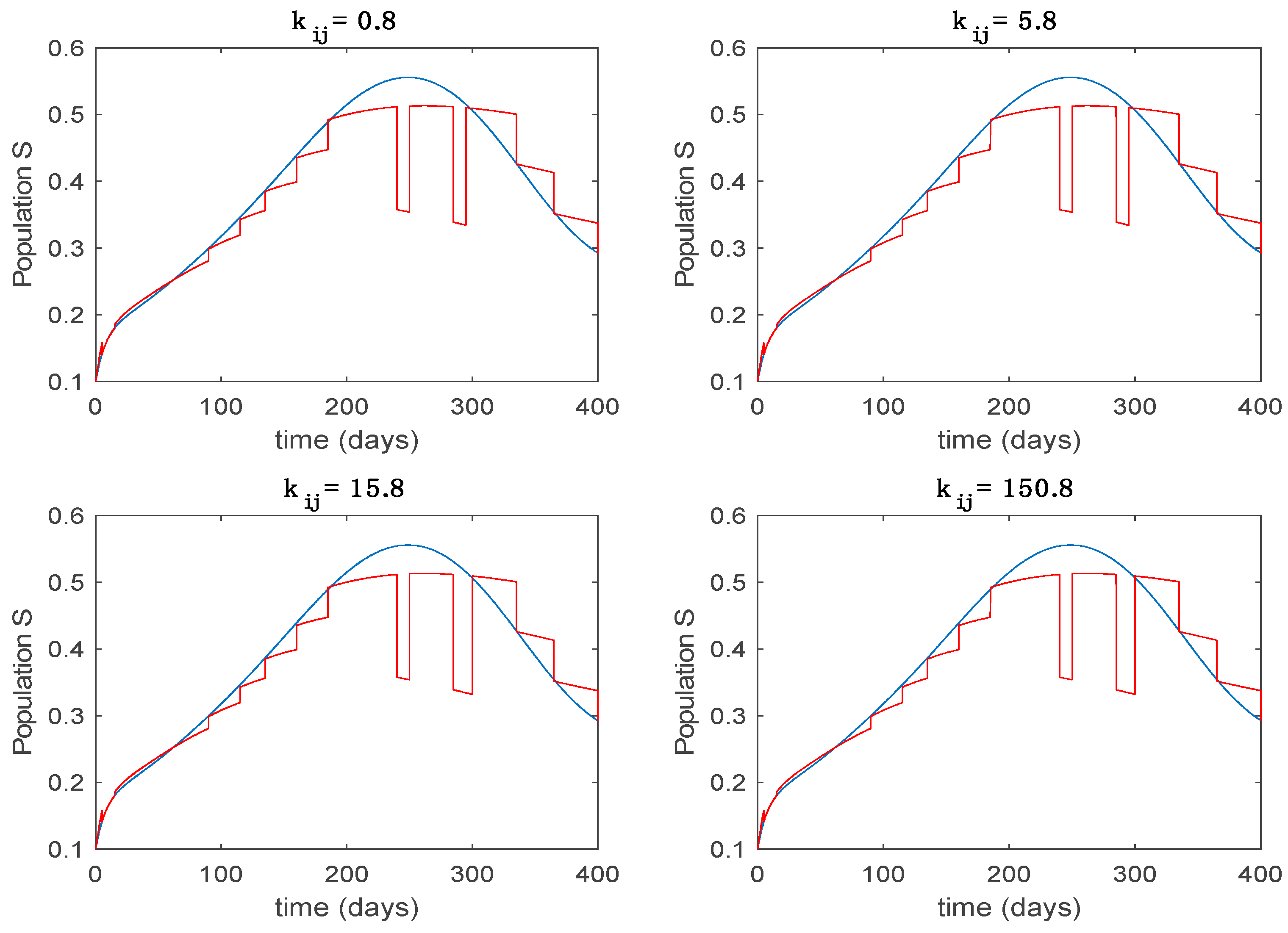

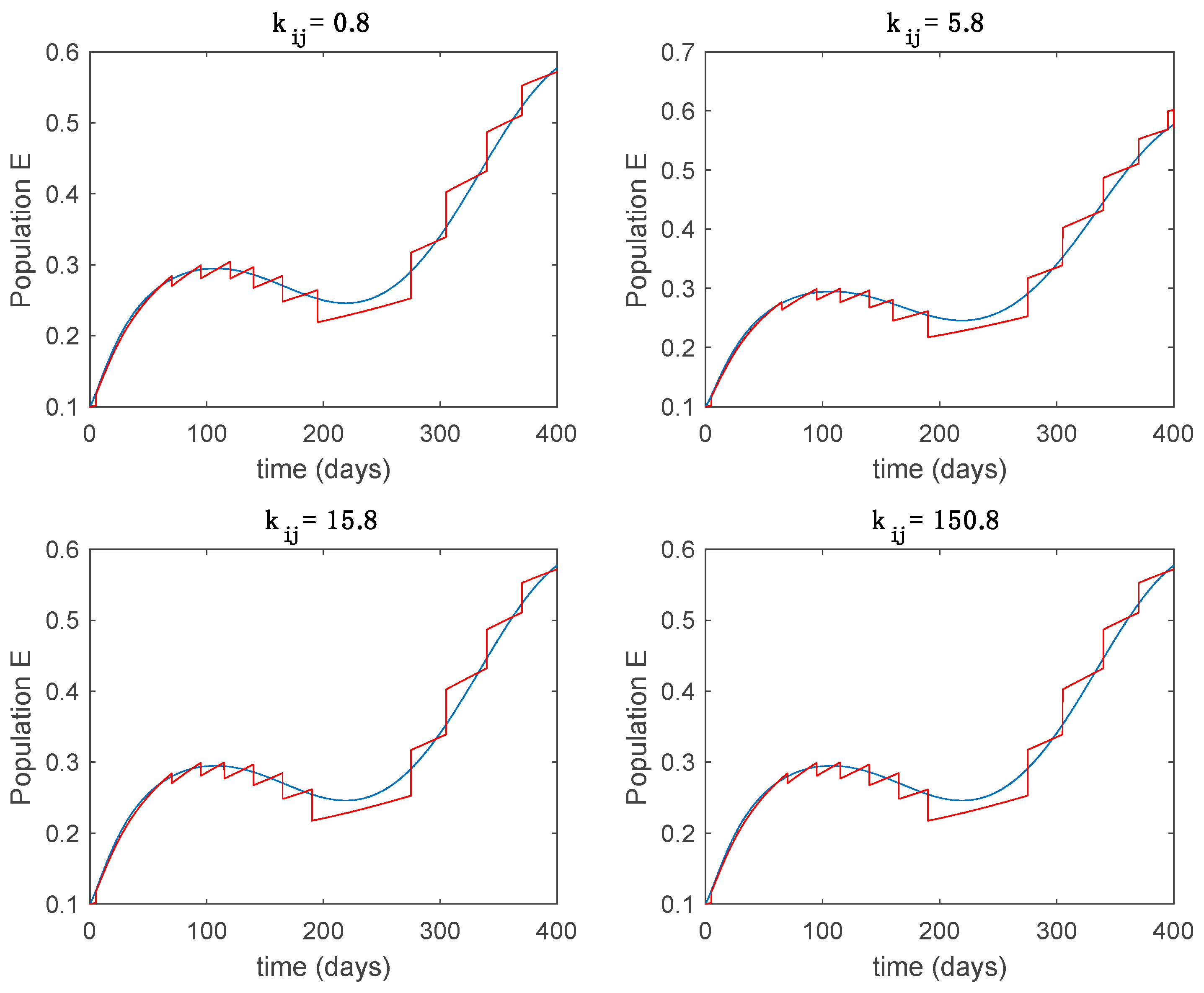

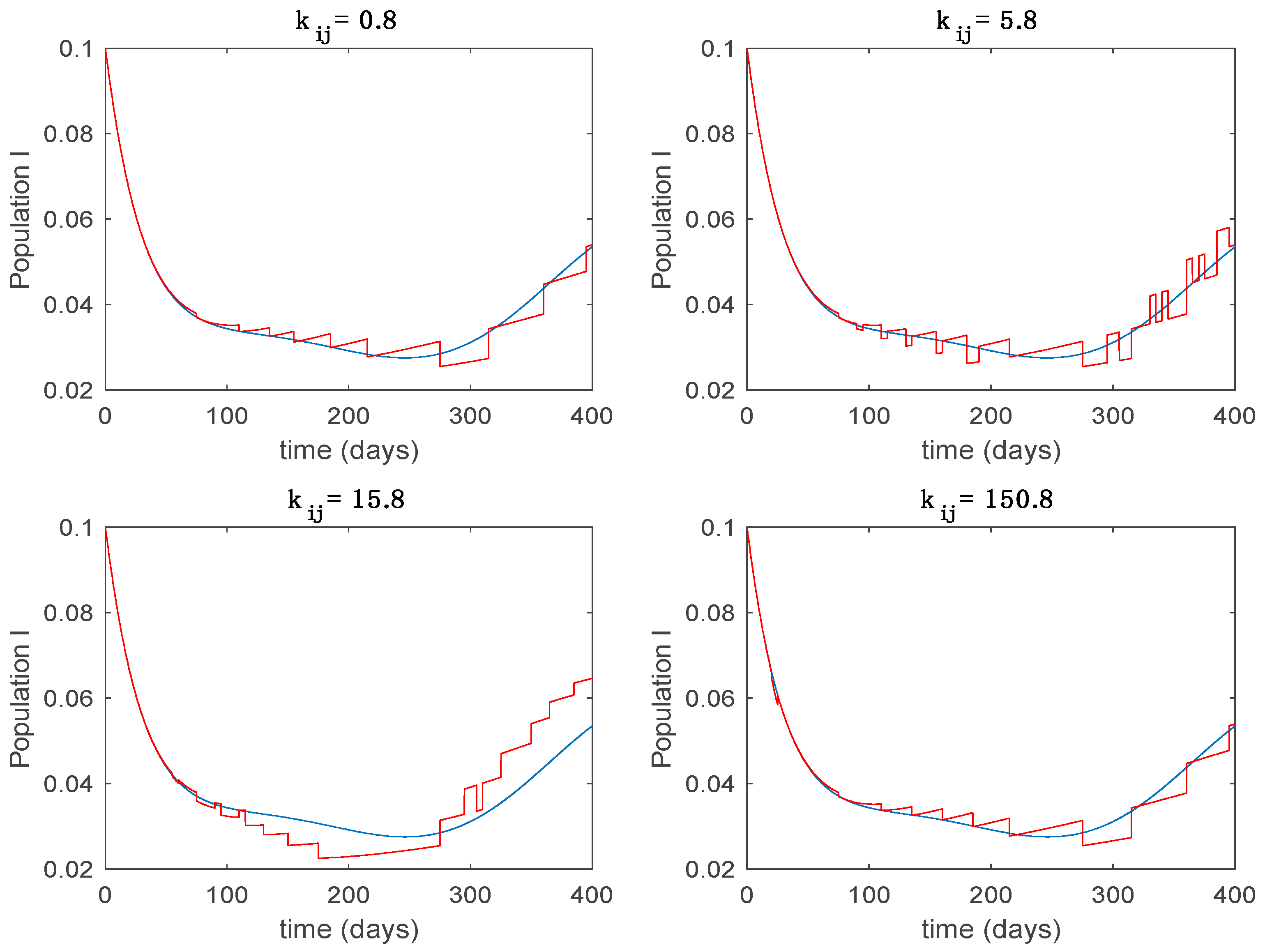

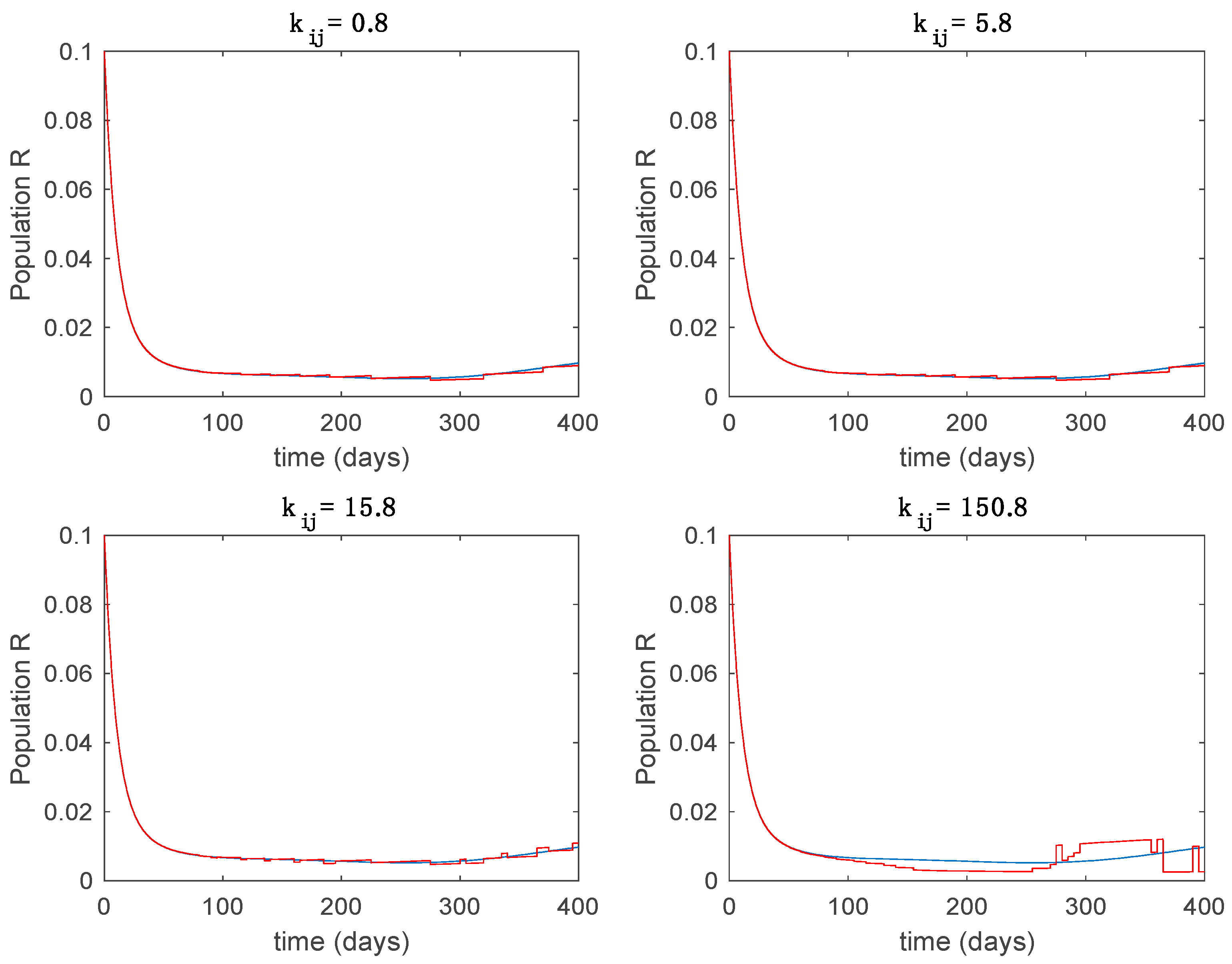

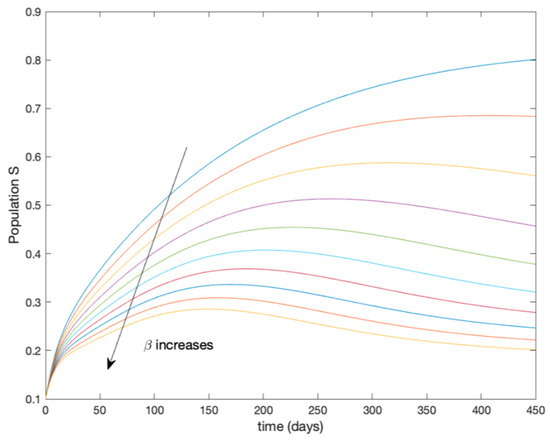

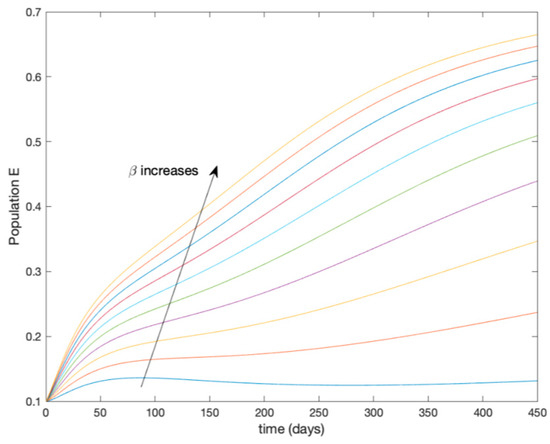

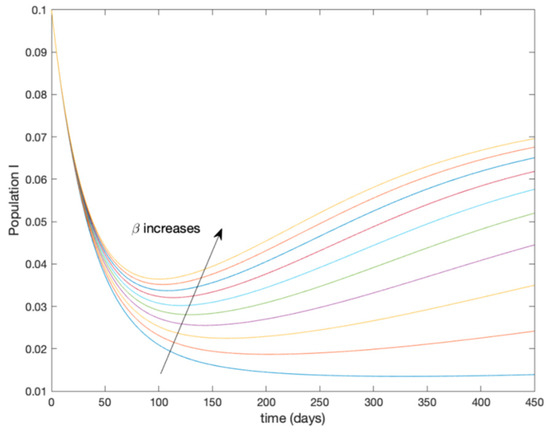

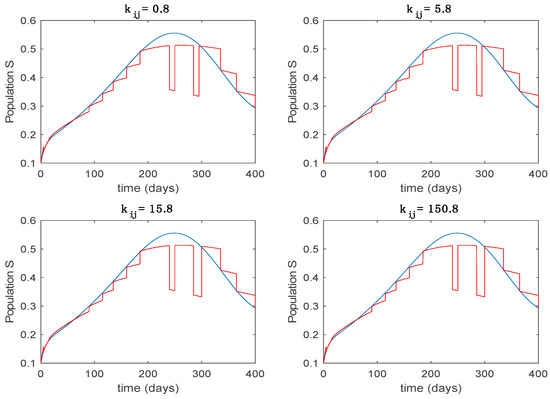

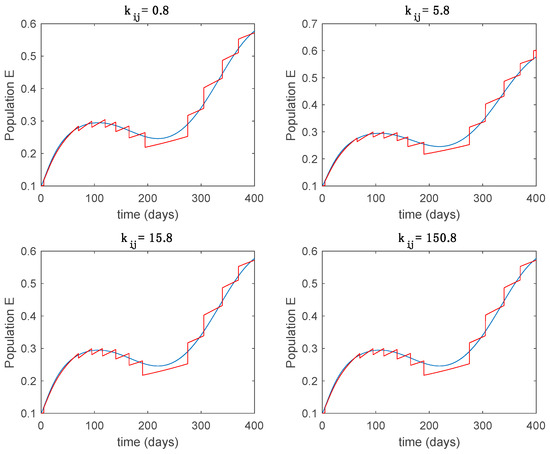

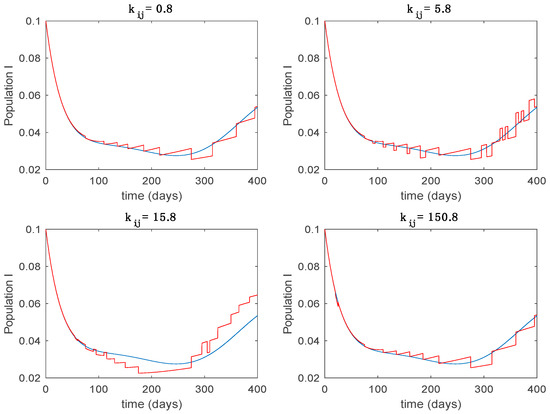

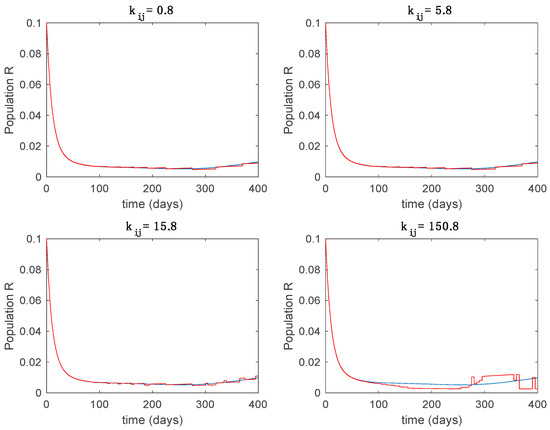

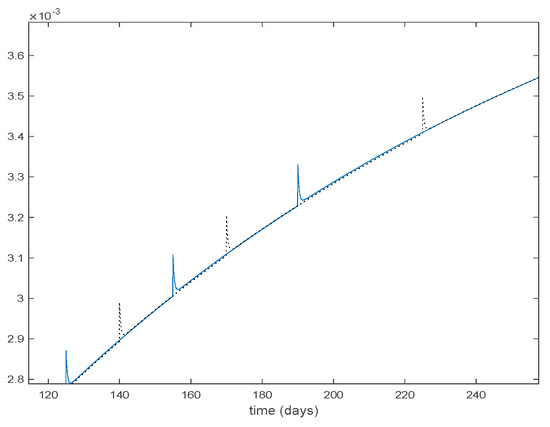

This accurate time-varying model is approximated by a number of simplified time-invariant models running in parallel, with the same constant parameter values and fixed values of obtained according to (44). In this example , so that 10 models run in parallel. Figure 2, Figure 3, Figure 4 and Figure 5 show the trajectories followed by these fixed models for the different constant values of considered.

Figure 2.

Dynamics of susceptible (S) for different values of constant .

Figure 3.

Dynamics of exposed (E) for different values of constant .

Figure 4.

Dynamics of infectious (I) for different values of constant .

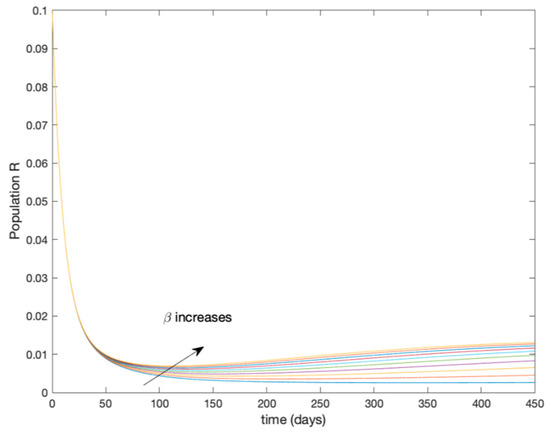

Figure 5.

Dynamics of immune (R) for different values of constant .

It is worth mentioning that none of the models whose trajectories are depicted in Figure 2, Figure 3, Figure 4 and Figure 5 can, on its own, describe the dynamics of the whole accurate model, since none of them is able to reproduce the complex behavior generated by the time-varying seasonal incidence rate, . Therefore, the switching mechanisms of Algorithms 1 and 2, inspired by the entropy paradigm, are employed to generate a switched piecewise-constant model able to describe the time-varying system by means of time-invariant ones. Section 5.1 shows the capability of the switched model to reproduce the behavior of the accurate model in open loop, i.e., in the absence of any external action.

5.1. Open-Loop Switched Model

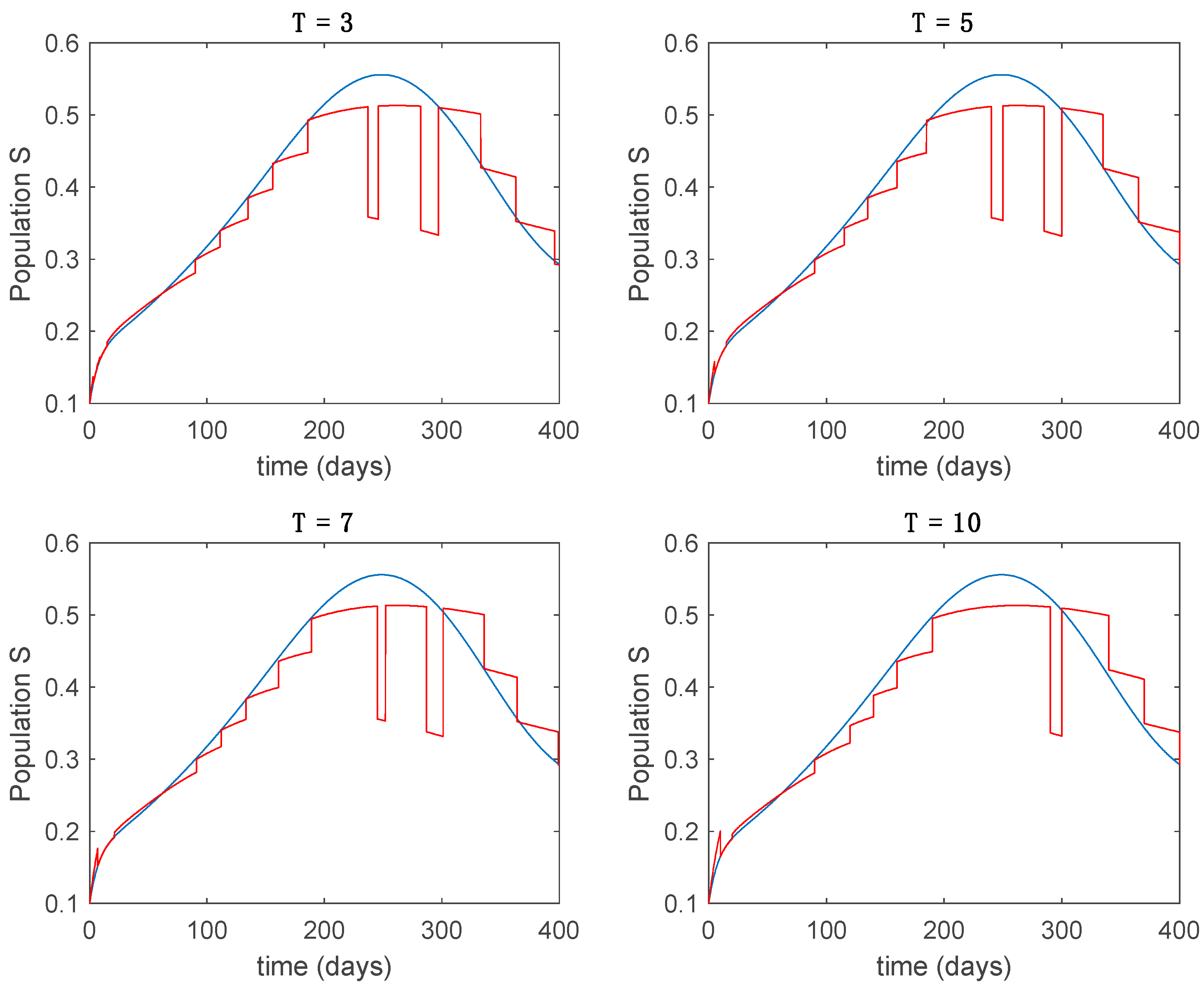

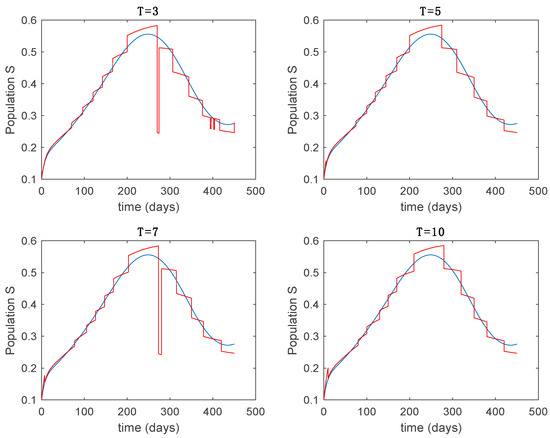

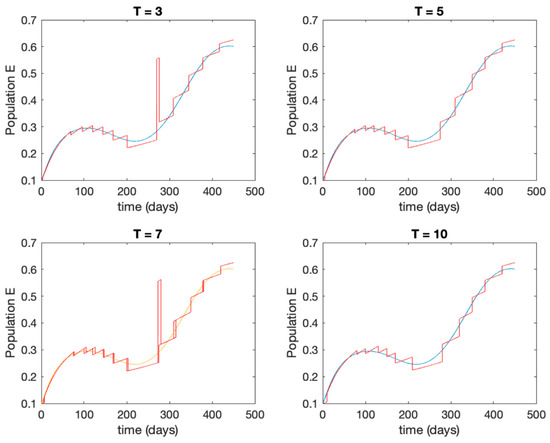

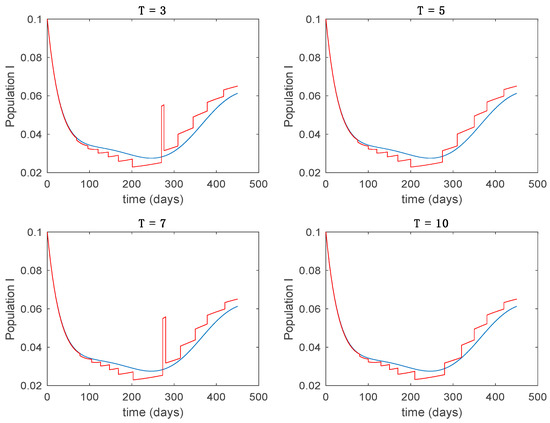

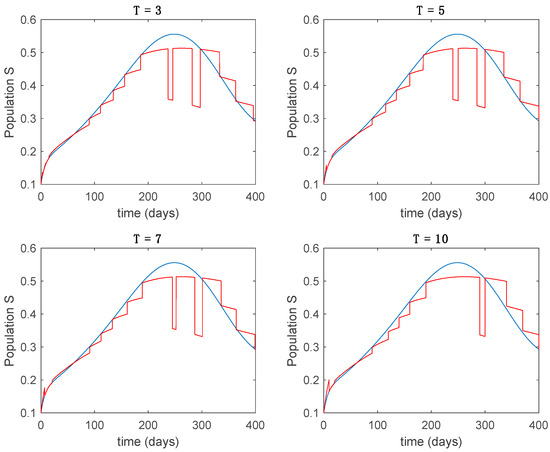

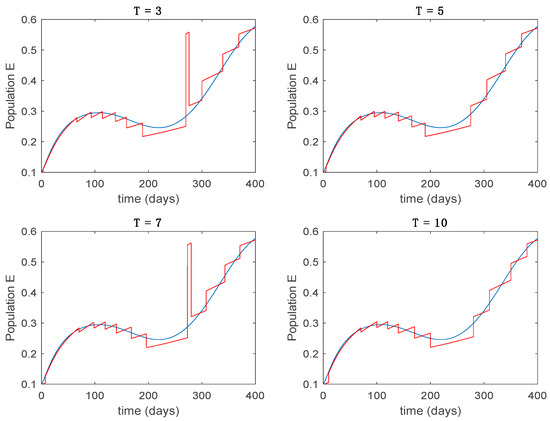

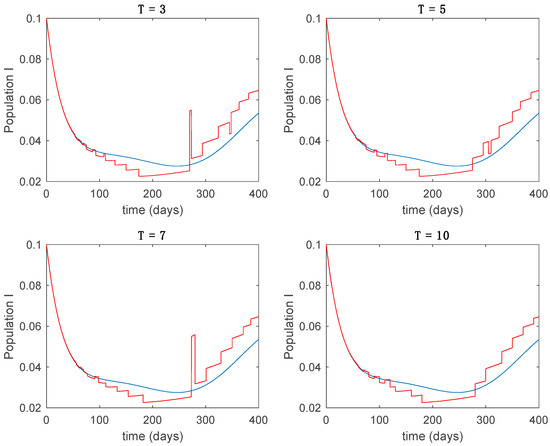

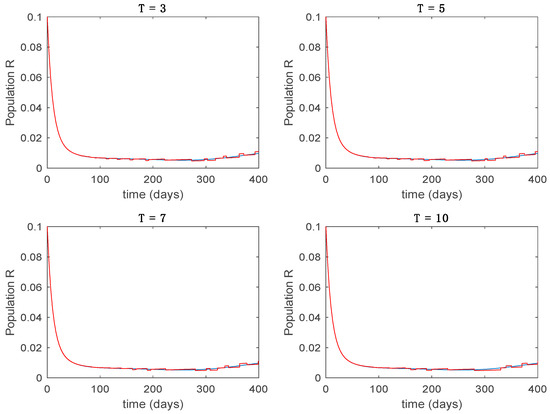

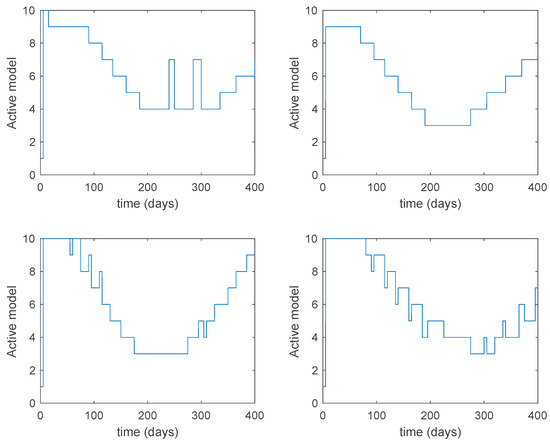

In this subsection the vaccination function is set to zero, so that all the models run in open loop. The simulations are performed for different values of the switching time (in days) and of the probability gains of (49), in order to observe their influence on the open-loop trajectories. The values of the probability gains also include the inflation case. Thus, Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10 display the trajectories of the switched system for Algorithm 1, different values of the switching time and a constant gain of , while Figure 11, Figure 12, Figure 13, Figure 14 and Figure 15 depict the trajectories for different values of the probability gains and a constant value of days. In all the subsequent figures, the blue line denotes the output of the time-varying system, while the output of the switched system is displayed in red; legends have therefore been omitted from the figures for the sake of clarity. On the other hand, Figure 16, Figure 17, Figure 18, Figure 19, Figure 20, Figure 21, Figure 22, Figure 23 and Figure 24 display the trajectories under the same conditions but for Algorithm 2.

Figure 6.

Trajectory of the susceptible for the switched system (Algorithm 1) and different values of .

Figure 7.

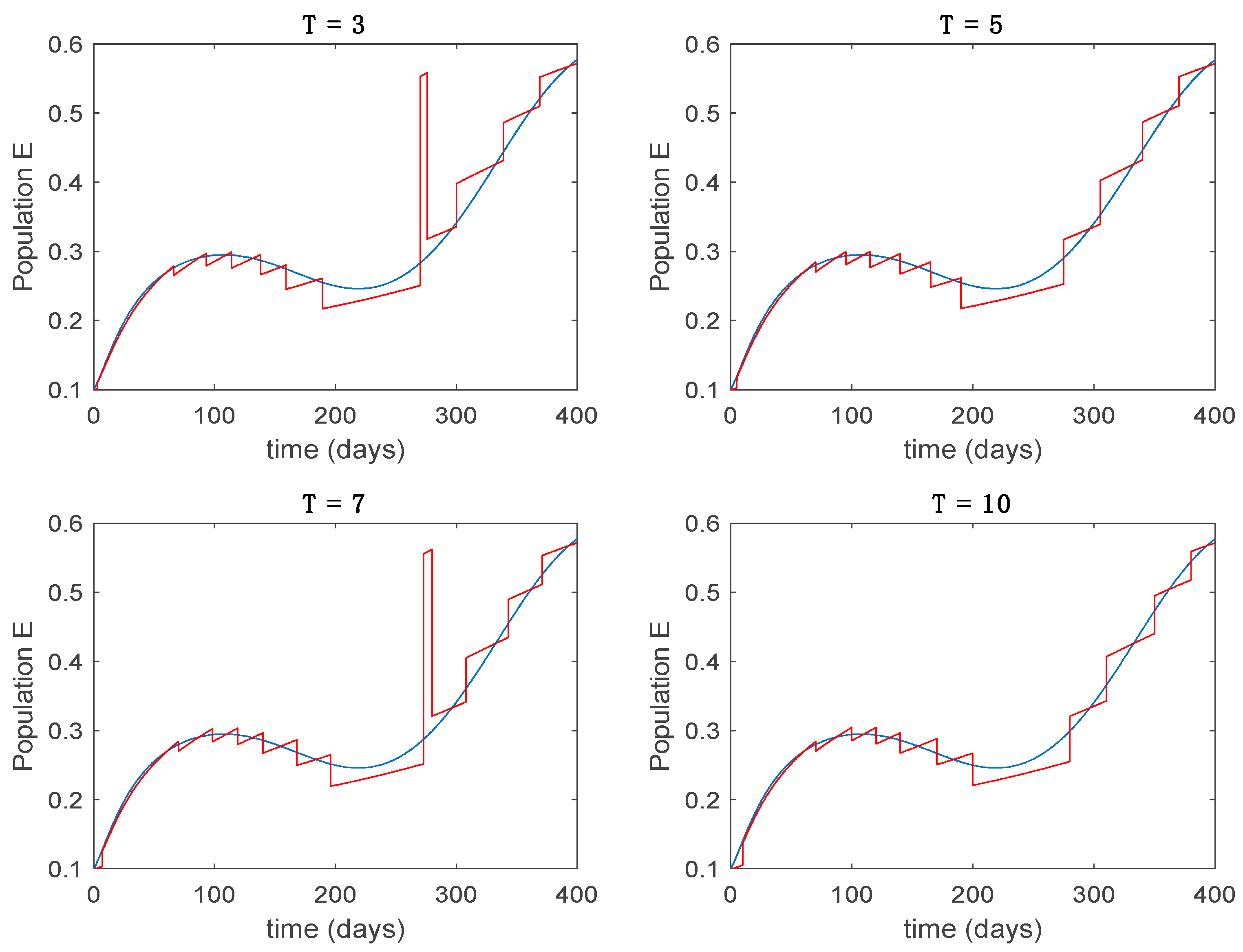

Trajectory of the exposed for the switched system (Algorithm 1) and different values of .

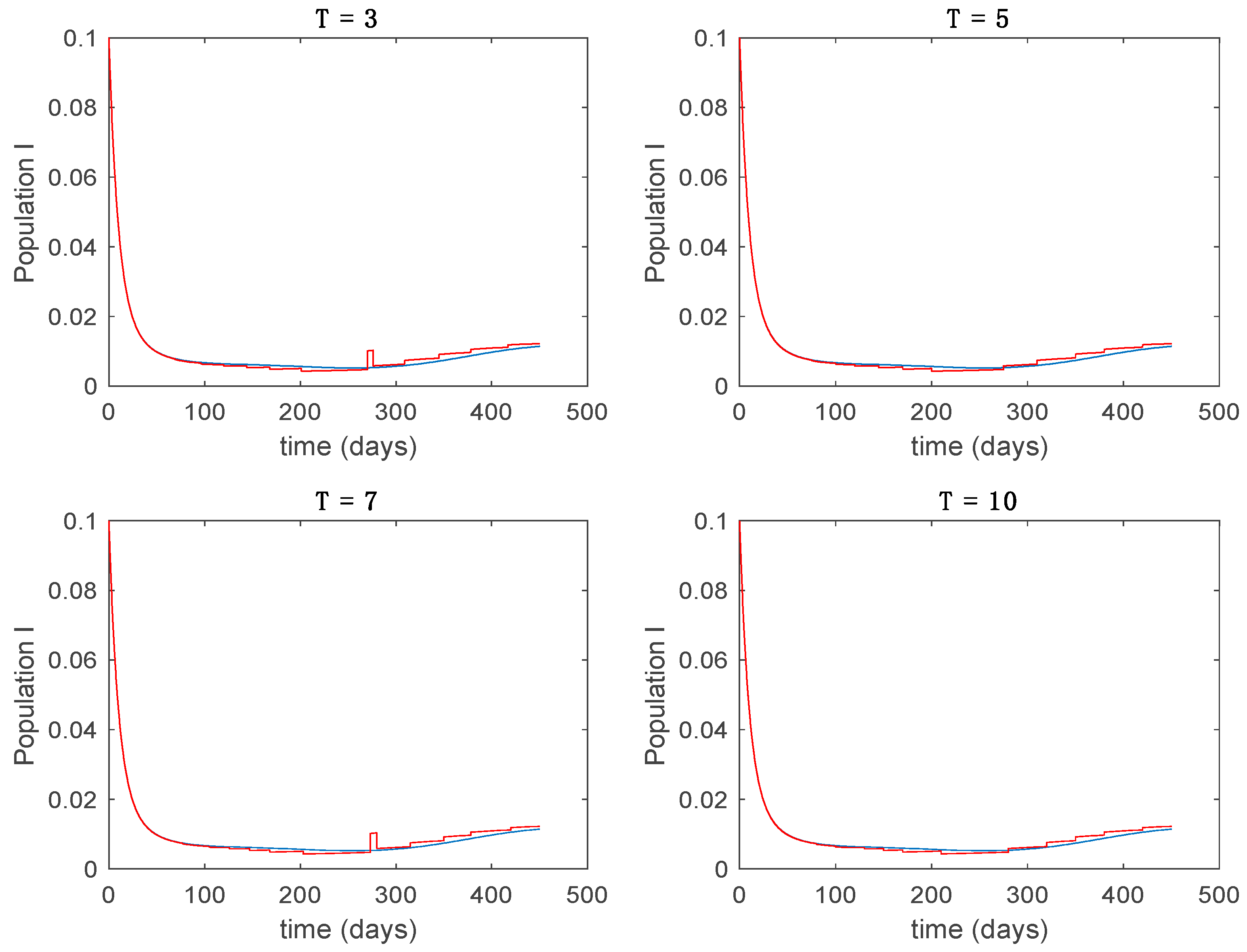

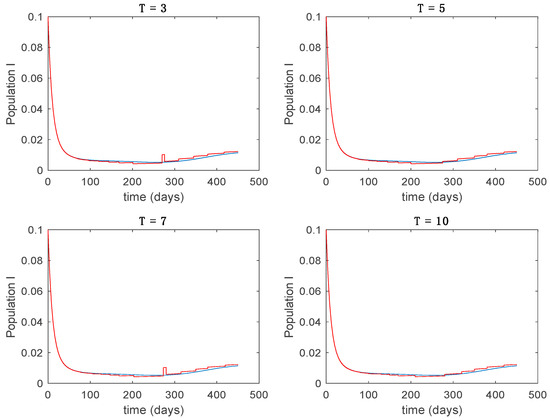

Figure 8.

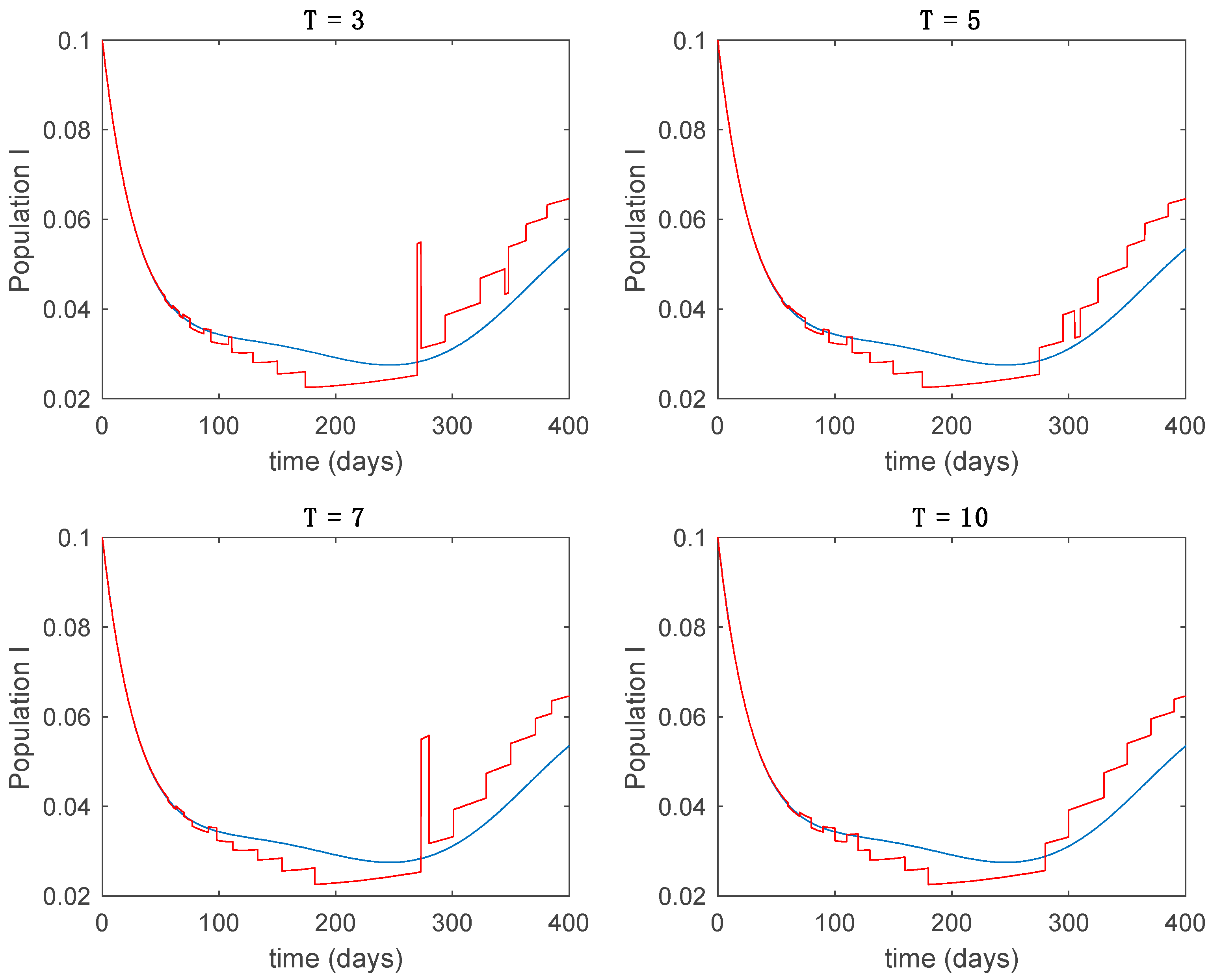

Trajectory of the infectious for the switched system (Algorithm 1) and different values of .

Figure 9.

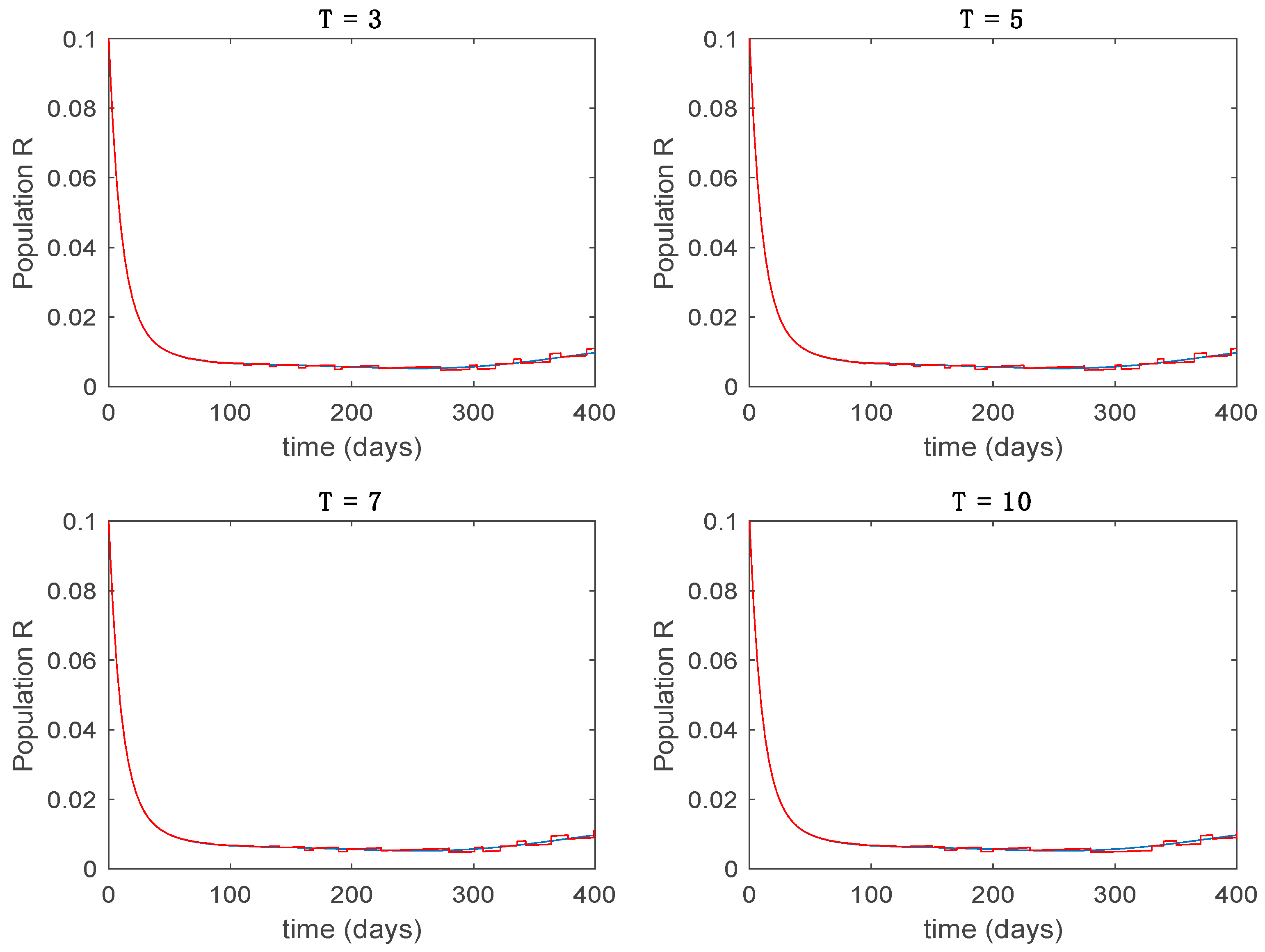

Trajectory of the immune for the switched system (Algorithm 1) and different values of T.

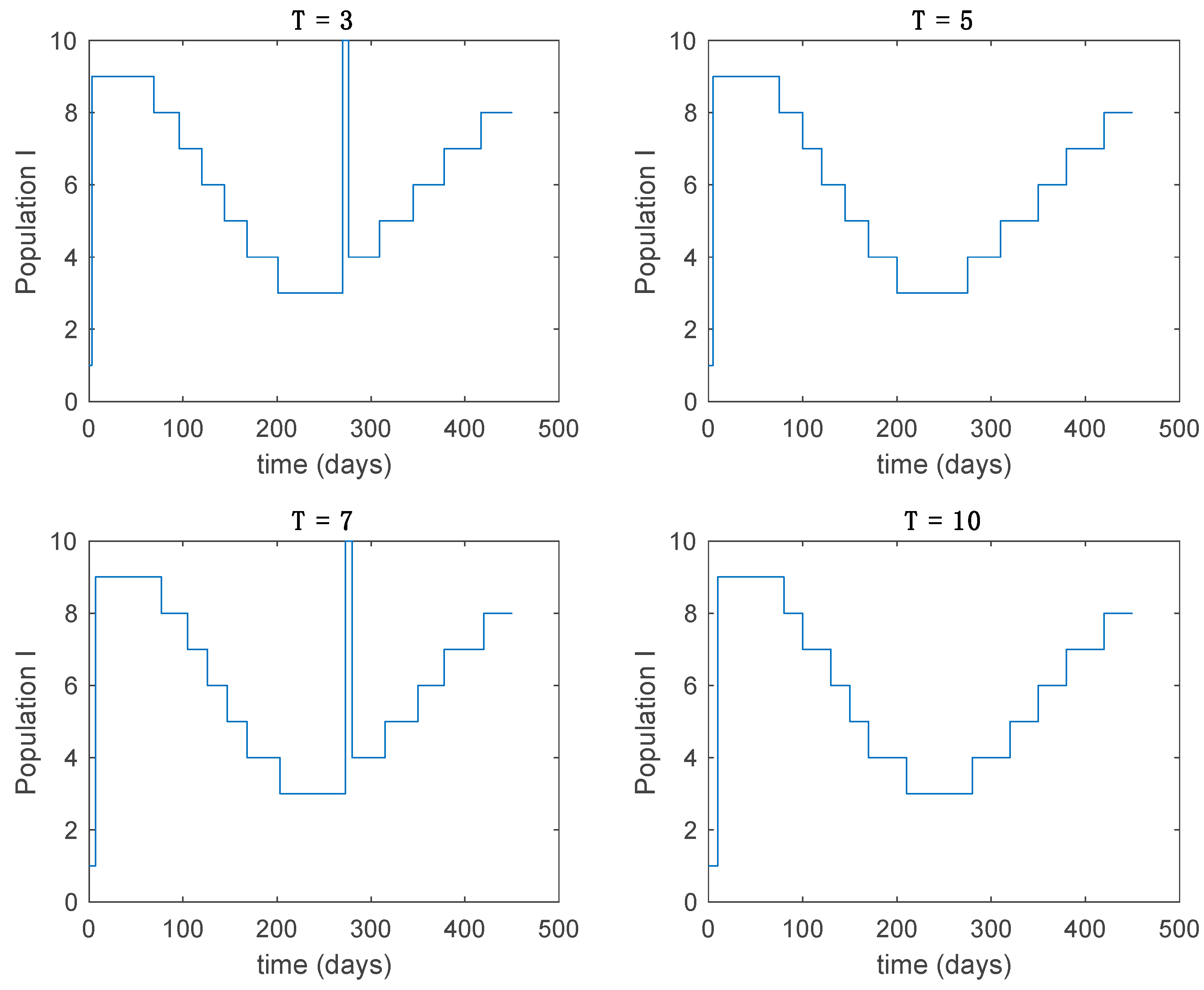

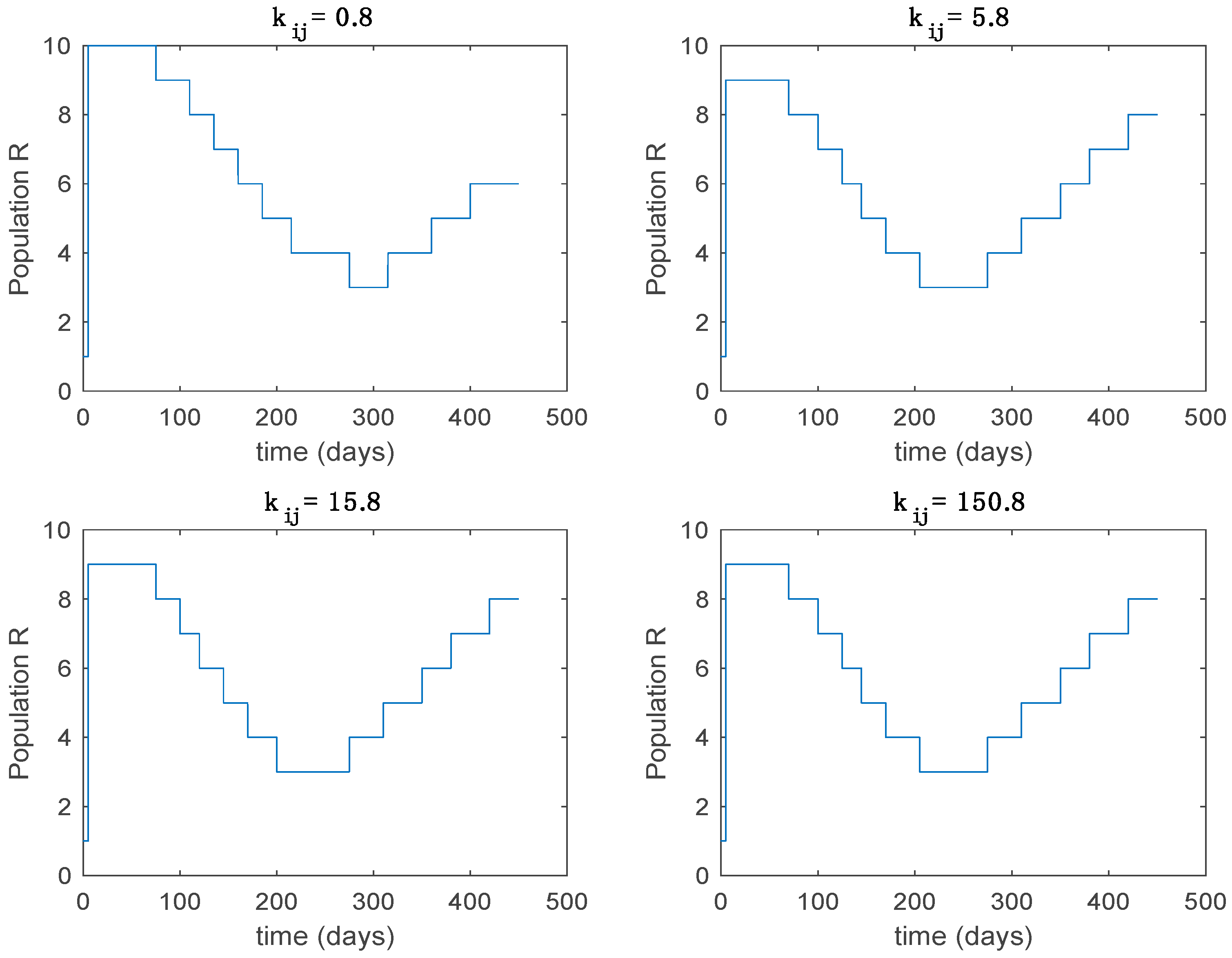

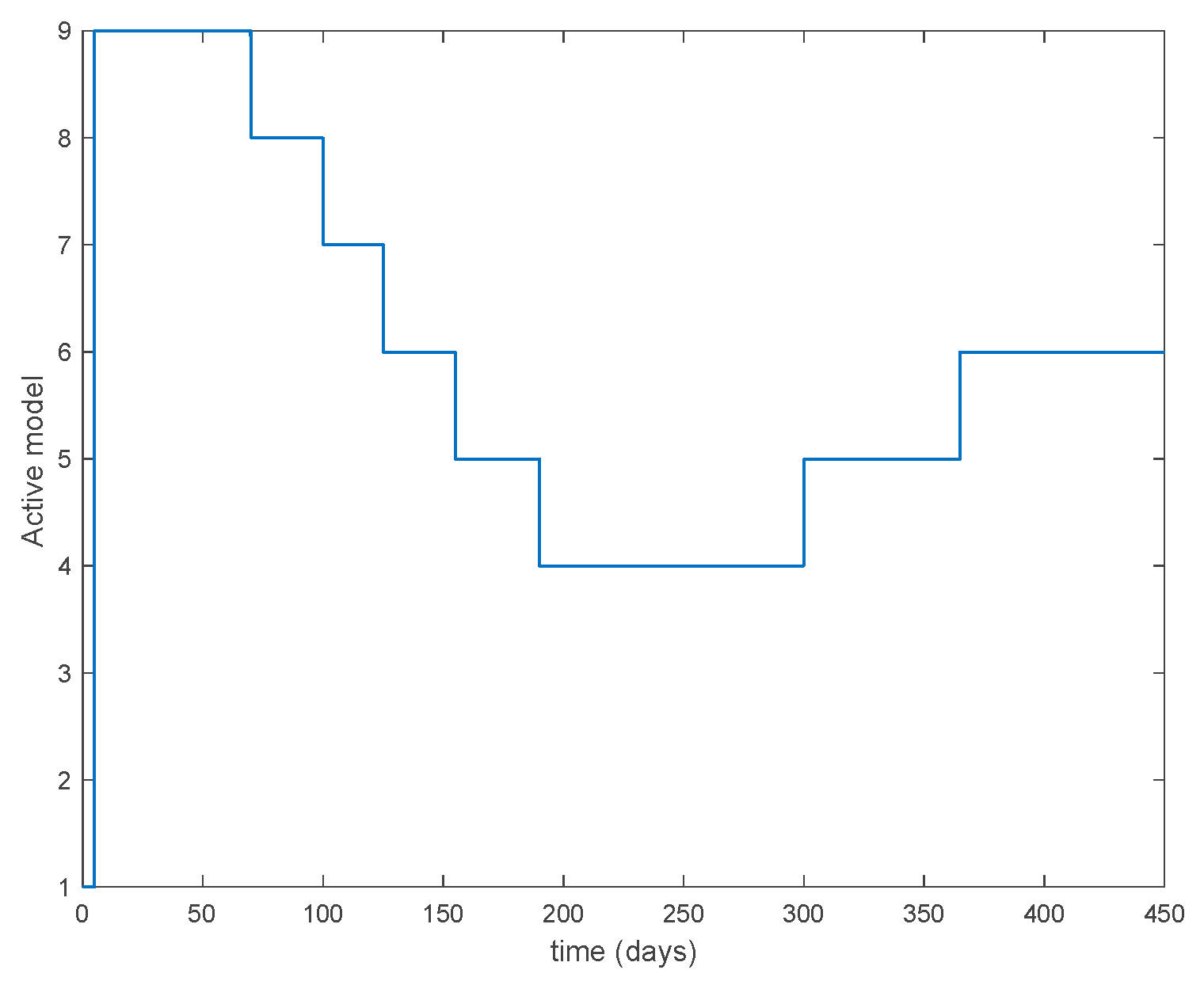

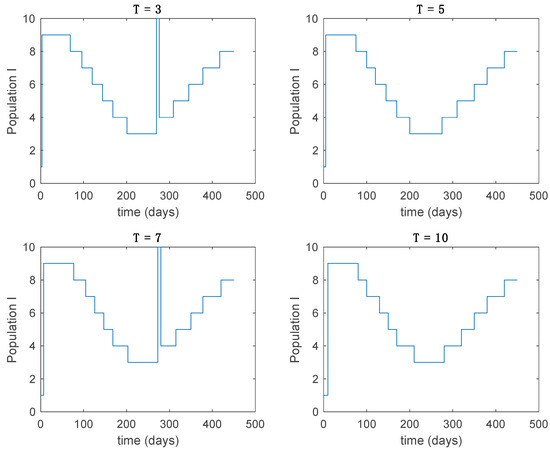

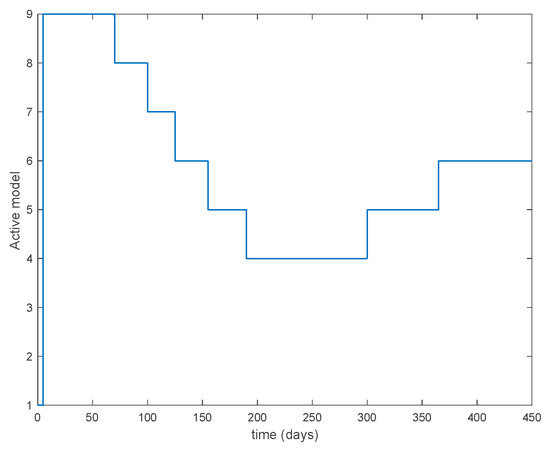

Figure 10.

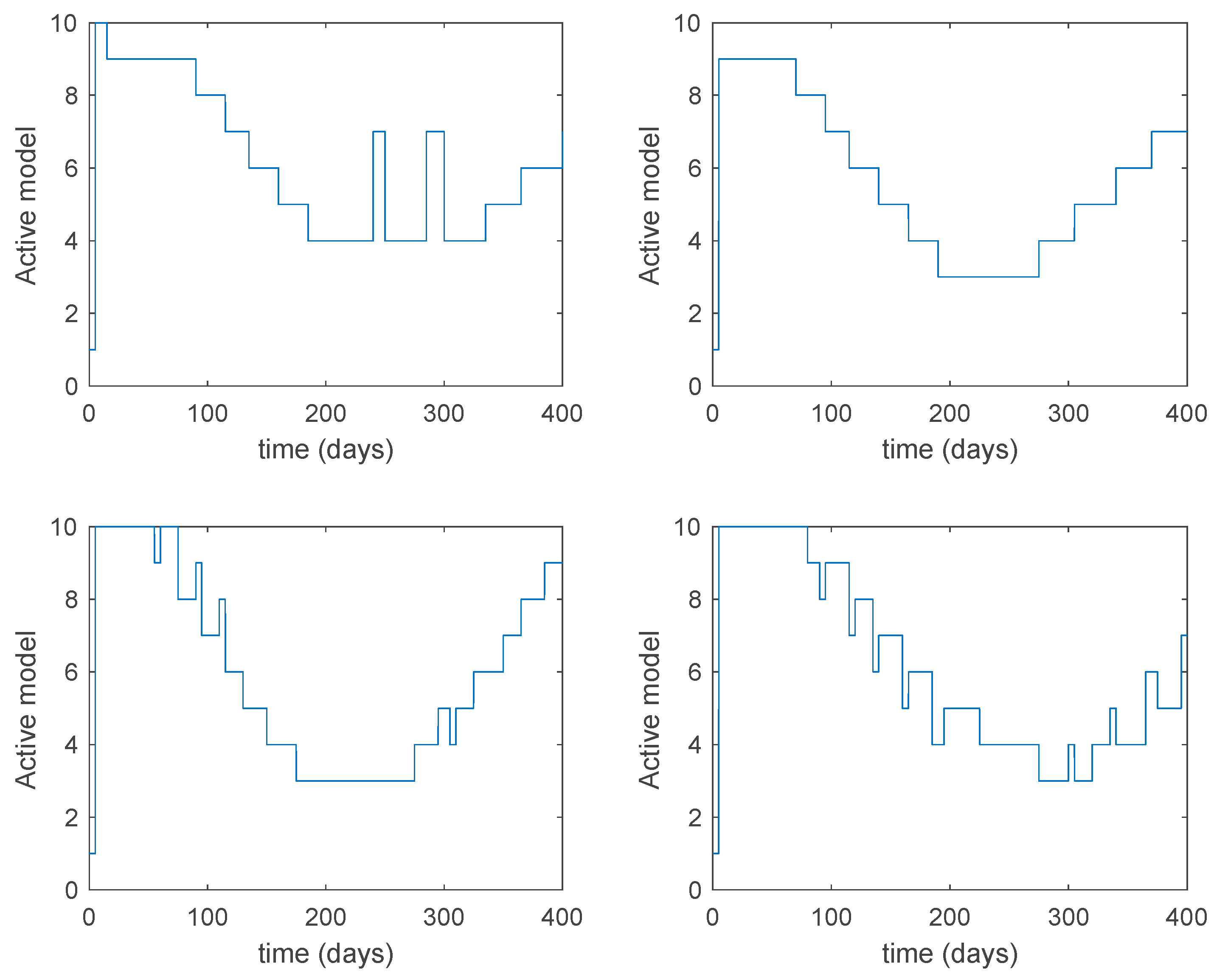

Active model within time intervals for the different values of T (Algorithm 1).

Figure 11.

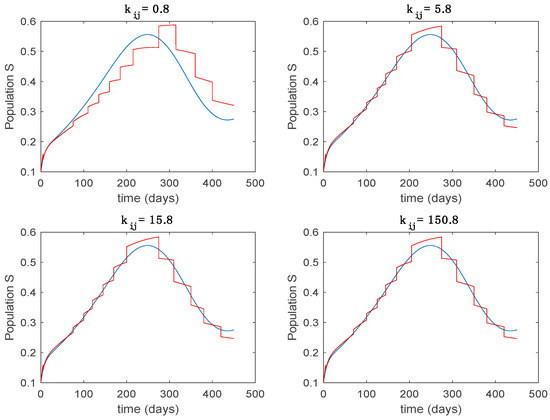

Trajectory of the susceptible for the switched system (Algorithm 1) and different values of .

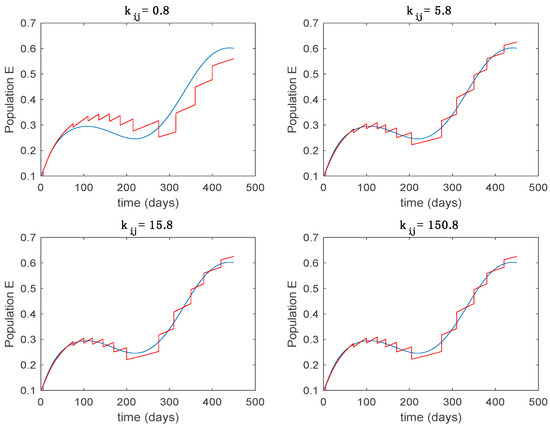

Figure 12.

Trajectory of the exposed for the switched system (Algorithm 1) and different values of .

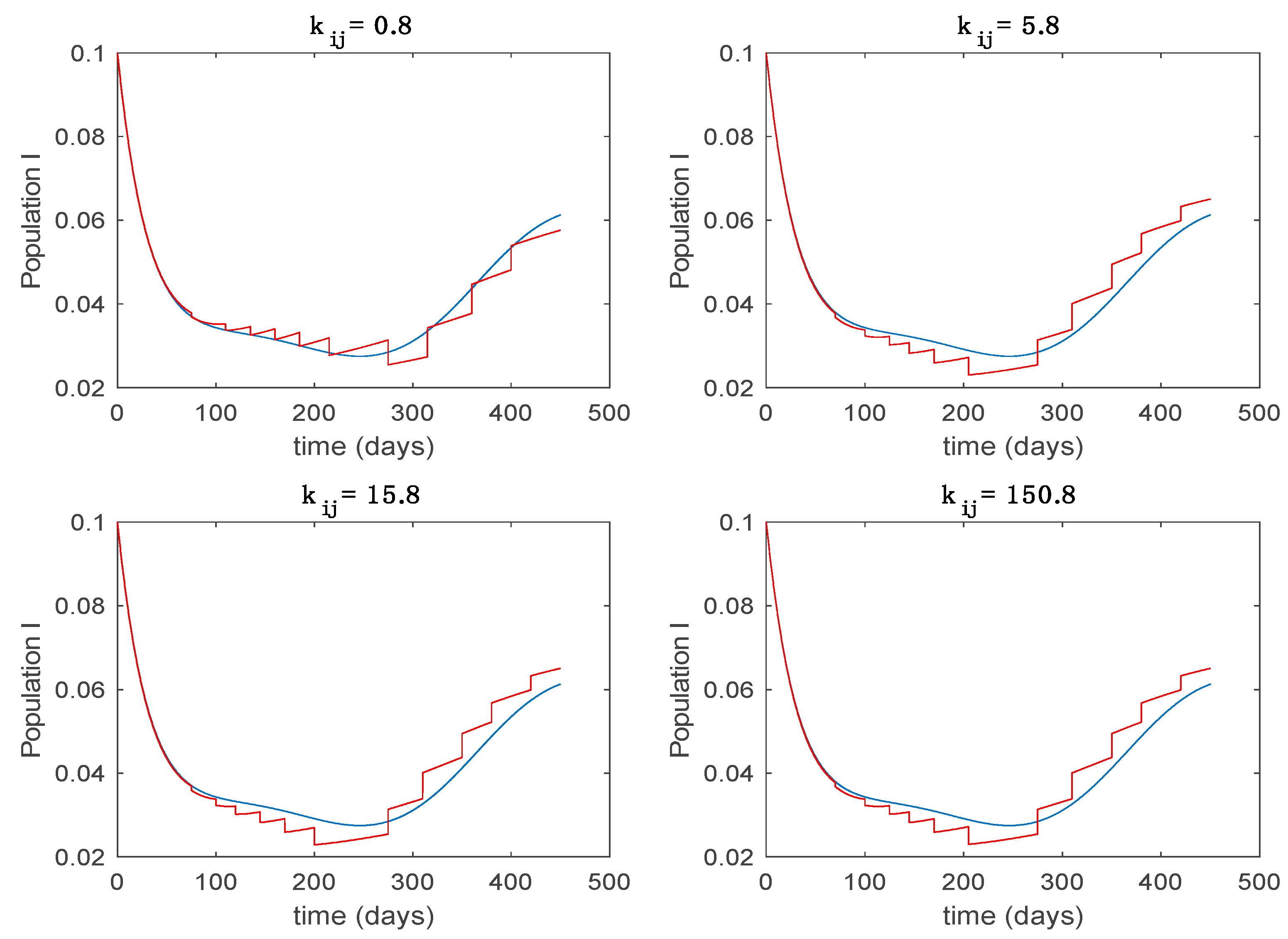

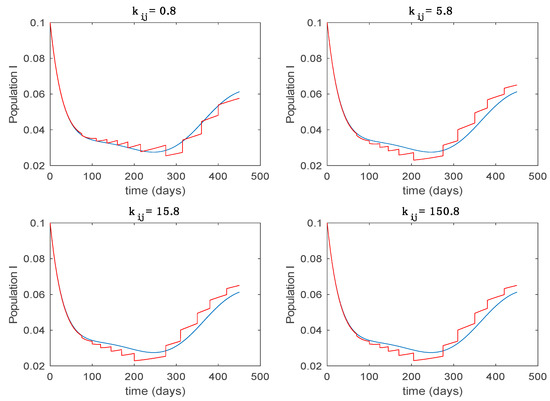

Figure 13.

Trajectory of the infectious for the switched system (Algorithm 1) and different values of .

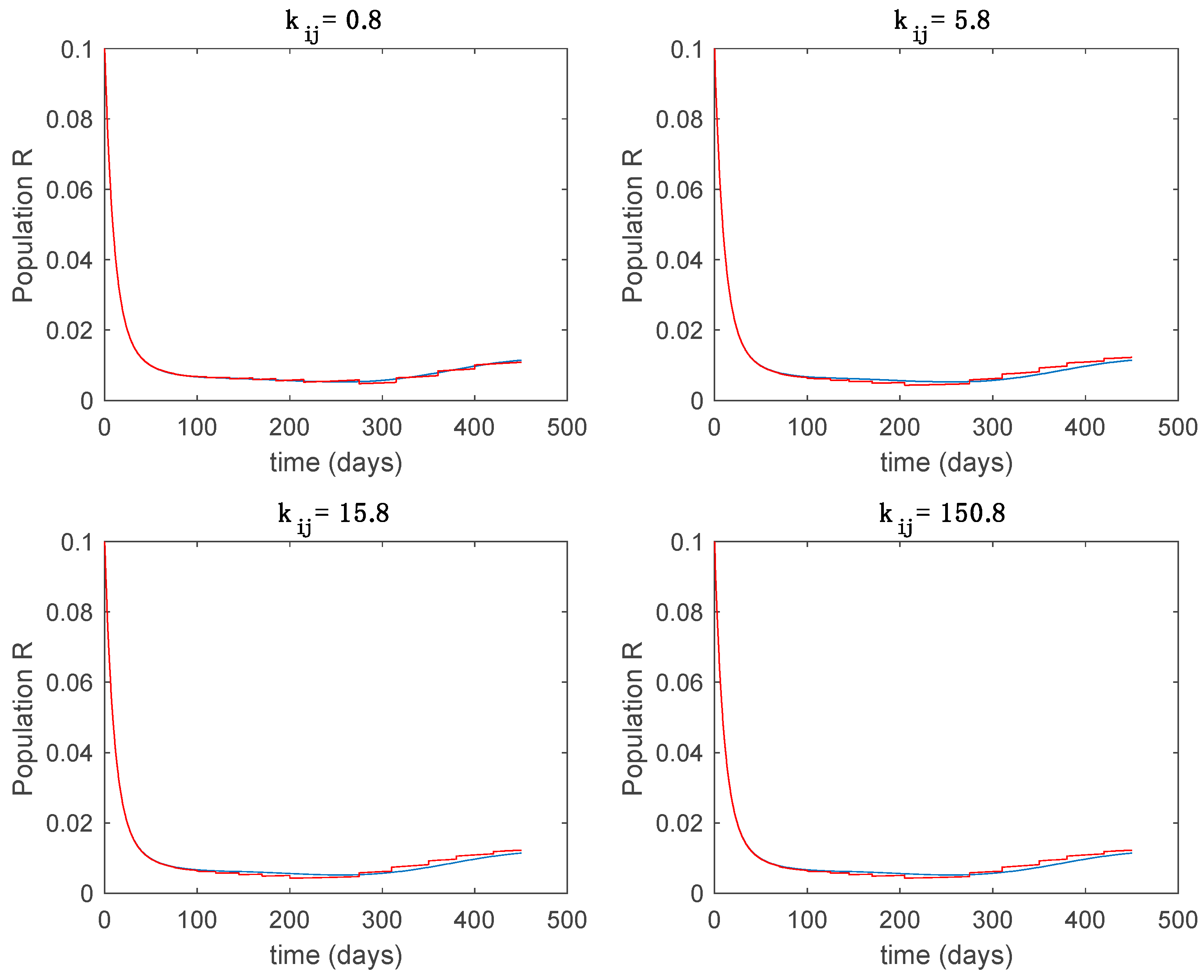

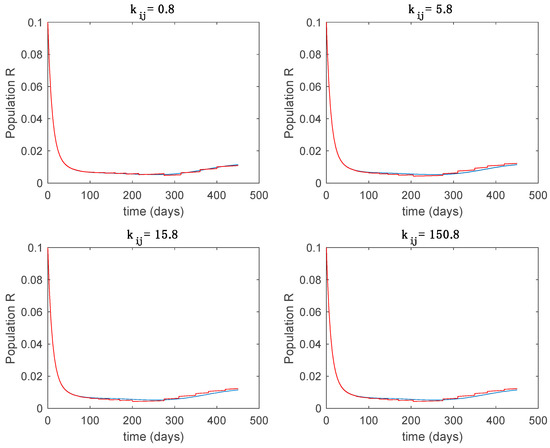

Figure 14.

Trajectory of the immune for the switched system (Algorithm 1) and different values of .

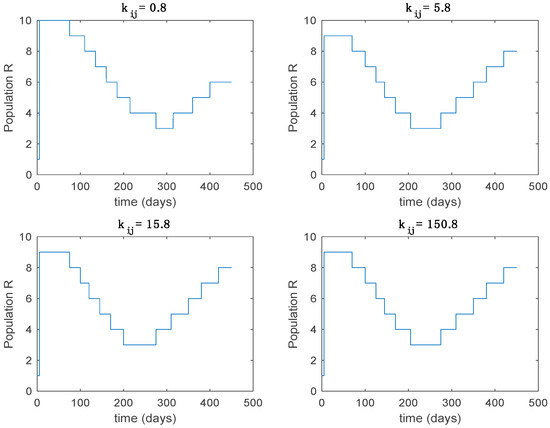

Figure 15.

Active model within each time interval for different values of (Algorithm 1).

Figure 16.

Trajectory of the susceptible for the switched system (Algorithm 2) and different values of .

Figure 17.

Trajectory of the exposed for the switched system (Algorithm 2) and different values of .

Figure 18.

Trajectory of the infectious for the switched system (Algorithm 2) and different values of .

Figure 19.

Trajectory of the immune for the switched system (Algorithm 2) and different values of .

Figure 20.

Active model within each time interval for Algorithm 2 and values days and .

Figure 21.

Trajectory of the susceptible for the switched system (Algorithm 2) and different values of .

Figure 22.

Trajectory of the exposed for the switched system (Algorithm 2) and different values of .

Figure 23.

Trajectory of the infectious for the switched system (Algorithm 2) and different values of .

Figure 24.

Trajectory of the immune for the switched system (Algorithm 2) and different values of .

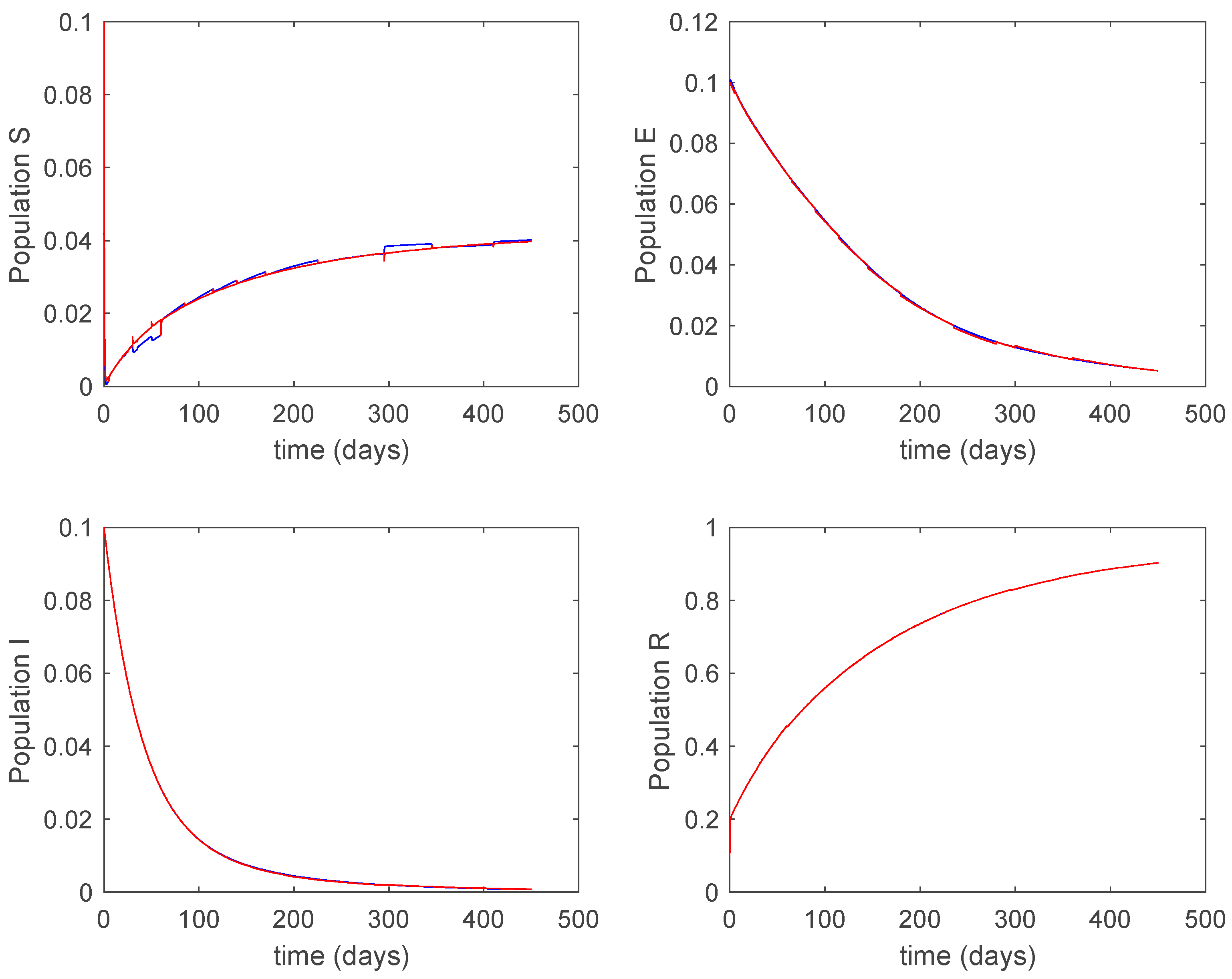

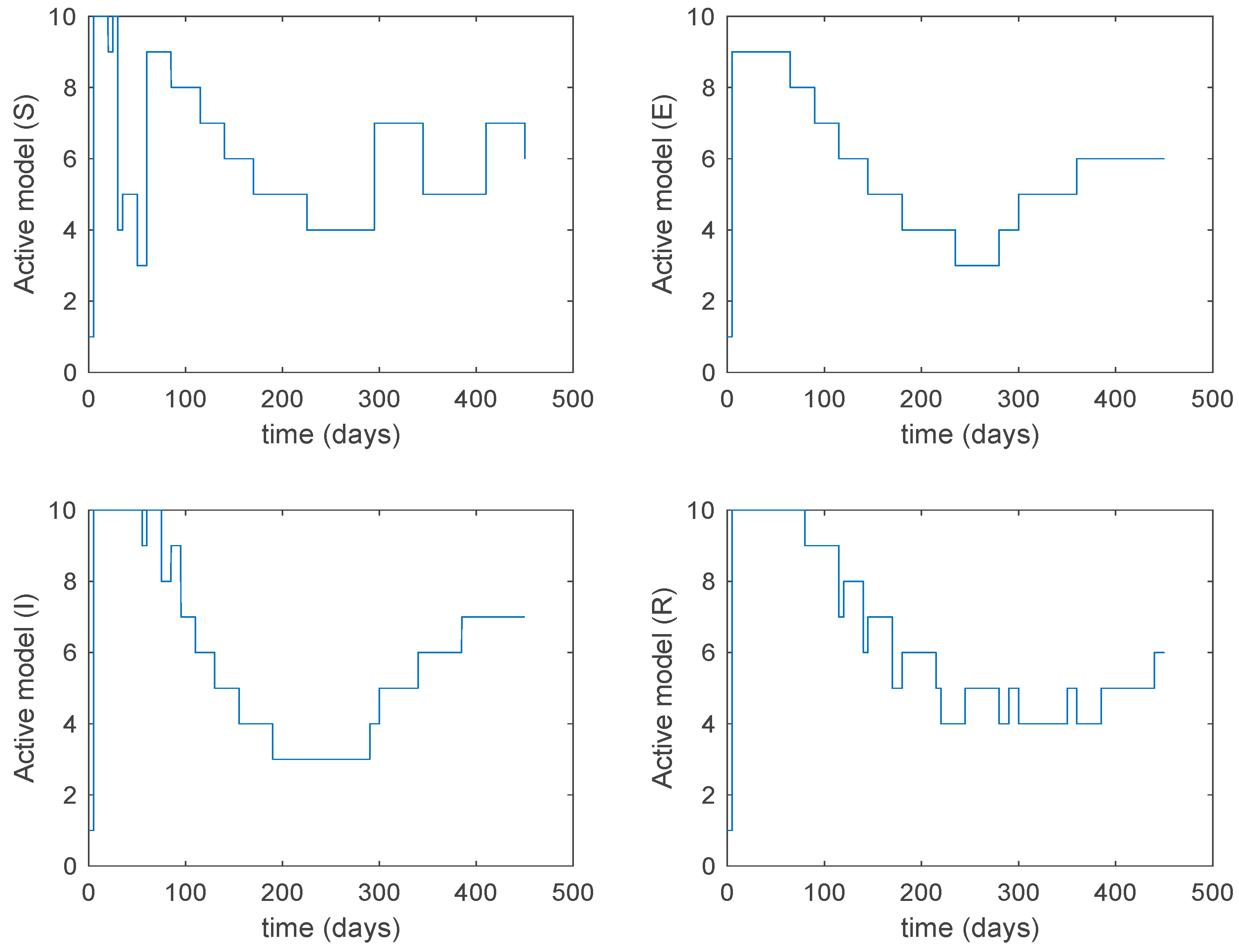

From the above figures we can conclude that the switched system, with either Algorithm 1 or Algorithm 2, is able to reproduce the shape of the trajectories of the accurate time-varying system. Consequently, the presented approach is useful to describe the overall dynamics of a complex set of data, coming either from real measurements or from a complex model, by means of a simpler piecewise time-invariant model. The switching rules based on the entropy of the errors between each model and the data have proved to be an adequate framework for such a task. Figure 10 and Figure 15 show the active model selected within each time interval by Algorithm 1 to parameterize the switched system, while Figure 20 shows the active model when Algorithm 2 is employed. Figure 20 shows how the algorithm selects a different model for each one of the state components of the system, which is the distinctive feature of this algorithm. From the simulation results we can also observe that the probability value has only a slight influence on the approximation performance, so the selection of its value is not critical. However, the switching time plays a more important role in the obtained performance, as can be deduced from the above examples. There are no specific criteria for selecting the switching time, so a trial-and-error procedure can be used to obtain an appropriate value for it. Finally, it can also be observed that Algorithm 1 yields curves closer to the actual system than Algorithm 2, especially for the susceptible population. The above figures show that the disease is persistent, in the sense that the infectious do not vanish with time. Therefore, in Section 5.2 vaccination is used as an external control to make the infectious converge to zero asymptotically.

5.2. Closed-Loop Control

In this subsection, vaccination is employed to avoid the persistency of the disease. To this end, the following state-feedback type vaccination law is used [29]:

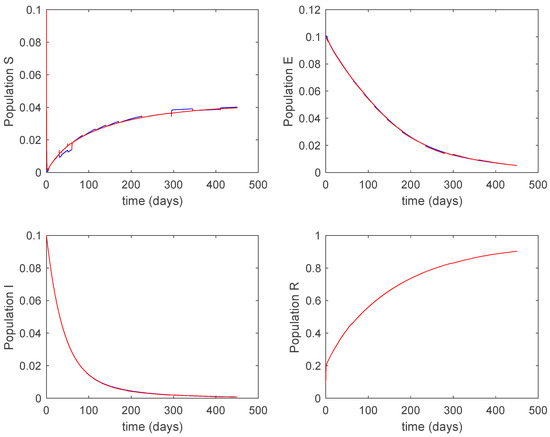

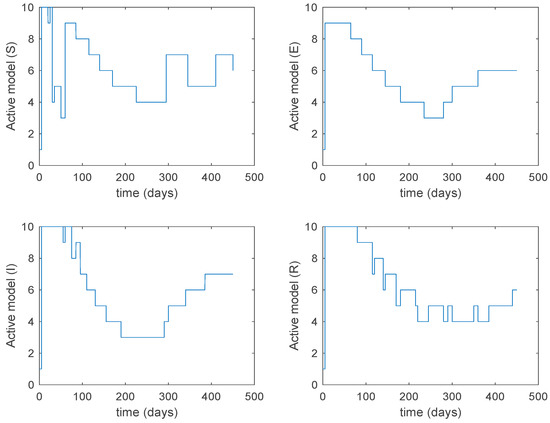

with and being the state-feedback gains and , the state components of the corresponding active model according to Algorithms 1 and 2. Throughout this section, days and . Figure 25 displays the evolution of the closed-loop system when Algorithm 1 is employed, while Figure 26 shows the corresponding active model selected to parameterize the control command within each time interval. Figure 27 shows the trajectories for Algorithm 2, and Figure 28 shows the corresponding active model selected to parameterize the control command within each time interval. From Figure 25 and Figure 27 we can conclude that the output of the real system and the output of the active model are practically the same when a feedback control action is included in the system. Thus, the approximation errors appearing in open loop vanish in closed loop because of the control action. Moreover, there are no differences between the closed-loop trajectories generated by Algorithms 1 and 2. It can also be observed in Figure 25 and Figure 27 that the infectious tend to zero, eradicating the disease from the population, as desired. Therefore, the control objective is achieved. Figure 29 and Figure 30 display the vaccination function calculated with both algorithms. Both control commands are very similar, the only differences being some peaks associated with the switching process. Overall, the proposed approach has been shown to be an effective tool to model the complex time-varying system.

Figure 25.

Evolution of the closed-loop system when vaccination is applied and Algorithm 1 is used.

Figure 26.

Active model when Algorithm 1 is employed.

Figure 27.

Evolution of the closed-loop system when vaccination is applied and Algorithm 2 is used.

Figure 28.

Active model when Algorithm 2 is employed.

Figure 29.

Vaccination function for Algorithms 1 and 2.

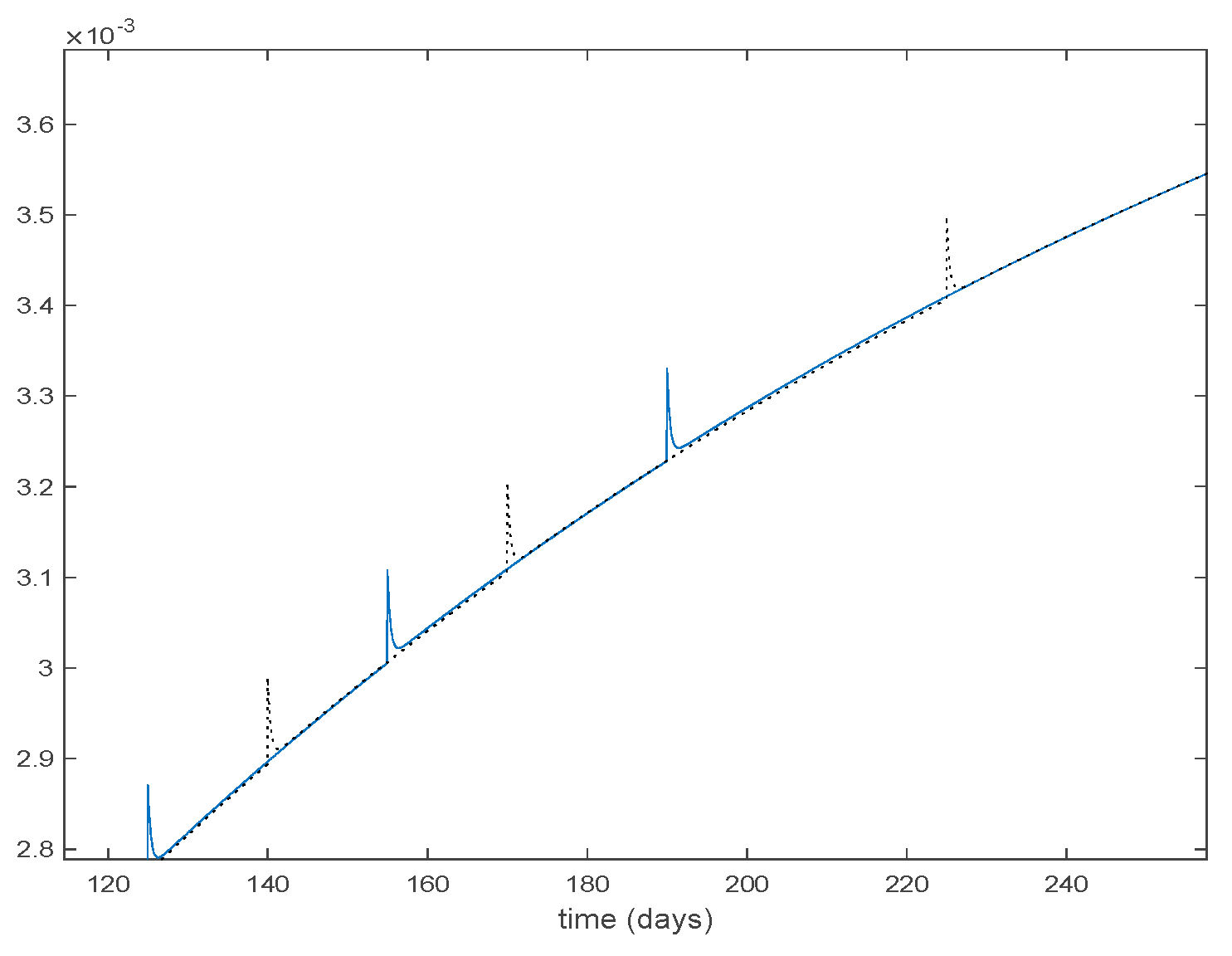

Figure 30.

Zoom on the vaccination function for Algorithms 1 and 2.

5.3. Example with Actual Data

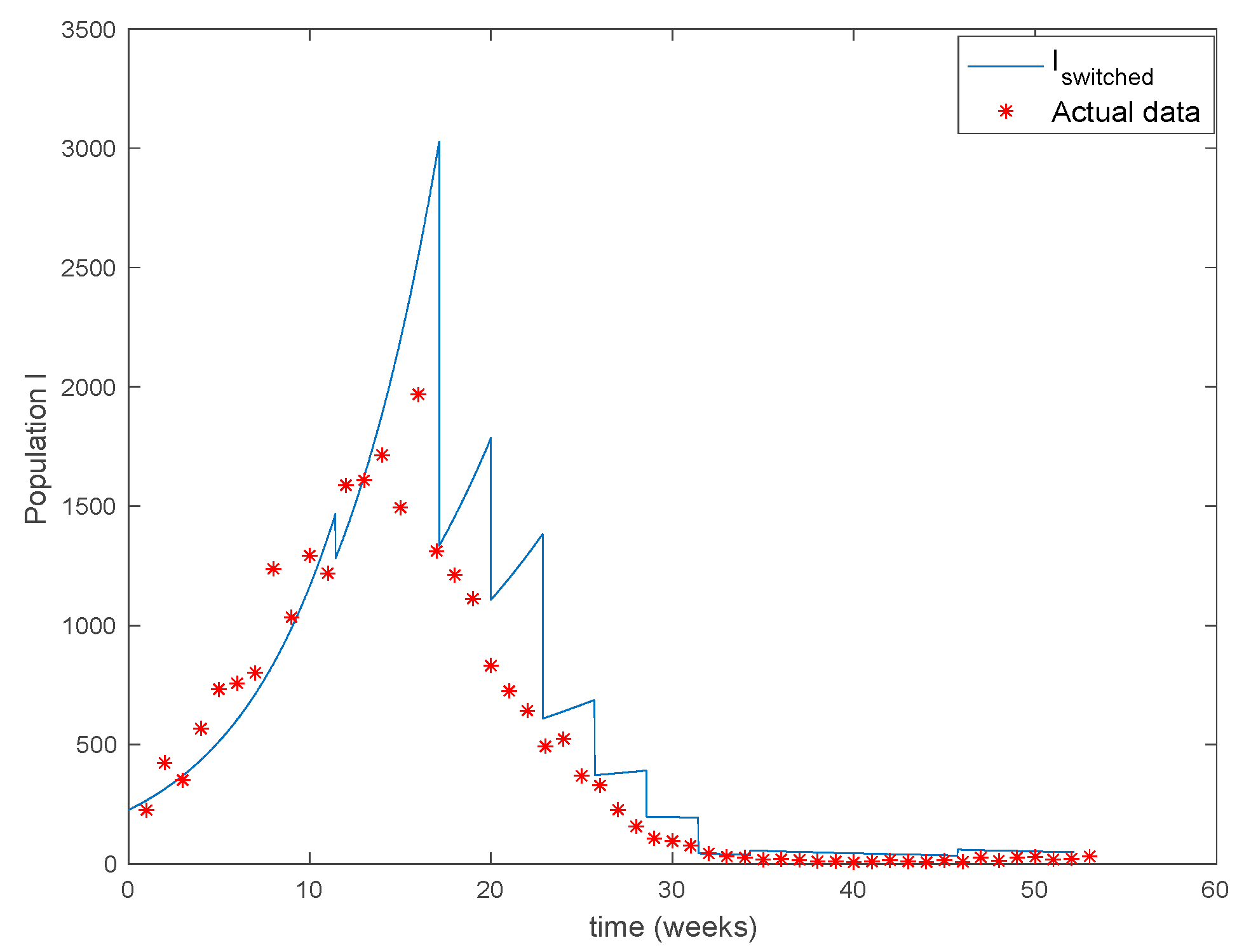

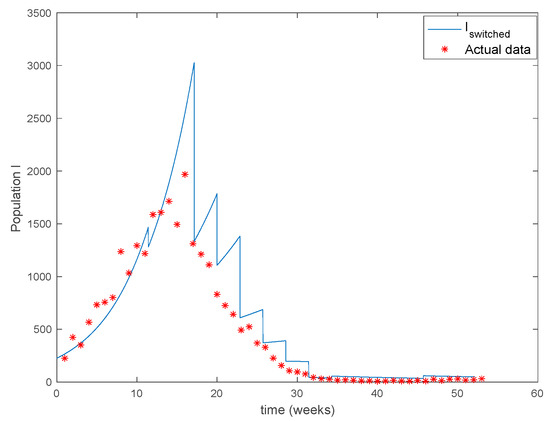

In this subsection the proposed entropy approach is applied to the real case of measles in New York City. In [33] it was stated that measles outbreaks in NYC during the period 1930–1970 can be appropriately described by a SIR model with a time-varying contact rate. Moreover, [33] estimates a monthly contact rate for the model, while [34] proposes a Dietz-type contact rate for this problem. Thus, this real situation fits the formulation treated in the application problem. The authors of [34] gathered the weekly number of reported measles cases over the 93 years of the period 1891–1984 and made them publicly available as supplementary material to [34]. In this simulation, Algorithm 2 from Section 4 is used to generate a switched model describing the data set of the year 1960. The SIR model describing the problem is given by:

The parameters of the model are estimated in [34] to be days, , , and the yearly number of newborns is approximately . The initial values of the populations are , the population of New York in 1960, and , while days. There are 40 time-invariant models linearly spaced between and . Actual data for the infectious are used in (49) in order to calculate the error corresponding to each of the models running in parallel. Figure 31 shows the number of infectious predicted by the switched model compared with the actual data during 1960. The x axis of the figure represents the 52 weeks of the year.

Figure 31.

Comparison of the output of the switched model with the actual data set for 1960.

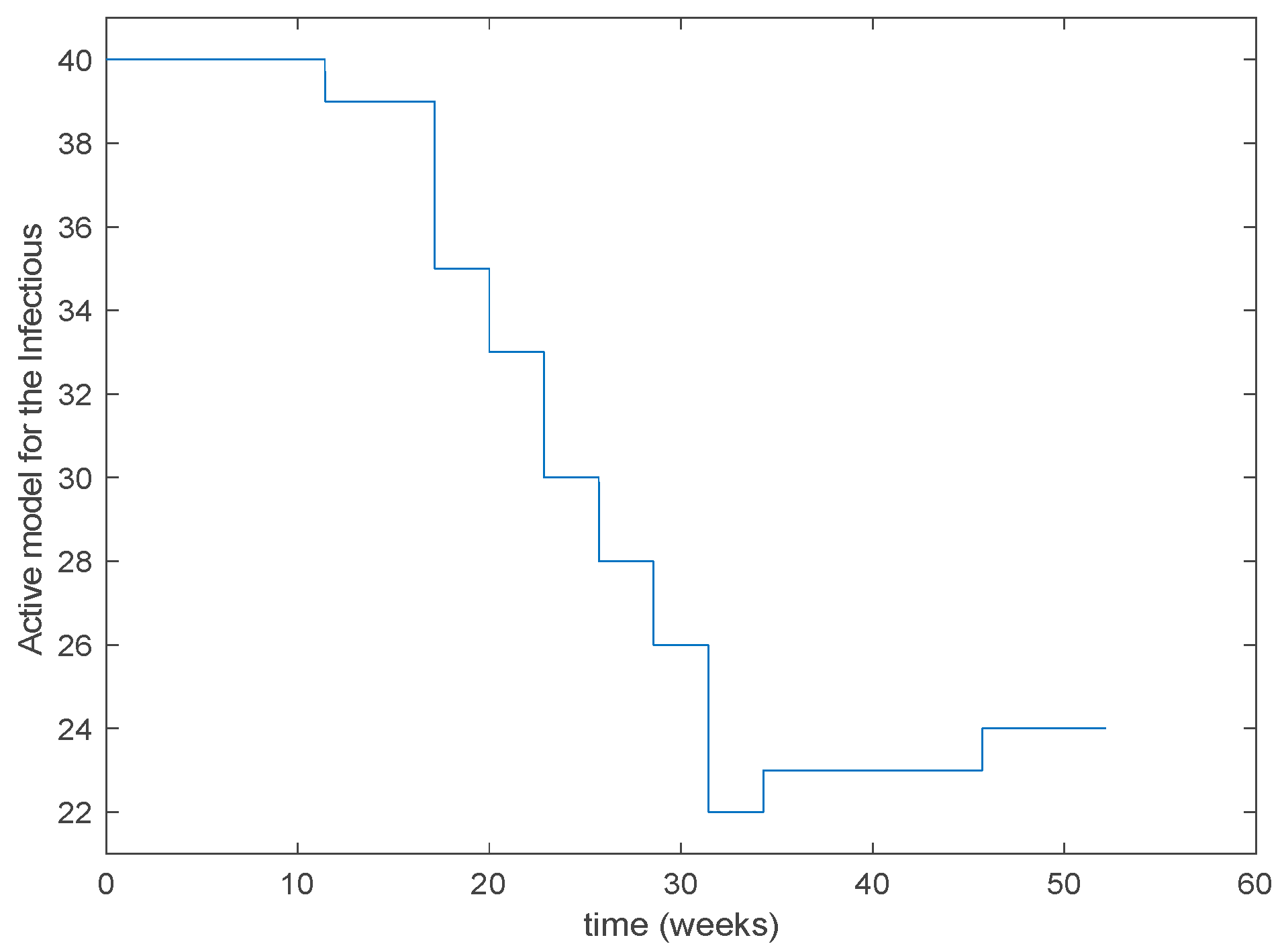

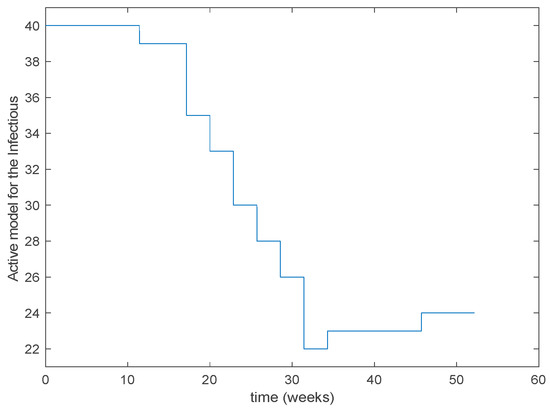

As can be observed from Figure 31, the proposed approach succeeds in reproducing the trend contained in the data and in predicting the time at which the outbreak reaches its peak. Finally, Figure 32 shows the active model at each time for the infectious population. Since data are only available for the infectious, only this population is displayed in the figures.

Figure 32.

Active model selected at each time interval for the infectious population.

6. Conclusions

This paper has presented results for the Shannon entropy when the events of a complete, finite and discrete set are possibly subject to relative errors in their associated probabilities. As a result, the current system of events, possibly under probabilistic errors, can lose its completeness, since it may be either deflated or inflated, in the sense that the total probability of the whole set might be either below or above unity. The previous technical results have then been applied to the control of epidemic evolution in the case that the disease transmission coefficient rate is either not well known or varies through time due, for instance, to seasonality. For this purpose, a finite predefined set of running models, described by coupled differential equations, is chosen which covers a range of variation of the coefficient transmission rate within known lower and upper bounds. Each of these models is driven by a constant disease transmission rate, and the whole set of models covers the whole range of foreseen variation of this parameter in the real system. In a general context, uncertain parameters, or groups of parameters, other than the coefficient transmission rate could be checked by the proposed minimum-error entropy supervisory scheme. Two monitoring algorithms have been proposed to select the active model, namely the one which minimizes the accumulated entropy of the absolute data/model error within each supervision time interval. A switching rule allows another active model to be chosen as soon as it is detected that the current active model becomes more uncertain than other(s) with respect to the observed data. Numerical results have also been presented and discussed, including a real recorded case. The active model currently in operation generates the vaccination and/or treatment controls to be injected into the real epidemic process.

Author Contributions

Data curation, A.I.; Formal analysis, M.D.l.S.; Funding acquisition, M.D.l.S.; Investigation, M.D.l.S.; Methodology, M.D.l.S.; Project administration, M.D.l.S.; Resources, M.D.l.S. and A.I.; Software, A.I.; Supervision, M.D.l.S.; Validation, A.I. and R.N.; Visualization, A.I.; Writing—review and editing, R.N. All authors have read and agreed to the published version of the manuscript.

Funding

The authors are grateful to the Spanish Government for Grant RTI2018-094336-B-I00 (MCIU/AEI/FEDER, UE) and to the Basque Government for Grant IT1207-19.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Khinchin, A.I. Mathematical Foundations of Information Theory; Dover Publications Inc.: New York, NY, USA, 1957.

- Aczel, J.D.; Daroczy, Z. On Measures of Information and Their Generalizations; Academic Press: New York, NY, USA, 1975.

- Ash, R.B. Information Theory; Interscience Publishers: New York, NY, USA; John Wiley and Sons: Hoboken, NJ, USA, 1965.

- Feynman, R.P. Simulating Physics with Computers. Int. J. Theor. Phys. 1982, 21, 467–488.

- Burgin, M.; Meissner, G. Larger than one probabilities in mathematical and practical finance. Rev. Econ. Financ. 2012, 2, 1–13.

- Tenreiro-Machado, J. Fractional derivatives and negative probabilities. Commun. Nonlinear Sci. Numer. Simul. 2019, 79, 104913.

- Baez, J.C.; Fritz, T.; Leinster, T. A characterization of entropy in terms of information loss. Entropy 2011, 13, 1945–1957.

- Delyon, F.; Foulon, P. Complex entropy for dynamic systems. Ann. Inst. Henri Poincaré Phys. Théor. 1991, 55, 891–902.

- Nalewajski, R.F. Complex entropy, resultant information measures. J. Math. Chem. 2016, 54, 1777–1782.

- Goh, S.; Choi, J.; Choi, M.Y.; Yoon, B.G. Time evolution of entropy in a growth model: Dependence of the description. J. Korean Phys. Soc. 2017, 70, 12–21.

- Wang, W.B.; Wu, Z.N.; Wang, C.F.; Hu, R.F. Modelling the spreading rate of controlled communicable epidemics through an entropy-based thermodynamic model. Sci. China Phys. Mech. Astron. 2013, 56, 2143–2150.

- Tiwary, S. The evolution of entropy in various scenarios. Eur. J. Phys. 2019, 41, 1–11.

- Koivu-Jolma, M.; Annila, A. Epidemic as a natural process. Math. Biosci. 2018, 299, 97–102.

- Artalejo, J.R.; Lopez-Herrero, M.J. The SIR and SIS epidemic models. A maximum entropy approach. Theor. Popul. Biol. 2011, 80, 256–264.

- Erten, E.Y.; Lizier, J.T.; Piraveenan, M.; Prokopenko, M. Criticality and information dynamics in epidemiological models. Entropy 2017, 19, 194.

- De la Sen, M. On the approximated reachability of a class of time-varying systems based on their linearized behaviour about the equilibria: Applications to epidemic models. Entropy 2019, 2019, 1–31.

- Meyers, L. Contact network epidemiology: Bond percolation applied to infectious disease prediction and control. Bull. Am. Math. Soc. 2007, 44, 63–86.

- Cui, Q.; Qiu, Z.; Liu, W.; Hu, Z. Complex dynamics of an SIR epidemic model with nonlinear saturated incidence and recovery rate. Entropy 2017, 2017, 1–16.

- Nistal, R.; de la Sen, M.; Alonso-Quesada, S.; Ibeas, A. Supervising the vaccinations and treatment control gains in a discrete SEIADR epidemic model. Int. J. Innov. Comput. Inf. Control 2019, 15, 2053–2067.

- Verma, R.; Sehgal, V.K.; Nitin, V. Computational stochastic modelling to handle the crisis occurred during community epidemic. Ann. Data Sci. 2016, 3, 119–133.

- Iggidr, A.; Souza, M.O. State estimators for some epidemiological systems. J. Math. Biol. 2019, 78, 225–256.

- Yang, H.M.; Ribas-Freitas, A.R. Biological view of vaccination described by mathematical modellings: From rubella to dengue vaccines. Math. Biosci. Eng. 2018, 16, 3185–3214.

- De la Sen, M. On the design of hyperstable feedback controllers for a class of parameterized nonlinearities. Two application examples for controlling epidemic models. Int. J. Environ. Res. Public Health 2019, 16, 2689.

- De la Sen, M. Parametrical non-complex tests to evaluate partial decentralized linear-output feedback control stabilization conditions for their centralized stabilization counterparts. Appl. Sci. 2019, 9, 1739.

- Abdullahi, A.; Shohaimi, S.; Kilicman, A.; Ibrahim, M.H.; Salari, N. Stochastic SIS modelling: Coinfection of two pathogens in two-host communities. Entropy 2019, 22, 54.

- Li, Y.; Cai, W.; Li, Y.; Du, X. Key node ranking in complex networks: A novel entropy and mutual information-based approach. Entropy 2019, 22, 52.

- Nakata, Y.; Kuniya, T. Global dynamics of a class of SEIRS epidemic models in a periodic environment. J. Math. Anal. Appl. 2010, 363, 230–237.

- De la Sen, M.; Alonso-Quesada, S. Control issues for the Beverton-Holt equation in ecology by locally monitoring the environment carrying capacity: Non-adaptive and adaptive cases. Appl. Math. Comput. 2009, 215, 2616–2633.

- De la Sen, M.; Ibeas, A.; Alonso-Quesada, S.; Nistal, R. On a SIR model in a patchy environment under constant and feedback decentralized controls with asymmetric parameterizations. Symmetry 2019, 11, 430.

- Buonomo, B.; Chitnis, N.; d’Onofrio, A. Seasonality in epidemic models: A literature review. Ric. Mat. 2018, 67, 7–25.

- Pewsner, D.; Battaglia, M.; Minder, C.; Markx, A.; Bucher, H.C. Ruling a diagnosis in or out with “SpPIn” and “SnNOut”: A note of caution. Br. Med. J. 2004, 329, 209–213.

- Facente, S.N.; Dowling, T.; Vittinghoff, E.; Sykes, D.L.; Colfax, G.N. False positive rate of rapid oral fluid HIV test increases as kits near expiration date. PLoS ONE 2009, 4, e8217.

- London, W.P.; Yorke, J. Recurrent outbreaks of measles, chickenpox and mumps. I. Seasonal variations in contact rates. Am. J. Epidemiol. 1973, 98, 453–468.

- Hempel, K.; Earn, D.J.D. A century of transitions in New York City’s measles dynamics. J. R. Soc. Interface 2015, 12, 20150024.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).