Abstract

We study the Hilbert geometry induced by the Siegel disk domain, an open-bounded convex set of complex square matrices of operator norm strictly less than one. This Hilbert geometry yields a generalization of the Klein disk model of hyperbolic geometry, henceforth called the Siegel–Klein disk model to differentiate it from the classical Siegel upper plane and disk domains. In the Siegel–Klein disk, geodesics are by construction always unique and Euclidean straight, allowing one to design efficient geometric algorithms and data structures from computational geometry. For example, we show how to approximate the smallest enclosing ball of a set of complex square matrices in the Siegel disk domains: We compare two generalizations of the iterative core-set algorithm of Badoiu and Clarkson (BC) in the Siegel–Poincaré disk and in the Siegel–Klein disk: We demonstrate that geometric computing in the Siegel–Klein disk allows one (i) to bypass the time-costly recentering operations to the disk origin required at each iteration of the BC algorithm in the Siegel–Poincaré disk model, and (ii) to approximate fast and numerically the Siegel–Klein distance with guaranteed lower and upper bounds derived from nested Hilbert geometries.

1. Introduction

German mathematician Carl Ludwig Siegel [1] (1896–1981) and Chinese mathematician Loo-Keng Hua [2] (1910–1985) have introduced independently the symplectic geometry in the 1940s (with a preliminary work of Siegel [3] released in German in 1939). The adjective symplectic stems from the Greek, and means “complex”: That is, mathematically the number field instead of the ordinary real field . Symplectic geometry was originally motivated by the study of complex multivariate functions in the two landmark papers of Siegel [1] and Hua [2]. As we shall see soon, the naming “symplectic geometry” for the geometry of complex matrices originally stems from the relationships with the symplectic groups (and their matrix representations). Presently, symplectic geometry is mainly understood as the study of symplectic manifolds [4] which are even-dimensional differentiable manifolds equipped with a closed and nondegenerate differential 2-form , called the symplectic form, studied in geometric mechanics.

We refer the reader to the PhD thesis [5,6] for an overview of Siegel bounded domains. More generally, the Siegel-like bounded domains have been studied and classified into 6 types in the most general setting of bounded symmetric irreducible homogeneous domains by Elie Cartan [7] in 1935 (see also [8,9]).

The Siegel upper space and the Siegel disk domains provide generalizations of the complex Poincaré upper plane and the complex Poincaré disk to spaces of symmetric square complex matrices. In the remainder, we shall term them the Siegel–Poincaré upper plane and the Siegel–Poincaré disk. The Siegel upper space includes the well-studied cone of real symmetric positive-definite (SPD) matrices [10] (SPD manifold). The celebrated affine-invariant SPD Riemannian metric [11] can be recovered as a special case of the Siegel metric.

Applications of the geometry of Siegel upper/disk domains are found in radar processing [12,13,14,15] especially for dealing with Toepliz matrices [16,17], probability density estimations [18] and probability metric distances [19,20,21,22], information fusion [23], neural networks [24], theoretical physics [25,26,27], and image morphology operators [28], just to cite a few.

In this paper, we extend the Klein disk model [29] of the hyperbolic geometry to the Siegel disk domain by considering the Hilbert geometry [30] induced by the open-bounded convex Siegel disk [31,32]. We call the Hilbert metric distance of the Siegel disk the Siegel–Klein distance. We term this model the Klein-Siegel model for short to contrast it with the Poincaré-Siegel upper plane model and the Poincaré-Siegel disk model. The main advantages of using the Klein-Siegel disk model instead of the usual Siegel–Poincaré upper plane or the Siegel–Poincaré disk are that the geodesics are unique and always straight by construction. Thus, this Siegel–Klein disk model is very well-suited for designing efficient algorithms and data structures by borrowing techniques of Euclidean computational geometry [33]. Moreover, in the Siegel–Klein disk model, we have an efficient and robust method to approximate with guarantees the calculation of the Siegel–Klein distance: This is especially useful when handling high-dimensional square complex matrices. The algorithmic advantage of the Hilbert geometry was already observed for real hyperbolic geometry (included as a special case of the Siegel–Klein model): For example, the hyperbolic Voronoi diagrams can be efficiently computed as an affine power diagram clipped to the boundary circle [34,35,36,37]. To demonstrate the advantage of the Siegel–Klein disk model (Hilbert distance) over the Siegel–Poincaré disk model (Kobayashi distance), we consider approximating the Smallest Enclosing Ball (SEB) of the a set of square complex matrices in the Siegel disk domain. This problem finds potential applications in image morphology [28,38] or anomaly detection of covariance matrices [39,40]. Let us state the problem as follows:

Problem 1 (Smallest Enclosing Ball (SEB)).

Given a metric space and a finite set of n points in X, find the smallest-radius enclosing ball with circumcenter minimizing the following objective function:

In general, the SEBs may not be unique in a metric space: For example, the SEBs are not unique in a discrete Hamming metric space [41] making it notably NP-hard to calculate. We note in passing that the set-complement of a Hamming ball is a Hamming ball in a Hamming metric space. However, the SEB is proven unique in the Euclidean geometry [42], the hyperbolic geometry [43], the Riemannian positive-definite matrix manifold [44,45], and more generally in any Cartan-Hadamard manifold [46] (Riemannian manifold that is complete and simply connected with non-positive sectional curvatures). The SEB is guaranteed to be unique in any Bruhat–Tits space [44] (i.e., complete metric space with a semi-parallelogram law) which includes the Riemannian SPD manifold.

A fast -approximation algorithm which requires iterations was reported in [46,47] to approximate the SEB in the Euclidean space: That is a covering ball of radius where for . Since the approximation factor does not depend on the dimension, this SEB approximation algorithm found many applications in machine learning [48] (e.g., in Reproducing Kernel Hilbert Spaces [49], RKHS).

1.1. Paper Outline and Contributions

In Section 2, we concisely recall the usual models of the hyperbolic complex plane: The Poincaré upper plane model, and the Poincaré disk model, and the Klein disk model. We then briefly review the geometry of the Siegel upper plane domain in Section 3 and the Siegel disk domain in Section 4. Section 5 introduces the novel Siegel–Klein model using the Hilbert geometry and its Siegel–Klein distance. To demonstrate the algorithmic advantage of using the Siegel–Klein disk model over the Siegel–Poincaré disk model in practice, we compare in Section 6 the two implementations of the Badoiu and Clarkson’s SEB approximation algorithm [47] in these models. Finally, we conclude this work in Section 7. In the Appendix, we first list the notations used in this work, recall the deflation method for calculating numerically the eigenvalues of a Hermitian matrix (Appendix A), and provide some basic snippet code for calculating the Siegel distance (Appendix A).

Our main contributions are summarized as follows:

- First, we formulate a generalization of the Klein disk model of hyperbolic geometry to the Siegel disk domain in Definition 2 using the framework of Hilbert geometry. We report the formula of the Siegel–Klein distance to the origin in Theorem 1 (and more generally a closed-form expression for the Siegel–Klein distance between two points whose supporting line passes through the origin), describe how to convert the Siegel–Poincaré disk to the Siegel–Klein disk and vice versa in Proposition 2, report an exact algorithm to calculate the Siegel–Klein distance for diagonal matrices in Theorem 4. In practice, we show how to obtain a fast guaranteed approximation of the Siegel–Klein distance using geodesic bisection searches with guaranteed lower and upper bounds (Theorem 5 whose proof is obtained by considering nested Hilbert geometries).

- Second, we report the exact solution to a geodesic cut problem in the Siegel–Poincaré/Siegel–Klein disks in Proposition 3. This result yields an explicit equation for the geodesic linking the origin of the Siegel disk domain to any other matrix point of the Siegel disk domain (Propositions 3 and 4). We then report an implementation of the Badoiu and Clarkson’s iterative algorithm [47] for approximating the smallest enclosing ball tailored to the Siegel–Poincaré and Siegel–Klein disk domains. In particular, we show in §6 that the implementation in the Siegel–Klein model yields a fast algorithm which bypasses the costly operations of recentering to the origin required in the Siegel–Poincaré disk model.

Let us now introduce a few notations on matrices and their norms.

1.2. Matrix Spaces and Matrix Norms

Let be a number field considered in the remainder to be either the real number field or the complex number field . For a complex number (with imaginary number ), we denote by its complex conjugate, and by its modulus. Let and denote the real part and the imaginary part of the complex number , respectively.

Let be the space of square matrices with coefficients in , and let denote its subspace of invertible matrices. Let denote the vector space of symmetric matrices with coefficients in . The identity matrix is denoted by I (or when we want to emphasize its dimension). The conjugate of a matrix is the matrix of complex conjugates: . The conjugate transpose of a matrix M is , the adjoint matrix. Conjugate transposition is also denoted by the star operator (i.e., ) or the dagger symbol (i.e., ) in the literature. A complex matrix is said Hermitian when (hence M has real diagonal elements). For any , Matrix is Hermitian: .

A real matrix is said symmetric positive-definite (SPD) if and only if for all with . This positive-definiteness property is written , where ≻ denotes the partial Löwner ordering [50]. Let be the space of real symmetric positive-definite matrices [10,44,51,52] of dimension . This space is not a vector space but a cone, i.e., if then for all . The boundary of the cone consists of rank-deficient symmetric positive semi-definite matrices.

The (complex/real) eigenvalues of a square complex matrix M are ordered such that , where denotes the complex modulus. The spectrum of a matrix M is its set of eigenvalues: . In general, real matrices may have complex eigenvalues but symmetric matrices (including SPD matrices) have always real eigenvalues. The singular values of M are always real:

and ordered as follows: with and . We have , and in particular .

Any matrix norm (including the operator norm) satisfies:

- with equality if and only if (where 0 denotes the matrix with all its entries equal to zero),

- ,

- , and

- .

Let us define two usual matrix norms: The Fröbenius norm and the operator norm. The Fröbenius norm of M is:

The induced Fröbenius distance between two complex matrices and is .

The operator norm or spectral norm of a matrix M is:

Notice that is a Hermitian positive semi-definite matrix. The operator norm coincides with the spectral radius of the matrix M and is upper bounded by the Fröbenius norm: , and we have . When the dimension , the operator norm of coincides with the complex modulus: .

To calculate the largest singular value , we may use a the (normalized) power method [53,54] which has quadratic convergence for Hermitian matrices (see Appendix A). We can also use the more costly Singular Value Decomposition (SVD) of M which requires cubic time: where is the diagonal matrix with coefficients being the singular values of M.

2. Hyperbolic Geometry in the Complex Plane: The Poincaré Upper Plane and Disk Models and the Klein Disk Model

We concisely review the three usual models of the hyperbolic plane [55,56]: Poincaré upper plane model in Section 2.1, the Poincaré disk model in Section 2.2, and the Klein disk model in Section 2.2.1. We then report distance expressions in these models and conversions between these three usual models in Section 2.3. Finally, in Section 2.4, we recall the important role of hyperbolic geometry in the Fisher–Rao geometry in information geometry [57,58].

2.1. Poincaré Complex Upper Plane

The Poincaré upper plane domain is defined by

The Hermitian metric tensor is:

or equivalently the Riemannian line element is:

Geodesics between and are either arcs of semi-circles whose centers are located on the real axis and orthogonal to the real axis, or vertical line segments when .

The geodesic length distance is

or equivalently

where

Equivalent formula can be obtained by using the following identity:

where

By interpreting a complex number as a 2D point with Cartesian coordinates , the metric can be rewritten as

where is the Euclidean (flat) metric. That is, the Poincaré upper plane metric can be rewritten as a conformal factor times the Euclidean metric . Thus, the metric of Equation (16) shows that the Poincaré upper plane model is a conformal model of hyperbolic geometry: That is, the Euclidean angle measurements in the chart coincide with the underlying hyperbolic angles.

The group of orientation-preserving isometries (i.e., without reflections) is the real projective special group (quotient group), where denotes the special linear group of matrices with unit determinant:

The left group action is a fractional linear transformation (also called a Möbius transformation):

The condition is to ensure that the Möbius transformation is not constant. The set of Möbius transformations form a group . The elements of the Möbius group can be represented by corresponding matrices of :

The neutral element e is encoded by the identity matrix I.

The fractional linear transformations

are the analytic mappings of the Poincaré upper plane onto itself.

The group action is transitive (i.e., such that ) and faithful (i.e., if then ). The stabilizer of i is the rotation group:

The unit speed geodesic anchored at i and going upward (i.e., geodesic with initial condition) is:

Since the other geodesics can be obtained by the action of , it follows that the geodesics in are parameterized by:

2.2. Poincaré Disk

The Poincaré unit disk is

The Riemannian Poincaré line element (also called Poincaré-Bergman line element) is

Since , we deduce that the metric is conformal: The Poincaré disk is a conformal model of hyperbolic geometry. The geodesic between points and are either arcs of circles intersecting orthogonally the disk boundary , or straight lines passing through the origin 0 of the disk and clipped to the disk domain.

The geodesic distance in the Poincaré disk is

The group of orientation-preserving isometry is the complex projective special group where denotes the special group of complex matrices with unit determinant.

In the Poincaré disk model, the transformation

corresponds to a hyperbolic motion (a Möbius transformation [59]) which moves point to the origin 0, and then makes a rotation of angle . The group of such transformations is the automorphism group of the disk, , and the transformation is called a biholomorphic automorphism (i.e., a one-to-one conformal mapping of the disk onto itself).

The Poincaré distance is invariant under automorphisms of the disk, and more generally the Poincaré distance decreases under holomorphic mappings (Schwarz–Pick theorem): That is, the Poincaré distance is contractible under holomorphic mappings f: .

2.2.1. Klein Disk

The Klein disk model [29,55] (also called the Klein-Beltrami model) is defined on the unit disk domain as the Poincaré disk model. The Klein metric is

It is not a conformal metric (except at the disk origin), and therefore the Euclidean angles in the chart do not correspond to the underlying hyperbolic angles.

The Klein distance between two points and is

An equivalent formula shall be reported later in page 24 in a more setting of Theorem 4.

The advantage of the Klein disk over the Poincaré disk is that geodesics are straight Euclidean lines clipped to the unit disk domain. Therefore, this model is well-suited to implement computational geometric algorithms and data structures, see for example [34,60]. The group of isometries in the Klein model are projective maps preserving the disk. We shall see that the Klein disk model corresponds to the Hilbert geometry of the unit disk.

2.3. Poincaré and Klein Distances to the Disk Origin and Conversions

In the Poincaré disk, the distance of a point w to the origin 0 is

Since the Poincaré disk model is conformal (and Möbius transformations are conformal maps), Equation (31) shows that Poincaré disks have Euclidean disk shapes (however with displaced centers).

In the Klein disk, the distance of a point k to the origin is

Observe the multiplicative factor of in Equation (32).

Thus, we can easily convert a point in the Poincaré disk to a point in the Klein disk, and vice versa as follows:

Let and denote these conversion functions with

We can write and , so that is an expansion factor, and is a contraction factor.

The conversion functions are Möbius transformations represented by the following matrices:

For sanity check, let be a point in the Poincaré disk with equivalent point in the Klein disk. Then we have:

We can convert a point z in the Poincaré upper plane to a corresponding point w in the Poincaré disk, or vice versa, using the following Möbius transformations:

Notice that we compose Möbius transformations by multiplying their matrix representations.

2.4. Hyperbolic Fisher–Rao Geometry of Location-Scale Families

Consider a parametric family of probability densities dominated by a positive measure (usually, the Lebesgue measure or the counting measure) defined on a measurable space , where denotes the support of the densities and is a finite -algebra [57]. Hotelling [61] and Rao [62] independently considered the Riemannian geometry of by using the Fisher Information Matrix (FIM) to define the Riemannian metric tensor [63] expressed in the (local) coordinates , where denotes the parameter space. The FIM is defined by the following symmetric positive semi-definite matrix [57,64]:

When is regular [57], the FIM is guaranteed to be positive-definite, and can thus play the role of a metric tensor field: The so-called Fisher metric.

Consider the location-scale family induced by a density symmetric with respect to 0 such that , and (with ):

The density is called the standard density, and corresponds to the parameter : . The parameter space is the upper plane, and the FIM can be structurally calculated [65] as the following diagonal matrix:

with

By rescaling as with and , we get the FIM with respect to expressed as:

a constant time the Poincaré metric in the upper plane. Thus, the Fisher–Rao manifold of a location-scale family (with symmetric standard density f) is isometric to the planar hyperbolic space of negative curvature .

3. The Siegel Upper Space and the Siegel Distance

The Siegel upper space [1,3,5,66] is defined as the space of symmetric complex square matrices of size which have positive-definite imaginary part:

The space is a tube domain of dimension since

with and . We can extract the components X and Y from Z as and . The matrix pair belongs to the Cartesian product of a matrix-vector space with the symmetric positive-definite (SPD) matrix cone: . When , the Siegel upper space coincides with the Poincaré upper plane: . The geometry of the Siegel upper space was studied independently by Siegel [1] and Hua [2] from different viewpoints in the late 1930s–1940s. Historically, these classes of complex matrices were first studied by Riemann [67], and later eponymously called Riemann matrices. Riemann matrices are used to define Riemann theta functions [68,69,70,71].

The Siegel distance in the upper plane is induced by the following line element:

The formula for the Siegel upper distance between and was calculated in Siegel’s masterpiece paper [1] as follows:

where

with denoting the matrix generalization [72] of the cross-ratio:

and denotes the i-th largest (real) eigenvalue of (complex) matrix M. The letter notation ‘R’ in is a mnemonic which stands for ‘r’atio.

The Siegel distance can also be expressed without explicitly using the eigenvalues as:

where . In particular, we can truncate the matrix power series of Equation (58) to get an approximation of the Siegel distance:

It costs to calculate the Siegel distance using Equation (55) and to approximate it using the truncated series formula of Equation (59), where denotes the cost of performing the spectral decomposition of a complex matrix, and denotes the cost of multiplying two square complex matrices. For example, choosing the Coppersmith-Winograd algorithm for matrix multiplications, we have . Although Siegel distance formula of Equation (59) is attractive, the number of iterations l to get an -approximation of the Siegel distance depends on the dimension d. In practice, we can define a threshold δ > 0, and as a rule of thumb iterate on the truncated sum until .

A spectral function [52] of a matrix M is a function F which is the composition of a symmetric function f with the eigenvalue map : . For example, the Kullback–Leibler divergence between two zero-centered Gaussian distributions is a spectral function distance since we have:

where and denotes respectively the determinant of a positive-definite matrix , and the i-the real largest eigenvalue of , and

is the density of the multivariate zero-centered Gaussian of covariance matrix ,

is a symmetric function invariant under parameter permutations, and denotes the eigenvalue map.

This Siegel distance in the upper plane is also a smooth spectral distance function since we have

where f is the following symmetric function:

A remarkable property is that all eigenvalues of are positive (see [1]) although R may not necessarily be a Hermitian matrix. In practice, when calculating numerically the eigenvalues of the complex matrix , we obtain very small imaginary parts which shall be rounded to zero. Thus, calculating the Siegel distance on the upper plane requires cubic time, i.e., the cost of computing the eigenvalue decomposition.

This Siegel distance in the upper plane generalizes several well-known distances:

- When and , we havethe Riemannian distance between and on the symmetric positive-definite manifold [10,51]:In that case, the Siegel upper metric for becomes the affine-invariant metric:Indeed, we have for any and

- In 1D, the Siegel upper distance between and (with and in ) amounts to the hyperbolic distance on the Poincaré upper plane :where

- The Siegel distance between two diagonal matrices and isObserve that the Siegel distance is a non-separable metric distance, but its squared distance is separable when the matrices are diagonal:

The Siegel metric in the upper plane is invariant by generalized matrix Möbius transformations (linear fractional transformations or rational transformations):

where is the following block matrix:

which satisfies

The map is called a symplectic map.

The set of matrices S encoding the symplectic maps forms a group called the real symplectic group [5] (informally, the group of Siegel motions):

It can be shown that symplectic matrices have unit determinant [73,74], and therefore is a subgroup of , the special group of real invertible matrices with unit determinant. We also check that if then .

Matrix S denotes the representation of the group element . The symplectic group operation corresponds to matrix multiplications of their representations, the neutral element is encoded by , and the group inverse of with is encoded by the matrix:

Here, we use the parenthesis notation to indicate that it is the group inverse and not the usual matrix inverse . The symplectic group is a Lie group of dimension . Indeed, a symplectic matrix of has elements which are constrained from the block matrices as follows:

The first two constraints are independent and of the form which yields each elementary constraints. The third constraint is of the form , and independent of the other constraints, yielding elementary constraints. Thus, the dimension of the symplectic group is

The action of the group is transitive: That is, for any and , we have . Therefore, by taking the group inverse

we get

The action can be interpreted as a “Siegel translation” moving matrix to matrix Z, and conversely the action as moving matrix Z to matrix .

The stabilizer group of (also called isotropy group, the set of group elements whose action fixes Z) is the subgroup of symplectic orthogonal matrices :

We have , where is the group of orthogonal matrices of dimension :

Informally speaking, the elements of represent the “Siegel rotations” in the upper plane. The Siegel upper plane is isomorphic to .

A pair of matrices can be transformed into another pair of matrices of if and only if , where denotes the spectrum of matrix M.

By noticing that the symplectic group elements M and yield the same symplectic map, we define the orientation-preserving isometry group of the Siegel upper plane as the real projective symplectic group (generalizing the group obtained when ).

The geodesics in the Siegel upper space can be obtained by applying symplectic transformations to the geodesics of the positive-definite manifold (geodesics on the SPD manifold) which is a totally geodesic submanifold of . Let and . Then the geodesic with and is expressed as:

where denotes the matrix exponential:

and is the principal matrix logarithm, unique when matrix M has all positive eigenvalues.

The equation of the geodesic emanating from P with tangent vector (symmetric matrix) on the SPD manifold is:

Both the exponential and the principal logarithm of a matrix M can be calculated in cubic time when the matrices are diagonalizable: Let V denote the matrix of eigenvectors so that we have the following decomposition:

where are the corresponding eigenvalues of eigenvectors. Then for a scalar function f (e.g., or ), we define the corresponding matrix function as

The volume element of the Siegel upper plane is where is the volume element of the -dimensional Euclidean space expressed in the Cartesian coordinate system.

4. The Siegel Disk Domain and the Kobayashi Distance

The Siegel disk [1] is an open convex complex matrix domain defined by

The Siegel disk can be written equivalently as or . In the Cartan classification [7], the Siegel disk is a Siegel domain of type III.

When , the Siegel disk coincides with the Poincaré disk: . The Siegel disk was described by Siegel [1] (page 2, called domain E to contrast with domain H of the upper space) and Hua in his 1948’s paper [75] (page 205) on the geometries of matrices [76]. Siegel’s paper [1] in 1943 only considered the Siegel upper plane. Here, the Siegel (complex matrix) disk is not to be confused with the other notion of Siegel disk in complex dynamics which is a connected component in the Fatou set.

The boundary of the Siegel disk is called the Shilov boundary [5,77,78]): . We have , where

is the group of unitary matrices. Thus, is the set of symmetric unitary matrices with determinant of unit module. The Shilov boundary is a stratified manifold where each stratum is defined as a space of constant rank-deficient matrices [79].

The metric in the Siegel disk is:

When , we recover which is the usual metric in the Poincaré disk (up to a missing factor of 4, see Equation (25).

This Siegel metric induces a Kähler geometry [13] with the following Kähler potential:

The Kobayashi distance [80] between and in is calculated [79] as follows:

where

is a Siegel translation which moves to the origin O (matrix with all entries set to 0) of the disk: We have . In the Siegel disk domain, the Kobayashi distance [80] coincides with the Carathéodory distance [81] and yields a metric distance. Notice that the Siegel disk distance, although a spectral distance function via the operator norm, is not smooth because of it uses the maximum singular value. Recall that the Siegel upper plane distance uses all eigenvalues of a matrix cross-ratio R.

It follows that the cost of calculating a Kobayashi distance in the Siegel disk is cubic: We require the computation of a symmetric matrix square root [82] in Equation (101), and then compute the largest singular value for the operator norm in Equation (100).

Notice that when , the “1d” scalar matrices commute, and we have:

This corresponds to a hyperbolic translation of to 0 (see Equation (28)). Let us call the geometry of the Siegel disk the Siegel–Poincaré geometry.

We observe the following special cases of the Siegel–Poincaré distance:

- Distance to the origin: When and , we have , and therefore the distance in the disk between a matrix W and the origin 0 is:In particular, when , we recover the formula of Equation (31): .

- When , we have and , and

- Consider diagonal matrices and . We have for . Thus, the diagonal matrices belong to the polydisk domain. Then we haveNotice that the polydisk domain is a Cartesian product of 1D complex disk domains, but it is not the unit d-dimensional complex ball .

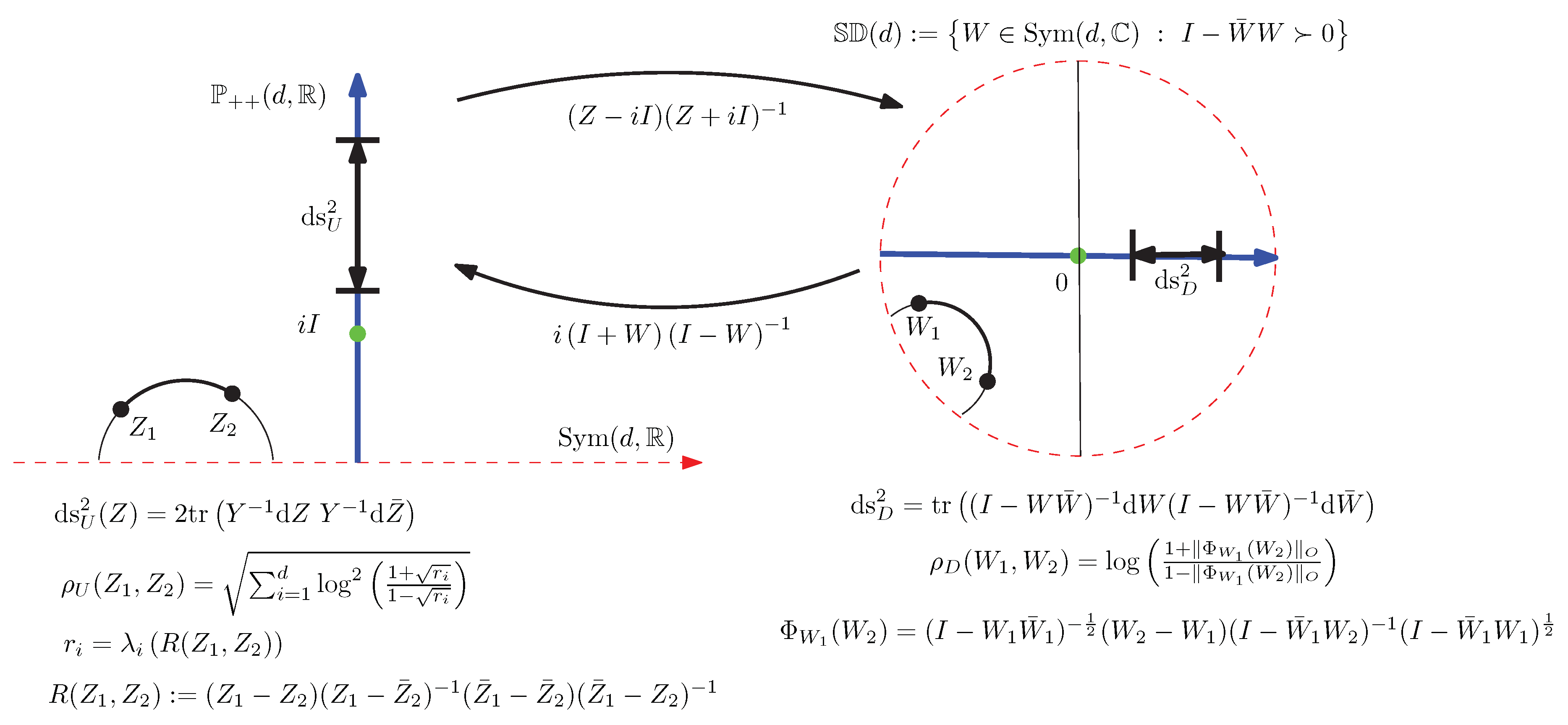

We can convert a matrix Z in the Siegel upper space to an equivalent matrix W in the Siegel disk by using the following matrix Cayley transformation for :

Notice that the imaginary positive-definite matrices of the upper plane (vertical axis) are mapped to

i.e., the real symmetric matrices belonging to the horizontal-axis of the disk.

The inverse transformation for a matrix W in the Siegel disk is

a matrix in the Siegel upper space. With those mappings, the origin of the disk coincides with matrix in the upper space.

A key property is that the geodesics passing through the matrix origin 0 are expressed by straight line segments in the Siegel disk. We can check that

for any .

To describe the geodesics between and , we first move to 0 and to . Then the geodesic between 0 and is a straight line segment, and we map back this geodesic via . The inverse of a symplectic map is a symplectic map which corresponds to the action of an element of the complex symplectic group.

The complex symplectic group is

with

for the identity matrix I. Notice that the condition amounts to check that

The conversions between the Siegel upper plan to the Siegel disk (and vice versa) can be expressed using complex symplectic transformations associated with the matrices:

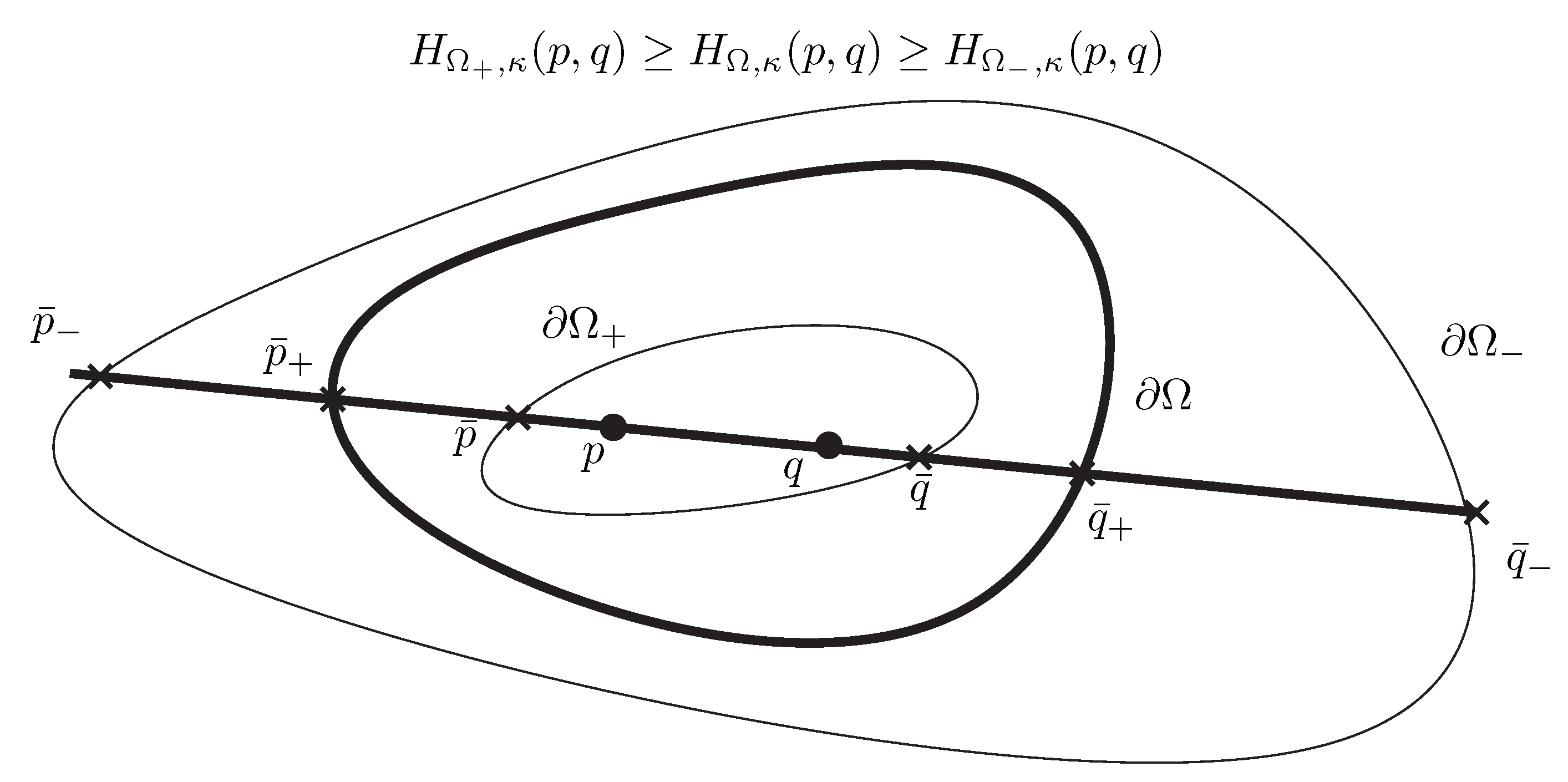

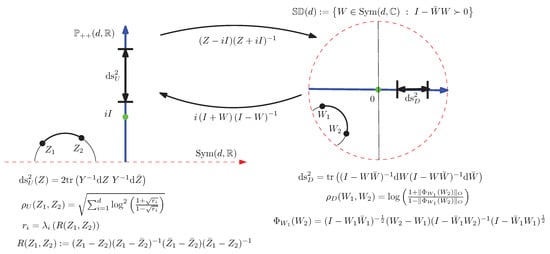

Figure 1 depicts the conversion of the upper plane to the disk, and vice versa.

Figure 1.

Illustrating the properties and conversion between the Siegel upper plane and the Siegel disk.

The orientation-preserving isometries in the Siegel disk is the projective complex symplectic group .

It can be shown that

with

and the left action of is

The isotropy group at the origin 0 is

where is the unitary group: .

Thus, we can “rotate” a matrix W with respect to the origin so that its imaginary part becomes 0: There exists A such that .

More generally, we can define a Siegel rotation [83] in the disk with respect to a center as follows:

where

Interestingly, the Poincaré disk can be embedded non-diagonally onto the Siegel upper plane [84].

In complex dimension , the Kobayashi distance coincides with the Siegel distance . Otherwise, we calculate the Siegel distance in the Siegel disk as

5. The Siegel–Klein Geometry: Distance and Geodesics

We define the Siegel–Klein geometry as the Hilbert geometry for the Siegel disk model. Section 5.1 concisely explains the Hilbert geometry induced by an open-bounded convex domain. In Section 5.2, we study the Hilbert geometry of the Siegel disk domain. Then we report the Siegel–Klein distance in Section 5.3 and study some of its particular cases. Section 5.5 presents the conversion procedures between the Siegel–Poincaré disk and the Siegel–Klein disk. In Section 5.7, we design a fast guaranteed method to approximate the Siegel–Klein distance. Finally, we introduce the Hilbert-Fröbenius distances to get simple bounds on the Siegel–Klein distance in Section 5.8.

5.1. Background on Hilbert Geometry

Consider a normed vector space , and define the Hilbert distance [30,85] for an open-bounded convex domain as follows:

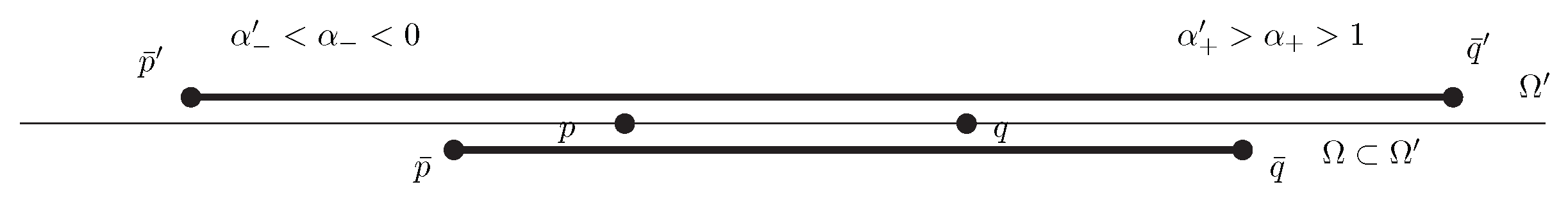

Definition 1 (Hilbert distance).

When , we have:The Hilbert distance is defined for any open-bounded convex domain and a prescribed positive factor by

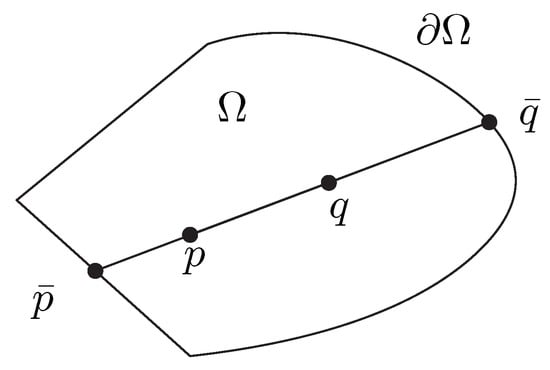

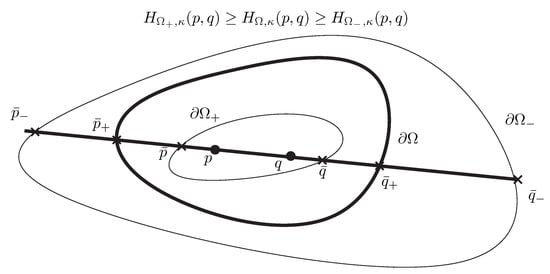

where and are the unique two intersection points of the line with the boundary of the domain as depicted in Figure 2, and denotes the cross-ratio of four points (a projective invariant):

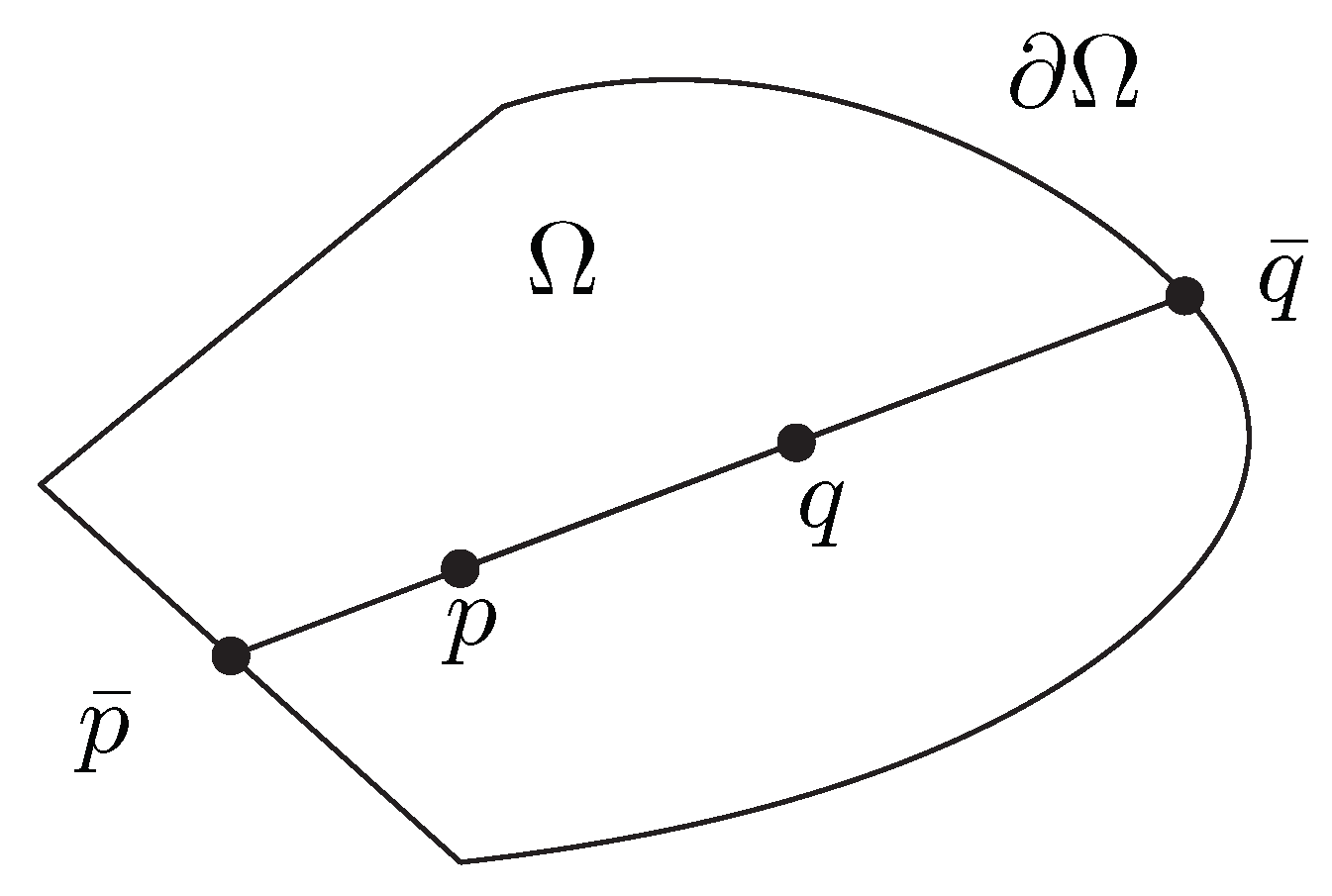

Figure 2.

Hilbert distance induced by a bounded open convex domain .

The Hilbert distance is a metric distance which does not depend on the underlying norm of the vector space:

Proposition 1 (Formula of Hilbert distance).

The Hilbert distance between two points p and q of an open-bounded convex domain Ω is

where and are the two intersection points of the line with the boundary of the domain Ω.

Proof.

For distinct points p and q of , let be such that , and such that . Then we have , , and . Thus, we get

and if and only if . ☐

We may also write the source points p and q as linear interpolations of the extremal points and on the boundary: and with for distinct points p and q. In that case, the Hilbert distance can be written as

The projective Hilbert space is a metric space. Notice that the above formula has demonstrated that

That is, the Hilbert distance between two points of a d-dimensional domain is equivalent to the Hilbert distance between the two points on the 1D domain defined by restricted to the line passing through the points p and q.

Notice that the boundary of the domain may not be smooth (e.g., may be a simplex [86] or a polytope [87]). The Hilbert geometry for the unit disk centered at the origin with yields the Klein model [88] (or Klein-Beltrami model [89]) of hyperbolic geometry. The Hilbert geometry for an ellipsoid yields the Cayley-Klein hyperbolic model [29,35,90] generalizing the Klein model. The Hilbert geometry for a simplicial polytope is isometric to a normed vector space [86,91]. We refer to the handbook [31] for a survey of recent results on Hilbert geometry. The Hilbert geometry of the elliptope (i.e., space of correlation matrices) was studied in [86]. Hilbert geometry may be studied from the viewpoint of Finslerian geometry which is Riemannian if and only if the domain is an ellipsoid (i.e., Klein or Cayley-Klein hyperbolic geometries). Finally, it is interesting to observe the similarity of the Hilbert distance which relies on a geometric cross-ratio with the Siegel distance (Equation (55)) in the upper space which relies on a matrix generalization of the cross-ratio (Equation (57)).

5.2. Hilbert Geometry of the Siegel Disk Domain

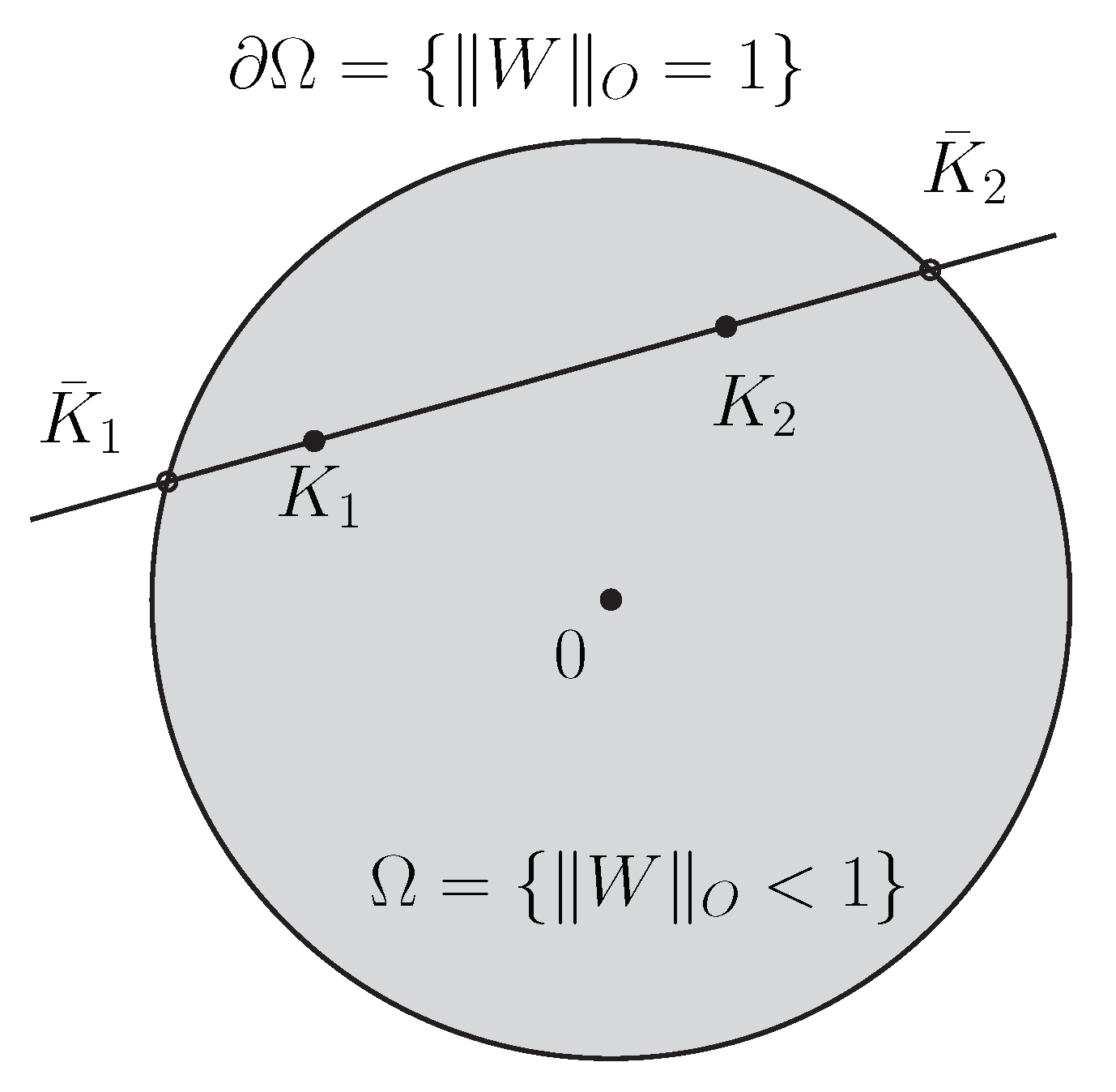

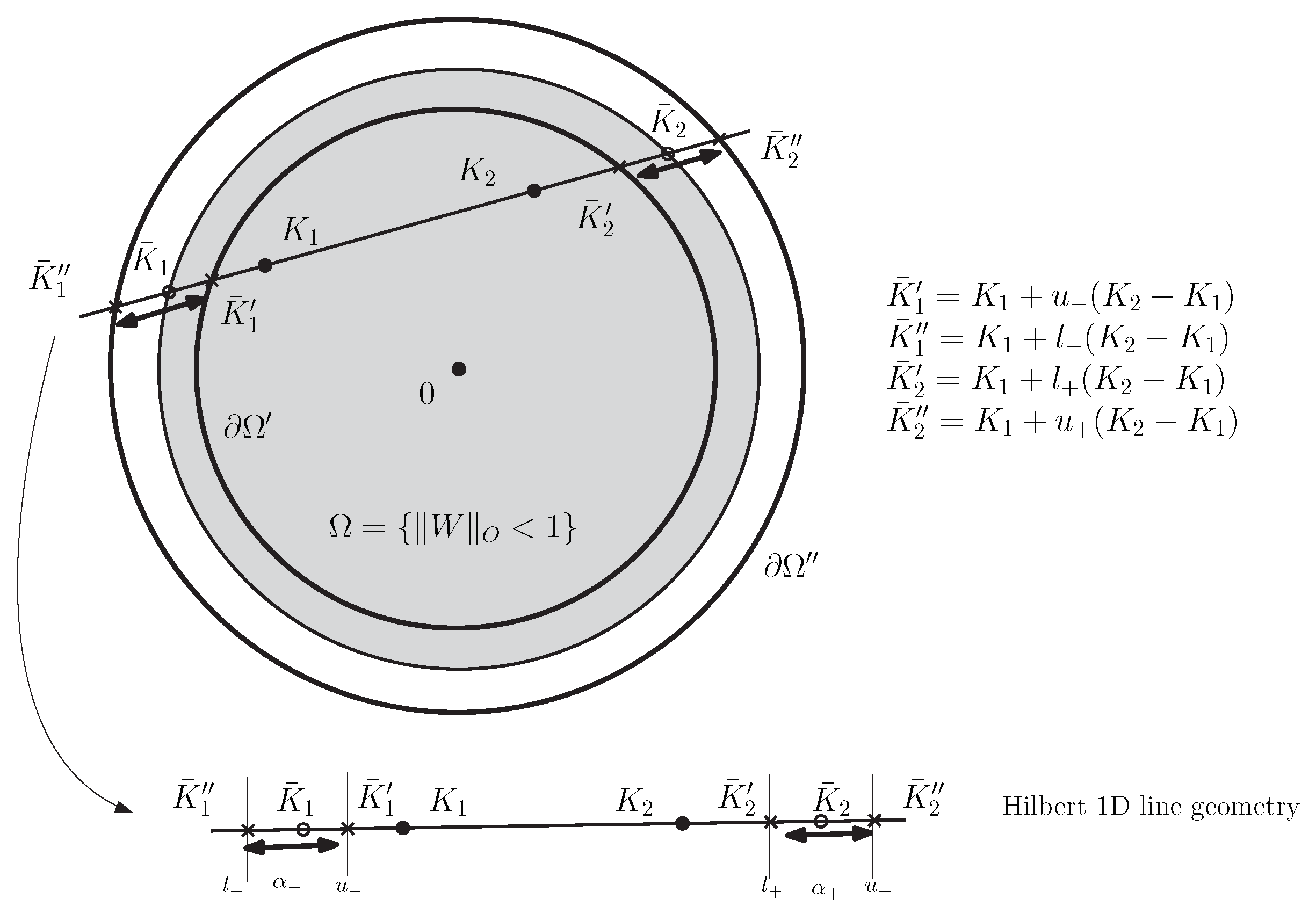

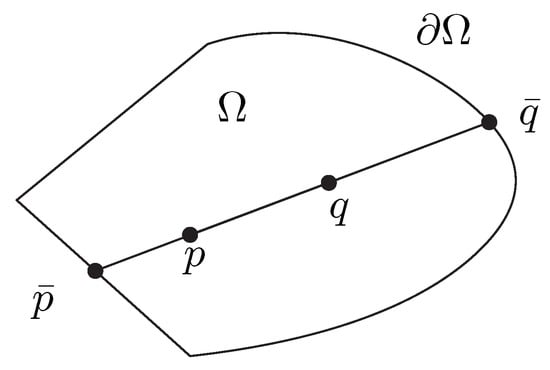

Let us consider the Siegel–Klein disk model which is defined as the Hilbert geometry for the Siegel disk domain as depicted in Figure 3 with .

where

Definition 2 (Siegel–Klein geometry).

When , the Siegel–Klein disk is the Klein disk model of hyperbolic geometry, and the Klein distance [34] between two any points and restricted to the unit disk is

The Siegel–Klein disk model is the Hilbert geometry for the open-bounded convex domain with prescribed constant . The Siegel–Klein distance is

Figure 3.

Hilbert geometry for the Siegel disk: The Siegel–Klein disk model.

This formula can be retrieved from the Hilbert distance induced by the Klein unit disk [29].

5.3. Calculating and Approximating the Siegel–Klein Distance

The Siegel disk domain can be rewritten using the operator norm as

Let denote the line passing through (matrix) points and . That line intersects the Shilov boundary when

when , there are two unique solutions since a line intersects the boundary of a bounded open convex domain in at most two points: Let one solution be with , and the other solution be with . The Siegel–Klein distance is then defined as

where and are the extremal matrices belonging to the Shilov boundary .

Notice that matrices and/or may be rank-deficient. We have , see [92].

In practice, we may perform a bisection search on the matrix line to approximate these two extremal points and (such that these matrices are ordered along the line as follows: , , , ). We may find a lower bound for and a upper bound for as follows: We seek on the line such that falls outside the Siegel disk domain:

Since is a matrix norm, we have

Thus, we deduce that

5.4. Siegel–Klein Distance to the Origin

When (the 0 matrix denoting the origin of the Siegel disk), and , it is easy to solve the equation:

We have , i.e.,

In that case, the Siegel–Klein distance of Equation (139) is expressed as:

where is defined in Equation (104).

Theorem 1 (Siegel–Klein distance to the origin).

The Siegel–Klein distance of matrix to the origin O is

The constant is chosen in order to ensure that when the corresponding Klein disk has negative unit curvature. The result can be easily extended to the case of the Siegel–Klein distance between and where the origin O belongs to the line . In that case, for some (e.g., where denotes the matrix trace operator). It follows that

Thus, we get the two values defining the intersection of with the Shilov boundary:

We then apply formula Equation (139):

Theorem 2.

The Siegel–Klein distance between two points and on a line passing through the origin is

where .

5.5. Converting Siegel–Poincaré Matrices from/to Siegel–Klein Matrices

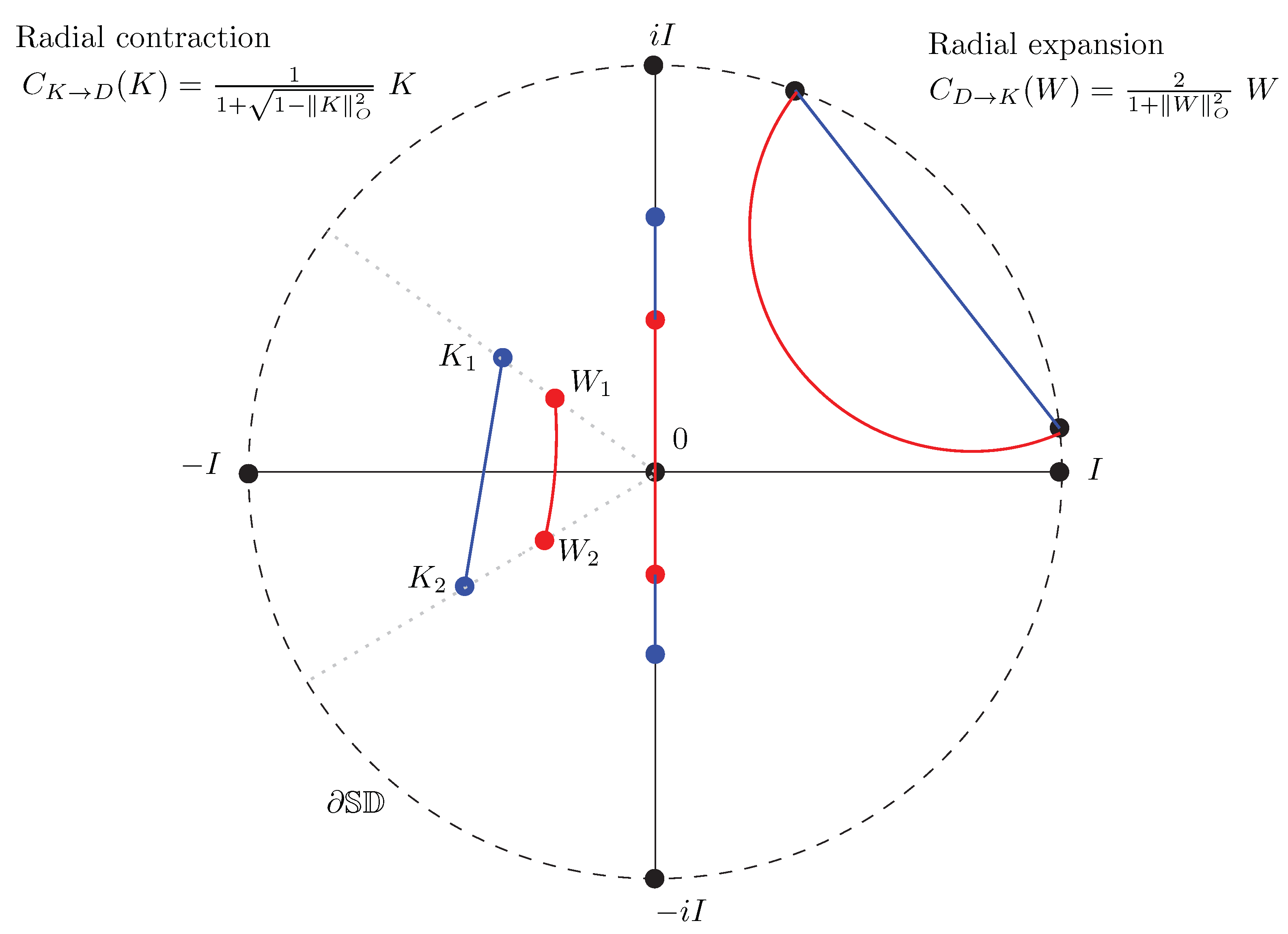

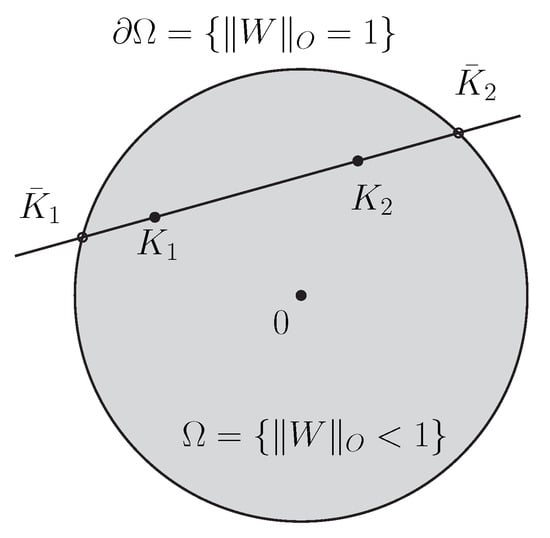

From Equation (149), we deduce that we can convert a matrix K in the Siegel–Klein disk to a corresponding matrix W in the Siegel–Poincaré disk, and vice versa, as follows:

- Converting K to W: We convert a matrix K in the Siegel–Klein model to an equivalent matrix W in the Siegel–Poincaré model as follows:This conversion corresponds to a radial contraction with respect to the origin 0 since (with equality for matrices belonging to the Shilov boundary).

- Converting W to K: We convert a matrix W in the Siegel–Poincaré model to an equivalent matrix K in the Siegel–Klein model as follows:This conversion corresponds to a radial expansion with respect to the origin 0 since (with equality for matrices on the Shilov boundary).

Proposition 2 (Conversions Siegel–Poincaré⇔Siegel–Klein disk).

The conversion of a matrix K of the Siegel–Klein model to its equivalent matrix W in the Siegel–Poincaré model, and vice versa, is done by the following radial contraction and expansion functions: and .

Figure 4 illustrates the radial expansion/contraction conversions between the Siegel–Poincaré and Siegel–Klein matrices.

Figure 4.

Conversions in the Siegel disk domain: Poincaré to/from Klein matrices.

The cross-ratio of four collinear points on a line is such that whenever r belongs to that line. By virtue of this cross-ratio property, the (pre)geodesics in the Hilbert–Klein disk are Euclidean straight. Thus, we can write the pregeodesics as:

Riemannian geodesics are paths which minimize locally the distance and are parameterized proportionally to the arc-length. A pregeodesic is a path which minimizes locally the distance but is not necessarily parameterized proportionally to the arc-length. For implementing geometric intersection algorithms (e.g., a geodesic with a ball), it is enough to consider pregeodesics.

Another way to get a generic closed-form formula for the Siegel–Klein distance is by using the formula for the Siegel–Poincaré disk after converting the matrices to their equivalent matrices in the Siegel–Poincaré disk. We get the following expression:

Theorem 3 (Formula for the Siegel–Klein distance).

The Siegel–Klein distance between and in the Siegel disk is .

The isometries in Hilbert geometry have been studied in [93].

We now turn our attention to a special case where we can report an efficient and exact linear-time algorithm for calculating the Siegel–Klein distance.

5.6. Siegel–Klein Distance between Diagonal Matrices

Let with . When solving for the general case, we seek for the extremal values of such that:

This last equation is reminiscent to a Linear Matrix Inequality [94] (LMI, i.e., with and where the coefficients are however linked between them).

Let us consider the special case of diagonal matrices corresponding to the polydisk domain: and of the Siegel disk domain.

First, let us start with the simple case , i.e., the Siegel disk which is the complex open unit disk . Let with . We have with , and . To find the two intersection points of line with the boundary of , we need to solve . This amounts to solve an ordinary quadratic equation since all coefficients a, b, and c are provably reals. Let be the discriminant ( when ). We get the two solutions and , and apply the 1D formula for the Hilbert distance:

Doing so, we obtain a formula equivalent to Equation (30).

For diagonal matrices with , we get the following system of d inequalities:

For each inequality, we solve the quadratic equation as in the 1d case above, yielding two solutions and . Then we satisfy all those constraints by setting

and we compute the Hilbert distance:

Theorem 4 (Siegel–Klein distance for diagonal matrices).

The Siegel–Klein distance between two diagonal matrices in the Siegel–Klein disk can be calculated exactly in linear time.

Notice that the proof extends to triangular matrices as well.

When the matrices are non-diagonal, we must solve analytically the equation:

with the following Hermitian matrices (with all real eigenvalues):

Although and commute, it is not necessarily the case for and , or and .

When , and are simultaneously diagonalizable via congruence [95], the optimization problem becomes:

where for some , and we apply Theorem 4. The same result applies for simultaneously diagonalizable matrices , and via similarity: with .

Notice that the Hilbert distance (or its squared distance) is not a separable distance, even in the case of diagonal matrices. (However, recall that the squared Siegel–Poincaré distance in the upper plane is separable for diagonal matrices.)

When , we have

We now investigate a guaranteed fast scheme for approximating the Siegel–Klein distance in the general case.

5.7. A Fast Guaranteed Approximation of the Siegel–Klein Distance

In the general case, we use the bisection approximation algorithm which is a geometric approximation technique that only requires the calculation of operator norms (and not the square root matrices required in the functions for calculating the Siegel distance in the disk domain).

We have the following key property of the Hilbert distance:

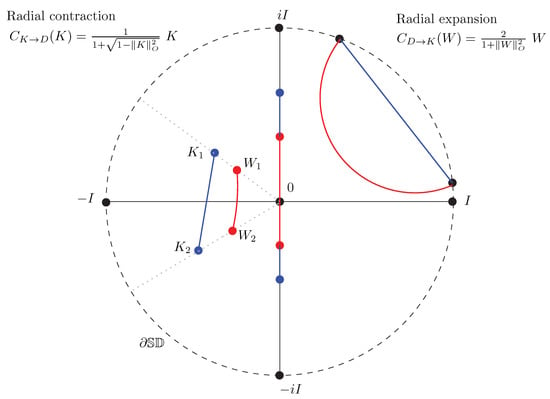

Property 1 (Bounding Hilbert distance).

Let be strictly nested open convex bounded domains. Then we have the following inequality for the corresponding Hilbert distances:

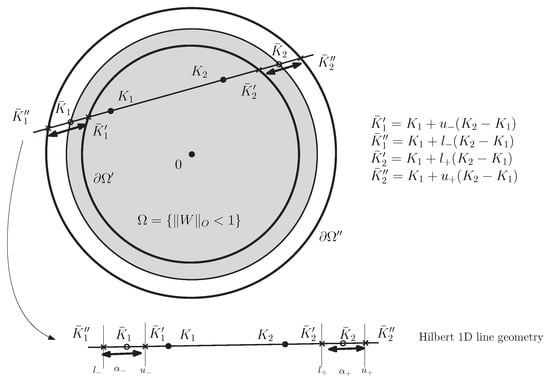

Figure 5 illustrates the Property 1 of Hilbert distances corresponding to nested domains. Notice that when is a large enclosing ball of with radius increasing to infinity, we have , and therefore the Hilbert distance tends to zero.

Figure 5.

Inequalities of the Hilbert distances induced by nested bounded open convex domains.

Proof.

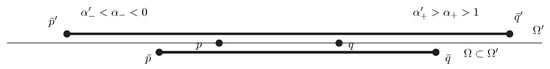

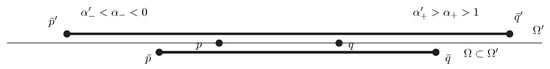

Recall that , i.e., the Hilbert distance with respect to domain can be calculated as an equivalent 1-dimensional Hilbert distance by considering the open-bounded (convex) interval . Furthermore, we have (with set containment ). Therefore let us consider the 1D case as depicted in Figure 6. Let us choose so that we have . In 1D, the Hilbert distance is expressed as

for a prescribed constant . Therefore it follows that

Figure 6.

Comparison of the Hilbert distances and induced by nested open interval domains : .

We can rewrite the argument of the logarithm as follows:

with

Since , we have and , see [29]. Therefore we deduce that when . ☐

Therefore the bisection search for finding the values of and yields both lower and upper bounds on the exact Siegel–Klein distance as follows: Let and where , , , are real values defining the extremities of the intervals. Using Property 1, we get the following theorem:

Theorem 5 (Lower and upper bounds on the Siegel–Klein distance).

The Siegel–Klein distance between two matrices and of the Siegel disk is bounded as follows:

where

Figure 7 depicts the guaranteed lower and upper bounds obtained by performing the bisection search for approximating the point and the points .

Figure 7.

Guaranteed lower and upper bounds for the Siegel–Klein distance by considering nested open matrix balls.

We have:

where denotes the cross-ratio. Hence we have

Notice that the approximation of the Siegel–Klein distance by line bisection requires only to calculate an operator norm at each step: This involves calculating the smallest and largest eigenvalues of M, or the largest eigenvalue of . To get a -approximation, we need to perform dichotomic steps. This yields a fast method to approximate the Siegel–Klein distance compared with the costly exact calculation of the Siegel–Klein distance of Equation (159) which requires calculation of the functions: This involves the calculation of a square root of a complex matrix. Furthermore, notice that the operator norm can be numerically approximated using a Lanczos’s power iteration scheme [96,97] (see also [98]).

5.8. Hilbert-Fröbenius Distances and Fast Simple Bounds on the Siegel–Klein Distance

Let us notice that although the Hilbert distance does not depend on the chosen norm in the vector space, the Siegel complex ball is defined according to the operator norm. In a finite-dimensional vector space, all norms are said “equivalent”: That is, given two norms and of vector space X, there exists positive constants and such that

In particular, this property holds for the operator norm and Fröbenius norm of finite-dimensional complex matrices with positive constants , , and depending on the dimension d of the square matrices:

As mentioned in the introduction, we have .

Thus, the Siegel ball domain may be enclosed by an open Fröbenius ball (for any ) with

Therefore we have

where denotes the Fröbenius-Klein distance, i.e., the Hilbert distance induced by the Fröbenius balls with constant .

Now, we can calculate in closed-form the Fröbenius-Klein distance by computing the two intersection points of the line with the Fröbenius ball . This amounts to solve an ordinary quadratic equation for parameter :

where denotes the coefficient of matrix K at row i and column j. Notice that is a real. Once and are found, we apply the 1D formula of the Hilbert distance of Equation (132).

We summarize the result as follows:

Theorem 6 (Lower bound on Siegel–Klein distance).

The Siegel–Klein distance is lower bounded by the Fröbenius-Klein distance for the unit complex Fröbenius ball, and it can be calculated in time.

6. The Smallest Enclosing Ball in the SPD Manifold and in the Siegel Spaces

The goal of this section is to compare two implementations of a generalization of the Badoiu and Clarkson’s algorithm [47] to approximate the Smallest Enclosing Ball (SEB) of a set of complex matrices: The implementation using the Siegel–Poincaré disk (with respect to the Kobayashi distance ), and the implementation using the Siegel–Klein disk (with respect to the Siegel–Klein distance ).

In general, we may encode a pair of features in applications as a Riemann matrix , and consider the underlying geometry of the Siegel upper space. For example, anomaly detection of time-series maybe considered by considering where is the covariance matrix at time t and is the approximation of the derivative of the covariance matrix (a symmetric matrix) for a small prescribed value of .

The generic Badoiu and Clarkson’s algorithm [47] (BC algorithm) for a set of n points in a metric space is described as follows:

- Initialization: Let and

- Repeat L times:

- -

- Calculate the farthest point: .

- -

- Geodesic cut: Let , where is the point which satisfies

- -

- .

This elementary SEB approximation algorithm has been instantiated to various metric spaces with proofs of convergence according to the sequence : see [43] for the case of hyperbolic geometry, ref. [46] for Riemannian geometry with bounded sectional curvatures, ref. [99,100] for dually flat spaces (a non-metric space equipped with a Bregman divergences [101,102]), etc. In Cartan-Hadamard manifolds [46], we require the series to diverge while the series to converge. The number of iterations L to get a -approximation of the SEB depends on the underlying geometry and the sequence . For example, in Euclidean geometry, setting with steps yield a -approximation of the SEB [47].

We start by recalling the Riemannian generalization of the BC algorithm, and then consider the Siegel spaces.

6.1. Approximating the Smallest Enclosing Ball in Riemannian Spaces

We first instantiate a particular example of Riemannian space, the space of Symmetric Positive-Definite matrix manifold (PD or SPD manifold for short), and then consider the general case on a Riemannian manifold .

Approximating the SEB on the SPD Manifold

Given n positive-definite matrices [103,104 of size , we ask to calculate the SEB with circumcenter minimizing the following objective function:

This is a minimax optimization problem. The SPD cone is not a complete metric space with respect to the Fröbenius distance, but is a complete metric space with respect to the natural Riemannian distance.

When the minimization is performed with respect to the Fröbenius distance, we can solve this problem using techniques of Euclidean computational geometry [33,47] by vectorizing the PSD matrices into corresponding vectors of such that , where vectorizes a matrix by stacking its column vectors. In fact, since the matrices are symmetric, it is enough to half-vectorize the matrices: , where , see [50].

Property 2.

The smallest enclosing ball of a finite set of positive-definite matrices is unique.

Let us mention the two following proofs:

- The SEB is well-defined and unique since the SPD manifold is a Bruhat–Tits space: That is, a complete metric space enjoying a semi-parallelogram law: For any and geodesic midpoint (see below), we have:See [44] page 83 or [105] Chapter 6). In a Bruhat–Tits space, the SEB is guaranteed to be unique [44,106].

- Another proof of the uniqueness of the SEB on a SPD manifold consists of noticing that the SPD manifold is a Cartan-Hadamard manifold [46], and the SEB on Cartan-Hadamard manifolds are guaranteed to be unique.

We shall use the invariance property of the Riemannian distance by congruence:

In particular, choosing , we get

The geodesic from I to P is . The set of the d eigenvalues of coincide with the set of eigenvalues of P raised to the power (up to a permutation).

Thus, to cut the geodesic , we must solve the following problem:

That is

The solution is . Thus, . For arbitrary and , we first apply the congruence transformation with , use the solution , and apply the inverse congruence transformation with . It follows the theorem:

Theorem 7 (Geodesic cut on the SPD manifold).

For any , we have the closed-form expression of the geodesic cut on the manifold of positive-definite matrices:

The matrix can be rewritten using the orthogonal eigendecomposition as , where D is the diagonal matrix of generalized eigenvalues. Thus, the PD geodesic can be rewritten as

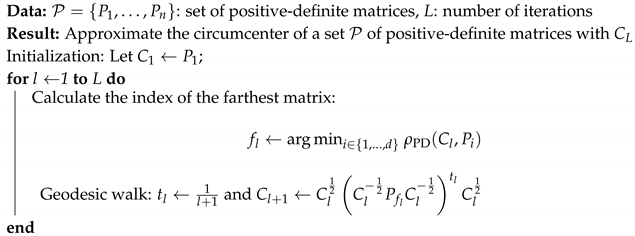

We instantiate the algorithm of Badoiu and Clarkson [47] to a finite set of n positive-definite matrices in Algorithm 1.

| Algorithm 1: Algorithm to approximate the circumcenter of a set of positive-definite matrices. |

|

The complexity of the algorithm is in where T is the number of iterations, d the row dimension of the square matrices and n the number of matrices.

Observe that the solution corresponds to the arc-length parameterization of the geodesic with boundary values on the SPD manifold:

The curve is a geodesic for any affine-invariant metric distance where is a symmetric gauge norm [107].

In fact, we have shown the following property:

Property 3 (Riemannian geodesic cut).

Let denote the Riemannian geodesic linking p and q on a Riemannian manifold (i.e., parameterized proportionally to the arc-length and with respect to the Levi–Civita connection induced by the metric tensor g). Then we have

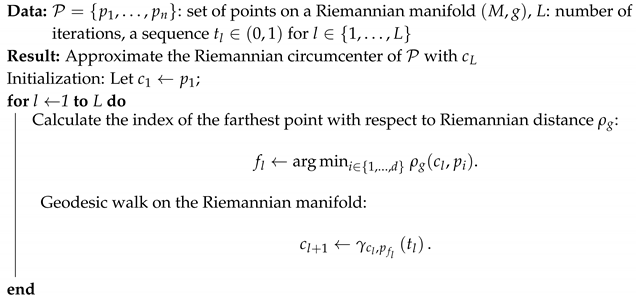

We report the generic Riemannian approximation algorithm in Algorithm 2.

| Algorithm 2: Algorithm to approximate the Riemannian circumcenter of a set of points. |

|

Theorem 1 of [46] guarantees the convergence of the Algorithm 2 algorithm provided that we have a lower bound and an upper bound on the sectional curvatures of the manifold . The sectional curvatures of the PD manifold have been proven to be negative [108]. The SPD manifold is a Cartan-Hadamard manifold with scalar curvature [109] depending on the dimension d of the matrices. Notice that we can identify with an element of the quotient space since is the isotropy subgroup of the for the action (i.e., when ). Thus, we have . The SEB with respect to the Thompson metric

has been studied in [107].

6.2. Implementation in the Siegel–Poincaré Disk

Given n complex matrices , we ask to find the smallest-radius enclosing ball with center minimizing the following objective function:

This problem may have potential applications in image morphology [110] or anomaly detection of covariance matrices [39]. We may model the dynamics of a covariance matrix time-series by the representation where and use the Siegel SEB to detect anomalies, see [40] for detection anomaly based on Bregman SEBs.

The Siegel–Poincaré upper plane and disk are not Bruhat–Tits space, but spaces of non-positive curvatures [111]. Indeed, when , the Poincaré disk is not a Bruhat space.

Notice that when , the hyperbolic ball in the Poincaré disk have Euclidean shape. This is not true anymore when : Indeed, the equation of the ball centered at the origin 0:

amounts to

when , , and Poincaré balls have Euclidean shapes. Otherwise, when , and is not a complex Fröbenius ball.

To apply the generic algorithm, we need to implement the geodesic cut operation . We consider the complex symplectic map in the Siegel disk that maps to 0 and to . Then the geodesic between 0 and is a straight line.

We need to find (with ) such that . That is, we shall solve the following equation:

We find the exact solution as

Proposition 3 (Siegel–Poincaré geodesics from the origin).

The geodesic in the Siegel disk is

with

Thus, the midpoint of and can be found as follows:

where

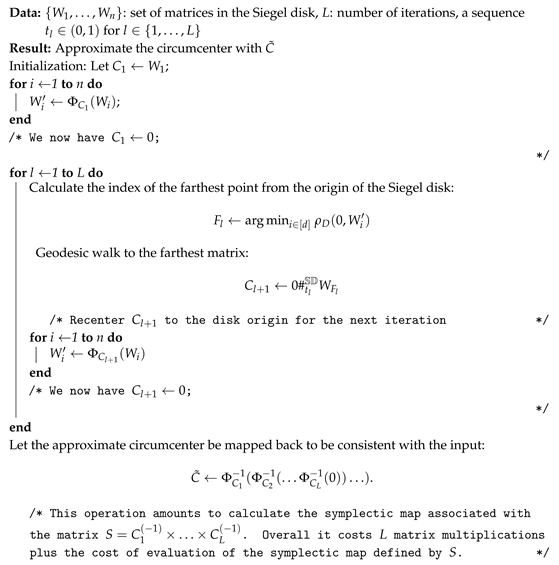

To summarize, Algorithm 3 recenters at every step the current center to the Siegel disk origin 0.

| Algorithm 3: Algorithm to approximate the circumcenter of a set of matrices in the Siegel disk. |

|

The farthest point to the current approximation of the circumcenter can be calculated using the data-structure of the Vantage Point Tree (VPT), see [112].

The Riemannian curvature tensor of the Siegel space is non-positive [1,113] and the sectional curvatures are non-positive [111] and bounded above by a negative constant. In our implementation, we chose the step sizes . Barbaresco [14] also adopted this iterative recentering operation for calculating the median in the Siegel disk. However at the end of his algorithm, he does not map back the median among the source matrix set. Recentering is costly because we need to calculate a square root matrix to calculate . A great advantage of Siegel–Klein space is that we have straight geodesics anywhere in the disk so we do not need to perform recentering.

6.3. Fast Implementation in the Siegel–Klein Disk

The main advantage of implementing the Badoiu and Clarkson’s algorithm [47] in the Siegel–Klein disk is to avoid to perform the costly recentering operations (which require calculation of square root matrices). Moreover, we do not have to roll back our approximate circumcenter at the end of the algorithm.

First, we state the following expression of the geodesics in the Siegel disk:

Proposition 4 (Siegel–Klein geodesics from the origin).

The geodesic from the origin in the Siegel–Klein disk is expressed

with

The proof follows straightforwardly from Proposition 3 because we have .

7. Conclusions and Perspectives

In this work, we have generalized the Klein model of hyperbolic geometry to the Siegel disk domain of complex matrices by considering the Hilbert geometry induced by the Siegel disk, an open-bounded convex complex matrix domain. We compared this Siegel–Klein disk model with its Hilbert distance called the Siegel–Klein distance to both the Siegel–Poincaré disk model (Kobayashi distance ) and the Siegel–Poincaré upper plane (Siegel distance ). We show how to convert matrices W of the Siegel–Poincaré disk model into equivalent matrices K of Siegel–Klein disk model and matrices Z in the Siegel–Poincaré upper plane via symplectic maps. When the dimension , we have the following equivalent hyperbolic distances:

Since the geodesics in the Siegel–Klein disk are by construction straight, this model is well-suited to implement techniques of computational geometry [33]. Furthermore, the calculation of the Siegel–Klein disk does not require the recentering of one of its arguments to the disk origin, a computationally costly Siegel translation operation. We reported a linear-time algorithm for computing the exact Siegel–Klein distance between diagonal matrices of the disk (Theorem 4), and a fast way to numerically approximate the Siegel distance by bisection searches with guaranteed lower and upper bounds (Theorem 5). Finally, we demonstrated the algorithmic advantage of using the Siegel–Klein disk model instead of the Siegel–Poincaré disk model for approximating the smallest-radius enclosing ball of a finite set of complex matrices in the Siegel disk. In future work, we shall consider more generally the Hilbert geometry of homogeneous complex domains and investigate quantitatively the Siegel–Klein geometry in applications ranging from radar processing [14], image morphology [28], computer vision, to machine learning [24]. For example, the fast and robust guaranteed approximation of the Siegel–Klein distance may prove useful for performing clustering analysis in image morphology [28,38,110].

Supplementary Materials

The following are available online at https://franknielsen.github.io/SiegelKlein/.

Funding

This research received no external funding.

Acknowledgments

The author would like to thank Marc Arnaudon, Frédéric Barbaresco, Yann Cabanes, and Gaëtan Hadjeres for fruitful discussions, pointing out several relevant references, and feedback related to the Siegel domains.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following notations and main formulas are used in this manuscript:

| Complex matrices: | |

| Number field | Real or complex |

| Space of square matrices in | |

| Space of real symmetric matrices | |

| 0 | matrix with all coefficients equal to zero (disk origin) |

| Fröbenius norm | |

| Operator norm | |

| Domains: | |

| Cone of SPD matrices | |

| Siegel–Poincaré upper plane | |

| Siegel–Poincaré disk | |

| Distances: | |

| Siegel distance | |

| Upper plane metric | |

| PD distance | |

| PD metric | |

| Kobayashi distance | |

| Translation in the disk | |

| Disk distance to origin | |

| Siegel–Klein distance | |

| (), () | |

| Seigel-Klein distance to 0 | |

| Symplectic maps and groups: | |

| Symplectic map | with (upper plane) |

| with (disk) | |

| Symplectic group | |

| group composition law | matrix multiplication |

| group inverse law | |

| Translation in of to | |

| symplectic orthogonal matrices | |

| (rotations in ) | |

| Translation to 0 in | |

| Isometric orientation-preserving group of generic space | |

| group of Möbius transformations |

Appendix A. The Deflation Method: Approximating the Eigenvalues

A matrix is diagonalizable if there exists a non-singular matrix P and a diagonal matrix such that . A Hermitian matrix (i.e., ) is a diagonalizable self-adjoint matrix which has all real eigenvalues and admits a basis of orthogonal eigenvectors. We can compute all eigenvalues of a Hermitian matrix M by repeatedly applying the (normalized) power method using the so-called deflation method (see [114], Chapter 10, and [115], Chapter 7). The deflation method proceeds iteratively to calculate numerically the (normalized) eigenvalues ’s and eigenvectors ’s as follows:

- Let and .

- Initialize at random a normalized vector (i.e., on the unit sphere with )

- For j in :

- Let and

- Let . If then let and goto 2.

The deflation method reports the eigenvalues ’s such that

where is the dominant eigenvalue.

The overall number of normalized power iterations is (matrix-vector multiplication), where the number of iterations of the normalized power method at stage l can be defined such that we have , for a prescribed value of . Notice that the numerical errors of the eigenpairs ’s propagate and accumulate at each stage. That is, at stage l, the deflation method calculates the dominant eigenvector on a residual perturbated matrix . The overall approximation of the eigendecomposition can be appreciated by calculating the last residual matrix:

The normalized power method exhibits linear convergence for diagonalizable matrices and quadratic convergence for Hermitian matrices. Other numerical methods for numerically calculating the eigenvalues include the Krylov subspace techniques [114,115,116].

- Snippet Code

We implemented our software library and smallest enclosing ball algorithms in Java™.

The code below is a snippet written in Maxima (available in the supplementary material): A computer algebra system, freely downloadable at http://maxima.sourceforge.net/

- /* Code in Maxima */

- /* Calculate the Siegel metric distance in the Siegel upper space */

- load(eigen);

- /* symmetric */

- S1: matrix( [0.265, 0.5],

- [0.5 , -0.085]);

- /* positive-definite */

- P1: matrix( [0.235, 0.048],

- [0.048 , 0.792]);

- /* Matrix in the Siegel upper space */

- Z1: S1+%i*P1;

- S2: matrix( [-0.329, -0.2],

- [-0.2 , -0.382]);

- P2: matrix([0.464, 0.289],

- [0.289 , 0.431]);

- Z2: S2+%i*P2;

- /* Generalized Moebius transformation */

- R(Z1,Z2) :=

- ((Z1-Z2).invert(Z1-conjugate(Z2))).((conjugate(Z1)-conjugate(Z2)).invert(conjugate(Z1)-Z2));

- R12: ratsimp(R(Z1,Z2));

- ratsimp(R12[2][1]-conjugate(R12[1][2]));

- /* Retrieve the eigenvalues: They are all reals */

- r: float(eivals(R12))[1];

- /* Calculate the Siegel distance */

- distSiegel: sum(log( (1+sqrt(r[i]))/(1-sqrt(r[i])) )**2, i, 1, 2);

References

- Siegel, C.L. Symplectic geometry. Am. J. Math. 1943, 65, 1–86. [Google Scholar] [CrossRef]

- Hua, L.K. On the theory of automorphic functions of a matrix variable I: Geometrical basis. Am. J. Math. 1944, 66, 470–488. [Google Scholar] [CrossRef]

- Siegel, C.L. Einführung in die Theorie der Modulfunktionen n-ten Grades. Math. Ann. 1939, 116, 617–657. [Google Scholar] [CrossRef]

- Blair, D.E. Riemannian Geometry of Contact and Symplectic Manifolds; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Freitas, P.J. On the Action of the Symplectic Group on the Siegel upper Half Plane. Ph.D. Thesis, University of Illinois at Chicago, Chicago, IL, USA, 1999. [Google Scholar]

- Koufany, K. Analyse et Géométrie des Domaines Bornés Symétriques. Ph.D. Thesis, Université Henri Poincaré, Nancy, France, 2006. [Google Scholar]

- Cartan, É. Sur les domaines bornés homogènes de l’espace de n variables complexes. In Abhandlungen aus dem Mathematischen Seminar der Universität Hamburg; Springer: Berlin/Heidelberg, Germany, 1935; Volume 11, pp. 116–162. [Google Scholar]

- Koszul, J.L. Exposés sur les Espaces Homogènes Symétriques; Sociedade de Matematica: Rio de Janeiro, Brazil, 1959. [Google Scholar]

- Berezin, F.A. Quantization in complex symmetric spaces. Math. USSR-Izv. 1975, 9, 341. [Google Scholar] [CrossRef]

- Förstner, W.; Moonen, B. A metric for covariance matrices. In Geodesy-the Challenge of the 3rd Millennium; Springer: Berlin/Heidelberg, Germany, 2003; pp. 299–309. [Google Scholar]

- Harandi, M.T.; Salzmann, M.; Hartley, R. From manifold to manifold: Geometry-aware dimensionality reduction for SPD matrices. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 17–32. [Google Scholar]

- Barbaresco, F. Innovative tools for radar signal processing based on Cartan’s geometry of SPD matrices & information geometry. In Proceedings of the 2008 IEEE Radar Conference, Rome, Italy, 26–30 May 2008; pp. 1–6. [Google Scholar]

- Barbaresco, F. Robust statistical radar processing in Fréchet metric space: OS-HDR-CFAR and OS-STAP processing in Siegel homogeneous bounded domains. In Proceedings of the 12th IEEE International Radar Symposium (IRS), Leipzig, Germany, 7–9 September 2011; pp. 639–644. [Google Scholar]

- Barbaresco, F. Information geometry of covariance matrix: Cartan-Siegel homogeneous bounded domains, Mostow/Berger fibration and Fréchet median. In Matrix Information Geometry; Springer: Berlin/Heidelberg, Germany, 2013; pp. 199–255. [Google Scholar]

- Barbaresco, F. Information geometry manifold of Toeplitz Hermitian positive definite covariance matrices: Mostow/Berger fibration and Berezin quantization of Cartan-Siegel domains. Int. J. Emerg. Trends Signal Process. 2013, 1, 1–11. [Google Scholar]

- Jeuris, B.; Vandebril, R. The Kähler mean of block-Toeplitz matrices with Toeplitz structured blocks. SIAM J. Matrix Anal. Appl. 2016, 37, 1151–1175. [Google Scholar] [CrossRef]

- Liu, C.; Si, J. Positive Toeplitz operators on the Bergman spaces of the Siegel upper half-space. Commun. Math. Stat. 2019, 8, 113–134. [Google Scholar] [CrossRef]

- Chevallier, E.; Forget, T.; Barbaresco, F.; Angulo, J. Kernel density estimation on the Siegel space with an application to radar processing. Entropy 2016, 18, 396. [Google Scholar] [CrossRef]

- Burbea, J. Informative Geometry of Probability Spaces; Technical report; Pittsburgh Univ. PA Center: Pittsburgh, PA, USA, 1984. [Google Scholar]

- Calvo, M.; Oller, J.M. A distance between multivariate normal distributions based in an embedding into the Siegel group. J. Multivar. Anal. 1990, 35, 223–242. [Google Scholar] [CrossRef]

- Calvo, M.; Oller, J.M. A distance between elliptical distributions based in an embedding into the Siegel group. J. Comput. Appl. Math. 2002, 145, 319–334. [Google Scholar] [CrossRef]

- Tang, M.; Rong, Y.; Zhou, J. An information geometric viewpoint on the detection of range distributed targets. Math. Probl. Eng. 2015, 2015. [Google Scholar] [CrossRef]

- Tang, M.; Rong, Y.; Zhou, J.; Li, X.R. Information geometric approach to multisensor estimation fusion. IEEE Trans. Signal Process. 2018, 67, 279–292. [Google Scholar] [CrossRef]

- Krefl, D.; Carrazza, S.; Haghighat, B.; Kahlen, J. Riemann-Theta Boltzmann machine. Neurocomputing 2020, 388, 334–345. [Google Scholar] [CrossRef]

- Ohsawa, T. The Siegel upper half space is a Marsden–Weinstein quotient: Symplectic reduction and Gaussian wave packets. Lett. Math. Phys. 2015, 105, 1301–1320. [Google Scholar] [CrossRef]

- Froese, R.; Hasler, D.; Spitzer, W. Transfer matrices, hyperbolic geometry and absolutely continuous spectrum for some discrete Schrödinger operators on graphs. J. Funct. Anal. 2006, 230, 184–221. [Google Scholar] [CrossRef][Green Version]

- Ohsawa, T.; Tronci, C. Geometry and dynamics of Gaussian wave packets and their Wigner transforms. J. Math. Phys. 2017, 58, 092105. [Google Scholar] [CrossRef]

- Lenz, R. Siegel Descriptors for Image Processing. IEEE Signal Process. Lett. 2016, 23, 625–628. [Google Scholar] [CrossRef]

- Richter-Gebert, J. Perspectives on Projective Geometry: A Guided Tour through Real and Complex Geometry; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2011. [Google Scholar]

- Hilbert, D. Über die gerade Linie als kürzeste Verbindung zweier Punkte (About the straight line as the shortest connection between two points). Math. Ann. 1895, 46, 91–96. [Google Scholar] [CrossRef]

- Papadopoulos, A.; Troyanov, M. Handbook of Hilbert Geometry, Volume 22 of IRMA Lectures in Mathematics and Theoretical Physics; European Mathematical Society (EMS): Zürich, Switzerland, 2014. [Google Scholar]

- Liverani, C.; Wojtkowski, M.P. Generalization of the Hilbert metric to the space of positive definite matrices. Pac. J. Math. 1994, 166, 339–355. [Google Scholar] [CrossRef]

- Boissonnat, J.D.; Yvinec, M. Algorithmic Geometry; Cambridge University Press: Cambridge, UK, 1998. [Google Scholar]

- Nielsen, F.; Nock, R. Hyperbolic Voronoi diagrams made easy. In Proceedings of the 2010 IEEE International Conference on Computational Science and Its Applications, Fukuoka, Japan, 23–26 March 2010; pp. 74–80. [Google Scholar]

- Nielsen, F.; Muzellec, B.; Nock, R. Classification with mixtures of curved Mahalanobis metrics. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 241–245. [Google Scholar]

- Nielsen, F.; Nock, R. Visualizing hyperbolic Voronoi diagrams. In Proceedings of the Thirtieth Annual Symposium on Computational Geometry, Kyoto, Japan, 8–11 June 2014; pp. 90–91. [Google Scholar]

- Nielsen, F.; Nock, R. The hyperbolic Voronoi diagram in arbitrary dimension. arXiv 2012, arXiv:1210.8234. [Google Scholar]

- Angulo, J.; Velasco-Forero, S. Morphological processing of univariate Gaussian distribution-valued images based on Poincaré upper-half plane representation. In Geometric Theory of Information; Springer: Berlin/Heidelberg, Germany, 2014; pp. 331–366. [Google Scholar]

- Tavallaee, M.; Lu, W.; Iqbal, S.A.; Ghorbani, A.A. A novel covariance matrix based approach for detecting network anomalies. In Proceedings of the 6th IEEE Annual Communication Networks and Services Research Conference (cnsr 2008), Halifax, NS, Canada, 5–8 May 2008; pp. 75–81. [Google Scholar]

- Cont, A.; Dubnov, S.; Assayag, G. On the information geometry of audio streams with applications to similarity computing. IEEE Trans. Audio Speech Lang. Process. 2010, 19, 837–846. [Google Scholar] [CrossRef]

- Mazumdar, A.; Polyanskiy, Y.; Saha, B. On Chebyshev radius of a set in hamming space and the closest string problem. In Proceedings of the 2013 IEEE International Symposium on Information Theory, Istanbul, Turkey, 7–12 July 2013; pp. 1401–1405. [Google Scholar]

- Welzl, E. Smallest enclosing disks (balls and ellipsoids). In New Results and New Trends in Computer Science; Springer: Berlin/Heidelberg, Germany, 1991; pp. 359–370. [Google Scholar]

- Nielsen, F.; Hadjeres, G. Approximating covering and minimum enclosing balls in hyperbolic geometry. In Proceedings of the International Conference on Geometric Science of Information, Palaiseau, France, 28–30 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 586–594. [Google Scholar]

- Lang, S. Math Talks for Undergraduates; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2012. [Google Scholar]

- Nielsen, F.; Bhatia, R. Matrix Information Geometry; Springer: Berlin/Heidelberg, Germany, 2013. [Google Scholar]

- Arnaudon, M.; Nielsen, F. On approximating the Riemannian 1-center. Comput. Geom. 2013, 46, 93–104. [Google Scholar] [CrossRef]

- Badoiu, M.; Clarkson, K.L. Smaller core-sets for balls. In Proceedings of the Fourteenth Annual ACM-SIAM Symposium on Discrete Algorithms, Baltimore, MD, USA, 12–14 January 2003; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2003; pp. 801–802. [Google Scholar]

- Tsang, I.H.; Kwok, J.Y.; Zurada, J.M. Generalized core vector machines. IEEE Trans. Neural Netw. 2006, 17, 1126–1140. [Google Scholar] [CrossRef] [PubMed]

- Paulsen, V.I.; Raghupathi, M. An Introduction to the Theory of Reproducing Kernel Hilbert Spaces; Cambridge University Press: Cambridge, UK, 2016; Volume 152. [Google Scholar]

- Nielsen, F.; Nock, R. Fast (1+ϵ)-Approximation of the Löwner Extremal Matrices of High-Dimensional Symmetric Matrices. In Computational Information Geometry; Springer: Berlin/Heidelberg, Germany, 2017; pp. 121–132. [Google Scholar]

- Moakher, M. A differential geometric approach to the geometric mean of symmetric positive-definite matrices. SIAM J. Matrix Anal. Appl. 2005, 26, 735–747. [Google Scholar] [CrossRef]

- Niculescu, C.; Persson, L.E. Convex Functions and Their Applications: A Contemporary Approach, 2nd ed.; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Lanczos, C. An Iteration Method for the Solution of the Eigenvalue Problem of Linear Differential and Integral Operators1. J. Res. Natl. Bur. Stand. 1950, 45, 255–282. [Google Scholar] [CrossRef]

- Cullum, J.K.; Willoughby, R.A. Lanczos Algorithms for Large Symmetric Eigenvalue Computations: Vol. 1: Theory; SIAM: Philadelphia, PA, USA, 2002; Volume 41. [Google Scholar]

- Cannon, J.W.; Floyd, W.J.; Kenyon, R.; Parry, W.R. Hyperbolic geometry. Flavors Geom. 1997, 31, 59–115. [Google Scholar]

- Goldman, W.M. Complex Hyperbolic Geometry; Oxford University Press: Oxford, UK, 1999. [Google Scholar]

- Amari, S.I. Information Geometry and Its Applications; Springer: Berlin/Heidelberg, Germany, 2016; Volume 194. [Google Scholar]

- Nielsen, F. An elementary introduction to information geometry. arXiv 2018, arXiv:1808.08271. [Google Scholar]

- Ratcliffe, J.G. Foundations of Hyperbolic Manifolds; Springer: Berlin/Heidelberg, Germany, 1994; Volume 3. [Google Scholar]

- Jin, M.; Gu, X.; He, Y.; Wang, Y. Conformal Geometry: Computational Algorithms and Engineering Applications; Springer: Berlin/Heidelberg, Germany, 2018. [Google Scholar]

- Hotelling, H. Spaces of statistical parameters. Bull. Am. Math. Soc. 1930, 36, 191. [Google Scholar]

- Rao, C.R. Information and accuracy attainable in the estimation of statistical parameters. Bull Calcutta. Math. Soc. 1945, 37, 81–91. [Google Scholar]

- Nielsen, F. Cramér-Rao lower bound and information geometry. In Connected at Infinity II; Springer: Berlin/Heidelberg, Germany, 2013; pp. 18–37. [Google Scholar]

- Sun, K.; Nielsen, F. Relative Fisher information and natural gradient for learning large modular models. In Proceedings of the International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 3289–3298. [Google Scholar]

- Komaki, F. Bayesian prediction based on a class of shrinkage priors for location-scale models. Ann. Inst. Stat. Math. 2007, 59, 135–146. [Google Scholar] [CrossRef]

- Namikawa, Y. The Siegel upperhalf plane and the symplectic group. In Toroidal Compactification of Siegel Spaces; Springer: Berlin/Heidelberg, Germany, 1980; pp. 1–6. [Google Scholar]

- Riemann, B. Theorie der Abel’schen Functionen. J. Für Die Reine Und Angew. Math. 1857, 54, 101–155. [Google Scholar]

- Riemann, B. Über das Verschwinden der Theta-Functionen. Borchardt’s [= Crelle’s] J. Für Reine Und Angew. Math. 1865, 65, 214–224. [Google Scholar]

- Swierczewski, C.; Deconinck, B. Computing Riemann Theta functions in Sage with applications. Math. Comput. Simul. 2016, 127, 263–272. [Google Scholar] [CrossRef]

- Agostini, D.; Chua, L. Computing Theta Functions with Julia. arXiv 2019, arXiv:1906.06507. [Google Scholar]

- Agostini, D.; Améndola, C. Discrete Gaussian distributions via theta functions. SIAM J. Appl. Algebra Geom. 2019, 3, 1–30. [Google Scholar] [CrossRef]

- Bucy, R.; Williams, B. A matrix cross ratio theorem for the Riccati equation. Comput. Math. Appl. 1993, 26, 9–20. [Google Scholar] [CrossRef]

- Mackey, D.S.; Mackey, N. On the Determinant of Symplectic Matrices; Manchester Centre for Computational Mathematics: Manchester, UK, 2003. [Google Scholar]

- Rim, D. An elementary proof that symplectic matrices have determinant one. Adv. Dyn. Syst. Appl. (ADSA) 2017, 12, 15–20. [Google Scholar]

- Hua, L.K. Geometries of matrices. II. Study of involutions in the geometry of symmetric matrices. Trans. Am. Math. Soc. 1947, 61, 193–228. [Google Scholar] [CrossRef]

- Wan, Z.; Hua, L. Geometry of matrices; World Scientific: Singapore, 1996. [Google Scholar]

- Clerc, J.L. Geometry of the Shilov boundary of a bounded symmetric domain. In Proceedings of the Tenth International Conference on Geometry, Integrability and Quantization, Varna, Bulgaria, 6–11 June 2008; Institute of Biophysics and Biomedical Engineering, Bulgarian Academy: Varna, Bulgaria, 2009; pp. 11–55. [Google Scholar]

- Freitas, P.J.; Friedland, S. Revisiting the Siegel upper half plane II. Linear Algebra Its Appl. 2004, 376, 45–67. [Google Scholar] [CrossRef][Green Version]

- Bassanelli, G. On horospheres and holomorphic endomorfisms of the Siegel disc. Rend. Del Semin. Mat. Della Univ. Padova 1983, 70, 147–165. [Google Scholar]