Hybrid Harmony Search–Artificial Intelligence Models in Credit Scoring

Abstract

1. Introduction

2. Harmony Search to Modified Harmony Search

2.1. Harmony Search

1. Definition of the objective function and the required parameters. The parameters are the Harmony Memory Size (HMS), Harmony Memory Considering Rate (HMCR), Pitch-Adjusting Rate (PAR), bandwidth (bw), and the maximum number of iterations.

2. Initialization of the Harmony Memory (HM). HM consists of HMS candidate solutions, each with n dimensions, where n is the number of decision variables. All candidate solutions in HM are generated from a uniform distribution over the range of each decision variable.

3. Improvisation to generate a new harmony. There are two main operators, i.e., HMCR to control the exploration and PAR to control the exploitation of the search process. HMCR is the probability of selecting a new harmony from HM, while its counterpart (1 − HMCR) is the probability of randomly generating a new harmony. A low HMCR indicates high explorative power, because the search process continuously generates new harmonies from outside HM by exploring different search spaces. PAR is the probability of improvising the selected harmony from HM by moving to a neighbouring value with a step size of bw (for continuous variables) or one step to the left or right (for discrete variables). A low PAR indicates low power to conduct local exploitation of the harmony around its neighbourhood.

4. Update HM. The new harmony from Step 3 is evaluated against the fitness function. The worst solution in HM is replaced with the new harmony if the new harmony has a better fitness value.

5. Termination. Repeat Steps 3 and 4 until the maximum number of iterations has been reached.
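The five steps above can be sketched in a few lines of Python. This is a minimal illustration for continuous variables and a maximization problem, not the implementation used in the paper: the function name `harmony_search`, the default parameter values, the per-variable memory consideration, and the clipping of adjusted values to the variable range are all assumptions made for the example.

```python
import random

def harmony_search(fitness, bounds, hms=10, hmcr=0.9, par=0.3, bw=0.1,
                   max_iter=200, seed=0):
    """Maximise `fitness` over continuous variables constrained by `bounds`."""
    rng = random.Random(seed)
    # Step 2: fill HM with uniform samples from each variable's range.
    hm = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(hms)]
    scores = [fitness(h) for h in hm]
    for _ in range(max_iter):
        # Step 3: improvise a new harmony, one decision variable at a time.
        new = []
        for j, (lo, hi) in enumerate(bounds):
            if rng.random() < hmcr:
                value = rng.choice(hm)[j]            # memory consideration
                if rng.random() < par:
                    value += rng.uniform(-1, 1) * bw  # pitch adjustment
                value = min(max(value, lo), hi)       # assumed: clip to range
            else:
                value = rng.uniform(lo, hi)           # random generation
            new.append(value)
        # Step 4: replace the worst harmony if the new one is better.
        new_score = fitness(new)
        worst = min(range(hms), key=scores.__getitem__)
        if new_score > scores[worst]:
            hm[worst], scores[worst] = new, new_score
    # Step 5: the loop ends after max_iter iterations.
    best = max(range(hms), key=scores.__getitem__)
    return hm[best], scores[best]
```

Because only the worst member of HM is ever replaced, the best fitness stored in HM cannot decrease from one iteration to the next.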

2.2. Numerical Experiment Part I: Potential of HS Compared to GS

2.3. Numerical Experiment Part II: Intuition to Develop MHS Hybrid Models

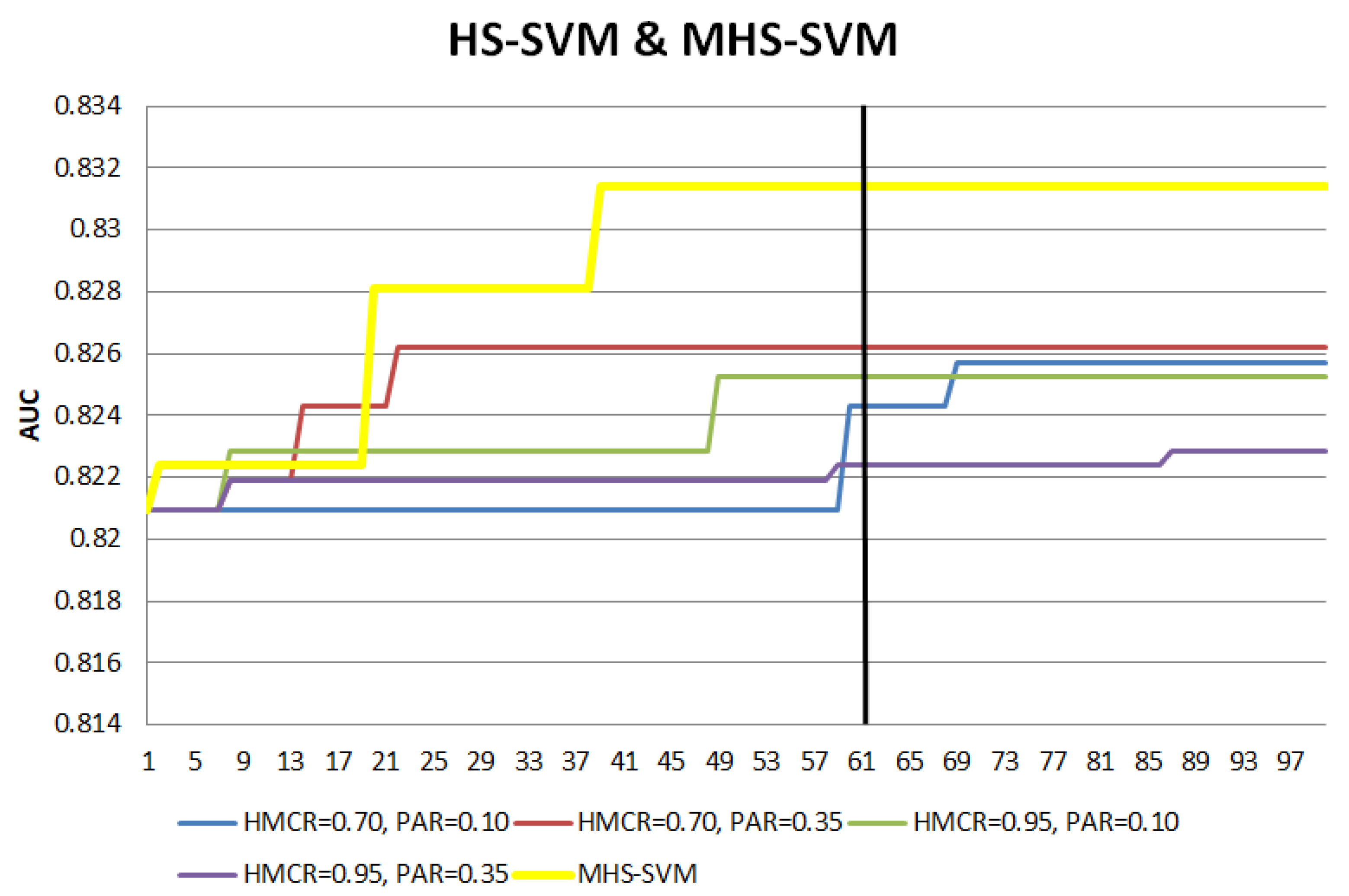

2.3.1. Search Patterns of HS-SVM and HS-RF

- Elitism selection during memory consideration. The selection of a new harmony is no longer a random selection from HM, but aims to select a better-quality harmony. Elitism selection leads the search process to focus on better-quality candidates, thus enabling faster convergence. Harmony vectors in HM are divided into two groups, elite and non-elite, where the elite group consists of harmony vectors with better performance than the non-elite group.

  Each harmony vector in HM takes an index number from the sequence 1, 2, ..., HMS. Since HM is sorted from best to worst performance, harmony vectors with lower index numbers are the candidates for the elite group. The first quartile of the index sequence is computed as in Equation (1), with decimal values rounded up because index values are discrete. The computed first quartile is the cutoff that divides HM into the elite and non-elite groups.

  An extra parameter, the probability of selecting from the elite group, is included to allocate a proper weight to the elite group, so that the selected new harmony has a higher probability of originating from the elite group. With this probability, a new harmony is selected from the elite group. Otherwise, two harmonies are picked from the non-elite group and the better of the two becomes the new harmony.

  By doing this, the selection always favours a better harmony. Note that a low-quality harmony, when combined with another harmony or adjusted, may also produce a good harmony. Thus, the elite selection probability cannot be too high, so as to keep a balance between selecting from the elite and non-elite groups. The detailed selection process is illustrated in Algorithm 1.

Algorithm 1, selection( ): divide HM into the elite and non-elite groups using the cutoff from Equation (1). If a random draw falls within the elite selection probability, return a harmony from the elite group; else, pick two harmonies from the non-elite group and, if the first has the better fitness, return the first, else return the second.

- Dynamic HMCR and PAR with step function. The numerical experiment involves repeated trials with different combinations of HMCR and PAR for the hybrid HS models. The competitive results across the different combinations indicate that the range recommended by [34] is appropriate for both operators. Along with the elitism selection, it is important to ensure sufficient exploration and exploitation of the search process before convergence. Thus, HMCR and PAR are made dynamic, following an increasing and a decreasing step function, respectively.

  The increasing step function of HMCR and the decreasing step function of PAR work together to balance exploration and exploitation. Initially, a lower HMCR provides an active global search to explore the search area, and it works along with a higher PAR that provides an active local search to exploit the neighbourhood of the search area. Thus, in the early stage of the search procedure, HM consists of candidates scattered around the search area, with their neighbourhoods well exploited. Following the step function, the global exploration decreases and the search focuses on the area stored in HM, so that local exploitation concentrates on this specific area. The dynamic settings of HMCR and PAR enable effective determination of the appropriate search area, leading to more efficient convergence towards the final solutions.

  In utilizing the step function, several components, i.e., the HMCR range, the PAR range, the increment, the decrement, and the step size, have to be determined. Based on the numerical experiment conducted earlier, the ranges of the operators are set to the recommended range. The interval for the increment of HMCR and the decrement of PAR is set at 0.05, as this small interval is sufficient to cover the whole range of both operators.

  The step size determines the number of iterations for which HMCR and PAR hold a value before shifting to the next value in the range, until both operators reach a plateau. The setting of the step size depends on the size of the search range, with a smaller step size preferable because the main aim is faster convergence with active exploration and exploitation in the early stage of the search. Thus, the step size is set so that both HMCR and PAR reach a plateau within the first half of the total iterations. For the numerical experiment, MHS-RF uses a smaller step size than MHS-SVM because its discrete search space is smaller than the continuous search space of MHS-SVM.

- Self-adjusted bw. The bw is an assisting tool for pitch adjustment and affects local exploitation. We suggest replacing bw with the coefficient of variation (Equation (2)) of the decision variable, which is automatically updated in every iteration, thus enabling possible early convergence. This modification applies only to continuous decision variables, as bw is not required for the adjustment of discrete decision variables.

  This intuition comes from several past studies [35,36,37] that proposed improved HS variants with a modified bw. These modifications suggest that a dynamic bw should converge to smaller values as the iterations of the search process increase. Reference [35] recommended the standard deviation as an appropriate replacement for bw. Reference [37] also used the standard deviation to replace bw, with an additional constant attached to control the local exploitation. Hence, using the coefficient of variation in place of bw is an appropriate strategy, because dividing the standard deviation by the mean is perceived as equivalent to the attached constant in [37], yet has the benefit of being automatically updated in every iteration. Besides, the coefficient of variation provides an effective scaling that keeps the search within an appropriate range. As the iterations increase, the solutions in HM converge, causing the coefficient of variation to converge to smaller values.

- Additional termination criteria. The termination criteria used in this study are the maximum number of iterations, convergence of HM, and non-improvement of the best solution for a fixed number of consecutive iterations. Since the previous three modifications open up the possibility of faster convergence, the latter two criteria are included to avoid redundant iterations and save computational effort. The MHS procedure stops when any one of the criteria is met.
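The first three modifications can be illustrated with a short Python sketch. Equations (1), (2), and (5) are not reproduced in this section, so the formulas below are assumptions: the first-quartile position is taken as (HMS + 1)/4 rounded up, the step function is a plain staircase with a fixed increment, and the coefficient of variation uses the population standard deviation. The names `elite_cutoff`, `select_harmony`, `step_value`, `cv_bandwidth`, and `p_elite` are hypothetical, and the two non-elite harmonies are assumed to be drawn without replacement.

```python
import math
import random
import statistics

def elite_cutoff(hms):
    """Assumed form of Equation (1): first quartile of 1..hms, rounded up."""
    return math.ceil((hms + 1) / 4)

def select_harmony(hm_sorted, p_elite, rng):
    """Pick a harmony from HM given as (fitness, vector) pairs, best first.

    Requires at least two non-elite members (hms >= 3 under the assumed cutoff).
    """
    cut = elite_cutoff(len(hm_sorted))
    elite, non_elite = hm_sorted[:cut], hm_sorted[cut:]
    if rng.random() < p_elite:
        return rng.choice(elite)[1]
    # Otherwise draw two non-elite harmonies and keep the fitter one.
    first, second = rng.sample(non_elite, 2)
    return max(first, second, key=lambda fv: fv[0])[1]

def step_value(iteration, start, stop, increment, step_size):
    """Staircase from start towards stop, changing every step_size iterations."""
    steps_taken = iteration // step_size
    if stop >= start:
        return round(min(start + steps_taken * increment, stop), 10)
    return round(max(start - steps_taken * increment, stop), 10)

def cv_bandwidth(values):
    """Coefficient of variation of one decision variable across HM."""
    mean = statistics.fmean(values)
    return statistics.pstdev(values) / abs(mean) if mean else 0.0
```

With a range of 0.70 to 0.95, an increment of 0.05, and a step size of 20, `step_value` reaches its plateau at iteration 100, which is well inside the first half of a 1000-iteration run.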

2.3.2. Potential of MHS-SVM and MHS-RF

3. Hybrid Models

3.1. HS-SVM and MHS-SVM

- Step 1:

- Define the objective function and parameters of HS and MHS. The objective function is to maximize the AUC of the SVM classification function with three decision variables. The first decision variable is a binary (0,1) string of length a (the number of features in the dataset), and the second and third decision variables correspond to the search ranges of the two SVM hyperparameters [38]. The detailed parameter settings are given in Section 4.3.

- Step 2:

- Initialization of Harmony Memory. Each harmony vector in HM has three decision variables. Each decision variable is randomly initialized as in Equation (4). Every harmony vector is then evaluated with the fitness function, and HM is sorted from best to worst. This step is the same for HS-SVM and MHS-SVM.

- Step 3:

- Improvisation. With probability HMCR, a new harmony is selected from HM. The selected harmony is adjusted to the neighbouring values with probability PAR. The two continuous variables, the hyperparameters of SVM, are adjusted to neighbouring values of width bw. With probability (1 − HMCR), a new harmony vector is generated as in Equation (4).

  However, for the first decision variable (the features), the PAR operator acts as a flipping agent. When it is activated, the selected features are flipped from 1 to 0 or vice versa. Note that not every feature is flipped, as the aim is to adjust the harmony rather than randomize it. The higher the fraction of features flipped, the more randomized the harmony becomes, so that the operation resembles exploration instead of exploitation of the features and results in higher computational effort as the search process continuously explores other search spaces. Flipping more than half of the features is considered high randomization. On the other hand, the lower the fraction of features flipped, the less the harmony is exploited. To preserve the role of the operator as an exploitation tool, the midpoint between zero and half of the features is selected. Thus, only a quarter of the features is flipped. This is controlled by a random vector that generates the feature numbers to be flipped.

  MHS-SVM has the three modifications, i.e., dynamic HMCR and PAR following the step function in Equation (5), elitism selection, and replacement of bw with the coefficient of variation.

  The improvisation procedures for HS-SVM and MHS-SVM are summarized in Algorithms 2 and 3, respectively.

- Step 4:

- Update HM by replacing the worst solution in HM with the new harmony if it has a better fitness value. This procedure is the same for HS-SVM and MHS-SVM.

- Step 5:

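- Repeat Steps 3 and 4 until the maximum number of iterations is reached for HS-SVM. MHS-SVM also stops when either of the two additional criteria is met, i.e., HM converges or the limit on consecutive non-improving iterations is reached.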

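| Algorithm 2: Improvisation of HS-SVM |
|---|
| if rand ≤ HMCR: select the feature string from HM |
| if rand ≤ PAR: flip a quarter of the selected features |
| else (rand > HMCR): generate a new feature string as in Equation (4) |
| if rand ≤ HMCR: select the first SVM hyperparameter from HM |
| if rand ≤ PAR: adjust it to a neighbouring value of width bw |
| else (rand > HMCR): generate a new value as in Equation (4) |
| if rand ≤ HMCR: select the second SVM hyperparameter from HM |
| if rand ≤ PAR: adjust it to a neighbouring value of width bw |
| else (rand > HMCR): generate a new value as in Equation (4) |

| Algorithm 3: Improvisation of MHS-SVM |
|---|
| update HMCR with the increasing step function (a) |
| update PAR with the decreasing step function (b) |
| if rand ≤ HMCR: |
| select the feature string with selection( ) (c) |
| if rand ≤ PAR: flip a quarter of the selected features |
| else (rand > HMCR): generate a new feature string as in Equation (4) |
| if rand ≤ HMCR: |
| select the first SVM hyperparameter with selection( ) (c) |
| if rand ≤ PAR: |
| adjust it using the coefficient of variation in place of bw (d) |
| else (rand > HMCR): generate a new value as in Equation (4) |
| if rand ≤ HMCR: |
| select the second SVM hyperparameter with selection( ) (c) |
| if rand ≤ PAR: |
| adjust it using the coefficient of variation in place of bw (d) |
| else (rand > HMCR): generate a new value as in Equation (4) |
| Note: (a), (b): refer to Equation (5); (c): refer to Algorithm 1; (d): refer to Equation (2) |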
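The flipping behaviour of PAR on the feature string, as described in Step 3, can be sketched as follows. The helper name `flip_quarter` is hypothetical, and rounding the quarter down (with at least one feature flipped) is an assumption, since the text does not say how a non-integer quarter is handled.

```python
import random

def flip_quarter(features, rng):
    """Return a copy of a 0/1 feature string with a quarter of its bits flipped."""
    n_flip = max(1, len(features) // 4)   # assumed rounding rule
    # Random vector of feature positions to flip, drawn without repetition.
    positions = rng.sample(range(len(features)), n_flip)
    flipped = list(features)
    for p in positions:
        flipped[p] = 1 - flipped[p]
    return flipped
```

For a dataset with 20 features, for instance, this flips exactly five positions and leaves the other fifteen as selected from HM.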

3.2. HS-RF and MHS-RF

- Step 1:

- Define the objective function and parameters of HS and MHS. The objective function is the RF classification function with two decision variables that correspond to the two RF hyperparameters. The search range for the first hyperparameter is a set of discrete values, which are then converted to the corresponding hundreds. This search range is selected because it is often attempted by researchers. The search range of the second decision variable is a set of discrete values defined in terms of a, the total number of attributes available. This search range is chosen because the hyperparameter is the random subset of variables drawn from the total available attributes. The detailed parameters are given in Section 4.3.

- Step 2:

- Initialization of Harmony Memory. Each harmony vector in HM has two decision variables. Since the decision variables for RF are discrete, the harmony vectors are sampled directly from the search ranges in Step 1. Every harmony vector is then evaluated with the fitness function, and HM is sorted from best to worst. This step is the same for HS-RF and MHS-RF.

- Step 3:

- Improvisation. With probability HMCR, a new harmony is selected from HM. The selected harmony is then adjusted to the neighbouring values with probability PAR. As there are only discrete variables, the new harmony is adjusted directly to the left or right, and bw is not required. Hence, only two modifications are involved in MHS-RF, i.e., dynamic HMCR and PAR following Equation (6), and the elitism selection.

  The improvisation procedures for HS-RF and MHS-RF are summarized in Algorithms 4 and 5, respectively.

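| Algorithm 4: Improvisation of HS-RF |
|---|
| for i in (1:2) |
| if rand ≤ HMCR: select the i-th decision variable from HM |
| if rand ≤ PAR: adjust it one step to the left or right |
| else (rand > HMCR): sample a new value from the search range |

| Algorithm 5: Improvisation of MHS-RF |
|---|
| update HMCR with the increasing step function (a) |
| update PAR with the decreasing step function (b) |
| for i in (1:2) |
| if rand ≤ HMCR: |
| select the i-th decision variable with selection( ) (c) |
| if rand ≤ PAR: adjust it one step to the left or right |
| else (rand > HMCR): sample a new value from the search range |
| Note: (a), (b): refer to Equation (6); (c): refer to Algorithm 1 |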

- Step 4:

- Update HM by evaluating the fitness of the new harmony and comparing it with that of the worst harmony in HM. Replace the worst harmony if the new harmony has a better fitness value. This procedure is the same for both HS-RF and MHS-RF.

- Step 5:

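- Repeat Steps 3 and 4 until the maximum number of iterations is reached for HS-RF. MHS-RF also stops when either of the two additional criteria is met, i.e., HM converges or the limit on consecutive non-improving iterations is reached.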
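The discrete pitch adjustment used for the RF hyperparameters in Step 3 can be sketched as a move of one position along an ordered search range. The helper name `adjust_discrete` and the example range are hypothetical, and keeping the value at the boundary when a move would leave the range is an assumption not stated in the text.

```python
import random

def adjust_discrete(value, search_range, rng):
    """Move one step left or right within an ordered discrete search range."""
    i = search_range.index(value)
    j = i + rng.choice((-1, 1))
    # Assumed boundary rule: stay at the edge instead of leaving the range.
    j = min(max(j, 0), len(search_range) - 1)
    return search_range[j]
```

No bw is involved: the neighbourhood of a discrete value is simply its immediate left and right neighbours in the search range.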

3.3. Parallel Computing

| Algorithm 6 |
|---|
| Master: Data preparation and partitioning |
| do_parallel |
| for i in (1:10) |
| Slave: Steps 1–5 of MHS-RF |
| return AUC, ACC, ACC* |
| Master: mean(AUC), mean(ACC), mean(ACC*) |
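The master/worker pattern of Algorithm 6 can be sketched with Python's standard library. This is an illustration only: `cross_validate_parallel` and `evaluate_fold` are hypothetical names, `evaluate_fold` stands in for Steps 1–5 of MHS-RF on one fold, and a thread pool is used to keep the example short. For CPU-bound model training, `ProcessPoolExecutor` offers the same `map` interface, provided `evaluate_fold` is a top-level function. The sketch averages whatever measures `evaluate_fold` returns.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import fmean

def cross_validate_parallel(evaluate_fold, n_folds=10, workers=4):
    """Run evaluate_fold(i) for i = 1..n_folds concurrently and average each measure."""
    # Workers: each call evaluates one fold and returns a dict of measures.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(evaluate_fold, range(1, n_folds + 1)))
    # Master: average every returned measure over the folds.
    return {name: fmean(r[name] for r in results) for name in results[0]}
```

Since the folds are independent of each other, the order in which the workers finish does not affect the averaged measures.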

4. Experimental Setup

4.1. Credit Datasets Preparation

4.2. Performance Measures

4.3. Models Setup

5. Results and Discussions

5.1. Model Performances

5.2. Model Explainability

5.3. Computational Time

6. Conclusions and Future Directions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| ABC | Artificial Bee Colony |

| ACC | Accuracy |

| AF | Average Frequency |

| AI | Artificial Intelligence |

| AUC | Area Under Receiver Operating Characteristics |

| GA | Genetic Algorithm |

| GS | Grid Search |

| HM | Harmony Memory |

| HMCR | Harmony Memory Considering Rate |

| HMS | Harmony Memory Size |

| HS | Harmony Search |

| LDA | Linear Discriminant Analysis |

| LOGIT | Logistic Regression |

| MA | Metaheuristic Algorithm |

| mDA | mean Decrease Accuracy |

| mGI | mean decrease in Gini Impurity |

| MHS | Modified Harmony Search |

| PAR | Pitch-Adjusting Rate |

| PSO | Particle Swarm Optimization |

| RF | Random Forest |

| SEN | Sensitivity |

| SPE | Specificity |

| STEP | Backward Stepwise Logistic Regression |

| SVM | Support Vector Machine |

References

1. Baesens, B.; Van Gestel, T.; Viaene, S.; Stepanova, M.; Suykens, J.; Vanthienen, J. Benchmarking state-of-the-art classification algorithms for credit scoring. J. Oper. Res. Soc. 2003, 54, 627–635.

2. Lessmann, S.; Baesens, B.; Seow, H.V.; Thomas, L.C. Benchmarking state-of-the-art classification algorithms for credit scoring: An update of research. Eur. J. Oper. Res. 2015, 247, 124–136.

3. Goh, R.Y.; Lee, L.S. Credit scoring: A review on support vector machines and metaheuristic approaches. Adv. Oper. Res. 2019, 2019, 30.

4. Yu, L.; Yao, X.; Wang, S.; Lai, K.K. Credit risk evaluation using a weighted least squares SVM classifier with design of experiment for parameter selection. Expert Syst. Appl. 2011, 38, 15392–15399.

5. Zhou, L.; Lai, K.K.; Yu, L. Credit scoring using support vector machines with direct search for parameters selection. Int. J. Inf. Technol. Decis. Mak. 2009, 13, 149–155.

6. Danenas, P.; Garsva, G. Selection of support vector machines based classifiers for credit risk domain. Expert Syst. Appl. 2015, 42, 3194–3204.

7. Garsva, G.; Danenas, P. Particle swarm optimization for linear support vector machines based classifier selection. Nonlinear Anal. Model. Control 2014, 19, 26–42.

8. Hsu, F.J.; Chen, M.Y.; Chen, Y.C. The human-like intelligence with bio-inspired computing approach for credit ratings prediction. Neurocomputing 2018, 279, 11–18.

9. Gorter, D. Added Value of Machine Learning in Retail Credit Risk. Ph.D. Thesis, Financial Engineering and Management, University of Twente, Enschede, The Netherlands, 2017.

10. Óskarsdóttir, M.; Bravo, C.; Sarraute, C.; Vanthienen, J.; Baesens, B. The value of big data for credit scoring: Enhancing financial inclusion using mobile phone data and social network analytics. Appl. Soft Comput. 2019, 74, 26–39.

11. Van Sang, H.; Nam, N.H.; Nhan, N.D. A novel credit scoring prediction model based on feature selection approach and parallel random forest. Indian J. Sci. Technol. 2016, 9, 1–6.

12. Tang, L.; Cai, F.; Ouyang, Y. Applying a nonparametric random forest algorithm to assess the credit risk of the energy industry in China. Technol. Forecast. Soc. Chang. 2019, 144, 563–572.

13. Ye, X.; Dong, L.A.; Ma, D. Loan evaluation in P2P lending based on random forest optimized by genetic algorithm with profit score. Electron. Commer. Res. Appl. 2018, 32, 23–36.

14. Malekipirbazari, M.; Aksakalli, V. Risk assessment in social lending via random forests. Expert Syst. Appl. 2015, 42, 4621–4631.

15. Jiang, C.; Wang, Z.; Zhao, H. A prediction-driven mixture cure model and its application in credit scoring. Eur. J. Oper. Res. 2019, 277, 20–31.

16. He, H.; Zhang, W.; Zhang, S. A novel ensemble method for credit scoring: Adaption of different imbalance ratios. Expert Syst. Appl. 2018, 98, 105–117.

17. Jadhav, S.; He, H.; Jenkins, K. Information gain directed genetic algorithm wrapper feature selection for credit rating. Appl. Soft Comput. 2018, 69, 541–553.

18. Wang, D.; Zhang, Z.; Bai, R.; Mao, Y. A hybrid system with filter approach and multiple population genetic algorithm for feature selection in credit scoring. J. Comput. Appl. Math. 2018, 329, 307–321.

19. Huang, C.L.; Chen, M.C.; Wang, C.J. Credit scoring with a data mining approach based on support vector machines. Expert Syst. Appl. 2007, 33, 847–856.

20. Zhou, L.; Lai, K.K.; Yen, J. Credit scoring models with AUC maximization based on weighted SVM. Int. J. Inf. Technol. Decis. Mak. 2009, 8, 677–696.

21. Yeh, C.C.; Lin, F.; Hsu, C.Y. A hybrid KMV model, random forests and rough set theory approach for credit rating. Knowl.-Based Syst. 2012, 33, 166–172.

22. Huang, Y.F.; Wang, S.H. Movie genre classification using SVM with audio and video features. In International Conference on Active Media Technology; Springer: Berlin/Heidelberg, Germany, 2012; pp. 1–10.

23. Ceylan, O.; Taskın, G. SVM parameter selection based on harmony search with an application to hyperspectral image classification. In Proceedings of the 2016 24th Signal Processing and Communication Application Conference (SIU), Zonguldak, Turkey, 16–19 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 657–660.

24. Nekkaa, M.; Boughaci, D. Hybrid harmony search combined with stochastic local search for feature selection. Neural Process. Lett. 2016, 44, 199–220.

25. Sridharan, K.; Komarasamy, G. Sentiment classification using harmony random forest and harmony gradient boosting machine. Soft Comput. 2020, 24, 7451–7458.

26. Yusup, N.; Zain, A.M.; Latib, A.A. A review of harmony search algorithm-based feature selection method for classification. J. Phys. Conf. Ser. 2019, 1192, 012038.

27. Krishnavei, V.; Santhiya, K.G.; Ramesh, P.; Jaganathan, S. A credit scoring prediction model based on harmony search based 1-NN classifier feature selection approach. Int. J. Adv. Res. Sci. Eng. 2018, 7, 468–476.

28. Geem, Z.W.; Kim, J.H.; Loganathan, G.V. A new heuristic optimization algorithm: Harmony search. Simulation 2001, 76, 60–68.

29. Lahmiri, S.; Bekiros, S. Can machine learning approaches predict corporate bankruptcy? Evidence from a qualitative experimental design. Quant. Financ. 2019, 19, 1569–1577.

30. Peng, Y.; Wang, G.; Kou, G.; Shi, Y. An empirical study of classification algorithm evaluation for financial risk prediction. Appl. Soft Comput. 2011, 11, 2906–2915.

31. Zięba, M.; Tomczak, S.K.; Tomczak, J.M. Ensemble boosted trees with synthetic features generation in application to bankruptcy prediction. Expert Syst. Appl. 2016, 58, 93–101.

32. Lahmiri, S.; Bekiros, S.; Giakoumelou, A.; Bezzina, F. Performance assessment of ensemble learning systems in financial data classification. Intell. Syst. Account. Financ. Manag. 2020, 27, 3–9.

33. Drummond, C.; Holte, R.C. C4.5, class imbalance, and cost sensitivity: Why under-sampling beats over-sampling. In Workshop on Learning from Imbalanced Datasets II; Citeseer: Washington, DC, USA, 2003; Volume 11, pp. 1–8.

34. Yang, X.S. Harmony search as a metaheuristic algorithm. In Music-Inspired Harmony Search Algorithm: Theory and Applications; Geem, Z.W., Ed.; Springer: Berlin, Germany, 2009; Volume 191, pp. 1–14.

35. Mukhopadhyay, A.; Roy, A.; Das, S.; Das, S.; Abraham, A. Population-variance and explorative power of harmony search: An analysis. In Proceedings of the Third International Conference on Digital Information Management (ICDIM 2008), London, UK, 13–16 November 2008; IEEE: Piscataway, NJ, USA; pp. 775–781.

36. Kalivarapu, J.; Jain, S.; Bag, S. An improved harmony search algorithm with dynamically varying bandwidth. Eng. Optim. 2016, 48, 1091–1108.

37. Tuo, S.; Yong, L.; Deng, F.A. A novel harmony search algorithm based on teaching-learning strategies for 0-1 knapsack problems. Sci. World J. 2014, 637412.

38. Hsu, C.W.; Chang, C.C.; Lin, C.J. A Practical Guide to Support Vector Classification. Available online: http://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf (accessed on 2 February 2020).

| HMCR | PAR | HS-SVM AUC | HS-SVM Time (min) | HS-RF AUC | HS-RF Time (min) |
|---|---|---|---|---|---|
| 0.70 | 0.10 | 0.8177 | 4.4481 | 0.8256 | 10.2604 |
| 0.70 | 0.15 | 0.8175 | 4.6211 | 0.8271 | 10.0100 |
| 0.70 | 0.20 | 0.8200 | 4.4203 | 0.8272 | 10.8761 |
| 0.70 | 0.25 | 0.8186 | 4.5008 | 0.8305 | 10.9019 |
| 0.70 | 0.30 | 0.8181 | 4.5614 | 0.8252 | 10.4817 |
| 0.70 | 0.35 | 0.8196 | 4.5122 | 0.8254 | 11.0123 |
| 0.75 | 0.10 | 0.8170 | 4.3773 | 0.8255 | 11.1008 |
| 0.75 | 0.15 | 0.8173 | 4.3323 | 0.8251 | 10.4266 |
| 0.75 | 0.20 | 0.8204 | 4.6357 | 0.8281 | 10.8729 |
| 0.75 | 0.25 | 0.8191 | 4.3702 | 0.8279 | 9.7096 |
| 0.75 | 0.30 | 0.8190 | 4.4640 | 0.8259 | 10.6458 |
| 0.75 | 0.35 | 0.8193 | 4.4810 | 0.8267 | 10.7759 |
| 0.80 | 0.10 | 0.8164 | 4.5614 | 0.8252 | 11.3625 |
| 0.80 | 0.15 | 0.8173 | 4.5198 | 0.8258 | 11.2265 |
| 0.80 | 0.20 | 0.8196 | 4.5138 | 0.8271 | 9.9028 |
| 0.80 | 0.25 | 0.8193 | 4.4698 | 0.8300 | 9.9752 |
| 0.80 | 0.30 | 0.8186 | 4.5357 | 0.8263 | 10.6068 |
| 0.80 | 0.35 | 0.8189 | 4.5541 | 0.8298 | 9.9372 |
| 0.85 | 0.10 | 0.8163 | 4.3997 | 0.8252 | 11.7332 |
| 0.85 | 0.15 | 0.8179 | 4.4258 | 0.8256 | 11.2218 |
| 0.85 | 0.20 | 0.8190 | 4.3716 | 0.8241 | 10.6202 |
| 0.85 | 0.25 | 0.8163 | 4.5028 | 0.8273 | 10.5372 |
| 0.85 | 0.30 | 0.8158 | 4.6122 | 0.8265 | 10.9275 |
| 0.85 | 0.35 | 0.8152 | 4.6928 | 0.8252 | 10.9275 |
| 0.90 | 0.10 | 0.8170 | 4.3648 | 0.8276 | 11.2554 |
| 0.90 | 0.15 | 0.8184 | 4.3502 | 0.8262 | 11.5087 |
| 0.90 | 0.20 | 0.8193 | 4.3950 | 0.8244 | 10.9275 |
| 0.90 | 0.25 | 0.8130 | 4.6232 | 0.8275 | 10.6972 |
| 0.90 | 0.30 | 0.8138 | 4.4245 | 0.8279 | 10.8565 |
| 0.90 | 0.35 | 0.8139 | 4.4260 | 0.8258 | 11.2159 |
| 0.95 | 0.10 | 0.8154 | 4.6132 | 0.8256 | 10.8480 |
| 0.95 | 0.15 | 0.8145 | 4.2717 | 0.8217 | 11.1239 |
| 0.95 | 0.20 | 0.8147 | 4.5164 | 0.8244 | 11.6584 |
| 0.95 | 0.25 | 0.8133 | 4.6013 | 0.8282 | 11.3777 |
| 0.95 | 0.30 | 0.8152 | 4.4070 | 0.8277 | 11.1307 |
| 0.95 | 0.35 | 0.8152 | 4.4630 | 0.8275 | 12.3353 |
| mean | | 0.8172 | 4.4817 | 0.8265 | 10.8754 |
| sd | | 0.0021 | 0.1000 | 0.0018 | 0.5785 |

| Model | AUC | Time (min) |
|---|---|---|
| GS-SVM | 0.8078 | 23.9922 |
| HS-SVM * | 0.8172 | 4.4817 |
| GS-RF | 0.8214 | 9.0614 |
| HS-RF * | 0.8265 | 10.8754 |

| Model | AUC | Time (min) | Iterations |
|---|---|---|---|
| GS-SVM | 0.8078 | 23.9922 | 614 |
| HS-SVM * | 0.8172 | 4.4817 | 100 |
| MHS-SVM | 0.8197 | 3.1502 | 71 |
| GS-RF | 0.8214 | 9.0614 | 100 |
| HS-RF * | 0.8265 | 10.8754 | 100 |
| MHS-RF | 0.8261 | 5.2008 | 49 |

| Dataset | Instances | Categorical | Numerical | Default Rate |
|---|---|---|---|---|
| German | 1000 | 13 | 7 | 30% |
| Australian | 690 | 8 | 6 | 44.45% |
| LC | 9887 | 4 | 17 | 27.69% |

| Attributes | Type | Attributes | Type |
|---|---|---|---|
| loan_amnt | Numerical | last_credit_pull_d *** | Numerical |
| emp_length * | Numerical | acc_now_delinq | Numerical |
| annual_inc | Numerical | chargeoff_within_12mths | Numerical |
| dti | Numerical | delinq_amnt | Numerical |
| delinq_2_yrs | Numerical | pub_rec_bankruptcies | Numerical |
| earliest_cr_line ** | Numerical | tax_liens | Numerical |
| inq_last_6mths | Numerical | home_ownership | Categorical |
| open_acc | Numerical | verification_status | Categorical |
| pub_rec | Numerical | purpose | Categorical |
| revol_util | Numerical | initial_list_status | Categorical |
| total_acc | Numerical | | |

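| Model | Parameter | German | Australian | Lending Club |
|---|---|---|---|---|
| HS-SVM | HMS | 30 | 30 | 30 |
| | HMCR | 0.70 | 0.80 | 0.80 |
| | PAR | 0.30 | 0.10 | 0.30 |
| | bw | 0.10 | 0.10 | 0.10 |
| | Maximum iterations | 1000 | 1000 | 1000 |
| MHS-SVM | HMS | 30 | 30 | 30 |
| | Elite selection probability | 0.70 | 0.70 | 0.70 |
| | HMCR range | {0.70, 0.95} | {0.70, 0.95} | {0.70, 0.95} |
| | PAR range | {0.10, 0.35} | {0.10, 0.35} | {0.10, 0.35} |
| | Step size | 20 | 20 | 20 |
| | Maximum iterations | 1000 | 1000 | 1000 |
| | Consecutive non-improving iterations | 500 | 500 | 500 |
| HS-RF | HMS | 10 | 10 | 10 |
| | HMCR | 0.70 | 0.70 | 0.80 |
| | PAR | 0.30 | 0.30 | 0.10 |
| | Maximum iterations | 100 | 100 | 100 |
| MHS-RF | HMS | 10 | 10 | 10 |
| | Elite selection probability | 0.70 | 0.70 | 0.70 |
| | HMCR range | {0.70, 0.95} | {0.70, 0.95} | {0.70, 0.95} |
| | PAR range | {0.10, 0.35} | {0.10, 0.35} | {0.10, 0.35} |
| | Step size | 5 | 5 | 5 |
| | Maximum iterations | 100 | 100 | 100 |
| | Consecutive non-improving iterations | 25 | 25 | 25 |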

| Model | German AUC | German ACC | German F1 | Australian AUC | Australian ACC | Australian F1 | Lending Club AUC | Lending Club ACC | Lending Club F1 |
|---|---|---|---|---|---|---|---|---|---|
| LOGIT | 0.7989 | 0.7590 | 0.8356 | 0.9308 | 0.8725 | 0.8462 | 0.6257 | 0.7239 | 0.8386 |
| STEP | 0.7999 | 0.7620 | 0.8378 | 0.9321 | 0.8739 | 0.8550 | 0.6245 | 0.7238 | 0.8387 |
| LDA | 0.8008 | 0.7470 | 0.8365 | 0.9286 | 0.8623 | 0.8473 | 0.6231 | 0.7238 | 0.8390 |
| GS-SVM | 0.8006 | 0.7440 | 0.8315 | 0.9292 | 0.8536 | 0.8486 | 0.7168 | 0.7236 | 0.8393 |
| HS-SVM | 0.8015 | 0.7620 | 0.8424 | 0.9313 | 0.8639 | 0.8579 | 0.8278 | 0.8251 | 0.8841 |
| MHS-SVM | 0.8051 | 0.7620 | 0.8403 | 0.9310 | 0.8565 | 0.8524 | 0.8267 | 0.8203 | 0.8800 |
| GS-RF | 0.7999 | 0.7640 | 0.8448 | 0.9354 | 0.8723 | 0.8598 | 0.8670 | 0.8580 | 0.9068 |
| HS-RF | 0.8044 | 0.7640 | 0.8453 | 0.9366 | 0.8738 | 0.8614 | 0.8674 | 0.8571 | 0.9063 |
| MHS-RF | 0.8053 | 0.7560 | 0.8410 | 0.9356 | 0.8695 | 0.8556 | 0.8679 | 0.8572 | 0.9064 |
| | , (0.8224) | | | , (0.6633) | | | , (4.826e-13) | | |

| | LOGIT | STEP | LDA | GS-SVM | HS-SVM | MHS-SVM | GS-RF | HS-RF | MHS-RF |
|---|---|---|---|---|---|---|---|---|---|
| LOGIT | - | | | | | | | | |
| STEP | 1.31 × 10⁻¹ | - | | | | | | | |
| LDA | 8.40 × 10⁻² | 3.23 × 10⁻¹ | - | | | | | | |
| GS-SVM | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | - | | | | | |
| HS-SVM | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | - | | | | |
| MHS-SVM | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 3.23 × 10⁻¹ | - | | | |
| GS-RF | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | - | | |
| HS-RF | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 6.25 × 10⁻¹ | - | |
| MHS-RF | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 1.95 × 10⁻³ | 2.86 × 10⁻¹ | 4.41 × 10⁻¹ | - |

| Model | German AUC | German ACC | German F1 | German Rank | Australian AUC | Australian ACC | Australian F1 | Australian Rank | Lending Club AUC | Lending Club ACC | Lending Club F1 | Lending Club Rank | ORank |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LOGIT | 9 | 6 | 8 | 7.7 | 7 | 3 | 9 | 6.3 | 7 | 7 | 9 | 7.7 | 7.3 |
| STEP | 7.5 | 4 | 6 | 5.8 | 4 | 1 | 5 | 3.3 | 8 | 8.5 | 8 | 8.17 | 5.9 |
| LDA | 5 | 8 | 7 | 6.7 | 9 | 7 | 8 | 8 | 9 | 8.5 | 7 | 8.17 | 7.7 |
| GS-SVM | 6 | 9 | 9 | 8 | 8 | 9 | 7 | 8 | 6 | 6 | 6 | 6 | 6.4 |
| HS-SVM | 4 | 4 | 3 | 3.7 | 5 | 6 | 3 | 4.7 | 4 | 4 | 4 | 4 | 4.2 |
| MHS-SVM | 2 | 4 | 5 | 3.7 | 6 | 8 | 6 | 6.7 | 5 | 5 | 5 | 5 | 5.2 |
| GS-RF | 7.5 | 1.5 | 2 | 3.7 | 3 | 4 | 2 | 3 | 3 | 3 | 1 | 2.3 | 3.1 |
| HS-RF | 3 | 1.5 | 1 | 1.83 | 1 | 2 | 1 | 1.3 | 2 | 2 | 3 | 2.3 | 1.9 |
| MHS-RF | 1 | 7 | 4 | 4 | 2 | 5 | 4 | 3.7 | 1 | 1 | 2 | 1.3 | 3.1 |

| Model | German SEN | German SPE | Australian SEN | Australian SPE | Lending Club SEN | Lending Club SPE |
|---|---|---|---|---|---|---|
| LOGIT | 0.8757 | 0.4867 | 0.8678 | 0.8629 | 0.9919 | 0.0462 |
| STEP | 0.8786 | 0.4900 | 0.8848 | 0.8581 | 0.9930 | 0.0208 |
| LDA | 0.8743 | 0.4967 | 0.9165 | 0.8070 | 0.9952 | 0.0150 |
| GS-SVM | 0.9057 | 0.3667 | 0.9155 | 0.8043 | 0.9980 | 0.0069 |
| HS-SVM | 0.9086 | 0.4200 | 0.9185 | 0.8200 | 0.9229 | 0.5695 |
| MHS-SVM | 0.8957 | 0.4500 | 0.9252 | 0.8016 | 0.9130 | 0.5782 |
| GS-RF | 0.9187 | 0.4000 | 0.8699 | 0.8674 | 0.9555 | 0.6034 |
| HS-RF | 0.9229 | 0.3933 | 0.8634 | 0.8822 | 0.9564 | 0.5979 |
| MHS-RF | 0.9229 | 0.3667 | 0.8635 | 0.8746 | 0.9565 | 0.5979 |

| Study | Proposed Approach | Classifier | Database | Performance Measures |
|---|---|---|---|---|
| [33] | Comparison of undersampling and oversampling to solve class imbalance | C4.5 | 4 UCI datasets* (A) | expected cost |
| [30] | 3 MCDM methods to rank 9 techniques (Bayesian Network, Naive Bayes, SVM, LOGIT, k-nearest neighbour, C4.5, RIPPER, RBF network, ensemble) | Top three: LOGIT, Bayesian network, ensemble | 2 UCI datasets* (G,A) and credit datasets representing 4 other countries | ACC, AUC, SEN, SPE, precision |
| [31] | Tree-based ensembles with synthetic features for features ranking and performance improvement | Extreme Gradient Boosting to learn ensemble of decision trees | EMIS database (Polish company) | AUC |
| [32] | Performance assessment of 5 tree-based ensemble models | AdaBoost, LogitBoost, RUSBoost, Subspace, Bagging | 3 UCI datasets* (A) | error rate |
| [29] | Performance assessment of 4 neural network models | BPNN, PNN, RBFNN, GRNN with RT as benchmark | 1 UCI dataset | ACC, SEN, SPE |

| Data | Study | Model | ACC | AUC | SEN | SPE |
|---|---|---|---|---|---|---|
| Australian | [30] | LOGIT | 0.8623 | 0.9313 | 0.8590 | 0.8664 |
| | | Bayesian Network | 0.8522 | 0.9143 | 0.8656 | 0.7980 |
| | | Ensemble | 0.8551 | 0.9900 | 0.8773 | 0.8274 |
| | [32] | AdaBoost | 0.8725 | – | – | – |
| | | LogitBoost | 0.8696 | – | – | – |
| | | RUSBoost | 0.8551 | – | – | – |
| | | Subspace | 0.7667 | – | – | – |
| | | Bagging | 0.8939 | – | – | – |
| | This study | HS-SVM | 0.8639 | 0.9313 | 0.9185 | 0.8200 |
| | | MHS-SVM | 0.8565 | 0.9310 | 0.9252 | 0.8016 |
| | | HS-RF | 0.8738 | 0.9366 | 0.8634 | 0.8822 |
| | | MHS-RF | 0.8695 | 0.9356 | 0.8635 | 0.8746 |
| German | [30] | LOGIT | 0.7710 | 0.7919 | 0.8900 | 0.4933 |
| | | Bayesian Network | 0.7250 | 0.7410 | 0.8814 | 0.3600 |
| | | Ensemble | 0.7620 | 0.7980 | 0.8943 | 0.4533 |
| | This study | HS-SVM | 0.7620 | 0.8015 | 0.9086 | 0.4200 |
| | | MHS-SVM | 0.7620 | 0.8051 | 0.8957 | 0.4500 |
| | | HS-RF | 0.7640 | 0.8044 | 0.9229 | 0.3933 |
| | | MHS-RF | 0.7560 | 0.8053 | 0.9229 | 0.3667 |

| Model | German | Australian | Lending Club |
|---|---|---|---|
| STEP | 14.6 | 7 | 16.2 |
| HS-SVM | 14.9 | 8.4 | 5.8 |
| MHS-SVM | 13.9 | 9 | 6.1 |

| Model | German | Australian | Lending Club |
|---|---|---|---|
| LOGIT | 0.3698 s | 0.3624 s | 5.8037 s |
| STEP | 15.8467 s | 0.3822 s | 6.2699 min |
| LDA | 0.5280 s | 0.5339 s | 3.0937 s |
| GS-SVM | 49.3829 min | 20.1442 min | 3.6870 days |
| HS-SVM | 101.4499 min | 42.3236 min | 4.652 days |
| MHS-SVM | 36.8149 min | 17.7540 min | 1.4710 days |
| MHS-SVM (P) | 5.764 min | 3.405 min | 9.854 h |
| GS-RF | 49.4010 min | 15.8474 min | 1.9173 h |
| HS-RF | 57.0272 min | 30.0728 min | 2.3945 h |
| MHS-RF | 32.4922 min | 12.2331 min | 1.2369 h |
| MHS-RF (P) | 5.5027 min | 3.5496 min | 12.5525 min |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Goh, R.Y.; Lee, L.S.; Seow, H.-V.; Gopal, K. Hybrid Harmony Search–Artificial Intelligence Models in Credit Scoring. Entropy 2020, 22, 989. https://doi.org/10.3390/e22090989
