1. Introduction

Complex networks are natural representations of many real-world systems, such as social networks, computer networks, and protein interaction networks. Although complex networks have a long history of study, the sharp increase in related problems and data in recent years has driven much more extensive research on them. The small-world [1] and scale-free [2] properties, as well as high aggregation [3], are the most obvious characteristics of complex networks. The aggregation feature is often characterized by the community structure of a network [4,5]. In a complex network, a community is a group of nodes that are densely connected internally but sparsely connected to the rest of the network. Generally, nodes in the same community have similar functions or properties, whereas nodes in different communities tend to differ. For example, nodes in the same community of a social network may share a family, a career, or a hobby [6], while nodes in the same community of a protein–protein interaction network are likely to be proteins with similar functions [7]. Studying the community structure of a complex network therefore helps us understand both the nature of the network as a whole and the different functions of its local communities.

In a complex network, communities interact with one another, and an important form of such interaction is that different communities share some of the same nodes. We call these nodes overlapping nodes, and the communities that share them overlapping communities. Overlapping nodes and overlapping communities are widespread in real-world complex networks. For example, an individual may belong to multiple communities (e.g., families) in a social network; in a biomolecular network, different communities can represent different biological functions, and a gene or protein can participate in several of them; and in academia, a scholar often works in multiple fields. Because overlapping nodes belong to, and connect, multiple communities, they often play a pivotal role in information flow, so identifying them is an important research topic in complex network analysis. For example, Mengoni et al. studied community elicitation in a student population and found that the co-occurrence of people’s activities is an emerging epiphenomenon of hidden, implicit exchanges of information through side-channel communications [8]. Many researchers have also investigated the importance of overlapping nodes in epidemic spreading and developed immunization strategies accordingly [9,10,11].

In 2002, Girvan and Newman proposed the well-known Girvan–Newman (GN) algorithm [4], which defines the concept of edge betweenness and holds that edges within a community have smaller betweenness than edges between communities. Since then, many community detection algorithms have been proposed; details can be found in Liu et al.’s review of community mining in complex networks [12]. However, traditional non-overlapping community detection algorithms cannot be applied directly to overlapping community detection, so various overlapping community detection algorithms have been developed. These can be classified into seven categories: (1) clique percolation, (2) link partitioning, (3) local expansion and optimization, (4) fuzzy detection, (5) matrix (tensor)-based models, (6) statistical inference, and (7) label propagation. For a comprehensive overview, see [13,14].

The clique percolation method (CPM) assumes that the edges inside a community are densely interconnected, so cliques (complete subgraphs) form more easily within communities [15]. In CPM, a community consists of cliques that are strongly connected to one another, and a node is recognized as overlapping if it belongs to multiple cliques assigned to different communities [16]. Cui et al. extracted fully connected subgraphs using a maximal subgraph method [17].

Link partitioning [18,19] finds the community structure by partitioning edges rather than nodes. A node is labeled as overlapping if the links attached to it are placed in more than one cluster [20]. Arasteh and Alizadeh proposed a fast divisive community detection algorithm based on edge degree betweenness centrality [21].

A classical local expansion and optimization model is the local fitness model (LFM) [22], which starts from a random seed node and expands the community step by step until a fitness function is locally maximized. LFM then randomly selects a node that does not yet belong to any generated community as a new seed and repeats the expansion until every node belongs to one or more communities. Nodes belonging to multiple communities are considered overlapping nodes. Following the idea of LFM, greedy clique expansion (GCE) [23] uses maximal cliques as seeds. Guo et al. proposed a local community detection algorithm that extends the seed set based on the internal force between nodes [24]. Zhang et al. proposed a series of seed-extension-based overlapping community detection algorithms to reveal the role of node similarity and community merging in community detection [25]. Eustace et al. used a neighborhood ratio matrix to detect local communities [26]. Other local expansion and optimization models include OSLOM [27], Infomap [28], and Game [29].

Fuzzy detection [30] calculates the connection strength between each pair of nodes and between communities, and assigns a membership vector to each node. The dimension of the membership vectors must be determined; it can either be supplied as an algorithm parameter or calculated from the data.

Matrix (tensor)-based models represent the network structure as a matrix or tensor and, through matrix factorization [31] or tensor decomposition [32], yield more robust community identification in the presence of community mixing and overlap.

Statistical inference can effectively tackle the community detection problem and includes many useful methods, such as MMSB, AGM, and BIGCLAM. MMSB combines global parameters that instantiate dense patches of connectivity (blockmodel) with local parameters that instantiate node-specific variability in the connections (mixed membership), and can be used for overlapping community detection [33]. AGM is a community-affiliation graph model that builds on bipartite node–community affiliation networks [34]. BIGCLAM (cluster affiliation model for big networks) is an overlapping community detection method that scales to large networks with millions of nodes and edges [35].

The last widely used type of overlapping community detection algorithm is based on label propagation. Its main idea is to assign each node a label that represents its class and to propagate labels according to the network structure and the current label distribution until convergence. After propagation, nodes in the same community carry the same label. The classical label propagation algorithm (LPA) [36] was developed for non-overlapping community detection, and several groups have since extended it to overlapping community detection. For example, the community overlap propagation algorithm (COPRA) [37] allows each node to hold multiple labels, each associated with a belonging coefficient that indicates the strength of membership in the corresponding class. In contrast to COPRA, Xie et al. proposed another label propagation algorithm, which spreads labels among nodes during iterations and saves the previously received label information for each node [38]. Because this propagation process resembles speaker–listener communication, the algorithm is called the speaker–listener label propagation algorithm (SLPA). Le et al. proposed an improved network community detection method using meta-heuristic-based label propagation [39]. Based on SLPA, Gaiteri et al. proposed the SpeakEasy algorithm [40], which introduces global label distribution information into label propagation, so that label updates are no longer driven by local neighborhood label information alone.
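To make the label propagation idea above concrete, here is a minimal Python sketch of classical non-overlapping LPA: every node starts with its own ID as its label and repeatedly adopts the label that is most frequent among its neighbors. The adjacency-dict input, the function name `label_propagation`, the seeded random update order, the rule of keeping the current label when it is already among the most frequent (so that the loop terminates), and the `max_iter` cap are our assumptions, not details taken from [36].

```python
import random
from collections import Counter


def label_propagation(adj, max_iter=100, seed=0):
    """Minimal non-overlapping LPA sketch.

    adj: dict mapping each node to a list of its neighbours (undirected graph).
    Returns a dict node -> community label.
    """
    rng = random.Random(seed)
    labels = {v: v for v in adj}          # every node starts in its own community
    nodes = list(adj)
    for _ in range(max_iter):
        rng.shuffle(nodes)                # asynchronous updates in random order
        changed = False
        for v in nodes:
            if not adj[v]:
                continue                  # isolated node keeps its own label
            counts = Counter(labels[u] for u in adj[v])
            best = max(counts.values())
            candidates = [l for l, c in counts.items() if c == best]
            if labels[v] in candidates:
                continue                  # current label is already maximal: keep it
            labels[v] = rng.choice(candidates)
            changed = True
        if not changed:                   # converged: a full sweep changed nothing
            break
    return labels
```

Keeping the current label on ties is one common way to guarantee that sweeps eventually stop changing; other tie-breaking rules are possible and can change the result.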

SpeakEasy is suitable for several different kinds of networks and adapts better than previous algorithms. However, the threshold for identifying overlapping nodes is difficult to determine, which may result in poor recognition of overlapping nodes. Furthermore, SpeakEasy must generate community partitions many times to obtain a robust result, which is computationally expensive.

To address these weaknesses, this paper proposes a new overlapping community detection algorithm. Unlike existing label propagation algorithms, which propagate label information, our method propagates membership degrees, and it is therefore called the membership degree propagation algorithm (MDPA). The membership degree represents the probability that a node belongs to a potential community, which replaces SpeakEasy’s repeated sampling of community partitions. Our method also differs from COPRA, which propagates label information and attaches a belonging coefficient to each label. MDPA has been applied to Lancichinetti–Fortunato–Radicchi (LFR) artificial datasets and to nine commonly used real datasets. Numerical results show that MDPA greatly improves both the accuracy and the speed of overlapping node recognition. The main contributions of this paper are (1) the introduction of the membership degree concept, whereby each node’s buffer stores not only label information but also the degree to which the node belongs to each label; and (2) a significant reduction in computing cost, because MDPA does not need to rerun the algorithm to obtain the overlapping community partition.

3. Membership Degree Propagation Algorithm

In the SpeakEasy algorithm, the label propagation process must be repeated $N$ times, which greatly reduces efficiency on large networks. A more important problem is that the threshold for identifying overlapping nodes is difficult to choose, which often leads to an improper proportion of overlapping nodes. To address these problems, a new algorithm is proposed in this paper. Its main idea is to define for each node a membership degree vector representing how likely the node is to belong to each potential cluster, and then to propagate this vector instead of the labels used in existing label propagation methods. Hence, the algorithm is called the membership degree propagation algorithm (MDPA). It consists of three steps: (1) initialization, (2) membership degree propagation, and (3) community partition. The flowchart of MDPA is shown in Figure 3. Unlike the framework in Figure 2, MDPA has no outer loop, and overlapping node identification is merged into the community partition step. The details of the algorithm are given below.

3.1. Initialization Process

For simplicity, we discuss only undirected, unweighted graphs below; the method can easily be extended to directed or weighted graphs. Let $V = \{v_1, v_2, \ldots, v_n\}$ be the set of vertices (or nodes), and let $E$ be the set of edges, each edge being a pair of nodes $(x, y) \in V^2$ indicating that nodes $x$ and $y$ are connected. Our goal is to find an overlapping community partition of the graph $G = (V, E)$.

As in SpeakEasy and other existing label propagation algorithms, we construct a buffer for each node. The difference is that the buffer stores not only label information but also the membership degree of the node for each label, so each element of the buffer is a pair. Here, we define membership as the possibility that the current node belongs to a potential community. Thus, we denote the buffer of the $i$th node $v_i$ as:

$$b_i = \{(l_1, m_i^{l_1}), (l_2, m_i^{l_2}), \ldots, (l_k, m_i^{l_k})\}, \tag{1}$$

where $l_j$ represents a potential cluster of node $v_i$, and $m_i^{l_j}$ is the corresponding membership degree of node $v_i$ in cluster $l_j$, which should satisfy

$$\sum_{j=1}^{k} m_i^{l_j} = 1, \quad 0 < m_i^{l_j} \le 1, \quad k \le B. \tag{2}$$

The constant $B$ in Equation (2) represents the maximum number of potential clusters for each node and is set to 3 times the average node degree in our experiments.
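A buffer is essentially a small map from cluster labels to membership degrees. The sketch below assumes a Python dict for that map and uses a hypothetical helper name, `is_valid_buffer`, to check the constraints used throughout this section: at most $B$ entries, degrees summing to 1, and (as we assume here) each degree lying in $(0, 1]$.

```python
import math


def is_valid_buffer(buffer, B, tol=1e-9):
    """Check the MDPA buffer constraints.

    buffer: dict mapping a potential cluster label to its membership degree.
    B: maximum number of potential clusters per node.
    """
    if not 0 < len(buffer) <= B:                       # non-empty, at most B clusters
        return False
    if any(not (0 < m <= 1) for m in buffer.values()):  # every degree in (0, 1]
        return False
    return math.isclose(sum(buffer.values()), 1.0, abs_tol=tol)  # degrees sum to 1
```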

As in SpeakEasy, the ID numbers of all nodes initially serve as potential community labels. For each node, its own ID is first pushed into the label part of its buffer with a membership degree of $1/B$. Then, neighbor IDs are randomly selected $B-1$ times, and each selection adds $1/B$ to the membership degree of the selected ID. Note that if an ID is selected more than once, its membership degree exceeds $1/B$, and the buffer length is then less than $B$.

Figure 4a shows an initialization example for a simple network with seven nodes, where the parameter $B$ is set to 5.
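The initialization can be sketched in a few lines. This is a minimal sketch assuming an adjacency dict and dict-based buffers that reuse node IDs as labels; the function and parameter names are hypothetical, and giving an isolated node a buffer that holds only its own ID with membership 1 is our assumption, since the text does not cover that case.

```python
import random


def init_buffers(adj, B, seed=0):
    """Sketch of MDPA initialization.

    adj: dict node -> list of neighbour nodes (undirected, unweighted).
    B: buffer size (maximum number of potential clusters per node).
    Returns dict node -> {cluster label: membership degree}.
    """
    rng = random.Random(seed)
    buffers = {}
    for v, neighbours in adj.items():
        if not neighbours:
            buffers[v] = {v: 1.0}          # assumption: isolated node keeps only its own ID
            continue
        buf = {v: 1.0 / B}                 # own ID with membership 1/B
        for _ in range(B - 1):
            u = rng.choice(neighbours)     # random neighbour ID
            buf[u] = buf.get(u, 0.0) + 1.0 / B  # repeated picks accumulate membership
        buffers[v] = buf
    return buffers
```

A node with a single neighbor therefore ends up with two entries: its own ID at $1/B$ and the neighbor's ID at $(B-1)/B$.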

3.2. Membership Degree Propagation

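The main idea of the membership degree propagation process is to increase the membership degrees of clusters with a higher local distribution and a lower global distribution, and vice versa. For a cluster $c$, the global distribution is its frequency of appearance in the buffers of the entire network, which is calculated by:

$$g(c) = \frac{1}{n} \sum_{i=1}^{n} m_i^c, \tag{3}$$

where $m_i^c$ is the membership degree of node $v_i$ in cluster $c$ (zero if $c$ is not in its buffer). The local distribution of cluster $c$ for node $v_i$ is its frequency of occurrence in the buffers of the neighbors $N(v_i)$ of $v_i$, which is calculated by:

$$f_i(c) = \frac{1}{|N(v_i)|} \sum_{v_j \in N(v_i)} m_j^c. \tag{4}$$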

Taking Figure 4a as an example, the global distribution is listed in Table 1, and the local distribution of node $d$ is listed in Table 2.
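Both distributions can be computed directly from the buffers. In this sketch (hypothetical helper names, dict-based buffers), the frequency of a cluster is taken as its summed membership degree divided by the number of buffers involved. When every membership degree is a multiple of $1/B$, as right after initialization, this equals the fraction of buffer slots occupied by the cluster.

```python
from collections import defaultdict


def global_distribution(buffers):
    """g(c): average membership degree of cluster c over all node buffers.

    Because each buffer sums to 1, the values of g also sum to 1.
    """
    n = len(buffers)
    g = defaultdict(float)
    for buf in buffers.values():
        for c, m in buf.items():
            g[c] += m / n
    return dict(g)


def local_distribution(buffers, adj, v):
    """f_v(c): average membership degree of cluster c over the buffers of v's neighbours."""
    neighbours = adj[v]
    f = defaultdict(float)
    for u in neighbours:
        for c, m in buffers[u].items():
            f[c] += m / len(neighbours)
    return dict(f)
```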

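With these distributions in place, for each node $v_i$ we calculate the difference between the local distribution $f_i(c)$ and the global distribution $g(c)$ of each cluster ID $c$ that appears in its neighbors' buffers:

$$d_i(c) = f_i(c) - g(c). \tag{5}$$

For these differences to be comparable, they are normalized to a common scale, which is set to $[0, 5]$ in our experiments. The normalized difference of cluster $c$ for node $v_i$ is computed by:

$$\hat{d}_i(c) = \alpha \, \frac{d_i(c) - \min_{c'} d_i(c')}{\max_{c'} d_i(c') - \min_{c'} d_i(c')}, \tag{6}$$

where $c'$ ranges over the clusters in the neighbors' buffers and $\alpha$ is the scale parameter, set to 5 in our experiments. Cluster $c$ is then selected to update the buffer of node $v_i$ with probability:

$$p_i(c) = \frac{e^{\hat{d}_i(c)}}{\sum_{c'} e^{\hat{d}_i(c')}}. \tag{7}$$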

Still taking node $d$ in Figure 4a as an example, the original difference, the normalized difference, and the corresponding probability of each label are listed in Table 3.
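The sketch below turns the local and global distributions into selection probabilities. It assumes that the normalization to $[0, \alpha]$ is a min-max rescaling of the differences over the clusters found in the neighbors' buffers, and that Equation (7) is a softmax over these scaled differences (the text indicates an exponential in $e$); both forms are our reading, not code from the paper, and the function name is hypothetical.

```python
import math


def selection_probabilities(f, g, alpha=5.0):
    """Map local (f) and global (g) cluster distributions to selection probabilities.

    f: dict cluster -> local distribution over the neighbours' buffers (non-empty).
    g: dict cluster -> global distribution.
    Assumptions: d(c) = f(c) - g(c) is min-max rescaled to [0, alpha],
    then converted to probabilities with a softmax.
    """
    d = {c: f[c] - g.get(c, 0.0) for c in f}
    lo, hi = min(d.values()), max(d.values())
    span = hi - lo
    # If all differences are equal, every cluster gets the same scaled score.
    scaled = {c: (alpha * (x - lo) / span if span > 0 else 0.0) for c, x in d.items()}
    z = sum(math.exp(s) for s in scaled.values())
    return {c: math.exp(s) / z for c, s in scaled.items()}
```

With $\alpha = 5$, the most over-represented cluster is at most $e^5 \approx 148$ times as likely to be chosen as the least over-represented one, so the choice is biased but still random.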

Next, we randomly select a cluster from the neighbors' buffers of node $v_i$ according to the probabilities of Equation (7). If the selected cluster already exists in buffer $b_i$, its membership degree is increased by $1/B$, and the other clusters' membership degrees are adjusted so that the sum remains 1. Otherwise, the selected cluster is added to buffer $b_i$ with a membership degree of $1/B$. If the buffer length then exceeds $B$, the cluster with the smallest membership degree is removed; if several clusters tie for the smallest membership degree, one of them is removed at random. The membership degrees are then adjusted so that the sum remains 1. In each iteration, every node in the network updates its buffer as described above. The loop stops when the process converges or the number of iterations reaches its limit. The pseudo-code of membership degree propagation is shown as Algorithm 1.
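The buffer update step can be sketched as follows, assuming dict-based buffers and a caller-supplied `random.Random` instance; the function name is hypothetical. The text only says that the membership degrees are adjusted so that they again sum to 1, so proportional renormalization of the whole buffer is our assumption.

```python
import random


def update_buffer(buf, c, B, rng):
    """Update one node's buffer after cluster c has been sampled.

    buf: dict cluster -> membership degree (sums to 1); modified in place and returned.
    Assumption: the sum-to-1 adjustment is proportional renormalisation of all entries.
    """
    buf[c] = buf.get(c, 0.0) + 1.0 / B      # reinforce an existing cluster or add a new one
    if len(buf) > B:
        smallest = min(buf.values())
        ties = [k for k, m in buf.items() if m == smallest]
        del buf[rng.choice(ties)]           # drop one weakest cluster, ties broken at random
    total = sum(buf.values())
    for k in buf:
        buf[k] /= total                     # restore the sum-to-1 constraint
    return buf
```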

| | Algorithm 1: Membership Degree Propagation (B, NUM, G′) |
|---|---|
| | Input: Buffer size: B; number of iterations: NUM; initialized graph: G′ |
| | Output: The convergent graph after membership degree propagation: G′ |
| 1 | i = 0; |
| 2 | while i < NUM do |
| 3 | for v ∈ G′ do |
| 4 | g ← Calculate the probability distribution of all labels in the current network; |
| 5 | f(v) ← Calculate the probability distribution of all labels in the local subgraph of the current node v; |
| 6 | L ← Randomly select a label according to d(v) = f(v) − g, using the probabilities of Equation (7); |
| 7 | Increase the membership degree corresponding to label L and update the buffer of v in G′; |
| 8 | end |
| 9 | i = i + 1; |
| 10 | end |
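Putting the steps together, the sketch below is one runnable reading of Algorithm 1. Following the prose of Section 3.2 and the worked example for node $d$, line 6 is implemented as random sampling with exponential weights rather than a deterministic arg-max. The min-max scaling, the proportional renormalization, the skipping of isolated nodes, and all names are our assumptions; the convergence test mentioned in the text is omitted, so the loop simply runs for `num_iter` sweeps.

```python
import math
import random
from collections import defaultdict


def propagate(adj, buffers, B, num_iter, alpha=5.0, seed=0):
    """Sketch of membership degree propagation (Algorithm 1).

    adj: dict node -> list of neighbours; buffers: dict node -> {cluster: membership}.
    Buffers are updated in place and also returned.
    """
    rng = random.Random(seed)
    n = len(adj)
    for _ in range(num_iter):
        for v in adj:
            if not adj[v]:
                continue                                  # isolated node: no neighbours to hear
            # Line 4: global distribution g over all buffers.
            g = defaultdict(float)
            for buf in buffers.values():
                for c, m in buf.items():
                    g[c] += m / n
            # Line 5: local distribution f(v) over the neighbours' buffers.
            f = defaultdict(float)
            for u in adj[v]:
                for c, m in buffers[u].items():
                    f[c] += m / len(adj[v])
            # Line 6: sample a label with softmax weights on the scaled d(v) = f(v) - g.
            d = {c: f[c] - g[c] for c in f}
            lo = min(d.values())
            span = max(d.values()) - lo
            labels = list(d)
            weights = [math.exp(alpha * (d[c] - lo) / span if span > 0 else 0.0) for c in labels]
            L = rng.choices(labels, weights=weights)[0]
            # Line 7: reinforce L in v's buffer, trim to B entries, renormalise.
            buf = buffers[v]
            buf[L] = buf.get(L, 0.0) + 1.0 / B
            if len(buf) > B:
                smallest = min(buf.values())
                del buf[rng.choice([c for c, m in buf.items() if m == smallest])]
            total = sum(buf.values())
            for c in buf:
                buf[c] /= total
    return buffers
```

Recomputing `g` for every node mirrors line 4 literally; the complexity analysis in Section 3.4 describes how this cost can be reduced by updating the global distribution incrementally.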

For node $d$ in Figure 4a, suppose the randomly selected label is $d$ according to the probabilities computed in Table 3. The result after updating the buffer of node $d$ is shown in Figure 4b, and the final result is shown in Figure 4c.

3.3. Community Partition

At the end of the membership degree propagation process, we obtain the buffers of all nodes in the network. Each buffer contains no more than $B$ pairs, each representing a potential cluster and its corresponding membership degree. The community partition then consists of two simple steps.

(1) Assign the cluster with the maximum membership degree in the buffer as the first community of each node:

$$c_i^{(1)} = \arg\max_{c:\,(c,\,m_i^c) \in b_i} m_i^c, \tag{8}$$

where $v_i$ is the $i$th node and $m_i^c$ is the membership degree of $v_i$ in cluster $c$.

(2) Identify the secondary communities of each node. For node $v_i$, we traverse its buffer as follows:

$$v_i \in c \quad \text{if} \quad (c, m_i^c) \in b_i \ \text{and} \ m_i^c > r, \tag{9}$$

where $r$ is a membership threshold, simply set as $r = 1/N_1$, with $N_1$ the number of distinct first communities assigned to nodes in step (1). $N_1$ can therefore be regarded as the initial number of communities. According to Equation (9), if the membership degree $m_i^c$ is larger than the probability of randomly assigning a node to any one of the $N_1$ communities, node $v_i$ is considered a member of cluster $c$.
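The two partition steps can be sketched as follows, assuming dict-based buffers and a hypothetical function name. Each node's final membership set is taken to be its first community plus every secondary community whose membership exceeds $r = 1/N_1$. Ties in step (1) are broken by Python's `max`, which returns the first maximal entry in buffer order; the text does not specify a tie rule.

```python
def assign_memberships(buffers):
    """Steps (1) and (2) of the community partition.

    buffers: dict node -> {cluster: membership degree}.
    Returns (memberships, r), where memberships[v] is a set of clusters.
    """
    # Step (1): first community = cluster with the largest membership degree.
    first = {v: max(buf, key=buf.get) for v, buf in buffers.items()}
    # N_1 = number of distinct first communities; threshold r = 1 / N_1.
    r = 1.0 / len(set(first.values()))
    # Step (2): add every secondary community whose membership exceeds r.
    memberships = {
        v: {first[v]} | {c for c, m in buf.items() if m > r}
        for v, buf in buffers.items()
    }
    return memberships, r
```

For example, if three distinct first communities appear, then $r = 1/3$, and a node whose buffer is `{'A': 0.4, 'B': 0.4, 'C': 0.2}` is assigned to both `A` and `B`, making it an overlapping node.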

This process is simple: overlapping nodes are recognized just by checking whether more than one cluster has been assigned to them. Therefore, MDPA does not need to repeat the outer loop (initialization and propagation) multiple times, which greatly reduces the computational cost compared with SpeakEasy. The pseudo-code of the community partition is shown as Algorithm 2, and the final community partition result is shown in Figure 4d.

| | Algorithm 2: CommunityPartition (r, B, G″) |
|---|---|
| | Input: Threshold: r; buffer size: B; the convergent graph after membership degree propagation: G″ |
| | Output: The result of community detection: C |
| 1 | for v ∈ G″ do |
| 2 | flag = 0; |
| 3 | for (l(v), m(v)) ∈ bv do |
| 4 | // l(v) represents a potential cluster of node v; |
| 5 | // m(v) is the membership degree of node v belonging to cluster l(v); |
| 6 | if m(v) > r then |
| 7 | l(v) ← l(v) ∪ {v}; |
| 8 | C ← C ∪ {l(v)}; |
| 9 | flag = 1; |
| 10 | end |
| 11 | end |
| 12 | // If m(v) ≤ r holds for all m(v) in bv, |
| 13 | // node v is an overlapping node and belongs to all the clusters in buffer bv |
| 14 | if flag = 0 then |
| 15 | for (l(v), m(v)) ∈ bv do |
| 16 | l(v) ← l(v) ∪ {v}; |
| 17 | C ← C ∪ {l(v)}; |
| 18 | end |
| 19 | end |
| 20 | end |
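A sketch of Algorithm 2 in the same dict-based setting follows; the function names are hypothetical. When no membership degree in a node's buffer exceeds $r$ (the `flag = 0` case), the node is added to every cluster in its buffer, as in lines 14 to 19. The helper `overlapping_nodes` is our addition and simply lists nodes assigned to more than one community.

```python
from collections import defaultdict


def community_partition(buffers, r):
    """Sketch of Algorithm 2 (CommunityPartition).

    buffers: dict node -> {cluster: membership degree}; r: membership threshold.
    Returns dict cluster -> set of member nodes.
    """
    communities = defaultdict(set)
    for v, buf in buffers.items():
        strong = [c for c, m in buf.items() if m > r]
        # flag = 0 case: no membership exceeds r, so v joins every cluster in its buffer.
        for c in (strong if strong else buf):
            communities[c].add(v)
    return dict(communities)


def overlapping_nodes(communities):
    """Nodes assigned to more than one community."""
    count = defaultdict(int)
    for members in communities.values():
        for v in members:
            count[v] += 1
    return {v for v, k in count.items() if k > 1}
```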

3.4. Complexity Analysis

In the initialization phase, MDPA traverses the whole network and fills the buffer of each traversed node with membership degrees. Each node's buffer is filled $B$ times, so this phase needs $nB$ operations:

$$T_{\text{init}} = nB.$$

In the propagation phase, assume that $N$ iterations are needed and that adjusting each buffer takes a fixed number $A$ of operations. For the current node $v$, we first calculate the global probability distribution, which requires $nB$ operations. We then calculate the local probability distribution of node $v$, which needs at most $k_v B$ operations, where $k_v$ is the number of neighbors of node $v$. Finally, the buffer is adjusted with $A$ operations. Therefore, the maximum number of operations MDPA performs in this phase is:

$$T_{\text{prop}} = N \sum_{v \in V} \left(nB + k_v B + A\right) = N\left(n^2 B + 2|E| B + nA\right).$$

In practice, $n$ is much larger than the degree $k_v$ of node $v$, and the global probability distribution changes only because one node's buffer has been updated. After the buffer of a single node is adjusted, the new global probability distribution can therefore be obtained with only a few operations, whose number can be regarded as fixed. With this optimization, the maximum number of operations MDPA needs in the propagation phase becomes:

$$T'_{\text{prop}} = nB + N \sum_{v \in V} \left(k_v B + A + A_g\right) = nB + N\left(2|E| B + n(A + A_g)\right),$$

where $A_g$ is the fixed number of operations required to adjust the global probability distribution. In other words, the global probability distribution is calculated once at the beginning of the propagation process and is then adjusted after each node's buffer adjustment is completed.
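The optimization described above amounts to keeping the global distribution as running sums. This minimal sketch assumes that the global distribution is the average membership degree per cluster and uses a hypothetical class name: the distribution is built once (about $nB$ operations), and each buffer change is applied by subtracting the node's old buffer and adding its new one, which touches at most $2B$ entries.

```python
from collections import defaultdict


class GlobalDistribution:
    """Maintain g(c) = (1/n) * sum_i m_i^c incrementally (hypothetical helper)."""

    def __init__(self, buffers):
        self.n = len(buffers)
        self.g = defaultdict(float)
        for buf in buffers.values():        # one full pass: about n*B operations
            self._add(buf, +1.0)

    def _add(self, buf, sign):
        for c, m in buf.items():
            self.g[c] += sign * m / self.n

    def replace(self, old_buf, new_buf):
        """Account for one node's buffer changing from old_buf to new_buf (at most 2B updates)."""
        self._add(old_buf, -1.0)
        self._add(new_buf, +1.0)
```

The caller must copy a node's buffer before modifying it in place, so that the old contents are still available to `replace`.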

In the overlapping node identification stage, MDPA only needs to traverse each node and compare each membership degree in its buffer with the threshold $r$, so it needs at most $nB$ operations in this stage.

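Therefore, summing the initialization, the optimized propagation, and the overlapping node identification phases, the maximum number of operations required by MDPA is:

$$T = nB + \left[nB + N\left(2|E| B + n(A + A_g)\right)\right] + nB = 3nB + N\left(2|E| B + n(A + A_g)\right),$$

which, for fixed $B$, $A$, and $A_g$, grows linearly with the numbers of nodes and edges in each iteration.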

For space complexity, since the buffer allocated to each node in the network contains up to B potential communities, MDPA takes up nB storage units at most.
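As a small arithmetic check of the counting argument in this section, the function below evaluates the operation bounds for a given degree sequence. It follows the per-phase counts stated above: $nB$ for initialization; $nB + k_vB + A$ per node per iteration before the optimization; one initial $nB$ plus $k_vB + A + A_g$ per node per iteration after it; and $nB$ for identification. The function name and the example numbers are purely illustrative.

```python
def mdpa_operation_bounds(degrees, B, N, A, Ag):
    """Upper bounds on operation counts following the counting in Section 3.4.

    degrees: list of node degrees k_v; B: buffer size; N: number of iterations;
    A: operations per buffer adjustment; Ag: operations per incremental global update.
    Returns (naive_propagation, optimised_propagation, optimised_total).
    """
    n = len(degrees)
    init = n * B                                              # fill every buffer
    naive_prop = N * sum(n * B + k * B + A for k in degrees)  # global g recomputed per node
    opt_prop = n * B + N * sum(k * B + A + Ag for k in degrees)  # g computed once, then adjusted
    identify = n * B                                          # compare each entry with r
    return naive_prop, opt_prop, init + opt_prop + identify
```

For a triangle (degrees `[2, 2, 2]`) with $B = 3$, $N = 10$, $A = 5$, and $A_g = 4$, the bounds are 600 operations for naive propagation, 459 for optimized propagation, and 477 in total.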

5. Conclusions and Discussion

In this research, a novel overlapping community detection algorithm, the membership degree propagation algorithm (MDPA), is developed. The main idea is to propagate community membership degrees according to the difference between global and local distribution information. After the propagation process, MDPA assigns cluster numbers to each node according to its membership degrees. MDPA can handle both overlapping and non-overlapping community detection problems, and in both cases it does not need to produce as many partition results as existing methods do. Moreover, the final partition, together with the overlapping node recognition result, is obtained in a single pass based only on the converged membership degree vectors. Hence, MDPA requires significantly less computation time and avoids the memory overhead of other methods designed for the same objective.

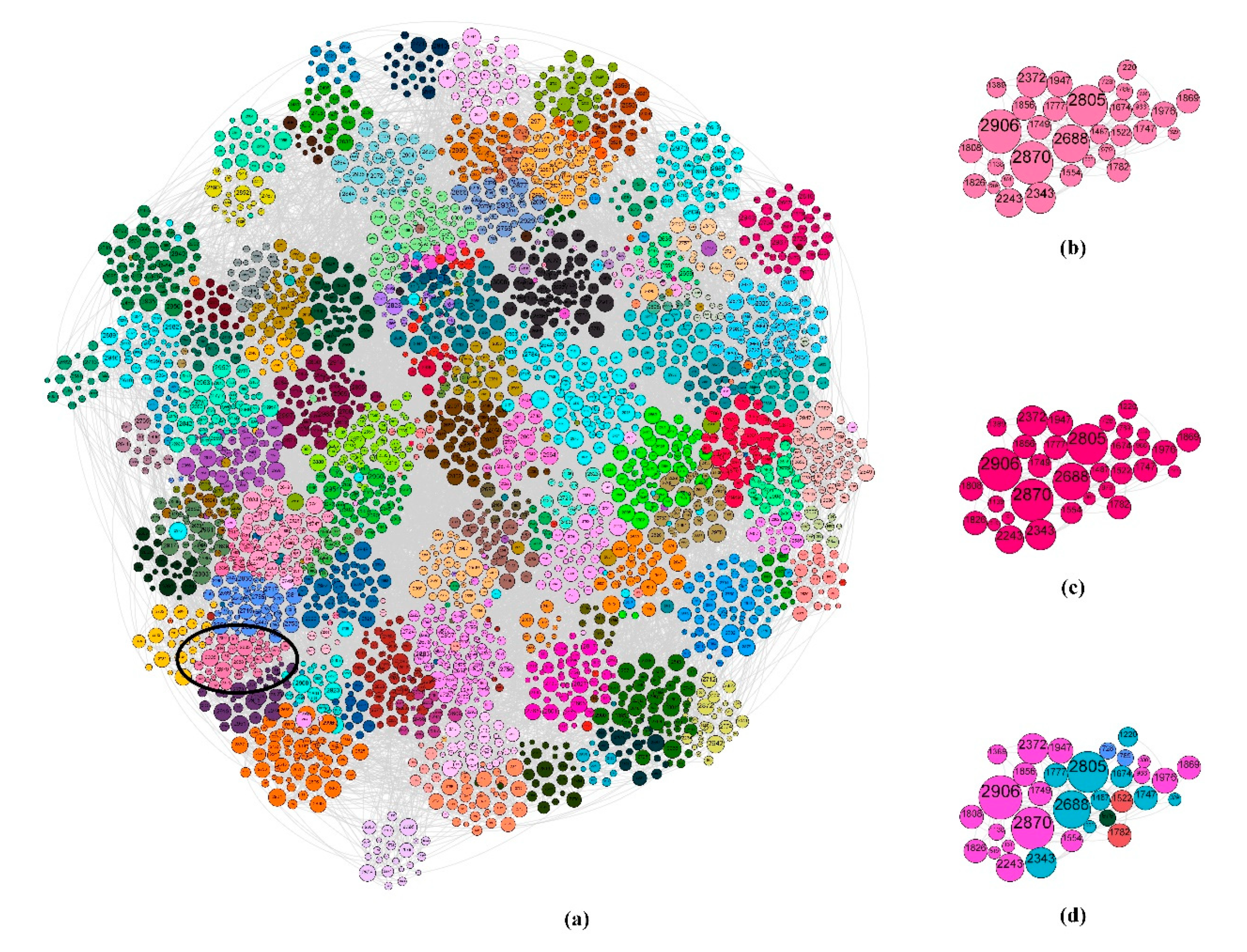

To verify the effectiveness of MDPA, it was applied to synthetic LFR datasets and nine real benchmark datasets. Numerical results show that MDPA is competitive with other state-of-the-art algorithms: it was one of the top algorithms in terms of both NMI and SG on the LFR datasets. In particular, for overlapping node detection, MDPA is significantly better than the other compared methods in terms of the F1 measure. On the real benchmark datasets, compared with eight other competitive algorithms, MDPA also obtains the best overall performance in terms of the Conductance and EQ metrics.

Although only undirected and unweighted networks are discussed in this paper, MDPA can easily be extended to directed and/or weighted networks by adding a directed weighting factor to the exponent of $e$ in the probability formula of the membership degree propagation process (Equation (7)). MDPA is therefore adaptable to many kinds of community detection problems, such as social network partitioning, biomarker detection in biological networks, and epidemic spreading.