Abstract

Thinning operators play an important role in the analysis of integer-valued autoregressive models, and the most widely used is binomial thinning. Inspired by the theory of extended Pascal triangles, we introduce a new thinning operator, named extended binomial thinning, which contains binomial thinning as a special case. Compared to the binomial thinning operator, the extended binomial thinning operator has two parameters and is more flexible in modeling. Based on the proposed operator, a new integer-valued autoregressive model is introduced, which can accurately and flexibly capture the dispersion features of count time series. Two-step conditional least squares (CLS) estimation is investigated for the innovation-free case, and conditional maximum likelihood estimation is also discussed. The asymptotic properties of the two-step CLS estimator are also obtained. Finally, three overdispersed or underdispersed real data sets are considered to illustrate the superior performance of the proposed model.

1. Introduction

Counting time series naturally occur in many contexts, including actuarial science, epidemiology, finance, economics, etc. The last few years have witnessed the rapid development of modeling time series of counts. One of the most common approaches for modeling integer-valued autoregressive (INAR) time series is based on thinning operators. In order to fit different kinds of situations, many corresponding operators have been developed; see [1] for a detailed discussion on thinning-based INAR models.

The most popular thinning operator is the binomial thinning operator introduced by [2]. Let X be a non-negative integer-valued random variable and $\alpha \in (0, 1)$; the binomial thinning operator "$\circ$" is defined as

$$\alpha \circ X = \sum_{i=1}^{X} B_i, \quad X > 0, \tag{1}$$

and 0 otherwise, where $\{B_i\}$ is a sequence of independent identically distributed (i.i.d.) Bernoulli random variables with fixed success probability $\alpha$, and $\{B_i\}$ is independent of X. Based on the binomial thinning operator, [3,4] independently proposed an INAR(1) model as follows:

$$X_t = \alpha \circ X_{t-1} + \epsilon_t, \tag{2}$$

where $\{\epsilon_t\}$ is a sequence of i.i.d. integer-valued random variables with finite mean and variance. Since this seminal work, INAR-type models have received considerable attention. For recent literature on this topic, see [5,6], among others.
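To make the recursion in (2) concrete, the following minimal Python sketch simulates a binomial-thinning INAR(1) path. The Poisson innovation, the parameter values, the burn-in length and all function names are illustrative assumptions, not part of the original model specification.

```python
import math
import random


def binomial_thinning(alpha, x, rng):
    """Return alpha∘x: the number of successes among x Bernoulli(alpha) trials."""
    return sum(1 for _ in range(x) if rng.random() < alpha)


def poisson(lam, rng):
    """Draw a Poisson(lam) variate with Knuth's product method (fine for small lam)."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulate_inar1(alpha, lam, n, rng, burn_in=200):
    """Simulate n values of X_t = alpha∘X_{t-1} + eps_t with Poisson(lam) innovations."""
    x = poisson(lam / (1.0 - alpha), rng)  # start near the stationary mean
    path = []
    for t in range(burn_in + n):
        x = binomial_thinning(alpha, x, rng) + poisson(lam, rng)
        if t >= burn_in:  # keep only the values after the burn-in period
            path.append(x)
    return path


rng = random.Random(2020)
series = simulate_inar1(alpha=0.5, lam=2.0, n=5000, rng=rng)
sample_mean = sum(series) / len(series)
```

Under these assumptions the stationary mean is lam / (1 - alpha), so `sample_mean` should lie close to 4 for the values chosen here.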

Note that the counting series in (1) follows a Bernoulli distribution, so the thinned variable is always less than or equal to X; in other words, the first part of the right side in (2) cannot be greater than the previous observation, which limits the flexibility of the model. Despite this shortcoming, the simple form makes the parameter easy to estimate, and the operator shares many properties with the multiplication operator in the continuous case. For this reason, many extensions of the binomial thinning operator have been proposed since its emergence. Zhu and Joe [7] proposed the expectation thinning operator, which generalizes binomial thinning from the perspective of the probability generating function (pgf). Although this extension is very successful, the estimation procedure is somewhat complicated. Compared with this extension, the thinning operator we propose is simpler and more intuitive. More recently, Yang et al. [8] proposed the generalized Poisson (GP) thinning operator, which is defined by replacing the Bernoulli counting series with a GP counting series. Although the GP thinning operator is flexible and adaptable, we argue that it has a potential drawback: the GP distribution is not a strict probability distribution in the conventional sense. Aly and Bouzar [9] introduced a two-parameter expectation thinning operator based on a linear fractional probability generating function, which can be regarded as a general case of at least nine thinning operators. Kang et al. [10] proposed a new flexible thinning operator, named GSC after the three initiators of the counting series: Gómez-Déniz, Sarabia and Calderín-Ojeda.

Although the binomial thinning operator is very popular, it may not perform well for count time series with large values. In such circumstances, the predicted data are often volatile, and the data are more likely to be non-stationary when the values are large. We intend to establish a new thinning operator that meets the following requirements: (i) it is an extension of the binomial thinning operator; (ii) it contains two parameters to achieve flexibility; (iii) it has a simple structure and is easy to implement.

Based on the above considerations, we propose a new thinning operator based on the extended binomial (EB) distribution. The operator has two parameters, real-valued $\alpha$ and integer-valued m, which makes it more flexible than single-parameter thinning operators, and the binomial thinning operator (1) can be regarded as the special case $m = 1$ of the EB thinning. The case $m > 1$ in the EB thinning usually performs better than $m = 1$ on data sets with large values. In other words, the EB thinning alleviates the main defect of the binomial thinning to some extent. Since the EB thinning is not a special case of the expectation thinning in [9], it further extends the framework of thinning-based INAR models and provides a new option in practical applications. We therefore propose an INAR(1) model based on the EB thinning operator, which extends model (2) and can more accurately and flexibly capture the dispersion features of real data.

This paper is organized as follows. In Section 2, we review the properties of the EB distribution and then introduce the EB thinning operator. Based on the new thinning operator, we propose a new INAR(1) model. In Section 3, two-step conditional least squares estimation is investigated for the innovation-free case of the model, and the asymptotic properties of the estimator are obtained. Conditional maximum likelihood estimation is also discussed, and numerical simulations are presented. In Section 4, we focus on forecasting and introduce two criteria to compare the prediction results for three overdispersed or underdispersed real data sets, which illustrate the better performance of the proposed model. In Section 5, we give some conclusions and related discussions.

2. A New INAR(1) Model

The EB distribution comes from the theory of extended Pascal triangles and can be regarded as a multivariate case of the binomial distribution; see [11] for more details. Based on this distribution, we introduce the EB thinning operator and propose a corresponding INAR(1) model.

2.1. EB Distribution

The EB random variable , denoted as , which is defined as follows:

where m and n are both integers satisfying and ; can be calculated as

where ; and and in (3) satisfy the following restriction:

The above restriction is equivalent to . The mean and variance of EB random variables are

respectively. The pgf of can be written as As can be expressed as a convolution, the EB distribution has the reproductive property. Specifically, if are independent random variables with in (3), then . Notice that random variable is equivalent to a Bernoulli random variable satisfying .

2.2. EB Thinning Operator

According to discussions in 2.1, we construct the EB thinning operator based on the configuration of . Let be a sequence of i.i.d. random variables with common distribution , i.e., where and satisfy (4). Note that the mean and variance of are

One can easily see that if and only if

For any , the left-hand side of (6) approaches 0 as and m as , respectively. Hence, or is possible. When , which corresponds to the binomial distribution, and (5) gives and with , then we have for all . When and , one can easily show that , , where . Therefore, for all in this case.

Based on the reproductive property of the EB distribution, we define the EB thinning operator "⊚" as follows: for a non-negative integer-valued random variable X,

where if . Note that the EB thinning operator reduces to the binomial operator (1) when . It is easy to know that or , so the EB thinning operator is quite flexible when dealing with the overdispersed or underdispersed data sets.
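The structural difference between the two operators can be sketched in Python as follows. The function `thin` sums X i.i.d. draws from a counting series, which is the common form of thinning operators of this kind. The bounded counting series used here is a hypothetical stand-in with support {0, ..., m} and arbitrary weights; it is not the EB pmf in (3), and all names and values are assumptions made for illustration only.

```python
import random


def thin(x, draw_count, rng):
    """Generic thinning: sum of x i.i.d. draws from a counting series; 0 when x == 0."""
    return sum(draw_count(rng) for _ in range(x))


def bernoulli(alpha):
    """Counting series of binomial thinning: values in {0, 1}."""
    return lambda rng: 1 if rng.random() < alpha else 0


def bounded_count(weights):
    """Hypothetical counting series on {0, ..., m} with P(k) proportional to weights[k]."""
    support = list(range(len(weights)))
    return lambda rng: rng.choices(support, weights=weights, k=1)[0]


rng = random.Random(7)
x = 20
# Binomial thinning can never return more than x.
binomial_draws = [thin(x, bernoulli(0.6), rng) for _ in range(1000)]
# A counting series with support {0, 1, 2} (m = 2) can return values above x.
wide_draws = [thin(x, bounded_count([1.0, 2.0, 4.0]), rng) for _ in range(1000)]
```

With a counting series whose support extends beyond 1, the thinned value is bounded by m times X rather than by X, which is the extra flexibility discussed above.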

Remark 1.

The computation of () for given () is based on (4) and (5). The solution can be obtained by solving these nonlinear equations. When , and when ,

For more complex cases (), we can derive the solution () by solving these large-scale nonlinear systems, and a more detailed calculation procedure is given in Section 3.3.

2.3. EB-INAR(1) Model

Based on the EB thinning operator, we define the EB-INAR(1) model as follows:

where , is a sequence of non-negative integer-valued random variables; the innovation process is a sequence of i.i.d. integer-valued random variables with finite mean and variance; and is independent of .

In order to obtain the estimation equations, we give some conditional or unconditional moments of the EB-INAR(1) model in the following proposition.

Proposition 1.

Suppose is a stationary process defined by (7) and let ; then for ,

- 1.

- ;

- 2.

- ;

- 3.

- ;

- 4.

- ;

- 5.

- and

where and are the expectation and variance of the innovation , respectively.

Proofs of some of these properties are given in Appendix A.

Remark 2.

Inspired by the INAR(p) model in [12], we can further extend this model to INAR(p); the EB-INAR(p) model is defined as follows:

where , m is an integer satisfying , is a sequence of non-negative integer-valued random variables, the innovation process is a sequence of i.i.d. integer-valued random variables with finite mean and variance, and is independent with .

We will show that the new model can accurately and flexibly capture the dispersion features of real data in Section 4.

3. Estimation

We use the two-step conditional least squares (CLS) estimation proposed by [13] to investigate the innovation-free case, and we obtain the asymptotic properties of the estimators. Conditional maximum likelihood estimation for the parametric cases is also discussed. Finally, we demonstrate the finite-sample performance via simulation studies.

3.1. Two-Step Conditional Least Squares Estimation

Denote , and . The two-step CLS estimation will be conducted by the following two steps.

Step 1.1. The estimator for

Let , Let

be the CLS criterion function. Then the CLS estimator of can be obtained by solving the score equation , which implies a closed-form solution:

Step 1.2. The estimator for

Let , . Then

Let ; then the CLS criterion function for can be written as

By solving the score equation , we can obtain the CLS estimator of , which also is a closed-form solution:

Step 2. Estimating parameters via the method of moments.

The estimator of , which is called a two-step CLS estimator, can be obtained by solving the following estimation equations:

where and satisfy (4).
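Steps 1.1 and 1.2 can be sketched in Python as two ordinary least-squares fits. This sketch assumes that the conditional mean and the conditional variance of the process are both linear in the previous observation, as is usual for thinning-based INAR(1) models. The names `alpha_hat`, `mu_hat`, `beta_hat` and `sigma2_hat` are hypothetical, the test data come from a binomial-thinning model with Poisson innovations, and Step 2 (solving the moment equations) is not covered.

```python
import math
import random


def ols_line(xs, ys):
    """Closed-form least-squares fit ys ~ slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def two_step_cls(series):
    """Step 1.1 then Step 1.2 under assumed linear conditional mean and variance."""
    prev, curr = series[:-1], series[1:]
    # Step 1.1: regress X_t on X_{t-1} to estimate the mean parameters.
    alpha_hat, mu_hat = ols_line(prev, curr)
    # Step 1.2: regress squared one-step residuals on X_{t-1} to estimate
    # the variance parameters.
    sq_resid = [(y - alpha_hat * x - mu_hat) ** 2 for x, y in zip(prev, curr)]
    beta_hat, sigma2_hat = ols_line(prev, sq_resid)
    return alpha_hat, mu_hat, beta_hat, sigma2_hat


def simulate_binomial_inar1(alpha, lam, n, rng):
    """Test data only: binomial-thinning INAR(1) with Poisson(lam) innovations."""
    def poisson():
        limit, k, prod = math.exp(-lam), 0, rng.random()
        while prod > limit:
            k, prod = k + 1, prod * rng.random()
        return k

    x, path = poisson(), []
    for _ in range(n + 200):  # the first 200 values are discarded as burn-in
        x = sum(1 for _ in range(x) if rng.random() < alpha) + poisson()
        path.append(x)
    return path[200:]


rng = random.Random(11)
data = simulate_binomial_inar1(alpha=0.5, lam=2.0, n=20000, rng=rng)
alpha_hat, mu_hat, beta_hat, sigma2_hat = two_step_cls(data)
```

For this test model the counting series has variance alpha(1 - alpha) = 0.25 and the innovation has mean and variance 2, so the four estimates should be close to 0.5, 2, 0.25 and 2, respectively.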

Therefore, the resulting CLS estimator is . To study the asymptotic behaviour of the estimator, we make the following assumptions:

Assumption 1.

is a stationary and ergodic process;

Assumption 2.

.

Proposition 2.

Under assumptions 1 and 2, the CLS estimator is strongly consistent and asymptotically normal:

where , , and denotes the true value of .

To obtain the asymptotic normality of , we make a further assumption:

Assumption 3.

.

Then we have the following proposition.

Proposition 3.

Under assumptions 1 and 3, the CLS estimator is strongly consistent and asymptotically normal:

where , , and denotes the true value of .

Based on Propositions 2 and 3 and Theorem 3.2 in [14], we have the following proposition.

Proposition 4.

Under assumptions 1 and 3, the CLS estimator is strongly consistent and asymptotically normal:

where

, and denotes the true value of θ.

We do the following preparation to establish Proposition 5. Based on (5), solve the equation about , and denote the solution as . Let

Based on Proposition 4, we state the strong consistency and asymptotic normality of in the following proposition.

Proposition 5.

Under assumptions 1 and 3, the CLS estimator is strongly consistent and asymptotically normal:

where D is given in (9); with ; and denote the true values of m and α, respectively.

The brief proofs of Propositions 2–5 are given in Appendix A.

3.2. Conditional Maximum Likelihood Estimation

We maximize the likelihood function with respect to the model parameters to get the conditional maximum likelihood (CML) estimate of the parametric case

where is the parameter of , is the pmf for and is the conditional pmf. Since the marginal distribution is difficult to obtain in general, a simple approach is conditional on the observed . By essentially ignoring the dependency on the initial value and considering the CML estimate given as an estimate for by maximizing the conditional log-likelihood

over , we denote the CML estimate by . The log-likelihood function is as follows:

where and satisfy (4); follows a non-negative discrete distribution with a parameter . In what follows, we consider two cases: .

Case 1: For m = 3 with Poisson innovation, i.e., .

where is given in Remark 1.

Case 2: For m = 4 with geometric innovation, i.e.,

where is given in Remark 1. For higher-order m, the formula is tedious and is omitted here. For the EB-INAR(p) model, CML estimation is too complicated, but two-step CLS estimation remains feasible, and the procedure is similar to the case of $p = 1$. For this reason, we only consider the EB-INAR(1) model in the simulation studies.
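The structure of the conditional log-likelihood can be illustrated with the simplest special case, binomial thinning with Poisson innovations, where the transition probability is the convolution of a binomial pmf and a Poisson pmf. The Python sketch below is an assumption-laden illustration: it does not implement the EB cases above, the data are hypothetical, and a coarse grid search stands in for a numerical optimizer.

```python
import math


def poisson_pmf(k, lam):
    """Poisson(lam) probability of the value k."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def binomial_pmf(k, n, alpha):
    """Binomial(n, alpha) probability of the value k."""
    return math.comb(n, k) * alpha ** k * (1.0 - alpha) ** (n - k)


def transition_pmf(j, i, alpha, lam):
    """P(X_t = j | X_{t-1} = i): convolution of thinning and innovation pmfs."""
    return sum(binomial_pmf(k, i, alpha) * poisson_pmf(j - k, lam)
               for k in range(min(i, j) + 1))


def conditional_loglik(series, alpha, lam):
    """Log-likelihood conditional on the first observation."""
    return sum(math.log(transition_pmf(j, i, alpha, lam))
               for i, j in zip(series[:-1], series[1:]))


counts = [2, 3, 1, 0, 2, 4, 3, 2, 1, 2, 3, 5, 4, 2, 1]  # hypothetical data
# Coarse grid over alpha in (0, 1) and lam in (0, 4]; a real analysis would
# use a numerical optimizer instead.
grid = [(a / 20, l / 10) for a in range(1, 20) for l in range(1, 41)]
alpha_cml, lam_cml = max(grid, key=lambda p: conditional_loglik(counts, *p))
```

For other thinning operators, only `binomial_pmf` would change: it would be replaced by the pmf of the thinned variable given the previous observation.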

3.3. Simulation

A Monte Carlo simulation study was conducted to evaluate the finite-sample performance of the estimators. For CLS estimation, we used the R package BB, which solves and optimizes large-scale nonlinear systems, to solve Equations (4) and (8). For CML estimation, we used the R package maxLik to maximize the log-likelihood function.

We considered the following configurations of the parameters:

- Poisson INAR(1) models with

- Geometric INAR(1) models with

In simulations, we chose sample sizes n = 100, 200 and 400 with replications for each choice of parameters. The root mean squared error (RMSE) was calculated to evaluate the performance of the estimator according to the following formula: where is the estimator of in the jth replication.
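As a small illustration of how the summary statistics in the simulation tables are formed, the following Python sketch computes the mean of replicated estimates and their RMSE about the true value, using the usual definition of RMSE as the square root of the average squared error. The estimates and the true value are hypothetical numbers, not simulation results from this paper.

```python
import math


def mean_and_rmse(estimates, true_value):
    """Mean of the replicated estimates and their RMSE about the true value."""
    k = len(estimates)
    mean = sum(estimates) / k
    rmse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / k)
    return mean, rmse


# Hypothetical estimates of a parameter whose true value is 0.5.
estimates = [0.48, 0.52, 0.50, 0.46]
mean_est, rmse_est = mean_and_rmse(estimates, true_value=0.5)
```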

For the CLS estimate, the solutions of (4) and (8) are sensitive to and , so we adopted the following estimation procedure. First, calculate 500 groups of and estimates, then use the mean values of and to solve the Equations (4) and (8). The simulation results of CLS are summarized in Table 1. We found that the estimation values are closer to the true value and the values of RMSE gradually decrease as the sample size increases.

Table 1.

Means of estimates, RMSEs (within parentheses) by CLS.

Because the parameter m is difficult to estimate by CML, we treated m as known. The simulation results of the CML estimators are given in Table 2. In all cases, the estimates show small RMSE values, and the RMSE gradually decreases as the sample size increases.

Table 2.

Means of estimates, RMSEs (within parentheses) by CML.

4. Real Data Examples

In this section, three real data sets, covering overdispersed and underdispersed settings, are considered to illustrate the better performance of the proposed model. The first example is overdispersed crime data from Pittsburgh; the second is overdispersed stock data from the New York Stock Exchange (NYSE); and the third is underdispersed crime data from Pittsburgh, which was also analyzed by [15]. Forecasting is an important part of model evaluation in time series analysis, so we first introduce two forecasting criteria and some other preparations.

4.1. Forecasting

Before introducing the evaluation criterion, we briefly introduce the basic procedure as follows: First, we divide the data into two parts, the training set with the first data and the prediction set with the last data. The training set is used to estimate the parameters and evaluate the fitness of the model. Then we can evaluate the efficiency of each model by comparing the following criteria between prediction data and the real data in the prediction set.

Similar to the procedure in [16], which performs an out-of-sample experiment to compare forecasting performances of two model-based bootstrap approaches, we introduce the forecasting procedure as follows: For each we estimate an INAR(1) model for the data , then we use the fitted result based on to generate the next five forecasts, which is called the 5-step ahead forecast for each t in , where is the forecast at time t. In this way we obtain many sequences of step-ahead forecasts, finally we replicate the whole procedure P times. Then we can evaluate the point forecast accuracy by the forecast mean square error (FMSE) defined as

and forecast mean absolute error (FMAE) defined as

where is the true value of the data, is the mean of all the forecasts at i and P is the number of replicates.
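The two criteria can be sketched in Python as follows. The sketch assumes that FMSE and FMAE are the average squared and average absolute differences between each true value and the mean of its P replicated forecasts; the exact normalization in the displayed formulas may differ, and the inputs are hypothetical.

```python
def forecast_errors(truth, replicated_forecasts):
    """FMSE and FMAE of the replicate-averaged forecasts against the true values."""
    p = len(replicated_forecasts)  # number of replicates P
    means = [sum(rep[i] for rep in replicated_forecasts) / p
             for i in range(len(truth))]
    fmse = sum((x - f) ** 2 for x, f in zip(truth, means)) / len(truth)
    fmae = sum(abs(x - f) for x, f in zip(truth, means)) / len(truth)
    return fmse, fmae


# Hypothetical prediction set of length 2 with P = 2 replicated forecast paths.
truth = [3, 5]
replicated_forecasts = [[2, 4], [4, 8]]
fmse, fmae = forecast_errors(truth, replicated_forecasts)
```

Here the forecast means are 3 and 6, so both criteria equal 0.5 under the assumed definitions.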

4.2. Overdispersed Cases

We consider two overdispersed data sets. The first contains 144 observations of monthly crime tallies from the Forecasting Principles website (http://www.forecastingprinciples.com); the crimes were reported in the police car beats in Pittsburgh from January 1990 to December 2001. The second is the Empire District Electric Company (EDE) data set from the Trades and Quotes (TAQ) set in NYSE, which contains 300 observations and was also analyzed by [17].

4.2.1. P1V Data

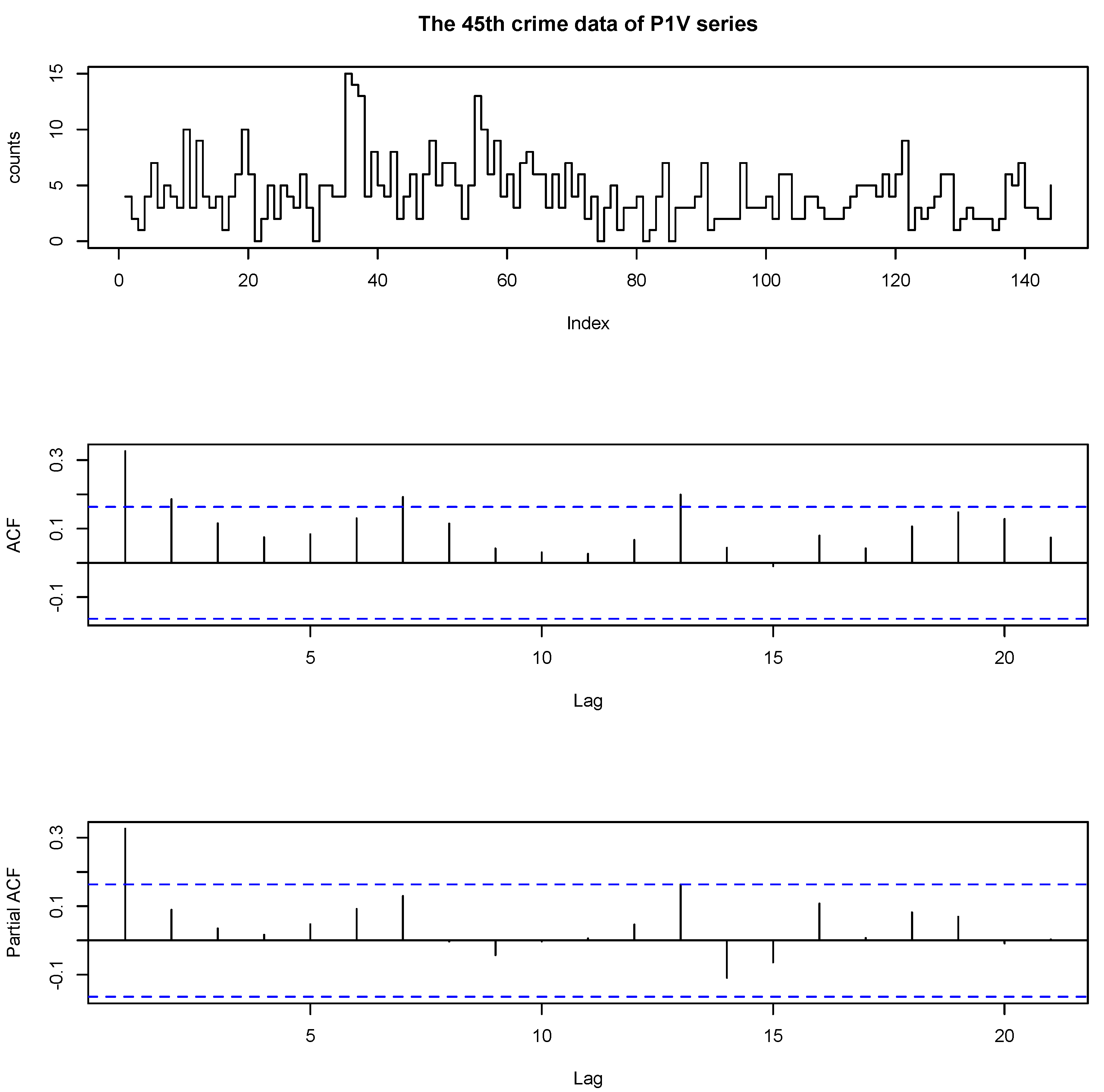

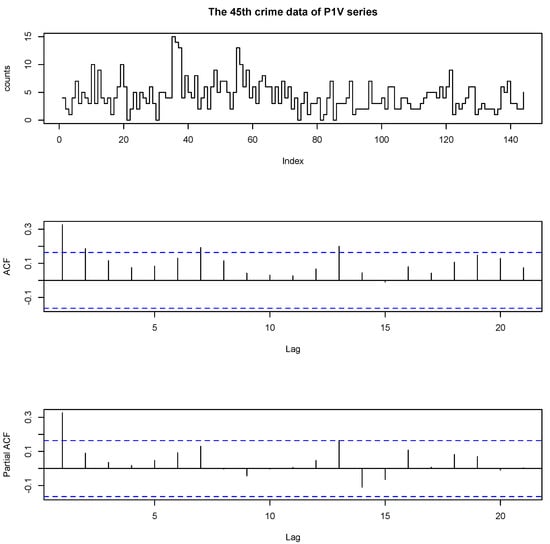

The 45th P1V (Part 1 Violent Crimes) data set contains crimes of murder, rape, robbery and other kinds; see the data dictionary on the Forecasting Principles website for more details. Figure 1 shows the time series, the autocorrelation function (ACF) and the partial autocorrelation function (PACF) of the 45th P1V series. The maximum value of the data is 15 and the minimum is 0; the mean is 4.3333 and the variance is 7.4685. The ACF plot shows that the data are dependent. In the PACF plot, only the first lag is significant, which strongly suggests an INAR(1) model.

Figure 1.

The data, autocorrelation function (ACF) and partial autocorrelation function (PACF) of 45th P1V series.

First, we divided the data set into two parts: the training set with the first 134 observations and the prediction set with the last 10 observations. We fit the training set with the following models: the expectation thinning INAR(1) (ETINAR(1)) model in [9], the GSC thinning INAR(1) (GSCINAR(1)) model in [10], the binomial thinning INAR(1) model and EB-INAR(1) models with two values of m. According to the mean and variance of the P1V data, we used the geometric distribution, one of the most common choices, as the distribution of the innovations in the above models.

In order to compare the effectiveness of the models, we consider the following evaluation criteria: (1) AIC. (2) The mean and standard error of Pearson residual and its related Ljung–Box statistics, where the Pearson residuals are defined as

where and are the estimated expectation and variance for related thinning operators, respectively. (3) Three goodness-of-fit statistics: RMS (root mean square error), MAE (mean absolute error) and MdAE (median absolute error), where the error is defined by , . (4) The mean of the data on the training set calculated by the estimated results.
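The residual diagnostics in criterion (2) can be sketched in Python as follows. The sketch assumes a conditional mean of the form alpha * X_{t-1} + mu_eps and a conditional variance of the form beta * X_{t-1} + sigma2_eps, which is the usual linear structure for thinning-based INAR(1) models; the parameter names are hypothetical. The Ljung–Box function returns only the statistic, which would then be compared with a chi-square quantile.

```python
import math


def pearson_residuals(series, alpha, beta, mu_eps, sigma2_eps):
    """Standardized one-step residuals under assumed linear conditional moments."""
    prev, curr = series[:-1], series[1:]
    return [(y - (alpha * x + mu_eps)) / math.sqrt(beta * x + sigma2_eps)
            for x, y in zip(prev, curr)]


def ljung_box(residuals, lags):
    """Ljung-Box statistic Q = n (n + 2) * sum_{k=1}^{lags} rho_k^2 / (n - k)."""
    n = len(residuals)
    mean = sum(residuals) / n
    centered = [r - mean for r in residuals]
    denom = sum(c * c for c in centered)
    q = 0.0
    for k in range(1, lags + 1):
        # Sample autocorrelation of the residuals at lag k.
        rho_k = sum(centered[t] * centered[t - k] for t in range(k, n)) / denom
        q += rho_k ** 2 / (n - k)
    return n * (n + 2) * q


# Hypothetical inputs: one transition from 2 to 4, and an alternating sequence.
example_residual = pearson_residuals([2, 4], 0.5, 0.25, 2.0, 2.0)[0]
example_q = ljung_box([1, -1, 1, -1, 1, -1, 1, -1], lags=1)
```

A well-specified model should give Pearson residuals with mean near 0, variance near 1 and a Ljung–Box statistic that is not significant.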

Next, focusing on forecasting, we generated replicates based on the training set for each model. Then we calculated the FMSE and FMAE for each model.

All results of the fitted models are given in Table 3. There is no evidence of any correlation within the residuals of all five models, which is also supported by the Ljung–Box statistic based on 15 lags (because ). There were no significant differences for the RMS, MAE, MdAE and values (the true mean of the 134 training set was 4.3880) of the models. In other words, no model performed the best in terms of these four criteria, so we also considered AIC. Since the CML estimator cannot be adopted in GSCINAR(1), one can only compare other criteria.

Table 3.

Fitting results, AIC and some characteristics of P1V data.

Considering the fitness on the training set, the EB-INAR(1) with has the smallest AIC, EB-INAR(1) with has almost the same AIC as . For the results on forecasting, EB-INAR(1) with has the smallest FMSE and the second smallest FMAE among all models. EB-INAR(1) with has the second smallest FMSE and the smallest FMAE. Based on these results, we conclude that EB-INAR(1) with performs better than INAR(1), ETINAR(1) and GSCINAR(1).

4.2.2. Stock Data

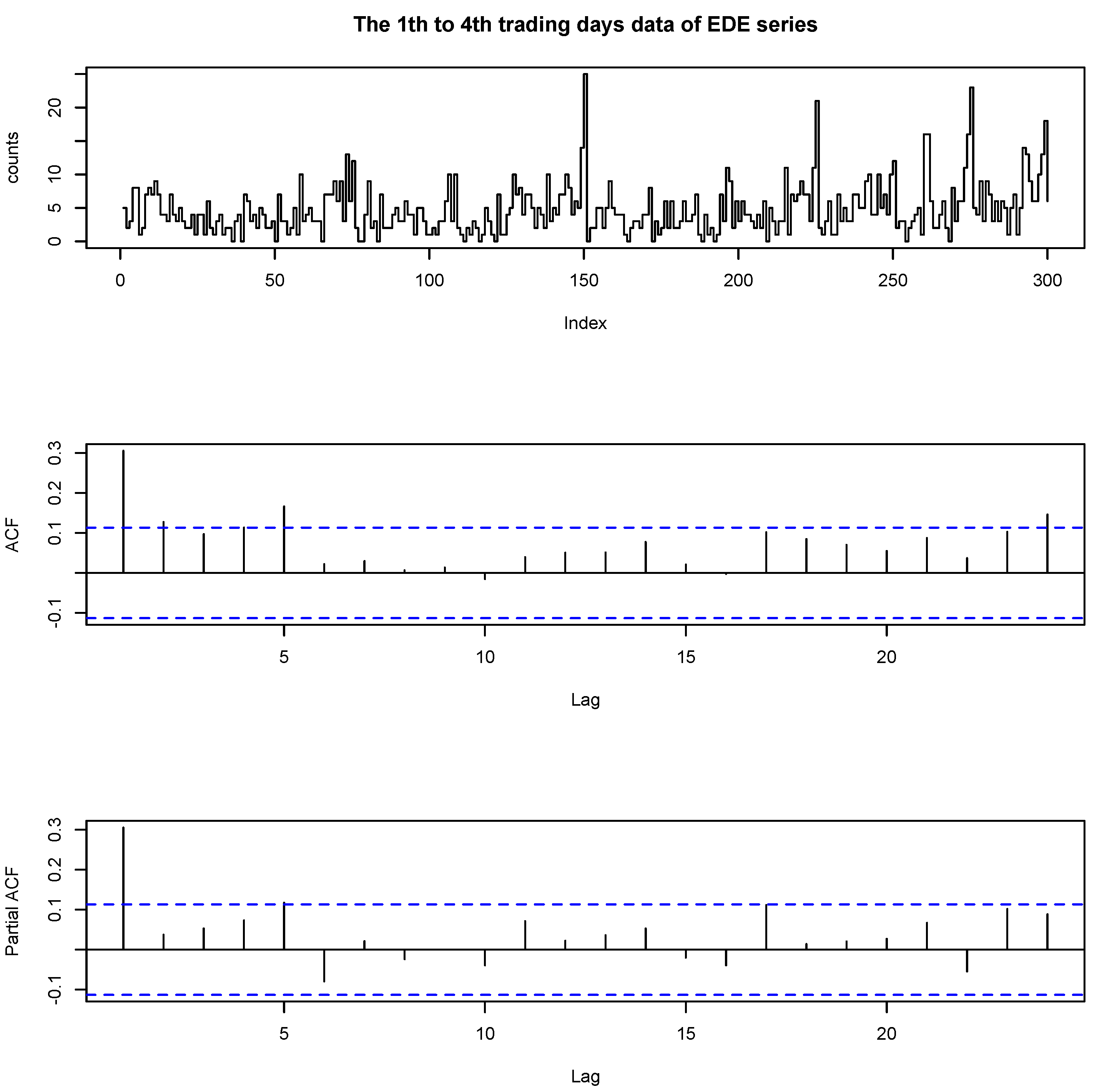

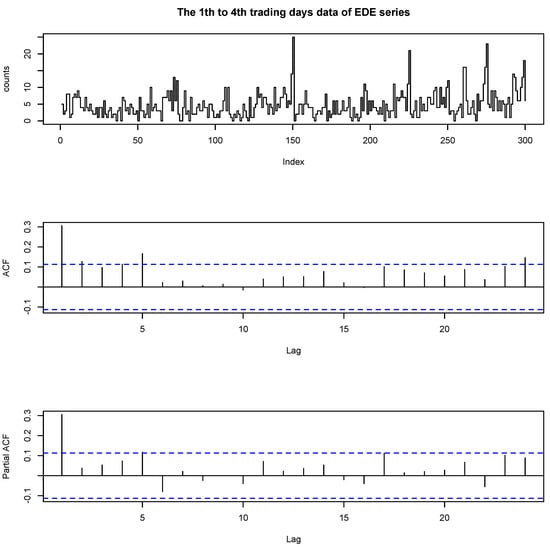

We analyzed another overdispersed data set, that of the Empire District Electric Company (EDE) from the Trades and Quotes (TAQ) data set in NYSE. The data are the numbers of trades in 5 min intervals between 9:45 a.m. and 4:00 p.m. in the first quarter of 2005 (3 January–31 March 2005, 61 trading days). Here we analyze the portion of the data from the first to the fourth trading day. As there are 75 five-minute intervals per day, the sample size is T = 300.

Figure 2 shows the time series, the ACF and the PACF of the EDE series. The maximum value of the data is 25 and the minimum is 0; the mean is 4.6933 and the variance is 14.1665. Based on the time series plot, the series does not seem completely stationary and has several outliers or influential observations. Zhu et al. [18] analyzed the Poisson autoregression for stock transaction data with extreme values, which can be considered in the current setting. The ACF plot shows that the data are dependent. In the PACF plot, only the first lag is significant, which strongly suggests an INAR(1) model. We used the same procedures and criteria as before, with the geometric distribution as the distribution of the innovations in the above models.

Figure 2.

The data, ACF and PACF of first to fourth trading days of EDE series.

First divide the data set into two parts–the training set with the first data and the prediction set with the last data. All results of the fitted models are given in Table 4. Among all models, EB-INAR(1) with has the smallest AIC, and there is no evidence of any correlation within the residuals of all five models, which is also supported by the Ljung–Box statistic based on 15 lags. There are no significant differences for the RMS, MAE, MdAE and values (the true mean of the 270 training set was 4.3407) of all considered models. For the results of prediction, EB-INAR(1) with has the smallest FMSE and FMAE among all models. Based on the above results, we conclude that EB-INAR(1) with performs best for this data set.

Table 4.

Fitting results, AIC and some characteristics of EDE data.

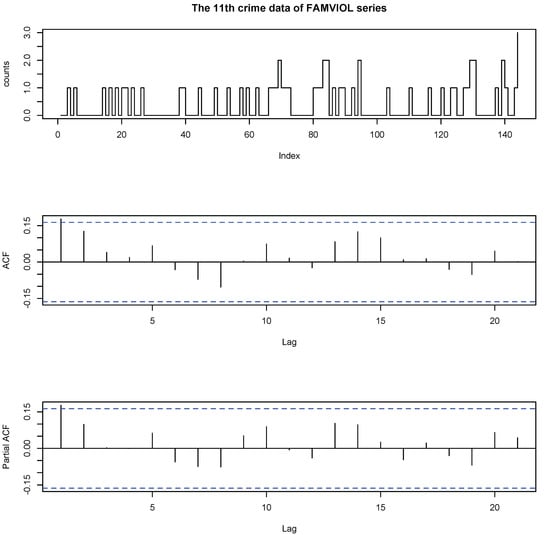

4.3. Underdispersed Case

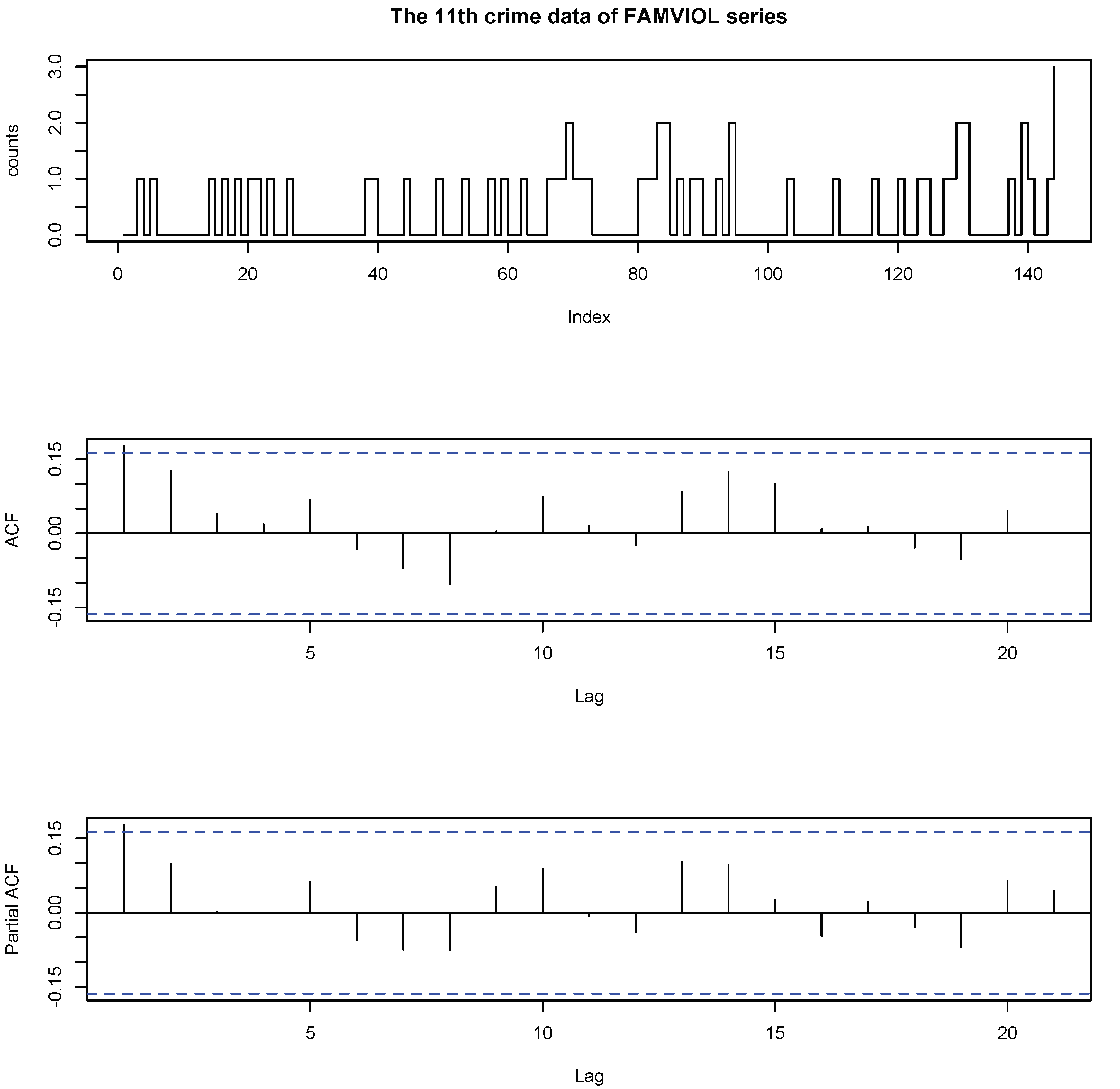

The 11th FAMVIOL data set contains crimes of family violence and can also be obtained from the Forecasting Principles website. Figure 3 shows the time series, the ACF and the PACF of the 11th FAMVIOL series. The maximum value of the data is 3 and the minimum is 0; the mean is 0.4027 and the variance is 0.3820. We use the procedures and criteria in Section 4.2.1 to compare different models. According to the mean and variance of the FAMVIOL data, we use the Poisson distribution, one of the most common choices, as the distribution of the innovations in the above models.

Figure 3.

The data, ACF and PACF of the 11th data set of the FAMVIOL series.

All results of the fitted models are given in Table 5. There is no evidence of any correlation within the residuals of all five models, which is also supported by the Ljung–Box statistic based on 15 lags. There are no significant differences among the models in the fitting and forecasting criteria. ETINAR(1), which has the largest AIC, performed the worst among these models.

Table 5.

Fitting results, AIC and some characteristics of FAMVIOL data.

Now let us have a brief summary. For the P1V data and stock data, which are overdispersed with slightly high-count data, the EB-INAR(1) of is obviously better than . For the FAMVIOL data, which is underdispersed with small-count data, the EB-INAR(1) with is also competitive.
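The dispersion labels used for the three data sets can be checked from the sample means and variances reported in Sections 4.2 and 4.3. The short Python sketch below computes the variance-to-mean ratio (index of dispersion); the dictionary layout and function name are illustrative choices.

```python
# (mean, variance) pairs as reported for each data set in Section 4.
summary = {
    "P1V": (4.3333, 7.4685),
    "EDE": (4.6933, 14.1665),
    "FAMVIOL": (0.4027, 0.3820),
}


def dispersion_index(mean, variance):
    """Variance-to-mean ratio: above 1 is overdispersion, below 1 underdispersion."""
    return variance / mean


# A ratio of exactly 1 (equidispersion) does not occur for these inputs.
labels = {
    name: "overdispersed" if dispersion_index(m, v) > 1 else "underdispersed"
    for name, (m, v) in summary.items()
}
```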

5. Conclusions

This paper proposes an EB-INAR(1) model based on the newly constructed EB thinning operator, which is an extension of the thinning-based INAR models. We gave the estimation method for parameters and established the asymptotic properties of the estimators for the innovation-free case. Based on the simulations and real data analysis, the EB-INAR(1) model can accurately and flexibly capture the dispersion features of the data, which shows its effectiveness and practicality. Compared with other models, such as ETINAR(1) and GSCINAR(1), our model is competitive.

We point out that many existing integer-valued models, such as those in [19,20,21,22,23], can be generalized by replacing the binomial thinning operator with the EB thinning operator. In addition, the first-order INAR model considered here can be extended to higher orders. Further research on these topics is left for future work.

Author Contributions

Conceptualization, Z.L. and F.Z.; methodology, F.Z.; software, Z.L.; validation, Z.L. and F.Z.; formal analysis, Z.L.; investigation, Z.L. and F.Z.; resources, F.Z.; data curation, Z.L.; writing—original draft preparation, Z.L.; writing—review and editing, F.Z.; visualization, Z.L.; supervision, F.Z.; project administration, F.Z.; funding acquisition, F.Z. All authors have read and agreed to the published version of the manuscript.

Funding

Zhu’s work is supported by National Natural Science Foundation of China grant numbers 11871027 and 11731015, and Cultivation Plan for Excellent Young Scholar Candidates of Jilin University.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Proposition 1.

Since 1–4 are easy to verify, we only prove 5. By the law of total covariance, we have

Thus, the autocorrelation function . □

Proof of Propositions 2 and 3.

Propositions 2 and 3 are similar to Theorems 1 and 2 in [8], which can be proved by verifying the regularity conditions of Theorems 3.1 and 3.2 in [24]. For instance, in the proof of Proposition 2, the partial derivatives have finite fourth moments in [24], which correspond to Assumption 2 in Section 3.1, in [24] is corresponds to in Step 1.1. Hence, Proposition 2 can be regarded as a direct conclusion of Theorem 3.2.

Besides, the proof of Proposition 3 is similar to Proposition 2; the procedure is almost the same as Theorem 3.2 in [24]. □

Proof of Proposition 4.

Similarly to the Theorem in [25], based on Theorem 3.2 in [14], we have

where

Based on the proof of the Theorem in [25], corresponds to and corresponds to in [25]. Based on the result of in Proposition 2 and in Proposition 3, we can obtain . □

Proof of Proposition 5.

Since the solutions (, ) about in (3.1) are real-valued and have a nonzero differential, Proposition 5 is an application of the $\delta$-method; see, for example, Theorem A on p. 122 of [26]. □

References

- Weiß, C.H. An Introduction to Discrete-Valued Time Series; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2018.

- Steutel, F.W.; van Harn, K. Discrete analogues of self-decomposability and stability. Ann. Probab. 1979, 7, 893–899.

- McKenzie, E. Some simple models for discrete variate time series. Water Resour. Bull. 1985, 21, 645–650.

- Al-Osh, M.; Alzaid, A. First-order integer-valued autoregressive (INAR(1)) processes. J. Time Ser. Anal. 1987, 8, 261–275.

- Schweer, S. A goodness-of-fit test for integer-valued autoregressive processes. J. Time Ser. Anal. 2016, 37, 77–98.

- Chen, C.W.S.; Lee, S. Bayesian causality test for integer-valued time series models with applications to climate and crime data. J. R. Stat. Soc. Ser. C. Appl. Stat. 2017, 66, 797–814.

- Zhu, R.; Joe, H. Negative binomial time series models based on expectation thinning operators. J. Statist. Plann. Inference 2010, 140, 1874–1888.

- Yang, K.; Kang, Y.; Wang, D.; Li, H.; Diao, Y. Modeling overdispersed or underdispersed count data with generalized Poisson integer-valued autoregressive processes. Metrika 2019, 82, 863–889.

- Aly, E.; Bouzar, N. Expectation thinning operators based on linear fractional probability generating functions. J. Indian Soc. Probab. Stat. 2019, 20, 89–107.

- Kang, Y.; Wang, D.; Yang, K.; Zhang, Y. A new thinning-based INAR(1) process for underdispersed or overdispersed counts. J. Korean Statist. Soc. 2020, 49, 324–349.

- Balasubramanian, K.; Viveros, R.; Balakrishnan, N. Some discrete distributions related to extended Pascal triangles. Fibonacci Quart. 1995, 33, 415–425.

- Du, J.; Li, Y. The integer-valued autoregressive (INAR(p)) model. J. Time Ser. Anal. 1991, 12, 129–142.

- Li, Q.; Zhu, F. Mean targeting estimator for the integer-valued GARCH(1,1) model. Statist. Pap. 2020, 61, 659–679.

- Nicholls, D.F.; Quinn, B.G. Random Coefficient Autoregressive Models: An Introduction; Springer: New York, NY, USA, 1982.

- Bakouch, H.S.; Ristić, M.M. A mixed thinning based geometric INAR(1) model. Metrika 2010, 72, 265–280.

- Bisaglia, L.; Gerolimetto, M. Model-based INAR bootstrap for forecasting INAR(p) models. Comput. Statist. 2019, 34, 1815–1848.

- Jung, R.C.; Liesenfeld, R.; Richard, J.-F. Dynamic factor models for multivariate count data: An application to stock-market trading activity. J. Bus. Econom. Statist. 2011, 29, 73–85.

- Zhu, F.; Liu, S.; Shi, L. Local influence analysis for Poisson autoregression with an application to stock transaction data. Stat. Neerl. 2016, 70, 4–25.

- Zhang, H.; Wang, D.; Zhu, F. Inference for INAR(p) processes with signed generalized power series thinning operator. J. Statist. Plann. Inference 2010, 140, 667–683.

- Zhang, H.; Wang, D.; Zhu, F. Generalized RCINAR(1) process with signed thinning operator. Comm. Statist. Theory Methods 2012, 41, 1750–1770.

- Qi, X.; Li, Q.; Zhu, F. Modeling time series of count with excess zeros and ones based on INAR(1) model with zero-and-one inflated Poisson innovations. J. Comput. Appl. Math. 2019, 346, 572–590.

- Liu, Z.; Li, Q.; Zhu, F. Random environment binomial thinning integer-valued autoregressive process with Poisson or geometric marginal. Braz. J. Probab. Stat. 2020, 34, 251–272.

- Qian, L.; Li, Q.; Zhu, F. Modelling heavy-tailedness in count time series. Appl. Math. Model. 2020, 82, 766–784.

- Klimko, L.A.; Nelson, P.I. On conditional least squares estimation for stochastic processes. Ann. Statist. 1978, 6, 629–642.

- Zhu, F.; Wang, D. Estimation of parameters in the NLAR(p) model. J. Time Ser. Anal. 2008, 29, 619–628.

- Serfling, R.J. Approximation Theorems of Mathematical Statistics; John Wiley & Sons, Inc.: New York, NY, USA, 1980.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).