Edge-Preserving Denoising of Image Sequences

Abstract

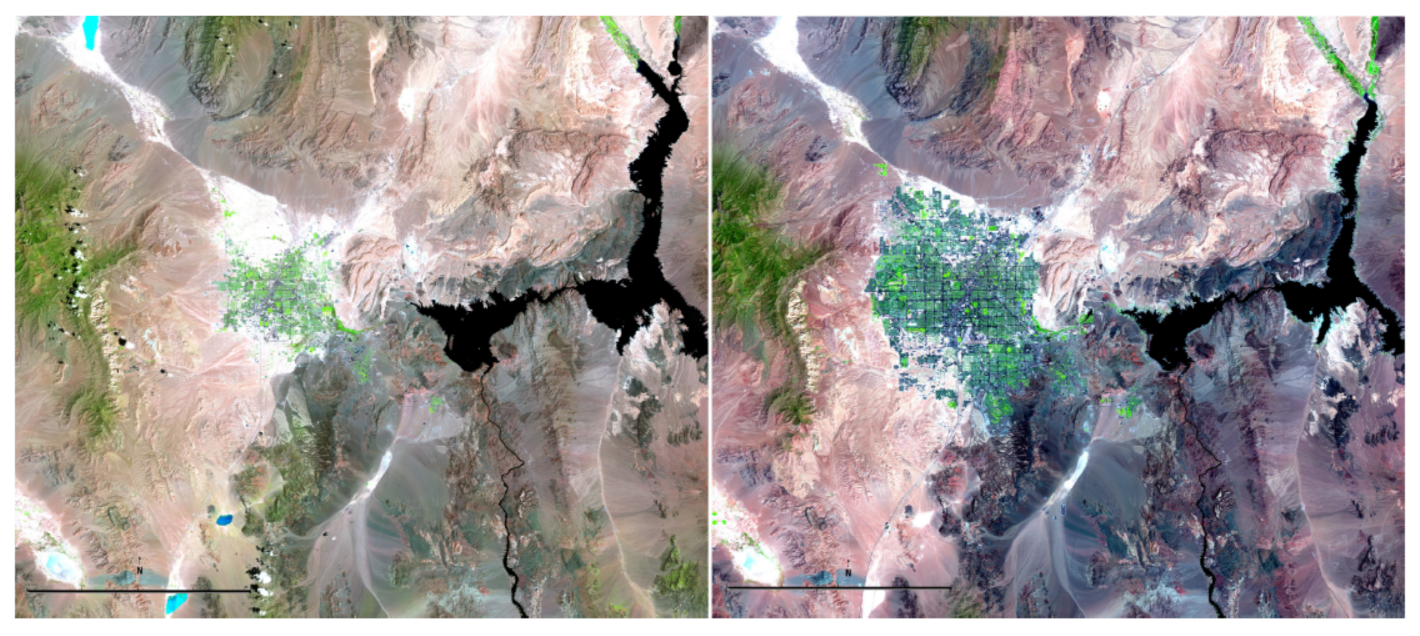

1. Introduction

2. Materials and Methods

2.1. JRA Model and Its Estimation

2.2. Parameter Selection

3. Results

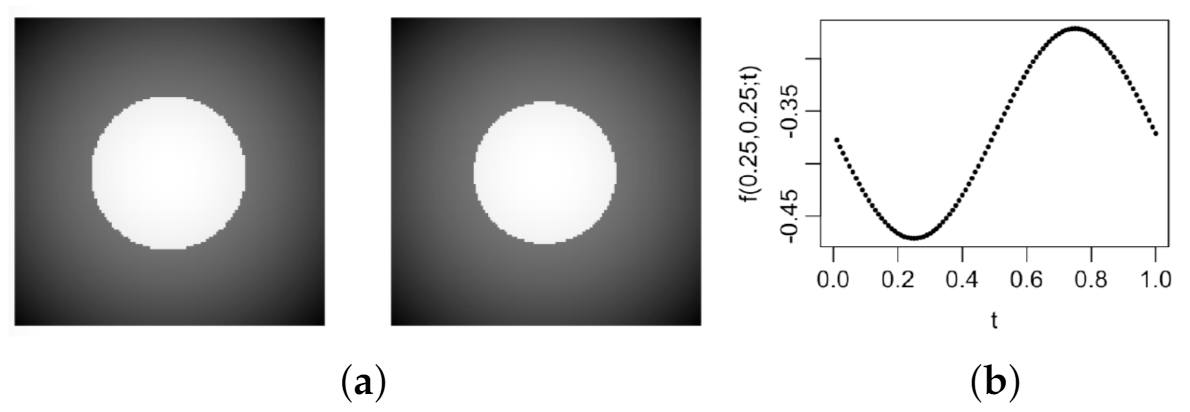

3.1. Statistical Properties

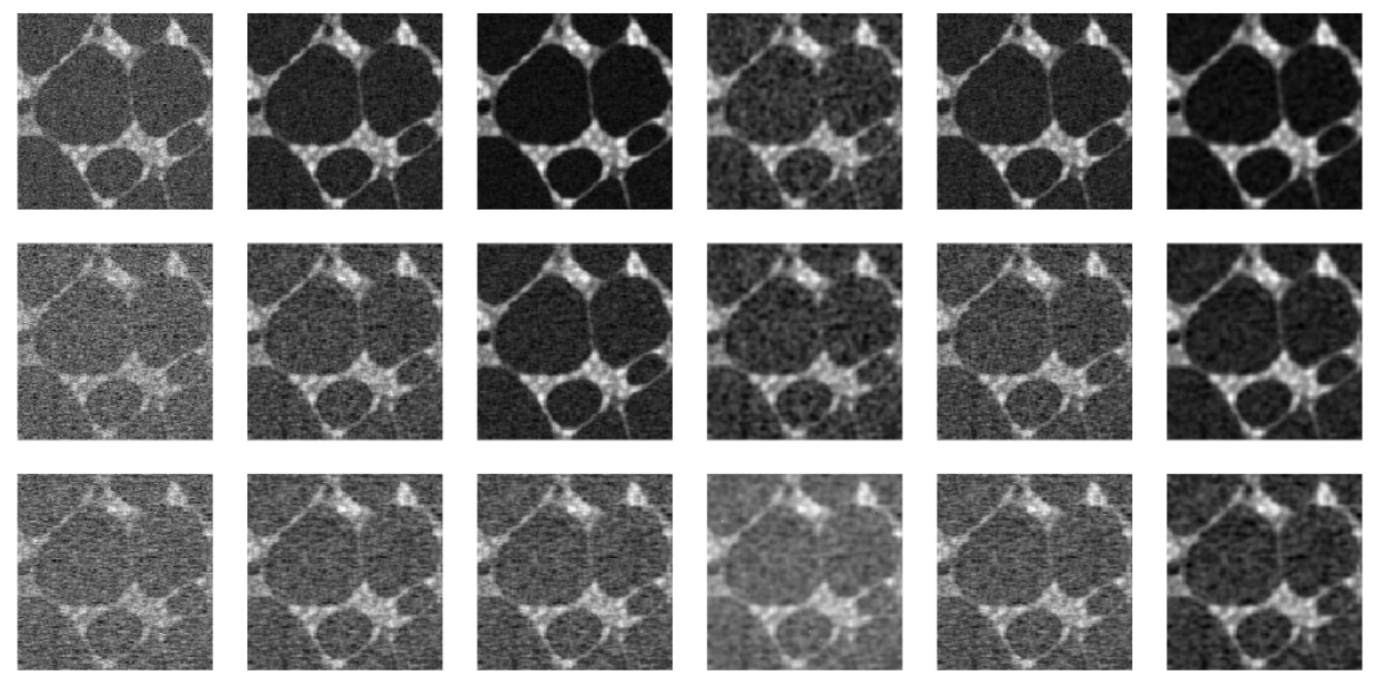

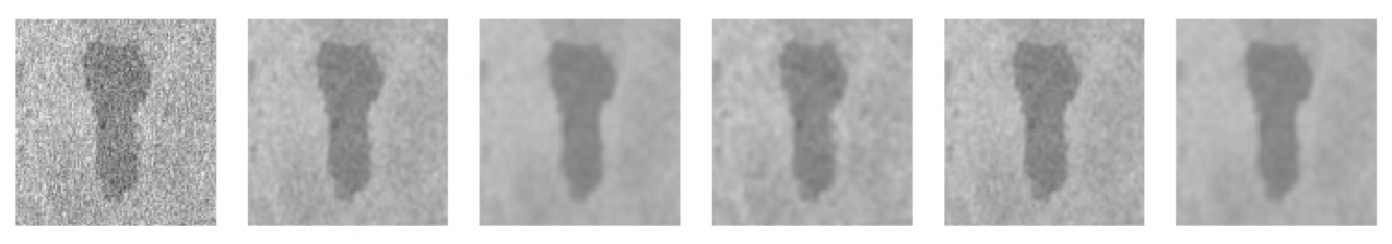

3.2. Numerical Studies

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Proof of Proposition 1

Appendix A.2. Proof of Theorem 1

Appendix A.3. Proof of Theorem 2

Appendix A.4. Proof of Theorem 3


| | | | | | |
|---|---|---|---|---|---|
| 0.1 | 0.1 | | | | |
| | | (0.03, 0.10, 0.05) | (0.03, 0.08, 0.025) | (0.03, 0.10, 0.05) | (0.02, 0.07, 0.05) |
| | | (0.04, 0.07, 0.025) | (0.03, 0.05, 0.025) | (0.03, 0.08, 0.025) | (0.02, 0.05, 0.025) |
| | 0.3 | | | | |
| | | (0.04, 0.10, 0.05) | (0.03, 0.07, 0.025) | (0.03, 0.10, 0.05) | (0.02, 0.07, 0.025) |
| | | (0.04, 0.08, 0.025) | (0.03, 0.06, 0.025) | (0.03, 0.08, 0.025) | (0.03, 0.04, 0.025) |
| | 0.5 | | | | |
| | | (0.03, 0.10, 0.05) | (0.02, 0.07, 0.025) | (0.03, 0.10, 0.05) | (0.02, 0.04, 0.025) |
| | | (0.04, 0.09, 0.025) | (0.03, 0.06, 0.025) | (0.03, 0.09, 0.025) | (0.03, 0.04, 0.025) |
| 0.2 | 0.1 | | | | |
| | | (0.04, 0.10, 0.025) | (0.03, 0.08, 0.025) | (0.04, 0.10, 0.025) | (0.03, 0.07, 0.025) |
| | | (0.04, 0.09, 0.025) | (0.03, 0.07, 0.025) | (0.04, 0.08, 0.025) | (0.03, 0.05, 0.025) |
| | 0.3 | | | | |
| | | (0.04, 0.10, 0.025) | (0.03, 0.08, 0.025) | (0.04, 0.10, 0.025) | (0.03, 0.07, 0.025) |
| | | (0.04, 0.11, 0.025) | (0.03, 0.08, 0.025) | (0.04, 0.09, 0.025) | (0.03, 0.07, 0.025) |
| | 0.5 | | | | |
| | | (0.04, 0.07, 0.025) | (0.02, 0.07, 0.025) | (0.04, 0.09, 0.025) | (0.02, 0.04, 0.025) |
| | | (0.05, 0.10, 0.025) | (0.04, 0.09, 0.025) | (0.04, 0.11, 0.025) | (0.03, 0.08, 0.025) |
| 0.3 | 0.1 | | | | |
| | | (0.05, 0.13, 0.025) | (0.04, 0.09, 0.025) | (0.04, 0.11, 0.025) | (0.03, 0.08, 0.025) |
| | | (0.05, 0.11, 0.025) | (0.04, 0.09, 0.025) | (0.04, 0.10, 0.025) | (0.03, 0.08, 0.025) |
| | 0.3 | | | | |
| | | (0.05, 0.13, 0.025) | (0.04, 0.09, 0.025) | (0.04, 0.11, 0.025) | (0.03, 0.08, 0.025) |
| | | (0.05, 0.14, 0.025) | (0.04, 0.10, 0.025) | (0.04, 0.13, 0.025) | (0.04, 0.09, 0.025) |
| | 0.5 | | | | |
| | | (0.04, 0.09, 0.05) | (0.02, 0.07, 0.05) | (0.04, 0.10, 0.025) | (0.02, 0.04, 0.05) |
| | | (0.06, 0.16, 0.025) | (0.05, 0.11, 0.025) | (0.05, 0.14, 0.025) | (0.04, 0.10, 0.025) |

| | | LLK-C | LLK | GLQ | NEW-C | NEW |
|---|---|---|---|---|---|---|
| 0.1 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |
| 0.2 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |
| 0.3 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |

| | | LLK-C | LLK | GLQ | NEW-C | NEW |
|---|---|---|---|---|---|---|
| 0.1 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |
| 0.2 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |
| 0.3 | 0.1 | | | | | |
| | 0.3 | | | | | |
| | 0.5 | | | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yi, F.; Qiu, P. Edge-Preserving Denoising of Image Sequences. Entropy 2021, 23, 1332. https://doi.org/10.3390/e23101332