1. Introduction

Cryptographic primitives are canonical and representative problems that capture the key challenges in understanding the fundamentals of security and privacy, and are essential building blocks for more sophisticated systems and protocols. There is much recent interest in using information theoretic tools to tackle classical cryptographic primitives [1,2,3,4,5,6,7]. Along this line, the focus of this work is on a widely studied primitive in cryptography: secure (multiparty) computation [8].

Secure computation refers to the problem where a number of users wish to securely compute a function of their inputs without revealing any unnecessary information. Interestingly, challenging as it seems, secure computation is always feasible, i.e., with at least three users, any function can be computed securely in the information theoretic sense [9,10]. We consider the most basic model, with honest-but-curious and non-colluding users, and only this model is considered in this work. We note, however, that many other variants have been considered in the literature, which may or may not be feasible depending on their specific assumptions and system parameters (see, e.g., [11]). What is largely open is how to perform secure computation optimally, i.e., efficient secure computation solutions are not known for most cases [6].

The main motivation of this work is to make progress towards constructing efficient secure computation codes. Towards this end, we focus on a minimal model of secure computation, introduced by Feige, Kilian, and Naor in 1994 [12]. In this model (see Figure 1), there are three users: Alice, Bob, and Carol. Alice and Bob have inputs $X_1$ and $X_2$, respectively, and wish to compute a function $f(X_1, X_2)$ at Carol without revealing any additional information about their inputs beyond what is revealed by the function itself. To do so, Alice and Bob share a common random variable $Z$ that is independent of the inputs, and send codewords $C_1$ and $C_2$ to Carol, respectively. From $(C_1, C_2)$, Carol can recover $f(X_1, X_2)$. Conditioned on $f(X_1, X_2)$, the codewords $(C_1, C_2)$ are independent of $(X_1, X_2)$, so no additional information is leaked.

The key feature of this formulation is that the communication protocol consists of only one codeword from each party that holds an input (thus it is non-interactive). In the general secure computation formulation [9,10], by contrast, interactive protocols are allowed and typically used. Simple as it seems, this minimal secure computation problem preserves most of the challenging features of general secure computation. In particular, feasibility results remain strong and optimality results remain weak: any function f can be computed securely, while the construction of efficient codes remains open in general [12,13]. In this work, we focus exclusively on the original three-party formulation of minimal secure computation [12]. We note that many interesting variants have been studied in the literature, sometimes under different names to highlight different assumptions. Examples include more than three parties [14,15,16], colluding parties [17,18,19,20], other security notions [21], and unresponsive parties [22].

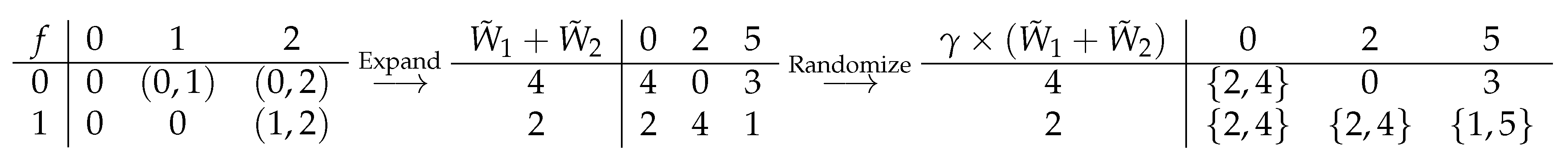

The main contribution of this work is a novel coding scheme that relies on algebraic structures to ensure correctness and security. To illustrate the idea of our coding scheme, let us first consider an example. Suppose Alice and Bob each hold a ternary input, $X_1, X_2 \in \{0, 1, 2\}$, and wish to compute whether $X_1$ is equal to $X_2$, i.e., $f(X_1, X_2) = \text{Yes}$ if $X_1 = X_2$, and $f(X_1, X_2) = \text{No}$ otherwise.

As the equality function may not be easily computed in a secure manner, we first expand it to a linear function so that it becomes simpler to deal with. As shown in Figure 2, we use the linear function $X_1 - X_2$ over the finite field $\mathbb{F}_3$ (equivalent to operations modulo 3). For this expansion, we require that the original function can be fully recovered from the expanded function. This is easily verified for this example, where $X_1 - X_2 \neq 0$ if and only if $X_1$ is not equal to $X_2$.

This expansion step does not solve the secure computation problem, because additional information may be leaked. For example, here Carol should only learn whether $X_1 \neq X_2$, and is not supposed to learn whether $X_1 - X_2$ is 1 or 2. To prevent this leakage, we invoke another step of randomization so that the information leaked by the expanded function becomes confusable and thus protected. For this equality function example, when $X_1 \neq X_2$, we wish to make the result equally likely to be 1 or 2. This is realized by multiplying $X_1 - X_2$ by $\gamma$, where $\gamma$ is uniform over $\{1, 2\}$. The multiplication operation is also over $\mathbb{F}_3$. Thus,
$$\gamma (X_1 - X_2) = \begin{cases} 0, & \text{if } X_1 = X_2, \\ \text{uniform over } \{1, 2\}, & \text{if } X_1 \neq X_2. \end{cases}$$
Note that $2 \times 2 = 4 = 1$ over $\mathbb{F}_3$. After this randomization step, the randomized expanded function does not reveal any additional information beyond the original equality function.

The above expand-and-randomize procedure can be easily converted to a distributed secure computation protocol. In particular, Alice and Bob share a common random variable $Z = (\gamma, z)$, where $\gamma$ and $z$ are independent, $\gamma$ is uniform over $\{1, 2\}$, and $z$ is uniform over $\mathbb{F}_3$. The codewords sent by Alice and Bob to Carol are
$$C_1 = \gamma X_1 + z, \qquad C_2 = \gamma X_2 + z.$$
To decode $f(X_1, X_2)$ with no error, Carol subtracts $C_2$ from $C_1$, obtaining $C_1 - C_2 = \gamma (X_1 - X_2)$. She declares that $X_1$ is equal to $X_2$ if and only if $C_1 - C_2 = 0$.

To see why perfect security holds, note that $(C_1, C_2)$ is invertible to $(C_1 - C_2, C_2)$. Neither $C_1 - C_2$ nor $C_2$ (protected by the independent uniform noise $z$) leaks any information. Specifically, the joint distribution of $(C_1 - C_2, C_2)$ remains the same for all $(X_1, X_2)$ pairs for which $f(X_1, X_2)$ is the same:

- When $X_1$ is equal to $X_2$, i.e., $f(X_1, X_2) = \text{Yes}$, the pairs $(C_1 - C_2, C_2)$ are identically distributed: $C_2$ is uniform over $\mathbb{F}_3$ and $C_1 - C_2$ is equal to 0.
- The same observation holds for all $(X_1, X_2)$ pairs where $X_1$ is not equal to $X_2$: $C_1 - C_2$ is uniform over $\{1, 2\}$, and $C_2$ is independent of $C_1 - C_2$ and uniform over $\mathbb{F}_3$.

Interestingly, this secure computation code is also communication optimal, i.e., the sizes of $C_1$ and $C_2$ must be no less than $\log_2 3$ bits each (required even if there is no security constraint).
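To make the $\mathbb{F}_3$ example concrete, here is a small Python sketch. It is our own illustration, not code from the paper. It enumerates every realization of the shared randomness $Z = (\gamma, z)$ and checks both constraints exhaustively. The names `codewords`, `decode`, and `view_distribution` are hypothetical. The code assumes codewords of the form $C_1 = \gamma X_1 + z$, $C_2 = \gamma X_2 + z$, with Carol decoding from $C_1 - C_2$.

```python
from collections import Counter
from itertools import product

Q = 3                    # work in F_3, i.e., integers modulo 3
GAMMAS = [1, 2]          # randomizer gamma, uniform over {1, 2}
NOISE = range(Q)         # noise z, uniform over F_3

def codewords(x1, x2, gamma, z):
    """Codewords C1 = gamma*X1 + z and C2 = gamma*X2 + z over F_3."""
    return (gamma * x1 + z) % Q, (gamma * x2 + z) % Q

def decode(c1, c2):
    """Carol declares 'Yes' (X1 == X2) iff C1 - C2 == 0 over F_3."""
    return "Yes" if (c1 - c2) % Q == 0 else "No"

def f(x1, x2):
    return "Yes" if x1 == x2 else "No"

def view_distribution(x1, x2):
    """Exact distribution of Carol's view (C1, C2), as counts over all (gamma, z)."""
    return Counter(codewords(x1, x2, g, z) for g, z in product(GAMMAS, NOISE))

inputs = list(product(range(Q), repeat=2))

# Correctness: Carol decodes f(X1, X2) for every input and every realization of Z.
correct = all(decode(*codewords(x1, x2, g, z)) == f(x1, x2)
              for (x1, x2) in inputs for g, z in product(GAMMAS, NOISE))

# Security: input pairs with the same f-value induce the same view distribution.
views_by_output = {}
for x1, x2 in inputs:
    views_by_output.setdefault(f(x1, x2), set()).add(
        frozenset(view_distribution(x1, x2).items()))
secure = all(len(views) == 1 for views in views_by_output.values())

print(correct, secure)  # True True
```

As a quick experiment, replacing `GAMMAS = [1, 2]` with `[1]` (no randomization) keeps `correct` true but makes `secure` false. The reason is that $C_1 - C_2$ then reveals $X_1 - X_2$ itself.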

A closer inspection of the above scheme reveals that the key is to find an expanded function whose outputs, corresponding to the same original function output, can be randomized to be fully confusable. The first main result of this work characterizes the structural properties of such confusable sets over the finite field $\mathbb{F}_q$, where q is a prime power. The confusable function outputs turn out to be characterized by the property that their discrete logarithms (in the exponential representation of finite field elements) have the same remainder in modular arithmetic. Details are presented in Section 3.1.

As it turns out, the expand-and-randomize coding scheme is not limited to finite fields. As our second main result, we implement it over the ring of integers modulo n, $\mathbb{Z}_n$. The ring is equipped with two operations, addition and multiplication, both defined in modulo n arithmetic. Let us consider an example to illustrate how $\mathbb{Z}_n$ is used. Consider the selected-switch function in Figure 3. Alice has a binary input, $X_1 \in \{0, 1\}$. Bob has a ternary input, $X_2 \in \{0, 1, 2\}$

. When

, the switch function

f is OFF and the output is 0 (we may think that the output is not connected to the input, so it is a constant). When

, the switch function

f is ON and the output is equal to the input vector (all information about

goes through).

Following the expand-and-randomize coding paradigm, we first expand the original function to the addition function over $\mathbb{Z}_6$ such that the original function can be fully recovered. Note that to facilitate the construction of the expanded function, here we perform an invertible transformation on the inputs,

. The expanded function reveals more information than allowed when its output is 2 or 4. To protect this information, a randomization step is realized by multiplying the expanded function by $\gamma$, which is uniform over $\{1, 5\}$. Now, $2 \times 5 = 4$ modulo 6, and $4 \times 5 = 2$ modulo 6. Therefore, the expanded function after randomization can be used to produce the following secure computation protocol. The codewords are

, where $Z = (\gamma, z)$, $\gamma$ and $z$ are independent, $\gamma$ is uniform over $\{1, 5\}$, and $z$ is uniform over $\mathbb{Z}_6$

. To decode, Carol will compute

. Comparing the original function

f and the randomized expanded function

, it is easy to construct the decoding rule based on

(see

Figure 3). Following a straightforward argument as presented above, we may show that the correctness and security constraints are satisfied. Details will be presented in Theorem 1.

From this example, we find that the crux of the scheme is a partition of the elements of $\mathbb{Z}_n$ into several disjoint confusable sets with the following property. When any two elements of a confusable set $\mathcal{S}$ are multiplied by $\gamma$, which is uniform over a carefully chosen set ($\gamma$ is referred to as the randomizer), they produce identically distributed values. Specifically, both products are uniformly distributed over the confusable set $\mathcal{S}$ itself.

The main technical challenge is to understand which sets of elements can serve as the randomizer $\gamma$, and how the ring $\mathbb{Z}_n$ is partitioned into disjoint confusable sets such that security is guaranteed. For this purpose, we require a few notions from group theory and number theory. Details are presented in Section 3.2. To get a glimpse, consider the above example (see (5)). There, the randomizer $\gamma$ is drawn from the set of integers that are coprime with 6 (1 and 5 share no common divisor with 6 other than 1). The confusable sets are the sets of integers that have the same greatest common divisor with 6 (e.g., $\{2, 4\}$, as $\gcd(2, 6) = \gcd(4, 6) = 2$).
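The gcd-based partition of $\mathbb{Z}_6$ can be checked mechanically. The sketch below is an illustration; `confusable` and `RANDOMIZER` are our own names. It groups the elements of $\mathbb{Z}_6$ by their gcd with 6. It then verifies that multiplying any element by $\gamma$, uniform over the integers coprime with 6, hits every member of its group equally often.

```python
from collections import Counter
from math import gcd

N = 6
RANDOMIZER = [x for x in range(1, N) if gcd(x, N) == 1]   # coprime with 6: [1, 5]

# Group the elements of Z_6 by their gcd with 6 (gcd(0, 6) = 6, so 0 is on its own).
confusable = {}
for x in range(N):
    confusable.setdefault(gcd(x, N), []).append(x)

for d, members in sorted(confusable.items()):
    for s in members:
        counts = Counter(g * s % N for g in RANDOMIZER)
        # gamma * s must stay inside the group and hit each member equally often.
        assert set(counts) == set(members) and len(set(counts.values())) == 1
    print(d, members)
# 1 [1, 5]
# 2 [2, 4]
# 3 [3]
# 6 [0]
```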

Our proposed coding scheme is inspired by two examples (the binary logical AND function and the ternary comparison function) presented in Appendix A and Appendix B of the original minimal secure computation paper [12], where modular arithmetic over a prime number p is used. Note that for a prime p, the algebraic operations (addition and multiplication) in both the finite field $\mathbb{F}_p$ and the ring of integers modulo p, $\mathbb{Z}_p$, are modular arithmetic. Along this line, our work can be viewed as a generalization of the examples from [12] to a general class of achievable schemes. These schemes distill the underlying algebraic structure and work over finite fields and rings of integers modulo n with general (non-prime) cardinality.

2. Problem Statement

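Consider a pair of inputs $(X_1, X_2) \in \mathcal{X}_1 \times \mathcal{X}_2$ and a function $f: \mathcal{X}_1 \times \mathcal{X}_2 \to \mathcal{Y}$. We assume the function f is discrete, and use $|\mathcal{Y}|$ to denote the cardinality of the range of f. $X_1$ is available to Alice and $X_2$ is available to Bob. Alice and Bob also both hold a common random variable Z whose distribution does not depend on $(X_1, X_2)$.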

Alice and Bob wish to compute $f(X_1, X_2)$ securely. To this end, Alice sends a codeword $C_1$ and Bob sends a codeword $C_2$ to Carol. $C_1$ is a function of $X_1$ and Z, and has $R_1$ bits. $C_2$ is a function of $X_2$ and Z, and has $R_2$ bits. The function f is known to Alice, Bob, and Carol. As our proposed code has a fixed length, here we only define fixed-length codes, i.e., $R_1$ ($R_2$) does not depend on the value of $(X_1, Z)$ ($(X_2, Z)$). In general, variable-length codes might have a lower expected length (see Remark 3).

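From $(C_1, C_2)$, Carol can recover $f(X_1, X_2)$ with no error, i.e., $H(f(X_1, X_2) \mid C_1, C_2) = 0$. This is referred to as the correctness constraint. To ensure Carol does not learn anything beyond $f(X_1, X_2)$, the following security constraint must be satisfied:
$$I(X_1, X_2; C_1, C_2 \mid f(X_1, X_2)) = 0.$$

Equivalently, the security constraint can be stated as follows. Consider any two input pairs $(x_1, x_2)$ and $(\tilde{x}_1, \tilde{x}_2)$ with $f(x_1, x_2) = f(\tilde{x}_1, \tilde{x}_2)$. The conditional distribution of $(C_1, C_2)$ given $(X_1, X_2) = (x_1, x_2)$ must be identical to that given $(X_1, X_2) = (\tilde{x}_1, \tilde{x}_2)$.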

A rate tuple $(R_1, R_2)$ is said to be achievable if there exists a secure computation scheme for which the correctness and security constraints are satisfied. The closure of the set of all achievable rate tuples is called the optimal rate region.

The main result of this work is a new achievable scheme for secure computation. The new scheme works for any joint distribution of $(X_1, X_2)$, so we do not specify this joint distribution explicitly. Further, for simplicity, we introduce the problem statement as a scalar coding problem. Concrete distributions and L-length extensions (block inputs) will be considered when they play more significant roles in the results, e.g., when we discuss $\epsilon$-error schemes in Section 4.2 and converse results in Section 3.3.

3. The Main Coding Scheme

In this section, we present a novel secure computation code that implements the expand-and-randomize scheme over the finite field $\mathbb{F}_q$ and the ring of integers modulo n, denoted as $\mathbb{Z}_n$. Let us start with relevant definitions.

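Definition 1 (Confusable Sets and Randomizer). Sets $\mathcal{S}_1, \mathcal{S}_2, \ldots, \mathcal{S}_K$ are called confusable sets if two conditions hold. First, they form a partition of all elements of $\mathbb{F}_q$ or $\mathbb{Z}_n$. Second, there exists a uniform random variable $\gamma$ over a set $\Gamma \subseteq \mathbb{F}_q$ or $\Gamma \subseteq \mathbb{Z}_n$ such that, for every $k \in \{1, \ldots, K\}$ and every $s \in \mathcal{S}_k$, $\gamma s$ is uniform over $\mathcal{S}_k$. $\gamma$ is called the randomizer.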
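Definition 1 can be turned into a direct test. The following sketch is restricted to $\mathbb{Z}_n$, which for prime n coincides with the prime field $\mathbb{F}_n$. Extension fields $\mathbb{F}_{p^m}$ with $m > 1$ would need polynomial arithmetic instead. The sketch checks the partition condition and the uniformity condition. `is_confusable_partition` is a hypothetical helper name, not something defined in the paper.

```python
from collections import Counter

def is_confusable_partition(sets, randomizer, n):
    """Check Definition 1 over Z_n (for prime n, this is the prime field F_n).

    sets:       candidate confusable sets (iterables of residues mod n)
    randomizer: the values that gamma is uniform over
    """
    elements = sorted(x for S in sets for x in S)
    if elements != list(range(n)):
        return False  # the sets do not partition Z_n
    for S in sets:
        for s in S:
            # Distribution of gamma * s (mod n) as counts over the randomizer values.
            counts = Counter(g * s % n for g in randomizer)
            if set(counts) != set(S) or len(set(counts.values())) != 1:
                return False  # gamma * s is not uniform over S
    return True

print(is_confusable_partition([{0}, {1, 2}], [1, 2], 3))                # True
print(is_confusable_partition([{0}, {1}, {2}], [1, 2], 3))              # False
print(is_confusable_partition([{0}, {1, 5}, {2, 4}, {3}], [1, 5], 6))   # True
```

The second call fails because $2 \cdot 1 = 2$ leaves the candidate set $\{1\}$.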

The requirement in Definition 1 is stronger than what is needed for security. It suffices to have identical (instead of uniform) distributions over some disjoint sets (instead of over the confusable set itself). However, for our proposed scheme, it turns out that these relaxations do not lead to improved achievable rate regions, so they are not considered for simplicity. The notions introduced in Definition 1 are closely related to group actions (and orbits) studied in group theory [23]. Along this line, the main effort of this work is to identify a class of group actions that can be used in secure computation, and to prove that the class found indeed forms valid group actions. Our proof is relatively elementary, relying on basic number theoretic properties; the statements could alternatively be proved using group actions. We view our main findings as identifying which operations form group actions and their applicability to secure computation (while the proof of validity can be done in various ways). Another related concept that has been studied in cryptography is randomized polynomials [24,25], which also rely on extra randomization to expand the deterministic function to be computed. Our work uses different randomization techniques.

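Definition 2 (Feasible Expanded Function). For a function $f(X_1, X_2)$, a function $X_1' + X_2'$ over $\mathbb{F}_q$ or $\mathbb{Z}_n$ is called a feasible expanded function if the following three mappings are all invertible:

- the mapping between $X_1$ and $X_1'$;
- the mapping between $X_2$ and $X_2'$;
- the mapping between $f(X_1, X_2)$ and the index of the confusable set to which $X_1' + X_2'$ belongs.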

For an example of a feasible expanded function (for the equality function with ternary inputs) over $\mathbb{F}_3$, see Figure 2. Specifically, $X_1' + X_2' = X_1 - X_2$, where $X_1' = X_1$ and $X_2' = -X_2 = 2X_2$. $\gamma$ is uniform over $\{1, 2\}$.

and

are both uniformly distributed over

.

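The confusable sets are $\{0\}$ and $\{1, 2\}$. f is the equality function, with range $\{\text{Yes}, \text{No}\}$. The Yes output of f is mapped to the confusable set $\{0\}$ over $\mathbb{F}_3$, and the No output of f is mapped to the confusable set $\{1, 2\}$ over $\mathbb{F}_3$. For an example of a feasible expanded function over $\mathbb{Z}_6$, see Figure 3.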

A feasible expanded function as defined above naturally leads to a correct and secure computation scheme, presented in the following theorem.

Theorem 1. For any function $f(X_1, X_2)$, if we have a feasible expanded function $X_1' + X_2'$ over $\mathbb{F}_q$ or $\mathbb{Z}_n$, then the following computation code is both correct and secure:
$$C_1 = \gamma X_1' + z, \qquad C_2 = \gamma X_2' - z,$$
where $Z = (\gamma, z)$, $\gamma$ is the randomizer, $z$ and $\gamma$ are independent, and $z$ is uniform over $\mathbb{F}_q$ or $\mathbb{Z}_n$. Specifically, in this scheme, Alice and Bob each send one symbol from $\mathbb{F}_q$ or $\mathbb{Z}_n$ to Carol.

Proof of Theorem 1. The proof of correctness and security follows in a straightforward manner from the definitions of the confusable sets, the randomizer, and the feasible expanded function.

First, we consider the correctness constraint. To recover $f(X_1, X_2)$ with no error, Carol may compute $C_1 + C_2 = \gamma (X_1' + X_2')$. From this quantity, Carol can uniquely identify the index of the confusable set, which is invertible to the original function output. Note that by the definition of the confusable sets and the randomizer, multiplying $X_1' + X_2'$ by $\gamma$ does not change the confusable set index.

Second, we consider the security constraint (6). Consider any $(X_1, X_2)$ pairs that produce the same $f(X_1, X_2)$ output; we show that the resulting $(C_1, C_2)$ are identically distributed. To see this, note that $(C_1, C_2)$ is invertible to $(C_1 + C_2, C_2)$. By the definition of the confusable sets, $C_1 + C_2 = \gamma (X_1' + X_2')$ is uniform over the confusable set that corresponds to $f(X_1, X_2)$. Due to the uniformity and independence of $z$, $C_2$ is independent of $C_1 + C_2$ and is uniform over $\mathbb{F}_q$ or $\mathbb{Z}_n$. Therefore, $(C_1 + C_2, C_2)$ is always uniform over the same set and is thus identically distributed, and so is $(C_1, C_2)$. The proof is complete. □
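Because the proof of Theorem 1 is a statement about exact distributions, it can be checked by enumeration for small alphabets. The sketch below makes several choices of its own:

- the code $C_1 = \gamma X_1' + z$, $C_2 = \gamma X_2' - z$ over $\mathbb{Z}_n$, so that Carol decodes from $C_1 + C_2 = \gamma (X_1' + X_2')$ (this sign convention is an assumption);
- the helper names;
- the `set_index` lookup table, which maps each element of $\mathbb{Z}_n$ to the f-value of its confusable set.

It is instantiated with the equality example over $\mathbb{F}_3$, with $X_1' = X_1$ and $X_2' = 2X_2 \bmod 3$.

```python
from collections import Counter
from itertools import product

def theorem1_views(n, x1_map, x2_map, randomizer):
    """For each input pair, Carol's view (C1, C2) as counts over all (gamma, z), under the
    assumed code C1 = gamma*X1' + z, C2 = gamma*X2' - z (mod n), z uniform over Z_n."""
    views = {}
    for x1, x2 in product(x1_map, x2_map):
        a, b = x1_map[x1], x2_map[x2]          # transformed inputs X1', X2'
        views[(x1, x2)] = Counter(((g * a + z) % n, (g * b - z) % n)
                                  for g in randomizer for z in range(n))
    return views

def check_theorem1(n, x1_map, x2_map, randomizer, f, set_index):
    """Return (correct, secure); set_index[y] is the f-value of the confusable set of y."""
    views = theorem1_views(n, x1_map, x2_map, randomizer)
    # Correctness: C1 + C2 = gamma*(X1' + X2') must land in the set labelled f(X1, X2).
    correct = all(set_index[(c1 + c2) % n] == f(x1, x2)
                  for (x1, x2), view in views.items() for (c1, c2) in view)
    # Security: input pairs with the same f-value must give identical view distributions.
    by_output = {}
    for (x1, x2), view in views.items():
        by_output.setdefault(f(x1, x2), set()).add(frozenset(view.items()))
    secure = all(len(v) == 1 for v in by_output.values())
    return correct, secure

# Equality on ternary inputs over F_3: X1' = X1 and X2' = 2*X2 = -X2 (mod 3).
def eq(x1, x2):
    return "Yes" if x1 == x2 else "No"

set_index = {0: "Yes", 1: "No", 2: "No"}       # confusable sets {0} and {1, 2}
x1_map = {x: x for x in range(3)}
x2_map = {x: 2 * x % 3 for x in range(3)}
print(check_theorem1(3, x1_map, x2_map, [1, 2], eq, set_index))  # (True, True)
```

Passing `[1]` as the randomizer instead yields `(True, False)`: correctness survives, but $C_1 + C_2$ then exposes $X_1 - X_2$.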

The coding scheme in Theorem 1 relies on the structure of the confusable sets and the randomizer upon which feasible expanded functions are built. Thus, it is crucial to understand this structure, i.e., which sets of elements can be used as the randomizer and how the algebraic object is partitioned into confusable sets. This structure problem is addressed next through algebraic characterizations. The finite field case is considered in Section 3.1, and the case of the ring of integers modulo n is considered in Section 3.2.

3.1. Finite Field

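We first recall some basic facts about finite fields (refer to standard textbooks such as [26]). A finite field $\mathbb{F}_q$ exists if and only if $q = p^n$, where p is a prime and n is a positive integer. $\mathbb{F}_q$ has q elements. Any two fields with q elements are isomorphic, so $\mathbb{F}_q$ is referred to as the finite field of order q. The q elements of $\mathbb{F}_q$ are the polynomials $a_{n-1} x^{n-1} + \cdots + a_1 x + a_0$, where $a_0, \ldots, a_{n-1} \in \mathbb{F}_p$. The addition and multiplication operations over $\mathbb{F}_q$ are defined modulo $h(x)$, where $h(x)$ is an irreducible polynomial of degree n over $\mathbb{F}_p$; such a polynomial always exists. The non-zero elements of $\mathbb{F}_q$ form a multiplicative group, denoted as $\mathbb{F}_q^*$. $\mathbb{F}_q^*$ is a cyclic group of order $q - 1$ that can be generated by a primitive element $g$. Denote $\mathbb{F}_q^* = \{g^0, g^1, \ldots, g^{q-2}\}$.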
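As a concrete instance of the polynomial representation, the sketch below builds $\mathbb{F}_8$ as $\mathbb{F}_2[x]$ modulo $x^3 + x + 1$. This is a standard irreducible choice; the choice of field and polynomial is ours, not the paper's. The sketch lists the powers of $x$, confirming that $x$ is a primitive element. Elements are encoded as 3-bit integers (bit i holds the coefficient of $x^i$), and `gf8_mul` is a hypothetical helper.

```python
def gf8_mul(a, b, modulus=0b1011):
    """Multiply two elements of F_8 = F_2[x]/(x^3 + x + 1), encoded as 3-bit integers."""
    result = 0
    while b:
        if b & 1:
            result ^= a          # addition over F_2 is XOR
        b >>= 1
        a <<= 1
        if a & 0b1000:           # degree reached 3: reduce using x^3 = x + 1
            a ^= modulus
    return result

def powers(g):
    """Successive powers g^0, g^1, ... up to (but excluding) the return to 1."""
    out, x = [1], g
    while x != 1:
        out.append(x)
        x = gf8_mul(x, g)
    return out

x = 0b010                        # the polynomial x
print(powers(x))                 # [1, 2, 4, 3, 6, 7, 5]: all 7 nonzero elements
```

Reading off the list, for example, $x^3 = 3$ (i.e., $x + 1$) and $x^6 = 5$ (i.e., $x^2 + 1$).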

Example 1. The finite field can be constructed by addition and multiplication modulo . The multiplicative group can be generated by .

Equipped with the above results (in particular, the cyclic property of the multiplicative group $\mathbb{F}_q^*$), we are now ready to state, in the following theorem, the algebraic characterization of the confusable sets and the randomizer over $\mathbb{F}_q$.

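Theorem 2. Consider $\mathbb{F}_q$, where $q = p^n$, p is a prime, n is a positive integer, and g is a primitive element of $\mathbb{F}_q$. The confusable sets and the randomizer can be chosen as follows. Consider any divisor d of $q - 1$, i.e., $(q-1)/d$ is an integer. The confusable sets are
$$\{0\}, \qquad \mathcal{S}_i = \left\{ g^{\,i + \ell d} : \ell = 0, 1, \ldots, \tfrac{q-1}{d} - 1 \right\}, \quad i = 0, 1, \ldots, d - 1,$$
and the randomizer $\gamma$ is uniform over $\left\{ g^{kd} : k = 0, 1, \ldots, \tfrac{q-1}{d} - 1 \right\}$.

Remark 1. In words, the elements of a confusable set are such that their discrete logarithms have the same remainder modulo a divisor of $q - 1$.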
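Theorem 2 is easy to instantiate in a prime field, where field arithmetic is ordinary arithmetic modulo p. The sketch below uses its own example parameters: p = 7, g = 3 (a primitive element modulo 7), and d = 2. The helper name is hypothetical. For $q = p^n$ with $n > 1$, the modular `pow` would have to be replaced by extension-field arithmetic.

```python
def theorem2_prime_field(p, g, d):
    """Confusable sets and randomizer of Theorem 2 for a prime field F_p
    (g: primitive element mod p, d: a divisor of p - 1)."""
    assert (p - 1) % d == 0, "d must divide p - 1"
    b = (p - 1) // d
    # S_i collects the nonzero elements whose discrete log is congruent to i modulo d.
    sets = [{0}] + [{pow(g, i + l * d, p) for l in range(b)} for i in range(d)]
    # The randomizer gamma is uniform over {g^(k*d) : k = 0, ..., b - 1}.
    gamma = [pow(g, k * d, p) for k in range(b)]
    return sets, gamma

sets, gamma = theorem2_prime_field(7, 3, 2)
print([sorted(s) for s in sets])  # [[0], [1, 2, 4], [3, 5, 6]]
print(gamma)                      # [1, 2, 4]
```

Here the two nonzero confusable sets are the even-exponent and odd-exponent powers of 3. The randomizer reuses the even powers.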

Before we prove Theorem 2, let us first understand it through an example and use it to securely compute a function.

Example 2. Consider . A primitive element of is 3. Setting , the following confusable sets are given by Theorem 2.Consider the function shown in Figure 4, for which a feasible expanded function can be built upon the confusable sets given above. While the primitive element

g of $\mathbb{F}_q$ is guaranteed to exist, there is no analytic formula for it, and finding it computationally is difficult in general. Further, given the polynomial representation of g, it is generally non-trivial to determine the minimum field size q such that there exists a feasible expanded function over $\mathbb{F}_q$ for a specific function f. A list of confusable sets for finite fields $\mathbb{F}_q$ with small q is given in Figure A1 (see Appendix A).

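Proof of Theorem 2. To verify that the definition of the confusable sets is satisfied, we only need to show that, for every confusable set $\mathcal{S}$ and every $s \in \mathcal{S}$, $\gamma s$ is uniform over $\mathcal{S}$. Denote $b = (q - 1)/d$. We consider two cases.

When $s = 0$, $\gamma s = 0$, so that $\mathcal{S} = \{0\}$ and $\gamma s$ is (trivially) uniform over $\mathcal{S}$.

When $s \neq 0$, consider any element from $\mathcal{S}_i$, e.g., $s = g^{i + \ell d}$, and write $\gamma = g^{kd}$ with k uniform over $\{0, 1, \ldots, b - 1\}$. We have
$$\{\gamma s : k = 0, \ldots, b - 1\} = \left\{ g^{\,i + ((k + \ell) \bmod b)\, d} : k = 0, \ldots, b - 1 \right\} = \{ g^{\,i + k' d} : k' = 0, \ldots, b - 1 \} = \mathcal{S}_i. \tag{18}$$
Equation (18) follows from two facts. The first is that $g^{q-1} = 1$. The second is the observation that any b consecutive integers form the same set under modulo b, i.e., $\{a, a + 1, \ldots, a + b - 1\} \bmod b = \{0, 1, \ldots, b - 1\}$. As k is uniform and distinct values of k give distinct values of $(k + \ell) \bmod b$, $\gamma s$ is uniform (over $\mathcal{S}_i$) as well. □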
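The argument in the proof (shifting the exponent by kd and reducing modulo $(q-1)/d$) can also be sanity-checked by brute force. The sketch below is limited to prime fields and uses a naive primitive-root search. It verifies the uniformity property for every divisor d of $p - 1$ and every prime p below 60. This is only a numerical check, not a substitute for the proof.

```python
from collections import Counter

def is_prime(p):
    return p >= 2 and all(p % k for k in range(2, p))

def primitive_root(p):
    """Smallest generator of F_p^* by brute force (1 for p = 2)."""
    for g in range(2, p):
        if len({pow(g, k, p) for k in range(p - 1)}) == p - 1:
            return g
    return 1

def theorem2_holds(p):
    g = primitive_root(p)
    for d in [d for d in range(1, p) if (p - 1) % d == 0]:
        b = (p - 1) // d
        sets = [{0}] + [{pow(g, i + l * d, p) for l in range(b)} for i in range(d)]
        gammas = [pow(g, k * d, p) for k in range(b)]
        for S in sets:
            for s in S:
                counts = Counter(gm * s % p for gm in gammas)
                # gamma * s must hit every element of S equally often
                if set(counts) != S or len(set(counts.values())) != 1:
                    return False
    return True

print(all(theorem2_holds(p) for p in range(2, 60) if is_prime(p)))  # True
```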

3.2. Ring of Integers Modulo n

To facilitate the presentation of the algebraic characterization of the confusable sets and the randomizer over $\mathbb{Z}_n$, we first introduce some definitions and preliminary results.

Definition 3 (Set of Integers with Same gcd). Consider any proper divisor d of a given integer n, i.e., $d < n$ and $n/d$ is an integer. We denote by $\mathcal{A}_d$ the set of integers in $\mathbb{Z}_n$ whose greatest common divisor with n is d, i.e., $\mathcal{A}_d = \{x \in \mathbb{Z}_n : \gcd(x, n) = d\}$.

For example, suppose $n = 15$, which has proper divisors 1, 3, 5. Then,
$$\mathcal{A}_1 = \{1, 2, 4, 7, 8, 11, 13, 14\}, \qquad \mathcal{A}_3 = \{3, 6, 9, 12\}, \qquad \mathcal{A}_5 = \{5, 10\}.$$
The set $\mathcal{A}_1$ has been extensively studied in abstract algebra (see, e.g., [23]) and number theory (see, e.g., [27]). It is referred to as the multiplicative group of integers modulo n (it forms a group under multiplication modulo n), so we adopt the standard notation $\mathbb{Z}_n^*$ for it.
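The sets in Definition 3 can be computed directly from gcds. The following sketch reproduces the n = 15 example; the helper names are our own. It also checks that the set of integers with gcd d equals d times the unit group of $\mathbb{Z}_{n/d}$, i.e., $\{d y : y \in \mathbb{Z}_{n/d}^*\}$. The later partition of each gcd class into cosets relies on this relation.

```python
from math import gcd

def gcd_class(n, d):
    """Integers in Z_n whose gcd with n equals d (d a proper divisor of n)."""
    return {x for x in range(n) if gcd(x, n) == d}

def units(m):
    """Z_m^*: residues in {1, ..., m-1} coprime with m (m >= 2)."""
    return {y for y in range(1, m) if gcd(y, m) == 1}

n = 15
for d in [1, 3, 5]:                                  # the proper divisors of 15
    cls = gcd_class(n, d)
    assert cls == {d * y for y in units(n // d)}     # class = d * Z_{n/d}^*
    print(d, sorted(cls))
# 1 [1, 2, 4, 7, 8, 11, 13, 14]
# 3 [3, 6, 9, 12]
# 5 [5, 10]
```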

We present an important result on the projection of a multiplicative subgroup of $\mathbb{Z}_n^*$ onto $\mathbb{Z}_d$ in the following lemma. To differentiate a set from a multiset (where an element might appear several times), we use the notation $\{\{H\}\}$ for a multiset H.

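Lemma 1. Consider an arbitrary subgroup $G_{\mathbb{Z}_n^*}$ of $\mathbb{Z}_n^*$ (under multiplication modulo n). Take its elements modulo d, where d is a divisor of n and $d > 1$. We obtain multiple copies of a subgroup of $\mathbb{Z}_d^*$ (under multiplication modulo d): $\{\{G_{\mathbb{Z}_n^*} \bmod d\}\}$ consists of $|G_{\mathbb{Z}_n^*}| / |G_{\mathbb{Z}_d^*}|$ copies of $G_{\mathbb{Z}_d^*}$, where $G_{\mathbb{Z}_d^*}$ is a subgroup of $\mathbb{Z}_d^*$.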

In Lemma 1, G denotes a subgroup and the subscript specifies the original group. The proof of Lemma 1 is presented in Section 3.4. Here, for illustration, we give an example.

Example 3. Consider a subgroup of . We have so that , which is a subgroup of (in fact, equal to) . , which is two copies of , and is a (trivial) subgroup of .

Consider another subgroup of . , which is two copies of . , which is two copies of and is a subgroup of .

Consider . , which is 4 copies of . , which is 2 copies of .
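Lemma 1 can be spot-checked numerically. The sketch below generates subgroups of $\mathbb{Z}_n^*$ from one or two units, so it covers only cyclic and two-generator subgroups, not necessarily every subgroup. It reduces each subgroup modulo every divisor $d > 1$ of n. It then checks that the resulting multiset is a subgroup of $\mathbb{Z}_d^*$ in which every element appears equally often. All function names are hypothetical.

```python
from collections import Counter
from itertools import combinations
from math import gcd

def units(m):
    """Z_m^* as a set (m >= 2)."""
    return {y for y in range(1, m) if gcd(y, m) == 1}

def generated_subgroup(gens, n):
    """Closure of {1} under multiplication by `gens` mod n; finite, hence a subgroup of Z_n^*."""
    group, frontier = {1}, {1}
    while frontier:
        frontier = {(h * g) % n for h in frontier for g in gens} - group
        group |= frontier
    return group

def check_lemma1(n):
    us = sorted(units(n))
    divisors = [d for d in range(2, n + 1) if n % d == 0]
    for gens in [(u,) for u in us] + list(combinations(us, 2)):
        G = generated_subgroup(gens, n)
        for d in divisors:
            counts = Counter(g % d for g in G)        # the multiset {{G mod d}}
            H = set(counts)
            is_subgroup = H <= units(d) and all((a * b) % d in H for a in H for b in H)
            equal_copies = len(set(counts.values())) == 1
            if not (is_subgroup and equal_copies):
                return False
    return True

print(all(check_lemma1(n) for n in range(2, 40)))  # True
```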

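Given a subgroup $G_{\mathbb{Z}_m^*}$ of the group $\mathbb{Z}_m^*$, we may partition $\mathbb{Z}_m^*$ into cosets of $G_{\mathbb{Z}_m^*}$ (see, e.g., Proposition 4 in Chapter 3 of [23] or Theorem 6.2 of [28]). Set $m = n/d$, and let $G_{\mathbb{Z}_{n/d}^*}$ be the subgroup obtained from $G_{\mathbb{Z}_n^*}$ via Lemma 1. Then $\mathbb{Z}_{n/d}^*$ may be partitioned into the cosets $y\, G_{\mathbb{Z}_{n/d}^*}$, $y \in \mathbb{Z}_{n/d}^*$. Combining this with (21), i.e., $\mathcal{A}_d = \{d y : y \in \mathbb{Z}_{n/d}^*\}$, we may partition $\mathcal{A}_d$ into the sets $d\,(y\, G_{\mathbb{Z}_{n/d}^*})$. This partition is denoted by $\mathcal{P}_d$.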
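To illustrate the coset partition, the following sketch works with n = 15 and the subgroup $G = \{1, 4, 11, 14\}$ of $\mathbb{Z}_{15}^*$. This example is our own choice; the set is closed under multiplication modulo 15. For each gcd class, the sketch computes the partition into scaled cosets in three steps:

1. reduce G modulo n/d;
2. split $\mathbb{Z}_{n/d}^*$ into cosets of the reduced subgroup;
3. multiply every coset by d.

```python
from math import gcd

def units(m):
    """Z_m^* (m >= 2)."""
    return {y for y in range(1, m) if gcd(y, m) == 1}

def coset_partition(H, m):
    """Split Z_m^* into the cosets y*H (mod m) of a subgroup H of Z_m^*."""
    remaining, parts = units(m), []
    while remaining:
        y = min(remaining)
        coset = {(y * h) % m for h in H}
        parts.append(coset)
        remaining -= coset
    return parts

def partition_P(n, d, G):
    """Reduce G mod n/d, split Z_{n/d}^* into cosets, then scale every coset by d."""
    m = n // d
    H = {g % m for g in G}
    return [sorted(d * y for y in c) for c in coset_partition(H, m)]

n, G = 15, {1, 4, 11, 14}   # example subgroup of Z_15^* (our choice)
for d in [1, 3, 5]:
    print(d, partition_P(n, d, G))
# 1 [[1, 4, 11, 14], [2, 7, 8, 13]]
# 3 [[3, 12], [6, 9]]
# 5 [[5, 10]]
```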

Example 4. Continuing from Example 3, consider a subgroup of . Then,where the partition is obtained from the cosets, e.g., is a coset of with representative or . Similarly, when , from Example 3 we have and the partitions are as follows: For another choice of (again from Example 3), consider of . Then, from Example 3, . The partitions are For the final choice of from Example 3, we have and the partitions are trivial- , , and .

The collection of the cosets for all proper divisors d is a feasible choice of the confusable sets. This result is stated in the following theorem.

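Theorem 3. For $\mathbb{Z}_n$, the confusable sets and the randomizer can be chosen as follows. Consider the set $\mathcal{D}$ of all proper divisors of n, and an arbitrary subgroup $G_{\mathbb{Z}_n^*}$ of $\mathbb{Z}_n^*$. The confusable sets are $\{0\}$ together with all sets in the partitions $\mathcal{P}_d$, $d \in \mathcal{D}$. Here $\mathcal{P}_d$ is the partition of $\mathcal{A}_d$ into the sets $d\,(y\, G_{\mathbb{Z}_{n/d}^*})$ described above. The randomizer $\gamma$ is uniform over $G_{\mathbb{Z}_n^*}$.

Before presenting the proof of Theorem 3, we first give an example to illustrate its meaning.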

Example 5. Continuing from Example 4, consider a subgroup of . Then, from Theorem 3, the confusable sets areFor each of the confusable set above, it is easy to verify that when an element is multiplied with γ (uniform over ), the result is uniform over the confusable set.For another example, consider . The confusable sets areLet us also verify that the uniform property holds. γ is over . For example, consider , then we have . Consider , then we have , which is 2 copies of . Finally, consider . The confusable sets are . For any element in , say 6, we have .

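Proof of Theorem 3. The proof relies on Lemma 1 and the properties of cosets.

First, the confusable sets form a partition of $\mathbb{Z}_n$. Every nonzero $x \in \mathbb{Z}_n$ belongs to exactly one set $\mathcal{A}_d$, namely the one with $d = \gcd(x, n)$, and $\mathcal{P}_d$ is a partition of $\mathcal{A}_d$.

Second, we verify the uniform property, i.e., for every confusable set $\mathcal{S}$ and every $s \in \mathcal{S}$, $\gamma s$ is uniform over $\mathcal{S}$. For $\mathcal{S} = \{0\}$, this holds trivially, as $\gamma \cdot 0 = 0$. Consider any other $\mathcal{S}$, e.g., a set from $\mathcal{P}_d$. From the construction of $\mathcal{P}_d$, we have $\mathcal{S} = \{d c : c \in \mathcal{C}\}$, where $\mathcal{C}$ is a coset of $G_{\mathbb{Z}_{n/d}^*}$ in $\mathbb{Z}_{n/d}^*$. Writing $s = d y$ with $y \in \mathcal{C}$, by the definition of cosets we have
$$\mathcal{C} = \{ y h \bmod (n/d) : h \in G_{\mathbb{Z}_{n/d}^*} \}.$$
Next, consider
$$\gamma s \bmod n = d\, \big(\gamma y \bmod (n/d)\big) = d\, \Big( \big(\gamma \bmod (n/d)\big)\, y \bmod (n/d) \Big).$$
By Lemma 1, when $\gamma$ is uniform over $G_{\mathbb{Z}_n^*}$, $\gamma \bmod (n/d)$ is uniform over $G_{\mathbb{Z}_{n/d}^*}$, since each element appears the same number of times. Hence $\gamma y \bmod (n/d)$ is uniform over $\mathcal{C}$, and therefore $\gamma s$ is uniform over $\mathcal{S}$. The proof is complete. □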
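Putting the pieces together, the sketch below builds the confusable sets described in Theorem 3: the set $\{0\}$ plus the scaled cosets for every proper divisor d. It then checks the partition and uniformity conditions of Definition 1 with $\gamma$ uniform over G. It is limited to cyclic subgroups G and $n \le 40$, and the helper names are our own.

```python
from collections import Counter
from math import gcd

def units(m):
    """Z_m^* as a set (m >= 2)."""
    return {y for y in range(1, m) if gcd(y, m) == 1}

def cyclic_subgroup(u, n):
    """Subgroup of Z_n^* generated by the unit u."""
    group, x = {1}, u % n
    while x not in group:
        group.add(x)
        x = (x * u) % n
    return group

def theorem3_sets(n, G):
    """{0} plus, for every proper divisor d of n, the sets d*(y*H) with H = G mod (n/d)."""
    sets = [{0}]
    for d in [d for d in range(1, n) if n % d == 0]:
        m = n // d
        H = {g % m for g in G}                # a subgroup of Z_m^* by Lemma 1
        remaining = units(m)
        while remaining:
            y = min(remaining)
            coset = {(y * h) % m for h in H}  # the coset y*H inside Z_m^*
            remaining -= coset
            sets.append({d * c for c in coset})
    return sets

def satisfies_definition1(sets, G, n):
    if sorted(x for S in sets for x in S) != list(range(n)):
        return False                          # not a partition of Z_n
    for S in sets:
        for s in S:
            counts = Counter((g * s) % n for g in G)
            if set(counts) != S or len(set(counts.values())) != 1:
                return False                  # gamma*s is not uniform over S
    return True

print(all(satisfies_definition1(theorem3_sets(n, cyclic_subgroup(u, n)),
                                cyclic_subgroup(u, n), n)
          for n in range(2, 41) for u in units(n)))  # True
```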

Remark 2. From Theorem 3, we see that any subgroup of $\mathbb{Z}_n^*$ induces a feasible choice of the confusable sets and the randomizer. We list all possible confusable sets for a range of small n in Figure A2 (see Appendix B). We also include in Appendix C some discussion on the structures of the subgroups of $\mathbb{Z}_n^*$, based on existing group theory and number theory results.

3.3. Converse

One of the challenges in understanding the optimality of secure computation codes is the lack of converse results. In information theory, converse results are statements of impossibility and are used to prove optimality. As a starting point, we compare our achievable scheme with existing converse results with no security constraint (i.e., for the pure computation problem). Interestingly, when the size of the underlying field or ring is the same as the input size, the scheme in Theorem 1 achieves the information theoretically optimal rate region. Without loss of generality, for secure computation problems, we assume there are no identical rows or columns in the function table, as Carol cannot learn anything about the exact row or column index of such identical rows and columns.

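Proposition 1. Consider independent and uniform inputs, i.e., $X_1$ and $X_2$ are independent and uniform over $\mathcal{X}_1$ and $\mathcal{X}_2$, respectively. For a function $f(X_1, X_2)$, suppose a feasible expanded function exists over $\mathbb{F}_q$ or $\mathbb{Z}_n$, where $q = |\mathcal{X}_1| = |\mathcal{X}_2|$ or $n = |\mathcal{X}_1| = |\mathcal{X}_2|$. Then the scheme in Theorem 1 is information theoretically optimal.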

Achievability directly follows from Theorem 1. The converse follows from a simple observation: even when there is no security constraint, Alice (Bob) needs to tell Carol the exact value of $X_1$ ($X_2$). Otherwise, two distinct values of $X_1$ would be mapped to the same codeword $C_1$ (for some realization of Z). Since the function table of f has no identical rows or columns, some value of $f(X_1, X_2)$ could then not be decoded correctly. This result, and more general ones, has been proved in several different contexts in the literature. Examples include the classical work on function computation of correlated sources by Han and Kobayashi [29] (Lemma 1) and its recent generalization [30], the work on computation over multiple access channels [31] (Lemma 1), and the work on network coding for computing [32,33]. Note that the converse holds for block inputs as well, where the rate is defined as the number of bits in the codeword per input symbol. Imposing the security constraint cannot reduce the required rates, so the same converse holds for the secure computation problem as well.

Note that Proposition 1 characterizes the optimal rate region for a class of secure computation problems, which contains infinitely many instances. One could start from the confusable sets of $\mathbb{F}_q$ or $\mathbb{Z}_n$ and invert them into a function with input size q or n. Functions constructed by this method satisfy the conditions of Proposition 1, and thus we obtain their optimal rate region.
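Following the remark that one can invert confusable sets into a function with input size n, the sketch below is a hypothetical construction for illustration. It starts from the gcd classes of $\mathbb{Z}_n$. These are the confusable sets of Theorem 3 when $G = \mathbb{Z}_n^*$, because reducing $\mathbb{Z}_n^*$ modulo n/d gives all of $\mathbb{Z}_{n/d}^*$. The sketch defines $f(x_1, x_2)$ as the gcd class of $x_1 + x_2 \bmod n$ and checks that the function table has no identical rows or columns. Theorem 1 then sends $\log_2 n$ bits per user, which matches the converse.

```python
from math import gcd, log2

def function_table(n):
    """Hypothetical f(x1, x2) = gcd((x1 + x2) mod n, n): the gcd class of x1 + x2,
    using the trivial input transformation X1' = x1, X2' = x2."""
    return [[gcd((x1 + x2) % n, n) for x2 in range(n)] for x1 in range(n)]

n = 6
table = function_table(n)
distinct_rows = len({tuple(row) for row in table}) == n
distinct_cols = len({tuple(col) for col in zip(*table)}) == n
print(distinct_rows and distinct_cols)  # True: no identical rows or columns
print(log2(n))                          # bits per codeword under Theorem 1 (about 2.585)
```

The rows are distinct because the value n (the class of 0) appears in row $x_1$ only at column $x_2 = -x_1 \bmod n$. The columns are distinct by the same argument.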

To the best of our knowledge, the only existing information theoretic converse results for the secure computation problem are those obtained in [6]. Their expression involves common information terms and an optimization over a class of distributions. As a result, the exact bound needs to be evaluated for each individual instance and is generally not trivial to compute. Interestingly, for some small instances, we find that our achievable scheme is information theoretically optimal (see Remark 3 of Example 6 and Remark 4 of Example 7). For most cases, however, there is a gap in the rate region between the achievable scheme in Theorem 1 and the converse results from [6]. It is not clear whether, and by how much, the scheme and the converse can be improved. The model considered in [6] is the general secure computation problem, which allows interactive multi-round protocols. Therefore, the converse results therein might generally not be tight for the minimal secure computation problem. We note that there are instances where we know better schemes than that in Theorem 1 (see Examples 9 and 10 in the discussion section).

3.4. Proof of Lemma 1

The proof of Lemma 1 consists of two parts.

First, we show that the set of elements of $\{\{G_{\mathbb{Z}_n^*} \bmod d\}\}$, denoted $G_{\mathbb{Z}_d^*}$, forms a subgroup of $\mathbb{Z}_d^*$. This is proved by two claims: (1) $G_{\mathbb{Z}_d^*} \subseteq \mathbb{Z}_d^*$, and (2) $G_{\mathbb{Z}_d^*}$ is closed under multiplication modulo d. Note that, for a nonempty subset of a finite group, verifying that it is a subgroup only requires checking the closure property. Associativity and the existence of the identity and inverse elements are then automatically guaranteed (refer to Proposition 1 in Chapter 2 of [23]).

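For (1), note that any element g of $G_{\mathbb{Z}_n^*}$ belongs to $\mathbb{Z}_n^*$, so $\gcd(g, n) = 1$. As d is a divisor of n, we have $\gcd(g, d) = 1$ and thus $\gcd(g \bmod d, d) = 1$. Therefore, the element $g \bmod d$ of $G_{\mathbb{Z}_d^*}$ belongs to $\mathbb{Z}_d^*$, and $G_{\mathbb{Z}_d^*} \subseteq \mathbb{Z}_d^*$.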

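For (2), consider any two elements of $G_{\mathbb{Z}_d^*}$, e.g., $a \bmod d$ and $b \bmod d$, where $a, b \in G_{\mathbb{Z}_n^*}$. As $G_{\mathbb{Z}_n^*}$ forms a group, we have $ab \bmod n = c$ for some $c \in G_{\mathbb{Z}_n^*}$, i.e., $ab = c + kn$ for some integer k. Then,
$$(a \bmod d)(b \bmod d) \bmod d = ab \bmod d = (c + kn) \bmod d = c \bmod d \in G_{\mathbb{Z}_d^*},$$
where the third equality uses the fact that d divides n. Therefore, $G_{\mathbb{Z}_d^*}$ is closed under multiplication modulo d.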

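Second, we show that in the multiset $\{\{G_{\mathbb{Z}_n^*} \bmod d\}\}$, each element of $G_{\mathbb{Z}_d^*}$ appears the same number of times. Denote $G_{\mathbb{Z}_d^*} = \{h_1, h_2, \ldots, h_K\}$. As $G_{\mathbb{Z}_n^*}$ is a subgroup of $\mathbb{Z}_n^*$, we have
$$\{a g \bmod n : g \in G_{\mathbb{Z}_n^*}\} = G_{\mathbb{Z}_n^*} \quad \text{for all } a \in G_{\mathbb{Z}_n^*}. \tag{43}$$
In the multiset $\{\{G_{\mathbb{Z}_n^*} \bmod d\}\}$, let $h_i$ appear $m_i$ times, $i = 1, \ldots, K$, where $\sum_{i=1}^{K} m_i = |G_{\mathbb{Z}_n^*}|$. Assume without loss of generality that $h_1 = 1$. We need to show that $m_1 = m_2 = \cdots = m_K$. This proof is presented next.

From the first part of the proof, we know that $G_{\mathbb{Z}_d^*}$ is a subgroup of $\mathbb{Z}_d^*$. Applying the analog of (43) to the subgroup $G_{\mathbb{Z}_d^*}$ and its element $h_i$, we have
$$\{h_i h \bmod d : h \in G_{\mathbb{Z}_d^*}\} = G_{\mathbb{Z}_d^*}. \tag{44}$$
Further, the element $h_1 = 1$ appears on the right-hand side of (44), so $h_i h_j \equiv 1 \pmod d$ for some $h_j \in G_{\mathbb{Z}_d^*}$. Note that multiplication mod d is commutative. Then, $h_j h_i \equiv 1 \pmod d$ as well, and $h_i$ is the unique inverse of $h_j$ modulo d. As $h_j \in G_{\mathbb{Z}_d^*}$, there exists $a \in G_{\mathbb{Z}_n^*}$ such that $a \bmod d = h_j$.

On the one hand, by (43), multiplying every element of $G_{\mathbb{Z}_n^*}$ by a (modulo n) only permutes $G_{\mathbb{Z}_n^*}$. Hence
$$\{\{(a g \bmod n) \bmod d : g \in G_{\mathbb{Z}_n^*}\}\} = \{\{G_{\mathbb{Z}_n^*} \bmod d\}\}, \text{ in which } 1 \text{ appears } m_1 \text{ times.} \tag{48}$$
On the other hand, since d divides n, we have $(a g \bmod n) \bmod d = h_j\, (g \bmod d) \bmod d$. This equals 1 if and only if $g \bmod d = h_i$. Hence
$$1 \text{ appears } m_i \text{ times in } \{\{(a g \bmod n) \bmod d : g \in G_{\mathbb{Z}_n^*}\}\}. \tag{52}$$
Comparing (48) and (52) (i.e., the number of times that 1 appears), we have proved that $m_1 = m_i$ for every i. This completes the proof of the second part, and thus of the lemma.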