Attention Mechanisms and Their Applications to Complex Systems

Abstract

1. Introduction

2. Traditional Deep Learning and the Need for Attention

1. Classic feedforward neural networks with time-delayed states in the inputs, possibly at the cost of an unnecessary increase in the number of parameters.

2.

1. Classic models only perform perception, representing a mapping between inputs and outputs.
2. Classic models follow a hybrid scheme in which synaptic weights perform both processing and memory tasks, with no explicit external memory.
3. Classic models do not carry out sequential reasoning. This essential process builds on perception and memory through attention, and guides the steps of the machine learning model in a conscious and interpretable way.

3. Attention Mechanisms

3.1. Differentiable Attention

1. Temporal dimensions, e.g., different time steps of a sequence.
2. Spatial dimensions, e.g., different regions of an image.
3. Different elements of a memory.
4. Different features or dimensions of an input vector, etc.

1. Top-down attention, initiated by the current task.
2. Bottom-up attention, initiated spontaneously by the source or memory.

1. Through conventional attention (the query is different from the key and the value) in Section 4.2, with the encoder selecting input features and the decoder selecting time steps.
2. Through a memory network, in which a memory of historical data guides the current prediction task, in Section 4.3.
3. Through self-attention (the keys, values and queries come from the same source) in Section 4.4. Here, to encode a vector of the input sequence, self-attention allows the model to focus directly on other vectors in the sequence.
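As a rough illustration of the differentiable (soft) attention discussed in this section, the sketch below computes a weighted average of values, with weights given by a softmax over query-key dot products. The dot-product scoring, the function names (`soft_attention`, `softmax`, `dot`) and the toy numbers are assumptions made for the example, not taken from the article.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def soft_attention(query, keys, values):
    """Return (weights, context).

    weights: softmax of the query-key dot products (assumed scoring function).
    context: weighted average of the value vectors.
    """
    weights = softmax([dot(query, k) for k in keys])
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Top-down setting: the task supplies the query, the source supplies keys and values.
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]   # e.g., three time steps or regions
values = [[10.0], [20.0], [30.0]]
weights, context = soft_attention(query, keys, values)
```

Because every operation is differentiable, the weights can be trained end to end with the rest of a model; the key most aligned with the query (the first one here) receives the largest weight.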

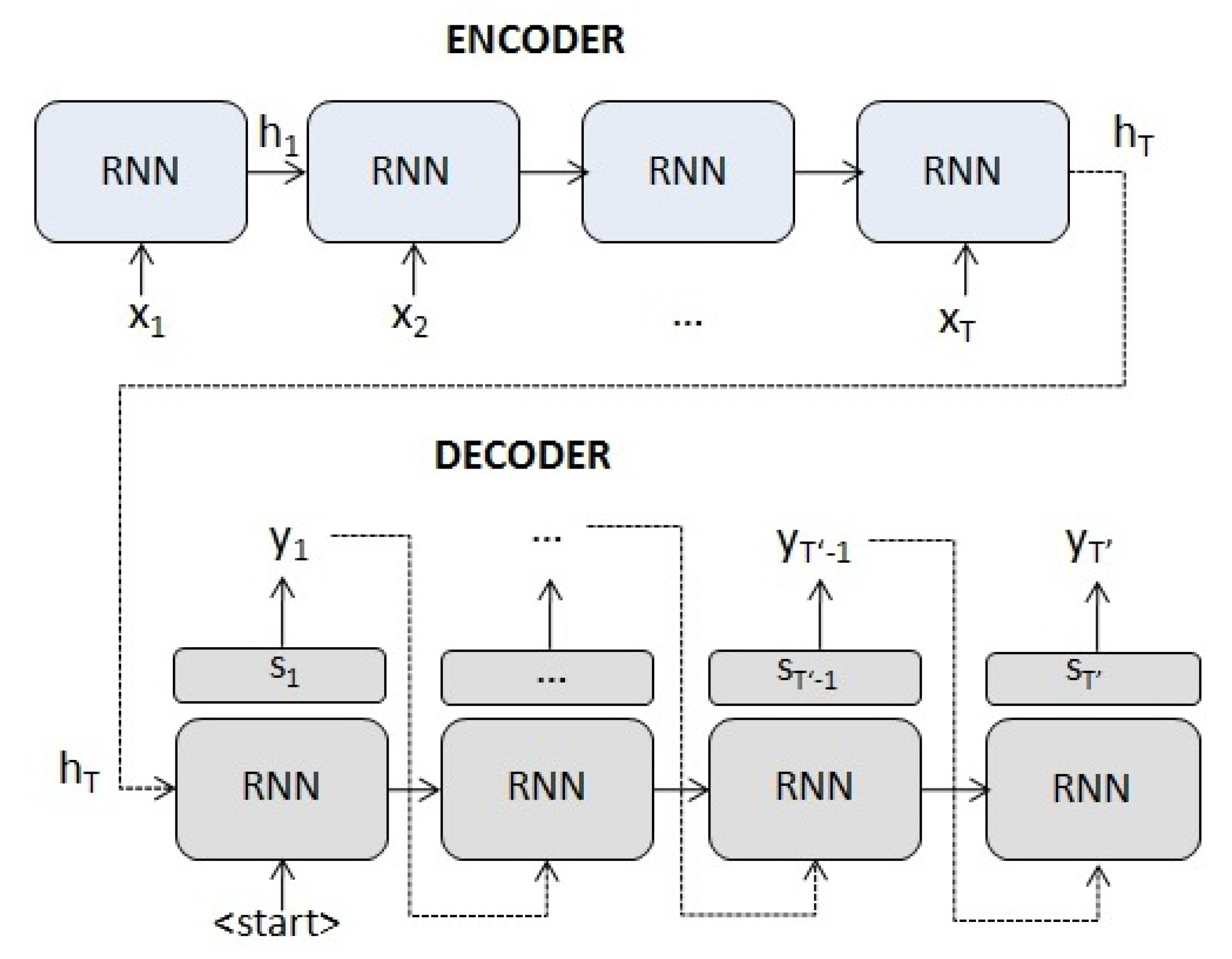

3.2. Attention in seq2seq Models

1. An encoder which, given an input sequence $X = (x_1, x_2, \ldots, x_T)$ with $x_t \in \mathbb{R}^n$, maps $x_t$ to $h_t = f_1(h_{t-1}, x_t)$, where $h_t \in \mathbb{R}^m$ is the hidden state of the encoder at time $t$, $m$ is the size of the hidden state and $f_1$ is an RNN (or any of its variants).
2. A decoder, where $s_t$ is the hidden state and whose initial state $s_0$ is initialized with the last hidden state of the encoder, $h_T$. It generates the output sequence $Y = (y_1, y_2, \ldots, y_{T'})$, $y_t \in \mathbb{R}^o$ (the dimension $o$ depending on the task), where $y_t = f_2(s_{t-1}, y_{t-1})$ and $f_2$ is an RNN (or any of its variants) with an additional layer depending on the task (e.g., a linear layer for series prediction or a softmax layer for translation).
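The encoder-decoder structure described above can be sketched in plain Python. This is only a minimal illustration under stated assumptions: a simple Elman-style `tanh` cell stands in for the RNN, the output layer is linear, the decoder starts from a zero vector as its first input, and all weights and function names (`rnn_step`, `encode`, `decode`) are hypothetical.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def rnn_step(w_in, w_rec, state, inp):
    """One Elman-style update: tanh(w_in @ inp + w_rec @ state)."""
    a = matvec(w_in, inp)
    b = matvec(w_rec, state)
    return [math.tanh(x + y) for x, y in zip(a, b)]


def encode(inputs, w_in, w_rec, hidden_size):
    """Run the encoder over the input sequence and return every hidden state."""
    state = [0.0] * hidden_size
    states = []
    for x in inputs:
        state = rnn_step(w_in, w_rec, state, x)
        states.append(state)
    return states


def decode(initial_state, w_in, w_rec, w_out, steps, out_size):
    """Generate `steps` outputs, feeding each output back as the next input."""
    state = initial_state
    prev = [0.0] * out_size            # assumed start input
    outputs = []
    for _ in range(steps):
        state = rnn_step(w_in, w_rec, state, prev)
        prev = matvec(w_out, state)    # linear layer, as for series prediction
        outputs.append(prev)
    return outputs


# Toy sizes: n = 2 input features, m = 2 hidden units, o = 1 output.
enc_w_in, enc_w_rec = [[0.5, -0.2], [0.1, 0.3]], [[0.1, 0.0], [0.0, 0.1]]
dec_w_in, dec_w_rec = [[0.4], [-0.3]], [[0.2, 0.1], [0.0, 0.2]]
dec_w_out = [[1.0, -1.0]]

inputs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = encode(inputs, enc_w_in, enc_w_rec, hidden_size=2)
# The decoder is initialized with the last encoder hidden state.
outputs = decode(hidden[-1], dec_w_in, dec_w_rec, dec_w_out, steps=2, out_size=1)
```

Note that the whole input sequence reaches the decoder only through `hidden[-1]`; this single-vector bottleneck is what attention over all encoder states is meant to relax.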

3.3. Self-Attention and Memory Networks

1. For each of the input vectors $x_t$, create a query $q_t$, a key $k_t$ and a value vector $v_t$ by multiplying the input vector by three matrices that are trained during the learning process, $W^Q$, $W^K$ and $W^V$.
2. For each query vector $q_t$, the self-attention value is computed by mapping the query and all the key-value pairs to an output, $a_t = \text{Attention}(q_t, K, V)$, where $\text{Attention}(q_t, K, V) = \text{softmax}\left(\frac{q_t K^\top}{\sqrt{d_k}}\right) V$, with $K$ and $V$ stacking the keys and values as rows and $d_k$ the dimension of the keys.
3. This self-attention process is performed $h$ times in what is called multi-headed attention. Each time, the input vectors are projected into a different query, key and value vector using different matrices $W_i^Q$, $W_i^K$ and $W_i^V$ for $i = 1, \ldots, h$. On each of these projected queries, keys and values, the attention function is performed in parallel, producing $d_v$-dimensional output values that are concatenated and once again projected to the final values. This multi-headed attention process allows the model to focus on different positions from different representation subspaces.
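The three steps above can be sketched as follows. This is a minimal pure-Python illustration assuming scaled dot-product attention as in Vaswani et al.; the helper names (`matmul`, `self_attention`, `multi_head`), the output projection `w_o` and the toy weights are assumptions for the example.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single head. x is T x d; w_q and w_k are d x d_k; w_v is d x d_v."""
    # Step 1: queries, keys and values all come from the same input x.
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    # Step 2: scaled dot-product scores (T x T), softmax per query row.
    scores = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)          # T x d_v


def multi_head(x, heads, w_o):
    """Step 3: run each head, concatenate per position, project with w_o."""
    per_head = [self_attention(x, *head) for head in heads]
    concat = [sum((out[t] for out in per_head), []) for t in range(len(x))]
    return matmul(concat, w_o)


# Toy example: T = 3 vectors of dimension d = 2, h = 2 heads with d_k = d_v = 1.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
head_1 = ([[1.0], [0.0]], [[1.0], [0.0]], [[1.0], [0.0]])   # (w_q, w_k, w_v)
head_2 = ([[0.0], [1.0]], [[0.0], [1.0]], [[0.0], [1.0]])
w_o = [[1.0, 0.0], [0.0, 1.0]]                               # identity projection
out = multi_head(x, [head_1, head_2], w_o)
```

Each row of `out` mixes information from every position in the sequence, and each head does so through its own projection, which is what lets different heads attend to different representation subspaces.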

1. Encoder: Composed of a stack of six identical layers, each layer with a multi-head self-attention process and a position-wise fully connected feed-forward network. Around each of the sub-layers, a residual connection followed by layer normalization is employed.
2. Decoder: Also composed of a stack of six identical layers (with self-attention and a feed-forward network), with an additional third sub-layer that performs attention over the output of the encoder (as in seq2seq with attention). The self-attention sub-layer is modified to prevent a vector from attending to subsequent vectors in the sequence.
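The decoder's restriction that a vector must not attend to subsequent vectors can be illustrated with a small sketch. Here the mask is applied by taking the softmax only over the visible prefix of each score row; many implementations instead add a large negative value to the hidden scores before the softmax, which gives the same weights. The function name `causal_attention_weights` and the score values are hypothetical.

```python
import math


def causal_attention_weights(scores):
    """Row-wise softmax over a T x T score matrix, ignoring positions j > i.

    Row i holds the scores of query i against every key; only keys 0..i are
    visible, and later positions receive weight exactly 0.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]
        peak = max(visible)
        exps = [math.exp(s - peak) for s in visible]
        total = sum(exps)
        hidden_count = len(row) - i - 1
        weights.append([e / total for e in exps] + [0.0] * hidden_count)
    return weights


# Large scores on future positions have no effect once they are masked out.
scores = [
    [0.0, 5.0, 5.0],
    [1.0, 1.0, 9.0],
    [0.0, 0.0, 0.0],
]
weights = causal_attention_weights(scores)
```

The first position can only attend to itself, the second splits its weight over the first two positions, and only the last position sees the whole sequence.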

4. Attention Mechanisms in Complex Systems

4.1. Where and How to Apply Attention

1. In which part of the model should attention be introduced?
2. What elements of the model will the attention mechanism relate?
3. What dimension (temporal, spatial, input dimension, etc.) is the mechanism going to focus on?
4. Will self-attention or conventional attention be used?
5. What elements will correspond to the query, the key and the value?

4.2. Attention in Different Phases of a Model

4.3. Memory Networks

4.4. Self-Attention

5. Discussion

1. By focusing on a subset of elements, it guides the reasoning or cognitive process.
2. These elements can be tensors (vectors) from the input layer, from an intermediate layer or external to the model, e.g., an external memory.
3. It can focus on temporal dimensions (different time steps of a sequence), spatial dimensions (different regions of space) or different features of an input vector.
4. It can relate each of the input vectors in a more direct and symmetric way to form the output vector.

1. One-stage conventional attention. The attention mechanism can guide any complex-system task such as modeling, prediction or identification. To do this, it focuses on a set of elements from the input layer or from an intermediate layer. These elements can be temporal, spatial or feature dimensions. For example, to model a dynamical system with an input of dimension $n$, one can add an attention mechanism to focus on and integrate the different input dimensions. The attention mechanism is combined, as we have seen, with an RNN or an LSTM and allows modeling long temporal dependencies. This technique, like the rest, adds complexity to the model: to calculate the attention weights between a task (query) of $T$ elements and an attended region (key, value) of $T$ elements, it is necessary to perform $T^2$ multiplications.

2. Several-stage conventional attention. This case is similar to one-stage conventional attention but with several attention phases or stages. The attention mechanism is also combined with an RNN or an LSTM and allows modeling long temporal dependencies. As we have seen, the model can focus on a set of feature elements from the input layer and on a set of time steps from an intermediate layer. This enables multi-step reasoning. The downside is the added computational cost, with $T^2$ multiplications for each attention stage.

3. Memory networks. In memory networks, any complex-system task such as modeling, prediction or identification is guided by an external memory. Memory networks thus allow long-term or external dependencies in sequential data to be learned thanks to an external memory component. Instead of taking into account only the most recent states, these networks also consider the states of a memory or external data. Such is the case of time series prediction that also relies on an external source that can influence the series. To calculate the attention weights between a task (query) of $T$ elements and an attended memory of $T_{mem}$ elements, it is necessary to perform $T \cdot T_{mem}$ multiplications.

4. Self-attention. In self-attention, the component relates different positions of a single sequence in order to compute a transformation of the sequence. The keys, values and queries come from the same source. It is a generalization of neural networks, since it performs a direct transformation of the input but with dynamically calculated weights. The attention module relates each of the inputs in a more direct way to form the output vector, at the cost of not prioritizing local interactions. Its use case is general, since it can replace neural networks, RNNs or even CNNs. To calculate the attention weights for a sequence of $T$ elements, it is necessary to perform $T^2$ multiplications.

5. Combination of the above techniques. It is interesting to combine several of the previous techniques, but at the cost of increasing the complexity and adding up the computational cost of each of the components. A notable example is the transformer, which can be used in a multitude of tasks such as sequence modeling, generative models, prediction, machine translation and multi-tasking. The transformer combines self-attention with conventional attention. In the encoder, the transformer has a stack of self-attention blocks. The decoder also has self-attention blocks and an additional layer to perform attention over the output of the encoder.
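To make the cost comparison above concrete, the short sketch below counts attention scores, assuming one score per query-key pair per stage (the dot product inside each score, which scales with the vector dimension, is not counted). The function name `attention_scores`, the sequence length and the memory size are illustrative assumptions.

```python
def attention_scores(num_queries, num_keys, stages=1):
    """Number of query-key scores: one per (query, key) pair per attention stage."""
    return stages * num_queries * num_keys


T = 100            # sequence length (illustrative)
memory_size = 500  # number of attended memory elements (illustrative)

one_stage = attention_scores(T, T)             # query and attended region of T elements
two_stage = attention_scores(T, T, stages=2)   # e.g., input attention plus temporal attention
memory = attention_scores(T, memory_size)      # query attends over an external memory
self_attn = attention_scores(T, T)             # every position attends to every position

print(one_stage, two_stage, memory, self_attn)
```

For a combined architecture, the counts of the individual components simply add up, which is the "sum of the costs" referred to for combined techniques.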

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DNC | Differentiable Neural Computers |
| CNN | Convolutional neural network |
| EEG | Electroencephalogram |
| FNN | Feed-forward neural network |
| GPT-3 | Generative Pre-trained Transformer 3 |
| GPU | Graphics Processing Units |
| LSTM | Long short-term memory |
| MDPI | Multidisciplinary Digital Publishing Institute |
| NARX | Nonlinear Autoregressive model with exogenous input |
| ODE | Ordinary Differential Equation |
| RBF | Recurrent radial basis function |
| RNN | Recurrent Neural Network |


| Techniques | Capabilities in Modeling Complex Systems |
|---|---|
| Classic models (RNNs, LSTMs …) | Are universal approximators, provide perception, temporal dependence and short memory |
| Seq2seq with attention | Integrates parts, models long term dependencies, guides a task by focusing on a set of elements (temporal, spatial, features …) |
| Memory networks | Integrate external data with the current task and provide an explicit external memory |
| Self-attention | Generalization of neural networks, relates input vectors in a more direct and symmetric way |

| Techniques | Operation | Use Cases | Costs | Applications |
|---|---|---|---|---|
| One-stage att. | Over the input or an intermediate layer; temporal, spatial … | Integrate parts; long term dependencies | Complexity; $T^2$ operations | Modeling; prediction |
| Several stages | Over the input, over an intermediate layer; temporal, spatial … | Integrate several parts; long term dependencies; multi-step reasoning | Complexity; $T^2$ operations each att. stage | Modeling; prediction; sequential reasoning |
| Memory networks | Over external data; temporal, spatial … | Integrate a memory | Complexity; $T \cdot T_{mem}$ operations ($T_{mem}$ memory elements) | Modeling; reasoning over a memory |
| Self-attention | Relate elements of the same sequence | General; encode an input | Complexity; non local operations | Replace neural networks |
| Combination | Combine the above elements | All of the above | Sum of the costs | All of the above |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hernández, A.; Amigó, J.M. Attention Mechanisms and Their Applications to Complex Systems. Entropy 2021, 23, 283. https://doi.org/10.3390/e23030283