1. Introduction

Human fall detection systems are an important segment of assistive technology, since many people depend on living assistance. In recent years, the elderly population has grown remarkably in Bangladesh as well as in Western countries. Statistics on human falls show that falls are a major cause of injury-related death among elders older than 79 years [1]. According to the National Institutes of Health of the United States, approximately 1.6 million elderly people in the U.S. sustain fall-related injuries every year [2]. Meanwhile, China is experiencing the fastest population aging in human history, and the proportion of elderly people is expected to rise from its 2020 level to about 35% by 2050 [2]. A study shows that about 93% of elders live in private houses and 29% of them live alone [3]. It has been reported that about 50% of elderly people who lie on the floor for more than one hour after a fall die within six months, even if they have no direct injuries [4].

Statistics from the Public Health Agency of Canada [5] are also noteworthy. By 2026, one in five Canadians will be older than 65, whereas in 2001 the proportion was one in eight. Notably, 93% of elderly people live in their private houses, and 29% of them live alone [5]. Moreover, almost 62% of injury-related hospitalizations of elders result from falls [6].

To detect falls in time, various methods have been proposed in recent years using devices such as wearable sensors, accelerometers, gyroscopes and magnetometers. However, these are not an effective solution, since wearing a device for a long time is impractical [7].

Therefore, a pervasive surveillance system for elderly people is indispensable: one that can immediately and automatically detect falls inside the room and notify caretakers. This requires a sensor-based alarm generation system placed in the living room.

In this paper, a deep learning and vision-based framework is proposed for human fall detection and classification that can also monitor elderly people in an indoor environment by combining a convolutional neural network (CNN) with a recurrent neural network (RNN). Among the different types of RNN, the gated recurrent unit (GRU) is integrated with the CNN. We have also explored transfer learning models such as VGG16 and VGG19 followed by a GRU to classify human fall actions. In addition, we have evaluated our proposed model on two prominent datasets, the UR fall detection dataset and the Multiple cameras fall dataset, on which it performs strongly compared with other existing models.

Moreover, with the expansion of technology, people are leading a sedentary lifestyle, which has numerous negative impacts [8]. Because of this lifestyle, people suffer from many diseases, such as chronic illness, muscle weakness, labyrinthitis and osteoporosis. In addition, the number of people living alone is increasing with the advancement of technology, which underlines the need for a monitoring system to detect adverse events [9]. The proposed model is applicable in medical alert systems and in old-age homes for monitoring individuals. The key contributions of our work are listed below:

Building a model from scratch that combines CNN with GRU to classify human fall events among activities of daily living.

Exploring a transfer learning approach by training recent pre-trained models, such as Xception and VGG, integrated with GRU, and evaluating their performance.

Fine-tuning two pre-trained models: VGG16 and VGG19 along with GRU.

Comparing the performance of the proposed model with other deep learning models, such as 3DCNN and 1DCNN, combined with GRU.

Implementing some of the best-performing recurrent neural networks, Long Short-Term Memory (LSTM) and Bidirectional LSTM (Bi-LSTM), combined with the proposed 2DCNN network, and evaluating their performance on two challenging datasets, the UR fall detection dataset and the Multiple cameras fall dataset.

The rest of this paper is organized as follows:

Section 2 reviews the literature on human fall detection,

Section 3 describes the workflow of the proposed architecture,

Section 4 presents experimental details to evaluate the efficiency of the proposed model and

Section 5 discusses the limitations of the proposed model along with potential future work.

2. Related Work

Over the past decade, among the different types of deep learning models [10], the convolutional neural network (CNN) has achieved immense success in tasks such as image segmentation [11], object recognition [12], natural language processing [13], image understanding [14] and machine translation [15], although it requires a huge training dataset.

A study by Erik E. Stone and Marjorie S. reveals that falls of older people at home mostly occur in dark conditions [16]. Sowmya K. and Kang-Hyun Jo [17] classify fall events in a cluttered indoor environment while reducing occlusion effects. Here, knowledge of a sequence of poses is key to distinguishing falls from non-falls. For foreground extraction, a frame differencing method is implemented, and the human silhouette is then approximated by an ellipse fit. A binary support vector machine (SVM) is applied to distinguish fall frames from non-fall frames. However, SVM underperforms when training samples are insufficient.
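The ellipse fit mentioned above can be illustrated with a short sketch. This is not the implementation of [17]; it is a generic moment-based ellipse fit that we assume is representative. It estimates the silhouette's centroid, the orientation of its major axis and its minor-to-major axis ratio from second-order image moments. The function name `fit_ellipse` is hypothetical.

```python
import numpy as np


def fit_ellipse(mask):
    """Fit an ellipse to a binary silhouette via second-order image moments.

    Returns (cx, cy, orientation_deg, axis_ratio), where orientation is the
    angle of the major axis from the image x-axis and axis_ratio is
    minor/major (close to 0 for an elongated shape, 1 for a circle).
    """
    ys, xs = np.nonzero(mask)
    cx, cy = xs.mean(), ys.mean()
    # Central second-order moments, normalized by the silhouette area.
    mu20 = np.mean((xs - cx) ** 2)
    mu02 = np.mean((ys - cy) ** 2)
    mu11 = np.mean((xs - cx) * (ys - cy))
    theta = 0.5 * np.arctan2(2.0 * mu11, mu20 - mu02)
    # Eigenvalues of the moment (covariance) matrix are proportional to the
    # squared semi-axis lengths of the equivalent ellipse.
    half_diff = np.sqrt(((mu20 - mu02) / 2.0) ** 2 + mu11 ** 2)
    lam_major = (mu20 + mu02) / 2.0 + half_diff
    lam_minor = (mu20 + mu02) / 2.0 - half_diff
    ratio = np.sqrt(max(lam_minor, 0.0) / lam_major) if lam_major > 0 else 1.0
    return cx, cy, np.degrees(theta), ratio


# Usage: an upright (tall, narrow) silhouette versus a lying (wide, flat) one.
standing = np.zeros((60, 60), dtype=bool)
standing[10:50, 25:35] = True
lying = standing.T.copy()
print(fit_ellipse(standing)[2:], fit_ellipse(lying)[2:])
```

An upright silhouette gives an orientation near ±90° and a lying one near 0°, which is why ellipse parameters serve as an inexpensive fall cue for a downstream classifier such as an SVM.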

A convolutional neural network (CNN) is used to identify different poses in [18]. Here, under different illumination conditions, the background subtraction method misclassifies some samples because of shadows. As a result, it generates false predictions for bending, crawling and sitting positions. Tamura et al. [19] developed a human fall detection system using a gyroscope and an accelerometer, which triggers a wearable airbag when a fall is detected. To design the system, 16 subjects performed mimicked falls, and a thresholding technique was applied to detect them.

A real-life action recognition system using a deep bidirectional LSTM (DB-LSTM) and a convolutional neural network (CNN) is presented in [20]. Here, the CNN extracts features from video frames, and the DB-LSTM recognizes hidden sequential patterns in these features. However, it produces false predictions with identical backgrounds and in occluded environments.

Du et al. [21] used a convolutional neural network (CNN) to extract skeleton joint maps from different images. However, the results could be improved by properly incorporating a recurrent neural network (RNN), as in [22]. Anderson et al. [23] created a surveillance environment using multiple cameras. Human silhouettes captured by the cameras are converted to a 3-D representation known as a voxel person. Finally, a fall event is classified from linguistic summarizations of temporal fuzzy inference curves, which represent the states of the voxel person.

Since the human body is an articulated system, different types of human action can be represented as movements of the skeleton. In [24], the 2D skeleton is extracted from RGB sequences using the DeeperCut method, and long short-term memory (LSTM) is implemented to identify five different actions. However, this approach is demanding in terms of processing speed and recognition performance.

The Faster R-CNN method is applied in [25] and achieves an accuracy of 95.5%, as it cannot properly classify fall events when a person is sitting on a sofa or a chair. In [26], a PCANet model followed by an SVM classifier is trained; it obtains a lower sensitivity of 88.87%, as it cannot identify fall events properly. Moreover, the SVM underperforms because its hyperparameters are not easy to fine-tune.

In [27], curvature scale space (CSS) features are extracted from human silhouettes, and an extreme learning machine (ELM) classifier is used to identify fall actions. However, this approach achieves only 86.83% accuracy, as it misclassifies lying and walking positions; the silhouettes of a falling and a lying person are similar.

A two-stage human fall classification model is proposed in [28], which achieves an accuracy of 97.34%. It uses OpenPose to extract the human skeleton and, at the preprocessing stage, considers deflection angles along with the spine ratio to identify human posture. A time-continuous recognition algorithm is developed to distinguish confusing activities of daily living from fall events. However, this model misclassifies workout motions, and the authors propose developing a deep learning model in the future to increase accuracy.

In addition, Chen et al. [29] detect human fall events from skeleton information extracted by OpenPose and obtain an accuracy of 97%. Three parameters are considered to identify fall actions: the angle between the body centerline and the ground, the descent speed of the center point of the hip joint, and the width-to-height ratio of the rectangle enclosing the human body. However, this model is unable to classify partially occluded human actions.
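To make the three cues of [29] concrete, the sketch below computes them from 2-D keypoints such as those OpenPose produces. It is a hypothetical illustration, not the implementation of [29]. We assume that the body centerline runs from the neck to the hip center, that image y grows downwards, and that hip positions from two consecutive frames separated by `dt` seconds are available; the function name `fall_cues` is ours.

```python
import numpy as np


def fall_cues(neck, hip, prev_hip, keypoints, dt):
    """Hypothetical version of the three skeleton cues described for [29].

    neck, hip, prev_hip: (x, y) pixel coordinates, image y pointing down.
    keypoints: iterable of (x, y) for all detected joints in the frame.
    dt: time in seconds between the previous and the current frame.
    """
    # 1. Angle between the body centerline (hip -> neck) and the ground,
    #    in degrees: about 90 when upright, about 0 when lying.
    dx = neck[0] - hip[0]
    dy = hip[1] - neck[1]
    angle = np.degrees(np.arctan2(abs(dy), abs(dx)))

    # 2. Descent speed of the hip center in pixels per second
    #    (positive when the hip moves down the image).
    speed = (hip[1] - prev_hip[1]) / dt

    # 3. Width-to-height ratio of the rectangle enclosing all joints.
    pts = np.asarray(keypoints, dtype=float)
    width = pts[:, 0].max() - pts[:, 0].min()
    height = pts[:, 1].max() - pts[:, 1].min()
    ratio = width / height if height > 0 else float("inf")
    return angle, speed, ratio


# Usage: an upright pose, then a pose whose hip dropped 30 px in one frame at 30 fps.
upright = fall_cues((50, 10), (50, 60), (50, 60), [(40, 10), (60, 10), (50, 100)], dt=1 / 30)
fallen = fall_cues((10, 90), (60, 92), (60, 62), [(10, 85), (110, 90), (60, 100)], dt=1 / 30)
print(upright, fallen)
```

A fall shows up as a small centerline angle, a large positive descent speed and a width-to-height ratio above 1; how these are thresholded or combined is not specified here.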

Moreover, a vision-based human fall detection model for complex backgrounds is proposed by Chen et al. [7]. They use Mask R-CNN to extract the object from a noisy background. Afterward, an attention-guided bidirectional LSTM is applied for fall action detection, achieving 96.7% accuracy. However, this model cannot identify the behavior of multiple people living in the same room.

Nowadays, different types of wearable sensors, such as accelerometers and help buttons, are most frequently used to detect falls in time. However, such detectors are uncomfortable, and older people often forget to wear them. Moreover, a help button is useless if the person has fainted or is immobilized. A framework for elderly fall classification and notification is proposed in [9]. Here, the tri-axial acceleration of human movement is measured with a cell phone, and both time-domain and frequency-domain features are considered. After pre-processing and feature extraction, a deep belief network is used to train and test the system. It achieves 97.56% sensitivity and 97.03% specificity. However, elderly people do not always carry a cell phone in an indoor environment.

In [8], the authors conducted a promising experiment to develop a hybrid model combining a machine learning method with deep learning. After several comparative experiments, they proposed a CNN-LSTM architecture for posture detection with an accuracy of 98%. This CNN model has 10 layers without any batch normalization. Unnormalized data and a low dropout rate of 20% can lead to long training times and may also affect the performance of the model.

Table 1 summarizes this literature review.

As deep learning models have outperformed earlier state-of-the-art approaches, there is considerable scope for innovation and development in this research area. In this work, a computer vision-based model is proposed to classify fall events in time, which provides complete information about the movement of a person.

3. Workflow of Proposed Architecture

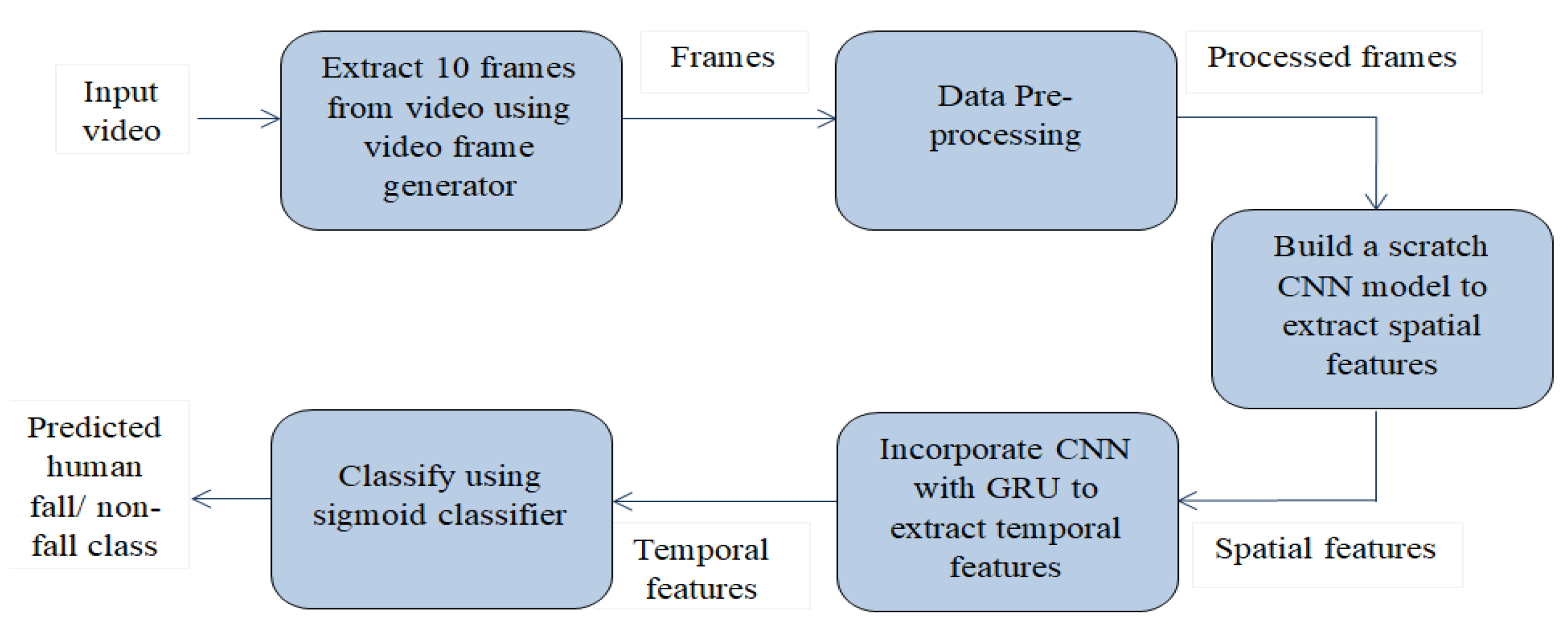

Elderly people are monitored through a digital video camera placed in the room, since the Kinect camera has distance limitations. Although a video camera raises some privacy concerns, it provides information about the surroundings in case of fall events. Sequential frames are generated from videos of variable length and passed into the convolutional neural network to extract key features. These features are then passed into a gated recurrent unit, and the output of the GRU is passed to a sigmoid classifier to predict the class.

Figure 1 illustrates an overview of the proposed network.

3.1. Frame Generation from Video

There are approximately 300 videos of various durations from different datasets. From each video, a sequence of frames must be passed to the CNN. As mentioned earlier, we picked 10 frames distributed over the whole video rather than using all frames. The algorithm that generates the frame sequence is given below: in Algorithm 1, a frame differencing method is applied, along with other operations, to select important and informative frames from the video.

Algorithm 1 Video to frame generation.

Input: frames of a video, number of frames to extract (nb_frame)
Output: batches of images
move_detect = statistical mean threshold value
if move_detect > 0
    f = frames[0]
    last = convert f from RGB to GRAY
    important_frame = []
    for frame number i = 1 to length(frames) - 1
        f = frames[i]
        cp = convert f from RGB to GRAY
        delta = absolute difference(cp, last)
        thresh = threshold(delta, threshold_value, 255, threshold_binary)
        thresh = dilate(thresh, structuring_element, iterations)
        if np.mean(thresh) > move_detect
            mark f as important_frame
        end
        last = cp
    end
    nb_ignore = length(important_frame) / nb_frame
    if length(important_frame) > nb_frame
        collect frames from last, ignoring nb_ignore frames
    end
end
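Algorithm 1 can be sketched in Python with NumPy alone. This is one possible reading rather than the exact implementation. The grayscale conversion, binary threshold and dilation reimplement what OpenCV's `cvtColor`, `threshold` and `dilate` would do. We assume that `move_detect` is the mean motion score over the video, that `threshold_value = 25` with two dilation iterations of a 3 × 3 structuring element is adequate, and that "collect frames from last, ignoring nb_ignore frames" means taking every nb_ignore-th important frame counting back from the last one. The fallback to evenly spaced frames when too few frames show motion is our own addition.

```python
import numpy as np


def to_gray(frame):
    """RGB -> grayscale with ITU-R BT.601 luma weights (as OpenCV uses)."""
    return frame[..., :3].astype(np.float64) @ np.array([0.299, 0.587, 0.114])


def dilate(mask, size=3, iterations=1):
    """Binary dilation with a size x size square structuring element."""
    pad = size // 2
    h, w = mask.shape
    for _ in range(iterations):
        padded = np.pad(mask, pad, mode="constant")
        out = np.zeros_like(mask)
        for dy in range(size):
            for dx in range(size):
                out = np.maximum(out, padded[dy:dy + h, dx:dx + w])
        mask = out
    return mask


def select_frames(frames, nb_frame=10, threshold_value=25.0, iterations=2):
    """Return the indices of nb_frame motion-rich frames (Algorithm 1 sketch)."""
    last = to_gray(frames[0])
    scores = []  # scores[k] is the motion score of frames[k + 1]
    for frame in frames[1:]:
        cp = to_gray(frame)
        delta = np.abs(cp - last)                               # frame difference
        thresh = np.where(delta > threshold_value, 255.0, 0.0)  # binary threshold
        thresh = dilate(thresh, size=3, iterations=iterations)
        scores.append(thresh.mean())
        last = cp

    # Assumption: the "statistical mean threshold" is the mean motion score.
    move_detect = float(np.mean(scores)) if scores else 0.0
    important = [k + 1 for k, s in enumerate(scores) if s > move_detect]

    if len(important) < nb_frame:
        # Assumption: too little motion, so fall back to evenly spaced frames.
        return np.linspace(0, len(frames) - 1, nb_frame).round().astype(int).tolist()

    # Keep every nb_ignore-th important frame, counting back from the last one.
    nb_ignore = len(important) // nb_frame
    return sorted(important[::-1][::nb_ignore][:nb_frame])


# Usage: 30 synthetic frames with a white square moving right in frames 10-19.
video = [np.zeros((48, 64, 3), dtype=np.uint8) for _ in range(30)]
for k in range(10, 20):
    video[k][20:30, 3 * k - 20:3 * k - 10] = 255
idx = select_frames(video, nb_frame=10)
```

Returning indices rather than images keeps the function easy to test; the selected frames are `[video[i] for i in idx]`. A static video (no motion at all) falls through to the evenly spaced fallback, which mirrors the `move_detect > 0` guard of Algorithm 1.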

3.2. Preprocessing

Preprocessing is necessary to improve image properties, eliminate noisy artifacts and enhance certain features. We performed preprocessing in three steps: resizing, augmentation and normalization. To minimize computational cost, frames are resized to 150 × 150.

Augmentation is performed on the resized images and transforms the frames at each training epoch. For augmentation, we applied zoom, horizontal flip, rotation, width shift and height shift, which helps the model generalize better.
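A minimal NumPy sketch of the three preprocessing steps follows. The 150 × 150 size comes from the text; nearest-neighbor resizing, scaling pixel values to [0, 1] as the normalization step and the ±10% shift range are assumptions. Only horizontal flip and width/height shift are shown; zoom and rotation would be added in the same per-clip manner. Applying one random transform to every frame of a clip, so that the motion between frames is preserved, is our design choice rather than something the text specifies.

```python
import numpy as np

TARGET = 150  # frames are resized to 150 x 150


def resize_nearest(frame, size=TARGET):
    """Nearest-neighbor resize of an (H, W, C) frame to (size, size, C)."""
    h, w = frame.shape[:2]
    rows = (np.arange(size) * h / size).astype(int)
    cols = (np.arange(size) * w / size).astype(int)
    return frame[rows][:, cols]


def shift(img, dy, dx):
    """Translate an image by (dy, dx) pixels, filling uncovered pixels with 0."""
    out = np.zeros_like(img)
    h, w = img.shape[:2]
    src_y, dst_y = (slice(0, h - dy), slice(dy, h)) if dy >= 0 else (slice(-dy, h), slice(0, h + dy))
    src_x, dst_x = (slice(0, w - dx), slice(dx, w)) if dx >= 0 else (slice(-dx, w), slice(0, w + dx))
    out[dst_y, dst_x] = img[src_y, src_x]
    return out


def preprocess_clip(frames, rng, train=True, max_shift=0.1):
    """Resize, normalize to [0, 1] and (when training) augment a list of frames."""
    clip = np.stack([resize_nearest(f) for f in frames]).astype(np.float32) / 255.0
    if train:
        # One random transform per clip, so that all frames move together.
        if rng.random() < 0.5:
            clip = clip[:, :, ::-1]  # horizontal flip along the width axis
        limit = int(max_shift * TARGET)
        dy, dx = (int(v) for v in rng.integers(-limit, limit + 1, size=2))
        clip = np.stack([shift(f, dy, dx) for f in clip])
    return clip


rng = np.random.default_rng(0)
clip = [np.full((240, 320, 3), 200, dtype=np.uint8) for _ in range(10)]
batch = preprocess_clip(clip, rng, train=True)
print(batch.shape)  # (10, 150, 150, 3)
```

In practice a library augmentation pipeline would replace these helpers; the point here is the order of operations and that augmentation is applied only during training.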

3.3. Convolutional Neural Network

A convolutional neural network is a type of deep neural network that extracts key features from images using learnable weights and biases and can differentiate one object from another. Compared with other classification algorithms, it requires much less image preprocessing. It consists of an input layer and an output layer, along with a series of hidden layers. The hidden layers typically consist of a stack of convolutional layers, which slide learnable kernels over the input, multiplying and summing the overlapping values to generate a convolved image (feature map). The activation function used here is the rectified linear unit (ReLU), and the convolutional layers are followed by pooling layers and fully connected layers.
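The convolution and ReLU operations described above can be sketched in NumPy as follows. As in most deep learning libraries, the "convolution" is computed as a cross-correlation (the kernel is not flipped); stride 1 and no padding are assumed, and the 2 × 2 edge kernel in the usage example is illustrative.

```python
import numpy as np


def conv2d(image, kernel, bias=0.0):
    """'Valid' 2-D convolution with stride 1, computed as cross-correlation
    (the kernel is not flipped), which is what CNN libraries compute."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # Element-wise multiply the window by the kernel, then sum.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel) + bias
    return out


def relu(x):
    """ReLU: keeps positive responses and sets negative ones to zero."""
    return np.maximum(x, 0.0)


image = np.array([[0, 0, 9, 9]] * 4, dtype=float)  # dark left half, bright right half
edge = np.array([[-1.0, 1.0], [-1.0, 1.0]])         # responds to dark-to-bright edges
print(relu(conv2d(image, edge)))
```

Only the window straddling the dark-to-bright boundary produces a positive response; flipping the kernel's sign makes every response non-positive, and ReLU then zeros the whole map.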

There are three common types of pooling operations: max pooling, min pooling and average pooling. Among these, max pooling is the most frequently used. Pooling reduces the spatial size of the feature maps, which lowers the computational cost and the number of learnable parameters in the layers that follow.
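The three pooling operations differ only in how each window is reduced. The sketch below assumes a 2 × 2 window with stride 2, matching the stride (2, 2) used later in the architecture; the function name `pool2d` is ours.

```python
import numpy as np


def pool2d(x, size=2, stride=2, mode="max"):
    """2-D pooling over non-padded windows; mode is 'max', 'min' or 'avg'."""
    reduce = {"max": np.max, "min": np.min, "avg": np.mean}[mode]
    oh = (x.shape[0] - size) // stride + 1
    ow = (x.shape[1] - size) // stride + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            window = x[i * stride:i * stride + size, j * stride:j * stride + size]
            out[i, j] = reduce(window)
    return out


fmap = np.arange(16, dtype=float).reshape(4, 4)
for mode in ("max", "min", "avg"):
    print(mode, pool2d(fmap, mode=mode).tolist())
# max [[5.0, 7.0], [13.0, 15.0]]
# min [[0.0, 2.0], [8.0, 10.0]]
# avg [[2.5, 4.5], [10.5, 12.5]]
```

Each variant halves the height and width of the 4 × 4 map; max pooling keeps the strongest response in each window, which is why it tends to preserve pronounced edges.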

For extracting features from images, a CNN uses this series of convolutional layers followed by pooling layers, a flatten layer and fully connected layers, and each layer may use a different activation function. Accordingly, the proposed architecture is designed with a series of convolution layers with the ReLU activation function. ReLU, defined as f(x) = max(0, x), generates a rectified feature map as output. It does not activate all neurons at the same time: when the output of the linear transformation is negative, ReLU outputs zero and the neuron is effectively deactivated. Although there are different types of pooling layers, we have used the max-pooling layer.

3.4. Custom 2DCNN-GRU Architecture

Preprocessed data are fed to the CNN. We propose a CNN architecture for extracting spatial features. As the environment in the dataset is relatively simple, 16, 32, 64, 128, 256 and 512 kernels are used in successive convolution layers to extract features from the images. The weights of each layer are tuned in combination with its activation function. This network achieves higher accuracy than the pre-trained CNNs because the kernel initializer and activation function were chosen experimentally. In this model, the he_uniform kernel initializer is used to initialize the kernel weights, together with the ReLU activation function. We also conducted an experiment to choose an appropriate momentum for batch normalization, which is performed with a momentum of 0.9. Batch normalization standardizes the mean and variance of layer inputs, which makes learning stable. With batch normalization, an epoch takes 83 s to complete; without it, an epoch takes 99 s when training on the dataset. After batch normalization, max pooling with stride (2, 2) is performed to retain the strong edges in the image, which also helps remove weak edges corresponding to noise. The outputs of the convolution layer (the convolved image) and the max-pooling layer (the pooled feature map) are shown in Figure 2 and Figure 3, respectively.
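The he_uniform initialization and batch normalization with momentum 0.9 can be illustrated with a small NumPy sketch. The He uniform rule draws weights from U(-limit, limit) with limit = sqrt(6 / fan_in). The batch-normalization class follows the Keras convention for running statistics (moving = momentum × moving + (1 - momentum) × batch statistic) and Keras's default epsilon of 0.001. The names `he_uniform` and `BatchNorm` are hypothetical, the layer shapes in the usage lines are illustrative, and the learning of gamma and beta is omitted.

```python
import numpy as np


def he_uniform(fan_in, shape, rng):
    """He uniform initializer: U(-limit, limit) with limit = sqrt(6 / fan_in)."""
    limit = np.sqrt(6.0 / fan_in)
    return rng.uniform(-limit, limit, size=shape)


class BatchNorm:
    """Per-channel batch normalization with Keras-style moving statistics."""

    def __init__(self, channels, momentum=0.9, eps=1e-3):
        self.gamma = np.ones(channels)   # learnable scale (kept fixed here)
        self.beta = np.zeros(channels)   # learnable shift (kept fixed here)
        self.moving_mean = np.zeros(channels)
        self.moving_var = np.ones(channels)
        self.momentum, self.eps = momentum, eps

    def __call__(self, x, training):
        axes = tuple(range(x.ndim - 1))  # every axis except the channel axis
        if training:
            mean, var = x.mean(axis=axes), x.var(axis=axes)
            m = self.momentum
            self.moving_mean = m * self.moving_mean + (1 - m) * mean
            self.moving_var = m * self.moving_var + (1 - m) * var
        else:
            mean, var = self.moving_mean, self.moving_var
        return self.gamma * (x - mean) / np.sqrt(var + self.eps) + self.beta


rng = np.random.default_rng(0)
kernel = he_uniform(fan_in=3 * 3 * 16, shape=(3, 3, 16, 32), rng=rng)  # 3x3 conv, 16 -> 32
feature_maps = rng.normal(5.0, 2.0, size=(8, 18, 18, 32))             # (batch, H, W, channels)
bn = BatchNorm(32)
normalized = bn(feature_maps, training=True)
```

With momentum 0.9, each training batch moves the running statistics 10% of the way toward the batch statistics; at inference the running values are used instead of the batch values.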

After that, a time-distributed layer with 512 nodes is used to prepare the data for the RNN. GRU cells then capture the temporal dependency between video frames, and the output of the GRU cell is passed to a dense layer. To reduce overfitting, we experimented with the dropout rate, and a dropout rate of 0.5 is used in the dense layer for better accuracy. The Adam optimizer with a learning rate of 0.0001 is used in the model. The details of the proposed 2DCNN-GRU architecture are depicted in Figure 4.
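A Keras sketch of the 2DCNN-GRU architecture is given below, assuming TensorFlow 2.x with Keras is installed. The filter counts (16 to 512), he_uniform initializer, ReLU, batch-normalization momentum of 0.9, max pooling with stride (2, 2), the 512-unit time-distributed layer, dropout of 0.5, sigmoid output, binary cross-entropy, Adam with a learning rate of 0.0001 and the input of 10 frames of 150 × 150 follow the text. The 3 × 3 kernel size, "same" padding, the Flatten step, 64 GRU units and the 64-unit dense layer are assumptions, so the parameter count will not necessarily match the authors' model.

```python
import tensorflow as tf
from tensorflow.keras import layers, models

SEQ_LEN, H, W, C = 10, 150, 150, 3  # 10 frames per video, each 150 x 150 RGB


def build_frame_cnn():
    """Per-frame 2D CNN: six conv blocks with 16 ... 512 filters."""
    cnn = models.Sequential([layers.Input(shape=(H, W, C))])
    for filters in (16, 32, 64, 128, 256, 512):
        # Assumed 3 x 3 kernels with 'same' padding.
        cnn.add(layers.Conv2D(filters, (3, 3), padding="same", activation="relu",
                              kernel_initializer="he_uniform"))
        cnn.add(layers.BatchNormalization(momentum=0.9))
        cnn.add(layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
    cnn.add(layers.Flatten())  # 150 -> 75 -> 37 -> 18 -> 9 -> 4 -> 2, so 2 * 2 * 512
    return cnn


def build_cnn_gru(gru_units=64, dense_units=64):
    model = models.Sequential([
        layers.Input(shape=(SEQ_LEN, H, W, C)),
        layers.TimeDistributed(build_frame_cnn()),                     # (batch, 10, 2048)
        layers.TimeDistributed(layers.Dense(512, activation="relu")),  # 512 nodes per frame
        layers.GRU(gru_units),                                         # temporal dependency
        layers.Dense(dense_units, activation="relu"),
        layers.Dropout(0.5),
        layers.Dense(1, activation="sigmoid"),                         # fall vs. non-fall
    ])
    model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
                  loss="binary_crossentropy", metrics=["accuracy"])
    return model


model = build_cnn_gru()
model.summary()
```

Wrapping the whole per-frame CNN in `TimeDistributed` shares its weights across the 10 frames, so the GRU receives one 512-dimensional feature vector per frame.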

3.5. Gated Recurrent Unit (GRU)

To classify fall events among all kinds of human movements, we need to consider temporal features along with spatial features. A recurrent neural network can extract temporal features by remembering necessary information from the past. However, during training it suffers from vanishing and exploding gradient problems. In our model, we use a gated recurrent unit (GRU) network to mitigate this problem of the RNN through an update gate and a reset gate, which are vectors that decide what information is passed to the output. These gates can be trained to retain information from many time steps earlier without it being washed out over time, while passing the information relevant for prediction on to the next time steps. In our experiments, GRU gives better results than a long short-term memory (LSTM) cell, which we attribute to its simpler architecture.

Figure 5 depicts the architecture of a GRU cell. A GRU cell has two gates, the update gate and the reset gate, together with the current memory content; unlike LSTM, it has no internal cell state. The update gate determines how much information from previous time steps needs to be passed along to the future. The reset gate decides how much of the past information needs to be forgotten. The current memory content combines the current input with the relevant information from the past, as selected by the reset gate.

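The following equations, adapted from [30], are used to perform these operations:

$$R_t = \sigma(X_t W_{xr} + H_{t-1} W_{hr} + b_r)$$

$$Z_t = \sigma(X_t W_{xz} + H_{t-1} W_{hz} + b_z)$$

$$\tilde{H}_t = \tanh(X_t W_{xh} + (R_t \odot H_{t-1}) W_{hh} + b_h)$$

$$H_t = Z_t \odot H_{t-1} + (1 - Z_t) \odot \tilde{H}_t$$

Here, $X_t$ represents the minibatch input, $H_{t-1}$ is the hidden state of the previous time step, $R_t$ and $Z_t$ are the reset and update gates, $\tilde{H}_t$ is the current memory content, $W_{xr}$, $W_{xz}$, $W_{xh}$ and $W_{hr}$, $W_{hz}$, $W_{hh}$ are weight parameters, $b_r$, $b_z$, $b_h$ are bias parameters, $\sigma$ is the sigmoid function and $\odot$ denotes element-wise multiplication.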
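One GRU time step can be written directly in NumPy. The sketch below implements the standard GRU formulation (reset gate, update gate, candidate state, and interpolation between the previous and candidate states); the weight names mirror the usual notation. The small random initialization, the 512-dimensional input, the 10 time steps and the 64 hidden units in the usage lines are assumptions for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_gru(d, h, rng, scale=0.01):
    """Random GRU parameters for input size d and hidden size h."""
    def w(shape):
        return rng.normal(0.0, scale, size=shape)
    return {
        "W_xr": w((d, h)), "W_hr": w((h, h)), "b_r": np.zeros(h),
        "W_xz": w((d, h)), "W_hz": w((h, h)), "b_z": np.zeros(h),
        "W_xh": w((d, h)), "W_hh": w((h, h)), "b_h": np.zeros(h),
    }


def gru_step(X_t, H_prev, p):
    R = sigmoid(X_t @ p["W_xr"] + H_prev @ p["W_hr"] + p["b_r"])             # reset gate
    Z = sigmoid(X_t @ p["W_xz"] + H_prev @ p["W_hz"] + p["b_z"])             # update gate
    H_tilde = np.tanh(X_t @ p["W_xh"] + (R * H_prev) @ p["W_hh"] + p["b_h"])  # candidate
    return Z * H_prev + (1.0 - Z) * H_tilde                                 # new hidden state


def gru_forward(X, p):
    """X has shape (batch, time, d); returns the last hidden state (batch, h)."""
    H = np.zeros((X.shape[0], p["b_r"].shape[0]))
    for t in range(X.shape[1]):
        H = gru_step(X[:, t], H, p)
    return H


rng = np.random.default_rng(0)
params = init_gru(d=512, h=64, rng=rng)
X = rng.normal(size=(2, 10, 512))  # 2 clips, 10 frames, 512 features per frame
H_last = gru_forward(X, params)
```

Because each new state is a convex combination of the previous state and a `tanh` output, the hidden state stays within (-1, 1), and an update gate near 1 lets information pass through many steps almost unchanged.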

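The output of the GRU cell is passed to the dense layer with a dropout rate of 50%. Finally, the sigmoid activation function [31] is applied for binary classification of fall and non-fall actions:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

where $z$ is the output of the final dense layer and $\sigma(z) \in (0, 1)$ is the predicted class probability.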
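The classification head can be sketched as follows: inverted dropout, active only during training, on the features entering the final dense unit, then a sigmoid that maps the logit to a probability. Treating label 1 as "fall", the 0.5 decision threshold and the exact placement of dropout are assumptions, and `fall_head` is a hypothetical name.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def dropout(x, rate, rng, training):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)


def fall_head(features, W, b, rng, training=False, rate=0.5, threshold=0.5):
    """Dropout -> dense unit -> sigmoid; returns (probability, label)."""
    h = dropout(features, rate, rng, training)
    p = sigmoid(h @ W + b)                  # shape (batch, 1)
    return p, (p >= threshold).astype(int)  # label 1 = fall (assumption)


rng = np.random.default_rng(0)
features = np.array([[1.0, 1.0, 1.0, 1.0], [-1.0, -1.0, -1.0, -1.0]])
W, b = np.ones((4, 1)), np.zeros(1)
probs, labels = fall_head(features, W, b, rng)  # inference: no dropout
print(labels.ravel())  # [1 0]
```

Rescaling the kept units by 1 / (1 - rate) during training keeps the expected activation unchanged, so no correction is needed at inference time.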

Entropy measures the level of uncertainty, and training a deep learning model aims to reduce the uncertainty of its predictions. Binary cross-entropy plays a significant role in reducing the discrepancy between the empirical distribution of the training data and the distribution induced by the model. The following binary cross-entropy loss function [32] is used to compare the predicted class with the actual class:

$$L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$$

Here, $y_i$ denotes the ground-truth value, $p_i$ represents the sigmoid probability predicted for the $i$th sample and $N$ is the number of samples.
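The binary cross-entropy loss can be computed as below. Clipping the probabilities away from 0 and 1 is a common numerical safeguard that we add; it is not mentioned in the text.

```python
import numpy as np


def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross-entropy between labels in {0, 1} and sigmoid probabilities."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


p = 1.0 / (1.0 + np.exp(-np.array([2.0, -2.0])))  # sigmoid outputs
loss = binary_cross_entropy([1, 0], p)
```

For a fall (y = 1) predicted with p = σ(2) ≈ 0.881 and a non-fall (y = 0) predicted with p = σ(-2) ≈ 0.119, both terms equal log(1 + e^-2), so the loss is about 0.127; confident wrong predictions are penalized much more heavily.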

5. Conclusions

As deep learning algorithms outperform other feature extraction algorithms, in this paper we have proposed a combined architecture in which a CNN is incorporated with a GRU. Identifying human falls in time is challenging, and it matters not only to minimize the negative consequences of a fall but also to increase acceptance among elderly people. We have used two existing benchmark datasets for fall classification, the UR fall detection dataset and the Multiple cameras fall dataset, which contain videos of variable length. The number of frames taken from each video is chosen empirically. To perform classification on these datasets, a model built from scratch, in which a CNN is followed by a GRU, is proposed; this is our key contribution. Another novelty of our work is that we have evaluated several transfer learning models along with other deep learning models on the same datasets. Through parameter tuning, the proposed model outperforms them with an accuracy of 99% and fewer parameters than other existing architectures. The binary cross-entropy loss function performs better than alternatives such as mean squared error and hinge loss. Nevertheless, the results could be improved further by enriching the dataset. In the future, an alarm system could be included to take necessary action in time and reduce the after-effects of a fall. Moreover, since the proposed architecture extracts features from the entire frame, the computation time could be reduced by considering only the salient region. Furthermore, this model could be combined with people-counting techniques to identify the actions of different people residing in the same room.