A Traditional Scientific Perspective on the Integrated Information Theory of Consciousness

Abstract

1. Introduction

2. Integrated Information Theory

2.1. Strengths of IIT

2.2. Potential Weaknesses of IIT

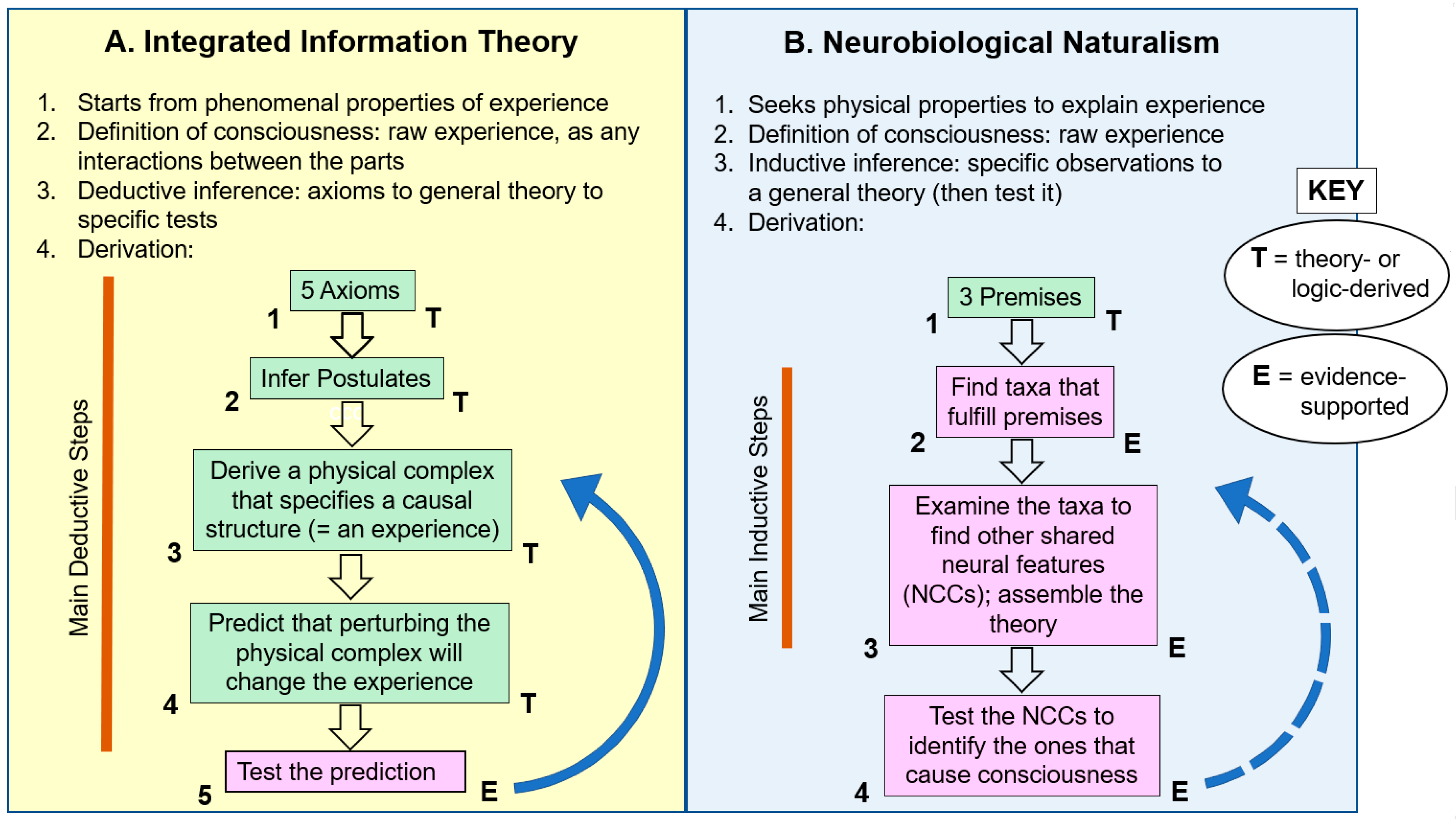

2.2.1. The Way IIT Was Constructed

2.2.2. Too Low a Bar for Consciousness?

2.2.3. Affective Consciousness?

2.2.4. Section Summary

3. Neurobiological Naturalism and IIT

3.1. Introduction and Initial Comparisons

3.2. Derivation of the Neurobiological Naturalism Theory

The typical pattern in science has consisted of three stages. First we find correlations [the neural correlates of consciousness or NCC]... The second step is to check to see whether or not the correlation is a genuine causal correlation... The usual tests for causation, as applied to this problem, would be, first, can you produce consciousness in an unconscious subject by producing the NCC, and, second, can you shut down the consciousness of a conscious subject by shutting down the NCC? All of this is familiar scientific practice. The third step, and we are a long way from reaching this step, is to get a general theoretic account... Why should these causes produce these effects? [77] (p. 172)

... we are in Chalmers’ [96] taxonomy committed Type-C Materialists. We are unimpressed by conceivability arguments [that zombies could exist]. We think that scientific advances will show where such arguments go wrong, as they have in other scientific domains. [97] (p. 2)

3.3. Neurobiological Naturalism is an Inductive Theory, But of What Value?

- how consciousness is a classic example of an emergent property of complex systems (Section 3.1);

- which organisms have consciousness (vertebrates, arthropods, cephalopods), and, by extension, the time when consciousness first evolved (about 550 million years ago, when the fossil record shows the vertebrates and arthropods had diverged [70]);

- a route to determine which of these NCCs generate consciousness, and how this happens, by applying Searle’s recipe that uses the scientific method (Section 3.2);

- how a reconsideration of the known physical barriers can solve some aspects of the mind/body problem (Section 3.2 and [73]).

3.4. Artificial Consciousness

3.5. Potential Weaknesses of Neurobiological Naturalism

4. Conclusions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

- In IIT, this relationship between the physical and the experience is not directly causal. Rather, if the physical elements specify a maximally irreducible, specific, compositional, intrinsic cause-effect power, also called a “MICS” or just a “causal structure,” then that cause-effect power is identical to an experience and everything within that experience must be accounted for in causal terms. Physical elements are the substrate of consciousness, but do not cause it. Instead, if they have the appropriate causal properties, then that causal power will be identical to consciousness, but the substrate itself will not be (Robert Chis-Ciure, personal communication).

- Hard problem and explanatory gap mean basically the same thing, the hard problem just being the difficult problem of bridging the gap. Both ask how physical processes could ever be accompanied by any experience, and by different experiences, such as “red” versus “green”.

- Again, the MICS is a calculated, abstract representation of an experience and the experience’s subparts in multidimensional space [2]. It is the experience, according to IIT.

- A note is in order on the types of evidence to which I refer. Along with the evidence of objectively observable facts, science could probably use and investigate the “phenomenal facts” of experiences, as long as such “facts” seem consistent across different individuals. An example of a phenomenal fact is that the loudness level one experiences roughly doubles with each 10-decibel increase in sound intensity [122]. However, I did not find that the construction of IIT used any phenomenal facts beyond its axiom/postulate stage—it just used math and logic. Thus, I am only considering how IIT uses objective, physical evidence.

- The computational difficulty of ramping up from a simple system with just a few nodes to the “intermediate” level of the C. elegans nervous system is that IIT’s Transition Probability Matrix would be very hard to calculate. C. elegans has 302 neurons (nodes), so all these elements must be perturbed in all possible states, and all possible state transitions must be recorded. Even for just ON/OFF (firing/silence) states of neurons, for 302 nodes there are 2^302 ≈ 8.148 × 10^90 possible states. One must compute the probability for each of these states to transition into any other one to kickstart the IIT analysis, and that is far beyond the capacity of existing computers. However, there may be an empirical way around this problem. Marshall et al. [63] argued that the desired ϕ parameter scales almost linearly with the amount of differentiation of a system’s potential states, which is measured as D. This D is easier and faster to compute than ϕ and might be used as a proxy for ϕ in the intermediate systems (Robert Chis-Ciure, personal communication).

- Absolute measures of complexity are difficult to obtain, so NN often uses relative measures instead. For example, according to NN’s analysis in Section 3.2, the roundworm C. elegans is not conscious with its brain of 140 neurons, but arthropods are conscious with their brains of ≤100,000 neurons [5] (Chapter 9). Thus NN uses a relative measure to say the lower limit for consciousness is a brain that is more complex than the worm’s but less complex than the arthropod’s.
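The state-count figure in the C. elegans note above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of IIT's own tooling: the function names `binary_state_count` and `tpm_entry_count` are hypothetical, and the sketch assumes a state-by-state Transition Probability Matrix with one entry for every ordered pair of ON/OFF network states, as the note describes.

```python
def binary_state_count(n_nodes: int) -> int:
    """Number of joint states of n_nodes elements that are each ON or OFF."""
    return 2 ** n_nodes


def tpm_entry_count(n_nodes: int) -> int:
    """Entries in a state-by-state transition matrix (assumed layout):
    one probability for each (current state, next state) pair."""
    return binary_state_count(n_nodes) ** 2


if __name__ == "__main__":
    n = 302  # neuron count for C. elegans given in the note
    states = binary_state_count(n)
    entries = tpm_entry_count(n)
    # Python integers are arbitrary precision, so both counts are exact;
    # they are converted to float only for compact scientific notation.
    print(f"states for {n} binary nodes: {float(states):.3e}")
    print(f"state-to-state transition probabilities: {float(entries):.3e}")
```

Under these assumptions the first line reproduces the 8.148 × 10^90 figure quoted in the note, and the second shows that the number of transition probabilities is the square of that count, which is why an exhaustive perturbation of the 302-node system is out of reach.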

References

- Tononi, G. Integrated information theory. Scholarpedia 2015, 10, 4164. Available online: http://www.scholarpedia.org/article/Integrated_information_theory (accessed on 3 May 2021). [CrossRef]

- Oizumi, M.; Albantakis, L.; Tononi, G. From the phenomenology to the mechanisms of consciousness: Integrated Information Theory 3.0. PLoS Comput. Biol. 2014, 10, e1003588. [Google Scholar] [CrossRef] [PubMed]

- Tononi, G.; Boly, M.; Massimini, M.; Koch, C. Integrated information theory: From consciousness to its physical substrate. Nat. Rev. Neurosci. 2016, 17, 450–461. [Google Scholar] [CrossRef] [PubMed]

- Feinberg, T.E. Neuroontology, neurobiological naturalism, and consciousness: A challenge to scientific reduction and a solution. Phys. Life Rev. 2012, 9, 13–34. [Google Scholar] [CrossRef] [PubMed]

- Feinberg, T.E.; Mallatt, J.M. The Ancient Origins of Consciousness: How the Brain Created Experience; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Feinberg, T.E.; Mallatt, J. The nature of primary consciousness. A new synthesis. Conscious. Cogn. 2016, 43, 113–127. [Google Scholar] [CrossRef] [PubMed]

- Crick, F.; Koch, C. Towards a neurobiological theory of consciousness. Semin. Neurosci. 1990, 2, 263–275. [Google Scholar]

- Blackmore, S.; Troscianko, E.T. Consciousness: An Introduction, 3rd ed.; Routledge: London, UK, 2018. [Google Scholar]

- Koch, C. The Feeling of Life Itself: Why Consciousness is Widespread but Can’t Be Computed; MIT Press: Cambridge, MA, USA, 2019. [Google Scholar]

- Lamme, V.A. Towards a true neural stance on consciousness. Trends Cogn. Sci. 2006, 10, 494–501. [Google Scholar] [CrossRef]

- Min, B.K. A thalamic reticular networking model of consciousness. Theor. Biol. Med. Model. 2010, 7, 1–18. [Google Scholar] [CrossRef]

- Baars, B.J.; Franklin, S.; Ramsøy, T.Z. Global workspace dynamics: Cortical “binding and propagation” enables conscious contents. Front. Psychol. 2013, 4, 200. [Google Scholar] [CrossRef]

- Solms, M.; Friston, K. How and why consciousness arises: Some considerations from physics and physiology. J. Conscious. Stud. 2018, 25, 202–238. [Google Scholar]

- Mashour, G.A.; Roelfsema, P.; Changeux, J.P.; Dehaene, S. Conscious processing and the global neuronal workspace hypothesis. Neuron 2020, 105, 776–798. [Google Scholar] [CrossRef]

- Available online: https://en.wikipedia.org/wiki/Consciousness (accessed on 5 May 2021).

- Nagel, T. What is it like to be a bat? Philos. Rev. 1974, 83, 435–450. [Google Scholar] [CrossRef]

- Mallatt, J.; Blatt, M.R.; Draguhn, A.; Robinson, D.G.; Taiz, L. Debunking a myth: Plant consciousness. Protoplasma 2021, 1–18. [Google Scholar] [CrossRef]

- Chis-Ciure, R.; Ellia, F. Facing up to the hard problem of consciousness as an integrated information theorist. Found. Sci. 2021, 1–17. [Google Scholar] [CrossRef]

- Mallatt, J.; Feinberg, T.E. Sentience in evolutionary context. Anim. Sentience 2020, 5, 14. [Google Scholar] [CrossRef]

- Mallatt, J.; Taiz, L.; Draguhn, A.; Blatt, M.R.; Robinson, D.G. Integrated information theory does not make plant consciousness more convincing. Biochem. Biophys. Res. Commun. 2021. [Google Scholar] [CrossRef] [PubMed]

- Bayne, T. On the axiomatic foundations of the integrated information theory of consciousness. Neurosci. Conscious. 2018, niy007. [Google Scholar] [CrossRef] [PubMed]

- Chalmers, D.J. Facing up to the problem of consciousness. J. Conscious. Stud. 1995, 2, 200–219. [Google Scholar]

- Levine, J. Materialism and qualia: The explanatory gap. Pac. Philos. Q. 1983, 64, 354–361. [Google Scholar] [CrossRef]

- Robb, D.; Heil, J. Mental Causation. In The Stanford Encyclopedia of Philosophy, Summer 2019 ed.; Zalta, E.N., Ed.; Stanford University: Stanford, CA, USA, 1999; Available online: https://plato.stanford.edu/archives/sum2019/entries/mental-causation/ (accessed on 25 February 2021).

- Juel, B.E.; Comolatti, R.; Tononi, G.; Albantakis, L. When is an action caused from within? Quantifying the causal chain leading to actions in simulated agents. In Artificial Life Conference Proceedings, Newcastle, UK, 29 July–2 August 2019; MIT Press: Cambridge, MA, USA, 2019; pp. 477–484. [Google Scholar]

- Albantakis, L. Integrated information theory. In Beyond Neural Correlates of Consciousness; ProQuest Ebook; Overgaard, M., Mogensen, J., Kirkeby-Hinrup, A., Eds.; Taylor and Francis Group: Oxfordshire, UK, 2017; pp. 87–103. [Google Scholar]

- Albantakis, L.; Hintze, A.; Koch, C.; Adami, C.; Tononi, G. Evolution of integrated causal structures in animats exposed to environments of increasing complexity. PLoS Comput. Biol. 2014, 10, e1003966-19. [Google Scholar] [CrossRef]

- Albantakis, L.; Massari, F.; Beheler-Amass, M.; Tononi, G. A macro agent and its actions. arXiv 2020, arXiv:2004.00058.

- Doerig, A.; Schurger, A.; Hess, K.; Herzog, M.H. The unfolding argument: Why IIT and other causal structure theories cannot explain consciousness. Conscious. Cogn. 2019, 72, 49–59. [Google Scholar] [CrossRef]

- Tsuchiya, N.; Andrillon, T.; Haun, A. A reply to “the unfolding argument”: Beyond functionalism/behaviorism and towards a truer science of causal structural theories of consciousness. Conscious. Cogn. 2020, 79, 102877. [Google Scholar] [CrossRef] [PubMed]

- Fallon, F. Integrated Information Theory of Consciousness. Internet Encyclopedia of Philosophy 2016. Available online: https://iep.utm.edu/int-info/ (accessed on 25 February 2021).

- Michel, M.; Beck, D.; Block, N.; Blumenfeld, H.; Brown, R.; Carmel, D.; Carrasco, M.; Chirimuuta, M.; Chun, M.; Cleeremans, A. Opportunities and challenges for a maturing science of consciousness. Nat. Hum. Behav. 2019, 3, 104–107. [Google Scholar] [CrossRef] [PubMed]

- Doerig, A.; Schurger, A.; Herzog, M.H. Hard criteria for empirical theories of consciousness. Cogn. Neurosci. 2020, 1–22. [Google Scholar] [CrossRef]

- Tononi, G.; Koch, C. Consciousness: Here, there and everywhere? Philos. Trans. R. Soc. B Biol. Sci. 2015, 370, 20140167. [Google Scholar] [CrossRef] [PubMed]

- Goff, P.; Seager, W.; Allen-Hermanson, S. Panpsychism. In The Stanford Encyclopedia of Philosophy, Summer 2020 ed.; Zalta, E.N., Ed.; Stanford University: Stanford, CA, USA, 1999; Available online: https://plato.stanford.edu/archives/sum2020/entries/panpsychism/ (accessed on 25 February 2021).

- Cerullo, M.A. The problem with phi: A critique of integrated information theory. PLoS Comput. Biol. 2015, 11, e1004286. [Google Scholar] [CrossRef]

- Casali, A.G.; Gosseries, O.; Rosanova, M.; Boly, M.; Sarasso, S.; Casali, K.R.; Casarotto, S.; Bruno, M.-A.; Laureys, S.; Tononi, G. A theoretically based index of consciousness independent of sensory processing and behavior. Sci. Transl. Med. 2013, 5, 198ra105. [Google Scholar] [CrossRef]

- Zenil, H. What Are the Criticisms of (Tononi’s) Integrated Information Theory? Available online: https://www.quora.com/What-are-the-criticisms-of-Tononi%E2%80%99s-Integrated-Information-Theory (accessed on 25 February 2021).

- Haun, A.; Tononi, G. Why does space feel the way it does? Towards a principled account of spatial experience. Entropy 2019, 21, 1160. [Google Scholar] [CrossRef]

- Friedman, D.A.; Søvik, E. The ant colony as a test for scientific theories of consciousness. Synthese 2021, 198, 1457–1480. [Google Scholar] [CrossRef]

- Ginsburg, S.; Jablonka, E. The Evolution of the Sensitive Soul: Learning and the Origins of Consciousness; MIT Press: Cambridge, MA, USA, 2019. [Google Scholar]

- Available online: https://www.dictionary.com/browse/scientific-theory (accessed on 5 May 2021).

- Wikipedia. Scientific Theory. 2021. Available online: https://en.wikipedia.org/wiki/Scientific_theory (accessed on 11 March 2021).

- Strevens, M. The Knowledge Machine: How Irrationality Created Modern Science; Liveright: New York, NY, USA, 2020. [Google Scholar]

- Popper, K. The Logic of Scientific Discovery; Routledge: Philadelphia, PA, USA, 2005. [Google Scholar]

- Tononi, G. Why Scott Should Stare at a Blank Wall and Reconsider (or, the Conscious Grid). Shtetl-Optimized: The Blog of Scott Aaronson. 2014. Available online: http://www.scottaaronson.com/blog/?p=1823 (accessed on 25 February 2021).

- Banach, S.; Tarski, A. Sur la décomposition des ensembles de points en parties respectivement congruentes. Fundamenta Mathematicae 1924, 6, 244–277. [Google Scholar] [CrossRef]

- Wikipedia. Banach-Tarski Paradox. 2021. Available online: https://en.wikipedia.org/wiki/Banach-Tarski_paradox (accessed on 5 May 2021).

- Streefkerk, R. Inductive Versus Deductive Reasoning. 2019. Available online: https://www.scribbr.com/methodology/inductive-deductive-reasoning (accessed on 5 May 2021).

- Tononi, G. An information integration theory of consciousness. BMC Neurosci. 2004, 5, 1–22. [Google Scholar] [CrossRef] [PubMed]

- Tononi, G. Consciousness as integrated information: A provisional manifesto. Biol. Bull. 2008, 215, 216–242. [Google Scholar] [CrossRef] [PubMed]

- United States Department of Agriculture Hypothesis and Non-hypothesis Research. Available online: https://www.ars.usda.gov (accessed on 21 May 2021).

- Hameroff, S.; Penrose, R. Consciousness in the universe: A review of the ‘Orch OR’ theory. Phys. Life Rev. 2014, 11, 39–78. [Google Scholar] [CrossRef] [PubMed]

- Cisek, P. Resynthesizing behavior through phylogenetic refinement. Atten. Percept. Psychophys. 2019, 81, 2265–2287. [Google Scholar] [CrossRef]

- Key, B.; Brown, D. Designing brains for pain: Human to mollusc. Front. Physiol. 2018, 9, 1027. [Google Scholar] [CrossRef]

- Grossberg, S. Towards solving the hard problem of consciousness: The varieties of brain resonances and the conscious experiences that they support. Neural Netw. 2017, 87, 38–95. [Google Scholar] [CrossRef]

- McFadden, J. Integrating information in the brain’s EM field: The CEMI field theory of consciousness. Neurosci. Conscious. 2020, 2020, niaa016. [Google Scholar] [CrossRef]

- Webb, T.W.; Graziano, M.S. The attention schema theory: A mechanistic account of subjective awareness. Front. Psychol. 2015, 6, 500. [Google Scholar] [CrossRef]

- Calvo, P.; Trewavas, A. Physiology and the (neuro) biology of plant behavior: A farewell to arms. Trends Plant. Sci. 2020, 25, 214–216. [Google Scholar] [CrossRef]

- Horgan, J. Can integrated information theory explain consciousness? Scientific American. 1 December 2015. Available online: https://blogs.scientificamerican.com/cross-check/can-integrated-information-theory-explain-consciousness/ (accessed on 25 February 2021).

- Herzog, M.H.; Esfeld, M.; Gerstner, W. Consciousness & the small network argument. Neural Netw. 2007, 20, 1054–1056. [Google Scholar] [CrossRef] [PubMed]

- Aaronson, S. Why I Am Not an Integrated Information Theorist (or, the Unconscious Expander). Shtetl Optimized: The Blog of Scott Aaronson. 2014. Available online: https://www.scottaaronson.com/blog/?p=1799 (accessed on 25 February 2021).

- Marshall, W.; Gomez-Ramirez, J.; Tononi, G. Integrated information and state differentiation. Front. Psychol. 2016, 7, 926. [Google Scholar] [CrossRef] [PubMed]

- Antonopoulos, C.G.; Fokas, A.S.; Bountis, T.C. Dynamical complexity in the C. elegans neural network. Eur. Phys. J. Spec. Top. 2016, 225, 1255–1269. [Google Scholar] [CrossRef]

- Kunst, M.; Laurell, E.; Mokayes, N.; Kramer, A.; Kubo, F.; Fernandes, A.M.; Forster, D.; Dal Maschio, M.; Baier, H. A cellular-resolution atlas of the larval zebrafish brain. Neuron 2019, 103, 21–38. [Google Scholar] [CrossRef] [PubMed]

- Butler, A.B. Evolution of brains, cognition, and consciousness. Brain Res. Bull. 2008, 75, 442–449. [Google Scholar] [CrossRef]

- LeDoux, J.E.; Brown, R. A higher-order theory of emotional consciousness. Proc. Natl. Acad. Sci. USA 2017, 114, E2016–E2025. [Google Scholar] [CrossRef]

- Ginsburg, S.; Jablonka, E. Sentience in plants: A green red herring? J. Conscious. Stud. 2021, 28, 17–23. [Google Scholar]

- Baluška, F.; Reber, A. Sentience and consciousness in single cells: How the first minds emerged in unicellular species. BioEssays 2019, 41, 1800229. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J.M. Consciousness Demystified; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Tye, K.M. Neural circuit motifs in valence processing. Neuron 2018, 100, 436–452. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J. Subjectivity “demystified”: Neurobiology, evolution, and the explanatory gap. Front. Psychol. 2019, 10, 1686. [Google Scholar] [CrossRef]

- Feinberg, T.E.; Mallatt, J. Phenomenal consciousness and emergence: Eliminating the explanatory gap. Front. Psychol. 2020, 11, 1041. [Google Scholar] [CrossRef] [PubMed]

- Mallatt, J.; Feinberg, T.E. Insect consciousness: Fine-tuning the hypothesis. Anim. Sentience 2016, 1, 10. [Google Scholar] [CrossRef]

- Mallatt, J.; Feinberg, T.E. Consciousness is not inherent in but emergent from life. Anim. Sentience 2017, 1, 15. [Google Scholar] [CrossRef]

- Searle, J.R. The Rediscovery of the Mind; MIT Press: Cambridge, MA, USA, 1992. [Google Scholar]

- Searle, J.R. Dualism revisited. J. Physiol. Paris 2007, 101, 169–178. [Google Scholar] [CrossRef] [PubMed]

- Salthe, S.N. Evolving Hierarchical Systems: Their Structure and Representations; Columbia University Press: New York, NY, USA, 1985. [Google Scholar]

- Simon, H.A. The architecture of complexity. In Facets of Systems Science; Klir, G.J., Ed.; Springer: Boston, MA, USA, 1991; pp. 457–476. [Google Scholar]

- Morowitz, H.J. The Emergence of Everything: How the World Became Complex; Oxford University Press: New York, NY, USA, 2002. [Google Scholar]

- Nunez, P.L. The New Science of Consciousness; Prometheus Books: Amherst, NY, USA, 2016. [Google Scholar]

- Seth, A.K. Functions of consciousness. In Elsevier Encyclopedia of Consciousness; Banks, W.P., Ed.; Elsevier: San Francisco, CA, USA, 2009; pp. 279–293. [Google Scholar]

- Chalmers, D.J. The Conscious Mind: In Search of a Fundamental Theory; Oxford University Press: New York, NY, USA, 1996. [Google Scholar]

- Bedau, M.A. Downward causation and the autonomy of weak emergence. In Emergence: Contemporary Readings in Philosophy and Science; Bedau, M.A., Humphreys, P., Eds.; MIT Press: Cambridge, MA, USA, 2008; pp. 155–188. [Google Scholar]

- Shapiro, L.A. The Mind Incarnate; MIT Press: Cambridge, MA, USA, 2004. [Google Scholar]

- Panksepp, J. The cross-mammalian neurophenomenology of primal emotional affects: From animal feelings to human therapeutics. J. Comp. Neurol. 2016, 524, 1624–1635. [Google Scholar] [CrossRef]

- Kaas, J.H. Topographic maps are fundamental to sensory processing. Brain Res. Bull. 1997, 44, 107–112. [Google Scholar] [CrossRef]

- Golomb, J.D.; Kanwisher, N. Higher level visual cortex represents retinotopic, not spatiotopic, object location. Cereb. Cortex 2012, 22, 2794–2810. [Google Scholar] [CrossRef]

- Operant Conditioning. Available online: https://courses.lumenlearning.com/atd-bhcc-intropsych/chapter/operant-conditioning/ (accessed on 26 February 2021).

- Birch, J.; Ginsburg, S.; Jablonka, E. Unlimited Associative Learning and the origins of consciousness: A primer and some predictions. Biol. Philos. 2020, 35, 1–23. [Google Scholar] [CrossRef]

- Radoeva, P.D.; Prasad, S.; Brainard, D.H.; Aguirre, G.K. Neural activity within area V1 reflects unconscious visual performance in a case of blindsight. J. Cogn. Neurosci. 2008, 20, 1927–1939. [Google Scholar] [CrossRef]

- Skora, L.I.; Yeomans, M.R.; Crombag, H.S.; Scott, R.B. Evidence that instrumental conditioning requires conscious awareness in humans. Cognition 2021, 208, 104546. [Google Scholar] [CrossRef]

- Northcutt, R.G. Evolution of centralized nervous systems: Two schools of evolutionary thought. Proc. Natl. Acad. Sci. USA 2012, 109, 10626–10633. [Google Scholar] [CrossRef] [PubMed]

- Shea, N. Methodological encounters with the phenomenal kind. Philos. Phenomenol. Res. 2012, 84, 307. [Google Scholar] [CrossRef] [PubMed]

- Pigliucci, M.; Boudry, M. Prove it! The burden of proof game in science vs. pseudoscience disputes. Philosophia 2014, 42, 487–502. [Google Scholar] [CrossRef]

- Chalmers, D.J. Consciousness and its place in nature. In Blackwell Guide to the Philosophy of Mind; Stich, S.P., Warfield, T.A., Eds.; Blackwell Publishing Ltd: Oxford, UK, 2003; pp. 102–142. [Google Scholar]

- Klein, C.; Barron, A.B. Insect consciousness: Commitments, conflicts and consequences. Anim. Sentience 2016, 1, 21. [Google Scholar] [CrossRef]

- Wikipedia. Neural Correlates of Consciousness. 2021. Available online: https://en.wikipedia.org/wiki/Neural_correlates_of_consciousness (accessed on 6 May 2021).

- Vaz, A.P.; Inati, S.K.; Brunel, N.; Zaghloul, K.A. Coupled ripple oscillations between the medial temporal lobe and neocortex retrieve human memory. Science 2019, 363, 975–978. [Google Scholar] [CrossRef]

- Akam, T.; Kullmann, D.M. Oscillatory multiplexing of population codes for selective communication in the mammalian brain. Nat. Rev. Neurosci. 2014, 15, 111–122. [Google Scholar] [CrossRef]

- Koch, C.; Massimini, M.; Boly, M.; Tononi, G. Neural correlates of consciousness: Progress and problems. Nat. Rev. Neurosci. 2016, 17, 307–321. [Google Scholar] [CrossRef]

- Birch, J. The search for invertebrate consciousness. Noûs 2020, 1–21. [Google Scholar] [CrossRef]

- Ruppert, E.E.; Fox, R.S.; Barnes, R.D. Invertebrate Zoology: A Functional Evolutionary Approach, 7th ed.; Thompson/Brooks/Cole: Belmont, CA, USA, 2004. [Google Scholar]

- Schwitzgebel, E. Is There Something It’s Like to Be a Garden Snail? 2020. Available online: http://www.faculty.ucr.edu/~eschwitz/SchwitzPapers/Snails-201223.pdf (accessed on 25 February 2021).

- Ellia, F.; Chis-Ciure, R. An evolutionary approach to consciousness and complexity: Neurobiological naturalism and integrated information theory. 2021; in preparation. [Google Scholar]

- IBM Cloud Learn Hub. Recurrent Neural Networks. 2020. Available online: https://www.ibm.com/cloud/learn/recurrent-neural-networks (accessed on 26 February 2021).

- Rezk, N.M.; Purnaprajna, M.; Nordström, T.; Ul-Abdin, Z. Recurrent neural networks: An embedded computing perspective. IEEE Access 2020, 8, 57967–57996. [Google Scholar] [CrossRef]

- Kurzweil, R. How to Create a Mind: The Secret of Human Thought Revealed; Penguin: London, UK, 2013. [Google Scholar]

- Manzotti, R.; Chella, A. Good old-fashioned artificial consciousness and the intermediate level fallacy. Front. Robot. AI 2018, 5, 39. [Google Scholar] [CrossRef]

- Boly, M.; Seth, A.K.; Wilke, M.; Ingmundson, P.; Baars, B.; Laureys, S.; Tsuchiya, N. Consciousness in humans and non-human animals: Recent advances and future directions. Front. Psychol. 2013, 4, 625. [Google Scholar] [CrossRef] [PubMed]

- Nieder, A.; Wagener, L.; Rinnert, P. A neural correlate of sensory consciousness in a corvid bird. Science 2020, 369, 1626–1629. [Google Scholar] [CrossRef]

- Barron, A.B.; Klein, C. What insects can tell us about the origins of consciousness. Proc. Natl. Acad. Sci. USA 2016, 113, 4900–4908. [Google Scholar] [CrossRef] [PubMed]

- Bronfman, Z.Z.; Ginsburg, S.; Jablonka, E. The transition to minimal consciousness through the evolution of associative learning. Front. Psychol. 2016, 7, 1954. [Google Scholar] [CrossRef] [PubMed]

- Perry, C.J.; Baciadonna, L.; Chittka, L. Unexpected rewards induce dopamine-dependent positive emotion–like state changes in bumblebees. Science 2016, 353, 1529–1531. [Google Scholar] [CrossRef]

- Birch, J. Animal sentience and the precautionary principle. Anim. Sentience 2017, 2, 1. [Google Scholar] [CrossRef]

- Godfrey-Smith, P. Metazoa; Farrar, Straus, and Giroux: New York, NY, USA, 2020. [Google Scholar]

- Mikhalevich, I.; Powell, R. Minds without spines: Evolutionarily inclusive animal ethics. Anim. Sentience 2020, 5, 1. [Google Scholar] [CrossRef]

- Basso, M.A.; Beck, D.M.; Bisley, T.; Block, N.; Brown, R.; Cai, D.; Carmel, D.; Cleeremans, A.; Dehaene, S.; Fleming, S. Open letter to NIH on Neuroethics Roadmap (BRAIN Initiative) 2019. Available online: https://inconsciousnesswetrust.blogspot.com/2020/05/open-letter-to-nih-on-neuroethics.html (accessed on 26 February 2021).

- LeDoux, J. The Deep History of Ourselves; Penguin: London, UK, 2019. [Google Scholar]

- Ben-Haim, M.S.; Dal Monte, O.; Fagan, N.A.; Dunham, Y.; Hassin, R.R.; Chang, S.W.; Santos, L.R. Disentangling perceptual awareness from nonconscious processing in rhesus monkeys (Macaca mulatta). Proc. Natl. Acad. Sci. USA 2021, 118, 1–9. [Google Scholar] [CrossRef]

- Velmans, M. How could consciousness emerge from adaptive functioning? Anim. Sentience 2016, 1, 6. [Google Scholar] [CrossRef]

- Pautz, A. What is the integrated information theory of consciousness? J. Conscious. Stud. 2019, 26, 188–215. [Google Scholar]

Neural complexity (more than in a simple, core brain)

Memory of perceived objects or events

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mallatt, J. A Traditional Scientific Perspective on the Integrated Information Theory of Consciousness. Entropy 2021, 23, 650. https://doi.org/10.3390/e23060650
