Leveraging Stochasticity for Open Loop and Model Predictive Control of Spatio-Temporal Systems

Abstract

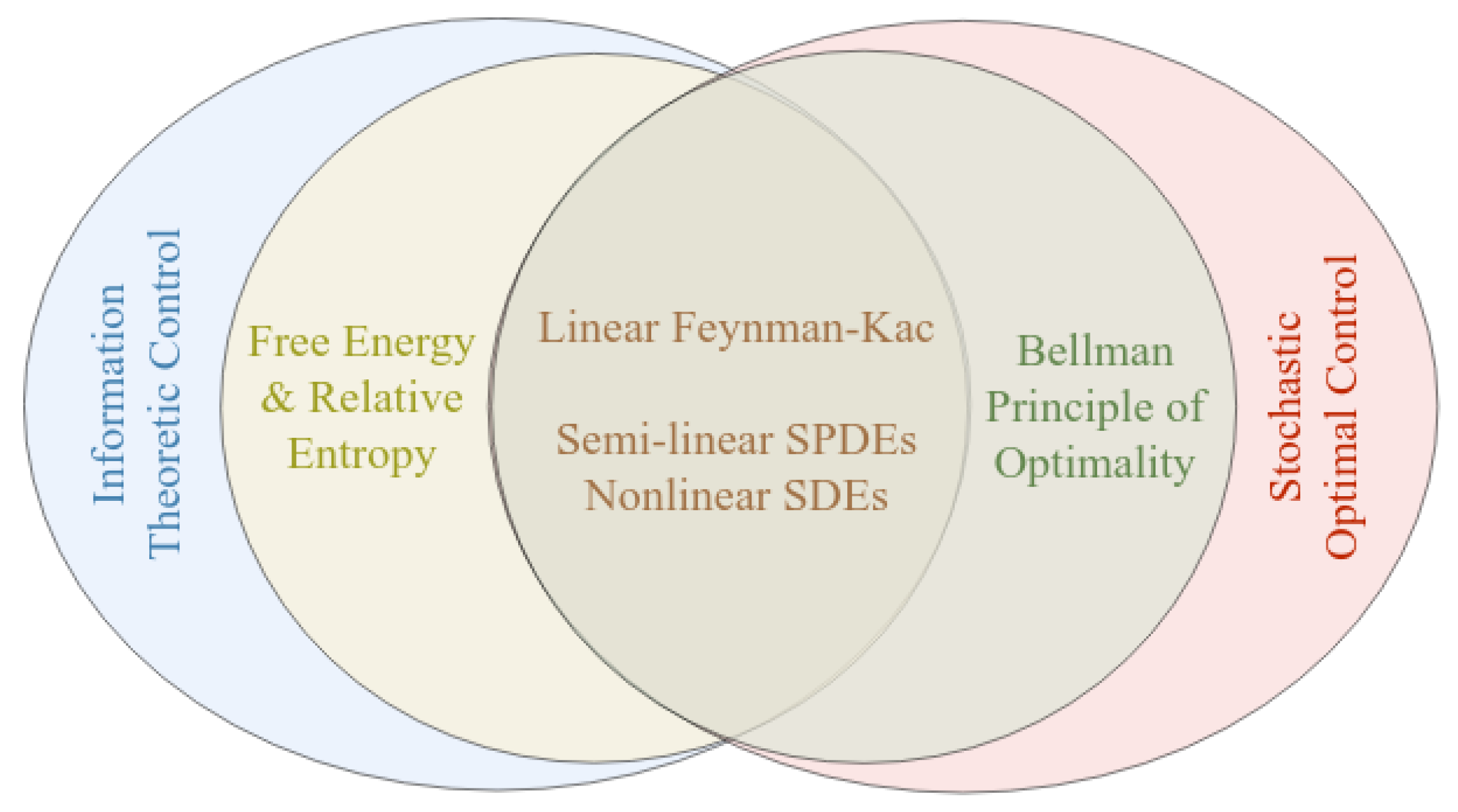

1. Introduction and Related Work

2. Preliminaries and Problem Formulation

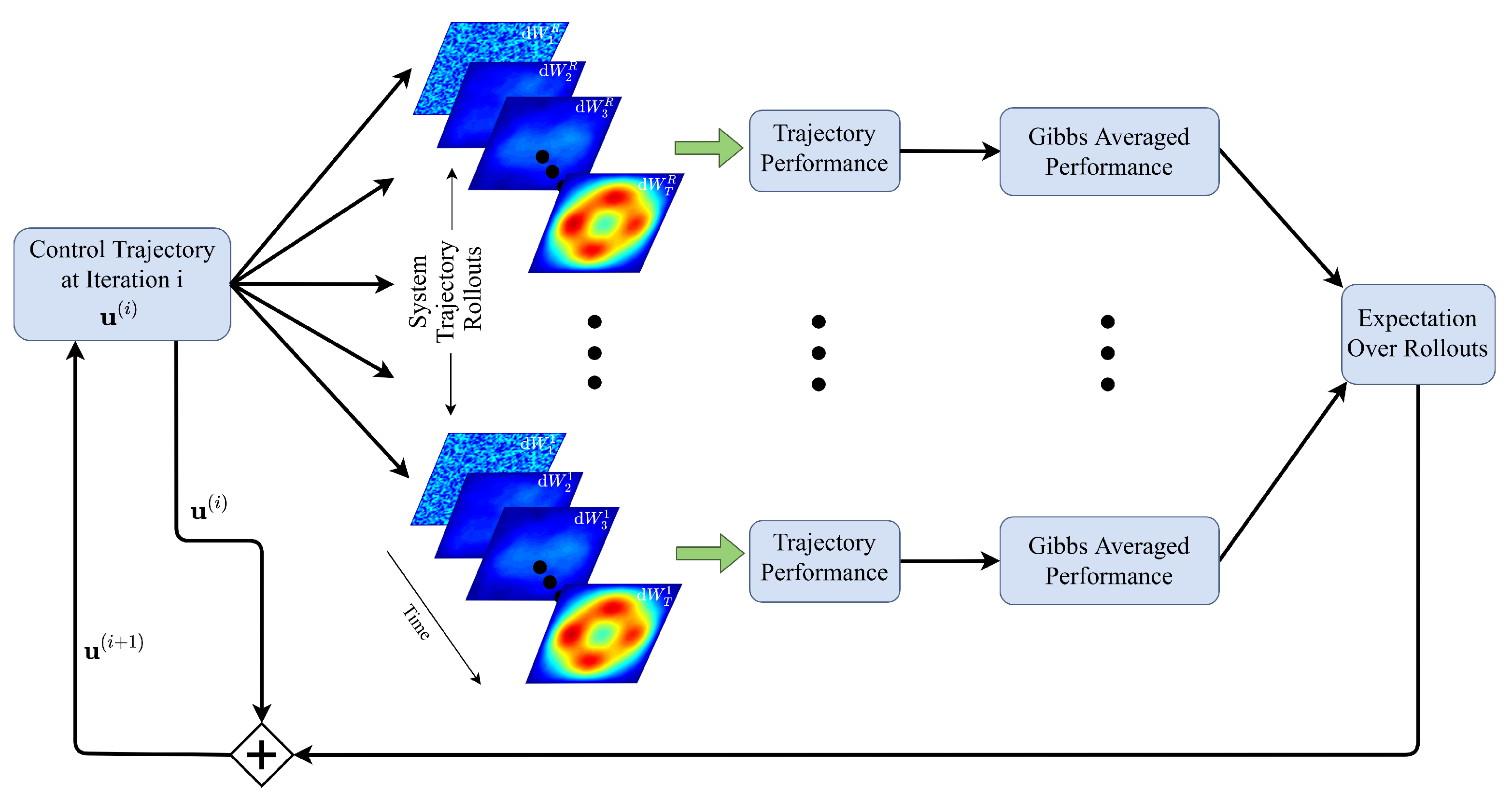

3. Stochastic Optimization in Hilbert Spaces

4. Comparisons to Finite-Dimensional Optimization

5. Numerical Results

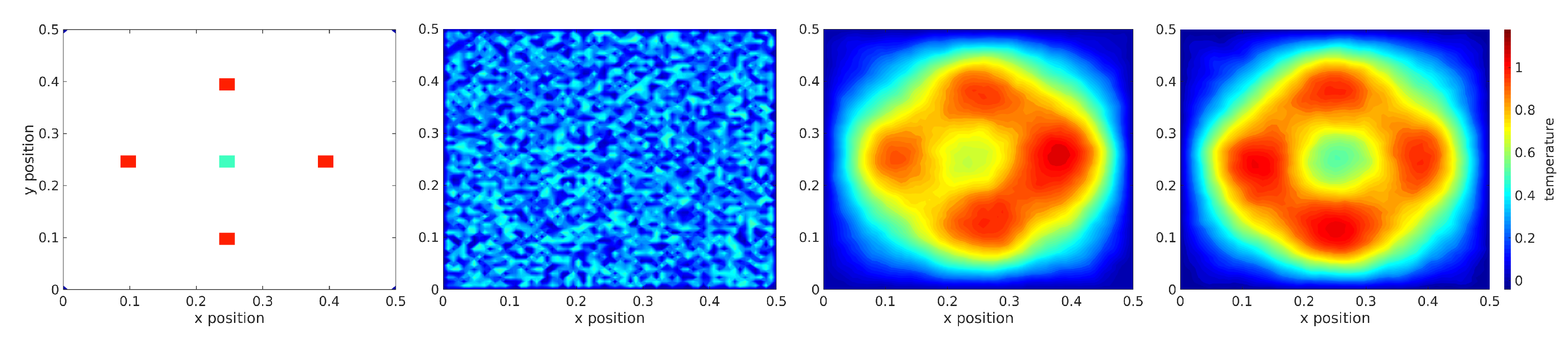

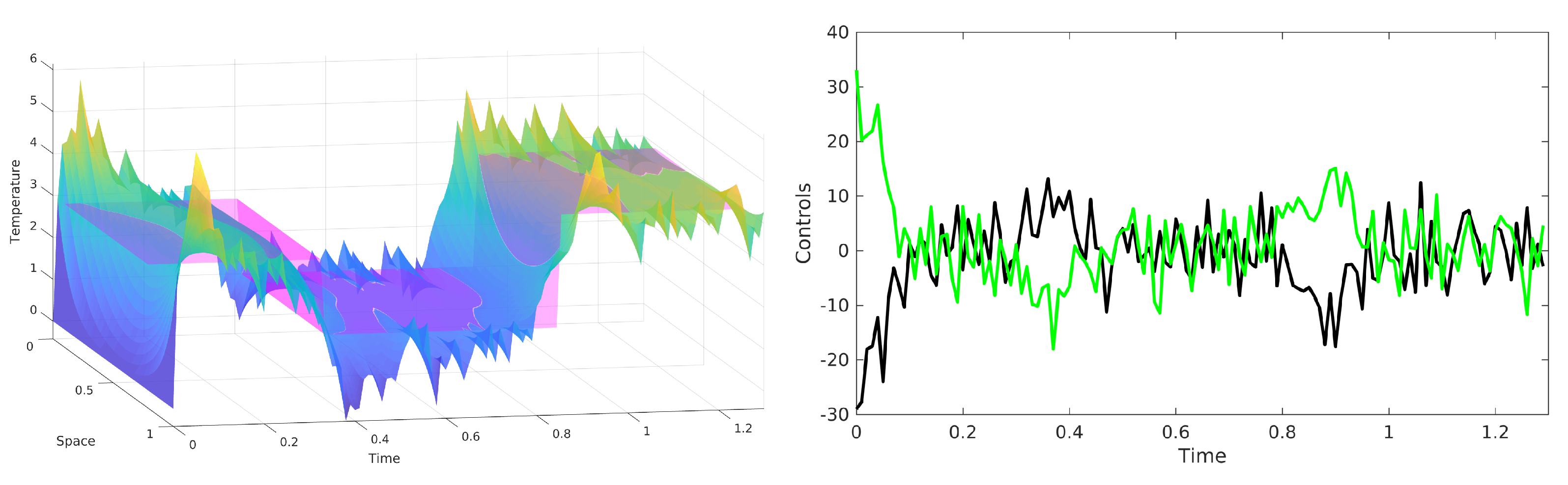

5.1. Distributed Control of Stochastic PDEs in Fluid Physics

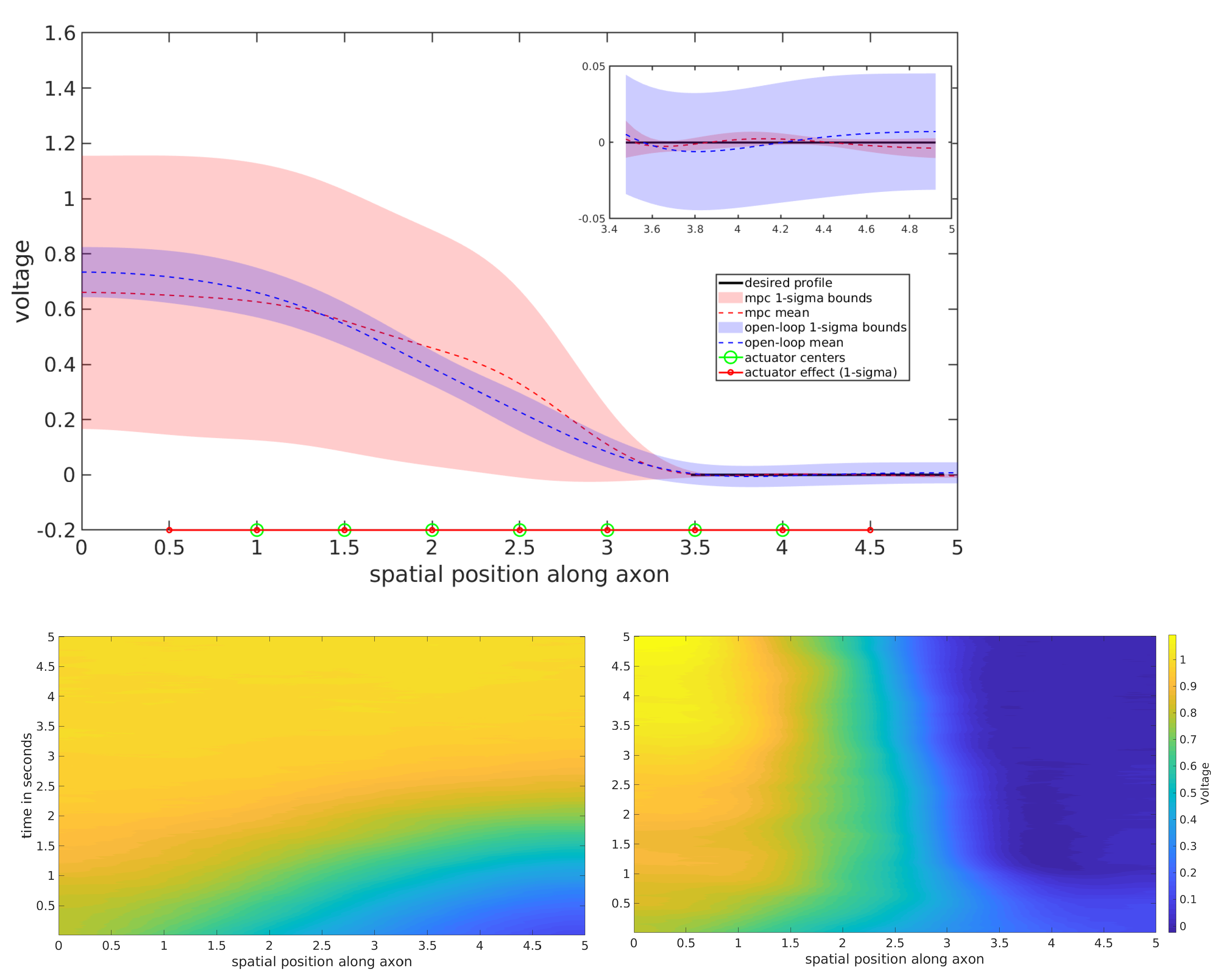

5.2. Boundary Control of Stochastic PDEs

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| SPDE | Stochastic Partial Differential Equation |
| PDE | Partial Differential Equation |
| SDE | Stochastic Differential Equation |
| ODE | Ordinary Differential Equation |
| SOC | Stochastic Optimal Control |
| HJB | Hamilton–Jacobi–Bellman |
| MPC | Model Predictive Control |
| RMSE | Root Mean Squared Error |

Appendix A. Description of the Hilbert Space Wiener Process

- (i)
- (ii) W has continuous trajectories.
- (iii) W has independent increments.
- (iv)
- (v)

- (i) For any $\epsilon > 0$, there are only finitely many eigenvalues $\lambda_j$ of the covariance operator $Q$ such that $\lambda_j > \epsilon$. That is, the set $\{ j \in \mathbb{N}^+ : \lambda_j > \epsilon \}$, where $\mathbb{N}^+$ denotes the positive natural numbers, has finitely many elements.
- (ii) The eigenvalues of the covariance operator $Q$ follow a bounded periodic function such that ∀ and .
- (iii) Both case (i) and case (ii) are satisfied. In this case, the eigenvalues follow a bounded and convergent periodic function with .
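Case (i) is what makes a truncated spectral representation of the noise reasonable. Let $Q e_j = \lambda_j e_j$ for an orthonormal eigenbasis $\{e_j\}$. A $Q$-Wiener process can then be written as $W(t) = \sum_j \sqrt{\lambda_j}\, \beta_j(t)\, e_j$, where the $\beta_j$ are independent scalar Brownian motions. For any threshold $\epsilon$, a finite number of modes $J$ captures every mode with $\lambda_j > \epsilon$.

The following is a minimal Python sketch of sampling such a truncated process. It rests on three assumptions, none taken from the paper:

- the Hilbert space is $L^2(0,1)$;
- the eigenbasis is the sine basis $e_j(x) = \sqrt{2}\sin(j\pi x)$;
- the eigenvalues decay as $\lambda_j = j^{-2}$.

The function names are hypothetical.

```python
import math
import random


def q_wiener_increment(x, n_modes, dt, decay=2.0, rng=random):
    """Sample dW(x) for a Q-Wiener process truncated to its first n_modes modes.

    Hypothetical setup: H = L^2(0, 1), eigenfunctions e_j(x) = sqrt(2) sin(j pi x),
    eigenvalues lambda_j = j**(-decay); decay > 1 keeps Q trace class.
    """
    dW = [0.0] * len(x)
    for j in range(1, n_modes + 1):
        lam = j ** (-decay)                                # eigenvalue lambda_j of Q
        coeff = math.sqrt(lam * dt) * rng.gauss(0.0, 1.0)  # sqrt(lambda_j) times a Brownian increment
        for i, xi in enumerate(x):
            dW[i] += coeff * math.sqrt(2.0) * math.sin(j * math.pi * xi)
    return dW


def q_wiener_path(x, n_modes, n_steps, dt, decay=2.0, rng=random):
    """Return [W(0), W(dt), ..., W(n_steps * dt)] on the grid x, starting from W(0) = 0."""
    W = [[0.0] * len(x)]
    for _ in range(n_steps):
        dW = q_wiener_increment(x, n_modes, dt, decay, rng)
        W.append([w + d for w, d in zip(W[-1], dW)])
    return W
```

Each increment has covariance $dt\,Q$ restricted to the retained modes. Its pointwise variance at $x$ is therefore $dt \sum_{j \le J} \lambda_j e_j(x)^2$. The sine basis also pins the sampled field to zero at $x = 0$ and $x = 1$. For example, `q_wiener_path([i / 20 for i in range(21)], 16, 5, 0.01, rng=random.Random(0))` returns six snapshots on a 21-point grid.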

Appendix B. Relative Entropy and Free Energy Dualities in Hilbert Spaces

Appendix C. A Girsanov Theorem for SPDEs

Appendix D. Proof of Lemma 1

Appendix E. Feynman–Kac for Spatio-Temporal Diffusions: From Expectations to Hilbert Space PDEs

Appendix F. Connections to Stochastic Dynamic Programming

Appendix G. SPDEs under Boundary Control and Noise

Appendix H. An Equivalence of the Variational Optimization Approach for SPDEs with Q-Wiener Noise

Appendix I. A Comparison to Variational Optimization in Finite Dimensions

Appendix J. Algorithms for Open Loop and Model Predictive Infinite Dimensional Controllers

Algorithm A1. Open-loop infinite-dimensional controller.

Algorithm A2. Model predictive infinite-dimensional controller.
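A small sketch can illustrate the difference between the open-loop and model predictive controllers named in Algorithms A1 and A2. It is a generic sampling-based update in the style of model predictive path integral (MPPI) control, not the authors' algorithms.

The plant is an explicit finite-difference discretization of a 1D stochastic heat equation driven by four Gaussian actuators. Everything else is a hypothetical choice made for illustration:

- the grid, actuator locations, target profile and cost;
- the noise, which is independent per grid point rather than $Q$-Wiener;
- the hyperparameters `n_samples`, `sigma_u` and `temperature`.

```python
import math
import random

# Hypothetical discretization of u_t = NU * u_xx + sum_m v_m(t) B_m(x) + noise on (0, 1)
N = 16                                   # interior grid points, zero Dirichlet boundaries
H_X = 1.0 / (N + 1)                      # grid spacing
X = [(i + 1) * H_X for i in range(N)]
NU, DT, SIGMA = 0.01, 0.05, 0.05         # diffusivity, time step, noise intensity
CENTERS = [0.2, 0.4, 0.6, 0.8]           # hypothetical actuator locations
M = len(CENTERS)
B = [[math.exp(-((x - c) ** 2) / (2 * 0.05 ** 2)) for x in X] for c in CENTERS]
TARGET = [math.exp(-((x - 0.5) ** 2) / (2 * 0.1 ** 2)) for x in X]


def step(u, v, rng=None):
    """Explicit Euler step; adds i.i.d. Gaussian noise per grid point when rng is given."""
    new = []
    for i in range(N):
        left = u[i - 1] if i > 0 else 0.0
        right = u[i + 1] if i < N - 1 else 0.0
        lap = (left - 2.0 * u[i] + right) / H_X ** 2
        act = sum(v[m] * B[m][i] for m in range(M))
        noise = SIGMA * math.sqrt(DT) * rng.gauss(0.0, 1.0) if rng is not None else 0.0
        new.append(u[i] + DT * (NU * lap + act) + noise)
    return new


def stage_cost(u):
    """Time-weighted mean squared distance to the target profile."""
    return DT * sum((a - b) ** 2 for a, b in zip(u, TARGET)) / N


def rollout_cost(u, V):
    """Cost of applying the control sequence V to the noise-free model from state u."""
    total = 0.0
    for v in V:
        u = step(u, v)
        total += stage_cost(u)
    return total


def mppi_update(u0, V, rng, n_samples=32, sigma_u=1.0, temperature=0.1):
    """One MPPI-style update: exponentially weighted average of sampled perturbations."""
    eps = [[[rng.gauss(0.0, sigma_u) for _ in range(M)] for _ in V] for _ in range(n_samples)]
    costs = [rollout_cost(u0, [[a + e for a, e in zip(v, ev)] for v, ev in zip(V, E)])
             for E in eps]
    lo, hi = min(costs), max(costs)
    scale = temperature * (hi - lo) + 1e-12   # temperature as a fraction of the sampled cost range
    w = [math.exp(-(c - lo) / scale) for c in costs]
    z = sum(w)
    return [[a + sum(w[k] * eps[k][t][m] for k in range(n_samples)) / z
             for m, a in enumerate(v)] for t, v in enumerate(V)]


def open_loop(u0, T, n_iters, plan_rng, plant_rng):
    """Plan once from u0 over the full horizon, then apply the whole plan to the noisy plant."""
    V = [[0.0] * M for _ in range(T)]
    for _ in range(n_iters):
        V = mppi_update(u0, V, plan_rng)
    u, cost = u0, 0.0
    for v in V:
        u = step(u, v, plant_rng)
        cost += stage_cost(u)
    return cost


def mpc(u0, T, horizon, n_iters, plan_rng, plant_rng):
    """Replan from the current noisy state at every step and apply only the first control."""
    V = [[0.0] * M for _ in range(horizon)]
    u, cost = u0, 0.0
    for _ in range(T):
        for _ in range(n_iters):
            V = mppi_update(u, V, plan_rng)
        u = step(u, V[0], plant_rng)
        cost += stage_cost(u)
        V = V[1:] + [[0.0] * M]               # warm start by shifting the plan one step
    return cost
```

The open-loop variant commits to a plan computed once from the initial state, so plant noise is never corrected. The MPC variant re-optimizes from the current state at every step, which is the usual reason to expect better noise rejection from receding-horizon control. Passing separate random generators for planning and for the plant keeps the plant noise sequence identical across the two runs.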

Appendix K. Brief Description of Each Experiment

Appendix K.1. Heat SPDE

Appendix K.2. Burgers SPDE

Appendix K.3. Nagumo SPDE


| Equation Name | Partial Differential Equation | Field State |
|---|---|---|
| Nagumo | | Voltage |
| Heat | | Heat/temperature |
| Burgers (viscous) | | Velocity |
| Allen–Cahn | | Phase of a material |
| Navier–Stokes | | Velocity |
| Nonlinear Schrödinger | | Wave function |
| Korteweg–de Vries | | Plasma wave |
| Kuramoto–Sivashinsky | | Flame front |

| Paradigm | RMSE (Left) | RMSE (Center) | RMSE (Right) | Average (Left) | Average (Center) | Average (Right) |
|---|---|---|---|---|---|---|
| MPC | 0.0344 | 0.0156 | 0.0132 | 0.0309 | 0.0718 | 0.0386 |
| Open-loop | 0.0820 | 0.1006 | 0.0632 | 0.0846 | 0.0696 | 0.0797 |

| Metric | Acceleration (MPC) | Acceleration (Open-loop) | Suppression (MPC) | Suppression (Open-loop) |
|---|---|---|---|---|
| RMSE | 6.605 × 10 | 0.0042 | 0.0021 | 0.0048 |
| Avg. | 0.0059 | 0.0197 | 0.0046 | 0.0389 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Boutselis, G.I.; Evans, E.N.; Pereira, M.A.; Theodorou, E.A. Leveraging Stochasticity for Open Loop and Model Predictive Control of Spatio-Temporal Systems. Entropy 2021, 23, 941. https://doi.org/10.3390/e23080941