Research on Multi-Terminal’s AC Offloading Scheme and Multi-Server’s AC Selection Scheme in IoT

Abstract

1. Introduction

- Building on the data processing capacity of mobile edge computing (MEC) and the energy harvesting of simultaneous wireless information and power transfer (SWIPT), we design a multi-terminal, multi-relay, and multi-server edge offloading and selection architecture that combines the advantages of both. The MEC servers provide high-speed computing services, but using them incurs a computing cost;

- Under a time-varying environment and the time and energy consumption constraints of the IoT, we formulate two non-convex problems, one concerning the computing rate and one concerning the computing cost. Each non-convex problem is decomposed into two subproblems;

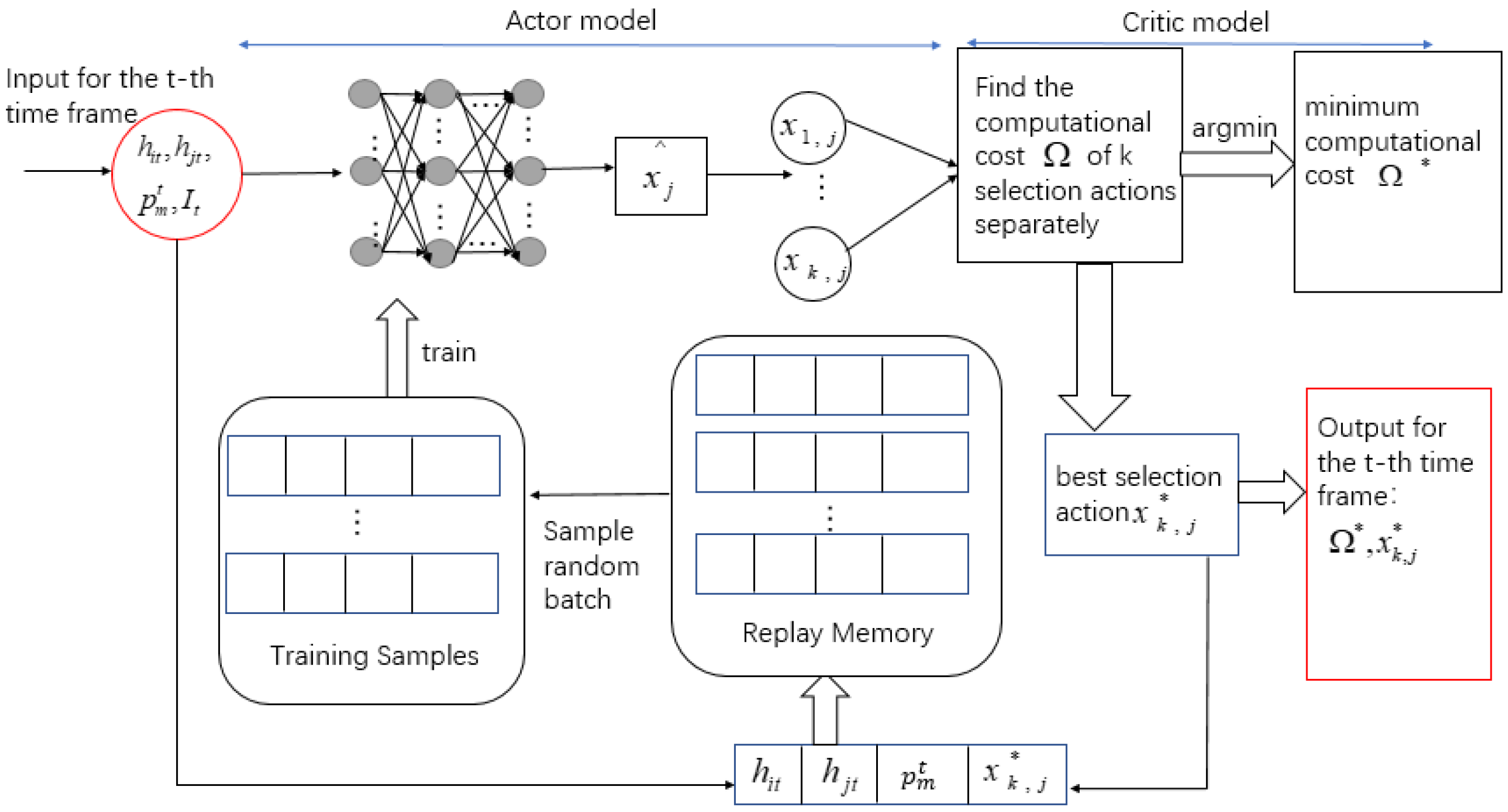

- In contrast to static optimization methods, we propose an online, dynamically optimizing AC algorithm tailored to the system model. The improved actor module and the critic module are updated iteratively. With an adaptive setting of k, the algorithm quickly finds an offloading scheme that maximizes the computing rate and a selection scheme that minimizes the computing cost. The simulation results verify the effectiveness of the AC scheme.
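The actor-critic loop described in the last contribution can be sketched as follows. This is a minimal illustration, not the authors' implementation: the text here does not say how the actor's output is turned into k candidate decisions or how k is adapted, so the sketch assumes the order-preserving quantization and sliding-window update of k used in Huang, Bi, and Zhang's deep reinforcement learning offloading framework. The function names, the `toy_rate` critic, and the channel gains are hypothetical placeholders; in the paper, the critic would evaluate the computing rate (or computing cost) defined in the problem formulation.

```python
import numpy as np


def quantize_order_preserving(relaxed, k):
    """Turn a relaxed actor output in [0, 1]^N into up to k binary candidates.

    Assumed scheme: the first candidate thresholds at 0.5; the others reuse
    the entries closest to 0.5 (the least certain ones) as thresholds.
    """
    relaxed = np.asarray(relaxed, dtype=float)
    candidates = [(relaxed > 0.5).astype(int)]
    order = np.argsort(np.abs(relaxed - 0.5))
    for idx in order[: k - 1]:
        t = relaxed[idx]
        if t <= 0.5:
            candidates.append((relaxed >= t).astype(int))
        else:
            candidates.append((relaxed > t).astype(int))
    return candidates


def toy_rate(decision, gains, local_rate=1.0):
    """Hypothetical critic score: offloaders share the slot equally,
    local terminals each contribute a fixed rate."""
    off = np.asarray(decision).astype(bool)
    gains = np.asarray(gains, dtype=float)
    n_off = off.sum()
    offload = np.log2(1.0 + gains[off]).sum() / max(n_off, 1)
    local = local_rate * (~off).sum()
    return float(offload + local)


def select_best(candidates, gains):
    """Critic step: score every candidate and keep the best one."""
    scores = [toy_rate(c, gains) for c in candidates]
    best = int(np.argmax(scores))
    return best, candidates[best], scores[best]


def adapt_k(recent_best_indices, n_terminals):
    """Assumed adaptive rule: largest recent winning rank plus one, capped at N."""
    max_rank = max(recent_best_indices) + 1  # 0-based index -> 1-based rank
    return min(max_rank + 1, n_terminals)


# One decision step with a made-up actor output and made-up channel gains.
relaxed_output = [0.9, 0.4, 0.2]
channel_gains = [8.0, 0.5, 0.1]
cands = quantize_order_preserving(relaxed_output, k=3)
best_idx, best_decision, best_score = select_best(cands, channel_gains)
next_k = adapt_k([best_idx], n_terminals=3)
```

The chosen decision would then be stored with the channel state and used to train the actor network, which is the iterative update the bullet refers to. Shrinking k when early candidates keep winning is what keeps the per-step search cheap.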

2. Related Work

3. System Model

4. Problem Formulation

4.1. Offloading and Selection Background

4.2. SWIPT Phase

4.3. Computing Phase

4.3.1. Local Computing

4.3.2. Offloading Phase

4.4. Cost Phase

4.5. Solving Formula

5. AC Algorithm

5.1. Application of the Offloading Scheme

5.2. Application of the Selection Scheme

6. Simulation Analysis

6.1. Comparison of Computing Rates for Adding Relay

6.2. Performance Analysis of Offloading Scheme

6.2.1. Influence of Neural Network Parameters on Offloading Scheme

6.2.2. AC Offloading Scheme Performance

6.3. Performance Analysis of Selection Scheme

6.3.1. Influence of Neural Network Parameters on Selection Scheme

6.3.2. AC Selection Scheme Performance

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| | AC Algorithm | DQN Algorithm | Traversal Algorithm |
|---|---|---|---|
| Offloading delay (s) | 0.0548 | 0.1035 | 3.526 |
| Selection delay (s) | 0.0073 | 0.0102 | 0.196 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Lin, F.; Liu, K.; Zhao, Y.; Li, J. Research on Multi-Terminal’s AC Offloading Scheme and Multi-Server’s AC Selection Scheme in IoT. Entropy 2022, 24, 1357. https://doi.org/10.3390/e24101357
