Compositional Sequence Generation in the Entorhinal–Hippocampal System

Abstract

1. Introduction

2. Methods

2.1. Cognitive Generators

2.2. Sequence Sampling

2.3. Roles of Grid Cells and Place Cells in a Linear Feedback Network

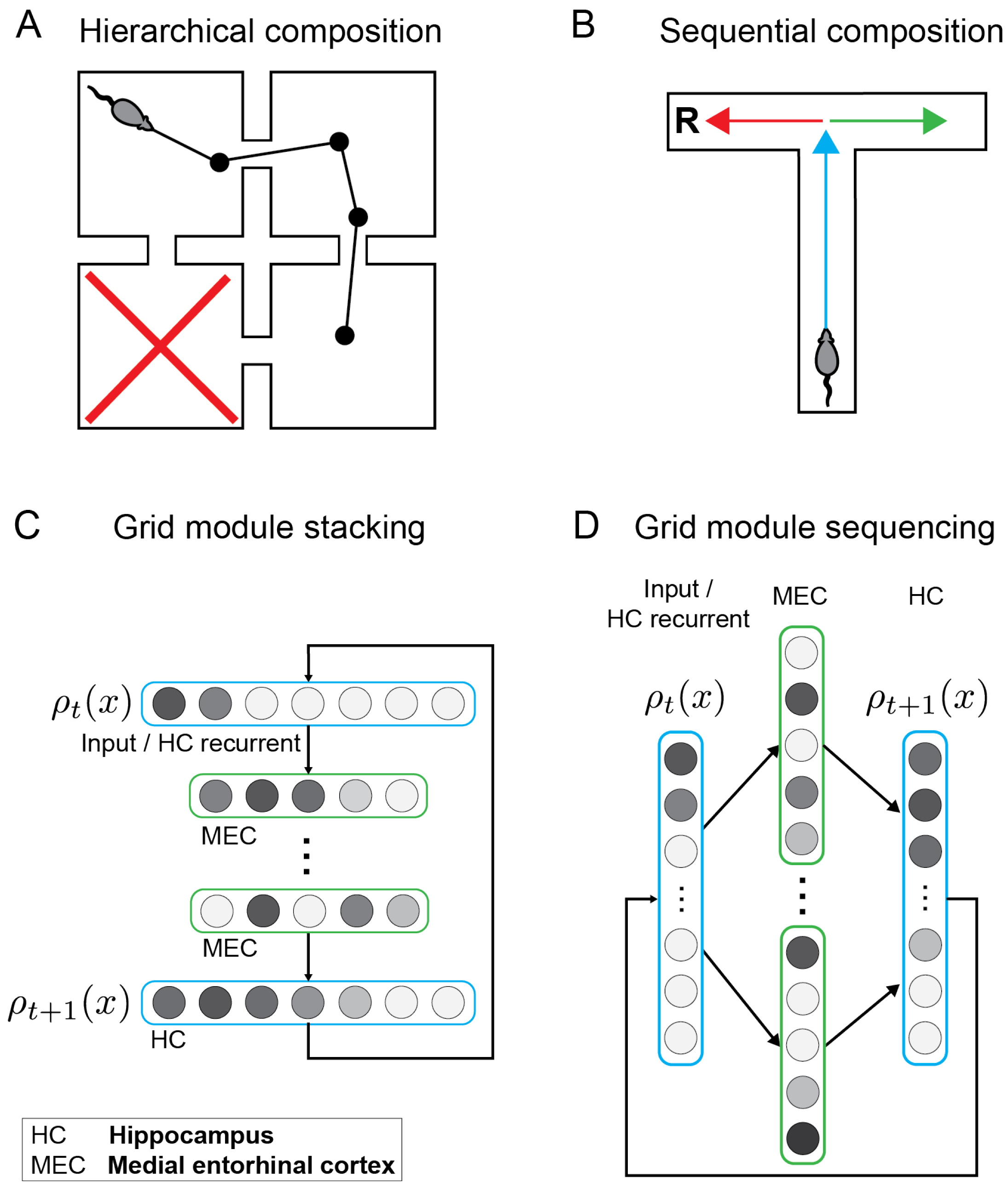

2.4. Propagator Composition

2.5. Generator Composition

3. Results

3.1. Composing Environment Information for Directed Exploratory Trajectories

3.2. Combining Generators for Sequential Compositional Replay

3.3. Hierarchical Sequence Generation Results in Rate-Mapping Place Codes

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Compositional Mechanisms

Appendix A.1. Composing Noncommutative Propagators via Symmetrization

Appendix A.2. Commutative Composition for Compatible Generators

Appendix A.3. Noncommutative Composition for Generators

Appendix A.3.1. Conjunctive Generator Composition

Appendix A.3.2. Interfaces for Noncommutative Generator Compositions

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

McNamee, D.C.; Stachenfeld, K.L.; Botvinick, M.M.; Gershman, S.J. Compositional Sequence Generation in the Entorhinal–Hippocampal System. Entropy 2022, 24, 1791. https://doi.org/10.3390/e24121791