Abstract

This study aims to propose modified semiparametric estimators based on six different penalty and shrinkage strategies for the estimation of a right-censored semiparametric regression model. In this context, the methods used to obtain the estimators are ridge, lasso, adaptive lasso, SCAD, MCP, and elasticnet penalty functions. The most important contribution that distinguishes this article from its peers is that it uses the local polynomial method as a smoothing method. The theoretical estimation procedures for the obtained estimators are explained. In addition, a simulation study is performed to see the behavior of the estimators and make a detailed comparison, and hepatocellular carcinoma data are estimated as a real data example. As a result of the study, the estimators based on adaptive lasso and SCAD were more resistant to censorship and outperformed the other four estimators.

1. Introduction

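Consider the partially linear (or semiparametric) regression model

$$y_i = \mathbf{x}_i^{\top}\boldsymbol{\beta} + f(t_i) + \varepsilon_i, \quad i = 1, \ldots, n, \tag{1}$$

where the $y_i$ are the observations of the response variable, the $\mathbf{x}_i = (x_{i1}, \ldots, x_{ik})^{\top}$ are known $k$-dimensional vectors of explanatory variables, $t_i$ is the value of an extra explanatory variable $t$, $\boldsymbol{\beta} = (\beta_1, \ldots, \beta_k)^{\top}$ is an unknown $k$-dimensional parameter vector to be estimated, $f(\cdot)$ is an unknown univariate smooth function, and the $\varepsilon_i$ are assumed to be independent random errors with mean zero and finite variance $\sigma^2$. Because of the nonparametric component, partially linear models are flexible enough to cover many situations; they are an appropriate choice when the response variable is suspected to depend linearly on $\mathbf{x}_i$ (parametric effects) but nonlinearly on $t_i$ (nonparametric effects). Note that model (1) can be expressed in matrix and vector form as

$$\mathbf{Y} = \mathbf{X}\boldsymbol{\beta} + \mathbf{f} + \boldsymbol{\varepsilon}, \tag{2}$$

where $\mathbf{Y} = (y_1, \ldots, y_n)^{\top}$, $\mathbf{X}$ is an $(n \times k)$ design matrix with $\mathbf{x}_i^{\top}$ denoting the $i$th $k$-dimensional row vector of $\mathbf{X}$, $\mathbf{f} = (f(t_1), \ldots, f(t_n))^{\top}$, and $\boldsymbol{\varepsilon} = (\varepsilon_1, \ldots, \varepsilon_n)^{\top}$ is a random error vector with $E(\boldsymbol{\varepsilon}) = \mathbf{0}$ and $\mathrm{Cov}(\boldsymbol{\varepsilon}) = \sigma^2\mathbf{I}_n$. For more discussions on model (1), see [1,2,3], among others.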

In this paper, we are interested in estimating the parametric and nonparametric components of model (1) when the observations of the response variable $y_i$ are incompletely observed and right-censored by a random censoring variable $c_i$, $i = 1, \ldots, n$, while $\mathbf{x}_i$ and $t_i$ are completely observed. When the $y_i$'s are censored from the right, standard estimation procedures cannot be applied to them directly. To carry the effect of the censorship into the estimation process, auxiliary variables are introduced. Therefore, instead of observing the values of the response variable $y_i$, we observe the dataset $\{(z_i, \delta_i, \mathbf{x}_i, t_i),\ i = 1, \ldots, n\}$ with

$$z_i = \min(y_i, c_i), \qquad \delta_i = I(y_i \le c_i), \tag{3}$$

where the $z_i$'s are the observations of the updated response variable under censorship and the $\delta_i$'s are the values of the censoring indicator related to the $z_i$'s. If the observation $y_i$ is censored, we set $z_i = c_i$ and $\delta_i = 0$; otherwise, we set $z_i = y_i$ and $\delta_i = 1$. In this case, model (1) becomes a semiparametric model with right-censored data, which can be rewritten in terms of the values of the new response variable.
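The construction of the observed pair from a response and a censoring value can be sketched as follows. This is a minimal illustration only; the function name `right_censor` and the toy numbers are assumptions made for the example and do not come from the study.

```python
def right_censor(y, c):
    """Return (z, delta) for right-censored data.

    z_i = min(y_i, c_i) is the observed response and
    delta_i = 1 if y_i <= c_i (uncensored), 0 otherwise (censored).
    """
    z = [min(yi, ci) for yi, ci in zip(y, c)]
    delta = [1 if yi <= ci else 0 for yi, ci in zip(y, c)]
    return z, delta


# Toy values (hypothetical): the second and fourth responses are censored.
y = [2.0, 5.0, 3.5, 7.0]
c = [4.0, 1.5, 3.5, 6.0]
z, delta = right_censor(y, c)
print(z)      # [2.0, 1.5, 3.5, 6.0]
print(delta)  # [1, 0, 1, 0]
```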

In the literature, there are several studies about the right-censored linear model ($f = 0$ in model (1)), including [4,5,6,7]. The right-censored nonparametric regression model ($\boldsymbol{\beta} = \mathbf{0}$ in model (1)) has been studied by [8,9], among others. In addition, right-censored partially linear models have been studied by [10], who used smoothing splines based on Kaplan–Meier weights as an estimation procedure, and by [11], who considered censored partial linear models and illustrated the theoretical properties of the semiparametric estimators based on the synthetic data transformation. Aydın and Yilmaz [12] suggested three semiparametric estimators based on regression splines, kernel smoothing, and smoothing spline methods using synthetic data transformation. Regarding partially linear models with penalty functions, in the case of noncensored data, [13] studied the estimation of the semiparametric model based on two absolute penalty functions, lasso and adaptive lasso, with B-splines, and also analyzed the asymptotic properties of the resulting estimators.

This paper considers model (1) under a right-censored response variable and a large number of covariates in the parametric component. Right-censored data cause biased estimates because the incomplete observations distort the data structure. Therefore, if the censorship is ignored, inferences based on the estimated models may be misleading. For instance, in clinical trials, some of the observed patients may withdraw from the study before it ends, or they may die from another cause, which makes the corresponding observation right-censored. In medical studies such as this example, preventing information loss and obtaining less biased estimates are particularly important. This paper, therefore, addresses both the variable selection and the censorship problems. To achieve variable selection, six different penalty functions are considered: ridge, lasso, adaptive lasso, SCAD, MCP, and elasticnet. A detailed study of penalty functions and shrinkage techniques is provided by [14]. Local polynomial regression is used as the smoothing method. Finally, the censorship problem is handled using the synthetic data transformation proposed by [6].

In light of the above, the main contribution of this article relative to previous studies is that it proposes semiparametric estimators based on six different penalty functions with the local polynomial technique for the right-censored model (1). To the best of our knowledge, a study of this kind has not yet appeared in the literature.

The paper is organized as follows: Section 2 introduces the right-censored data phenomenon and some preliminaries. Section 3 explains the local polynomial smoothing method, and the modified semiparametric estimators are introduced based on the six penalty functions with theoretical properties. In Section 4, the evaluation metrics are shown. Section 5 performs a Monte Carlo simulation study, and the results are presented. Section 6 presents an analysis of hepatocellular carcinoma data as a real data example. Finally, conclusions are given in Section 7.

2. Preliminaries and General Estimation Procedure

Let $F$, $G$, and $H$ be the distribution functions of the variables $Y$, $C$, and $Z$, respectively. More precisely, let the probability distribution functions of these variables be

$$F(k) = P(Y \le k), \qquad G(k) = P(C \le k), \qquad H(k) = P(Z \le k),$$

and let their corresponding survival functions be given by

$$\bar{F}(k) = 1 - F(k), \qquad \bar{G}(k) = 1 - G(k), \qquad \bar{H}(k) = 1 - H(k).$$

The key idea here is to examine the effect of the explanatory variables on the response variable by estimating the expected value of the response through the regression function. In the setting of a semiparametric regression problem, we first need to impose some identification conditions on the response variable, the censoring variable, the explanatory variables, and their dependence relationships. In other words, we make some assumptions to ensure that the model is identifiable.

Assumption 1.

(i) $Y$ and $C$ are conditionally independent given $(\mathbf{X}, t)$; (ii) $P(Y \le C \mid Y, \mathbf{X}, t) = P(Y \le C \mid Y)$.

It should be emphasized that the two conditions in Assumption 1 are commonly accepted assumptions regarding right-censored models and survival analysis (see [15,16]). The first is an independence condition that provides identifiability for the model. The second indicates that the covariates provide the same information about the response variable regardless of whether censoring is present (see [17]).

Because of the censoring, the classical methods for estimating the parametric and nonparametric components of model (3) are inapplicable. The most important reason for this is that the censored observations $z_i$ and the true responses $y_i$ have different expectations. This problem can be overcome by using so-called synthetic data, as in censored linear models. We refer, for example, to the studies of [6,12], among others. In this context, when the distribution $G$ is known, we use the synthetic data transformation

$$y_{iG} = \frac{\delta_i z_i}{1 - G(z_i)}, \quad i = 1, \ldots, n, \tag{4}$$

where $G$ denotes the distribution function of the censoring variable, as defined at the beginning of this section. The nature of the synthetic data method ensures that the $y_{iG}$ are independent random variables with $E[y_{iG} \mid \mathbf{x}_i, t_i] = E[y_i \mid \mathbf{x}_i, t_i]$, as described in Lemma 1.

Lemma 1.

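If, instead of the response observations $y_i$, only the pairs $(z_i, \delta_i)$ are observed in the context of a semiparametric regression model and the censoring distribution $G$ is known, then the regression function (or mean vector) is a conditional expectation of the synthetic responses; that is, $E[\mathbf{Y}_G \mid \mathbf{X}, t] = \mathbf{X}\boldsymbol{\beta} + \mathbf{f}$.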

A proof of Lemma 1 is given in Appendix A.1.

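Lemma 1 cannot be applied directly to the estimation if the distribution $G$ is unknown. To overcome this difficulty, [6] recommends replacing $G$ with its Kaplan–Meier estimator [18]:

$$1 - \hat{G}(k) = \prod_{i=1}^{n} \left(\frac{n - i}{n - i + 1}\right)^{I[z_{(i)} \le k,\ \delta_{(i)} = 0]}, \tag{5}$$

where $z_{(1)} \le \cdots \le z_{(n)}$ are the order statistics of $z_1, \ldots, z_n$ and $\delta_{(i)}$ is the indicator corresponding to $z_{(i)}$, as defined in the previous sections. In this case, that is, when the distribution $G$ is unknown, we consider the following synthetic data transformation:

$$y_{i\hat{G}} = \frac{\delta_i z_i}{1 - \hat{G}(z_i)}, \quad i = 1, \ldots, n. \tag{6}$$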
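The two steps described above (a Kaplan–Meier estimate of the censoring distribution followed by the synthetic data transformation) can be sketched in a few lines. The sketch assumes the standard product-limit form in which the censored observations ($\delta_i = 0$) play the role of events for the censoring variable, and it assumes there are no ties among the observed values; the function name `synthetic_responses` is hypothetical.

```python
def synthetic_responses(z, delta):
    """Synthetic responses delta_i * z_i / (1 - G_hat(z_i)).

    G_hat is the Kaplan-Meier estimate of the censoring distribution,
    built from the ordered observations. Assumes no ties in z.
    """
    n = len(z)
    order = sorted(range(n), key=lambda i: z[i])
    y_syn = [0.0] * n          # censored observations stay at zero
    surv = 1.0                 # running estimate of 1 - G_hat
    for rank, i in enumerate(order, start=1):
        if delta[i] == 0:
            # a censored point lowers the censoring survival estimate
            surv *= (n - rank) / (n - rank + 1)
        else:
            # an uncensored point is inflated by 1 / (1 - G_hat(z_i))
            y_syn[i] = z[i] / surv
    return y_syn


# Hypothetical toy sample: the second observation is censored.
print(synthetic_responses([1.0, 2.0, 3.0, 4.0], [1, 0, 1, 1]))
```

Censored points are mapped to zero and the uncensored points observed after a censoring event are inflated, which is how the transformation restores the conditional mean of the response.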

3. Local Polynomial Estimator

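Consider the semiparametric regression model defined in (1). Here, we approximate the regression function $f(\cdot)$ locally by a polynomial of order $p$ (see [19]). Using a Taylor series expansion of $f$ in a neighborhood of a fixed point $t_0$, the $p$th-degree polynomial approximation of $f(t_i)$ yields

$$f(t_i) \approx f(t_0) + f'(t_0)(t_i - t_0) + \cdots + \frac{f^{(p)}(t_0)}{p!}(t_i - t_0)^p = \sum_{j=0}^{p} b_j (t_i - t_0)^j, \qquad b_j = \frac{f^{(j)}(t_0)}{j!}. \tag{7}$$

Note that the fixed point $t_0$ lies in the range $t_i - h \le t_0 \le t_i + h$ for a small real-valued $h$ and is used to estimate $f$ locally (see [8] for details). The idea is to estimate the components of the semiparametric model by minimizing the local weighted least squares criterion

$$\sum_{i=1}^{n} \Big\{ y_{i\hat{G}} - \mathbf{x}_i^{\top}\boldsymbol{\beta} - \sum_{j=0}^{p} b_j (t_i - t_0)^j \Big\}^2 K\!\left(\frac{t_i - t_0}{h}\right), \tag{8}$$

where the $y_{i\hat{G}}$ are the values of the synthetic variable, as defined in (6), $K(\cdot)$ is a kernel function assigning weights to each point, and $h$ is the bandwidth parameter controlling the size of the local neighborhood of $t_0$. In vector and matrix notation, (8) can be written as

$$(\mathbf{Y}_{\hat{G}} - \mathbf{X}\boldsymbol{\beta} - \mathbf{T}\mathbf{b})^{\top}\mathbf{W}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\boldsymbol{\beta} - \mathbf{T}\mathbf{b}), \tag{9}$$

where $\mathbf{W}$ is a weights matrix whose properties are provided in Assumption 4. The minimization problem (9) has a unique solution based on the following matrices:

$$\mathbf{T} = \begin{pmatrix} 1 & (t_1 - t_0) & \cdots & (t_1 - t_0)^p \\ \vdots & \vdots & & \vdots \\ 1 & (t_n - t_0) & \cdots & (t_n - t_0)^p \end{pmatrix}, \qquad \mathbf{b} = (b_0, \ldots, b_p)^{\top}, \qquad \mathbf{W} = \mathrm{diag}\left\{K\!\left(\frac{t_i - t_0}{h}\right)\right\}.$$

For technical convenience, we assume that $\boldsymbol{\beta}$ is known to be the true parameter. Then the solution to minimizing (9) is

$$\hat{\mathbf{b}} = (\mathbf{T}^{\top}\mathbf{W}\mathbf{T})^{-1}\mathbf{T}^{\top}\mathbf{W}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\boldsymbol{\beta}). \tag{10}$$

It can be seen from the Taylor series expansion in (7) that one needs to select the first element of the vector $\hat{\mathbf{b}}$ in order to obtain $\hat{f}(t_0)$. Then, for the fixed neighborhood, the deconvoluted local polynomial estimator of the regression function can be written as

$$\hat{\mathbf{f}} = \mathbf{S}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\boldsymbol{\beta}), \qquad \mathbf{s}(t_0)^{\top} = \mathbf{e}_1^{\top}(\mathbf{T}^{\top}\mathbf{W}\mathbf{T})^{-1}\mathbf{T}^{\top}\mathbf{W}, \tag{11}$$

where $\mathbf{S}$ denotes the deconvoluted local polynomial smoother matrix whose $i$th row is $\mathbf{s}(t_i)^{\top}$, $\mathbf{e}_1$ is the $(p + 1) \times 1$ vector having 1 in the first position and 0 otherwise, and the matrices $\mathbf{T}$ and $\mathbf{W}$ are as defined above.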
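A local polynomial smoother matrix of the kind described above can be assembled row by row, one weighted least squares problem per design point. The following is a minimal sketch under stated assumptions: a Gaussian kernel is used purely for illustration (the kernel choice is not taken from the study), and the function name `local_poly_smoother` and its arguments are hypothetical.

```python
import numpy as np


def local_poly_smoother(t, h, p=1):
    """Smoother matrix S whose i-th row is e1^T (T^T W T)^{-1} T^T W at t0 = t_i."""
    t = np.asarray(t, dtype=float)
    n = t.size
    S = np.empty((n, n))
    for i, t0 in enumerate(t):
        d = t - t0
        T = np.vander(d, N=p + 1, increasing=True)  # columns 1, d, ..., d^p
        w = np.exp(-0.5 * (d / h) ** 2)             # Gaussian kernel weights (assumed)
        TtW = T.T * w                               # T^T W without forming diag(w)
        S[i] = np.linalg.solve(TtW @ T, TtW)[0]     # first row picks the intercept b_0
    return S


t = np.linspace(0.0, 1.0, 20)
S = local_poly_smoother(t, h=0.2, p=1)
print(S.shape)
```

A useful sanity check on such a matrix is that a degree-$p$ smoother reproduces polynomials of degree up to $p$ exactly, so with $p = 1$ its rows sum to one and a straight line is returned unchanged.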

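Equations (10) and (11) treat $\boldsymbol{\beta}$ as known; in practice, both model components $(\boldsymbol{\beta}, f)$ are unknown. To obtain the local polynomial-based estimates, the smoother matrix $\mathbf{S}$ given right after Equation (11) is used to calculate the following partial residuals in matrix form:

$$\tilde{\mathbf{X}} = (\mathbf{I} - \mathbf{S})\mathbf{X} \tag{12}$$

and

$$\tilde{\mathbf{Y}}_{\hat{G}} = (\mathbf{I} - \mathbf{S})\mathbf{Y}_{\hat{G}}, \tag{13}$$

where

$$\tilde{\mathbf{x}}_i = \mathbf{x}_i - \sum_{j=1}^{n} S_{ij}\mathbf{x}_j, \qquad \tilde{y}_{i\hat{G}} = y_{i\hat{G}} - \sum_{j=1}^{n} S_{ij}\, y_{j\hat{G}}.$$

Thus, we obtain a transformed set of data based on local residuals. Considering these partial residuals for the vector $\boldsymbol{\beta}$ yields the following least squares criterion instead of criterion (9):

$$\sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2, \tag{14}$$

where $\tilde{\mathbf{x}}_i^{\top}$ is the $i$th row of the matrix $\tilde{\mathbf{X}}$. Under Assumptions 2–4, by applying the least squares technique to (14), we obtain a “modified local polynomial estimator” for the parametric part of the semiparametric model (3), given by

$$\hat{\boldsymbol{\beta}} = (\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{X}})^{-1}\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{Y}}_{\hat{G}}. \tag{15}$$

Correspondingly, a “modified local polynomial estimator” of the function $f$ for the nonparametric part in the semiparametric model (3) is defined as

$$\hat{\mathbf{f}} = \mathbf{S}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\hat{\boldsymbol{\beta}}). \tag{16}$$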

The implementation details of Equations (15) and (16) are given in Appendix A.2. We conclude this section with the following assumptions, which are necessary to obtain the main results. These assumptions are quite general and easily fulfilled.
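The partial-residual procedure of this section can be sketched as follows, assuming the usual form in which both the design matrix and the synthetic response are premultiplied by $(\mathbf{I} - \mathbf{S})$ before an ordinary least squares fit. The function name `partial_residual_fit`, the simple kernel smoother used to build $\mathbf{S}$ in the demo, and the simulated values are all assumptions made for illustration; the authors' own implementation is described in their appendix.

```python
import numpy as np


def partial_residual_fit(X, y_syn, S):
    """Least squares on partial residuals, then smoothing of what is left."""
    I = np.eye(len(y_syn))
    X_t = (I - S) @ X                      # residuals of X after smoothing on t
    y_t = (I - S) @ y_syn                  # residuals of the synthetic response
    beta_hat = np.linalg.lstsq(X_t, y_t, rcond=None)[0]
    f_hat = S @ (y_syn - X @ beta_hat)     # nonparametric part
    return beta_hat, f_hat


# Demo on simulated, uncensored data (hypothetical values).
rng = np.random.default_rng(0)
n = 40
t = np.sort(rng.uniform(0.0, 1.0, n))
X = rng.normal(size=(n, 2))
K = np.exp(-0.5 * ((t[:, None] - t[None, :]) / 0.1) ** 2)
S = K / K.sum(axis=1, keepdims=True)       # simple kernel smoother for the demo only
y_syn = X @ np.array([1.0, -2.0]) + np.sin(2 * np.pi * t) + 0.1 * rng.normal(size=n)
beta_hat, f_hat = partial_residual_fit(X, y_syn, S)
print(beta_hat)
```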

Assumption 2.

When the covariates () are fixed design points, there exist continuous functions defined on such that each component of satisfies

where is a sequence of real numbers satisfying

and is a () dimensional nonsingular matrix.

Assumption 3.

The functions and are Lipschitz continuous of order 1 for .

Note that Assumption 2 generalizes the conditions of [20,21], where () are fixed design points for a partially linear model with uncensored data. Assumption 3 is required to establish asymptotic normality with an observed value

Assumption 4.

The weight functions satisfy these conditions:

- (i.)

- (ii.)

- (iii.)

where is an indicator function, satisfies , and satisfies .

3.1. Ridge-Type Local Polynomial Estimator

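In this paper, we confine ourselves to the local polynomial estimators of the vector parameter $\boldsymbol{\beta}$ and the unknown smooth function $f(\cdot)$ in a semiparametric model. For a given bandwidth parameter $h$, the corresponding estimators $\hat{\boldsymbol{\beta}}$ and $\hat{\mathbf{f}}$ based on model (2) are described by (15) and (16), respectively. Multiplying both sides of model (2) by $(\mathbf{I} - \mathbf{S})$, where $\mathbf{S}$ is the local polynomial smoother matrix, and using the synthetic response, we obtain

$$\tilde{\mathbf{Y}}_{\hat{G}} = \tilde{\mathbf{X}}\boldsymbol{\beta} + \tilde{\mathbf{f}} + \tilde{\boldsymbol{\varepsilon}}, \tag{17}$$

where $\tilde{\mathbf{X}} = (\mathbf{I} - \mathbf{S})\mathbf{X}$, $\tilde{\mathbf{f}} = (\mathbf{I} - \mathbf{S})\mathbf{f}$, and $\tilde{\boldsymbol{\varepsilon}} = (\mathbf{I} - \mathbf{S})\boldsymbol{\varepsilon}$, similar to (12) and (13).

Model (17) leads to an optimization problem for the estimator of the vector $\boldsymbol{\beta}$ corresponding to the parametric part of the semiparametric model in (2). In this context, it gives the following penalized least squares (PLS) criterion for the ridge regression problem:

$$PLS_R = (\tilde{\mathbf{Y}}_{\hat{G}} - \tilde{\mathbf{X}}\boldsymbol{\beta})^{\top}(\tilde{\mathbf{Y}}_{\hat{G}} - \tilde{\mathbf{X}}\boldsymbol{\beta}) + \lambda\boldsymbol{\beta}^{\top}\boldsymbol{\beta}, \tag{18}$$

where $\lambda$ is a positive shrinkage parameter that controls the magnitude of the penalty. The solution to the minimization problem (18) is given in Theorem 1.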

Theorem 1.

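The ridge-type local polynomial estimator for $\boldsymbol{\beta}$ is denoted by $\hat{\boldsymbol{\beta}}_{RL}$ and is expressed in terms of the local polynomial smoothing matrix $\mathbf{S}$ by

$$\hat{\boldsymbol{\beta}}_{RL} = (\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{X}} + \lambda\mathbf{I}_k)^{-1}\tilde{\mathbf{X}}^{\top}\tilde{\mathbf{Y}}_{\hat{G}}, \qquad \tilde{\mathbf{X}} = (\mathbf{I} - \mathbf{S})\mathbf{X}, \tag{19a}$$

where $\tilde{\mathbf{Y}}_{\hat{G}} = (\mathbf{I} - \mathbf{S})\mathbf{Y}_{\hat{G}}$ is a vector of updated response observations, as defined in Equations (6) and (13).

A proof of Theorem 1 is given in Appendix A.3.

As shown in Theorem 1, when $\lambda = 0$, the ridge-type local polynomial estimate reduces to an ordinary least squares estimate based on the local residuals defined in Equations (12) and (13). To estimate the unknown function $f$, we imitate Equation (16) and define

$$\hat{\mathbf{f}}_{RL} = \mathbf{S}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\hat{\boldsymbol{\beta}}_{RL}). \tag{19b}$$

Thus, the estimator (19b) is the ridge-type local polynomial estimator of the unknown function in the semiparametric model (2).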
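The ridge step has a closed form, which can be sketched in a few lines. The sketch assumes the standard ridge solution applied to the partial residuals (design and response already premultiplied by the identity minus the smoother matrix); the function name `ridge_partial_residual` and the toy inputs are hypothetical.

```python
import numpy as np


def ridge_partial_residual(X_t, y_t, lam):
    """Closed-form ridge estimate (X_t^T X_t + lam I)^{-1} X_t^T y_t."""
    k = X_t.shape[1]
    return np.linalg.solve(X_t.T @ X_t + lam * np.eye(k), X_t.T @ y_t)


# Toy partial residuals (hypothetical values).
X_t = np.array([[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]])
y_t = np.array([1.0, 2.0, 3.0])
for lam in (0.0, 1.0, 10.0):
    print(lam, ridge_partial_residual(X_t, y_t, lam))
```

With a shrinkage parameter of zero the function returns the least squares fit on the partial residuals, and the coefficient norm shrinks as the parameter grows, but no coefficient is set exactly to zero.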

3.2. Penalty Estimation Strategies Based on Local Polynomial

Several penalty functions are discussed for linear and generalized regression models in the literature (see [22]). In this paper, we study the minimax concave penalty (MCP), the least absolute shrinkage and selection operator (lasso), the smoothly clipped absolute deviation method (SCAD), the adaptive lasso, and the elasticnet method, which is a regularized regression technique that linearly combines the $L_1$ and $L_2$ penalties of the lasso and the ridge regression methods, respectively.

In this paper, we suggest local polynomial estimators based on different penalties for the components of the semiparametric regression model. For a given penalty function and tuning parameter $\lambda$, the general form of the penalized least squares ($PLS_{\lambda}$) criterion of the penalty estimators can be expressed as

$$PLS_{\lambda}(\boldsymbol{\beta}) = \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \lambda\sum_{j=1}^{k} |\beta_j|^q. \tag{20}$$

Note that the vector $\hat{\boldsymbol{\beta}}$ that minimizes (20) for the lasso and ridge penalties is known as a bridge estimator, proposed by [23]. On the other hand, elasticnet, SCAD, MCP, and adaptive lasso involve different penalties, which are inspected in the remainder of this paper. It should be emphasized that, in these four penalty functions, the penalty term is built on the $L_1$ norm of the regression coefficients (see [24,25,26]). Thus, the different penalty estimators corresponding to the parametric and nonparametric components of the semiparametric model can be defined for different values of the degree $q$ and the shrinkage parameter $\lambda$.

3.2.1. Estimation Procedure for the Parametric Component

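From (20), we see that for $q = 2$, ridge estimates corresponding to the parametric component can be obtained by minimizing the following penalized residual sum of squares:

$$\hat{\boldsymbol{\beta}}_{RL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \lambda\sum_{j=1}^{k} \beta_j^2 \right\}, \tag{21}$$

where $\tilde{y}_{i\hat{G}}$ is the $i$th synthetic observation of $\tilde{\mathbf{Y}}_{\hat{G}}$ and $\tilde{\mathbf{x}}_i^{\top}$ is the $i$th row of the matrix $\tilde{\mathbf{X}}$. Notice that the minimizer of (21) coincides with the ridge estimate stated in (19a). It should also be noted that when $q = 1$ in (20), we obtain the estimator known as the lasso.

Lasso: Proposed by [24], the lasso is a penalized least squares method for simultaneous estimation and variable selection that estimates $\boldsymbol{\beta}$ with the $L_1$ penalty. The modified local polynomial estimator based on the lasso penalty can be defined as

$$\hat{\boldsymbol{\beta}}_{LL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \lambda\sum_{j=1}^{k} |\beta_j| \right\}. \tag{22}$$

Although Equation (22) may seem simple, the absolute-value penalty term makes it impossible to find a closed-form solution for the lasso. Lasso solutions were originally obtained through quadratic programming.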
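Because the lasso has no closed-form solution, it is computed iteratively in practice. The sketch below uses cyclic coordinate descent with soft-thresholding, which is a common alternative to quadratic programming and is not claimed to be the solver used in this study. It assumes the objective is the residual sum of squares plus $\lambda$ times the sum of absolute coefficients (no factor of one half), so the threshold is $\lambda/2$; the names `soft_threshold` and `lasso_cd` are hypothetical.

```python
import numpy as np


def soft_threshold(a, c):
    """Shrink a toward zero by c, returning exactly zero when |a| <= c."""
    return np.sign(a) * max(abs(a) - c, 0.0)


def lasso_cd(X, y, lam, n_iter=200):
    """Minimize ||y - X b||^2 + lam * sum|b_j| by cyclic coordinate descent."""
    n, k = X.shape
    beta = np.zeros(k)
    col_sq = (X ** 2).sum(axis=0)
    for _ in range(n_iter):
        for j in range(k):
            # residual with the j-th coordinate's contribution added back
            r_j = y - X @ beta + X[:, j] * beta[j]
            beta[j] = soft_threshold(X[:, j] @ r_j, lam / 2.0) / col_sq[j]
    return beta


# Orthonormal toy design: the solution is soft-thresholding of y at lam / 2.
print(lasso_cd(np.eye(3), np.array([3.0, -1.0, 0.2]), lam=1.0))
```

In the orthonormal toy case the third coefficient is set exactly to zero, which illustrates the variable-selection effect of the absolute-value penalty.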

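Adaptive lasso: Zou [25] suggested modifying the lasso penalty by using adaptive weights on the penalties of the regression coefficients. This weighted lasso, which has oracle properties, is referred to as the adaptive lasso. The local polynomial estimator using the adaptive lasso penalty can be defined as follows:

$$\hat{\boldsymbol{\beta}}_{aLL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \lambda\sum_{j=1}^{k} w_j|\beta_j| \right\}, \tag{23}$$

where $w_j$ is a weight function given by

$$w_j = \frac{1}{|\tilde{\beta}_j|^{q}}, \qquad q > 0.$$

It should be noted that $\tilde{\boldsymbol{\beta}}$ is an appropriate initial estimator of $\boldsymbol{\beta}$ here. For example, an ordinary least squares (OLS) estimate can be used as a reference value. To obtain the adaptive lasso estimates in (23), it is necessary to choose $q$ and compute the weights after obtaining the OLS estimate.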
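The weighting step of the adaptive lasso can be sketched as below, assuming the usual inverse-power weights computed from an initial estimate. The small constant `eps` is an addition made here only to avoid division by zero, and the function names are hypothetical.

```python
import numpy as np


def adaptive_weights(beta_init, q=1.0, eps=1e-8):
    """Weights 1 / |beta_init_j|^q; eps guards against exact zeros (assumption)."""
    return 1.0 / (np.abs(beta_init) + eps) ** q


def adaptive_lasso_penalty(beta, weights, lam):
    """Weighted absolute-value penalty lam * sum_j w_j |beta_j|."""
    return lam * np.sum(weights * np.abs(beta))


# Hypothetical initial estimate: a large and a small coefficient.
w = adaptive_weights(np.array([2.0, 0.05]), q=1.0)
print(w)  # the small coefficient receives the much larger weight
```

Coefficients that look small in the initial fit are penalized heavily and are pushed to zero, while large coefficients are penalized lightly, which is the source of the reduced bias.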

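SCAD: A disadvantage of the lasso method is that the penalty term is linear in the size of the regression coefficient, so it tends to give highly biased estimates for large regression coefficients. To account for this bias, [26] proposed the SCAD penalty, obtained by replacing $\lambda|\beta_j|$ in (22) with $P_{\lambda, a}(|\beta_j|)$. A modified local estimator based on the SCAD penalty can be described as

$$\hat{\boldsymbol{\beta}}_{SL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \sum_{j=1}^{k} P_{\lambda, a}(|\beta_j|) \right\}, \tag{24a}$$

where $P_{\lambda, a}(\cdot)$ is the SCAD penalty defined through its derivative by

$$P'_{\lambda, a}(\beta) = \lambda\left\{ I(\beta \le \lambda) + \frac{(a\lambda - \beta)_{+}}{(a - 1)\lambda}\, I(\beta > \lambda) \right\}, \qquad \beta \ge 0,\ a > 2. \tag{24b}$$

Here $\lambda$ and $a$ are the penalty parameters, $I(\cdot)$ is the indicator function, and $(u)_{+} = \max(u, 0)$. In addition, (24b) is equivalent to the $L_1$ penalty for $a = \infty$.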

Elasticnet: The elastic net, proposed by [27], is a penalized least squares regression technique that has been widely used in regularization and automatic variable selection to select groups of correlated variables. The elastic net method linearly combines the $L_1$ penalty term, which enforces the sparsity of the elastic net estimator, and the $L_2$ penalty term, which ensures appropriate selection of correlated variable groups. Accordingly, the modified local estimator using an elasticnet penalty is the solution of the following minimization problem:

$$\hat{\boldsymbol{\beta}}_{ENL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \lambda_1\sum_{j=1}^{k} |\beta_j| + \lambda_2\sum_{j=1}^{k} \beta_j^2 \right\}, \tag{25}$$

where $\lambda_1$ and $\lambda_2$ are the positive regularization parameters. Equation (25) provides the estimates corresponding to the parametric part of the semiparametric regression model (2), as in the other methods.

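MCP: Introduced by [28], MCP is an alternative method to obtain less biased estimates of the nonzero regression coefficients in a sparse model. For the given regularization parameters $\lambda$ and $\gamma$, the local polynomial estimator based on the MCP penalty can be defined as

$$\hat{\boldsymbol{\beta}}_{MCL} = \arg\min_{\boldsymbol{\beta}} \left\{ \sum_{i=1}^{n} \left(\tilde{y}_{i\hat{G}} - \tilde{\mathbf{x}}_i^{\top}\boldsymbol{\beta}\right)^2 + \sum_{j=1}^{k} P_{\lambda, \gamma}(|\beta_j|) \right\}, \tag{26a}$$

where $P_{\lambda, \gamma}(\cdot)$ is the MCP penalty given by

$$P_{\lambda, \gamma}(\beta) = \lambda\int_{0}^{|\beta|} \left(1 - \frac{x}{\gamma\lambda}\right)_{+} dx. \tag{26b}$$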

3.2.2. The Estimation Procedure for the Nonparametric Component

Equations (21)–(26) provide modified local polynomial estimates based on different penalties for the parametric part of the semiparametric model in (2). Similar in spirit to (19b), the vector of estimated parametric coefficients given in (22) can be used to construct the estimate of the nonparametric part in the same model. In this case, we obtain the modified local estimates of the unknown function based on the lasso penalty, given by

$$\hat{\mathbf{f}}_{LL} = \mathbf{S}(\mathbf{Y}_{\hat{G}} - \mathbf{X}\hat{\boldsymbol{\beta}}_{LL}), \tag{27}$$

where $\mathbf{S}$ is the smoother matrix defined in the previous section.

Note that when $\hat{\boldsymbol{\beta}}_{aLL}$ defined in (23) is written instead of $\hat{\boldsymbol{\beta}}_{LL}$ in Equation (27), local estimates of the nonparametric part based on the adaptive lasso penalty are obtained and are denoted by $\hat{\mathbf{f}}_{aLL}$. Similarly, replacing $\hat{\boldsymbol{\beta}}_{LL}$ in (27) with $\hat{\boldsymbol{\beta}}_{SL}$, $\hat{\boldsymbol{\beta}}_{ENL}$, and $\hat{\boldsymbol{\beta}}_{MCL}$ yields modified local polynomial estimators, denoted by $\hat{\mathbf{f}}_{SL}$, $\hat{\mathbf{f}}_{ENL}$, and $\hat{\mathbf{f}}_{MCL}$, based on the SCAD, elasticnet, and MCP penalties, respectively, for the nonparametric part of the right-censored semiparametric model (2).

3.2.3. Some Remarks on the Penalties

Remarks on the penalties can be stated as follows:

- Regularization based on the $L_1$ norm produces sparse solutions and therefore performs feature selection. In contrast, the $L_2$ norm produces nonsparse solutions and does not perform feature selection.

- Although all of the regularization methods shrink most of the coefficients towards zero, SCAD, MCP, and adaptive lasso apply less shrinkage to nonzero coefficients. This is known as bias reduction.

- As noted earlier, the tuning parameter $\gamma$ (written $a$ for SCAD), used for SCAD and MCP estimations, controls how quickly the penalty rate goes to zero. This affects the bias and stability of the estimates, in the sense that there is a greater chance for more than one local minimum to exist as the penalty becomes more concave.

- As $\gamma \to \infty$, the MCP and SCAD penalties converge to the $L_1$ norm penalty. Conversely, as $\gamma$ decreases toward its lower bound, the bias is minimized, but both MCP and SCAD estimates become unstable. Note also that lower values of the tuning parameter for SCAD and MCP produce more highly variable, but less biased, estimates.

- The elasticnet penalty is designed to deal with highly correlated covariates more intelligently than other sparse penalties, such as the lasso. Note that the lasso penalty tends to choose one among highly correlated variables, while elasticnet uses them all.
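The remarks on SCAD and MCP can be checked numerically with the closed forms of the two penalties. The sketch below assumes the standard piecewise expressions from the literature; the default values `a=3.7` and `gamma=3.0` are common conventions and are not taken from this study, and the function names are hypothetical.

```python
def scad_penalty(beta, lam, a=3.7):
    """SCAD penalty value at beta (standard piecewise form, a > 2)."""
    b = abs(beta)
    if b <= lam:
        return lam * b                                   # lasso-like near zero
    if b <= a * lam:
        return (2 * a * lam * b - b * b - lam * lam) / (2 * (a - 1))
    return lam * lam * (a + 1) / 2                       # constant: no extra shrinkage


def mcp_penalty(beta, lam, gamma=3.0):
    """MCP penalty value at beta (standard piecewise form, gamma > 1)."""
    b = abs(beta)
    if b <= gamma * lam:
        return lam * b - b * b / (2 * gamma)
    return gamma * lam * lam / 2                         # constant beyond gamma * lam


lam = 1.0
for b in (0.5, 2.0, 10.0):
    # compare the L1 penalty with SCAD and MCP at the same coefficient size
    print(b, lam * b, scad_penalty(b, lam), mcp_penalty(b, lam))
```

Both penalties level off for large coefficients, which is the bias reduction noted above, and both approach the absolute-value penalty when the concavity parameter is taken very large.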

4. Performance Indicators

This section describes the measures used to evaluate the performance of the six modified semiparametric local polynomial estimators based on penalty functions: ridge (RL), lasso (LL), adaptive lasso (aLL), SCAD (SL), MCP (MCL), and elasticnet (ENL). The abbreviations given in parentheses denote the estimators. The performance of the estimators is examined separately for the parametric component, the nonparametric component, and the overall estimated model. The evaluation metrics are as follows:

4.1. Measures for the Parametric Component

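Root mean squared error (RMSE) of the estimated regression coefficients $\hat{\boldsymbol{\beta}}$. The calculation of the RMSE is given by:

$$RMSE(\hat{\boldsymbol{\beta}}) = \sqrt{\frac{1}{k}\sum_{j=1}^{k} (\hat{\beta}_j - \beta_j)^2},$$

where $\hat{\boldsymbol{\beta}}$ is the estimate of $\boldsymbol{\beta}$ obtained by any of the six introduced methods. It is replaced by $\hat{\boldsymbol{\beta}}_{RL}$, $\hat{\boldsymbol{\beta}}_{LL}$, $\hat{\boldsymbol{\beta}}_{aLL}$, $\hat{\boldsymbol{\beta}}_{SL}$, $\hat{\boldsymbol{\beta}}_{MCL}$, and $\hat{\boldsymbol{\beta}}_{ENL}$ to obtain the RMSE score for each estimator.

Coefficient of determination ($R^2$) for the estimated models. Note that $R^2$ allows us to see the overall model performance of the six methods. It can be calculated as follows:

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}.$$

Sensitivity, specificity, and accuracy scores obtained from a confusion matrix. If the true values of a variable of interest are available, the confusion matrix can be obtained as in Table 1. This matrix allows us to measure the performance of the penalty functions for right-censored data. With TP, FP, TN, and FN denoting the cell counts of the confusion matrix in Table 1, the sensitivity, specificity, and accuracy values can be calculated as follows:

$$\text{sensitivity} = \frac{TP}{TP + FN}, \qquad \text{specificity} = \frac{TN}{TN + FP}, \qquad \text{accuracy} = \frac{TP + TN}{TP + TN + FP + FN}.$$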

Table 1.

Confusion matrix for variable selection.

$G$-score, calculated as the geometric mean of the sensitivity and specificity given in Equation (31):

$$G = \sqrt{\text{sensitivity} \times \text{specificity}}.$$

4.2. Measures for the Nonparametric Component

Mean squared error (MSE) is used to measure the performance of the estimated nonparametric components by six methods: , and . Assume that is the fitted nonparametric function obtained from any of the six methods. Accordingly, the MSE is computed as follows:

Relative MSE (ReMSE) is used to make a comparison between performances of the six methods on the estimation of the nonparametric component. The calculation of the ReMSE is given by

where denotes the number of methods, which are six for this paper.
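The variable-selection measures of Section 4.1 can be computed directly from a true and an estimated coefficient vector. The sketch below assumes that a "positive" in the confusion matrix means a truly nonzero coefficient and that an estimate counts as selected when its absolute value exceeds a small tolerance; both conventions, and the function name `selection_metrics`, are assumptions made for illustration.

```python
import math


def selection_metrics(beta_true, beta_hat, tol=1e-8):
    """Sensitivity, specificity, accuracy and G-score for variable selection."""
    tp = fp = tn = fn = 0
    for bt, bh in zip(beta_true, beta_hat):
        truly_nonzero = abs(bt) > tol
        selected = abs(bh) > tol
        if truly_nonzero and selected:
            tp += 1
        elif truly_nonzero:
            fn += 1
        elif selected:
            fp += 1
        else:
            tn += 1
    sens = tp / (tp + fn) if tp + fn else float("nan")
    spec = tn / (tn + fp) if tn + fp else float("nan")
    acc = (tp + tn) / (tp + tn + fp + fn)
    return {"sensitivity": sens, "specificity": spec,
            "accuracy": acc, "g_score": math.sqrt(sens * spec)}


# Hypothetical example: one true signal missed, one noise variable selected.
m = selection_metrics([1.0, 2.0, 0.0, 0.0, 0.0], [0.9, 0.0, 0.0, 0.1, 0.0])
print(m["sensitivity"], m["accuracy"])  # 0.5 0.6
```

An estimator that never sets a coefficient to zero, such as ridge, has zero true negatives under this convention, so its specificity and $G$-score are zero.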

5. Simulation Study

We carried out an extensive simulation study to evaluate the finite sample performance of the six introduced semiparametric estimators for a right-censored partially linear model. These estimators are compared with each other to evaluate their respective strengths and weaknesses in handling the right-censoring problem. For reproducibility, the simulation code and functions are available on GitHub: https://github.com/yilmazersin13?tab=repositories (accessed on 31 August 2022). The estimators are computed using the formulations in Section 3. The simulation design and data generation are described as follows:

Simulation Design: Two main scenarios are considered for generating the zero and nonzero coefficients of the model because the focus of the penalty functions is on making an accurate variable selection. In each scenario, simulation runs are made for

- Three sample sizes:

- Two censoring levels: CL = 10% and CL = 30%

- Two numbers of parametric covariates

All possible simulation configurations are repeated 1000 times. To evaluate the performance of the methods, the performance indicators described in Section 4 are used. The scenarios are defined in the data generation section below.

Data Generation: Regarding model (1), , each element of the model obtained as

The true values of regression coefficients are determined for both Scenarios 1 and 2 as follows:

For both scenarios, there are 10 nonzero ’s to be estimated and sparse coefficients. The main purpose of using these two scenarios is that it allows us to measure the capacity of the estimators on the selection of nonzero coefficients when ’s are close to zero. In addition, these scenarios make it possible to see how the censoring level (CL) affects their performances. These scenarios allow us to inspect the convergence of the estimated coefficients to the true ones when the sample size is becoming larger practically under censored data, which can be counted as an important contribution of this paper.

Regarding the censoring data, the censoring variable is generated as independently of the initially observed variable . An algorithm is provided by [29]. Another important point for this study is the selection of the shrinkage parameters and the bandwidth parameter for the local polynomial approach for the introduced six estimators. In this study, the improved Akaike information criterion , proposed by [30], is used. It can be calculated as follows:

where $\hat{\boldsymbol{\beta}}(\lambda, h)$ is the estimated coefficient vector based on the shrinkage parameter $\lambda$ and the bandwidth $h$, $\hat{\sigma}^2$ is the estimated variance of the model, and $p$ denotes the number of nonzero regression coefficients. Note that, because the introduced estimators (except the ridge estimator) cannot be written in terms of a projection (hat) matrix, the number of nonzero coefficients is used in place of the hat matrix.
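The selection of the tuning parameters by an improved AIC can be sketched as follows. This is only an illustration under explicit assumptions: it uses the commonly cited corrected form $\log(\hat{\sigma}^2) + 1 + 2(p + 1)/(n - p - 2)$ with the number of nonzero coefficients $p$ standing in for the trace of the hat matrix, and it takes $\hat{\sigma}^2$ as the mean squared residual. The exact expression used in the study may differ, and the function name `aicc` is hypothetical.

```python
import math


def aicc(residuals, n_nonzero):
    """Improved AIC with the number of nonzero coefficients as model size.

    Assumed form: log(sigma2) + 1 + 2 (p + 1) / (n - p - 2),
    with sigma2 the mean squared residual.
    """
    n = len(residuals)
    if n - n_nonzero - 2 <= 0:
        raise ValueError("sample size too small for this number of coefficients")
    sigma2 = sum(r * r for r in residuals) / n
    return math.log(sigma2) + 1.0 + 2.0 * (n_nonzero + 1) / (n - n_nonzero - 2)


# Hypothetical comparison of two candidate fits on the same data:
# the second has slightly smaller residuals but uses more coefficients.
fit_a = aicc([1.0, -1.0] * 5, n_nonzero=2)
fit_b = aicc([0.95, -0.95] * 5, n_nonzero=5)
print(fit_a, fit_b)  # the candidate with the smaller value would be selected
```

In a grid search, the criterion would be evaluated for every pair of shrinkage parameter and bandwidth, and the pair with the smallest value retained.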

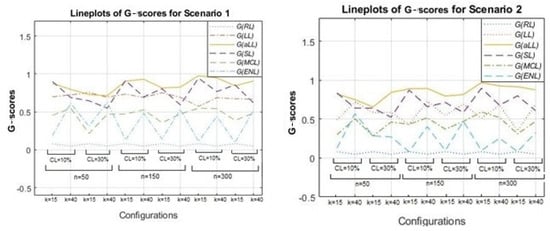

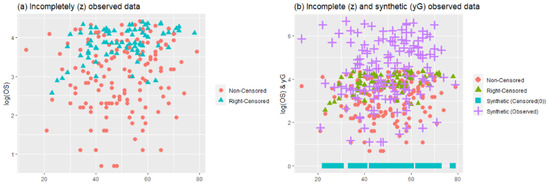

The results of the simulation study are presented separately for the parametric and nonparametric components below. Before that, Figure 1 is presented to provide some information about the generated data. Figure 1 consists of four panels. Panels (a) and (b) show the original, right-censored, and synthetic response values for CL = 10%. Panels (c) and (d) show the same for CL = 30%.

Figure 1.

Overview for the generated data based on different simulation configurations.

In Figure 1, two plots, (i) and (ii), are shown for different configurations of Scenarios 1 and 2. Plot (i) gives scatter plots of the data points for the lower censoring level. In panel (b) of (i), the working procedure of the synthetic data transformation can be seen clearly: it assigns zero to the right-censored observations and increases the magnitude of the uncensored data points. Thus, it equalizes the expected values of the synthetic response variable and the original response variable, as indicated in Section 2. Similarly, plot (ii) shows the scatter plots of the data points for the higher censoring level, which makes it possible to see heavily censored cases. In panel (b) of (ii), due to heavy censorship, the increments in the observed data points are larger than in (i), as the working principle of the synthetic data implies. This is a disadvantage because it significantly distorts the data structure, although it still solves the censorship problem.

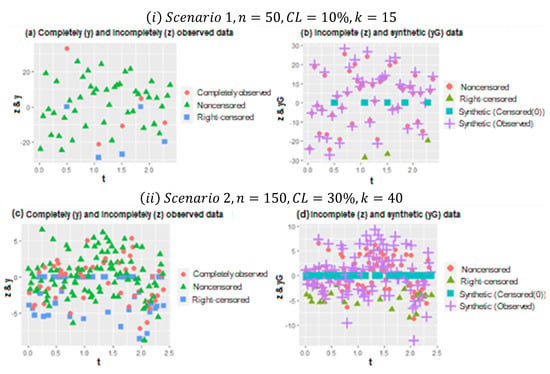

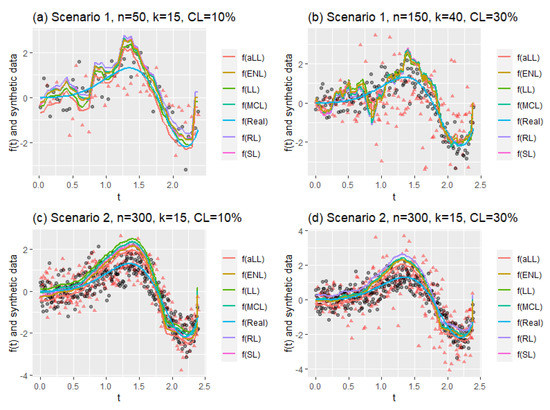

To describe the generated dataset further, Figure 2 shows the nonparametric component of the right-censored semiparametric model. In panel (a), the smooth function can be seen for the small sample size, the low censoring level (CL = 10%), and the low number of covariates. Panel (b) shows the nonparametric smooth function for a different simulation combination. It should be emphasized that the censoring level and the number of parametric covariates do not affect the shape of the nonparametric component. Thus, there is no need to show all possible generated functions here. Note, however, that the number of covariates and the censoring level affect the estimation of the nonparametric component indirectly.

Figure 2.

The generated nonparametric component of the semiparametric model for two simulation combinations.

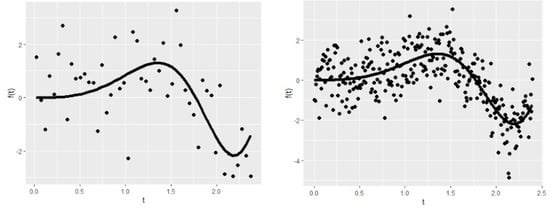

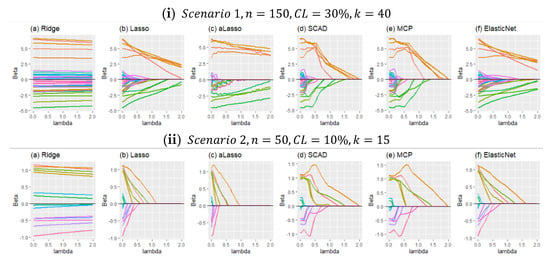

As previously mentioned, this paper introduces six modified estimators based on penalty and shrinkage strategies. In Figure 3, the shrinkage process of the estimators, as a function of the shrinkage parameter $\lambda$, is provided in panels (i) and (ii). Panel (i) shows the shrunk regression coefficients for Scenario 1 with a high censoring level and a large number of covariates. Panel (ii) is drawn for Scenario 2. When Figure 3 is inspected carefully, it can be seen that in panel (i), due to the high censoring level and the many covariates, the shrinkage of the coefficients is more challenging than in panel (ii). One of the reasons for this is that, in Scenario 2, the coefficients are set smaller than those in Scenario 1 when generating the data. For both panels, it can be observed that the SCAD and MCP methods behave similarly. As expected, they shrink the coefficients more quickly than the others. Additionally, lasso and elasticnet behave similarly in both panels. However, adaptive lasso differs from the others in both panels. The reason for this is discussed with the results given in Section 5.1.

Figure 3.

The behaviors of the introduced modified penalty functions according to shrinkage parameters for two different cases.

5.1. Analysis of Parametric Component

In this section, the estimation of the parametric component of a right-censored semiparametric model is analyzed, and results are presented for all simulation scenarios in Tables 2–5 and Figures 4–7. The performance of the estimators is evaluated using the metrics given in Section 4: RMSE, $R^2$, sensitivity, specificity, accuracy, and $G$-score. In addition to these performance criteria, the selection ratio is calculated for each estimator. The selection ratio is defined below.

Table 2.

RMSE and $R^2$ values obtained from all simulation runs (Scenario 1).

Table 3.

RMSE and $R^2$ values obtained from all simulation runs (Scenario 2).

Table 4.

$G$-score and accuracy values obtained for all simulation combinations (Scenario 1).

Table 5.

$G$-score and accuracy values obtained for all simulation combinations (Scenario 2).

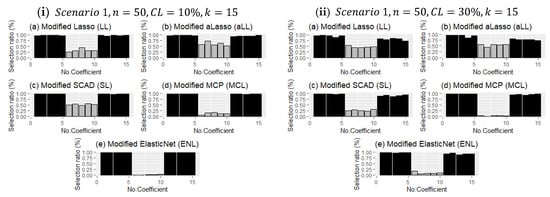

Figure 6.

Comparison of the modified estimators regarding deciding the true coefficient for all simulation runs. The dark-colored bars denote the selection ratios of nonzero regression coefficients, and the gray-colored ones represent the ratios for sparse coefficients.

Figure 7.

Comparison of the modified estimators as in Figure 6 but for different simulation configurations when .

Selection Ratio: The ratio of the selected true nonzero coefficients by the corresponding estimator in 1000 simulation repetitions. The formulation can be given by:

where is the estimated coefficient for simulation by any of the introduced estimators. Results are given in Figure 6 and Figure 7.
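The selection ratio can be computed from the matrix of estimated coefficients collected over the simulation repetitions. The sketch below is one reading of the definition above and is an assumption: it reports the share of truly nonzero coefficients that are estimated as nonzero and, separately, the share of sparse coefficients that are estimated as zero, both averaged over repetitions. The function name `selection_ratios` and the tolerance are hypothetical.

```python
import numpy as np


def selection_ratios(beta_hats, beta_true, tol=1e-8):
    """Selection ratios over simulation repetitions.

    beta_hats: array of shape (n_sim, k), one estimated vector per repetition.
    Returns (ratio for nonzero coefficients, ratio for sparse coefficients).
    Assumes beta_true contains at least one nonzero and one zero entry.
    """
    est_nonzero = np.abs(np.asarray(beta_hats, dtype=float)) > tol
    true_nonzero = np.abs(np.asarray(beta_true, dtype=float)) > tol
    ratio_nonzero = est_nonzero[:, true_nonzero].mean()
    ratio_sparse = (~est_nonzero[:, ~true_nonzero]).mean()
    return ratio_nonzero, ratio_sparse


# Four hypothetical repetitions with one signal and one sparse coefficient.
beta_hats = [[0.5, 0.0], [0.0, 0.0], [1.0, 0.2], [2.0, 0.0]]
print(selection_ratios(beta_hats, [1.0, 0.0]))  # both ratios equal 0.75
```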

Table 2 and Table 3 include the RMSE scores for the estimated coefficients, calculated as described in Section 4.1, and the $R^2$ values of the model for Scenarios 1 and 2. The best scores are indicated in bold. If the two tables are inspected carefully, two observations can be made about the performance of the methods for both Scenarios 1 and 2. Regarding Scenario 1, when the sample size is small, the ENL and SL estimators give smaller RMSE scores and higher $R^2$ values than the other four methods. On the other hand, when the sample size becomes larger, aLL takes the lead in terms of estimation performance. The results for different censoring levels show that aLL is less affected by censorship than SL and the other methods. This can be observed clearly in Table 2.

In Table 3, RMSE and $R^2$ scores are provided for all simulation configurations of Scenario 2. The results can be distinguished from the results in Table 2 by the higher $R^2$ values obtained from the modified ridge estimator. However, the RMSE scores of the modified ridge estimator are the largest. This can be explained by the fact that the ridge penalty uses all covariates, whether sparse or nonsparse. Therefore, the estimated model based on the ridge penalty has larger $R^2$ values. On the other hand, the RMSE scores show that for small sample sizes, ENL and aLL perform satisfactorily. Moreover, as in the case of Scenario 1, when the sample size becomes larger, aLL gives the most satisfying performance. SL- and LL-based estimators also perform well in general. If Table 3 is inspected in detail, it can be seen that the SL method comes to the fore in some configurations for both low (10%) and high (30%) censoring levels. The same is true for the aLL method regarding robustness against censorship.

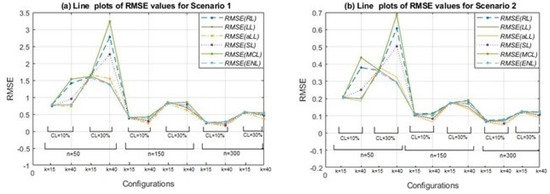

Figure 4 presents the line plots of the RMSE scores for all simulation cases. As expected, the negative effect of an increase in the censoring level and the positive effect of a larger sample size can be clearly observed in panels (a) and (b). For both scenarios, a peak can be seen when the sample size is small () and the censorship level increases from 10% to 30%. The methods most affected by censorship are MCL, RL, and SL. The least affected are aLL, LL, and ENL. Thus, Figure 4 supports the results and inferences obtained from Table 2 and Table 3.

Together with the RMSE scores, another important metric for evaluating the estimation of the parametric component is the G-score, which measures how correctly the estimators separate the sparse and nonzero subsets, based on the confusion matrix given in Table 1. In this context, Figure 5 illustrates the G-scores of the methods for all simulation combinations using line plots. Note that the G-score lies in the range [0,1], and methods whose lines are close to 1 are regarded as better than the others at correctly determining sparsity.
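To make the link between the confusion matrix and these scores concrete, the following sketch counts the four confusion-matrix cells for a single estimated coefficient vector and computes the accuracy and a G-score. It assumes the G-score is the geometric mean of sensitivity and specificity, which is a common definition but may differ in detail from the one given in Section 4; the function names and the tolerance `tol` are hypothetical.

```python
import math


def confusion_counts(beta_hat, true_beta, tol=1e-8):
    """Return (tp, fp, tn, fn), treating 'nonzero coefficient' as the positive class."""
    tp = fp = tn = fn = 0
    for est, true in zip(beta_hat, true_beta):
        selected = abs(est) > tol   # estimator keeps the covariate
        nonzero = true != 0         # covariate truly belongs to the nonzero subset
        if selected and nonzero:
            tp += 1
        elif selected and not nonzero:
            fp += 1
        elif not selected and nonzero:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def accuracy(tp, fp, tn, fn):
    return (tp + tn) / (tp + fp + tn + fn)


def g_score(tp, fp, tn, fn):
    """Geometric mean of sensitivity and specificity (assumed definition)."""
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return math.sqrt(sensitivity * specificity)


# Toy example: two truly nonzero and two truly zero coefficients.
true_beta = [1.0, -2.0, 0.0, 0.0]
beta_hat = [0.8, 0.0, 0.0, 0.3]
counts = confusion_counts(beta_hat, true_beta)
print(counts, accuracy(*counts), g_score(*counts))
```

Under this assumed definition, an estimator that never sets a coefficient to zero has no true negatives, so its specificity and hence its G-score are zero.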

Figure 5 consists of two panels: Scenario 1 (left) and Scenario 2 (right). As expected, for all methods except RL (which does not involve any sparse subset and is therefore not shown in Figure 5 and Table 4), the G-scores diminish when the censoring level is high and the number of covariates () is large. In addition, there is an increasing trend from small to large sample sizes for LL, aLL, SL, and MCL. This trend is most evident for the aLL line, which makes aLL distinguishable. Interestingly, however, ENL is not influenced by the change in sample size, and the G-scores of ENL never exceed 0.5. In general, aLL, SL, and LL provide the highest G-scores. All G-scores for the simulation study are provided in Table 4 and Table 5 together with the accuracy values of the methods.

Table 4 and Table 5 present the accuracy rates and G-scores for all methods and simulation configurations for both Scenarios 1 and 2. Note that, because the ridge penalty is unable to shrink the estimated coefficients to exactly zero, the specificity of RL is always calculated as zero. Thus, RL does not have a G-score. The tables show clearly that the prominent methods are aLL, LL, and SL. The aLL method produces satisfactory results for each simulation configuration. On the other hand, the other two methods, LL and SL, give good results under different conditions. In more detail, the SL method produces better results when , and the LL method when with aLL. In addition, the level of censorship and the sample size do not change this pattern, apart from increasing or decreasing the values. These inferences apply to both scenarios. The difference between the scenarios lies in the size of the G-scores and accuracy values: those obtained for Scenario 1 are slightly lower than those obtained for Scenario 2.

In addition to the evaluation criteria given in Section 4, the frequency with which each method chooses the correct coefficients in the simulation study is analyzed, and the results are presented for both scenarios in Figure 6 and Figure 7 with bar plots. Figure 6 presents two panels (i and ii), which demonstrate the impact of censorship, one of the main concerns of this article. As expected, as censorship increases, the frequency of selection decreases for the nonzero coefficients. The point here is to reveal which methods are less affected by this. It can be observed in Figure 6 that the MCL and ENL methods are less affected by censorship in terms of the frequency of selection of nonzero coefficients. However, since these methods are less efficient than the SL, LL, and aLL methods in determining ineffective coefficients, their overall performance is lower (see Table 4 and Table 5). On the other hand, the SL, LL, and aLL methods make a balanced selection for both subsets (zero and nonzero), which indirectly makes them more resistant to censorship.

Figure 7 presents the different configurations for Scenario 2. It shows the effects of both the sample size and an increase in the censoring level, with bar plots for . The methods' detection of nonzero coefficients is less affected than in Figure 6. However, the selection of the ineffective set plays a decisive role in the performance of each method. For example, MCL and ENL performed poorly in the correct determination of ineffective coefficients when the censorship level increased, but LL, aLL, and SL were able to make the right choice under heavy censorship.

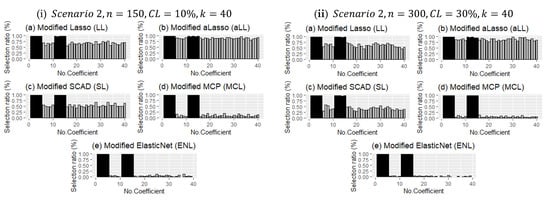

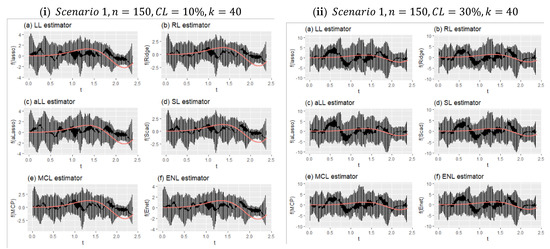

5.2. Analysis of Nonparametric Component

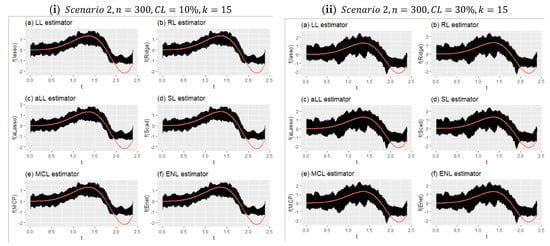

This section examines how the six introduced estimators estimate the nonparametric component. Performance scores of the methods are given in Table 6 and Table 7, where the MSE and ReMSE metrics are used. Additionally, Figure 8 and Figure 9 show the real smooth function against all estimated curves from the individual simulation repetitions. These figures provide information about the variation of the estimates under both scenarios and censoring levels. Finally, in Figure 10, the estimated curves obtained from all methods are inspected for four different configurations.

Table 6. Performance scores of fitted curves by the modified estimators for Scenario 1.

Table 7. Performance scores of fitted curves by the modified estimators for Scenario 2.

Figure 8. Obtained fitted curves for the methods from all simulation runs for Scenario 1.

Figure 9. Fitted curves for Scenario 2 for the simulation settings given in (i) and (ii).

Figure 10. Mean of fitted curves obtained for the different simulation configurations. Red triangles show the synthetic response values ( vs. ), and black dots (.) denote the original data points vs. .

Table 6 and Table 7 include the MSE and ReMSE values for the two scenarios. For Scenario 1, the aLL method gives the best values, followed by SL and LL. As expected, RL shows the worst performance; however, the difference from the others is small. Note that, when the sample size becomes larger, all methods begin to give similar results. Because of this similarity, the ReMSE scores move closer to one, which is an expected result. Thus, even if the censoring level increases, ReMSE scores may decrease. A careful inspection of the tables shows that, as mentioned in Section 5.1, aLL overcomes the censorship problem better than the others in Scenario 1, which means that contributions of covariates are high. However, in Scenario 2, SL shows better performance at high censoring levels, especially for small and medium sample sizes. Additionally, it is clearly observed that the number of covariates affects the performances. In Table 7, when , the LL and SL methods show good performances.

Figure 8 shows two different simulation configurations for Scenario 1. The purpose of this figure is to illustrate the effect of censorship on curve estimation. Therefore, panel (i) is obtained for 10% censorship, and panel (ii) for 30% censorship. The minimum and maximum of the predicted values obtained from all simulations around the real curve are shown with vertical lines, which reveals the range of variation of the estimators. Comparing panel (i) with panel (ii) shows how this range widens as censorship increases. With the help of the values in Table 6, it can be said that the estimator whose range widens the least is aLL and the one whose range widens the most is RL. It should also be noted that the SL and LL methods showed satisfactory results.
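The range of variation described for Figure 8 can be sketched as a pointwise envelope over the fitted curves. The snippet below assumes that all curves are evaluated on a common grid and stored as lists of equal length; the function name `pointwise_envelope` and the toy values are hypothetical.

```python
def pointwise_envelope(curves):
    """Pointwise minimum and maximum over fitted curves on a common grid."""
    lower = [min(values) for values in zip(*curves)]
    upper = [max(values) for values in zip(*curves)]
    return lower, upper


# Three toy fitted curves evaluated at the same three grid points.
curves = [
    [0.0, 1.0, 2.0],
    [0.2, 0.8, 2.5],
    [-0.1, 1.1, 1.9],
]
lower, upper = pointwise_envelope(curves)
widths = [u - l for l, u in zip(lower, upper)]  # range of variation per grid point
print(lower, upper, widths)
```

A wider envelope at a given grid point indicates larger variation of the estimator across repetitions at that point.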

Figure 9 shows the effect of censorship on the estimated curves for Scenario 2 with a large sample size and relatively few covariates (). Because there are so many data points, the lines appear as a black area. Compared with Figure 8, the effect of censorship is smaller, and the estimated curves are closer to the true curve. In addition, due to the large sample size, the methods produce very similar curves. This can be clearly seen in Table 7, where the performance values are very close to each other. It can therefore be said that the six introduced estimators produce satisfactory results in large samples and are relatively less affected by censorship in this scenario.

Figure 10 consists of four panels (a)–(d) containing four different simulation cases. The first two panels (a and b) show the estimated curves of Scenario 1 for different sample sizes, different censorship levels, and different numbers of explanatory variables. It can be clearly seen that the curves in panel (a) are smoother than in panel (b). This can be explained by the irregular scatter of the synthetic data, which can be observed in all panels. A higher censorship level further distorts the data structure. Similarly, panels (c) and (d) are obtained for Scenario 2, but only to observe the effect of the change in censorship level. However, the effect of the large sample size is clearly visible, and the curves appear smooth in panel (d), despite the deterioration in the data structure. On close inspection, the aLL method gives the curve closest to the true curve. At the same time, the other methods also represent the data satisfactorily.

6. Hepatocellular Carcinoma Dataset

This section contains the estimation of a right-censored partially linear model for real data, the hepatocellular carcinoma dataset, by the six introduced estimators (RL, LL, aLL, SL, MCL, and ENL). Their performances are compared, and the results are presented in Table 8 and Figure 11, Figure 12, Figure 13 and Figure 14. The dataset was collected by [31] to study CXCL17 gene expression in hepatocellular carcinoma.

Table 8.

Scores of the evaluation metrics obtained from the hepatocellular carcinoma dataset.

Figure 11.

Descriptive plots for the right-censored hepatocellular carcinoma dataset.

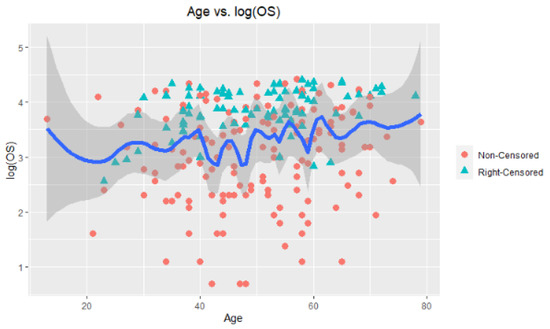

Figure 12.

Plot for nonparametric covariate (age) with hypothetical curve (blue line) with confidence intervals (gray areas).

Figure 13.

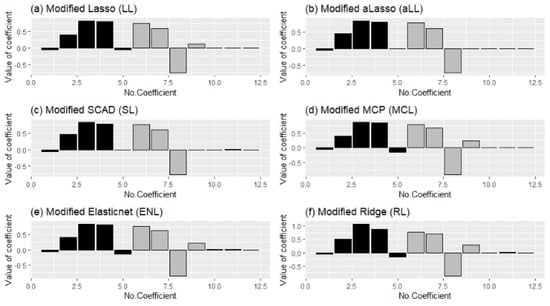

Bar plots of estimated coefficients obtained from the modified six estimators.

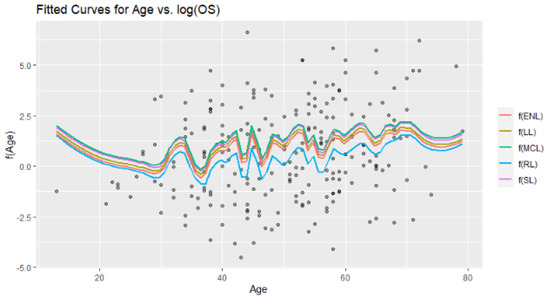

Figure 14.

Fitted curves for a nonparametric component of the model obtained from the six modified estimators.

The aforementioned dataset involves 227 data points and 13 explanatory variables, including age, recurrence-free survival (), gender (), and HBsAg (). Some variables that were obtained from blood tests to measure liver damage include (), (), and . The covariates of tumors detected in the liver are tumor size (), (tumor node and metastasis), and values of genes related to liver cancer: CXCL17T (), CXCL17P (), and CXCL17N (). Note that the logarithm of the overall survival time () is used as a response variable. Note also that the age variable is used as a nonparametric covariate because of its nonlinear structure. The remaining 12 explanatory variables are added to the parametric component of the model. Accordingly, the right-censored partially linear model can be written as follows:

where

is the -dimensional covariate matrix for the parametric component of the model, and is the -dimensional vector of the regression coefficients to be estimated. Note that in the estimation process, cannot be used directly because of censoring. Therefore, synthetic data transformation is applied to as in (6). Note also that the dataset includes 84 right-censored survival times out of 227, which means that the censoring level is approximately 37%. This ratio corresponds to a heavy censoring level in the simulation study. Therefore, the results of the real data example are expected to be in harmony with those of the corresponding simulation configurations (, ).

To describe the hepatocellular carcinoma dataset, Figure 11 and Figure 12 are provided. Figure 11 consists of two panels, (a) and (b). Panel (a) shows a scatter plot of the data points, with censored and noncensored points marked. As can be observed, the dataset contains many right-censored points. To address this problem, synthetic data transformation is applied, and the result is shown in panel (b). The synthetic data assign the value zero to right-censored points and increase the magnitude of the remaining data. The transformation thus aims to make the expected values of and the completely observed response equal (the latter is unknown in real cases). Figure 12 plots the response variable against the nonparametric covariate age to show the nonlinear relationship between them. Accordingly, a hypothetical curve is presented, which supports our claim of a nonlinear relationship.
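The behavior described for panel (b) can be sketched in a few lines. The snippet assumes that the transformation in (6) is of the Koul, Susarla, and Van Ryzin type [6], in which each uncensored observation is divided by a Kaplan–Meier estimate [18] of the probability of not yet being censored and each censored observation is set to zero. The function names, the tie-breaking rule, and the toy data are assumptions made for illustration.

```python
def km_censoring_survival(z, delta):
    """Kaplan-Meier estimate of 1 - G at each observation, G = censoring distribution.

    z     : observed times (minimum of survival and censoring time)
    delta : 1 if the survival time is observed, 0 if it is right-censored
    """
    n = len(z)
    # Sort by time; among ties, uncensored observations come first (assumed rule).
    order = sorted(range(n), key=lambda i: (z[i], 1 - delta[i]))
    surv = [0.0] * n
    prod = 1.0
    for rank, i in enumerate(order, start=1):
        if delta[i] == 0:  # a censored point is an "event" for the censoring variable
            prod *= (n - rank) / (n - rank + 1)
        surv[i] = prod
    return surv


def synthetic_response(z, delta):
    """Zero for censored points, inflated value for uncensored points."""
    surv = km_censoring_survival(z, delta)
    return [z_i / s_i if d_i == 1 else 0.0 for z_i, d_i, s_i in zip(z, delta, surv)]


# Toy data: the second observation is right-censored.
z = [2.0, 3.0, 5.0, 7.0]
delta = [1, 0, 1, 1]
synthetic = synthetic_response(z, delta)
print(synthetic)
```

In the toy data, the censored point is mapped to zero, and the uncensored points observed after it are scaled up to compensate for the information lost to censoring.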

General outcomes for the analysis of the hepatocellular carcinoma dataset are presented in Table 8, which contains the performance scores of the six estimators. Note that, here, the G-score cannot be calculated because the real regression coefficients are unknown. In Table 8, RL gives the highest value because it uses all 12 covariates in model estimation, and sparse and nonzero subsets are considered. The aLL and SL methods provide satisfying values with fewer covariates, especially aLL. Regarding the estimation of the nonparametric component, SL gives the best estimation, which supports our earlier inference. In addition, aLL gives a smaller MSE value than the other four estimators.

The estimated coefficients are shown with bar plots in Figure 13 to illustrate how the methods work and to allow a sound comparison. In panels (b) and (c), the similar behavior of aLL and SL can be observed clearly. The ENL and RL methods also look similar to each other, which is consistent with Table 8.

Figure 14 shows the six fitted curves obtained by the introduced estimators. At first glance, all the fitted curves are very close to each other, which is reflected in the MSE scores given in Table 8. However, the difference between RL and the other five methods can be easily observed. Because the data show excessive variation, the local polynomial method gains importance in the modeling process, since it takes the local densities into account. This can be counted as one of the important contributions of this paper.
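To illustrate what fitting locally means here, the following is a minimal sketch of a local linear smoother, that is, the degree-one special case of the local polynomial method. It smooths a single response against a single covariate; it is not the full semiparametric procedure of the paper. The Gaussian kernel, the bandwidth `h`, and the function name `local_linear_fit` are assumptions chosen for illustration.

```python
import math


def local_linear_fit(t, y, t0, h):
    """Local linear estimate of the regression function at t0.

    Solves a kernel-weighted least squares problem for a line centered at t0
    and returns its intercept, using the closed-form solution.
    """
    s0 = s1 = s2 = r0 = r1 = 0.0
    for t_i, y_i in zip(t, y):
        d = t_i - t0
        w = math.exp(-0.5 * (d / h) ** 2)  # Gaussian kernel weight (assumed kernel)
        s0 += w
        s1 += w * d
        s2 += w * d * d
        r0 += w * y_i
        r1 += w * d * y_i
    return (s2 * r0 - s1 * r1) / (s0 * s2 - s1 ** 2)


# Sanity check on an exactly linear signal: the local linear fit reproduces it.
t = [i / 10 for i in range(11)]
y = [2.0 + 3.0 * t_i for t_i in t]
fit = local_linear_fit(t, y, t0=0.35, h=0.2)
print(fit)
```

Observations close to `t0` receive larger kernel weights, so regions where the data are dense or highly variable are fitted by their own nearby points rather than by a single global curve.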

7. Conclusions

The results of the paper obtained from the simulation study are given in Table 2, Table 3, Table 4, Table 5, Table 6 and Table 7 and Figure 4, Figure 5, Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10. The analysis is made for the parametric and nonparametric components of the model individually. The advantage of the simulation study is that the real values of the regression coefficients are known; the accuracy and sensitivity of the estimators are thus evaluated using the confusion matrix in Table 1. From the results, the aLL and SL estimators showed the best performance and gave satisfactory results for the model estimation. In addition, the behaviors of the methods are inspected with respect to three factors, which are sample sizes (), number of covariates (), and censoring level (). These effects can also be observed in the figures. A real data example using the hepatocellular carcinoma dataset is analyzed using the introduced estimators. The results for that dataset are compared with the related simulation configurations. Based on these results, the concluding remarks are as follows:

- From the simulation results regarding the parametric component estimation, Table 2, Table 3, Table 4 and Table 5 show that the aLL and SL methods give satisfying results in terms of the metrics RMSE, , accuracy, and G-score. In more detail, for small sample sizes and low censoring levels, SL generally shows better performance than the other five methods. However, for the problematic scenarios, the aLL estimator is the best in both estimation performance and making a true selection between zero and nonzero subsets. Figure 4 and Figure 5 support these inferences.

- In addition to the introduced evaluation metrics, the selection frequency of the estimators is inspected for the simulation study, and the results are shown in the bar plots given in Figure 6 and Figure 7. These figures demonstrate the consistency of the estimators in terms of their selection of the sparse and nonzero subsets for each coefficient. Under heavy censorship, it can be seen that LL, aLL, and SL gave the best performances. The ENL and MCL estimators did not perform well in this case.

- The six introduced estimators perform similarly in the estimation of the nonparametric component. Corresponding results are given in Table 6 and Table 7 and Figure 8, Figure 9 and Figure 10. Note that Figure 8 and Figure 9 are drawn to show the individual fitted curves obtained from each simulation, which provides information about the variation of the estimators. Although the estimators give similar evaluation scores and similar fitted curves (as seen in Figure 10), aLL and SL are the best.

- In the hepatocellular carcinoma dataset analysis, the outcomes are in harmony with the corresponding simulation scenarios. The results are provided in Table 8 and Figure 13 and Figure 14. Similar to the simulation study, SL and aLL show the best performance. However, from Figure 14, it can be seen that the fitted curves are very close to each other, which can be explained by the large sample size. The real data study demonstrates that all six estimators give considerably good model estimates, which underlines the contribution of the paper.

Finally, from the results of both the simulation and real data studies, the introduced six estimators for the right-censored partially linear models based on penalty and shrinkage strategies are compared, and results are presented. It is found that the adaptive lasso (aLL) and SCAD (SL) methods are more resistant than the other four estimators against the effects of censorship and the number of covariates. In general, the ridge (RL) estimator showed poor performance. On the other hand, the lasso (LL), MCP (MCL), and elasticnet (ENL) methods provided good performance for both the parametric and nonparametric components. This study recommends the aLL and SL estimators for the problematic scenarios.

Author Contributions

Conceptualization: S.E.A. and D.A.; methodology: S.E.A. and D.A.; formal analysis and investigation: D.A. and E.Y.; writing (original draft preparation): D.A.; writing (review and editing): S.E.A. and D.A.; data curation: E.Y.; visualization: E.Y.; software: E.Y.; supervision: S.E.A. and D.A.; funding acquisition: E.Y.; resources: S.E.A. and D.A. All authors have read and agreed to the published version of the manuscript.

Funding

The research of S. Ejaz Ahmed was supported by the Natural Sciences and the Engineering Research Council (NSERC) of Canada.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The simulation dataset can be regenerated with the code provided at https://github.com/yilmazersin13?tab=repositories (accessed on 31 August 2022). The hepatocellular carcinoma dataset is publicly available in the R package “asaur”.

Acknowledgments

We would like to thank the editor and referees. The research of S. Ejaz Ahmed was supported by the Natural Sciences and the Engineering Research Council (NSERC) of Canada.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Proof of Lemma 1

Proof.

From A(i) and A(ii) given in Assumption 1, we obtain

This result shows that Lemma 1 has been proven. □

Appendix A.2. Derivation of Equations (15) and (16)

From Equation (14), the minimum criterion is rewritten as

By solving this equation with a partial derivative of (A1) with respect to , Equation (15) can be obtained as follows:

Thus, Equation (15) is obtained. By using , and the corresponding smoothing matrix , a modified local polynomial estimator of is given by Equation (16).

Appendix A.3. Proof of Theorem 1

To obtain the ridge-penalty-based local polynomial estimators, the key point is to calculate the partial residuals and minimize (18). From that, as mentioned before, and are calculated, and the minimization of (18) is realized based on the synthetic response variable as below:

References

- Speckman, P. Kernel smoothing in partial linear models. J. R. Stat. Soc. Ser. B 1988, 50, 413–436.

- Green, P.J.; Silverman, B.W. Nonparametric Regression and Generalized Linear Models: A Roughness Penalty Approach; CRC Press: Boca Raton, FL, USA, 1993.

- Ruppert, D.; Wand, M.P.; Carroll, R.J. Semiparametric Regression (No. 12); Cambridge University Press: Cambridge, UK, 2003.

- Miller, R.G. Least squares regression with censored data. Biometrika 1976, 63, 449–464.

- Buckley, J.; James, I. Linear regression with censored data. Biometrika 1979, 66, 429–436.

- Koul, H.; Susarla, V.; Van Ryzin, J. Regression Analysis with Randomly Right-Censored Data. Ann. Stat. 1981, 9, 1276–1288.

- Lai, T.; Ying, Z.; Zheng, Z. Asymptotic Normality of a Class of Adaptive Statistics with Applications to Synthetic Data Methods for Censored Regression. J. Multivar. Anal. 1995, 52, 259–279.

- Fan, J.; Gijbels, I. Censored regression: Local linear approximations and their applications. J. Am. Stat. Assoc. 1994, 89, 560–570.

- Guessoum, Z.; Saïd, E.O. Kernel regression uniform rate estimation for censored data under α-mixing condition. Electron. J. Stat. 2010, 4, 117–132.

- Orbe, J.; Ferreira, E.; Núñez-Antón, V. Censored partial regression. Biostatistics 2003, 4, 109–121.

- Qin, G.; Jing, B.-Y. Censored Partial Linear Models and Empirical Likelihood. J. Multivar. Anal. 2001, 78, 37–61.

- Aydin, D.; Yilmaz, E. Modified estimators in semiparametric regression models with right-censored data. J. Stat. Comput. Simul. 2018, 88, 1470–1498.

- Raheem, S.E.; Ahmed, S.E.; Doksum, K.A. Absolute penalty and shrinkage estimation in partially linear models. Comput. Stat. Data Anal. 2012, 56, 874–891.

- Ahmed, S.E. Penalty, Shrinkage and Pretest Strategies: Variable Selection and Estimation; Springer: New York, NY, USA, 2014.

- Stute, W. Consistent Estimation Under Random Censorship When Covariables Are Present. J. Multivar. Anal. 1993, 45, 89–103.

- Stute, W. Nonlinear censored regression. Statistica Sinica 1999, 9, 1089–1102.

- Wei, L.J.; Ying, Z.; Lin, D.Y. Linear regression analysis of censored survival data based on rank tests. Biometrika 1990, 77, 845–851.

- Kaplan, E.L.; Meier, P. Nonparametric estimation from incomplete observations. J. Am. Stat. Assoc. 1958, 53, 457–481.

- Fan, J.; Gasser, T.; Gijbels, I.; Brockmann, M.; Engel, J. Local Polynomial Regression: Optimal Kernels and Asymptotic Minimax Efficiency. Ann. Inst. Stat. Math. 1997, 49, 79–99.

- Heckman, N.E. Spline smoothing in a partly linear model. J. R. Stat. Soc. Ser. B 1986, 48, 244–248.

- Rice, J. Convergence rates for partially splined models. Stat. Probab. Lett. 1986, 4, 203–208.

- Ahmed, E.S.; Raheem, E.; Hossain, S. Absolute penalty estimation. In International Encyclopedia of Statistical Science; Springer: Berlin/Heidelberg, Germany, 2011.

- Frank, L.E.; Friedman, J.H. A Statistical View of Some Chemometrics Regression Tools. Technometrics 1993, 35, 109–135.

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B 1996, 58, 267–288.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Fan, J.; Li, R. Variable Selection via Nonconcave Penalized Likelihood and its Oracle Properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Zou, H.; Hastie, T. Regularization and variable selection via the elastic net. J. R. Stat. Soc. Ser. B 2005, 67, 301–320.

- Zhang, C.-H. Nearly unbiased variable selection under minimax concave penalty. Ann. Stat. 2010, 38, 894–942.

- Aydın, D.; Ahmed, S.E.; Yılmaz, E. Right-Censored Time Series Modeling by Modified Semi-Parametric A-Spline Estimator. Entropy 2021, 23, 1586.

- Hurvich, C.M.; Simonoff, J.S.; Tsai, C.-L. Smoothing parameter selection in nonparametric regression using an improved Akaike information criterion. J. R. Stat. Soc. Ser. B 1998, 60, 271–293.

- Li, L.; Yan, J.; Xu, J.; Liu, C.-Q.; Zhen, Z.-J.; Chen, H.-W.; Ji, Y.; Wu, Z.-P.; Hu, J.-Y.; Zheng, L.; et al. CXCL17 Expression Predicts Poor Prognosis and Correlates with Adverse Immune Infiltration in Hepatocellular Carcinoma. PLoS ONE 2014, 9, e110064.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).