Trajectory Tracking within a Hierarchical Primitive-Based Learning Approach

Abstract

1. Introduction

- Capability to handle multivariable (MIMO) systems at level L1 and MIMO closed-loop control systems (CLCSs) at level L2, as demonstrated in previous studies.

- Theoretical convergence and stability guarantees at all learning levels, obtained through different mechanisms: common data-driven assumptions at level L1, data reusability at level L2, and bounded approximation errors at level L3.

- Ability to deal with desired trajectories of varying length at level L3.

- Ability to handle inequality-type amplitude and rate constraints on the output trajectory indirectly, in a soft manner, by clipping the desired trajectory at level L3 and by accurate matching of the linear reference model at level L1.

- Intelligent behavior based on memorization, learning, feedback at different levels, adaptability, robustness, and generalization from previously accumulated experience to infer optimal behavior for new, unseen tasks. These traits give the framework its cognitive character.
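
The level-L3 mechanisms listed above can be illustrated with a minimal Python/NumPy sketch. These are clipping the desired trajectory, approximating a new task from previously learned primitives, and coping with a different trajectory length. The sketch is not the author's algorithm. It rests on several assumptions:

- The closed loop obtained at level L1 is taken to match a hypothetical first-order reference model exactly.
- Hypothetical delayed-step signals stand in for the primitives that level L2 would learn with EDMFILC.
- Ordinary least squares is swapped in as the level-L3 approximation step.

The functions `clip_trajectory`, `reference_model` and `l3_reference_input`, and all numeric values, are illustrative.

```python
import numpy as np


def clip_trajectory(y_d, y_min, y_max, max_rate):
    """Clip a desired trajectory to amplitude and rate bounds (illustrative)."""
    y = np.clip(np.asarray(y_d, dtype=float), y_min, y_max)  # amplitude saturation
    out = np.empty_like(y)
    out[0] = y[0]
    for k in range(1, len(y)):
        # Move toward y[k] by at most max_rate per sample; since out[k-1] and
        # y[k] both lie in [y_min, y_max], out[k] stays in that interval too.
        step = np.clip(y[k] - out[k - 1], -max_rate, max_rate)
        out[k] = out[k - 1] + step
    return out


def reference_model(r, a=0.8):
    """Hypothetical first-order reference model y(k+1) = a*y(k) + (1-a)*r(k), y(0) = 0.

    Stands in for a closed loop ASSUMED to match the linear reference model perfectly.
    """
    y = np.zeros(len(r))
    for k in range(len(r) - 1):
        y[k + 1] = a * y[k] + (1.0 - a) * r[k]
    return y


def l3_reference_input(y_d, R_prim, Y_prim):
    """Fit y_d with output primitives, then reuse the weights on input primitives."""
    n = len(y_d)
    # Causal system, zero initial state: the first n output samples depend only
    # on the first n input samples, so truncated primitive pairs stay consistent.
    # (Assumes n <= primitive length.)
    R_n, Y_n = R_prim[:n], Y_prim[:n]
    alpha, *_ = np.linalg.lstsq(Y_n, y_d, rcond=None)  # least-squares weights
    return R_n @ alpha, Y_n @ alpha


# Hypothetical primitives: unit steps delayed by multiples of 5 samples.
n_prim = 200
t = np.arange(n_prim)
R_prim = np.column_stack([(t >= s).astype(float) for s in range(0, n_prim, 5)])
Y_prim = np.column_stack([reference_model(R_prim[:, j]) for j in range(R_prim.shape[1])])

# New, shorter task (150 samples) whose raw amplitude violates the bounds.
k = np.arange(150)
y_d = clip_trajectory(1.5 * np.sin(2 * np.pi * k / 150), -1.0, 1.0, 0.05)

r, y_hat = l3_reference_input(y_d, R_prim, Y_prim)
y = reference_model(r)  # closed-loop response to the composed reference input
print("approximation RMSE:", np.sqrt(np.mean((y_hat - y_d) ** 2)))
print("max |y - y_hat|:", np.max(np.abs(y - y_hat)))
```

Under these assumptions, the matched closed loop is linear and causal. Its response to the composed reference input `r` therefore coincides with the primitive approximation `y_hat`, and the tracking error reduces to the approximation error. This is one way to read why a boundedness assumption on the approximation error can support guarantees at level L3. A real closed loop matches the reference model only approximately, so the equality would then hold only approximately.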

2. Model Reference Control with Virtual State-Feedback

2.1. The Unknown Dynamic System Observability

2.2. The Reference Model

2.3. The Model Reference Control

3. The Active Temperature Control System

3.1. System Description

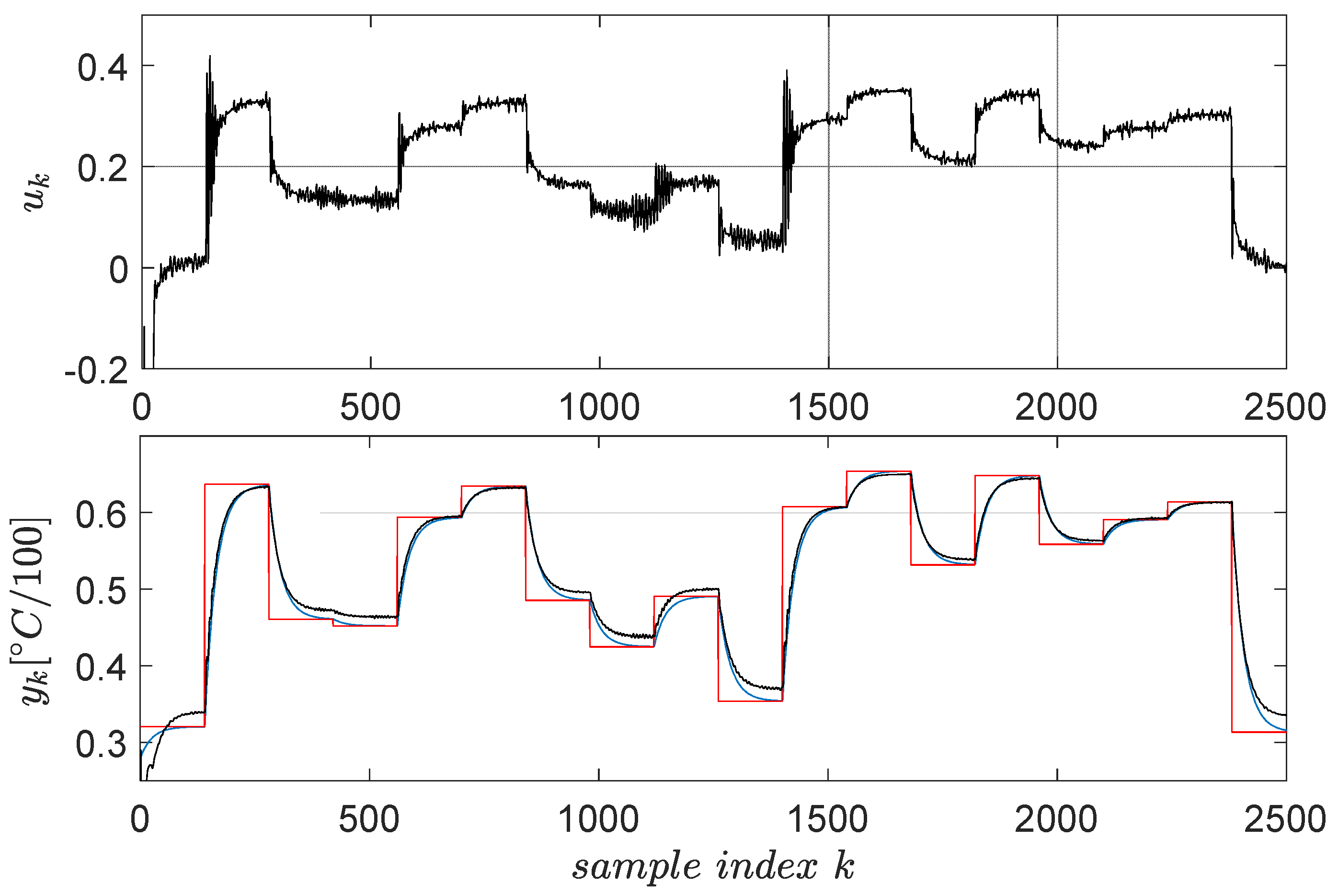

3.2. ATCS Input–Output Data Collection for Learning Low-Level L1 Control Dedicated to Model Reference Tracking

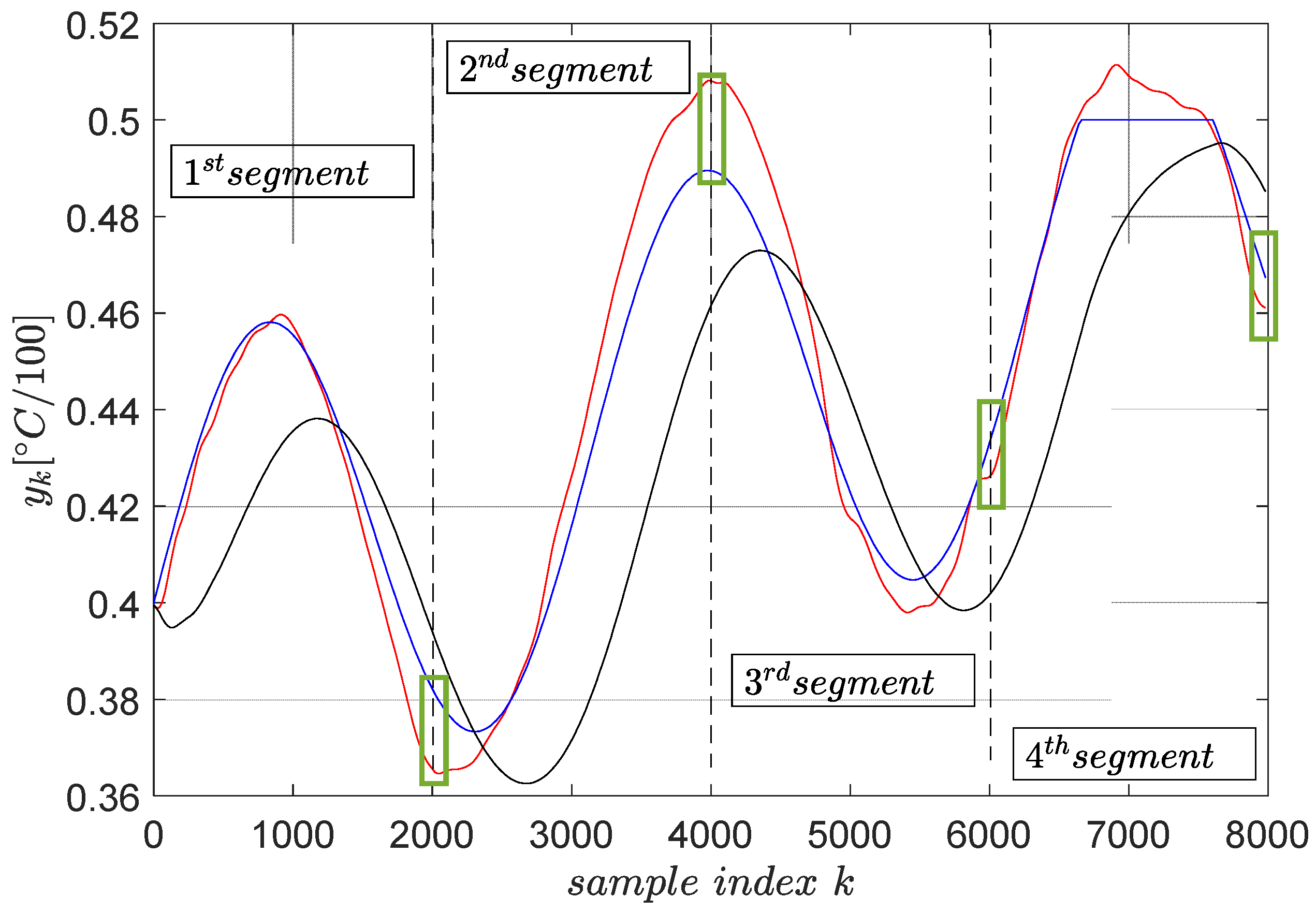

3.3. Intermediate L2 Level Primitives Learning with EDMFILC

3.4. Optimal Tracking Using Primitives at the Uppermost Level L3

4. The Electrical Braking System (EBS)

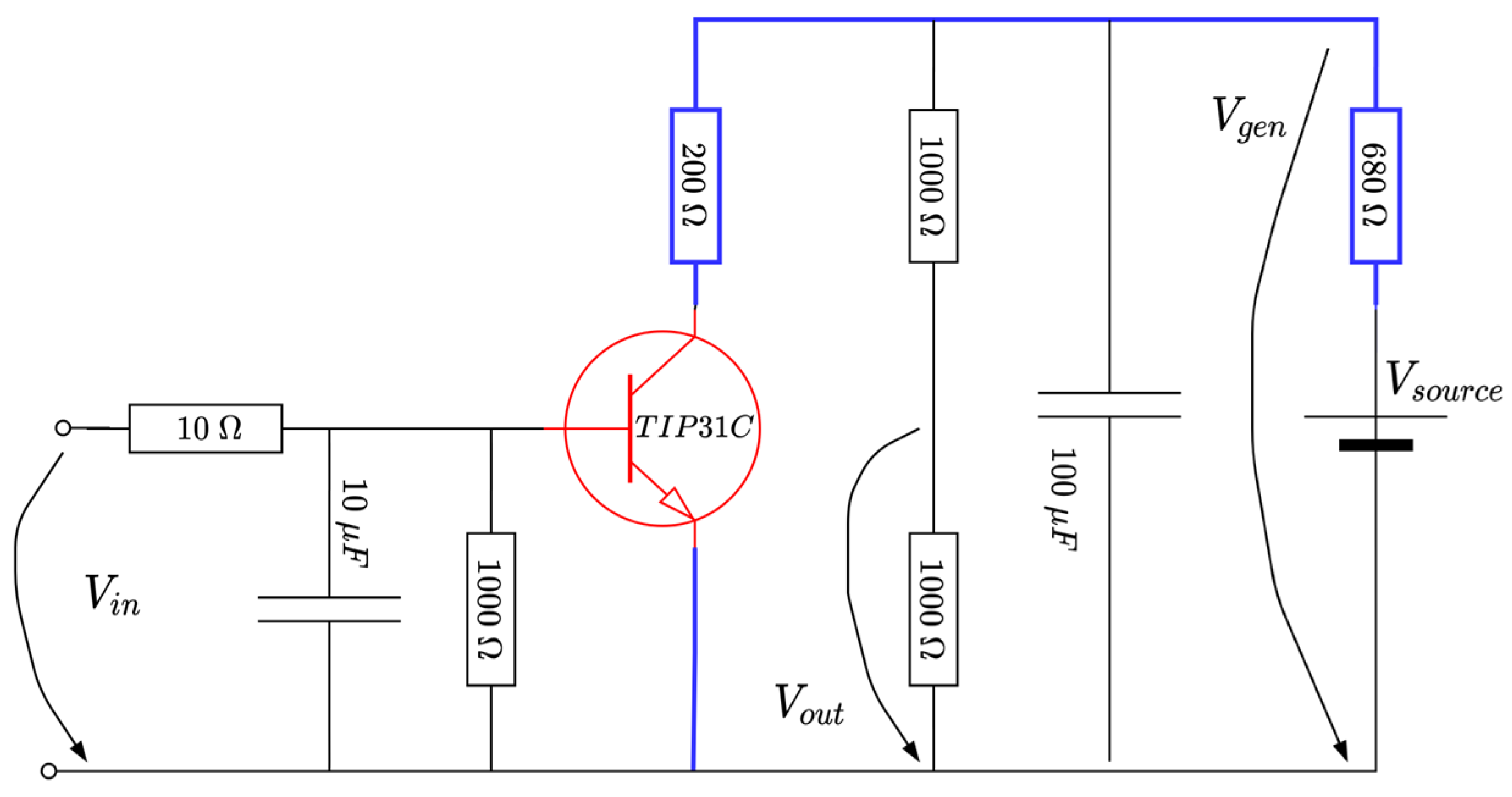

4.1. System Description

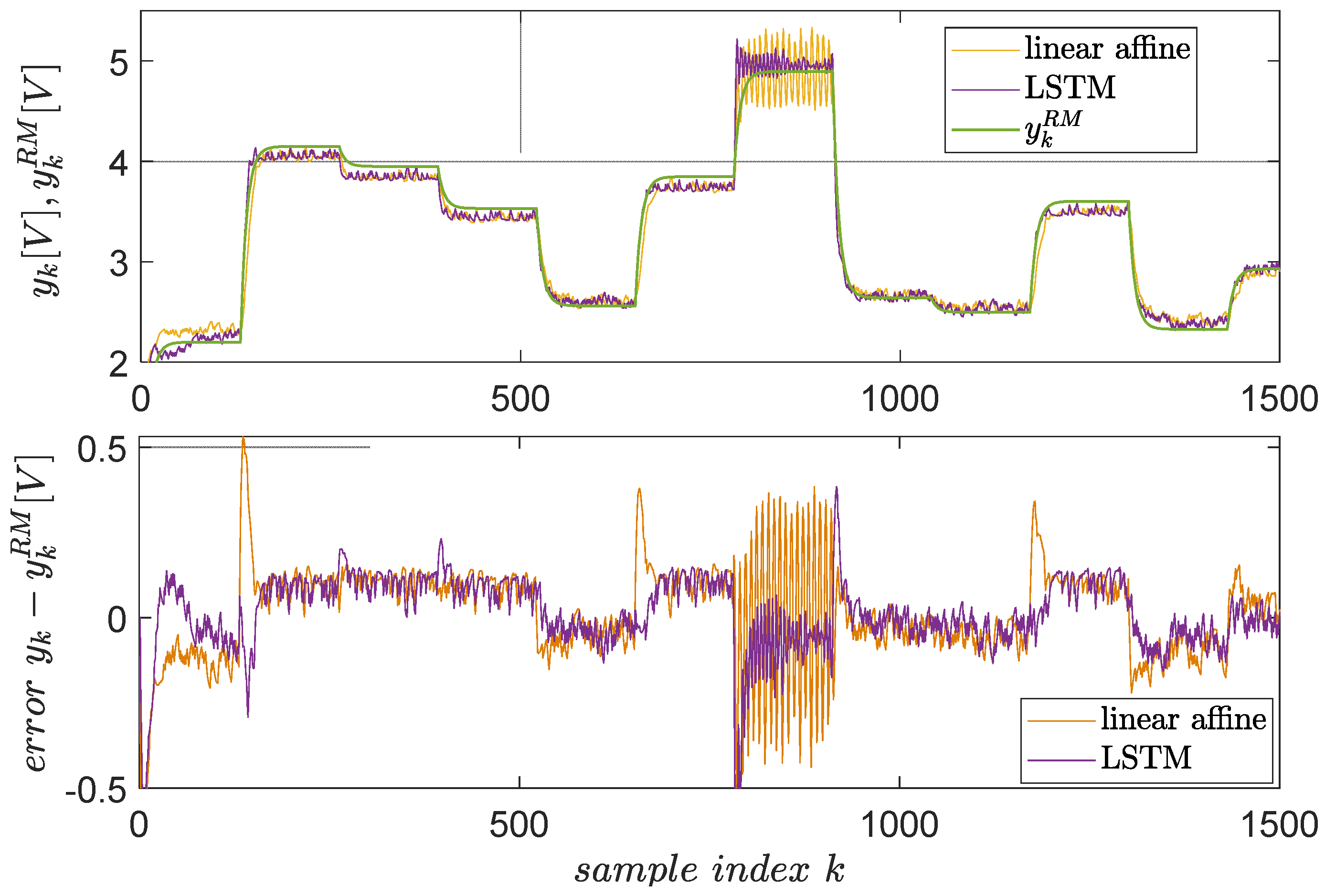

4.2. EBS Input–Output Data Collection for Learning Low-Level L1 Control Dedicated to Model Reference Tracking

4.3. Intermediate L2 Level Primitives Learning with EDMFILC

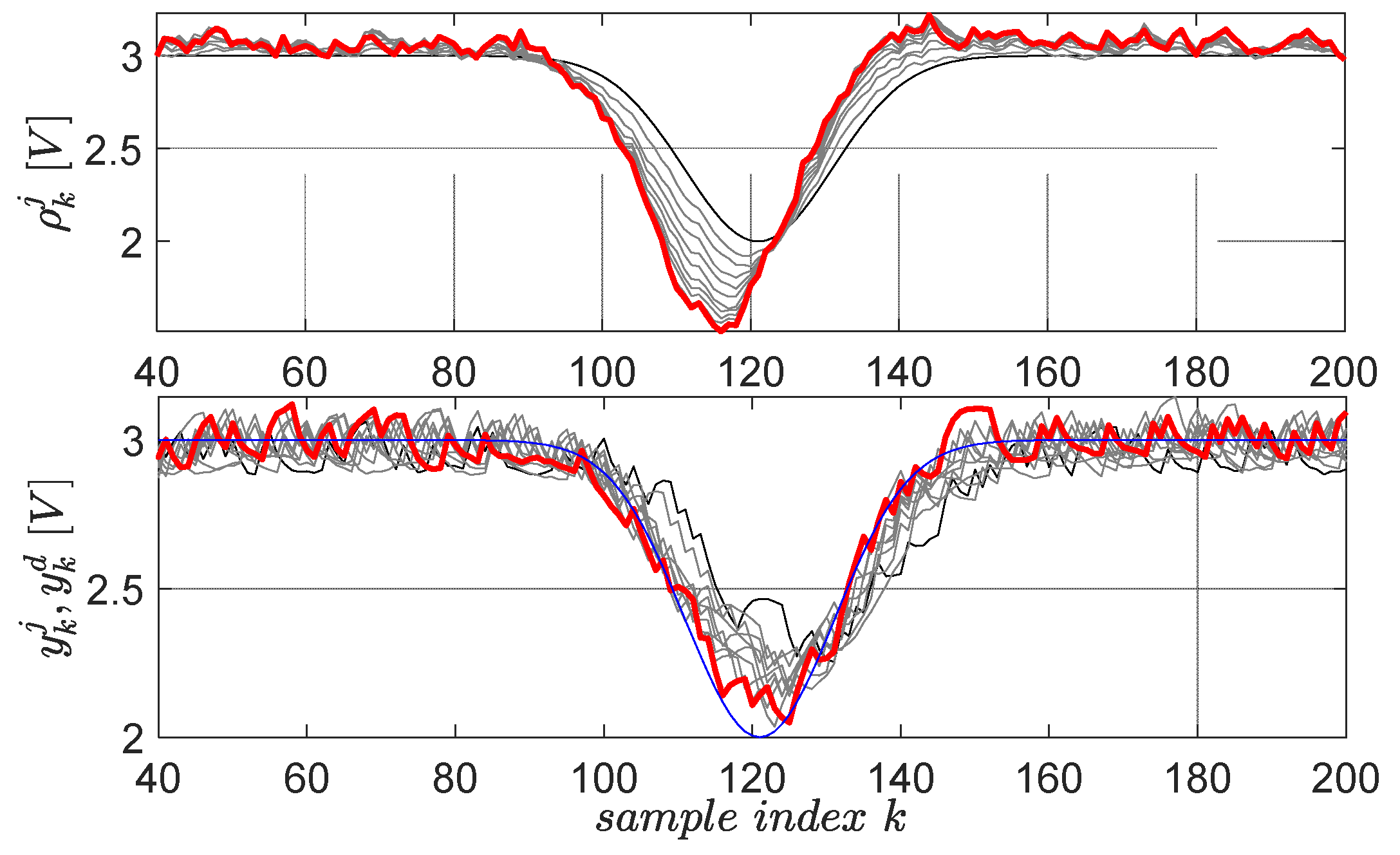

4.4. Optimal Tracking Using Primitives at the Uppermost Level L3

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lala, T.; Radac, M.-B. Learning to extrapolate an optimal tracking control behavior towards new tracking tasks in a hierarchical primitive-based framework. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; pp. 421–427.

- Radac, M.-B.; Lala, T. A hierarchical primitive-based learning tracking framework for unknown observable systems based on a new state representation. In Proceedings of the 2021 European Control Conference (ECC), Delft, The Netherlands, 29 June–2 July 2021; pp. 1472–1478.

- Radac, M.-B.; Lala, T. Hierarchical cognitive control for unknown dynamic systems tracking. Mathematics 2021, 9, 2752.

- Radac, M.-B.; Borlea, A.I. Virtual state feedback reference tuning and value iteration reinforcement learning for unknown observable systems control. Energies 2021, 14, 1006.

- Lala, T.; Chirla, D.-P.; Radac, M.-B. Model Reference Tracking Control Solutions for a Visual Servo System Based on a Virtual State from Unknown Dynamics. Energies 2021, 15, 267.

- Radac, M.-B.; Lala, T. Robust Control of Unknown Observable Nonlinear Systems Solved as a Zero-Sum Game. IEEE Access 2020, 8, 214153–214165.

- Campi, M.C.; Lecchini, A.; Savaresi, S.M. Virtual reference feedback tuning: A direct method for the design of feedback controllers. Automatica 2002, 38, 1337–1346.

- Formentin, S.; Savaresi, S.M.; Del Re, L. Non-iterative direct data-driven controller tuning for multivariable systems: Theory and application. IET Control Theory Appl. 2012, 6, 1250–1257.

- Eckhard, D.; Campestrini, L.; Christ Boeira, E. Virtual disturbance feedback tuning. IFAC J. Syst. Control 2018, 3, 23–29.

- Matsui, Y.; Ayano, H.; Masuda, S.; Nakano, K. A Consideration on Approximation Methods of Model Matching Error for Data-Driven Controller Tuning. SICE J. Control. Meas. Syst. Integr. 2020, 13, 291–298.

- Chiluka, S.K.; Ambati, S.R.; Seepana, M.M.; Babu Gara, U.B. A novel robust Virtual Reference Feedback Tuning approach for minimum and non-minimum phase systems. ISA Trans. 2021, 115, 163–191.

- D’Amico, W.; Farina, M.; Panzani, G. Advanced control based on Recurrent Neural Networks learned using Virtual Reference Feedback Tuning and application to an Electronic Throttle Body (with supplementary material). arXiv 2021, arXiv:2103.02567.

- Buşoniu, L.; de Bruin, T.; Tolić, D.; Kober, J.; Palunko, I. Reinforcement learning for control: Performance, stability, and deep approximators. Annu. Rev. Control 2018, 46, 8–28.

- Xue, W.; Lian, B.; Fan, J.; Kolaric, P.; Chai, T.; Lewis, F.L. Inverse Reinforcement Q-Learning Through Expert Imitation for Discrete-Time Systems. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–14.

- Treesatayapun, C. Knowledge-based reinforcement learning controller with fuzzy-rule network: Experimental validation. Neural Comput. Appl. 2019, 32, 9761–9775.

- Zhao, B.; Liu, D.; Luo, C. Reinforcement Learning-Based Optimal Stabilization for Unknown Nonlinear Systems Subject to Inputs with Uncertain Constraints. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 4330–4340.

- Luo, B.; Yang, Y.; Liu, D. Policy Iteration Q-Learning for Data-Based Two-Player Zero-Sum Game of Linear Discrete-Time Systems. IEEE Trans. Cybern. 2021, 51, 3630–3640.

- Wang, W.; Chen, X.; Fu, H.; Wu, M. Data-driven adaptive dynamic programming for partially observable nonzero-sum games via Q-learning method. Int. J. Syst. Sci. 2019, 50, 1338–1352.

- Andrychowicz, M.; Wolski, F.; Ray, A.; Schneider, J.; Fong, R.; Welinder, P.; McGrew, B.; Tobin, J.; Abbeel, P.; Zaremba, W. Hindsight experience replay. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5049–5059.

- Kong, W.; Zhou, D.; Yang, Z.; Zhao, Y.; Zhang, K. UAV autonomous aerial combat maneuver strategy generation with observation error based on state-adversarial deep deterministic policy gradient and inverse reinforcement learning. Electronics 2020, 9, 1121.

- Fujimoto, S.; Van Hoof, H.; Meger, D. Addressing Function Approximation Error in Actor-Critic Methods. In Proceedings of the 35th International Conference on Machine Learning, ICML, Stockholm, Sweden, 10–15 July 2018; Volume 4, pp. 2587–2601.

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the 35th International Conference on Machine Learning, ICML, Stockholm, Sweden, 10–15 July 2018; Volume 5, pp. 2976–2989.

- Zhao, D.; Zhang, Q.; Wang, D.; Zhu, Y. Experience Replay for Optimal Control of Nonzero-Sum Game Systems with Unknown Dynamics. IEEE Trans. Cybern. 2016, 46, 854–865.

- Wei, Q.; Liu, D.; Lin, Q.; Song, R. Adaptive Dynamic Programming for Discrete-Time Zero-Sum Games. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 957–969.

- Ni, Z.; He, H.; Zhao, D.; Xu, X.; Prokhorov, D.V. GrDHP: A general utility function representation for dual heuristic dynamic programming. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 614–627.

- Mu, C.; Ni, Z.; Sun, C.; He, H. Data-Driven Tracking Control with Adaptive Dynamic Programming for a Class of Continuous-Time Nonlinear Systems. IEEE Trans. Cybern. 2017, 47, 1460–1470.

- Deptula, P.; Rosenfeld, J.A.; Kamalapurkar, R.; Dixon, W.E. Approximate Dynamic Programming: Combining Regional and Local State Following Approximations. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2154–2166.

- Sardarmehni, T.; Heydari, A. Suboptimal Scheduling in Switched Systems with Continuous-Time Dynamics: A Least Squares Approach. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2167–2178.

- Guo, W.; Si, J.; Liu, F.; Mei, S. Policy Approximation in Policy Iteration Approximate Dynamic Programming for Discrete-Time Nonlinear Systems. IEEE Trans. Neural Netw. Learn. Syst. 2017, 29, 2794–2807.

- Al-Dabooni, S.; Wunsch, D. The Boundedness Conditions for Model-Free HDP(λ). IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 1928–1942.

- Luo, B.; Yang, Y.; Liu, D.; Wu, H.N. Event-Triggered Optimal Control with Performance Guarantees Using Adaptive Dynamic Programming. IEEE Trans. Neural Netw. Learn. Syst. 2020, 31, 76–88.

- Liu, Y.; Zhang, H.; Yu, R.; Xing, Z. H∞ Tracking Control of Discrete-Time System with Delays via Data-Based Adaptive Dynamic Programming. IEEE Trans. Syst. Man, Cybern. Syst. 2020, 50, 4078–4085.

- Na, J.; Lv, Y.; Zhang, K.; Zhao, J. Adaptive Identifier-Critic-Based Optimal Tracking Control for Nonlinear Systems with Experimental Validation. IEEE Trans. Syst. Man, Cybern. Syst. 2022, 52, 459–472.

- Staessens, T.; Lefebvre, T.; Crevecoeur, G. Adaptive control of a mechatronic system using constrained residual reinforcement learning. IEEE Trans. Ind. Electron. 2022, 69, 10447–10456.

- Wang, K.; Mu, C. Asynchronous learning for actor-critic neural networks and synchronous triggering for multiplayer system. ISA Trans. 2022.

- Hu, X.; Zhang, H.; Ma, D.; Wang, R.; Wang, T.; Xie, X. Real-Time Leak Location of Long-Distance Pipeline Using Adaptive Dynamic Programming. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–10.

- Yu, X.; Hou, Z.; Polycarpou, M.M. A Data-Driven ILC Framework for a Class of Nonlinear Discrete-Time Systems. IEEE Trans. Cybern. 2021, 52, 6143–6157.

- Zhang, Y.; Liu, J.; Ruan, X. Equivalence and convergence of two iterative learning control schemes with state feedback. Int. J. Robust Nonlinear Control 2021, 32, 1561–1582.

- Meng, D.; Zhang, J. Design and Analysis of Data-Driven Learning Control: An Optimization-Based Approach. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–15.

- Chi, R.; Wei, Y.; Wang, R.; Hou, Z. Observer based switching ILC for consensus of nonlinear nonaffine multi-agent systems. J. Frankl. Inst. 2021, 358, 6195–6216.

- Ma, J.; Cheng, Z.; Zhu, H.; Li, X.; Tomizuka, M.; Lee, T.H. Convex Parameterization and Optimization for Robust Tracking of a Magnetically Levitated Planar Positioning System. IEEE Trans. Ind. Electron. 2022, 69, 3798–3809.

- Shen, M.; Wu, X.; Park, J.H.; Yi, Y.; Sun, Y. Iterative Learning Control of Constrained Systems with Varying Trial Lengths Under Alignment Condition. IEEE Trans. Neural Netw. Learn. Syst. 2021, 1–7.

- Chi, R.; Li, H.; Shen, D.; Hou, Z.; Huang, B. Enhanced P-type Control: Indirect Adaptive Learning from Set-point Updates. IEEE Trans. Autom. Control 2022.

- Xing, J.; Lin, N.; Chi, R.; Huang, B.; Hou, Z. Data-driven nonlinear ILC with varying trial lengths. J. Frankl. Inst. 2020, 357, 10262–10287.

- Yonezawa, A.; Yonezawa, H.; Kajiwara, I. Parameter tuning technique for a model-free vibration control system based on a virtual controlled object. Mech. Syst. Signal Process. 2022, 165, 108313.

- Zhang, H.; Chi, R.; Hou, Z.; Huang, B. Quantisation compensated data-driven iterative learning control for nonlinear systems. Int. J. Syst. Sci. 2021, 53, 275–290.

- Fenyes, D.; Nemeth, B.; Gaspar, P. Data-driven modeling and control design in a hierarchical structure for a variable-geometry suspension test bed. In Proceedings of the 2021 60th IEEE Conference on Decision and Control (CDC), Austin, TX, USA, 14–17 December 2021.

- Wu, B.; Gupta, J.K.; Kochenderfer, M. Model primitives for hierarchical lifelong reinforcement learning. Auton. Agent. Multi. Agent. Syst. 2020, 34, 28.

- Li, J.; Li, Z.; Li, X.; Feng, Y.; Hu, Y.; Xu, B. Skill Learning Strategy Based on Dynamic Motion Primitives for Human-Robot Cooperative Manipulation. IEEE Trans. Cogn. Dev. Syst. 2021, 13, 105–117.

- Kim, Y.L.; Ahn, K.H.; Song, J.B. Reinforcement learning based on movement primitives for contact tasks. Robot. Comput. Integr. Manuf. 2020, 62, 101863.

- Camci, E.; Kayacan, E. Learning motion primitives for planning swift maneuvers of quadrotor. Auton. Robots 2019, 43, 1733–1745.

- Yang, C.; Chen, C.; He, W.; Cui, R.; Li, Z. Robot Learning System Based on Adaptive Neural Control and Dynamic Movement Primitives. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 777–787.

- Zhou, Y.; Vamvoudakis, K.G.; Haddad, W.M.; Jiang, Z.P. A secure control learning framework for cyber-physical systems under sensor and actuator attacks. IEEE Trans. Cybern. 2020, 51, 4648–4660.

- Niu, H.; Sahoo, A.; Bhowmick, C.; Jagannathan, S. An optimal hybrid learning approach for attack detection in linear networked control systems. IEEE/CAA J. Autom. Sin. 2019, 6, 1404–1416.

- Jafari, M.; Xu, H.; Carrillo, L.R.G. A biologically-inspired reinforcement learning based intelligent distributed flocking control for multi-agent systems in presence of uncertain system and dynamic environment. IFAC J. Syst. Control 2020, 13, 100096.

- Marvi, Z.; Kiumarsi, B. Barrier-Certified Learning-Enabled Safe Control Design for Systems Operating in Uncertain Environments. IEEE/CAA J. Autom. Sin. 2021, 9, 437–449.

- Rosolia, U.; Lian, Y.; Maddalena, E.; Ferrari-Trecate, G.; Jones, C.N. On the Optimality and Convergence Properties of the Iterative Learning Model Predictive Controller. IEEE Trans. Autom. Control 2022.

- Radac, M.-B.; Borlea, A.-B. Learning model-free reference tracking control with affordable systems. In Intelligent Techniques for Efficient Use of Valuable Resources-Knowledge and Cultural Resources; Springer Book Series; Springer: Berlin/Heidelberg, Germany, 2022; in press.

- Borlea, A.-B.; Radac, M.-B. A hierarchical learning framework for generalizing tracking control behavior of a laboratory electrical system. In Proceedings of the 17th IEEE International Conference on Control & Automation (IEEE ICCA 2022), Naples, Italy, 27–30 June 2022; pp. 231–236.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Radac, M.-B. Trajectory Tracking within a Hierarchical Primitive-Based Learning Approach. Entropy 2022, 24, 889. https://doi.org/10.3390/e24070889
