Counterfactual Supervision-Based Information Bottleneck for Out-of-Distribution Generalization

Abstract

1. Introduction

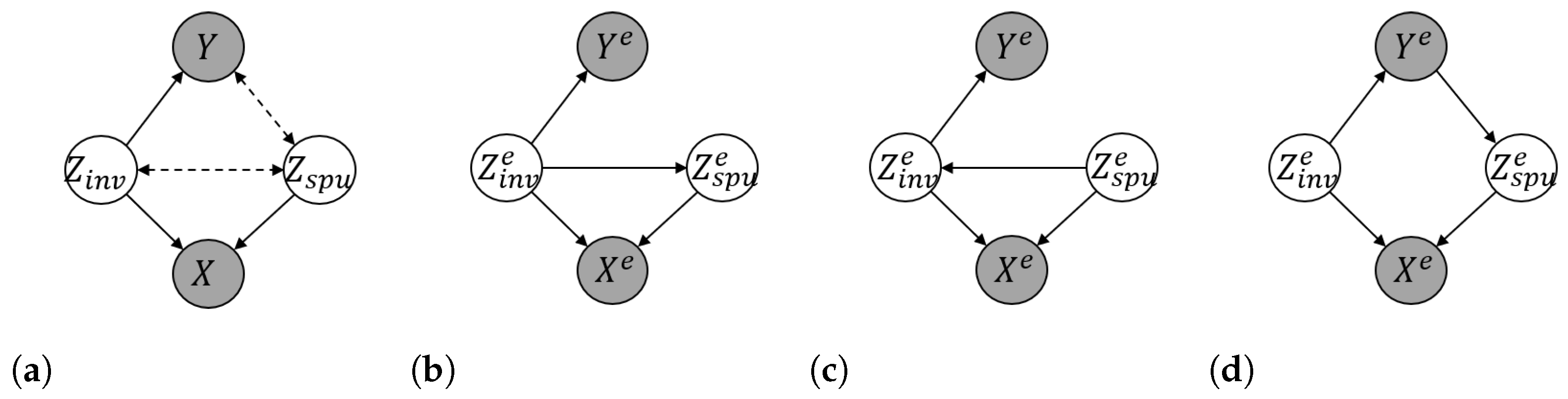

2. OOD Generalization: Background and Formulations

2.1. Background on Structural Equation Models

2.2. Formulations of OOD Generalization

2.3. Background on IRM and IB-IRM

3. OOD Generalization: Assumptions and Learnability

3.1. Assumptions about the Training Environments

3.2. Assumptions about the OOD Environments

4. Failures of IRM and IB-IRM

4.1. Failure under Spurious Correlation 2

4.2. Failure under Spurious Correlation 3

4.3. Understanding the Failures

5. Counterfactual Supervision-Based Information Bottleneck

| Algorithm 1 Counterfactual Supervision-based Information Bottleneck (CSIB) |

| Input:, , , , , and is an example randomly drawn from . |

| Output: classifier , feature extractor . |

| Begin: |

|

| End |

6. Experiments

6.1. Toy Experiments on Synthetic Datasets

6.1.1. Datasets

6.1.2. Summary of Results

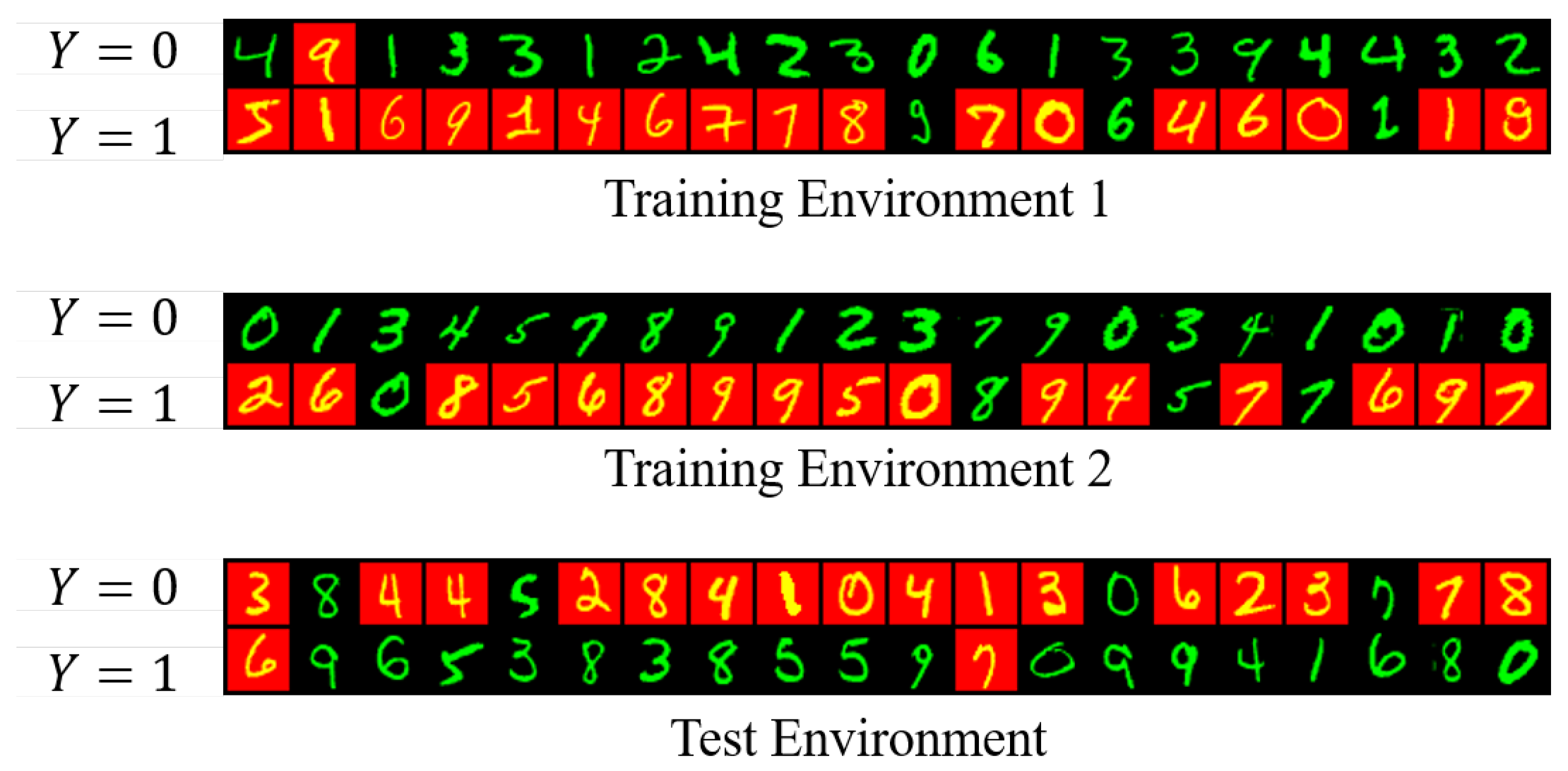

6.2. Experiments on Color MNIST Dataset

7. Related Works

7.1. Theory of OOD Generalization

7.2. Methods of OOD Generalization

8. Conclusions, Limitations and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| OOD | Out-of-distribution |

| ERM | Empirical risk minimization |

| IRM | Invariant risk minimization |

| IB-ERM | Information bottleneck-based empirical risk minimization |

| IB-IRM | Information bottleneck-based invariant risk minimization |

| CSIB | Counterfactual supervision-based information bottleneck |

| DAG | Directed acyclic graph |

| SEM | Structural equation model |

| SVD | Singular value decomposition |

Appendix A. Experiments Details

Appendix A.1. Optimization Loss of IB-ERM

Appendix A.2. Experiments Setup

Appendix A.3. Supplementary Experiments

| Dataset | #Envs | ERM (min) | IRM (min) | IB-ERM (min) | IB-IRM (min) | CSIB (min) | Oracle (min) |

|---|---|---|---|---|---|---|---|

| Example 1 | 1 | 0.50 ± 0.01 (0.49) | 0.50 ± 0.01 (0.49) | 0.23 ± 0.02 (0.22) | 0.31 ± 0.10 (0.25) | 0.23 ± 0.02 (0.22) | 0.00 ± 0.00 (0.00) |

| Example 1S | 1 | 0.50 ± 0.00 (0.49) | 0.50 ± 0.00 (0.49) | 0.09 ± 0.04 (0.04) | 0.30 ± 0.10 (0.25) | 0.08 ± 0.04 (0.04) | 0.00 ± 0.00 (0.00) |

| Example 2 | 1 | 0.40 ± 0.20 (0.00) | 0.00 ± 0.00 (0.00) | 0.50 ± 0.00 (0.49) | 0.48 ± 0.03 (0.43) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 2S | 1 | 0.50 ± 0.00 (0.50) | 0.30 ± 0.25 (0.00) | 0.50 ± 0.00 (0.50) | 0.50 ± 0.01 (0.48) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3 | 1 | 0.16 ± 0.06 (0.09) | 0.03 ± 0.00 (0.03) | 0.50 ± 0.01 (0.49) | 0.41 ± 0.09 (0.25) | 0.02 ± 0.01 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3S | 1 | 0.16 ± 0.06 (0.10) | 0.04 ± 0.01 (0.02) | 0.50 ± 0.00 (0.50) | 0.41 ± 0.12 (0.26) | 0.01 ± 0.01 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 1 | 3 | 0.44 ± 0.01 (0.44) | 0.44 ± 0.01 (0.44) | 0.21 ± 0.00 (0.21) | 0.21 ± 0.10 (0.06) | 0.21 ± 0.00 (0.21) | 0.00 ± 0.00 (0.00) |

| Example 1S | 3 | 0.45 ± 0.00 (0.44) | 0.45 ± 0.00 (0.44) | 0.09 ± 0.03 (0.05) | 0.23 ± 0.13 (0.01) | 0.09 ± 0.03 (0.05) | 0.00 ± 0.00 (0.00) |

| Example 2 | 3 | 0.13 ± 0.07 (0.00) | 0.00 ± 0.00 (0.00) | 0.50 ± 0.00 (0.50) | 0.33 ± 0.04 (0.25) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 2S | 3 | 0.50 ± 0.00 (0.50) | 0.14 ± 0.20 (0.00) | 0.50 ± 0.00 (0.50) | 0.34 ± 0.01 (0.33) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3 | 3 | 0.17 ± 0.04 (0.14) | 0.02 ± 0.00 (0.02) | 0.50 ± 0.01 (0.49) | 0.43 ± 0.08 (0.29) | 0.01 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3S | 3 | 0.17 ± 0.04 (0.13) | 0.02 ± 0.00 (0.02) | 0.50 ± 0.00 (0.50) | 0.36 ± 0.18 (0.07) | 0.01 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 1 | 6 | 0.46 ± 0.01 (0.44) | 0.46 ± 0.09 (0.41) | 0.22 ± 0.01 (0.21) | 0.41 ± 0.11 (0.26) | 0.22 ± 0.01 (0.21) | 0.00 ± 0.00 (0.00) |

| Example 1S | 6 | 0.46 ± 0.02 (0.44) | 0.46 ± 0.02 (0.44) | 0.06 ± 0.04 (0.02) | 0.45 ± 0.07 (0.41) | 0.06 ± 0.04 (0.02) | 0.00 ± 0.00 (0.00) |

| Example 2 | 6 | 0.21 ± 0.03 (0.17) | 0.00 ± 0.00 (0.00) | 0.50 ± 0.00 (0.50) | 0.36 ± 0.03 (0.31) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 2S | 6 | 0.50 ± 0.00 (0.50) | 0.10 ± 0.20 (0.00) | 0.50 ± 0.00 (0.50) | 0.19 ± 0.16 (0.01) | 0.00 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3 | 6 | 0.17 ± 0.03 (0.14) | 0.02 ± 0.00 (0.02) | 0.50 ± 0.00 (0.49) | 0.37 ± 0.16 (0.16) | 0.01 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |

| Example 3S | 6 | 0.17 ± 0.03 (0.14) | 0.02 ± 0.00 (0.02) | 0.50 ± 0.00 (0.50) | 0.46 ± 0.09 (0.28) | 0.01 ± 0.00 (0.00) | 0.00 ± 0.00 (0.00) |
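The entries in the table above appear to follow a "mean ± spread (min)" pattern. As a minimal sketch, assuming each cell summarizes classification errors over several repeated runs as mean ± standard deviation with the minimum in parentheses, such a cell could be produced as follows. The function name `summarize_errors`, the use of the population standard deviation, and the example values are illustrative assumptions, not taken from the paper.

```python
import statistics


def summarize_errors(errors):
    """Format per-run classification errors as 'mean ± std (min)'."""
    mean = statistics.fmean(errors)
    # Population standard deviation; whether the source tables use the
    # population or the sample form is not stated, so this is an assumption.
    std = statistics.pstdev(errors)
    return f"{mean:.2f} ± {std:.2f} ({min(errors):.2f})"


# Hypothetical per-seed errors, not taken from the paper.
runs = [0.22, 0.25, 0.22, 0.23]
print(summarize_errors(runs))
```

Reporting the minimum alongside the mean makes it visible when a method succeeds on at least one run even though its average error is high.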

| Dataset | #Envs | ? | q | ERM | IB-ERM | IB-IRM | CSIB | IRM | Oracle |

|---|---|---|---|---|---|---|---|---|---|

| Example 1 | 1 | Yes | 0 | 0.50 ± 0.01 | 0.23 ± 0.02 | 0.31 ± 0.10 | 0.23 ± 0.02 | 0.50 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 1 | Yes | 0 | 0.50 ± 0.00 | 0.46 ± 0.04 | 0.30 ± 0.10 | 0.46 ± 0.04 | 0.50 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 3 | Yes | 0 | 0.45 ± 0.01 | 0.22 ± 0.01 | 0.23 ± 0.13 | 0.22 ± 0.01 | 0.45 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 3 | Yes | 0 | 0.45 ± 0.00 | 0.41 ± 0.04 | 0.27 ± 0.11 | 0.41 ± 0.04 | 0.45 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 6 | Yes | 0 | 0.46 ± 0.01 | 0.22 ± 0.01 | 0.37 ± 0.14 | 0.22 ± 0.01 | 0.46 ± 0.09 | 0.00 ± 0.00 |

| Example 1S | 6 | Yes | 0 | 0.46 ± 0.02 | 0.35 ± 0.10 | 0.42 ± 0.12 | 0.35 ± 0.10 | 0.46 ± 0.02 | 0.00 ± 0.00 |

| Example 1 | 1 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.15 ± 0.20 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| Example 1S | 1 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.12 ± 0.19 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 3 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| Example 1S | 3 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.01 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 6 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.30 ± 0.20 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.00 ± 0.00 |

| Example 1S | 6 | No | 0 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.31 ± 0.20 | 0.00 ± 0.00 | 0.04 ± 0.06 | 0.00 ± 0.00 |

| Example 1 | 1 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.32 ± 0.22 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Example 1S | 1 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.19 ± 0.17 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Example 1 | 3 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.07 ± 0.03 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Example 1S | 3 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Example 1 | 6 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.30 ± 0.21 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Example 1S | 6 | No | 0.05 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.32 ± 0.19 | 0.05 ± 0.00 | 0.05 ± 0.00 | 0.05 ± 0.00 |

| Dataset | #Envs | ? | q | ERM | IB-ERM | IB-IRM | IRM | Oracle |

|---|---|---|---|---|---|---|---|---|

| Example 1 | 1 | Yes | 0 | 0.50 ± 0.01 | 0.25 ± 0.01 | 0.31 ± 0.10 | 0.50 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 1 | Yes | 0 | 0.50 ± 0.00 | 0.49 ± 0.01 | 0.30 ± 0.10 | 0.50 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 3 | Yes | 0 | 0.44 ± 0.01 | 0.23 ± 0.01 | 0.21 ± 0.10 | 0.44 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 3 | Yes | 0 | 0.45 ± 0.00 | 0.44 ± 0.01 | 0.42 ± 0.04 | 0.45 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 6 | Yes | 0 | 0.46 ± 0.01 | 0.27 ± 0.07 | 0.41 ± 0.11 | 0.46 ± 0.01 | 0.01 ± 0.01 |

| Example 1S | 6 | Yes | 0 | 0.46 ± 0.02 | 0.42 ± 0.08 | 0.46 ± 0.09 | 0.46 ± 0.02 | 0.01 ± 0.02 |

| Example 1 | 1 | No | 0 | 0.50 ± 0.01 | 0.00 ± 0.00 | 0.15 ± 0.20 | 0.50 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 1 | No | 0 | 0.50 ± 0.00 | 0.00 ± 0.00 | 0.13 ± 0.19 | 0.50 ± 0.00 | 0.00 ± 0.00 |

| Example 1 | 3 | No | 0 | 0.45 ± 0.01 | 0.00 ± 0.00 | 0.00 ± 0.00 | 0.45 ± 0.01 | 0.00 ± 0.00 |

| Example 1S | 3 | No | 0 | 0.45 ± 0.00 | 0.01 ± 0.02 | 0.08 ± 0.14 | 0.46 ± 0.02 | 0.00 ± 0.00 |

| Example 1 | 6 | No | 0 | 0.46 ± 0.01 | 0.10 ± 0.16 | 0.30 ± 0.20 | 0.46 ± 0.01 | 0.01 ± 0.01 |

| Example 1S | 6 | No | 0 | 0.46 ± 0.01 | 0.24 ± 0.19 | 0.41 ± 0.12 | 0.47 ± 0.03 | 0.01 ± 0.02 |

| Example 1 | 1 | No | 0.05 | 0.50 ± 0.01 | 0.05 ± 0.00 | 0.32 ± 0.22 | 0.50 ± 0.01 | 0.05 ± 0.00 |

| Example 1S | 1 | No | 0.05 | 0.50 ± 0.01 | 0.05 ± 0.01 | 0.20 ± 0.17 | 0.50 ± 0.00 | 0.05 ± 0.00 |

| Example 1 | 3 | No | 0.05 | 0.45 ± 0.01 | 0.05 ± 0.00 | 0.07 ± 0.03 | 0.47 ± 0.01 | 0.05 ± 0.00 |

| Example 1S | 3 | No | 0.05 | 0.45 ± 0.01 | 0.07 ± 0.03 | 0.11 ± 0.11 | 0.46 ± 0.01 | 0.05 ± 0.00 |

| Example 1 | 6 | No | 0.05 | 0.47 ± 0.01 | 0.14 ± 0.14 | 0.30 ± 0.21 | 0.47 ± 0.01 | 0.05 ± 0.00 |

| Example 1S | 6 | No | 0.05 | 0.47 ± 0.01 | 0.27 ± 0.18 | 0.42 ± 0.11 | 0.47 ± 0.01 | 0.05 ± 0.01 |

Appendix B. Proofs

Appendix B.1. Preliminary

Appendix B.2. Proof of Theorem 2

Appendix B.3. Proof of Theorem 3

- In the case when each follows Assumption 4 of , we haveThen, for any of , we must have for any to make error no larger than q. Since is zero mean with at least two distinct points in each component, we can conclude that . Similarly, for any of , we have . From Lemma A3 or Lemma A4, we obtain . Therefore, there exists a more optimal solution to IB-ERM with zero weight to , which contradicts the assumption.

- In the case when each follows Assumption 5 of , we haveFrom Lemma A3 or Lemma A4, we obtain . In addition, the spurious features are assumed to be linearly separable. Therefore, there exists a more optimal solution to IB-ERM with zero weight to , which contradicts the assumption.

- In the case when each follows Assumption 6 of , we haveThen, for any of , we must have for any and to make error no larger than q. Since and are both zero mean variables with at least two distinct points in each component, we can conclude that ; Similarly, for any of , we have . From Lemma A3 or Lemma A4, we obtain . Therefore, there exists a more optimal solution to IB-ERM with zero weight to , which contradicts the assumption.

| Datasets | Margin Relationship | Entropy Relationship | ||

|---|---|---|---|---|

| Example 1/1S | 5 | 5 | ||

| Example 2/2S | 5 | 5 | ||

| Example 3/3S | 5 | 5 |

| Dataset | #Envs | ERM (min) | IRM (min) | IB-ERM (min) | IB-IRM (min) | CSIB (min) |

|---|---|---|---|---|---|---|

| Example 1 | 1 | 0.50 ± 0.01 (0.49) | 0.50 ± 0.01 (0.49) | 0.23 ± 0.02 (0.22) | 0.31 ± 0.10 (0.25) | 0.23 ± 0.02 (0.22) |

| Example 1S | 1 | 0.50 ± 0.00 (0.49) | 0.50 ± 0.00 (0.50) | 0.46 ± 0.04 (0.39) | 0.30 ± 0.10 (0.25) | 0.46 ± 0.04 (0.39) |

| Example 2 | 1 | 0.40 ± 0.20 (0.00) | 0.50 ± 0.00 (0.49) | 0.50 ± 0.00 (0.49) | 0.46 ± 0.02 (0.45) | 0.00 ± 0.00 (0.00) |

| Example 2S | 1 | 0.50 ± 0.00 (0.50) | 0.31 ± 0.23 (0.00) | 0.50 ± 0.00 (0.50) | 0.45 ± 0.01 (0.43) | 0.10 ± 0.20 (0.00) |

| Example 3 | 1 | 0.16 ± 0.06 (0.09) | 0.18 ± 0.03 (0.14) | 0.50 ± 0.01 (0.49) | 0.40 ± 0.20 (0.01) | 0.11 ± 0.20 (0.00) |

| Example 3S | 1 | 0.17 ± 0.07 (0.10) | 0.09 ± 0.02 (0.07) | 0.50 ± 0.00 (0.50) | 0.50 ± 0.00 (0.50) | 0.21 ± 0.24 (0.00) |

| Example 1 | 3 | 0.45 ± 0.01 (0.45) | 0.45 ± 0.01 (0.45) | 0.22 ± 0.01 (0.21) | 0.23 ± 0.13 (0.02) | 0.22 ± 0.01 (0.21) |

| Example 1S | 3 | 0.45 ± 0.00 (0.45) | 0.45 ± 0.00 (0.45) | 0.41 ± 0.04 (0.34) | 0.27 ± 0.11 (0.11) | 0.41 ± 0.04 (0.34) |

| Example 2 | 3 | 0.40 ± 0.20 (0.00) | 0.50 ± 0.00 (0.50) | 0.50 ± 0.00 (0.50) | 0.33 ± 0.04 (0.25) | 0.00 ± 0.00 (0.00) |

| Example 2S | 3 | 0.50 ± 0.00 (0.50) | 0.37 ± 0.15 (0.15) | 0.50 ± 0.00 (0.50) | 0.34 ± 0.01 (0.33) | 0.10 ± 0.20 (0.00) |

| Example 3 | 3 | 0.18 ± 0.04 (0.15) | 0.21 ± 0.02 (0.20) | 0.50 ± 0.01 (0.49) | 0.50 ± 0.01 (0.49) | 0.11 ± 0.20 (0.00) |

| Example 3S | 3 | 0.18 ± 0.04 (0.15) | 0.08 ± 0.03 (0.03) | 0.50 ± 0.00 (0.50) | 0.43 ± 0.09 (0.31) | 0.01 ± 0.00 (0.00) |

| Example 1 | 6 | 0.46 ± 0.01 (0.44) | 0.46 ± 0.09 (0.41) | 0.22 ± 0.01 (0.20) | 0.37 ± 0.14 (0.17) | 0.22 ± 0.01 (0.20) |

| Example 1S | 6 | 0.46 ± 0.02 (0.44) | 0.46 ± 0.02 (0.44) | 0.35 ± 0.10 (0.23) | 0.42 ± 0.12 (0.28) | 0.35 ± 0.10 (0.23) |

| Example 2 | 6 | 0.49 ± 0.01 (0.48) | 0.50 ± 0.01 (0.48) | 0.50 ± 0.00 (0.50) | 0.30 ± 0.01 (0.28) | 0.00 ± 0.00 (0.00) |

| Example 2S | 6 | 0.50 ± 0.00 (0.50) | 0.35 ± 0.12 (0.25) | 0.50 ± 0.00 (0.50) | 0.30 ± 0.01 (0.29) | 0.20 ± 0.24 (0.00) |

| Example 3 | 6 | 0.18 ± 0.04 (0.15) | 0.20 ± 0.01 (0.19) | 0.50 ± 0.00 (0.49) | 0.37 ± 0.16 (0.16) | 0.01 ± 0.01 (0.00) |

| Example 3S | 6 | 0.18 ± 0.04 (0.14) | 0.05 ± 0.04 (0.01) | 0.50 ± 0.00 (0.50) | 0.50 ± 0.00 (0.50) | 0.11 ± 0.20 (0.00) |

| Methods | ERM | IRM | IB-ERM | IB-IRM | CSIB | Oracle |

|---|---|---|---|---|---|---|

| Accuracy | 9.94 ± 0.28 | 20.39 ± 2.76 | 9.94 ± 0.28 | 43.84 ± 12.48 | 60.03 ± 1.28 | 84.72 ± 0.65 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Deng, B.; Jia, K. Counterfactual Supervision-Based Information Bottleneck for Out-of-Distribution Generalization. Entropy 2023, 25, 193. https://doi.org/10.3390/e25020193