A Novel Hierarchical Extreme Machine-Learning-Based Approach for Linear Attenuation Coefficient Forecasting

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Materials

3.2. Mixing Procedure

3.3. Chemical and Physical Characteristics

3.4. XCOM Simulation

3.5. Database Organization

3.6. Data Preprocessing

3.7. Outliers Analysis

3.8. Data Normalization

3.9. ML Input Data Organization

3.10. Conventional Shallow Machine Learning (ML) Classifiers

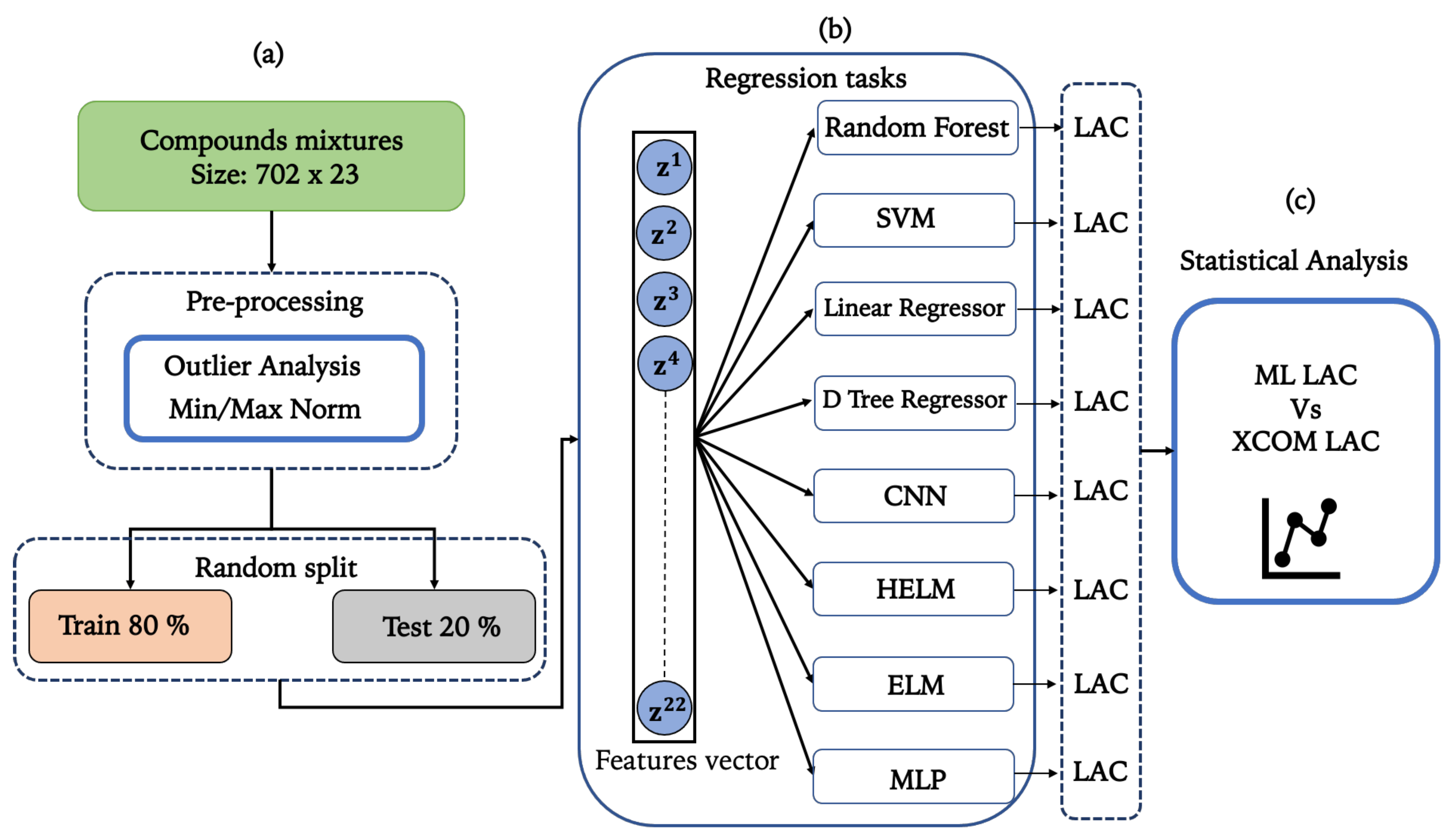

- The dataset was created using an engineering design approach to estimate the amount and proportion of each component. The shielding properties of the radiation-shielding concrete and mineral powder particle mixtures were estimated using XCOM.

- To eliminate outliers and normalize the data, the mineral powder particle, magnetite, water, and radiation samples were fed into a preprocessing pipeline.

- The sample data were divided into training and test sets and fed into the ML predictors. A grid search was used to tune the kernel parameters, the penalty factor, and the remaining ML model parameters.

- The ML models were found to capture effectively the nonlinear relationships between concrete mixes and concrete radiation strength.

- The trained ML model weights were used to calculate concrete radiation strength, generalizing from the training data to unseen test data.

- The predictive performance of the ML models was assessed by comparing the forecasted samples with empirically calculated data.
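
The preprocessing and splitting steps listed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the median-absolute-deviation (MAD) outlier rule, min-max scaling, the 80/20 split, the function names, and the toy attenuation values are all hypothetical choices made for the example. The grid search itself is not shown.

```python
import random
import statistics


def mad_outlier_mask(values, threshold=3.0):
    """Return True for each value within `threshold` scaled MADs of the median.

    Assumption: a MAD-based rule; the source does not state which rule was used.
    """
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # All deviations are zero for at least half the data: keep everything.
        return [True] * len(values)
    scaled_mad = 1.4826 * mad  # consistency constant for normally distributed data
    return [abs(v - med) / scaled_mad <= threshold for v in values]


def min_max_scale(values):
    """Rescale values linearly to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def train_test_split(rows, test_fraction=0.2, seed=0):
    """Shuffle a copy of `rows` and return (train, test) lists."""
    rng = random.Random(seed)
    shuffled = list(rows)
    rng.shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical attenuation-like values; the last one is an injected outlier.
lac_values = [0.079, 0.075, 0.072, 0.068, 0.065, 0.500]

mask = mad_outlier_mask(lac_values)
clean = [v for v, keep in zip(lac_values, mask) if keep]
scaled = min_max_scale(clean)
train, test = train_test_split(scaled, test_fraction=0.2, seed=0)

print("kept:", clean)
print("train size:", len(train), "test size:", len(test))
```

In a real workflow the scaling limits would be computed on the training set only and then reused for the test set, so that no test-set information influences training.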

3.11. Extreme Machine Learning

3.12. Hierarchical Extreme Machine Learning (HELM)

Algorithm 1 HELM Framework.

Algorithm 2 ELM-Autoencoder Overview.

3.13. Multi-Layer Perceptron

3.14. Proposed 1d-Convolutional Neural Network (CNN)

3.15. Parameter Tuning for Training

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Constituent | Weight (g/cm³) | | | | |
|---|---|---|---|---|---|
| | 0.390 | 0.152 | 0.187 | 0.218 | 0.247 |
| | 0.390 | 0.284 | 0.188 | 0.099 | 0.0187 |
| | 0.006 | 0.004 | 0.003 | 0.002 | 0 |
| | 0.011 | 0.008 | 0.005 | 0.003 | 0.0002 |
| | 0.008 | 0.089 | 0.163 | 0.230 | 0.292 |
| | 0 | 0.0005 | 0.001 | 0.001 | 0.001 |
| | 0.082 | 0.060 | 0.040 | 0.022 | 0.005 |
| | 0.249 | 0.258 | 0.267 | 0.274 | 0.282 |
| | 0.039 | 0.034 | 0.029 | 0.025 | 0.021 |
| | 0.005 | 0.004 | 0.004 | 0.004 | 0.003 |
| | 0 | 0.0000916 | 0.000175109 | 0.0002515420 | 0.000321765 |
| | 0 | 0.0000458 | 0.00008755 | 0.000125771 | 0.000160883 |
| | 0 | 0.002404626 | 0.004596616 | 0.00660298 | 0.008446339 |
| | 0 | 0.000480925 | 0.000919323 | 0.001320596 | 0.001689268 |
| | 0 | 0.000549629 | 0.001050655 | 0.001509252 | 0.001930592 |
| | 0 | 0.00001374 | 0.000026266 | 0.000037731 | 0.000048265 |
| | 0 | 0.002184774 | 0.004176354 | 0.005999279 | 0.007674102 |
| | 0 | 0.25 | 0.5 | 0.75 | 1 |
| | 2.44 | 2.55 | 2.67 | 2.78 | 2.9 |
| | 0.0787 | 0.0751 | 0.0717 | 0.068 | 0.065 |

| Model | MAE | RMSE | R² score |
|---|---|---|---|
| SVM | 0.441 ± 0.356 | 0.457 ± 0.362 | 0.286 ± 0.315 |
| Decision Tree | 0.457 ± 0.314 | 0.543 ± 0.294 | 0.437 ± 0.293 |
| Polynomial Regression | 0.328 ± 0.301 | 0.369 ± 0.322 | 0.369 ± 0.321 |
| Random Forest | 0.553 ± 0.303 | 0.487 ± 0.305 | 0.439 ± 0.305 |
| MLP | 0.359 ± 0.374 | 0.348 ± 0.393 | 0.667 ± 0.277 |
| CNN | 0.574 ± 0.387 | 0.422 ± 0.308 | 0.530 ± 0.334 |
| ELM | 0.442 ± 0.356 | 0.408 ± 0.361 | 0.516 ± 0.296 |
| HELM | 0.360 ± 0.304 | 0.158 ± 0.302 | 0.753 ± 0.292 |
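
For readers who want to reproduce the three metrics reported in the table, the sketch below computes MAE, RMSE, and the R² score from their standard definitions. The function names and the toy values are illustrative assumptions; they are not data from this study, and the sketch does not reproduce the averaging behind the ± values.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |y - y_hat|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical targets and predictions, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]

print("MAE :", mae(y_true, y_pred))
print("RMSE:", rmse(y_true, y_pred))
print("R2  :", r2_score(y_true, y_pred))
```

Lower MAE and RMSE indicate smaller errors, while an R² score closer to 1 indicates that the predictions explain more of the variance in the targets.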


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Varone, G.; Ieracitano, C.; Çiftçioğlu, A.Ö.; Hussain, T.; Gogate, M.; Dashtipour, K.; Al-Tamimi, B.N.; Almoamari, H.; Akkurt, I.; Hussain, A. A Novel Hierarchical Extreme Machine-Learning-Based Approach for Linear Attenuation Coefficient Forecasting. Entropy 2023, 25, 253. https://doi.org/10.3390/e25020253
