Position-Wise Gated Res2Net-Based Convolutional Network with Selective Fusing for Sentiment Analysis

Abstract

1. Introduction

- (1) This paper proposes a PG-Res2Net module that learns sentiment features at different scales over a large range. In contrast to convolution filters with fixed window sizes or dense connections, a single residual block in the module can learn multi-scale sentiment features within a certain range. Essentially, the module performs the first selection of multi-scale features, based on local statistics.

- (2) A selective fusing module is proposed to fully reuse and selectively fuse sentiment features of all scales. This is the second selection of multi-scale sentiment features, based on global statistics. The module also effectively alleviates the loss of local detailed information caused by the convolution operation.

- (3) The model is extensively evaluated on five datasets. The experimental results demonstrate its competitive performance on these datasets: in the best case, it outperforms the other models by up to 1.2%. In addition, visualizations and ablation studies demonstrate the effectiveness of the model.
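To make the claim that a single residual block covers several scales more concrete, the following minimal sketch computes the receptive field of each channel group in a Res2Net-style block [15]. It assumes the standard Res2Net layout (the first group is passed through unchanged, and each later group is convolved after adding the previous group's output) and a convolution width of 3 tokens. Neither detail is stated in this section, and the function name is hypothetical. The gating in PG-Res2Net is ignored here because a gate only rescales features and would not change which positions a group can see.

```python
def res2net_receptive_fields(num_groups, kernel_size=3):
    """Receptive field, in tokens, of each channel group inside one block.

    Assumed layout (standard Res2Net, not confirmed for PG-Res2Net):
      group 1: identity, sees 1 token
      group i > 1: convolution applied to (own input + output of group i-1),
                   so its view widens by (kernel_size - 1) per step.
    """
    return [1 + i * (kernel_size - 1) for i in range(num_groups)]


# With S = 4 convolution ways, as used in the experimental setup:
fields = res2net_receptive_fields(4)
print(fields)
```

Under these assumptions, one block with S = 4 already mixes features spanning 1, 3, 5, and 7 tokens, which is the sense in which a single block learns multi-scale features within a certain range.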

2. Related Work

2.1. CNNs and ResNets in SA Tasks

2.2. Attention Mechanisms in SA Tasks

3. Material and Methods

3.1. Task Modeling

3.2. Overview

3.3. Text Representation

3.4. PG-Res2Net Module

3.5. Selective Fusing Module

3.6. Objective Function

4. Results and Discussion

4.1. Datasets

- MR: The dataset was built by searching for movie reviews from review websites. In this dataset, 10,662 samples are separated into two categories.

- SST-2: The dataset is a binary version of the Stanford Sentiment Treebank dataset, which is an extension of MR. It comprises 9163 samples, which are separated into two categories.

- Yelp.F: The Yelp review dataset was obtained from the 2015 Yelp Dataset Challenge. It has five-star polarity labels. Each star label contains 130,000 training samples and 10,000 testing samples.

- S&O and T&G: These two datasets contain product reviews and metadata from SNAP, including 142.8 million reviews from Amazon. In this study, only reviews of Sports & Outdoor and Toy & Game products were used.

4.2. Models for Comparison

- Bi-LSTM [43] directly inputs the entire document as a single sequence into a bi-directional LSTM network for SA.

- HAN [44] uses hierarchical attention networks to classify documents.

- Classical CNN [11] uses multiple filters with different window sizes in a single convolutional layer to learn the sentiment features.

- VDCNN [24] uses only small convolution and pooling operations at the character level with a depth of 29 convolutional layers.

- Word-DenseNet [45] is an adaptation of DenseNet for text classification.

- HUSN [46] utilizes user review habits to enhance an LSTM-based hierarchical neural network for SA.

- CAHAN [47] is a modification of HAN that can make context-aware attentional decisions.

- AGCNN [31] introduces an attention-gated layer before the pooling layer to help the CNN focus on critical abstract features.

- TextConvoNet [21] applies multidimensional convolution to extract inter-token and inter-sentence N-gram features.

- DenseNet with multi-scale feature attention [6] is an improved version of DenseNet and is equipped with multi-scale feature attention.

- SAHSSC [48] is a self-attentive hierarchical model for text summarization and sentiment classification.

- Sentiment-Aware Transformer [49] is a new type of transformer model designed to predict both word and sentence sentiment.

4.3. Experimental Setup

- (1) Input. Data preprocessing was performed because the datasets were obtained from web reviews and had complex and arbitrary characteristics. Anomalous symbols were eliminated, and upper-case letters were converted to lower-case letters. A word embedding corpus pre-trained by GloVe was used [50]. Words in a target dataset that were not in the corpus were initialized using a random vector with element values between −0.01 and 0.01. Because the model requires inputs of a constant length L, shorter samples were padded with zero vectors and longer samples were truncated. In the experiments, L was set to 50 for MR and SST-2 and 500 for the other datasets.

- (2) Architecture configuration. The feature dimension d of the output of the first convolutional block was set to 128. For the PG-Res2Net module, the reduction ratio γ was set to 2, and the number S of convolution ways of a residual block was set to 4. The number D of residual blocks was set to 2 for MR and SST-2, 4 for S&O and T&G, and 7 for Yelp.F. S and D were determined by the experimental results.

- (3) Training setting. The objective function was minimized by stochastic gradient descent (SGD) with a batch size of 256, an initial learning rate of 0.01, and a momentum of 0.99. For all datasets except SST-2, the learning rate was multiplied by 0.1 every 5 epochs. For SST-2, the period was 10 epochs. L2 regularization was also added to the objective function, and its coefficient was set to 0.0001. Random dropout [51] with a drop rate of 0.5 was applied to the input of the classifier. Training lasted for at most 20 epochs on all datasets, and all experiments were conducted using PyTorch v1.9 (Linux Foundation, San Francisco, CA, USA).
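The input handling and the learning-rate schedule described above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' code: the function names, the right-side padding, the caching of out-of-vocabulary vectors, and the 0-based epoch counting are all assumptions, and the toy two-dimensional embedding table merely stands in for GloVe.

```python
import random


def embed(tokens, pretrained, dim, length, rng):
    """Turn a token list into exactly `length` vectors of size `dim`."""
    vectors = []
    for tok in tokens[:length]:              # truncate samples longer than L
        tok = tok.lower()                    # upper case -> lower case
        if tok not in pretrained:            # out-of-vocabulary word:
            # random vector with elements in [-0.01, 0.01]; cached so the
            # same word always maps to the same vector (an assumption)
            pretrained[tok] = [rng.uniform(-0.01, 0.01) for _ in range(dim)]
        vectors.append(pretrained[tok])
    # pad short samples with zero vectors (padding on the right is assumed)
    vectors += [[0.0] * dim for _ in range(length - len(vectors))]
    return vectors


def learning_rate(epoch, base_lr=0.01, gamma=0.1, period=5):
    """Step decay: multiply the rate by `gamma` every `period` epochs."""
    return base_lr * gamma ** (epoch // period)


rng = random.Random(0)
table = {"good": [0.5, 0.5]}                 # toy stand-in for GloVe vectors
sample = embed(["Good", "moovie"], table, dim=2, length=4, rng=rng)
schedule = [learning_rate(e) for e in range(20)]              # most datasets
schedule_sst2 = [learning_rate(e, period=10) for e in range(20)]  # SST-2
```

With 20 epochs and a period of 5, this schedule passes through four rates (0.01, 0.001, 0.0001, 0.00001), whereas the SST-2 setting with a period of 10 uses only the first two. In PyTorch, the same step decay is what `torch.optim.lr_scheduler.StepLR` provides.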

4.4. Experimental Results

4.5. Study of PG-Res2Net

4.5.1. Tuning of Hyperparameters

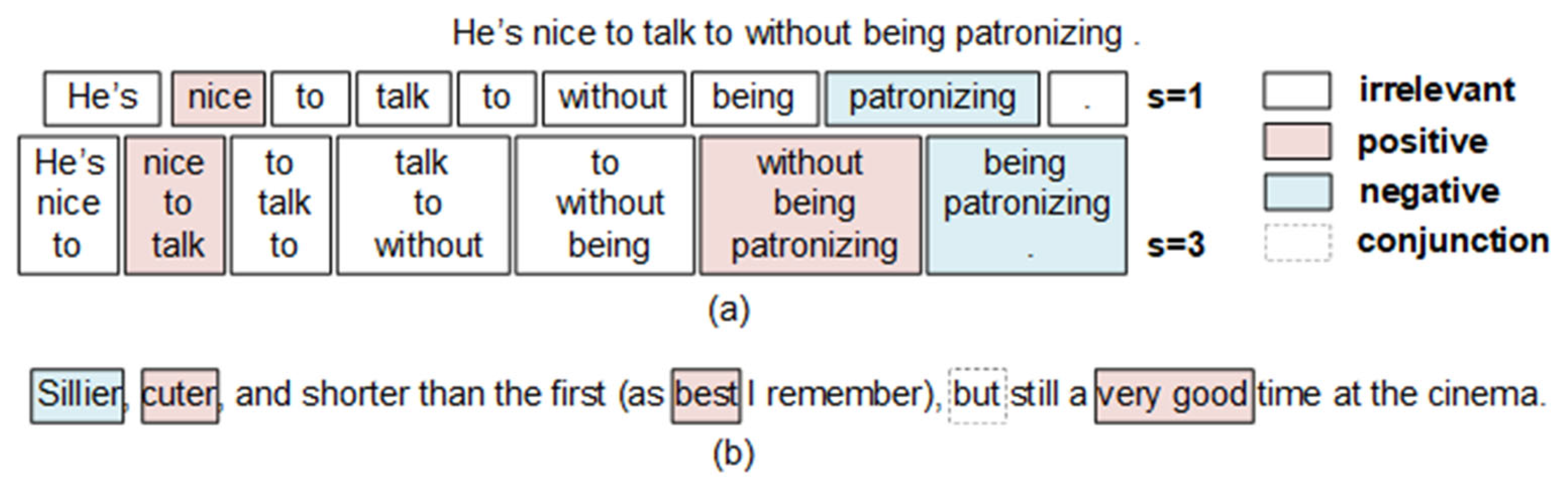

4.5.2. Visualization of Multi-Scale Sentiment Features

4.6. Effectiveness of Selective Fusing Module

- 3-Blocks-W-SF: The structure has a PG-Res2Net module containing 3 residual blocks and a selective fusing module for MR.

- 3-Blocks-WO-SF: The structure is similar to 3-Blocks-W-SF except that an average method replaces the selective fusing module.

- 7-Blocks-W-SF: The structure has a PG-Res2Net module containing 7 residual blocks and a selective fusing module for Yelp.F.

- 7-Blocks-WO-SF: The structure is similar to 7-Blocks-W-SF except that an average method replaces the selective fusing module.

| Dataset | 3-Blocks-W-SF | 3-Blocks-WO-SF | 7-Blocks-W-SF | 7-Blocks-WO-SF |
|---|---|---|---|---|
| MR | 81.7 | 81.4 | - | - |
| Yelp.F | - | - | 66.5 | 64.3 |
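The difference between the two variants compared above can be illustrated with a small sketch. The averaging baseline gives every scale the same weight, whereas a selective scheme derives per-channel weights from global statistics of each scale. The version below is a deliberately simplified stand-in in the spirit of selective kernel networks [38]: it uses the channel-wise mean over all positions as the global statistic and feeds it directly into a softmax across scales, with no learned layers. The actual selective fusing module is not specified in this section and presumably contains trainable parameters; all function names here are hypothetical.

```python
import math


def softmax(xs):
    m = max(xs)                              # subtract max for stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def channel_means(feature):
    """Global statistic: mean of each channel over all positions."""
    n = len(feature)
    return [sum(pos[c] for pos in feature) / n for c in range(len(feature[0]))]


def average_fuse(features):
    """Baseline: plain average over the K scales (features[k][t][c])."""
    k, d = len(features), len(features[0][0])
    return [[sum(f[t][c] for f in features) / k for c in range(d)]
            for t in range(len(features[0]))]


def selective_fuse(features):
    """Softmax-weighted sum over scales, weights taken per channel."""
    stats = [channel_means(f) for f in features]          # stats[k][c]
    d = len(stats[0])
    weights = [softmax([s[c] for s in stats]) for c in range(d)]  # [c][k]
    return [[sum(weights[c][k] * features[k][t][c]
                 for k in range(len(features))) for c in range(d)]
            for t in range(len(features[0]))]


# Two scales, two positions, two channels: scale 0 is active on channel 0.
features = [[[1.0, 0.0], [1.0, 0.0]],
            [[0.0, 0.0], [0.0, 0.0]]]
avg = average_fuse(features)
sel = selective_fuse(features)
```

In this toy input, averaging halves the active channel, while the selective variant assigns the active scale a larger weight and preserves more of its response. When all scales have identical statistics, the softmax weights are uniform and the two methods coincide.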

4.7. Analysis of Model Scalability

4.8. Error Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

1. Park, J.H.; Choi, B.J.; Lee, S.K. Examining the impact of adaptive convolution on natural language understanding. Expert Syst. Appl. 2022, 189, 49–69.

2. Ren, J.; Wu, W.; Liu, G.; Chen, Z.; Wang, R. Bidirectional gated temporal convolution with attention for text classification. Neurocomputing 2021, 455, 265–273.

3. Tan, C.; Ren, Y.; Wang, C. An adaptive convolution with label embedding for text classification. Appl. Intell. 2022, 33, 804–812.

4. Zou, H.; Xiang, K. Sentiment classification method based on blending of emoticons and short texts. Entropy 2022, 24, 398.

5. Liu, Y.; Wang, L.; Shi, T.; Li, J. Detection of spam reviews through a hierarchical attention architecture with N-gram CNN and Bi-LSTM. Inf. Syst. 2022, 103, 101865.

6. Wang, S.; Huang, M.; Deng, Z. Densely connected CNN with multi-scale feature attention for text classification. In Proceedings of the 28th International Joint Conference on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018; pp. 4468–4474.

7. Xiang, Z.; Zhao, J.; LeCun, Y. Character-level convolutional networks for text classification. In Proceedings of the 28th Advances in Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 7–12 December 2015; pp. 649–657.

8. Dashtipour, K.; Gogate, M.; Adeel, A.; Larijani, H.; Hussain, A. Sentiment analysis of persian movie reviews using deep learning. Entropy 2021, 23, 596.

9. Xue, W.; Li, T. Aspect based sentiment analysis with gated convolutional networks. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), Melbourne, VIC, Australia, 15–20 July 2018; pp. 2514–2523.

10. Liu, F.; Zheng, J.; Zheng, L.; Chen, C. Combining attention-based bidirectional gated recurrent neural network and two-dimensional convolutional neural network for document-level sentiment classification. Neurocomputing 2020, 371, 39–50.

11. Kim, Y. Convolutional neural networks for sentence classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

12. Yan, L.; Han, J.; Yue, Y.; Zhang, L.; Qian, Y. Sentiment analysis of short texts based on parallel densenet. Comput. Mater. Contin. 2021, 69, 51–65.

13. Ma, Q.; Yan, J.; Lin, Z.; Yu, L.; Chen, Z. Deformable self-attention for text classification. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 1570–1581.

14. Xu, Y.; Yu, Z.; Cao, W.; Chen, C.L.P. Adaptive dense ensemble model for text classification. IEEE Trans. Cybern. 2022, 52, 7513–7526.

15. Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2net: A new multi-scale backbone architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 652–662.

16. Shi, W.; Li, F.; Li, J.; Fei, H.; Ji, D. Effective token graph modeling using a novel labeling strategy for structured sentiment analysis. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (ACL), Dublin, Ireland, 22–27 May 2022; pp. 4232–4241.

17. Chen, S.; Shi, X.; Li, J.; Wu, S.; Fei, H.; Li, F.; Ji, D. Joint alignment of multi-task feature and label spaces for emotion cause pair extraction. In Proceedings of the 27th International Conference on Computational Linguistics (COLING), Gyeongju, Republic of Korea, 12–17 October 2022; pp. 6955–6965.

18. Fei, H.; Li, F.; Li, C.; Wu, S.; Li, J.; Ji, D. Inheriting the wisdom of predecessors: A multiplex cascade framework for unified aspect-based sentiment analysis. In Proceedings of the 31st International Joint Conference on Artificial Intelligence (IJCAI), Vienna, Austria, 23–29 July 2022; pp. 4121–4128.

19. Yan, H.; Dai, J.; Ji, T.; Qiu, X.; Zhang, Z. A unified generative framework for aspect-based sentiment analysis. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP), Bangkok, Thailand, 1–6 August 2021; pp. 2416–2429.

20. Yao, C.; Cai, M. A novel optimized convolutional neural network based on attention pooling for text classification. J. Phys. Conf. Ser. 2021, 1971, 012079.

21. Soni, S.; Chouhan, S.S.; Rathore, S.S. TextConvoNet: A convolutional neural network based architecture for text classification. Appl. Intell. 2022, 33, 1–12.

22. Fei, H.; Chua, T.-S.; Li, C.; Ji, D.; Zhang, M.; Ren, Y. On the robustness of aspect-based sentiment analysis: Rethinking model, data, and training. ACM Trans. Inf. Syst. 2023, 41, 1–32.

23. Chen, Y.; Dai, X.; Liu, M.; Chen, D.; Yuan, L.; Liu, Z. Dynamic convolution: Attention over convolution kernels. In Proceedings of the 14th IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 11027–11036.

24. Conneau, A.; Schwenk, H.; Cun, Y.L.; Barrault, L. Very deep convolutional networks for text classification. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics (EACL), Valencia, Spain, 3–7 April 2017; pp. 1107–1116.

25. Brauwers, G.; Frasincar, F. A general survey on attention mechanisms in deep learning. IEEE Trans. Knowl. Data Eng. 2021, 1, 3279–3298.

26. Lee, G.; Jeong, J.; Seo, S.; Kim, C.Y.; Kang, P. Sentiment classification with word localization based on weakly supervised learning with a convolutional neural network. Knowledge-Based Syst. 2018, 152, 70–82.

27. Liu, P.; Chang, S.; Huang, X.; Tang, J.; Cheung, J.C.K. Contextualized non-local neural networks for sequence learning. In Proceedings of the 33rd Innovative Applications of Artificial Intelligence Conference (AAAI), Honolulu, HI, USA, 27 January–1 February 2019; pp. 6762–6769.

28. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Advances in Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 5999–6009.

29. Ambartsoumian, A.; Popowich, F. Self-attention: A better building block for sentiment analysis neural network classifiers. In Proceedings of the 9th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis (WASSA), Brussels, Belgium, 31 October 2018; pp. 130–139.

30. Tang, S.; Chai, H.; Yao, Z.; Ding, Y.; Gao, C.; Fang, B.; Liao, Q. Affective knowledge enhanced multiple-graph fusion networks for aspect-based sentiment analysis. In Proceedings of the 7th Conference on Empirical Methods in Natural Language Processing (EMNLP), Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 5352–5362.

31. Liu, Y.; Ji, L.; Huang, R.; Ming, T.; Gao, C.; Zhang, J. An attention-gated convolutional neural network for sentence classification. Intell. Data Anal. 2019, 23, 1091–1107.

32. Choi, G.; Oh, S.; Kim, H. Improving document-level sentiment classification using importance of sentences. Entropy 2020, 22, 1336.

33. Xianlun, T.; Yingjie, C.; Jin, X.; Xinxian, Y. Deep global-attention based convolutional network with dense connections for text classification. J. China Univ. Posts Telecommun. 2020, 27, 46–55.

34. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the 32nd International Conference on Machine Learning (ICML), Lille, France, 7–9 July 2015; pp. 448–456.

35. Hinton, G.E. Rectified linear units improve restricted boltzmann machines. J. Appl. Biomech. 2017, 33, 384–387.

36. Li, X.; Wu, X.; Lu, H.; Liu, X.; Meng, H. Channel-wise gated res2net: Towards robust detection of synthetic speech attacks. In Proceedings of the Annual Conference of the International Speech Communication Association (INTERSPEECH), Brno, Czech Republic, 30 August–3 September 2021; pp. 4314–4318.

37. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

38. Li, X.; Wang, W.; Hu, X.; Yang, J. Selective kernel networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 510–519.

39. Socher, R.; Perelygin, A.; Wu, J.Y.; Chuang, J.; Manning, C.D.; Ng, A.Y.; Potts, C. Recursive deep models for semantic compositionality over a sentiment treebank. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

40. Pang, B.; Lee, L. Seeing stars: Exploiting class relationships for sentiment categorization with respect to rating scales. In Proceedings of the 43rd Annual Meeting of the Association for Computational Linguistics (ACL), Ann Arbor, MI, USA, 25–30 June 2005; pp. 115–124.

41. He, R.; McAuley, J. Ups and downs: Modeling the visual evolution of fashion trends with one-class collaborative filtering. In Proceedings of the 25th International World Wide Web Conference (WWW), Montreal, QC, Canada, 11–15 May 2016; pp. 507–517.

42. Wei, L.; Hu, D.; Zhou, W.; Tang, X.; Zhang, X.; Wang, X.; Han, J.; Hu, S. Hierarchical interaction networks with rethinking mechanism for document-level sentiment analysis. In Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), Ghent, Belgium, 14–18 September 2020; pp. 633–649.

43. Zhao, J.; Zhan, Z.; Yang, Q.; Zhang, Y.; Hu, C.; Li, Z.; Zhang, L.; He, Z. Adaptive learning of local semantic and global structure representations for text classification. In Proceedings of the 27th International Conference on Computational Linguistics (COLING), Santa Fe, NM, USA, 20–26 August 2018; pp. 2033–2043.

44. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Hovy, E. Hierarchical attention networks for document classification. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL), San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

45. Le, H.T.; Cerisara, C.; Denis, A. Do convolutional networks need to be deep for text classification? arXiv 2017, arXiv:1707.04108.

46. Chen, J.; Yu, J.; Zhao, S.; Zhang, Y. User’s review habits enhanced hierarchical neural network for document-level sentiment classification. Neural Process. Lett. 2021, 53, 2095–2111.

47. Remy, J.-B.; Tixier, A.J.-P.; Vazirgiannis, M. Bidirectional context-aware hierarchical attention network for document understanding. arXiv 2019, arXiv:1908.06006.

48. Wang, H.; Ren, J. A self-attentive hierarchical model for jointly improving text summarization and sentiment classification. In Proceedings of the 10th Asian Conference on Machine Learning (ACML), Beijing, China, 14–16 November 2018; pp. 630–645.

49. Huang, H.; Jin, Y.; Rao, R. Sentiment-aware transformer using joint training. In Proceedings of the 32nd International Conference on Tools with Artificial Intelligence (ICTAI), Baltimore, MD, USA, 9–11 November 2020; pp. 1154–1160.

50. Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global vectors for word representation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

51. Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R.; Srivastava, N. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

52. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics (NAACL), Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

53. Ding, S.; Shang, J.; Wang, S.; Sun, Y.; Tian, H.; Wu, H.; Wang, H. Ernie-Doc: A retrospective long-document modeling transformer. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and 11th International Joint Conference on Natural Language Processing (ACL-IJCNLP), Bangkok, Thailand, 1–6 August 2021; pp. 2914–2927.

54. Wu, Z.; Dai, X.Y.; Yin, C.; Huang, S.; Chen, J. Improving review representations with user attention and product attention for sentiment classification. In Proceedings of the 32nd Innovative Applications of Artificial Intelligence Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018; pp. 5989–5996.

55. Fei, H.; Ren, Y.; Wu, S.; Li, B.; Ji, D. Latent target-opinion as prior for document-level sentiment classification: A variational approach from fine-grained perspective. In Proceedings of the 30th World Wide Web Conference (WWW), Ljubljana, Slovenia, 12–23 April 2021; pp. 553–564.

| Dataset | MR | SST-2 | Yelp.F | S&O | T&G |
|---|---|---|---|---|---|
| Training | 7.1 K | 6.9 K | 650 K | 294.0 K | 165.4 K |
| Testing | 3.6 K | 1.8 K | 50 K | 1 K | 1 K |
| Classes | 2 | 2 | 5 | 5 | 5 |
| Avg-Len | 21 | 19 | 155 | 99 | 114 |
| Max-Len | 62 | 56 | 1214 | 6467 | 6224 |

| Category | Model | MR | SST-2 | Yelp.F | S&O | T&G |
|---|---|---|---|---|---|---|
| RNN | Bi-LSTM [43] | 79.7 | 83.2 | 54.8 | 71.9 | 70.7 |
| | HAN [44] | 77.1 | - | - | 72.3 | 69.1 |
| | CAHAN [47] | - | 79.8 | - | 73.0 | 70.8 |
| | HUSN [46] | 81.5 * | 82.2 | - | - | - |
| CNN | Classical CNN [11] | 81.5 | 87.2 | 65.5 | 72.0 | 70.5 |
| | AGCNN [31] | 81.9 | 87.4 | 62.4 | - | - |
| | TextConvoNet [21] | - | - | 63.1 | 71.3 * | 73.2 * |
| ResNet | VDCNN (29 layers) [24] | 72.8 | 78.2 | 64.7 | 72.3 * | 74.8 * |
| | Word-DenseNet [45] | 79.6 * | 82.2 * | 64.5 | 67.6 * | 72.6 * |
| | DenseNet with Multi-scale Feature Attention [6] | 81.5 | 84.3 * | 66.0 | 71.6 * | 74.2 * |
| Transformer | SAHSSC [48] | - | - | - | 73.6 | 72.5 |
| | Sentiment-Aware Transformer [49] | 79.5 | 84.3 | - | - | - |
| This work | CNN with PG-Res2Net and Selective fusing | 82.3 (D = 2) | 85.5 (D = 2) | 66.5 (D = 7) | 74.8 (D = 4) | 75.5 (D = 4) |

| Block type | S | MR (D = 2) | SST-2 (D = 2) | Yelp.F (D = 7) | S&O (D = 4) | T&G (D = 4) |
|---|---|---|---|---|---|---|
| with ResNet | 3 | 81.0 | 84.4 | 66.1 | 73.3 | 75.0 |
| | 4 | 82.0 | 84.6 | 65.9 | 73.6 | 74.7 |
| | 5 | 81.2 | 83.9 | 66.1 | 72.3 | 74.4 |
| | 6 | 81.2 | 84.1 | 66.2 | 72.6 | 74.6 |
| with PG-Res2Net | 3 | 81.5 | 84.6 | 65.6 | 73.8 | 74.2 |
| | 4 | 82.3 | 85.5 | 66.5 | 74.8 | 75.5 |
| | 5 | 81.7 | 84.0 | 66.3 | 72.8 | 75.2 |
| | 6 | 82.1 | 84.2 | 66.3 | 73.0 | 74.8 |
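The setup states that S was determined by the experimental results. As a small sanity check on the table above, the following sketch transcribes its accuracies and picks, for each dataset, the value of S with the highest accuracy. The dictionary layout and the function name are illustrative choices, not part of the original work.

```python
DATASETS = ["MR", "SST-2", "Yelp.F", "S&O", "T&G"]

# Accuracy (%) per block type and S, in the column order of DATASETS,
# copied from the table above.
ACCURACY = {
    "ResNet": {
        3: [81.0, 84.4, 66.1, 73.3, 75.0],
        4: [82.0, 84.6, 65.9, 73.6, 74.7],
        5: [81.2, 83.9, 66.1, 72.3, 74.4],
        6: [81.2, 84.1, 66.2, 72.6, 74.6],
    },
    "PG-Res2Net": {
        3: [81.5, 84.6, 65.6, 73.8, 74.2],
        4: [82.3, 85.5, 66.5, 74.8, 75.5],
        5: [81.7, 84.0, 66.3, 72.8, 75.2],
        6: [82.1, 84.2, 66.3, 73.0, 74.8],
    },
}


def best_s(block_type):
    """Return {dataset: S with the highest accuracy} for one block type."""
    rows = ACCURACY[block_type]
    return {name: max(rows, key=lambda s: rows[s][i])
            for i, name in enumerate(DATASETS)}


print(best_s("PG-Res2Net"))
print(best_s("ResNet"))
```

For the PG-Res2Net rows, S = 4 gives the highest accuracy on every dataset, which agrees with the value chosen in the experimental setup. For the plain ResNet rows the best S varies by dataset.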

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhou, J.; Zeng, X.; Zou, Y.; Zhu, H. Position-Wise Gated Res2Net-Based Convolutional Network with Selective Fusing for Sentiment Analysis. Entropy 2023, 25, 740. https://doi.org/10.3390/e25050740
