Improving Semantic Segmentation via Decoupled Body and Edge Information

Abstract

1. Introduction

- We propose a body-edge dual-stream CNN framework for semantic segmentation. The framework decouples the semantic feature map into two parts, the body and the edge, and processes the edge information explicitly in a separate branch.

- The body stream exploits the similarity of pixels inside the segmented object. By learning flow-field offsets that warp each pixel toward the interior of its object, the body-stream module produces a consistent feature representation for the body of the segmented object, which increases the consistency of pixel features inside the body.

- A non-edge suppression layer is added to the edge stream. Because the body stream carries higher-level information, the non-edge suppression layer uses it to suppress interference from other low-level information. As a result, the edge stream processes only edge information, and the edge information flows in the specified direction.
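The body-stream idea in the second bullet can be illustrated with a small, framework-free sketch. It assumes a per-pixel offset field `flow[i][j] = (dy, dx)`. In the paper this field is learned; here it is set by hand. The sketch samples the feature map at each displaced location with bilinear interpolation. It also borrows, as an assumption, the residual decomposition used in Li et al.'s decoupled body/edge supervision work (listed in the references), taking the edge part as the original feature minus the warped body feature. All function names are hypothetical. Deep-learning frameworks provide the sampling step as a differentiable operation, for example PyTorch's `torch.nn.functional.grid_sample`, which expects sampling locations normalized to [-1, 1].

```python
import math


def bilinear_sample(feat, y, x):
    """Bilinearly sample a 2-D map (list of rows) at real-valued (y, x).
    Coordinates outside the map are clamped to the border."""
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
    bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def warp(feat, flow):
    """Body feature: output pixel (i, j) reads feat at (i + dy, j + dx),
    where flow[i][j] = (dy, dx) is a hypothetical, hand-set offset."""
    h, w = len(feat), len(feat[0])
    return [[bilinear_sample(feat, i + flow[i][j][0], j + flow[i][j][1])
             for j in range(w)] for i in range(h)]


def decouple(feat, flow):
    """Split feat into (body, edge), assuming the residual form edge = feat - body."""
    body = warp(feat, flow)
    edge = [[f - b for f, b in zip(f_row, b_row)]
            for f_row, b_row in zip(feat, body)]
    return body, edge


if __name__ == "__main__":
    # A 3x3 map whose strong response sits at the object center (1, 1).
    feat = [[0.0, 0.0, 0.0],
            [0.0, 9.0, 0.0],
            [0.0, 0.0, 0.0]]
    # Offsets pointing every pixel at the center, i.e. toward the object interior.
    flow = [[(1 - i, 1 - j) for j in range(3)] for i in range(3)]
    body, edge = decouple(feat, flow)
    print(body)  # every entry is 9.0
    print(edge)  # -9.0 everywhere except 0.0 at the center
```

In the toy example, every pixel borrows the interior response, so the body map becomes uniform. Whatever the body cannot explain ends up in the residual edge map.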

2. Related Work

3. Method

3.1. Body Stream

3.1.1. Flow Field Generation

3.1.2. Feature Warping

3.2. Edge Stream

Procedure of Edge Processing

3.3. Fusion Module

3.4. Multitask Learning

4. Experimental Results

4.1. Experiment Details

4.1.1. Cityscapes

4.1.2. Experiment Settings

4.1.3. Evaluation Metrics

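- (1) IoU and mIoU are the standard evaluation metrics for semantic segmentation. For each class, IoU divides the number of pixels on which prediction and ground truth agree for that class (the intersection) by the number of pixels assigned to that class by either of them (the union), i.e., IoU = TP / (TP + FP + FN). mIoU is the unweighted mean of the per-class IoUs, so it reflects segmentation accuracy over all classes rather than being dominated by large classes such as road.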

- (2) Our approach aims to improve edge segmentation through a dedicated edge-processing module. To assess boundary quality, we adopt the boundary-accuracy metric of [29], which computes a relaxed F-score between the boundaries of the predicted and ground-truth labels within a small distance tolerance. This lets us compare the segmentation boundaries produced by our edge processing with those of other boundary-processing methods. We set the thresholds to 0.00088, 0.001875, 0.00375, and 0.005 of the image diagonal, corresponding to 3, 5, 9, and 12 pixels, respectively.

- (3) Segmentation becomes harder as objects get farther from the camera, and we want our model to remain accurate in that case. To evaluate segmentation of objects at different distances from the camera, we use the distance-based evaluation method of [22], which takes crops of different sizes around an approximate vanishing point as a proxy for distance. Given a predefined crop factor c (in pixels), we crop c pixels from the top and bottom and 2c pixels from the left and right. This is achieved by continuously cropping pixels around each image except the top, with the center of the final crop taken as the default approximate vanishing point. A smaller centered crop gives greater weight to smaller objects that are farther from the camera.
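Item (3) crops c pixels from the top and bottom and 2c pixels from the left and right. This amounts to a fixed window around the image center. The sketch below computes that window and applies it to a 2-D label map. The same window would be applied to both the prediction and the ground truth before computing mIoU. Function names and the crop factors in the usage loop are illustrative assumptions, not values taken from this paper.

```python
def distance_crop_window(height, width, c):
    """Half-open window (top, bottom, left, right) for crop factor c:
    c rows removed from top and bottom, 2c columns from left and right."""
    top, bottom = c, height - c
    left, right = 2 * c, width - 2 * c
    if c < 0 or top >= bottom or left >= right:
        raise ValueError(f"crop factor {c} is invalid for a {height}x{width} image")
    return top, bottom, left, right


def center_crop(label_map, c):
    """Apply the crop window to a 2-D label map given as a list of rows."""
    top, bottom, left, right = distance_crop_window(len(label_map), len(label_map[0]), c)
    return [row[left:right] for row in label_map[top:bottom]]


if __name__ == "__main__":
    # Illustrative crop factors on a 1024 x 2048 Cityscapes frame.
    for c in (0, 100, 200, 300, 400):
        top, bottom, left, right = distance_crop_window(1024, 2048, c)
        print(c, (bottom - top, right - left))
```

Cityscapes frames are 1024 × 2048 (a 2:1 ratio). Removing c rows and 2c columns therefore keeps both the aspect ratio and the center fixed, so every crop is centered on the same approximate vanishing point.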

4.2. Quantitative Evaluation

4.3. Ablation

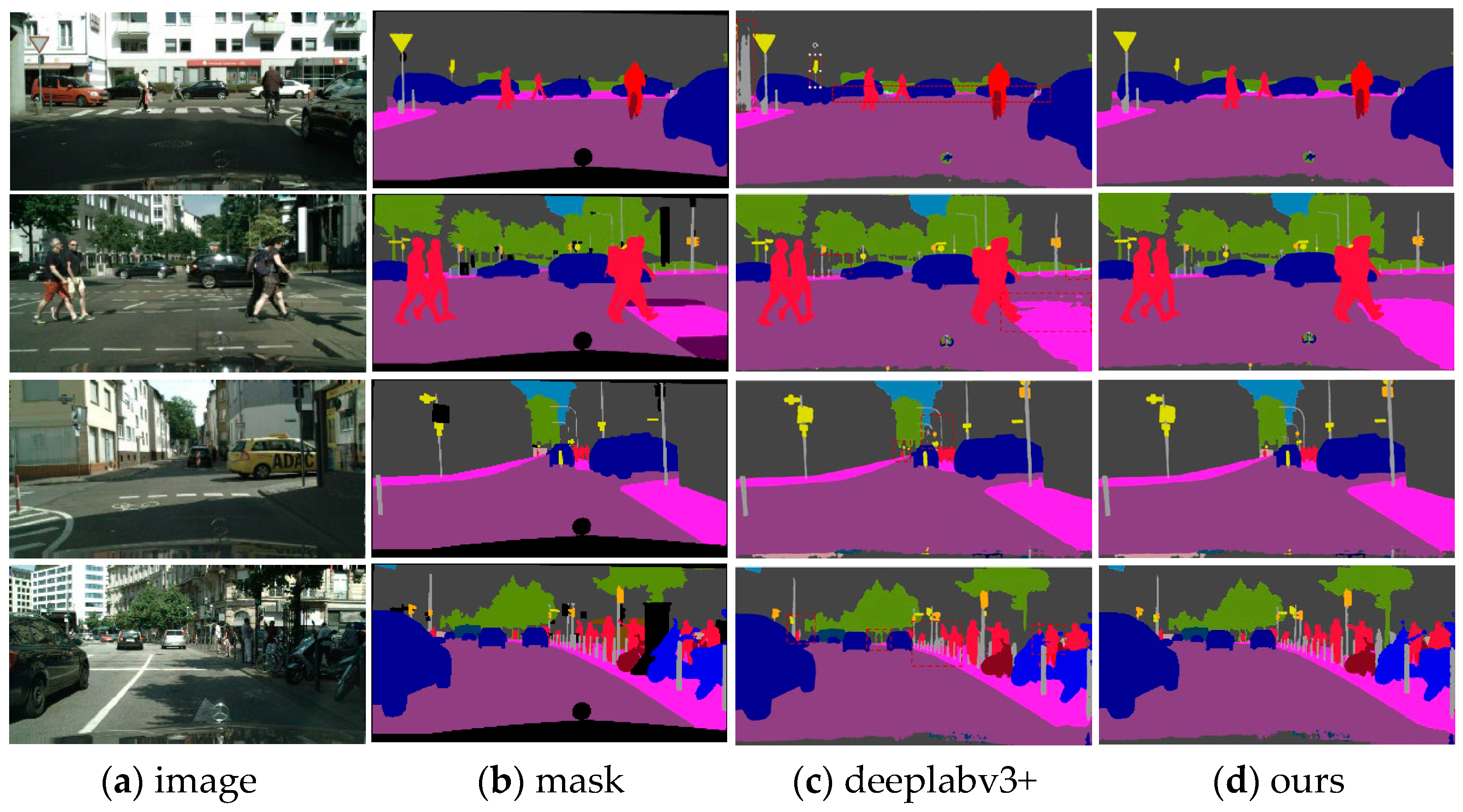

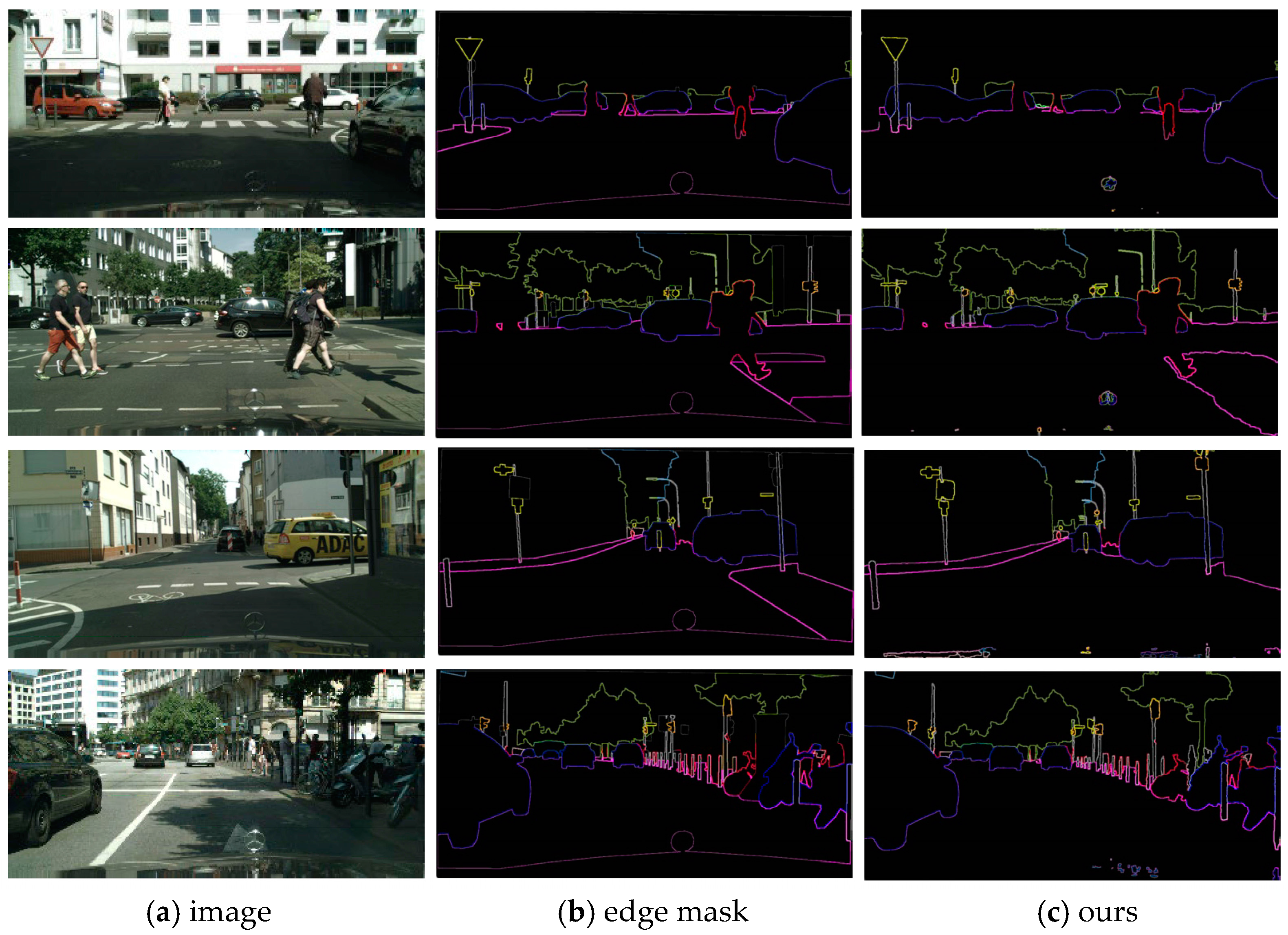

4.4. Qualitative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, X.; You, A.; Zhu, Z.; Zhao, H.; Yang, M.; Yang, K.; Tan, S. Semantic flow for fast and accurate scene parsing. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part I 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 775–793.

- Gu, Z.; Cheng, J.; Fu, H.; Zhou, K.; Hao, H.; Zhao, Y.; Zhang, T.; Gao, S.; Liu, J. CE-Net: Context encoder network for 2D medical image segmentation. IEEE Trans. Med. Imaging 2019, 38, 2281–2292.

- Chen, G.; Wu, T.; Duan, J.; Hu, Q.; Huang, D.; Li, H. CenterPNets: A multi-task shared network for traffic perception. Sensors 2023, 23, 2467.

- Liu, J.; Duan, J.; Hao, Y.; Chen, G.; Zhang, H. Semantic-guided polarization image fusion method based on a dual-discriminator GAN. Opt. Express 2022, 30, 43601–43621.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Jang, D.-H.; Chu, S.; Kim, J.; Han, B. Pooling revisited: Your receptive field is suboptimal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 549–558.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Zheng, S.; Jayasumana, S.; Romera-Paredes, B.; Vineet, V.; Su, Z.; Du, D.; Huang, C.; Torr, P.H.S. Conditional random fields as recurrent neural networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 1529–1537.

- Gong, K.; Liang, X.; Li, Y.; Chen, Y.; Yang, M.; Lin, L. Instance-level human parsing via part grouping network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 770–785.

- Bertasius, G.; Shi, J.; Torresani, L. Semantic segmentation with boundary neural fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3602–3610.

- Tao, A.; Sapra, K.; Catanzaro, B. Hierarchical multi-scale attention for semantic segmentation. arXiv 2020, arXiv:2005.10821.

- Li, X.; Li, X.; Zhang, L.; Cheng, G.; Shi, J.; Lin, Z.; Tan, S. Improving semantic segmentation via decoupled body and edge supervision. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XVII 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 435–452.

- Ling, H.; Gao, J.; Kar, A.; Chen, W.; Fidler, S. Fast interactive object annotation with Curve-GCN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5257–5266.

- Takikawa, T.; Acuna, D.; Jampani, V.; Fidler, S. Gated-SCNN: Gated shape CNNs for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5229–5238.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 3213–3223.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. Multi-path refinement networks for high-resolution semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1925–1934.

- Cheng, T.; Wang, X.; Huang, L.; Liu, W. Boundary-preserving Mask R-CNN. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIV 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 660–676.

- Lin, G.; Shen, C.; Van Den Hengel, A.; Reid, I. Efficient piecewise training of deep structured models for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3194–3203.

- Krähenbühl, P.; Koltun, V. Efficient Inference in Fully Connected CRFs with Gaussian Edge Potentials; Curran Associates Inc.: Red Hook, NY, USA, 2012.

- Chen, L.C.; Barron, J.T.; Papandreou, G.; Murphy, K.; Yuille, A.L. Semantic image segmentation with task-specific edge detection using CNNs and a discriminatively trained domain transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4545–4554.

- Yuan, Y.; Xie, J.; Chen, X.; Wang, J. SegFix: Model-agnostic boundary refinement for segmentation. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XII 16. Springer International Publishing: Berlin/Heidelberg, Germany, 2020; pp. 489–506.

- Kirillov, A.; Wu, Y.; He, K.; Girshick, R. PointRend: Image segmentation as rendering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9799–9808.

- Cheng, B.; Girshick, R.; Dollár, P.; Berg, A.C.; Kirillov, A. Boundary IoU: Improving object-centric image segmentation evaluation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 15334–15342.

- Wang, C.; Zhang, Y.; Cui, M.; Ren, P.; Yang, Y.; Xie, X.; Hua, X.-S.; Bao, H.; Xu, W. Active boundary loss for semantic segmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 2397–2405.

- Zhu, Y.; Sapra, K.; Reda, F.A.; Shih, K.J.; Newsam, S.; Tao, A.; Catanzaro, B. Improving semantic segmentation via video propagation and label relaxation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8856–8865.

- Jang, E.; Gu, S.; Poole, B. Categorical reparameterization with Gumbel-softmax. arXiv 2016, arXiv:1611.01144.

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In Proceedings of the NeurIPS Workshop, Long Beach, CA, USA, 9 December 2017.

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Perazzi, F.; Pont-Tuset, J.; McWilliams, B.; Van Gool, L.; Gross, M.; Sorkine-Hornung, A. A benchmark dataset and evaluation methodology for video object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 724–732.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–17 June 2019; pp. 3146–3154.

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

- Yuan, L.; Hou, Q.; Jiang, Z.; Feng, J.; Yan, S. VOLO: Vision outlooker for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 6575–6586.

| Method | Road | Sidewalk | Building | Wall | Fence | Pole | Traffic Light | Traffic Sign | Vegetation | Terrain | Sky | Person | Rider | Car | Truck | Bus | Train | Motorcycle | Bicycle | mIoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PSPNet [7] | 98.0 | 84.5 | 92.9 | 54.9 | 61.9 | 66.5 | 72.2 | 80.9 | 92.6 | 65.6 | 94.8 | 83.1 | 63.5 | 95.4 | 83.9 | 90.6 | 84.0 | 67.6 | 78.5 | 79.6 |
| DeepLabV3+ [28] | 98.2 | 85.3 | 92.8 | 58.4 | 65.4 | 65.6 | 70.4 | 79.2 | 92.6 | 65.2 | 94.8 | 82.4 | 63.3 | 95.3 | 83.2 | 90.7 | 84.1 | 66.1 | 77.9 | 79.7 |
| GSCNN [12] | 98.3 | 86.3 | 93.3 | 55.8 | 64.0 | 70.8 | 75.9 | 83.1 | 93.0 | 65.1 | 95.2 | 85.3 | 67.9 | 96.0 | 80.8 | 91.2 | 83.3 | 69.6 | 80.4 | 80.8 |
| DANet [30] | 98.6 | 85.8 | 92.7 | 55.3 | 60.2 | 70.2 | 75.5 | 81.2 | 93.1 | 71.2 | 92.5 | 87.7 | 74.9 | 93.8 | 78.9 | 89.7 | 91.2 | 73.8 | 78.6 | 81.3 |
| DecoupleSegNets [10] | 98.3 | 86.5 | 93.6 | 60.7 | 66.8 | 70.7 | 73.9 | 81.9 | 93.1 | 66.1 | 95.2 | 84.3 | 67.5 | 95.8 | 86.1 | 92.3 | 85.5 | 72.1 | 80.1 | 81.5 |
| TransUNet [31] | 98.7 | 86.5 | 94.0 | 57.6 | 61.3 | 70.4 | 76.6 | 81.3 | 94.4 | 71.9 | 94.8 | 88.7 | 73.6 | 94.5 | 82.1 | 90.6 | 86.1 | 73.2 | 80.2 | 81.9 |
| VOLO-D4 [32] | 98.7 | 88.5 | 93.8 | 56.2 | 62.4 | 72.6 | 79.7 | 82.2 | 94.7 | 72.3 | 95.8 | 89.7 | 76.7 | 95.6 | 78.4 | 88.2 | 85.8 | 73.8 | 79.2 | 82.3 |
| Ours | 98.7 | 86.5 | 94.2 | 57.9 | 62.6 | 71.8 | 78.3 | 83.7 | 94.8 | 72.4 | 94.6 | 87.1 | 72.3 | 96.4 | 82.3 | 94.4 | 85.7 | 73.9 | 82.1 | 82.6 |
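The mIoU column above follows the definition in Section 4.1.3: it is the unweighted mean of the 19 per-class IoUs. The sketch below computes per-class IoU as TP / (TP + FP + FN) from flattened prediction and label arrays. It then checks the "Ours" row, whose 19 per-class values average to 82.6, matching the reported mIoU. The function names are illustrative. The ignore label 255 follows the common Cityscapes training convention and is an assumption here. Benchmark tooling typically accumulates TP, FP, and FN over the whole evaluation set before dividing, rather than averaging per-image scores.

```python
import math

# Per-class IoU values of the "Ours" row in the table above (Road ... Bicycle).
OURS_ROW = [98.7, 86.5, 94.2, 57.9, 62.6, 71.8, 78.3, 83.7, 94.8, 72.4,
            94.6, 87.1, 72.3, 96.4, 82.3, 94.4, 85.7, 73.9, 82.1]


def iou_per_class(pred, label, num_classes, ignore_index=255):
    """IoU_c = TP_c / (TP_c + FP_c + FN_c) from flattened predictions and labels.
    Pixels labeled ignore_index are skipped (assumed convention)."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p, t in zip(pred, label):
        if t == ignore_index:
            continue
        if p == t:
            tp[t] += 1
        else:
            fp[p] += 1  # class p predicted where the truth is t
            fn[t] += 1  # pixel of class t that was missed
    ious = []
    for c in range(num_classes):
        union = tp[c] + fp[c] + fn[c]
        # Classes absent from both prediction and label get NaN and are skipped by mean_iou.
        ious.append(tp[c] / union if union else float("nan"))
    return ious


def mean_iou(ious):
    """Unweighted mean over classes whose IoU is defined."""
    valid = [v for v in ious if not math.isnan(v)]
    return sum(valid) / len(valid)


if __name__ == "__main__":
    print(iou_per_class([0, 0, 1, 1, 2], [0, 1, 1, 255, 2], num_classes=3))  # [0.5, 0.5, 1.0]
    print(round(mean_iou(OURS_ROW), 1))  # 82.6
```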

| Method | Baseline | Flow Warp | Edge Stream | Gradient | Edge Relaxation | Regularizer | mIoU | F-Score (Th = 3 px) | F-Score (Th = 5 px) | F-Score (Th = 9 px) | F-Score (Th = 12 px) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | √ | × | × | × | × | × | 79.7 | 69.7 | 74.7 | 78.7 | 81.1 |
|  | √ | √ | × | × | × | × | 80.6 | 70.2 | 75.5 | 80.3 | 81.2 |
|  | √ | √ | √ | × | × | × | 81.8 | 74.8 | 77.1 | 82.5 | 83.3 |
|  | √ | √ | √ | √ | × | × | 82.1 | 75.3 | 77.6 | 82.7 | 84.1 |
|  | √ | √ | √ | √ | √ | × | 82.3 | 75.9 | 78.1 | 83.3 | 84.5 |
|  | √ | √ | √ | √ | √ | √ | 82.6 | 76.5 | 78.9 | 83.6 | 84.7 |
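The pixel tolerances in the F-score columns follow from the fractional thresholds listed in Section 4.1.3. A common convention, used in the boundary-measure code distributed with the DAVIS benchmark of [29], converts a fractional threshold t into ceil(t · d) pixels, where d is the image diagonal. For 1024 × 2048 Cityscapes frames, this reproduces the 3, 5, 9, and 12 px tolerances. The sketch below assumes that convention and pairs it with a brute-force relaxed boundary F-score on label maps. A boundary pixel counts as matched if a boundary pixel of the other map lies within the tolerance (Euclidean distance). Precision and recall are the matched fractions of predicted and ground-truth boundary pixels, respectively. Function names are illustrative. The DAVIS code uses morphological dilation rather than pairwise search, and per-class evaluation details may differ from this paper's setup.

```python
import math


def tolerance_px(threshold, height, width):
    """Fractional threshold -> pixel tolerance, assuming ceil(t * image diagonal)."""
    if threshold >= 1:
        return int(threshold)  # already given in pixels
    return math.ceil(threshold * math.hypot(height, width))


def boundary_pixels(label_map):
    """Pixels whose label differs from at least one 4-neighbour."""
    h, w = len(label_map), len(label_map[0])
    points = []
    for i in range(h):
        for j in range(w):
            for di, dj in ((0, 1), (1, 0), (0, -1), (-1, 0)):
                ni, nj = i + di, j + dj
                if 0 <= ni < h and 0 <= nj < w and label_map[ni][nj] != label_map[i][j]:
                    points.append((i, j))
                    break
    return points


def boundary_f_score(pred, gt, tol):
    """Relaxed boundary F-score with a Euclidean tolerance of tol pixels."""
    pred_b, gt_b = boundary_pixels(pred), boundary_pixels(gt)
    if not pred_b and not gt_b:
        return 1.0
    if not pred_b or not gt_b:
        return 0.0

    def matched(src, dst):
        # Count points in src that have some point of dst within tol.
        return sum(1 for (i, j) in src
                   if any(math.hypot(i - a, j - b) <= tol for (a, b) in dst))

    precision = matched(pred_b, gt_b) / len(pred_b)
    recall = matched(gt_b, pred_b) / len(gt_b)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print([tolerance_px(t, 1024, 2048) for t in (0.00088, 0.001875, 0.00375, 0.005)])  # [3, 5, 9, 12]
    gt = [[0, 0, 1, 1] for _ in range(4)]
    pred = [[0, 0, 0, 1] for _ in range(4)]  # boundary shifted right by one pixel
    print(boundary_f_score(pred, gt, 0), boundary_f_score(pred, gt, 1))  # 0.5 1.0
```

The toy case shows why the metric is "relaxed": a one-pixel boundary shift halves the score at zero tolerance but is fully forgiven once the tolerance reaches one pixel.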

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, L.; Yao, A.; Duan, J. Improving Semantic Segmentation via Decoupled Body and Edge Information. Entropy 2023, 25, 891. https://doi.org/10.3390/e25060891

Yu L, Yao A, Duan J. Improving Semantic Segmentation via Decoupled Body and Edge Information. Entropy. 2023; 25(6):891. https://doi.org/10.3390/e25060891

Chicago/Turabian Style: Yu, Lintao, Anni Yao, and Jin Duan. 2023. "Improving Semantic Segmentation via Decoupled Body and Edge Information" Entropy 25, no. 6: 891. https://doi.org/10.3390/e25060891

APA Style: Yu, L., Yao, A., & Duan, J. (2023). Improving Semantic Segmentation via Decoupled Body and Edge Information. Entropy, 25(6), 891. https://doi.org/10.3390/e25060891