Memory Effects, Multiple Time Scales and Local Stability in Langevin Models of the S&P500 Market Correlation

Abstract

1. Introduction

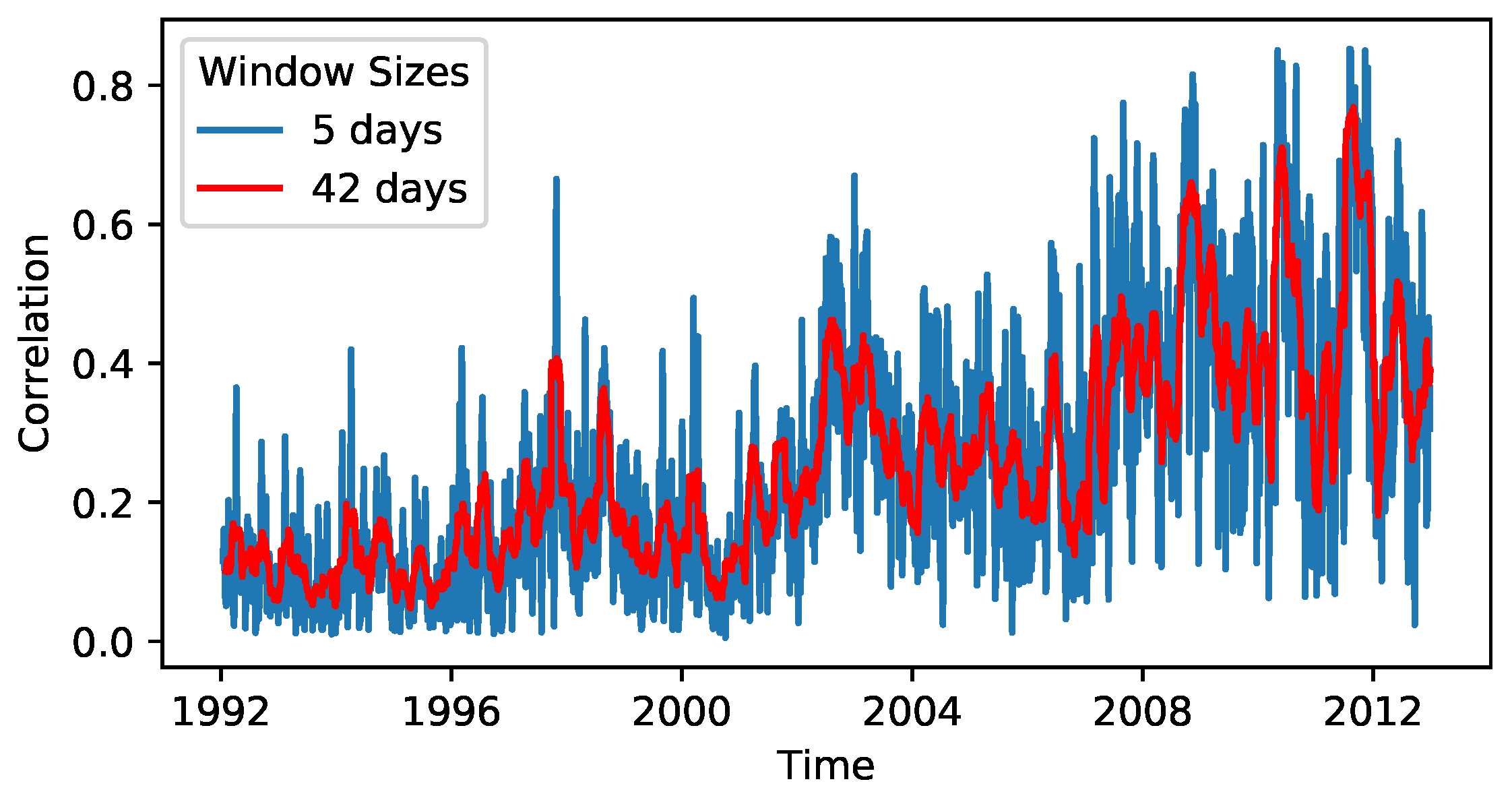

2. Data and Methods

2.1. Data Preparation

2.2. Bayesian Statistics

2.3. Fitting a Generalised Langevin Equation with Memory Kernel

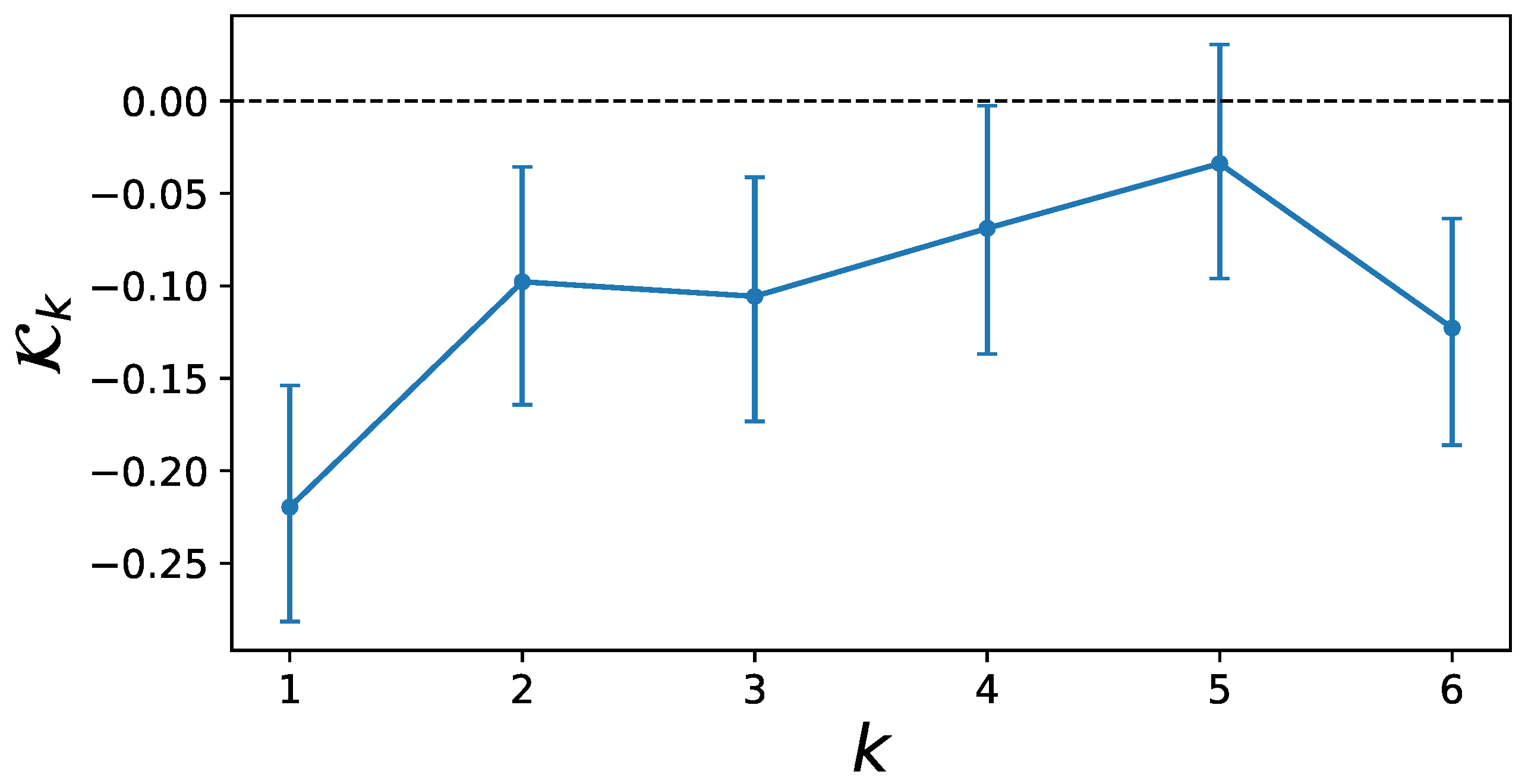

2.4. Resilience Estimation

3. Results

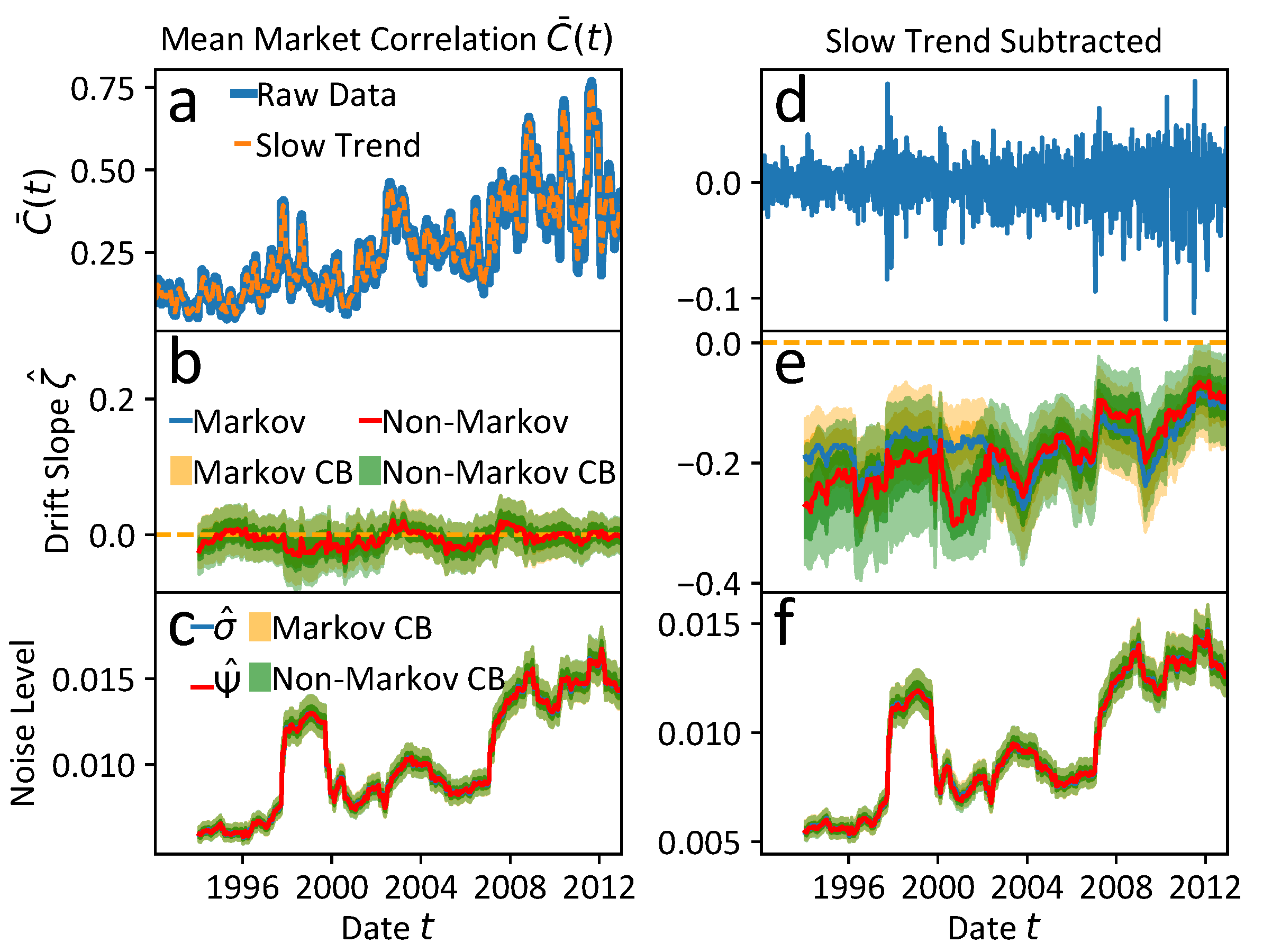

3.1. Estimated GLE Model

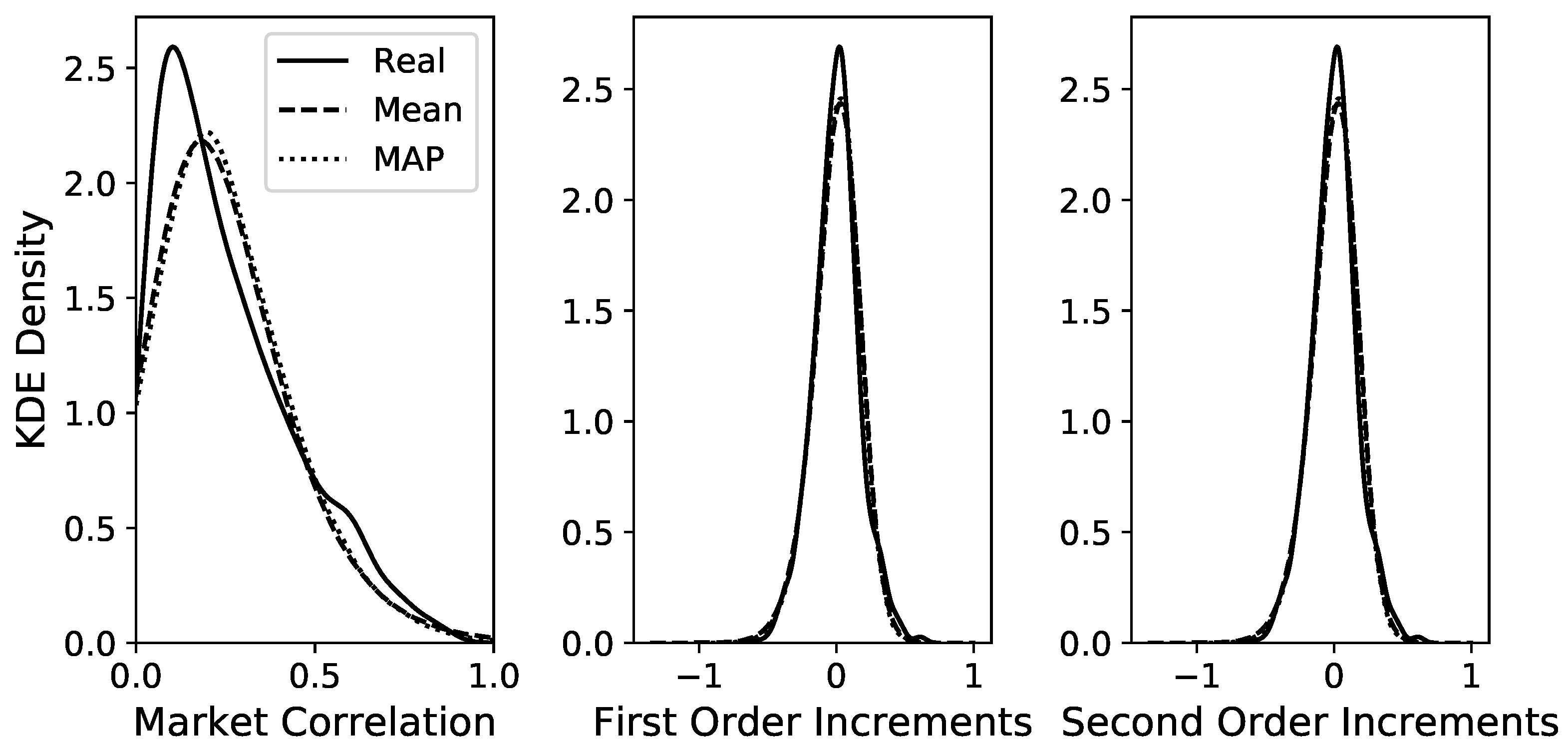

3.1.1. Goodness-of-Fit

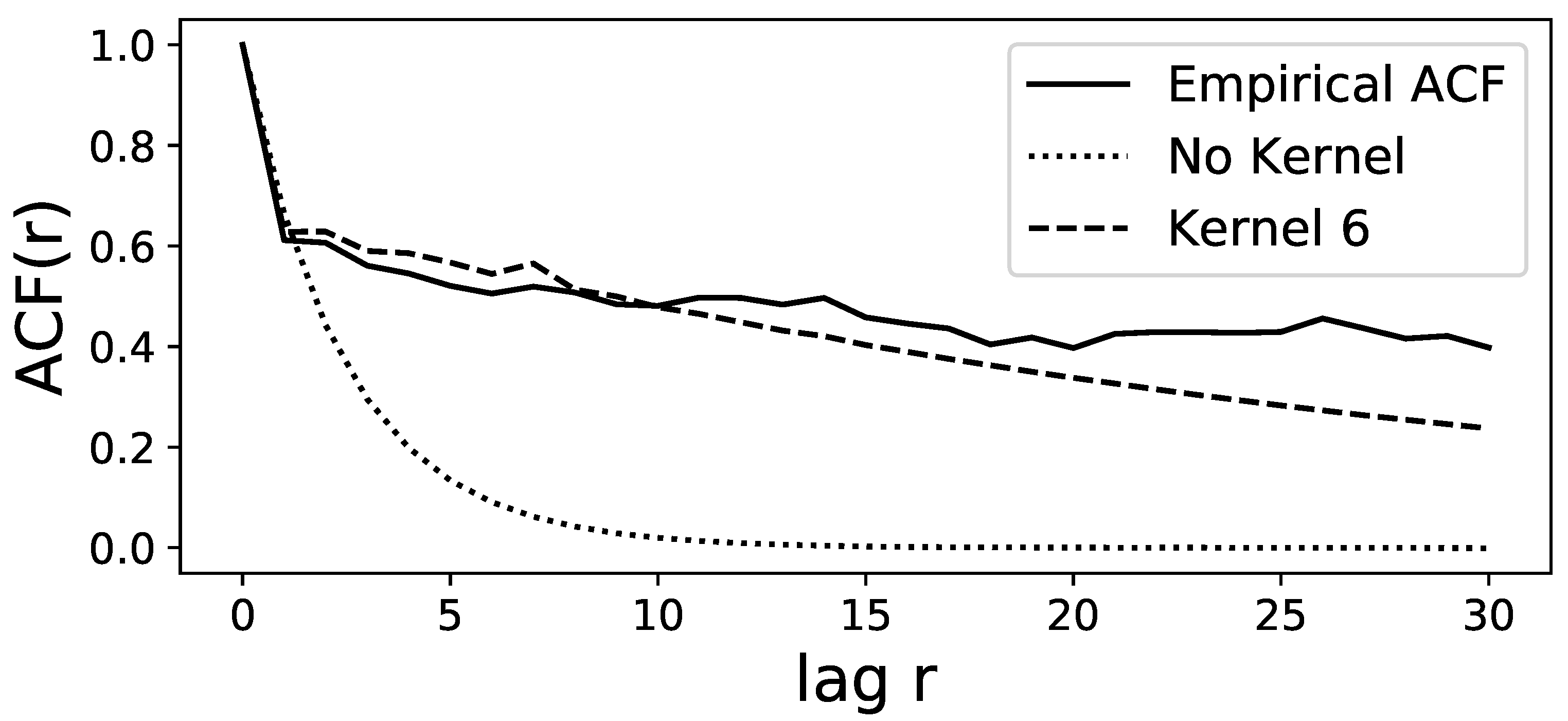

3.1.2. Estimated Memory Kernel

3.1.3. Prediction via the GLE with Kernel Length 3

3.2. Hidden Slow Time Scale and Non-Markovianity

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ACF | Autocorrelation function |
| B-tipping | Bifurcation-induced tipping |
| CI | Credible intervals |
| GLE | Generalised Langevin Equation |
| LE | Langevin Equation |
| MAP | Maximum a posteriori (estimation) |
| MCMC | Markov chain Monte Carlo |
| N-tipping | Noise-induced tipping |

Appendix A. GLE: Overlapping Windows for the Mean Correlation

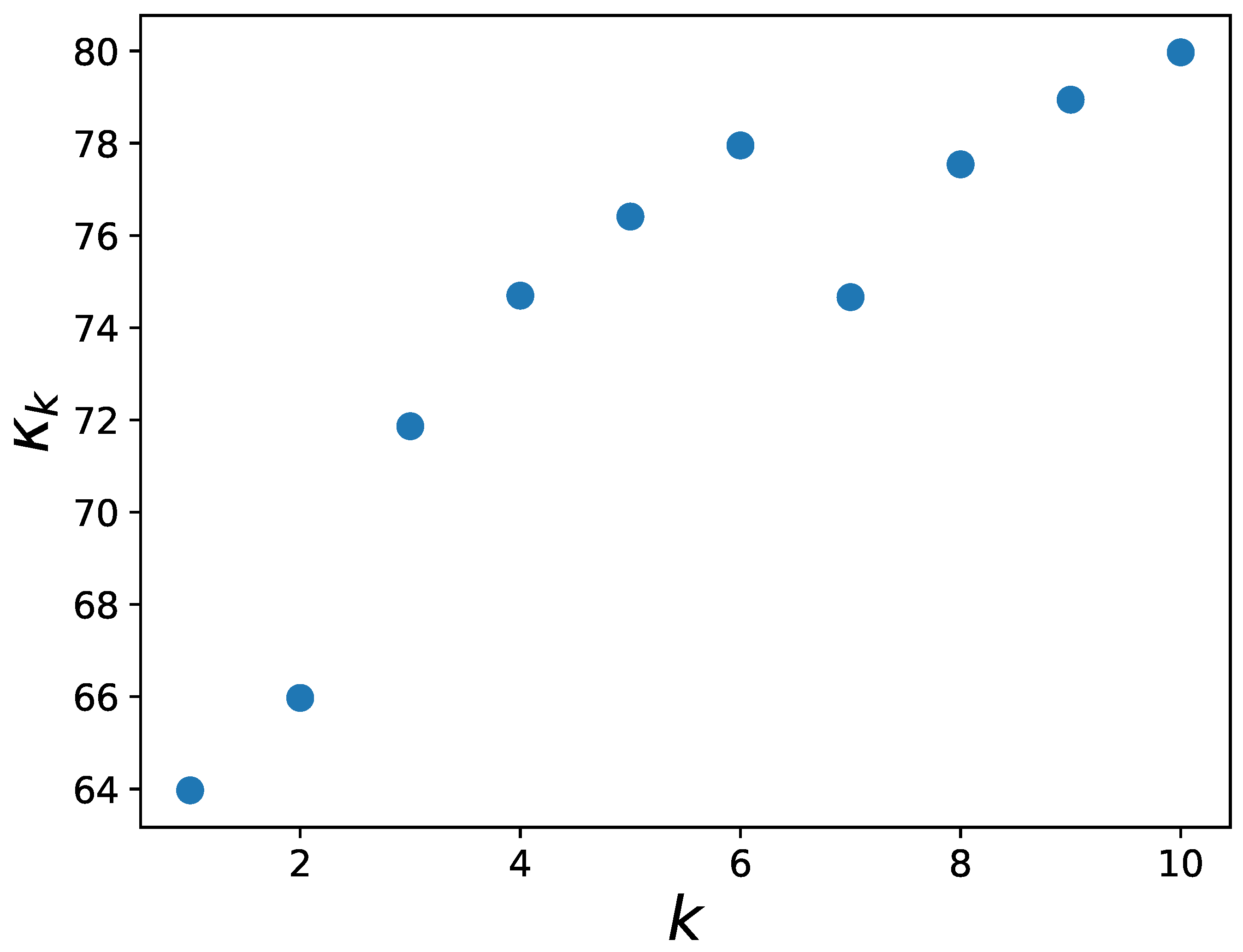

Appendix B. GLE: Selection of Reasonable Kernel Length

Appendix C. LE: Increment Distribution

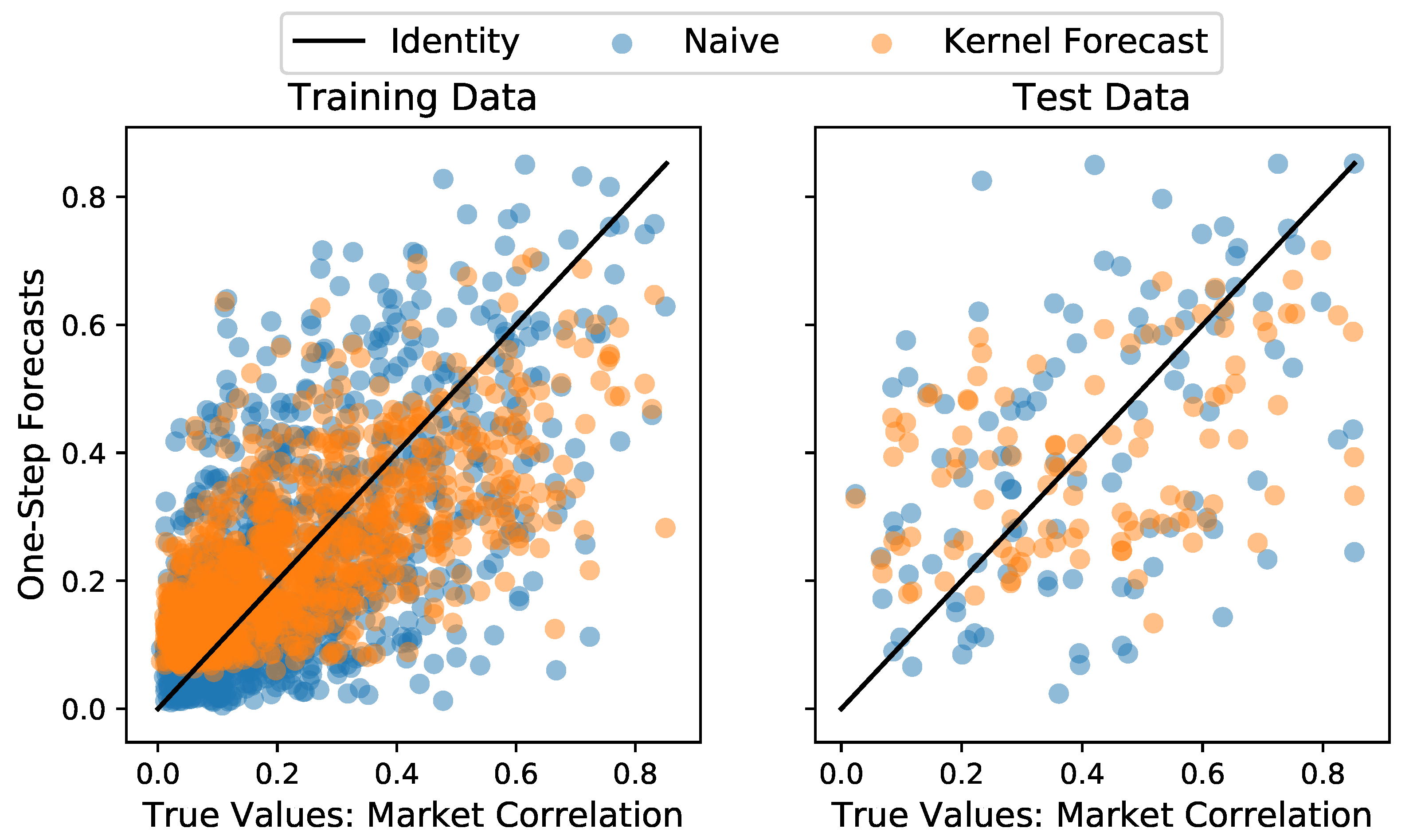

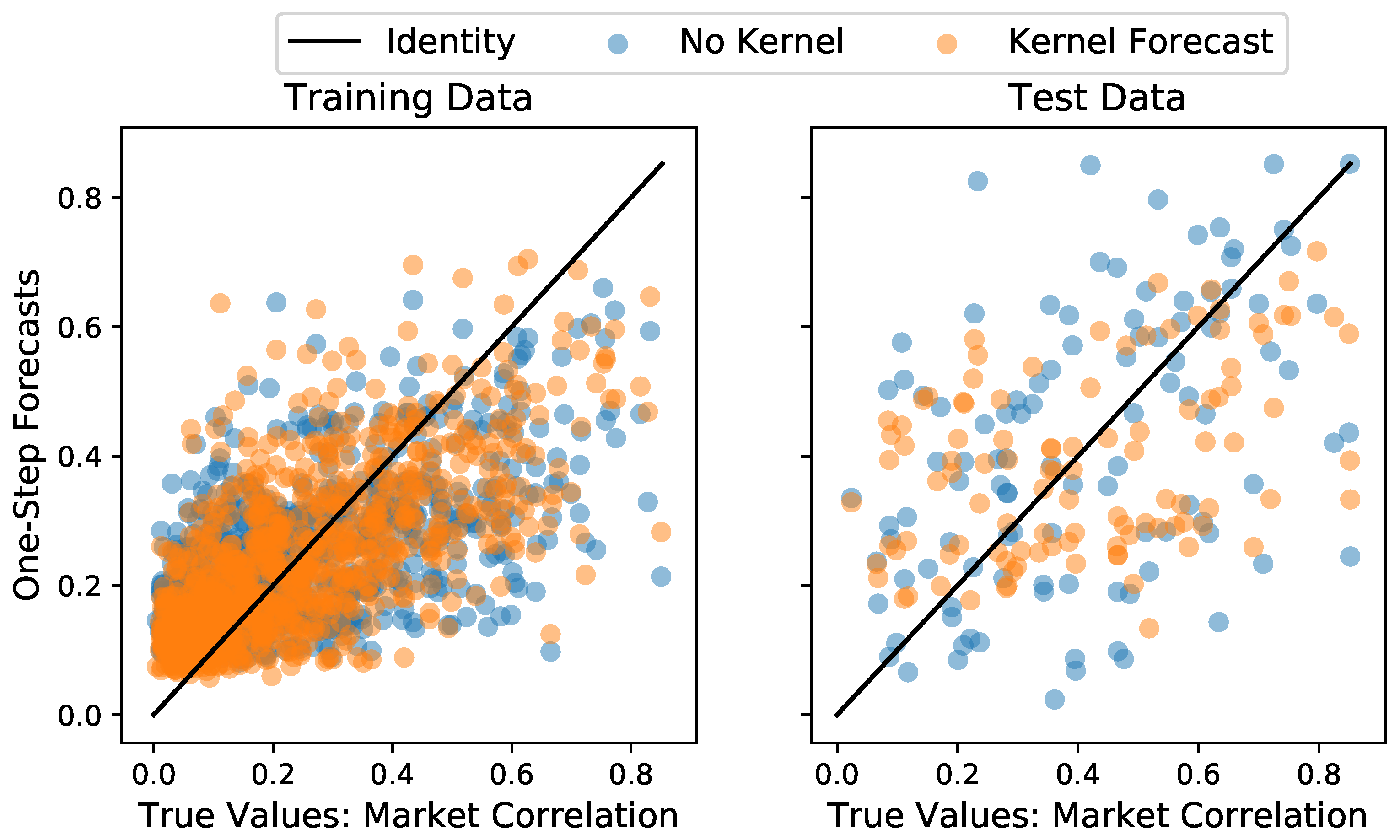

Appendix D. GLE: Prediction

Appendix E. Resilience Analyses

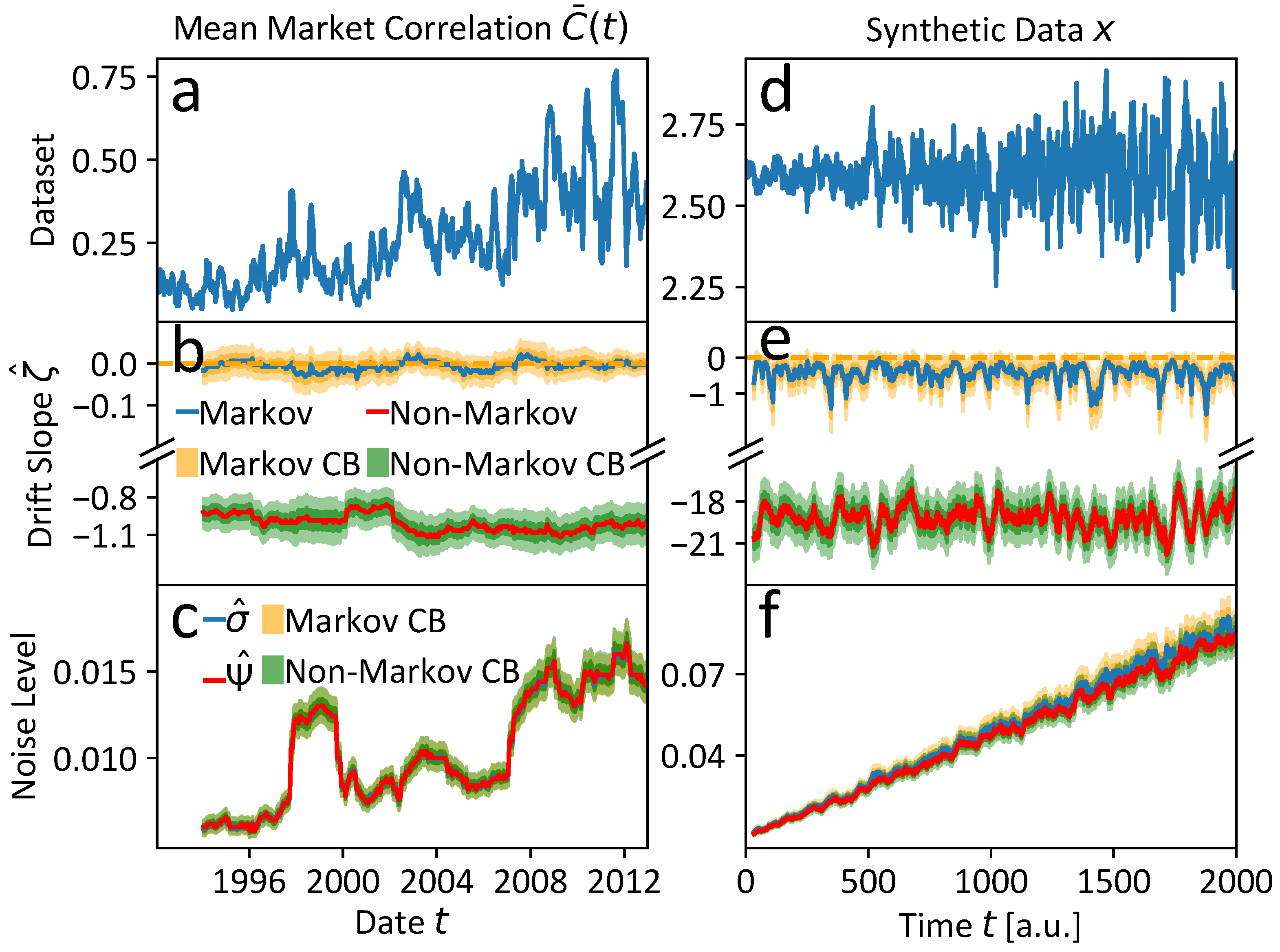

Appendix E.1. Synthetic Time Series

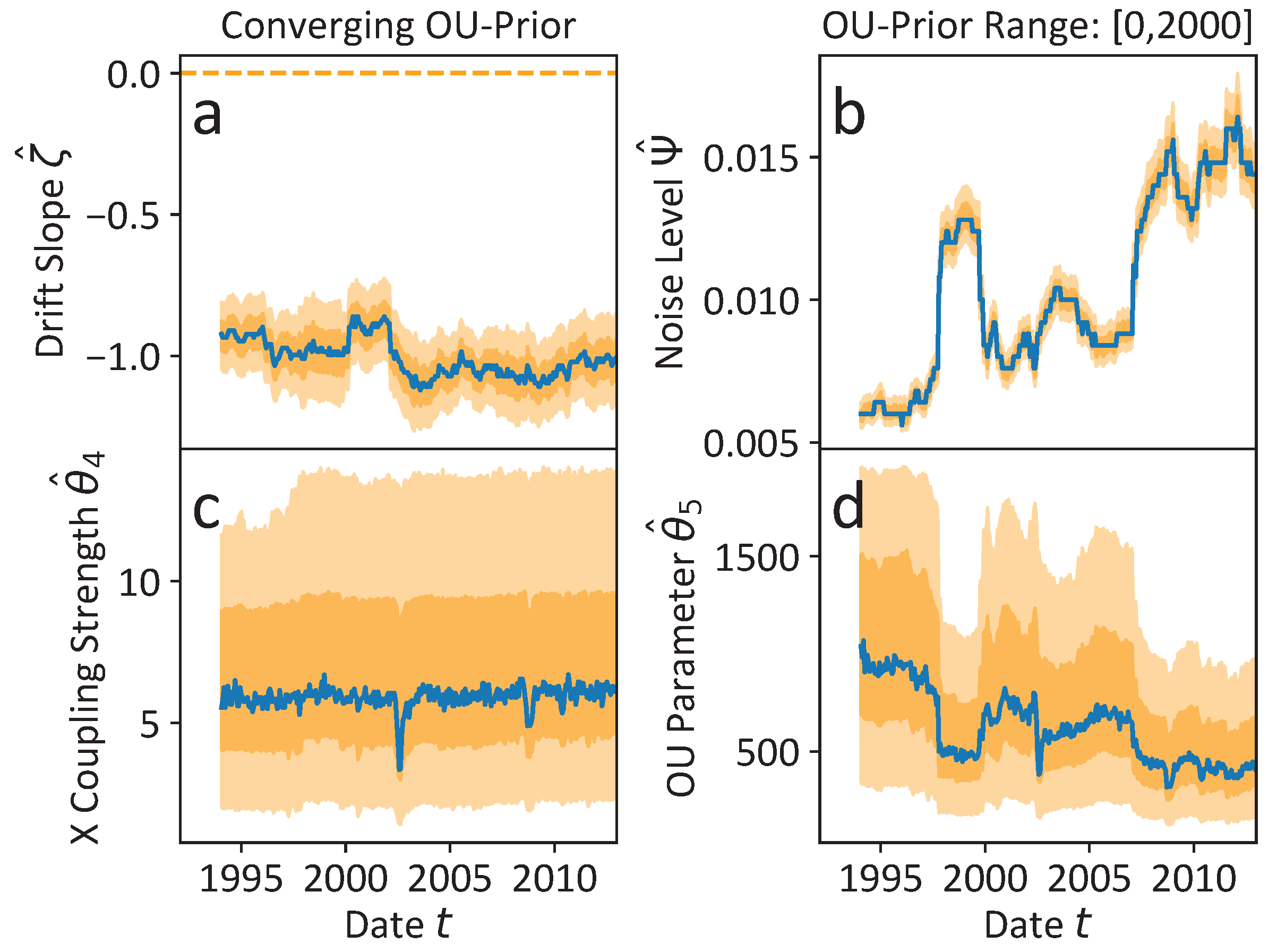

Appendix E.2. Additional Results

Appendix E.3. Parameter Documentation

| Figure | Model | Rolling Window Size | Rolling Window Shift | MCMC Walkers | MCMC Steps | MCMC Burn-In |
|---|---|---|---|---|---|---|
| Figure 6a–c | Markov | 500 | 15 | 50 | 15,000 | 200 |
| Figure 6a–c | Non-Markov | 500 | 15 | 50 | 20,000 | 200 |
| Figure 6d–f | Markov | 500 | 15 | 50 | 20,000 | 200 |
| Figure 6d–f | Non-Markov | 500 | 15 | 50 | 30,000 | 200 |
| Figure A5a–c | Non-Markov | 500 | 15 | 50 | 20,000 | 200 |
| Figure A5d–f | Markov | 500 | 15 | 50 | 15,000 | 200 |
| Figure A5d–f | Non-Markov | 500 | 15 | 50 | 20,000 | 200 |
| Figure A6a–d | Non-Markov | 500 | 15 | 50 | 30,000 | 200 |
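To make the rolling-window settings in the table concrete, the following minimal Python sketch enumerates the index ranges that a window of size 500 shifted by 15 samples would cover. The function name `rolling_windows`, the series length of 1000, and the convention of keeping only full windows are illustrative assumptions and are not taken from the original analysis code. The retained-sample count likewise assumes that the burn-in is discarded per walker, which the table does not state.

```python
def rolling_windows(n_samples, size=500, shift=15):
    """Return (start, stop) index pairs of all full windows.

    Assumption: partial windows at the end of the series are dropped.
    """
    return [(start, start + size)
            for start in range(0, n_samples - size + 1, shift)]


# Hypothetical series length, chosen only for illustration.
windows = rolling_windows(1000)

# Assumption: burn-in steps are removed from each walker's chain,
# here for the 50 walkers, 15,000 steps and burn-in of 200 listed above.
walkers, steps, burn_in = 50, 15_000, 200
retained_samples = walkers * (steps - burn_in)

print(len(windows), windows[0], windows[-1], retained_samples)
```

Each `(start, stop)` pair can be used to slice a time series, so that one model fit is performed per window.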

| Figure | Model | Prior (Range) | ||||||

|---|---|---|---|---|---|---|---|---|

| TSS | ||||||||

| Figure 6a–c | Markov | — | — | |||||

| Figure 6a–c | Non-Markov | |||||||

| Figure 6d–f | Markov | — | — | |||||

| Figure 6d–f | Non-Markov | |||||||

| Figure A5a–c | Non-Markov | |||||||

| Figure A5d–f | Markov | — | — | |||||

| Figure A5d–f | Non-Markov | |||||||

| Figure A6a–d | Non-Markov | |||||||

| Model | In-Sample (80%) | In-Sample (85%) | In-Sample (90%) | Out-of-Sample (80%) | Out-of-Sample (85%) | Out-of-Sample (90%) |
|---|---|---|---|---|---|---|
| Naive | 0.07 | 0.15 | 0.19 | −0.21 | −0.15 | −0.11 |
| LE | 0.29 | 0.32 | 0.35 | −0.14 | −0.01 | 0.08 |
| GLE | 0.39 | 0.42 | 0.45 | 0.01 | 0.08 | 0.10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wand, T.; Heßler, M.; Kamps, O. Memory Effects, Multiple Time Scales and Local Stability in Langevin Models of the S&P500 Market Correlation. Entropy 2023, 25, 1257. https://doi.org/10.3390/e25091257
