1. Introduction

With the progress of society and changes in the economic environment, investment and financial management have become mainstream activities in modern society. Among the many investment options, such as bonds, stocks, funds, and futures, stock trading is a popular choice. Within stock trading, quantitative trading is an important area that uses extensive historical data and market information to predict stock price trends [1,2,3]. Through these predictions, investors can obtain earlier signals about stock price movements and time their buying or selling actions appropriately [4,5]. This behavior is sometimes likened to insider trading, since investors who act on their predictions can move earlier than others and gain better trading opportunities. Forecasting stock prices is therefore a crucial issue in quantitative trading. In recent years, with the development of artificial intelligence, a growing body of research has reported that neural network models outperform traditional time series methods in stock price prediction [6,7,8,9].

Among neural network models, deep learning models are currently the most important in artificial intelligence, especially for time series forecasting [10,11,12,13]. The LSTM model [14] is one of the most commonly used deep learning models and has shown outstanding performance in time series forecasting [15,16,17,18,19,20,21,22,23,24]. LSTM was widely used in natural language processing (NLP) before being applied to time series forecasting, where it also achieved good results. After the Transformer model [25] achieved breakthroughs in NLP, researchers began to explore whether it could likewise be applied to time series forecasting. Many studies have since reported that the Transformer can outperform LSTM in time series forecasting, suggesting its potential to replace the LSTM model [26,27,28,29,30,31,32,33]. This has recently become one of the most noteworthy developments in the field.

Traditional LSTM and recent Transformer models focus only on the temporal relationships between data points, predicting future values by capturing dependencies between earlier and later observations. They overlook the spatial relationships among variables, which in the context of stocks can be complex and dynamic [34,35]. For example, some companies may hold important positions in Apple's supply chain at certain times, while other companies may take over these positions at other times. The relationships among these companies are therefore not fixed and may change over time, so considering the interactions among multiple stocks is crucial for stock price forecasting. Nevertheless, most advanced models concentrate primarily on the time series characteristics of individual stocks and pay relatively little attention to the interactions among multiple stocks.

Some scholars have, however, proposed methods that integrate spatial and temporal concepts, such as the spatiotemporal attention-based convolutional network (STACN) model introduced by Lin et al. [36]. STACN uses a convolutional neural network to extract spatial feature maps from news headlines to capture the market structure of stocks. Simultaneously, an LSTM extracts temporal features from historical stock prices and relevant fundamental information to capture price variations and trends. Finally, STACN employs attention mechanisms to learn and select the most important features, using spatiotemporal information for stock price prediction. Hou et al. [37] also proposed an approach that incorporates the graph-structured relationships between companies into the time series forecasting task. The method first uses a variational autoencoder (VAE) to learn low-dimensional latent features from companies' fundamental data. It then calculates the Euclidean distances between companies to build a graph network and explore inter-company correlations. Next, a hybrid deep neural network consisting of a graph convolutional network and a long short-term memory network (GCN-LSTM) models the graph-structured interactions among stocks and their price fluctuations over time. Hou et al. [37] call this method, which combines spatial and temporal information for stock price prediction, VAE-GCN-LSTM. Both Lin et al. [36] and Hou et al. [37] built their approaches on the LSTM model and did not use the Transformer model.

Based on the research background and motivation above, this study explores the impact of spatiotemporal relationships on stock price prediction. To capture the relationships between different stocks at different time points, we employ the Spacetimeformer model [38], which incorporates spatiotemporal mechanisms, as the predictive model for stock prices. Additionally, to avoid the influence of cross-day prediction, we adopt a daily moving window approach in our experimental design. The main focus of this research is multi-time-step forecasting of individual stock prices: the Spacetimeformer model predicts the stock price every ten minutes over the next hour (six steps in total). Finally, we compare the predictions of the Spacetimeformer model, which integrates spatiotemporal concepts, with those of a Transformer model that considers only temporal information and of the more mature LSTM model. The goal is to investigate the performance differences between models with and without spatial considerations and to assess the impact of spatiotemporal mechanisms on prediction accuracy.

2. Materials and Methods

2.1. Datasets

The data for this research come from the stock information of the Taiwan Stock Exchange provided by the stock trading system of Yuanta Securities Co., Ltd. (

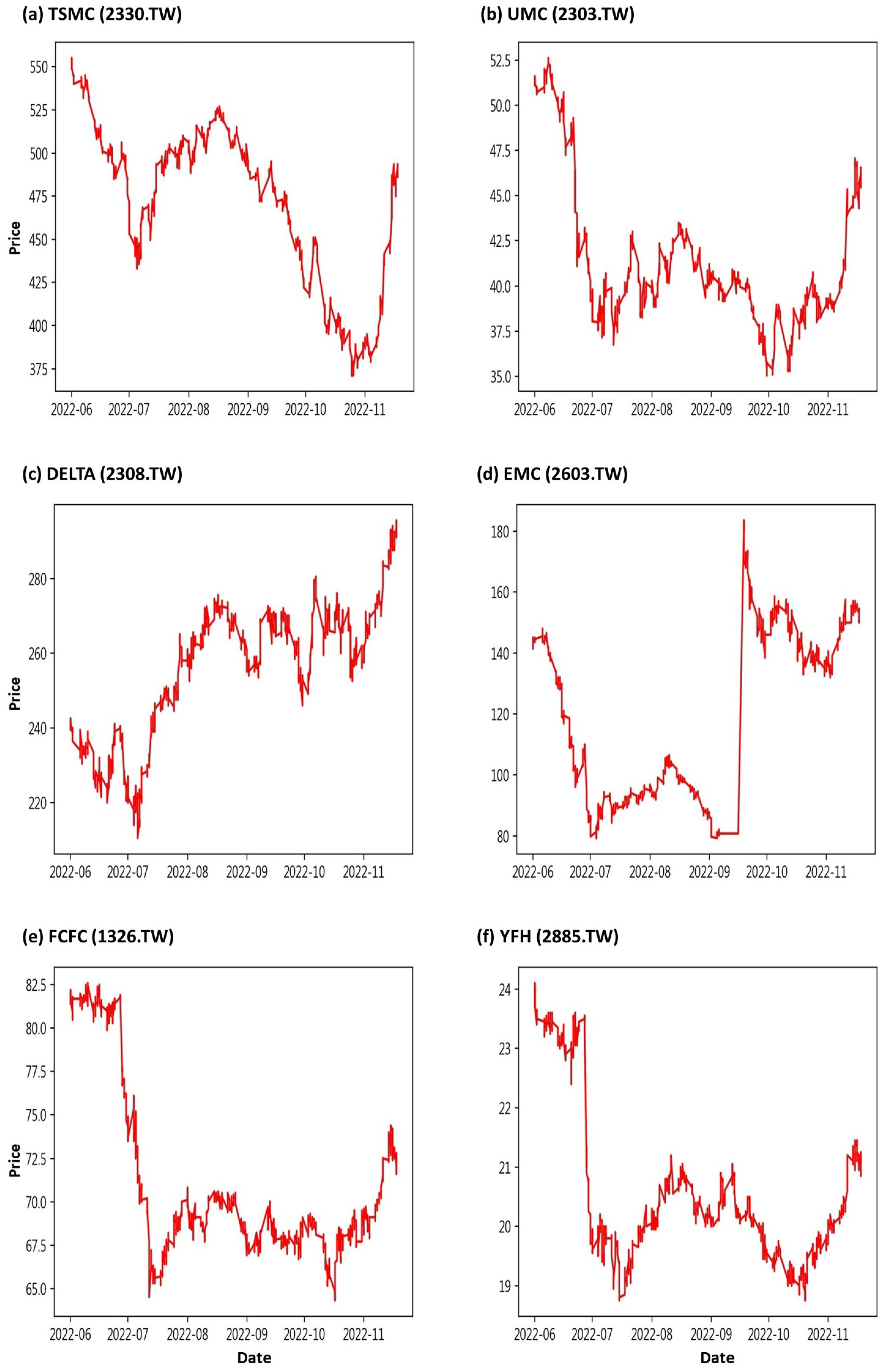

https://www.yuanta.com.tw/eYuanta/Securities/Stock, accessed on 1 May 2022), which is updated every minute. In order to effectively demonstrate the performance of the Spacetimeformer model, we selected six important constituent stocks from the constituent stocks of the Taiwan 50 Index (as shown in

Table 1) announced in June 2022 as our research objects. They are Taiwan Semiconductor Manufacturing Co., Ltd. (TSMC, 2330.TW), United Microelectronics Corporation (UMC, 2303.TW), Delta Electronics, Inc., (DELTA, 2308.TW), Evergreen Marine Corporation (EMC, 2603.TW), Formosa Chemicals & Fibre Corporation (FCFC, 1326.TW), and Yuanta Financial Holding Co., Ltd. (YFH, 2885.TW).

Among these companies, TSMC and UMC are the two leaders of Taiwan's foundry industry and have long ranked among the top five in the global semiconductor foundry field. In the 2022 global wafer foundry revenue ranking, TSMC ranked first and UMC third worldwide. The operating conditions of these two companies affect both Taiwan's economic development and the trend of Taiwan stocks. DELTA is a leading manufacturer of power management and thermal management solutions and holds a world-class position in many product fields. It has been included in the Dow Jones Sustainability Indexes for twelve consecutive years (2011–2022). EMC has set many records in the history of container shipping and holds a leading position worldwide in terms of fleet size and container carrying capacity. FCFC is one of the main members of the Formosa Plastics Group and holds a leading position in Taiwan and Asia in textiles, fiber products, and petrochemical products. YFH is a financial holding company that develops along the dual axes of securities investment and commercial banking. It is among the market leaders in each of its businesses and has long been recognized by investors.

We use the ten-minute-interval trading prices of these six stocks as input data for the model, covering 1 June 2022 to 18 November 2022. To avoid the sharp price fluctuations caused by the opening and closing of the market, we restrict the trading time interval of this study from the regular session [9:00, 13:30] to [9:01, 13:21]. That is, we consider only twenty-seven cut-off time points per day: 9:01, 9:11, …, and 13:21. The descriptive statistics of the stock prices of the six companies are shown in Table 2, where S.D. denotes the standard deviation. The average stock prices of TSMC, DELTA, and EMC are relatively high, and their price variations are correspondingly large. The stock price trends are shown in Figure 1. EMC's price series shows a break on 19 September 2022, when the stock was re-listed after a capital reduction, while the trends of the other stocks closely resemble a double-bottom pattern. We therefore suspect that there is a complex relationship among these stocks [39], which we refer to as spatial correlation.
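The twenty-seven daily cut-off points can be generated mechanically. The sketch below is a minimal illustration in Python; the function names `intraday_grid` and `sample_on_grid` are hypothetical, and it assumes (this is not stated in the source) that each ten-minute observation is the price recorded at exactly the cut-off minute in the minute-level feed.

```python
from datetime import datetime, timedelta

def intraday_grid(start="09:01", end="13:21", step_minutes=10):
    """Return the HH:MM cut-off points from start to end (inclusive), every step_minutes."""
    fmt = "%H:%M"
    t = datetime.strptime(start, fmt)
    stop = datetime.strptime(end, fmt)
    points = []
    while t <= stop:
        points.append(t.strftime(fmt))
        t += timedelta(minutes=step_minutes)
    return points

def sample_on_grid(minute_prices, grid):
    """Hypothetical helper: keep only the minute-level prices recorded at the cut-off points.

    Assumes minute_prices maps "HH:MM" -> price for a single trading day.
    """
    return [minute_prices[p] for p in grid]

grid = intraday_grid()
print(len(grid), grid[0], grid[1], grid[-1])  # 27 09:01 09:11 13:21
```

Since 13:21 is 260 minutes after 9:01, the grid has 260 / 10 + 1 = 27 points, matching the count given above.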

2.2. A Brief Review of LSTM Model

Predicting stock prices with LSTM models has gained significant attention in financial markets because of their ability to capture complex temporal dependencies in historical price data. LSTM models are a type of recurrent neural network designed to overcome the vanishing gradient problem, which makes them well suited to time series forecasting. An LSTM model is composed of distinct layers, each playing a role in processing sequential data, a design that is particularly effective for capturing intricate temporal relationships. A fundamental characteristic of LSTM models is that multiple LSTM cells (shown in Figure 2) can be stacked on top of one another in layers. This stacking enables hierarchical learning of patterns and relationships within sequential data, making it a robust choice for various time series tasks.

Within each LSTM cell, a series of gates regulates the flow of information through the sequence. They control how information enters the network, how it is stored in the cell state, and how it is ultimately released for prediction. The forget gate, in particular, filters out information that the model has learned to treat as irrelevant or outdated, retaining only information that is useful for the learning objective. This selective retention and discarding allows LSTM models to focus on the most meaningful aspects of the input sequence, improving their ability to make accurate predictions and capture underlying patterns.

Let $x_t$ be the input at time $t$. We also use $h_{t-1}$ and $c_{t-1}$ to denote the previous hidden state and cell state, respectively, at time $t-1$. The first stage of the LSTM architecture is the forget gate. This gate determines the relevance of each element of the cell state, acting as the network's filter for long-term memory, based on both the previous hidden state and the new input. The network in the forget gate is trained to output values close to 0 for information it deems irrelevant and values close to 1 for information it considers relevant. This allows the LSTM to selectively retain or discard information in the cell state. The forget gate activation is given by

$$f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right),$$

where $\sigma$ is the sigmoid function, $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input, and $W_f$ and $b_f$ are the weight matrix and bias vector of the forget gate, which are learned during training.

In the next phase of the LSTM process, the input gate and the new memory network determine what new information should be written into the network's long-term memory, the cell state. This decision is based on both the previous hidden state and the current input. As with the forget gate, the output value of the input gate is meaningful: a low value indicates that the corresponding element of the cell state should not be updated. The new memory vector, in turn, specifies the candidate adjustment for each component of the cell state, determining how the memory is modified in light of the most recent data. Let $W_i$, $b_i$, $W_c$, and $b_c$ be the corresponding weight matrices and bias vectors. The input gate and the new memory network are

$$i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right)$$

and

$$\tilde{c}_t = \tanh\left(W_c [h_{t-1}, x_t] + b_c\right),$$

respectively. The cell state is then updated by

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t,$$

where ⊙ denotes the Hadamard product.

In the final phase of an LSTM step, the new hidden state is derived from the updated cell state, the previous hidden state, and the current input. The output gate decides which parts of the updated cell state should contribute to the new hidden state, so that only the most relevant information is passed on. The output gate is calculated as

$$o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right),$$

where $W_o$ and $b_o$ are the weight matrix and bias vector of the output gate, respectively. The updated cell state is squashed to $[-1, 1]$ by the tanh activation function, and the new hidden state is given by

$$h_t = o_t \odot \tanh(c_t).$$
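The four gate computations of an LSTM step can be traced in a few lines of code. The following is a minimal, dependency-free sketch of a single LSTM step in Python, assuming the common formulation in which each gate acts on the concatenation of $h_{t-1}$ and $x_t$. The helper names (`lstm_cell`, `init_params`) and the small random initialization are illustrative choices, not the configuration used in this study; a practical model would be built with a deep learning framework.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a weight matrix W (list of rows) and a bias vector b."""
    return [sum(w * x for w, x in zip(row, v)) + b_k for row, b_k in zip(W, b)]

def lstm_cell(x_t, h_prev, c_prev, params):
    """One LSTM step: forget gate, input gate, new memory, cell update, output gate."""
    v = h_prev + x_t  # list concatenation [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(params["W_f"], params["b_f"], v)]
    i = [sigmoid(z) for z in affine(params["W_i"], params["b_i"], v)]
    c_tilde = [math.tanh(z) for z in affine(params["W_c"], params["b_c"], v)]
    o = [sigmoid(z) for z in affine(params["W_o"], params["b_o"], v)]
    # Element-wise (Hadamard) products for the cell-state update and the new hidden state.
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases for the four gates (illustrative only)."""
    rng = random.Random(seed)
    n_in = hidden_size + input_size
    params = {}
    for gate in ("f", "i", "c", "o"):
        params[f"W_{gate}"] = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                               for _ in range(hidden_size)]
        params[f"b_{gate}"] = [0.0] * hidden_size
    return params

params = init_params(input_size=1, hidden_size=4)
h, c = [0.0] * 4, [0.0] * 4
for price in [0.10, 0.30, 0.20]:  # toy normalized prices, fed one step at a time
    h, c = lstm_cell([price], h, c, params)
```

The loop makes the sequential nature of the LSTM explicit: each step needs the hidden and cell states produced by the previous one.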

2.3. Spacetimeformer Model

Time series forecasting plays an important role in many fields, including weather forecasting, traffic prediction, and finance. LSTM previously performed very well in NLP tasks. However, an LSTM consumes its input one vector at a time, so a sequence must be processed step by step. Moreover, because of its recurrent structure, LSTM struggles to capture long-range dependencies in the sequence. In contrast, the Transformer takes the whole sequence as a matrix and, because attention does not rely on recurrence, can process every Token in the sequence in parallel. For these reasons, the Transformer has recently been used more widely in time series forecasting tasks. A Transformer consists of a stack of encoder and decoder layers whose input is a matrix. The encoder uses the attention mechanism to model the correlations between Tokens, while the decoder uses the information obtained from the encoder to produce task-specific predictions.
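To make the encoder's use of attention concrete, here is a minimal sketch of the standard scaled dot-product attention of Vaswani et al. [25], written in plain Python. It is an illustration only: it omits the learned query, key, and value projections and multiple heads, and it is not the efficient attention variant used by Informer or Spacetimeformer.

```python
import math

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, computed for all query Tokens at once (no recurrence)."""
    d_k = len(K[0])
    scores = [[sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
              for q_row in Q]
    weights = [softmax(row) for row in scores]
    outputs = [[sum(w * v_row[j] for w, v_row in zip(w_row, V)) for j in range(len(V[0]))]
               for w_row in weights]
    return outputs, weights

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy Tokens of dimension 2
out, attn = scaled_dot_product_attention(tokens, tokens, tokens)
```

Every row of `attn` is a probability distribution over all Tokens, so each Token can draw on every other Token in a single step rather than through a chain of recurrent updates.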

Time series forecasting is typically based on a sequence-to-sequence approach, in which past variable values over a window of $k$ steps are used to predict future target values for $h$ steps. We assume that $x_t$ represents the timestamp value at time $t$ (e.g., year, month, date), and that $y_t \in \mathbb{R}^N$, where $N$ is the number of variables, represents the response vector at time $t$. Given the timestamps $(x_{t-k+1}, \dots, x_t)$ and response sequences $(y_{t-k+1}, \dots, y_t)$, the model outputs the response sequences $(\hat{y}_{t+1}, \dots, \hat{y}_{t+h})$ for the future timestamps $(x_{t+1}, \dots, x_{t+h})$. The Informer, proposed by Zhou et al. [33], is an encoder–decoder Transformer architecture for time series forecasting. It embeds the time series into a high-dimensional space and uses zeros as placeholders for the unknown target sequence $(y_{t+1}, \dots, y_{t+h})$ so that it can be embedded into the same dimension. The model adds the embeddings of the timestamps ($x$) and response vectors ($y$) to create an input sequence $Z$ consisting of $k+h$ Tokens. Zhou et al. [33] demonstrated this architecture on long sequence forecasting. However, because each Token contains all $N$ variables of a single time step, attention can only relate time steps to one another; the model learns temporal features while ignoring the spatial correlation between response variables.
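The temporal-only input layout described above can be sketched as follows. This is a simplified illustration with a hypothetical function name: instead of summing learned value, position, and timestamp embeddings as the Informer does, it simply concatenates raw timestamp features with the $N$ response values, so that the Token count and the zero placeholders are easy to see.

```python
def build_temporal_tokens(context_times, context_values, target_times):
    """Informer-style layout (simplified): one Token per time step, k + h Tokens in total.

    context_times:  k timestamp feature vectors (e.g. [month, minute-of-day])
    context_values: k response vectors, each holding N variables
    target_times:   h timestamp feature vectors for the steps to be forecast
    Unknown future responses are filled with zeros as placeholders.
    """
    n_vars = len(context_values[0])
    tokens = [list(x) + list(y) for x, y in zip(context_times, context_values)]
    tokens += [list(x) + [0.0] * n_vars for x in target_times]
    return tokens

# Toy example: k = 3 past steps, h = 2 future steps, N = 6 stocks, 2 timestamp features each.
ctx_t = [[11, 541], [11, 551], [11, 561]]
ctx_y = [[1.0 * j for j in range(6)] for _ in range(3)]
tgt_t = [[11, 571], [11, 581]]
Z = build_temporal_tokens(ctx_t, ctx_y, tgt_t)
```

All six stock prices of a time step share one Token here, which is exactly why attention in this layout compares time steps but never individual stocks.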

Many earlier state-of-the-art models for multivariate time series forecasting relied on attention between time steps. Such models can capture temporal correlations but cannot capture the different spatial relationships between variables. To address this issue, Grigsby et al. [38] proposed the Spacetimeformer model based on the Informer encoder–decoder architecture. The model flattens each multivariate vector $y_t$ along the time dimension into $N$ separate timestamped Tokens, one per variable, transforming the input data into a spatiotemporal sequence (shown in Figure 3). The new embedded sequence $Z'$ therefore has length $N(k+h)$. Consequently, when $Z'$ passes through the attention layer, there is a direct path between every pair of Tokens, allowing the model to capture both temporal and spatial information. This enables the Spacetimeformer model to capture correlations between different variables at different time steps.
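The flattening step can be illustrated with the same kind of toy data. In this minimal sketch (hypothetical function name; all embeddings omitted), every time step contributes $N$ Tokens, each carrying the timestamp features, a variable index, and a single value, so attention over the result can link any stock at any time to any other stock at any other time.

```python
def flatten_spatiotemporal(times, values):
    """Spacetimeformer-style layout (simplified): one Token per (time step, variable) pair.

    times:  L timestamp feature vectors (L = k + h)
    values: L response vectors of N variables (zeros for unknown targets)
    Returns N * L Tokens of the form (timestamp features, variable index, value).
    """
    tokens = []
    for x_t, y_t in zip(times, values):
        for var_idx, value in enumerate(y_t):
            tokens.append((tuple(x_t), var_idx, value))
    return tokens

times = [[11, 541], [11, 551], [11, 561]]
values = [[590.0, 45.0], [591.0, 44.5], [0.0, 0.0]]  # L = 3 steps, N = 2 stocks; last step is a target
flat = flatten_spatiotemporal(times, values)
```

With the settings of Section 2.4 (55 input points and 6 target steps) and $N = 6$ stocks, the flattened sequence would contain 6 × 61 = 366 Tokens instead of 61, so the cost of full attention grows quickly with the number of variables.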

Because the attention mechanism processes all Tokens of the input sequence simultaneously, it cannot by itself recover their order, so position information must be added through position embedding. Timestamps are an important feature in time series forecasting; thus, a learned time vector embedding called Time2Vec [40] is added to capture both periodic and aperiodic patterns in time. We combine the flattened Time2Vec output with the stock price and project the result through a feed-forward layer; this is called the value and time embedding. It is the standard input sequence of a time series forecasting model and ensures that each Token contains both time and stock price information. Furthermore, the model must distinguish between different stocks at different times, so a variable embedding is added. The variable embedding flattens the variable indices along the time dimension and projects each flattened index to the same dimension. Finally, the value and time embedding and the variable embedding are combined to create the encoder's input sequence (shown in Figure 3b), so that each Token carries information about time, stock, and price. The attention mechanism is then used to interpret the temporal and spatial information embedded in the sequence.
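Time2Vec [40] maps a scalar time $\tau$ to a vector whose first component is linear (aperiodic) and whose remaining components are periodic: $t2v(\tau)[0] = \omega_0\tau + \varphi_0$ and $t2v(\tau)[i] = \sin(\omega_i\tau + \varphi_i)$ for $i \ge 1$, with learned frequencies $\omega_i$ and phases $\varphi_i$. The sketch below evaluates this mapping with fixed, hand-picked parameters; in the model they are learned, and encoding the time of day as a fraction of the trading session is our own illustrative assumption.

```python
import math

def time2vec(tau, omegas, phis):
    """Time2Vec with sine as the periodic activation.

    Component 0 is linear in tau (captures trends); components 1.. are
    sin(omega * tau + phi) (capture periodic patterns such as intraday seasonality).
    """
    linear = omegas[0] * tau + phis[0]
    periodic = [math.sin(w * tau + p) for w, p in zip(omegas[1:], phis[1:])]
    return [linear] + periodic

# Illustrative parameters (learned in practice): one linear term and two periodic terms.
omegas = [0.5, 2 * math.pi, 4 * math.pi]
phis = [0.0, 0.0, math.pi / 2]
# Assumed encoding: tau = (minutes since 9:01) / 260, so 9:01 -> 0.0 and 13:21 -> 1.0.
emb_open = time2vec(0.0, omegas, phis)
emb_close = time2vec(1.0, omegas, phis)
```

With these frequencies the periodic components repeat once per session, so 9:01 and 13:21 receive the same periodic features and differ only in the linear component.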

2.4. Experimental Design

To prevent cross-day forecasting from affecting model performance, we use daily moving windows, as shown in Figure 4, to define the training and testing sets. For each trading day from 3 June 2022 to 4 November 2022, we take the time points 9:01, 9:11, …, and 12:21 as starting points. The fifty-five time points up to and including each starting point form the input sequence, and the model predicts the stock price (target sequence) every ten minutes over the next hour, for a total of six steps. For example, with 9:01 as the starting point, the model predicts the stock prices at the next six steps: 9:11, 9:21, …, and 10:01. For the last starting point, 12:21, the model predicts the stock prices at 12:31, 12:41, …, and 13:21. This process, shown in Figure 5, is repeated until all the training data have been fed into the model, yielding a total of 2205 sequences. We use the ten trading days from 7 November 2022 to 18 November 2022 as the testing data.
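The window construction can be expressed in a few lines. This is a minimal sketch under stated assumptions: prices are stored as one flat list with 27 points per trading day, the fifty-five input points end at (and include) the starting point, and therefore the first day that yields windows is the third one, since 55 = 2 × 27 + 1. The function name `daily_windows` is hypothetical.

```python
POINTS_PER_DAY = 27  # cut-off points 9:01, 9:11, ..., 13:21
INPUT_LEN = 55       # past points fed to the model (assumed to include the starting point)
HORIZON = 6          # ten-minute steps predicted, i.e. one hour ahead

def daily_windows(series, first_day=2):
    """Yield (input, target) pairs whose targets never cross into the next trading day.

    series: flat list of prices, POINTS_PER_DAY per trading day, in chronological order
    first_day: index of the first day that produces windows (needs INPUT_LEN points of history)
    """
    n_days = len(series) // POINTS_PER_DAY
    for day in range(first_day, n_days):
        day_start = day * POINTS_PER_DAY
        # Starting points 9:01..12:21 are intraday positions 0..20, so the last target is 13:21.
        for pos in range(POINTS_PER_DAY - HORIZON):
            start = day_start + pos
            if start + 1 < INPUT_LEN:
                continue  # not enough history for a full input sequence
            inputs = series[start + 1 - INPUT_LEN : start + 1]
            targets = series[start + 1 : start + 1 + HORIZON]
            yield inputs, targets

# Toy series of 4 trading days whose "prices" are just their positions.
toy = [float(i) for i in range(4 * POINTS_PER_DAY)]
windows = list(daily_windows(toy))
```

Each day contributes 21 windows (starting points 9:01 through 12:21), so 2205 training sequences correspond to 2205 / 21 = 105 trading days of windows.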

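Finally, we compare the predictions of the Spacetimeformer model, which considers the interaction of time and space, with those of the Transformer model, which considers only temporal correlations. We also examine whether the performance of these two models differs from that of the earlier LSTM model. We use the mean absolute percentage error (MAPE) and the root mean square error (RMSE) to evaluate the performance of the three models. The formulae of MAPE and RMSE are

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

and

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2},$$

respectively, where $n$ is the number of predictions, and $y_i$ and $\hat{y}_i$ are the true and predicted stock prices.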
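Both metrics are simple to compute for the predictions at a given step. The following sketch (plain Python, hypothetical function names) returns MAPE in percent and RMSE in price units; because the results are reported by prediction step, one would call these functions on the step-i predictions of each stock.

```python
import math

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent; assumes no true value is zero."""
    n = len(y_true)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n

def rmse(y_true, y_pred):
    """Root mean square error, in the same units as the prices."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

true_prices = [100.0, 200.0]
predicted = [110.0, 190.0]
print(mape(true_prices, predicted), rmse(true_prices, predicted))  # roughly 7.5 and 10.0
```

The example shows why both metrics are useful: the two errors have the same absolute size (10), so RMSE treats them equally, whereas MAPE weights the error on the cheaper stock twice as heavily.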

3. Results

First, we plot the stock price predictions in Figures 6–11 (for TSMC, UMC, DELTA, EMC, FCFC, and YFH, respectively) to show the performance of the different models. In all figures, the red line represents the true value, the blue line the Spacetimeformer model, the orange line the Transformer model, and the green line the LSTM model. “Step i” (i = 1, 2, …, 6) denotes the i-th step prediction of each model. We also use MAPE (shown in Tables 3–8) and RMSE (shown in Tables 9–14) to evaluate the error between the stock prices predicted by each model and the true stock prices. Together, the trend charts, MAPE, and RMSE allow a more comprehensive comparison of the models' stock price prediction performance.

We first discuss the stock price forecasts for TSMC. Figure 6 shows that the Spacetimeformer model provides the predictions closest to the true stock prices. The Transformer model performs reasonably well before 16 November but becomes noticeably distorted thereafter, whereas the LSTM model shows the opposite pattern, performing poorly before 16 November and improving afterwards. Furthermore, the MAPE and RMSE values in Table 3 and Table 9, respectively, show that the Spacetimeformer model clearly outperforms the other two.

For UMC, Figure 7 shows that the Spacetimeformer model remains robust, with forecasts very close to the actual values. However, the Transformer model performs worse than the traditional LSTM model. The MAPE and RMSE values in Table 4 and Table 10, respectively, confirm that the Spacetimeformer model outperforms the other two methods in UMC stock price prediction, and that some predictions of the Transformer model are indeed worse than those of the LSTM model.

Next, we discuss the three models' predictions of DELTA's stock prices. Figure 8 shows that the Spacetimeformer model again exhibits the best predictive performance. The Transformer model ranks second, but with a noticeably larger deviation than in its predictions for TSMC and UMC. The LSTM model performs poorly, with substantial bias in its stock price predictions. As shown in Table 5 and Table 11, the Spacetimeformer forecasts are indeed better than those of the other two models, and the LSTM predictions are the weakest.

Regarding EMC, Figure 9 shows that the Spacetimeformer forecasts remain stable. The LSTM model performs better here than for any of the previously discussed stocks, whereas the Transformer model performs poorly, giving its worst results so far. Table 6 and Table 12 show that the Spacetimeformer and LSTM models perform almost equally well. Notably, under RMSE, LSTM is slightly better than Spacetimeformer at the fourth prediction step. The Transformer predictions, however, are clearly inferior to those of the other two models.

For FCFC, Figure 10 shows that the Spacetimeformer model's predictions are again better than those of the other two models. The Transformer and LSTM models fall short of expectations, with visible gaps between their predictions and the true stock prices. Table 7 and Table 13 confirm that the Spacetimeformer predictions are clearly better than those of the other two, while the Transformer and LSTM models perform poorly.

Finally, we discuss the stock price forecasts for YFH. Figure 11 shows that the Spacetimeformer forecasts are again excellent. The Transformer model performs slightly better than the LSTM model, but both often underestimate the stock price. Table 8 and Table 14 show that the Spacetimeformer predictions are again better than those of the other two, and that the Transformer and LSTM models leave considerable room for improvement.

According to these results, the Spacetimeformer model predicts the stock price ten minutes, twenty minutes, and even sixty minutes ahead with the smallest error. It outperforms the other two models at nearly every prediction step, the one exception noted above being the fourth-step RMSE for EMC, where LSTM is slightly better. Overall, the Transformer model comes next and the LSTM model has the highest error, although this ordering is reversed for UMC and EMC. Most of the Spacetimeformer predictions lie close to the true values, and the remaining deviations are small enough to be plausibly attributed to natural market fluctuations. Although the Transformer model generally outperforms the LSTM model, its predictions at some time points are markedly biased, indicating that its forecasts are less smooth; this again supports the view that the Spacetimeformer model, with its spatiotemporal design, performs better in stock price prediction. The Spacetimeformer model consistently captures important trend changes and provides relatively stable forecasts. In contrast, both the Transformer and LSTM models are very unstable across the stocks, which suggests that the Transformer-based Spacetimeformer model combined with the daily moving window method may perform better in longer-horizon forecasting.

4. Discussion

Investment and financial management has become an important topic in modern society, and stock trading is an investment activity that attracts wide interest. Within stock trading, quantitative trading is a field worthy of research: large amounts of historical data and market information can be used to predict stock price trends and obtain better trading opportunities. In recent years, with the development of artificial intelligence and deep learning, LSTM and Transformer models have shown good performance in stock price prediction. However, the traditional LSTM and recent Transformer models focus mainly on temporal correlations and ignore the spatial relationships between stocks. Stocks often have complex and dynamic relationships, as among AI concept stocks or electric vehicle concept stocks. Although it is important to consider the mutual influence between stocks, LSTM and Transformer models focus mainly on the time series characteristics of individual stocks, and the interaction between multiple stocks remains relatively unexplored. Therefore, this article uses the Spacetimeformer model, which has a spatiotemporal mechanism, to predict stock prices. The model is trained through the interaction mechanism of space and time to capture the relationships between different stocks at different times.

This study uses TSMC, UMC, DELTA, EMC, FCFC, and YFH, constituent stocks of the Taiwan 50 Index, as research targets, with stock prices every ten minutes from 1 June 2022 to 18 November 2022 as research data. To avoid the influence of cross-day forecasts, we use daily moving windows to define the training and testing sets. Through multi-time-step forecasting, we predict the stock price every ten minutes over the next hour. We compare the Spacetimeformer model with the Transformer and LSTM models, which consider only temporal relationships, and evaluate the three models using MAPE and RMSE.

The results show that the Spacetimeformer model, with its spatiotemporal concept, performs better in stock price prediction than the Transformer and LSTM models. It consistently captures important trend changes and provides relatively stable forecasts, and its predictions for the next ten minutes, twenty minutes, and even one hour are very close to the true values, highlighting its strong performance in both short- and longer-horizon prediction. In contrast, the predictions of the Transformer and LSTM models vary across time points, indicating less stable performance. The daily moving window design avoids cross-day forecasting and the problems of using long-term models for prediction, which allows a more accurate assessment of model performance while retaining the adaptability of deep learning. In summary, the Spacetimeformer model combined with daily moving windows outperforms the Transformer and LSTM models in stock price prediction, indicating the value of spatiotemporal concepts for predictive modeling.

We therefore suggest that those who wish to predict the prices of stocks or other financial instruments consider the Spacetimeformer model, with its spatiotemporal interaction mechanism, to obtain better results. Building on these results, we plan to verify whether the forecasting performance is equally strong in the stock markets of other countries. We also hope to apply the model to other financial instruments, such as foreign exchange, futures contracts, and cryptocurrencies, to provide more diverse investment forecasts and insights.