Abstract

The paper makes a case that the current discussions on replicability and the abuse of significance testing have overlooked a more general contributor to the untrustworthiness of published empirical evidence, which is the uninformed and recipe-like implementation of statistical modeling and inference. It is argued that this contributes to the untrustworthiness problem in several different ways, including [a] statistical misspecification, [b] unwarranted evidential interpretations of frequentist inference results, and [c] questionable modeling strategies that rely on curve-fitting. What is more, the alternative proposals to replace or modify frequentist testing, including [i] replacing p-values with observed confidence intervals and effect sizes, and [ii] redefining statistical significance, will not address the untrustworthiness of evidence problem since they are equally vulnerable to [a]–[c]. The paper calls for distinguishing between unduly data-dependent ‘statistical results’, such as a point estimate, a p-value, and accept/reject results, and ‘evidence for or against inferential claims’. The post-data severity (SEV) evaluation of the accept/reject results converts them into evidence for or against germane inferential claims. These claims can be used to address/elucidate several foundational issues, including (i) statistical vs. substantive significance, (ii) the large n problem, and (iii) the replicability of evidence. Also, the SEV perspective sheds light on the impertinence of the proposed alternatives [i]–[iii], and oppugns [iii] the alleged arbitrariness of framing H0 and H1, which is often exploited to undermine the credibility of frequentist testing.

1. Introduction

The replication crisis has dominated discussions on empirical evidence and its trustworthiness in scientific journals for the last two decades. The broad agreement is that the non-replicability of such empirical evidence provides prima facie evidence of its untrustworthiness; see National Academy of Sciences [1], Wasserstein and Lazar [2], Baker [3], Hoffler [4]. A statistical study is said to be replicable if its empirical results can be independently confirmed, with very similar or consistent results, by other researchers using akin data and modeling the same phenomenon of interest.

Using the Medical Diagnostic Screening (MDS) perspective on Neyman-Pearson (N-P) testing, Ioannidis [5] declares “… most published research findings are false”, attributing the untrustworthiness of evidence to several abuses of frequentist testing, such as p-hacking, multiple testing, cherry-picking and low power. This diagnosis is anchored on apparent analogies between the type I/II error probabilities and the false positive/negative probabilities of the MDS model, tracing the untrustworthiness to ignoring the Bonferroni-type adjustments needed to ensure that the actual error probabilities approximate the nominal ones. In light of that, leading statisticians in different applied fields called for reforms which include replacing p-values with observed Confidence Intervals (CIs), using effect sizes, and redefining statistical significance; see Benjamin et al. [6].

In the discussion that follows, a case is made that Ioannidis’ assessment about the untrustworthiness of published empirical findings is largely right. Still, the veracity of viewing the MDS model as a surrogate for N-P testing, and its pertinence in diagnosing the untrustworthiness of evidence problem, are highly questionable. This stems from the fact that the invoked analogies between the type I/II error probabilities and the false positive/negative probabilities of the MDS model are more apparent than real, since the former are hypothetical/unobservable/unconditional and the latter are observable conditional probabilities; see Spanos [7].

A more persuasive case can be made that the untrustworthiness of empirical evidence stems from the broader problem of the uninformed and recipe-like implementation of statistical modeling and inference that contributes to untrustworthy evidence in several interrelated ways, including:

[a] Statistical misspecification: invalid probabilistic assumptions imposed (implicitly or explicitly) on one’s data, comprising the invoked statistical model .

[b] ‘Empirical evidence’ is often conflated with raw ‘inference results’, such as point estimates, effect sizes, observed CIs, accept/reject results, and p-values, giving rise to (i) erroneous evidential interpretations of these results, and (ii) unwarranted claims relating to their replicability.

[c] Questionable modeling strategies that rely on curve-fitting of hybrid models (an amalgam of substantive subject matter and probabilistic assumptions) guided by error term assumptions and evaluated on goodness-of-fit/prediction grounds. The key weakness of this strategy is that excellent goodness-of-fit/prediction is neither necessary nor sufficient for the statistical adequacy of the selected model since it depends crucially on the invoked loss function whose choice is based on information other than the data. It can be shown that statistical models chosen on goodness-of-fit/prediction grounds are often statistically misspecified; see Spanos [8].

Viewed in the broader context of [a]–[c], the abuses of frequentist testing represent the tip of the untrustworthy evidence iceberg. This broader context also calls into question the presumption that replicability attests to the trustworthiness of empirical evidence. As argued by Leek and Peng [9]: “… an analysis can be fully reproducible and still be wrong.” (p. 1314). For instance, dozens of MBA students confirm the efficient market hypothesis (EMH) on a daily basis because they follow the same uninformed and recipe-like implementation of statistics, unmindful of what it takes to ensure the trustworthiness of the ensuing evidence by addressing the issues [a]–[c]; see Spanos [10].

The primary focus of the discussion that follows is on [b], with brief comments on [a] and [c] supported by citations to relevant published papers. The discussion revolves around the distinction between unduly data-specific ‘inference results’, such as point estimates, observed CIs, p-values, effect sizes, and the accept/reject results, and ensuing inductive generalizations from such results in the form of ‘evidence for or against germane inferential claims’ framed in terms of the unknown parameters. The crucial difference between ‘results’ and ‘evidence’ is twofold:

(a) the evidence is framed in terms of post-data error probabilities aiming to account for the uncertainty arising from the fact that ‘inference results’ rely unduly on the particular data which constitutes a single realization of the sample , and

(b) the evidence, in the form of warranted inferential claims, enhances learning from data about the stochastic mechanism that could have given rise to this data.

As a prelude to the discussion that follows, Section 2 provides a brief overview of Fisher’s model-based frequentist statistics with special emphasis on key concepts and pertinent interpretations of inference procedures that are invariably misconstrued by the uninformed and recipe-like implementation of statistical modeling and inference. Section 3 discusses a way to bridge the gap between unduly data-specific inference results and an evidential interpretation of such results, in the form of the post-data severity (SEV) evaluation of the accept/reject results. The SEV evaluation is used to elucidate or/and address several foundational issues that have bedeviled frequentist testing since the 1930s, including the large n problem, statistical vs. substantive significance and the replicability of evidence, as opposed to the replicability of statistical results. In Section 4 the SEV evaluation is used to appraise several proposed alternatives to (or modifications of) N-P testing by the replication literature, including replacing the p-value with effect sizes and observed CIs and redefining statistical significance. Section 5 compares and contrasts the evidential account based on the SEV evaluation with Royall’s [11] Likelihood Ratio approach to statistical evidence.

2. Model-Based Frequentist Inference

2.1. Fisher’s Statistical Induction

Fisher [12] pioneered modern frequentist statistics by viewing data as a typical realization of a prespecified parametric statistical model whose generic form is:

where and denote the parameter and sample space, respectively, refers to the (joint) distribution of the sample . The initial choice (specification) of should be a response to the question: “Of what population is this a random sample?” (Fisher, [12], p. 313), underscoring that: ‘the adequacy of our choice may be tested a posteriori’ (ibid., p. 314). This can be secured by establishing the statistical adequacy (approximate validity) of using thorough Mis-Specification (M-S) testing; see Spanos [13].

Selecting for data has a twofold objective (Spanos [14]):

(i) is selected to account for the chance regularity patterns exhibited by data using appropriate probabilistic assumptions relating to ∈ from three broad categories: Distribution (D), Dependence (M) and Heterogeneity (H).

(ii) is parametrized [∈] in a way that can shed light on the substantive questions of interest using data . When such questions are framed in terms of a substantive model, say ∈, one needs to bring out the implicit statistical model without restricting its parameters and ensure that and are related via a set of restrictions connecting to the data via .

Example 1. Consider the well-known simple Normal model:

where ‘NIID’ stands for ‘Normal (D), Independent (M) and Identically Distributed (H)’. It is important to emphasize that revolves around in (1) since it encapsulates all its probabilistic assumptions:

and provides the cornerstone for all forms of statistical inference.

The primary objective of model-based frequentist inference is to ‘learn from data ’ about where denotes the ‘true’ in . This is shorthand for saying that there exists a ∈ such that ∈ could have generated .

The main variants of statistical inference in frequentist statistics are: (i) point estimation, (ii) interval estimation, (iii) hypothesis testing, and (iv) prediction. These forms of statistical inference share the following features:

(a) They assume that the prespecified statistical model is valid vis-à-vis data

(b) Their aim is to learn about using statistical approximations relating to

(c) Their inferences are based on a statistic (estimator, test statistic, predictor), say , whose sampling distribution, , (∀ stands for ‘for all’) is derived directly from the distribution of the sample of using two different forms of reasoning with prespecified values of :

(a) factual (estimation and prediction): presume that , and

(b) hypothetical (testing): : (what if vs. : (what if . The crucial difference between these two forms of reasoning is that factual reasoning does not extend to post-data (after is known) evaluations relating to evidence, but hypothetical reasoning does. This plays a key role in the following discussion.

The primary role of the sampling distribution of a statistic , is to frame the uncertainty relating to the fact that is just one, out of all realizations, of so as to provide (i) the basis for the optimality of the statistic as well as (ii) the relevant error probabilities to ‘calibrate’ the capacity (optimality) of inference based on ; how often the inference procedure errs.

The statistical adequacy (approximate validity) of plays a pivotal role in securing the reliability of inference and the trustworthiness of ensuing evidence because it ensures that the nominal optimality—derived by assuming the validity of —is also actual for data and secures the approximate equality between the actual (based on and the nominal error probabilities. In contrast, when is statistically misspecified:

(a) the joint distribution of the sample and the likelihood function are both erroneous,

(b) all sampling distributions derived by invoking the validity of will be incorrect, (i) giving rise to ‘non-optimal’ estimators, and (ii) sizeable discrepancies between the actual and nominal error probabilities.

Applying a significance level test when the actual type I error probability is 0.97 due to invalid probabilistic assumptions will yield untrustworthy evidence. Increasing the sample size will often worsen the untrustworthiness by increasing the discrepancy between actual and nominal error probabilities; see Spanos [15], p. 691. Hence, the best way to keep track of the relevant error probabilities is to establish the statistical adequacy of . It is important to emphasize that other forms of statistical inference, including Bayesian and Akaike-type model selection procedures, are equally vulnerable to statistical misspecification since they rely on the likelihood function ; see Spanos [16].
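The gap between nominal and actual error probabilities under misspecification can be illustrated with a small Monte Carlo sketch. The setup is an assumption made for illustration and is not taken from the paper: the data follow a stationary AR(1) process with coefficient `rho`, while the test is a two-sided t-type test that presumes independence, with the Normal critical value 1.96 standing in for the Student's t one. The function names, the seed and the choice `rho = 0.8` are all hypothetical.

```python
import math
import random
from statistics import mean, stdev


def t_statistic(x, mu0=0.0):
    """Student's t-type statistic sqrt(n) * (xbar - mu0) / s."""
    n = len(x)
    return math.sqrt(n) * (mean(x) - mu0) / stdev(x)


def ar1_sample(rng, n, rho):
    """Stationary zero-mean AR(1) series; rho = 0 gives IID N(0, 1) data."""
    x = [rng.gauss(0.0, 1.0 / math.sqrt(1.0 - rho ** 2))]
    for _ in range(n - 1):
        x.append(rho * x[-1] + rng.gauss(0.0, 1.0))
    return x


def actual_type_one_error(rho, n=100, reps=2000, crit=1.96, seed=12345):
    """Share of two-sided rejections when the null (mean = 0) is true."""
    rng = random.Random(seed)
    rejections = sum(
        abs(t_statistic(ar1_sample(rng, n, rho))) > crit for _ in range(reps)
    )
    return rejections / reps


rate_iid = actual_type_one_error(rho=0.0)  # independence assumption valid
rate_dep = actual_type_one_error(rho=0.8)  # independence assumption invalid
print(f"nominal 0.05 | IID: {rate_iid:.3f} | AR(1), rho = 0.8: {rate_dep:.3f}")
```

Under these assumptions the rejection frequency stays close to the nominal level for IID data and rises far above it once the independence assumption fails, even though the null hypothesis is true in both runs.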

In the discussion that follows, it is assumed that the invoked statistical model is statistically adequate to avoid repetitions and digressions, but see Spanos [17] and [18] on why [a] statistical misspecification calls into question important aspects of the current replication crisis literature.

2.2. Frequentist Inference: Estimation

Point estimation revolves around an estimator, say that pinpoints (as closely as possible) . The clause ‘as closely as possible’ is framed in terms of certain ‘optimal’ properties stemming from the sampling distribution including: unbiasedness, efficiency, sufficiency, consistency, etc.; see Casella and Berger [19]. Regrettably, the factual reasoning, presuming underlying the derivation of the relevant sampling distributions is often implicit in traditional textbook discussions, resulting in erroneous interpretations and unwarranted claims.

Example 1 (continued). The relevant sampling distributions associated with (2), are appositely stated as:

where denote the ‘true’ values of the unknown parameters, denotes the chi-square distribution, and the Student’s t distribution, with degrees of freedom. The problem is that without ‘’ the distributional results in (4) will not hold. For instance, what ensures in [i] that and ? The answer is the unbiasedness and full efficiency of , respectively, both of which are defined at . There is no such thing as a sampling distribution since the NIID assumptions imply that each element of the sample comes from a single Normal distribution with a unique mean and variance around which all forms of statistical inference revolve. Hence, the claim by Schweder and Hjort [20] that “has a fixed distribution regardless of the values of the interest parameter and the (in this context) nuisance parameter ” (p. 15), i.e.,

makes no sense from a statistical inference perspective.

Point estimation is very important since it provides the basis for all other forms of optimal inference (CIs, testing, and prediction) via the optimality of a point estimator. A point estimate , by itself, however, is considered inadequate for learning from data since it is unduly data-specific; it ignores the relevant uncertainty stemming from the fact that constitutes a single realization (out of as framed by the sampling distribution, of hence is often reported as .

Interval estimation accounts for the relevant uncertainty in terms of an error probability of ‘overlaying’ the true value of , based on , in the form of the Confidence Interval (CI):

where the statistics and denote the lower and upper (random) bounds that ‘overlay’ with probability An CI is optimal when its expected length is the shortest and referred to as Uniformly Most Accurate (UMA); see Lehmann and Romano [21].

2.3. Frequentist Inference: Neyman-Pearson (N-P) Testing

The reasoning underlying hypothesis testing is hypothetical, based on prespecified values of as they relate to : and : .

Example 1 (continued). Consider testing the hypotheses of interest:

in the context of (2). An optimal N-P test for the hypotheses in (9) is defined in terms of a test statistic and the rejection region:

whose error probabilities are evaluated using:

where is the Student’s t distribution with degrees of freedom, and:

where St is a noncentral Student’s t distribution with It is important to emphasize that (12) differs from (11) in terms of their mean, variance, and higher moments, rendering (12) non-symmetric for ; see Owen [22].

The sampling distribution in (11) is used to evaluate the pre-data type I error probability and the post-data [ is known] p-value:

The sampling distribution in (12) is used to evaluate the power of :

as well as the type II error probability:

The test in (10) is optimal in the sense of being Uniformly Most Powerful (UMP), i.e., is the most effective -level test for detecting any discrepancy () of interest from ; see Lehmann and Romano [21].

Why prespecify at a low threshold, such as ? Neyman and Pearson [23] put forward two crucial stipulations relating to the framing of : and : to ensure the effectiveness of N-P testing and the informativeness of its results:

[1] and should form a partition of (p. 293) to avoid

[2] and should be framed in such a way so as to ensure that the type I error is the more serious of the two.

To provide some intuition for [2], they use the analogy with a criminal trial where to ensure [2] one should use the framing, : not guilty vs. : guilty, to render the type I error of sending an innocent person to prison, more serious than acquitting a guilty person (p. 296). Hence, prespecifying at a small value and maximizing the power over requires deliberation about the framing. A moment’s reflection suggests that stipulation [2] implies that high power is needed around the potential neighborhood of . Regrettably, stipulations [1]–[2] are often ignored, undermining the proper implementation and effectiveness of N-P testing; see Section 4.1.

Returning to the power of the test, the noncentrality parameter indicates that the power increases monotonically with and and decreases with . This suggests that the inherent trade-off between the type I and II error probabilities in N-P testing, in conjunction with the sample size n and , plays a crucial role in determining the capacity of an N-P test. This means that the selection of the significance level should always take into account the particular n for data , since an uninformed choice of can give rise to two problems.

The small n problem. This arises when the sample size n is not large enough to generate any learning from data about since it has insufficient power to detect particular discrepancies of interest. To avoid underpowered tests the formula in (14) can be used pre-data (before is known) to evaluate the sample size n necessary for to detect such discrepancies with high enough probability (power). That is, for a given , there is always a small enough n that would accept despite the presence of a sizeable discrepancy of interest. This also undermines the M-S testing to evaluate the statistical adequacy of the invoked since a small n will ensure that M-S tests do not have sufficient power to detect existing departures from the model assumptions; see Spanos [18].
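A pre-data sample size calculation of this kind can be sketched as follows. This is a simplification and not the formula in (14): it uses a known-variance Normal approximation in place of the noncentral Student's t distribution, and the standardized discrepancy `effect` (the discrepancy divided by the standard deviation), the 0.05 level and the 0.8 power target are illustrative assumptions.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard Normal distribution


def power(n, effect, alpha=0.05):
    """Approximate power of a one-sided alpha-level test at the standardized
    discrepancy `effect` (Normal approximation, variance treated as known)."""
    c_alpha = Z.inv_cdf(1.0 - alpha)
    return 1.0 - Z.cdf(c_alpha - math.sqrt(n) * effect)


def required_n(effect, alpha=0.05, target_power=0.8):
    """Smallest n whose approximate power reaches `target_power`."""
    z_sum = Z.inv_cdf(1.0 - alpha) + Z.inv_cdf(target_power)
    return math.ceil((z_sum / effect) ** 2)


n_needed = required_n(effect=0.2)
print(f"required n: {n_needed}")
print(f"power at n = {n_needed}: {power(n_needed, 0.2):.3f}")
print(f"power at n = 20: {power(20, 0.2):.3f}")
```

With these hypothetical inputs, a sample of 20 observations leaves the test badly underpowered for a discrepancy of 0.2 standard deviations, which is the situation the small n problem describes.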

The large n problem. This arises when a practitioner uses conventional significance levels, say , for very large sample sizes, say 10,000. The source of this problem is that for a given as n increases the power of a test increases and the p-value decreases, giving rise to over-sensitive tests. Fisher [24] explained why: “By increasing the size of the experiment [n], we can render it more sensitive, meaning by this that it will allow of the detection of … quantitatively smaller departures from the null hypothesis.” (pp. 21–22). Hence, for a given there is always a large enough n that would reject for any discrepancy (however small, say ) from a null value ; see Spanos [25].
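The over-sensitivity can be made concrete with a short sketch. It holds a small standardized gap between the estimate and the null value fixed and lets n grow, using the Normal approximation to the Student's t distribution, which is reasonable for large n. The gap value 0.02 and the sample sizes are hypothetical choices and do not come from the paper.

```python
import math
from statistics import NormalDist


def one_sided_p_value(n, standardized_gap):
    """Upper-tail p-value of d = sqrt(n) * standardized_gap under N(0, 1)."""
    d = math.sqrt(n) * standardized_gap
    return NormalDist().cdf(-d)


gap = 0.02  # hypothetical (estimate - null value) / s, held fixed as n grows
sizes = [100, 1_000, 10_000, 100_000]
p_values = [one_sided_p_value(n, gap) for n in sizes]
for n, p in zip(sizes, p_values):
    print(f"n = {n:>7,}: d = {math.sqrt(n) * gap:5.2f}, p-value = {p:.4g}")
```

The same tiny gap is statistically insignificant at n = 100 and significant at conventional levels by n = 10,000, so a fixed threshold means something different at each sample size.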

It is very important to emphasize at the outset that the pre-data testing error probabilities (type I, II, and power) are (Spanos [7]):

(i) hypothetical and unobservable in principle since they revolve around ,

(ii) not conditional on values of since ‘presuming ’ constitute neither events nor random variables, and

(iii) assigned to the test procedure to ‘calibrate’ its generic (for any ) capacity to detect different discrepancies from for a prespecified .

As mentioned above, the cornerstone of N-P testing is the in-built trade-off between the type I and II error probabilities, which Neyman and Pearson [23] addressed by prespecifying at a low value and maximizing , seeking an optimal test; see Lehmann and Romano [21]. The primary role of the testing error probabilities is to operationalize the notions of ‘statistically significant/insignificant’ in terms of statistical approximations relating to and framed in terms of the sampling distribution of a test statistic .

This relates directly to the replication crisis since for a misspecified one cannot keep track of the relevant error probabilities to be able to adjust them for p-hacking, data-dredging, multiple testing and cherry-picking, in light of the fact that the actual error probabilities will be different from the nominal ones; see Spanos and McGuirk [26].

2.4. Statistical Inference ‘Results’ vs. ‘Evidence’ for or against Inferential Claims

Statistical results, such as a point estimate, say an observed CI, say an effect size, a p-value and the accept/reject results, are not replicable in principle, in the sense that akin data do not often yield very similar numbers since they are unduly data-specific when contrasted with broader inferential claims relating to inductive generalizations stemming from such results. In particular, the accept/reject results, (i) are unduly data-specific, (ii) are too coarse to provide informative enough evidence relating to , and (iii) depend crucially on the particular statistical context:

which includes the statistical adequacy of as well as the sample size n.

Example 1 (continued). It is often erroneously presumed that the optimality of the point estimators, , can justify the following inferential claims for the particular data when n is large enough.

(a) The point estimates and based on data ‘approximate closely’ (≃) the true parameter values and i.e.,

Invoking limit theorems, such as strong consistency, will not alleviate the problem since, as argued by Le Cam [27], p. xiv: “… limit theorems ‘as n tends to infinity’ are logically devoid of content about what happens at any particular n.” The inferential claims in (16) are unwarranted since and ignore the relevant uncertainty associated with their representing a single point, from the relevant sampling distributions: see Spanos [7].

(b) The inferential claim associated with an optimal CI for in (7) relates to CI overlaying with probability but its optimality does not justify the claim that the observed CI:

overlays with probability As argued by Neyman [28]: “… valid probability statements about random variables usually cease to be valid if the random variables are replaced by their particular values.” (p. 288). In terms of the underlying factual reasoning, post-data has been revealed but it is unknowable whether is within or outside (17); see Spanos [29]. Indeed, one can make a case that the widely held impression that an effect size ( [30]) provides more reliable information about the ‘scientific effect’ than p-values and observed CIs stems from the unwarranted inferential claim in (16), i.e., an optimal estimator of justifies the inferential claim for n large enough.

(c) The N-P testing ‘accept ’ with a large p-value, and rejecting with a small p-value, do not entail evidence for and , respectively, since such evidential interpretations are fallacious; see Mayo and Spanos [14].

3. Post-Data Severity Evaluation of Testing Results

3.1. Accept/Reject Results vs. Evidence for or against Inferential Claims

Bridging the gap between the binary accept/reject results and learning from data about using statistical approximations framed in terms of the sampling distribution of a test statistic , has been confounding frequentist testing. Mayo and Spanos [31] proposed the post-data severity (SEV) evaluation of the accept/reject results as a way to convert them into evidence for germane inferential claims. The SEV differs from other attempts to address this issue in so far as:

(i) The SEV evaluation constitutes a principled argument framed in terms of a germane inferential claim relating to (learning from data ).

(ii) The SEV evaluation is guided by the sign and magnitude of the observed test statistic, and not by the prespecified significance level ; see Spanos [32].

(iii) The SEV evaluation accounts fully for the relevant statistical context in (15).

(iv) Its germane inferential claim, in the form of the discrepancy from the null value, is warranted with high probability with and when all the different ways it can be false have been adequately probed and forfended (Mayo [33]).

The most crucial way to forfend a false accept/reject result is to ensure that is statistically adequate for data , before any inferences are drawn. This is because the discrepancies induced by invalid probabilistic assumptions will render impossible the task of controlling (keeping track of) the relevant error probabilities in terms of which N-P tests are framed. Hence, for the discussion that follows it is assumed that is statistically adequate for the particular data .

Example 2. Consider the simple Bernoulli (Ber) model:

where . Let the hypotheses of interest be:

in the context of (18). It can be shown that the t-type test:

where is Uniformly Most Powerful (UMP); see Lehmann and Romano [21]. The sampling distribution of evaluated under (hypothetical), is:

For the ‘standardized’ Binomial distribution, can be approximated (≃) by the N(0, 1) distribution. The latter can be used to evaluate the type I error probability and the p-value:

The sampling distribution of evaluated under (hypothetical) is:

whose tail area probabilities can be approximated using:

(24) is used to derive the type II error probability and the power of the test in (20) which increases monotonically with and and decreases with .

The post-data severity (SEV) evaluation transforms the ‘accept/reject results’ into ‘evidence’ for or against germane inferential claims framed in terms of . The post-data severity evaluation is defined as follows:

A hypothesis H (H0 or H1) passes a severe test with data if:

(C-1) accords with H, and

(C-2) with very high probability, test would have produced a result that ‘accords less well’ with H than does, if H were false; see Mayo and Spanos [31,34].

Example 2 (continued). Consider data referring to newborns during 1995 in Cyprus, 5152 boys and 4717 girls (n = 9869). In this case, there is no reason to question the validity of the IID probabilistic assumptions since nature ensures their validity when such data are collected over a sufficiently long period of time in a particular locality. Applying the optimal test in (20) with (large n) yields:

Broadly speaking, this result indicates that the ‘true’ value of lies within the interval which is too coarse to engender any learning about
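The test result for the Cyprus data can be reproduced with a few lines. The counts come from the text, while the null value 0.5, the one-sided direction (more boys than girls) and the Normal approximation to the standardized Binomial are assumptions made for this sketch.

```python
import math
from statistics import NormalDist

boys, girls = 5152, 4717  # Cyprus, 1995 (counts from the text)
n = boys + girls
theta_hat = boys / n
theta_0 = 0.5  # assumed null value

# Standardized distance of the estimate from the null value.
d_x0 = math.sqrt(n) * (theta_hat - theta_0) / math.sqrt(theta_0 * (1 - theta_0))
p_value = NormalDist().cdf(-d_x0)  # upper-tail area under N(0, 1)

print(f"n = {n}, theta_hat = {theta_hat:.5f}")
print(f"d(x0) = {d_x0:.3f}, p-value = {p_value:.2e}")
```

The output is a single rejection at any conventional level, which by itself says only that the parameter exceeds the null value, not by how much.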

The post-data severity outputs a germane evidential claim that revolves around a discrepancy warranted by test and data with high probability. In contrast to pre-data testing error probabilities (type I, II, and power), severity is a post-data error probability that uses additional information in the form of the sign and magnitude of , but shares with the former the underlying hypothetical reasoning: presuming that .

Given that ,

[C-1] accords with and since , the relevant inferential claim takes the form

[C-2] revolves around the event: “outcomes that accord less well with than does”, i.e., event : and its probability:

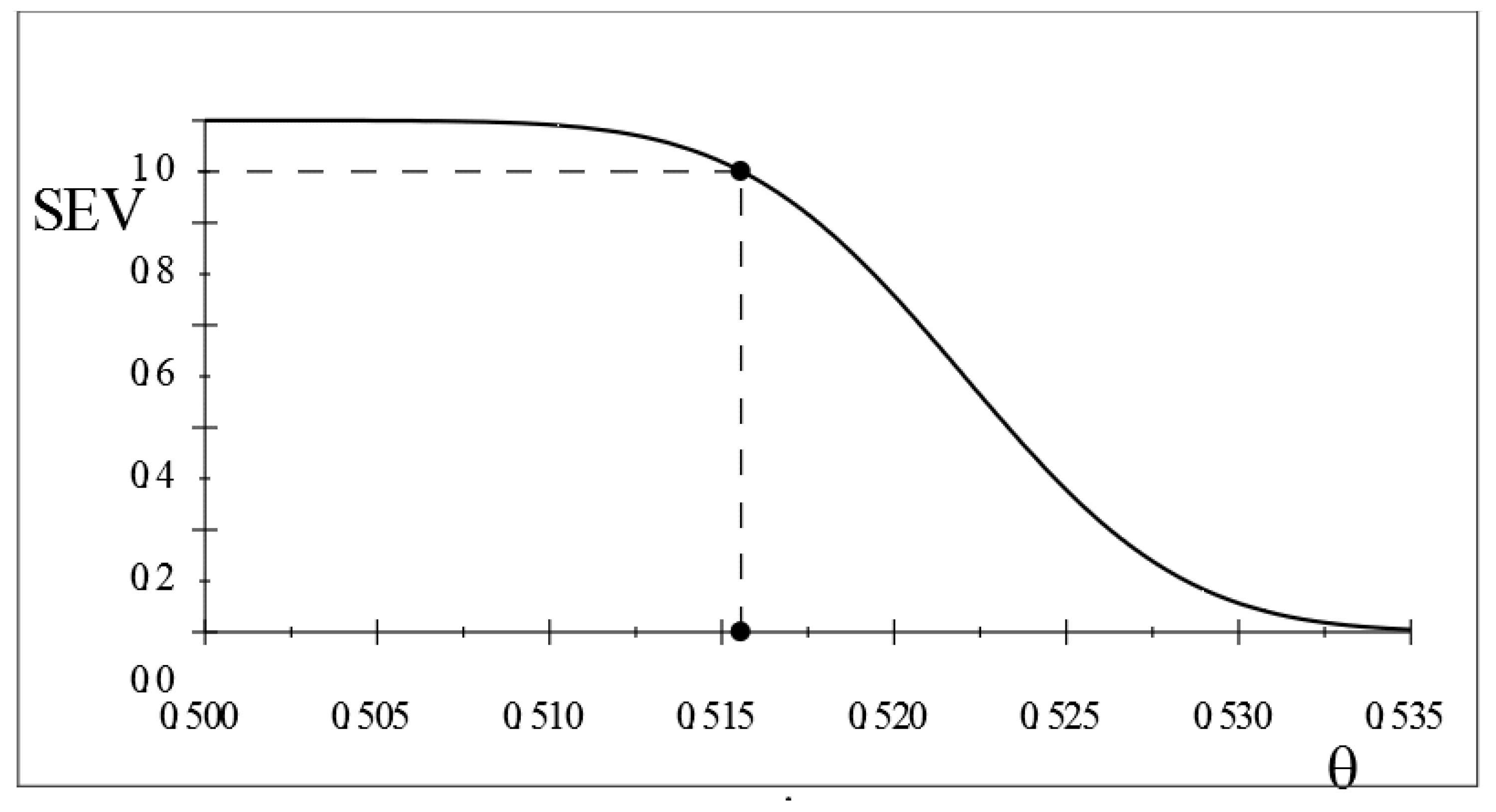

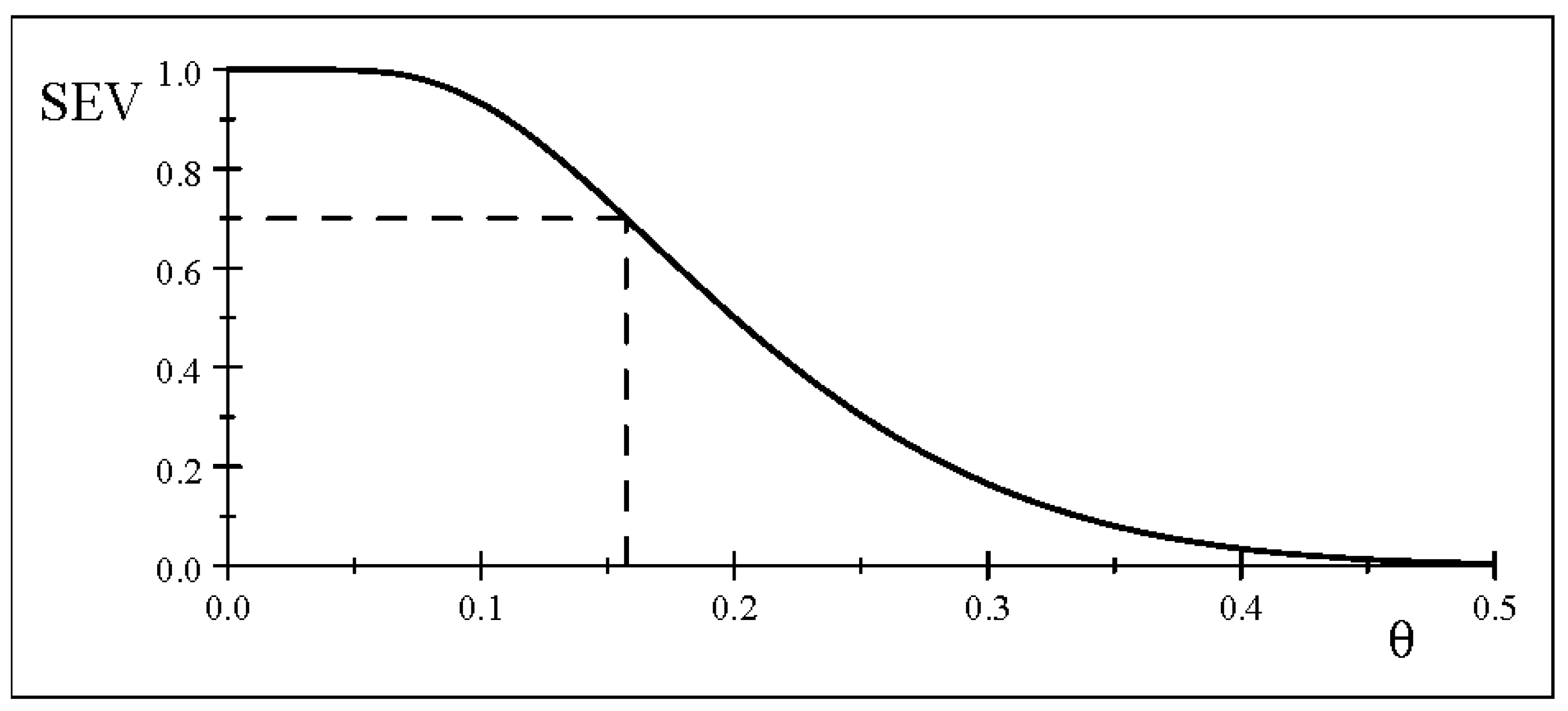

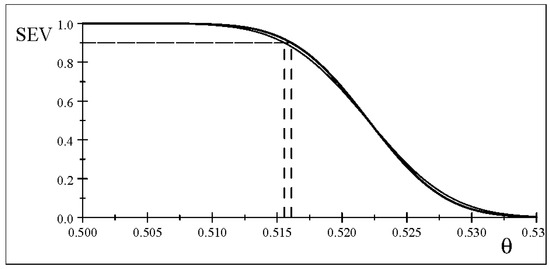

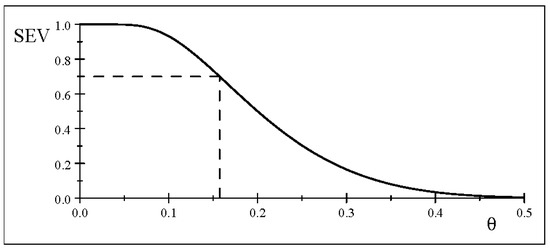

stemming from (23). This severity curve is depicted in Figure 1.

Figure 1. Severity curve for test and data .

In the case of ‘reject ’ the objective is to evaluate the largest such that any less than would very probably, at least 0.9, have resulted in a smaller observed difference, warranted by test and data :

Like all error probabilities, the SEV evaluation is always attached to the procedure itself as it pertains to the inferential claim . The inferential claim warranted with probability , however, can be ‘informally’ interpreted as evidence for a germane neighborhood of , for some , arising from the SEV evaluation narrowing down the coarse associated with the ‘reject ’. This narrowing down of the potential neighborhood of enhances learning from data.

It is also important to emphasize that the SEV evaluation of the inferential claim , with discrepancy based on , gives rise to which implies that there is no evidence for More generally, the SEV evaluation will invariably provide evidence against the inferential claim Hence, the importance of distinguishing between ‘statistical results’, such as , and ‘evidence’ for or against inferential claims relating to .

What is the nature of evidence the post-data severity (SEV) gives rise to? Since the objective of inference is to learn from data about phenomena of interest via learning about , the evidence from the SEV comes in the form of an inferential claim that revolves around the discrepancy warranted by the particular data and test with high enough probability, pinpointing the neighborhood of as closely as possible. In the above case, the warranted discrepancy is or equivalently, , with probability 0.9. Although all probabilities are assigned to the inference procedure itself as it relates to the inferential claim the SEV evaluation can be viewed intuitively as narrowing the coarse reject result entailing down to
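The severity evaluation for the ‘reject’ case can be sketched numerically. The sketch evaluates the probability of a result that accords less well with the claim "the parameter exceeds theta_1" when the parameter equals theta_1, using a Normal approximation with the variance evaluated at theta_1. The null value 0.5, the function names and the bisection search are assumptions of this sketch and are not the paper's own computations.

```python
import math
from statistics import NormalDist

N_OBS, THETA_HAT = 9869, 5152 / 9869  # Cyprus data from the text
THETA_0 = 0.5  # assumed null value


def severity_greater(theta_1, theta_hat=THETA_HAT, n=N_OBS):
    """SEV(theta > theta_1) = P(d(X) <= d(x0); theta = theta_1), Normal approx."""
    z = math.sqrt(n) * (theta_hat - theta_1) / math.sqrt(theta_1 * (1 - theta_1))
    return NormalDist().cdf(z)


def warranted_theta(level=0.9, lo=THETA_0, hi=THETA_HAT, tol=1e-10):
    """Largest theta_1 with SEV(theta > theta_1) >= level, found by bisection."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if severity_greater(mid) >= level:
            lo = mid  # claim still warranted; try a larger discrepancy
        else:
            hi = mid
    return lo


gamma_1 = warranted_theta() - THETA_0
print(f"warranted discrepancy at SEV = 0.9: {gamma_1:.5f}")
print(f"SEV at the point estimate: {severity_greater(THETA_HAT):.2f}")
```

Evaluating the claim at the point estimate itself returns 0.5, which is the numerical counterpart of the remark that the estimate alone carries no evidential warrant.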

The most important attributes of the SEV evaluation are:

[i] It is a post-data error probability stemming from hypothetical reasoning that takes into account the statistical context in (15) and is guided by .

[ii] Its evaluation is invariably based on a discrepancy relating to the noncentral distribution in (24), where and use the same n to output the warranted discrepancy .

3.2. The Robustness of the Post-Data Severity Evaluation

To exemplify the robustness of the SEV evaluation with respect to changing , consider replacing in (19) with the Nicolas Bernoulli value .

Example 2 (continued). Applying the same test yields

with . Is this ‘accept ’ result at odds with the previous ‘reject ’ result? In light of the relevant inferential claim is identical to the case with as it relates to the event : Thus, the severity curve is identical to the one in Figure 1, but now defined with respect to , i.e.,

as shown in Table 1, a feature of a sound account of statistical evidence.
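The invariance of the severity evaluation to the null value can be checked numerically. The sketch computes the severity of the claim "the parameter exceeds theta_1" through the observed test statistic and its approximate distribution under theta_1, once for a null value of 0.5 and once for 18/35. Both null values are assumptions here, since the values did not survive in this text; 18/35 is the figure usually attributed to Nicolas Bernoulli.

```python
import math
from statistics import NormalDist

n, theta_hat = 9869, 5152 / 9869  # Cyprus data from the text


def d_stat(theta_0):
    """Observed test statistic d(x0) for a given null value theta_0."""
    return math.sqrt(n) * (theta_hat - theta_0) / math.sqrt(theta_0 * (1 - theta_0))


def severity_via_d(theta_0, theta_1):
    """SEV(theta > theta_1) computed from d(x0) and its approximate
    N(delta_1, v_1) distribution when theta = theta_1."""
    scale = math.sqrt(theta_0 * (1 - theta_0))
    delta_1 = math.sqrt(n) * (theta_1 - theta_0) / scale
    v_1 = theta_1 * (1 - theta_1) / scale ** 2
    return NormalDist().cdf((d_stat(theta_0) - delta_1) / math.sqrt(v_1))


for theta_0 in (0.5, 18 / 35):
    sev = [round(severity_via_d(theta_0, t), 4) for t in (0.51, 0.515, 0.52)]
    print(f"theta_0 = {theta_0:.4f}: d(x0) = {d_stat(theta_0):.3f}, SEV = {sev}")
```

The test statistic changes markedly with the null value, but the null value cancels out of the severity expression, so the severity attached to each claim is the same in both runs.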

Table 1. SEV for ‘reject ’ and ‘accept ’ with (.

3.3. Post-Data Severity and the Replicability of Evidence

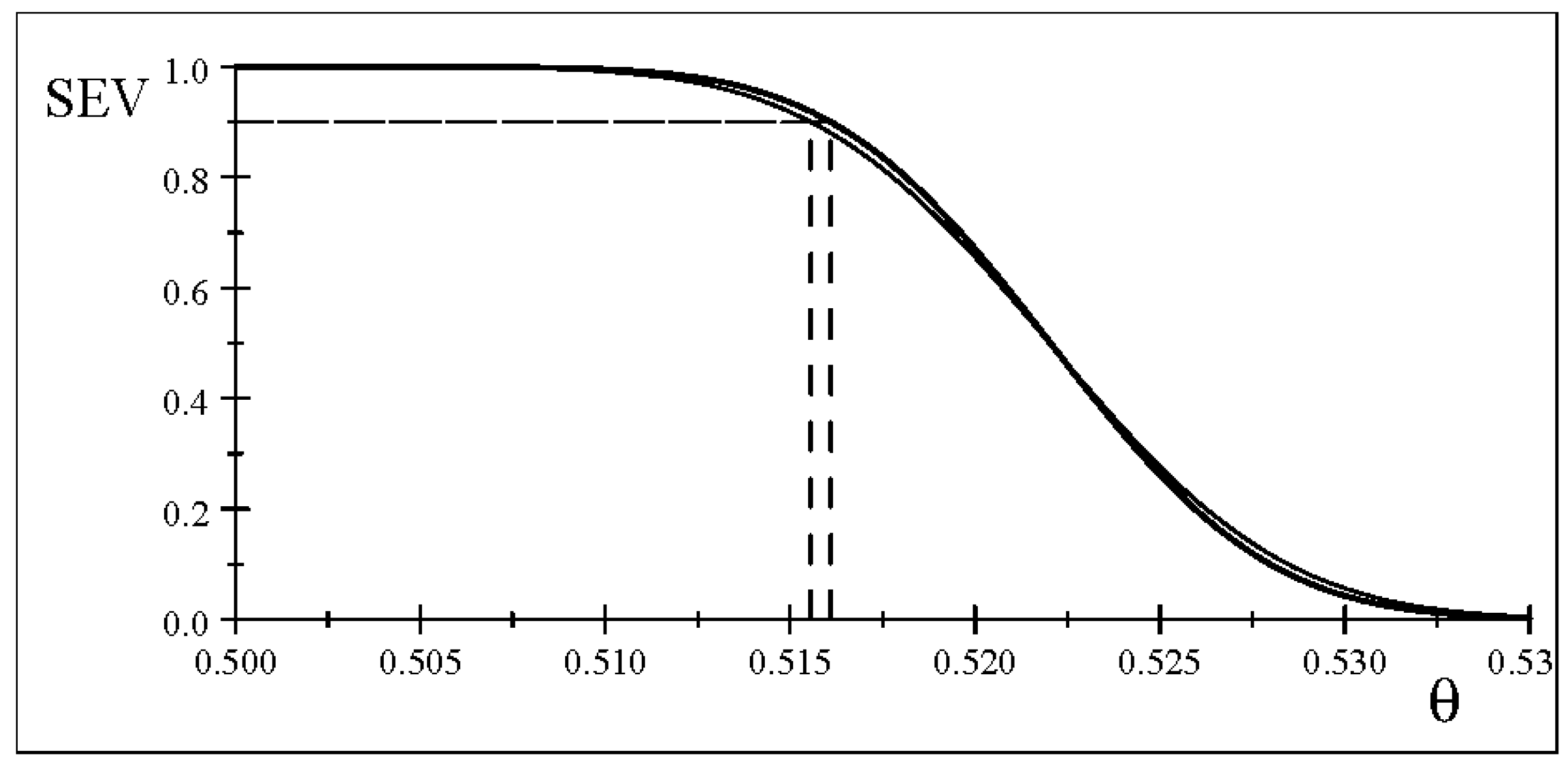

The post-data severity evaluation of the ‘accept/reject ’ results can also provide a more robust way to evaluate the replicability of empirical evidence based on comparing the discrepancies from the null value, warranted with similar data with high enough severity. To illustrate this, consider the following example that uses similar data from a different country more than three centuries apart.

Example 3. Data refer to newborns during 1668 in London (England), 6073 boys and 5560 girls, n = 11,633; see Arbuthnot [35]. The optimal test in (20) yields with rejecting : This result is almost identical to the result with data from Cyprus for 1995, but the question is whether the latter can be viewed as a successful replication with trustworthy evidence.

Evaluating the post-data severity with the same probability , the warranted discrepancy from by test and data is , which is very close to ; a fourth decimal difference.

As shown in Figure 2, the severity curves for data and almost coincide. This suggests that, for a statistically adequate , the post-data severity could provide a more robust measure of replicability associated with trustworthy evidence, than point estimates, effect sizes, observed CIs, or p-values. Indeed, it can be argued that the warranted discrepancy with high probability provides a much more robust testing-based effect size for the scientific effect; see Spanos [7].
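The comparison of warranted discrepancies across the two data sets can be sketched as follows. The counts come from the text; the null value 0.5, the severity level 0.9, the Normal approximation and the bisection search are assumptions of this sketch.

```python
import math
from statistics import NormalDist

THETA_0 = 0.5  # assumed null value
DATA = {"Cyprus 1995": (5152, 4717), "London 1668": (6073, 5560)}  # boys, girls


def warranted_discrepancy(boys, girls, level=0.9, tol=1e-10):
    """Largest gamma_1 with SEV(theta > THETA_0 + gamma_1) >= level."""
    n = boys + girls
    theta_hat = boys / n

    def sev(theta_1):
        z = math.sqrt(n) * (theta_hat - theta_1) / math.sqrt(theta_1 * (1 - theta_1))
        return NormalDist().cdf(z)

    lo, hi = THETA_0, theta_hat
    while hi - lo > tol:
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if sev(mid) >= level else (lo, mid)
    return lo - THETA_0


gammas = {label: warranted_discrepancy(*counts) for label, counts in DATA.items()}
for label, gamma in gammas.items():
    print(f"{label}: warranted discrepancy = {gamma:.5f}")
```

Under these assumptions the two warranted discrepancies differ only from the fourth decimal place onward, in line with the comparison made in the text.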

Figure 2.

Severity curves for data .

3.4. Statistical vs. Substantive Significance

The post-data severity evaluation can also address this problem by relating the discrepancy from () warranted by test and data with high probability, to the substantively (human biology) determined value . For that, one needs to supplement the statistical information in data with reliable substantive subject matter information to evaluate the ‘scientific effect’.

Example 2 (continued). Human biology informs us that the substantive value for the ratio of boys to all newborns is ; see Hardy [36]. Comparing with the severity-based warranted discrepancy, () indicates that the statistically determined entails the substantive significance, since . Hence, the statistical value also implies substantive significance.

3.5. Post-Data Severity and the Large n Problem

To alleviate the large n problem, some textbooks in statistics advise practitioners to keep reducing as n increases beyond see Lehmann and Romano [21]. A less arbitrary method is to agree that, say, for seems a reasonable standard, and then modify Good’s [37] standardization of the p-values into thresholds using the formula: as shown in Table 2. This standardization is also ad hoc since (i) it depends on an agreed standard, (ii) it relies on a simple scaling, and (iii) for the implied thresholds are tiny.

Table 2.

Standardized relative to .

The post-data severity evaluation of the accept/reject results can be used to shed light on the link between and Let us return to example 1 and assume that is large enough (a) to establish the statistical adequacy of the simple Bernoulli model in (18), (b) to avoid the small n problem, and (c) to provide a reliable enough estimate for . There are two possible scenarios one can consider.

Scenario 1 assumes that all different values of yield the same observed value of the test statistic . This scenario has been explored in the context of the SEV evaluation by Mayo and Spanos [31].

Scenario 2 assumes that as n increases beyond the changes in the estimate will be ‘relatively small’ to render approximately constant when the IID assumptions are valid for .

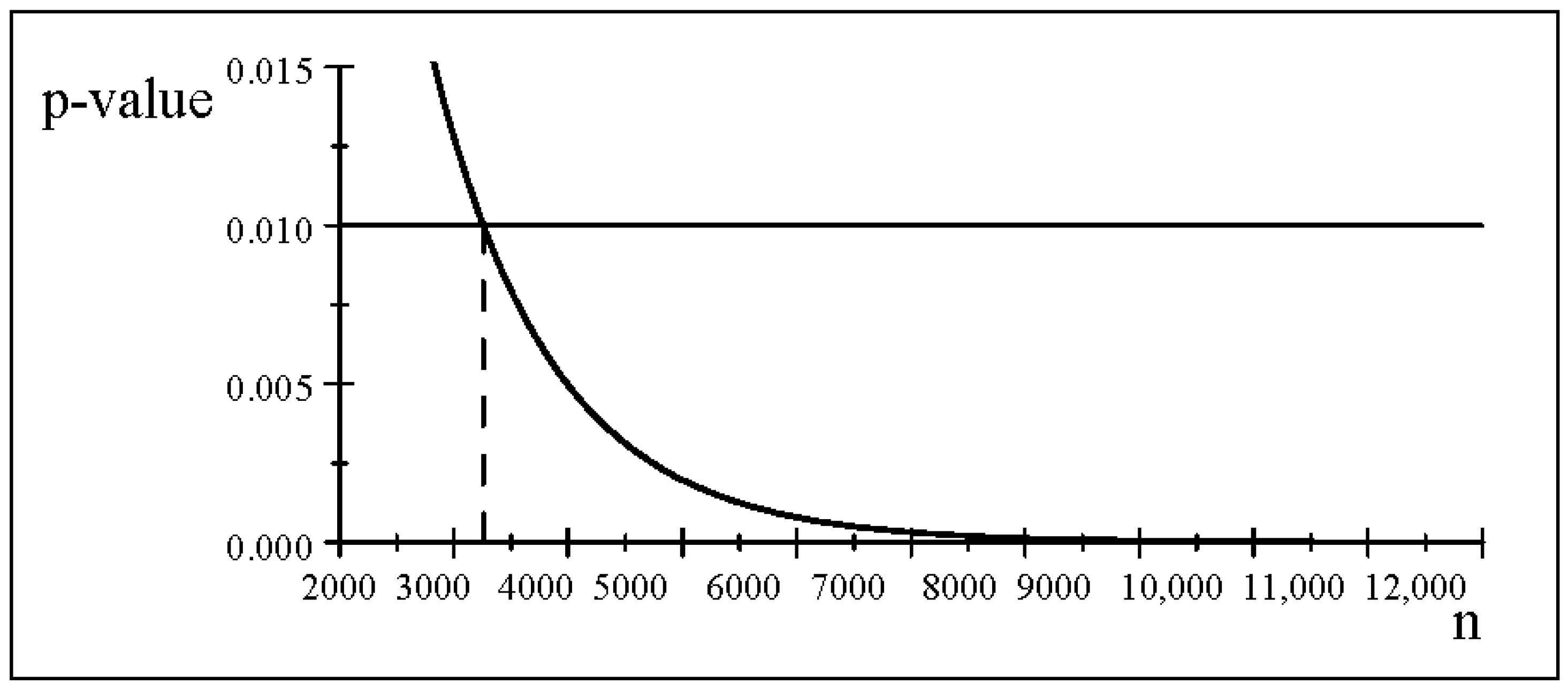

To explore scenario 2, let us return to example 2, related to testing : vs. : in the context of the simple Bernoulli model in (18), using data on newborns in Cyprus during 1995, 5152 (male) and 4717 (female). Particular values for n and are given in Table 3, indicating clearly that for 20,000 the p-value goes to zero very fast, and thus, the thresholds needed to counter the increase in n will be tiny.

Table 3.

The p-value with increasing n ( constant).

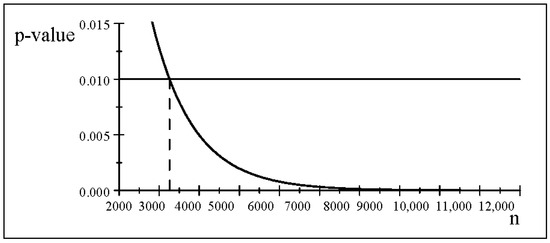

Figure 3 shows the p-value for different values of n, indicating that for the null hypothesis will be rejected, but for smaller n will be accepted. This indicates clearly that for a given the accept/reject results are highly vulnerable to abuse stemming from manipulating the sample size to obtain the desired result. This abuse can be addressed by the SEV evaluation for such results.
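The behavior described for scenario 2 can be reproduced with a short sketch. It assumes a null value of 0.5, a Normal approximation to the test statistic, and a sample proportion held fixed at the Cyprus value 5152/9869; the grid of sample sizes is illustrative and is not taken from Table 3.

```python
from math import erfc, sqrt

def p_value(xbar, n, theta0=0.5):
    """Upper-tail p-value of d(x0) under a Normal approximation (assumption)."""
    d = sqrt(n) * (xbar - theta0) / sqrt(theta0 * (1 - theta0))
    return 0.5 * erfc(d / sqrt(2))  # P(Z > d) for Z ~ N(0, 1)

xbar = 5152 / 9869                                # held constant as n varies
sizes = (100, 1000, 5000, 9869, 20000, 50000)     # illustrative grid
p_values = [p_value(xbar, n) for n in sizes]

for n, p in zip(sizes, p_values):
    print(f"n = {n:>6}  p-value = {p:.3e}")
```

Because the same sample proportion yields a different accept/reject result depending only on n, the sketch makes the vulnerability to sample-size manipulation concrete.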

Figure 3.

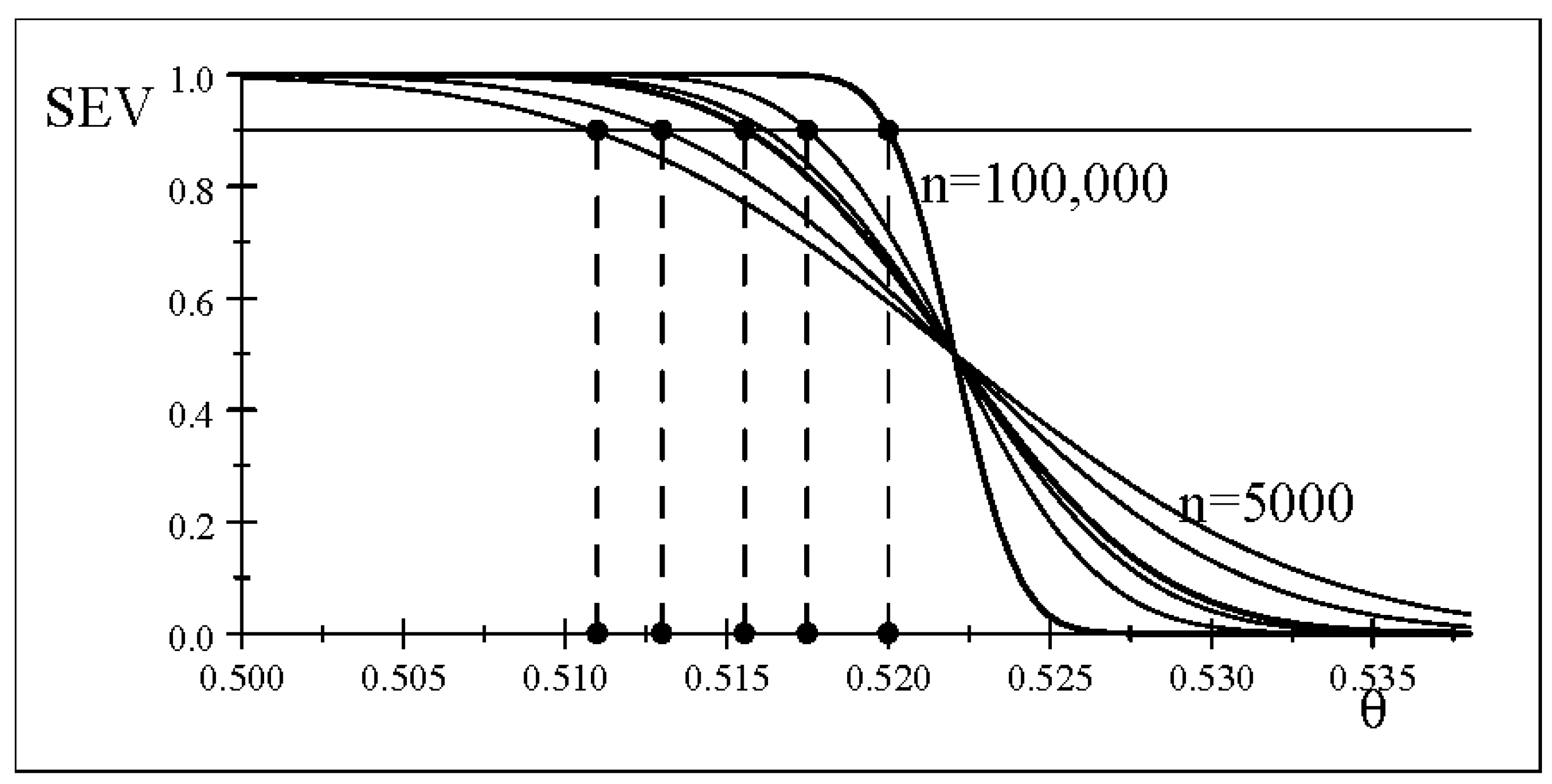

The p-value for and different n.

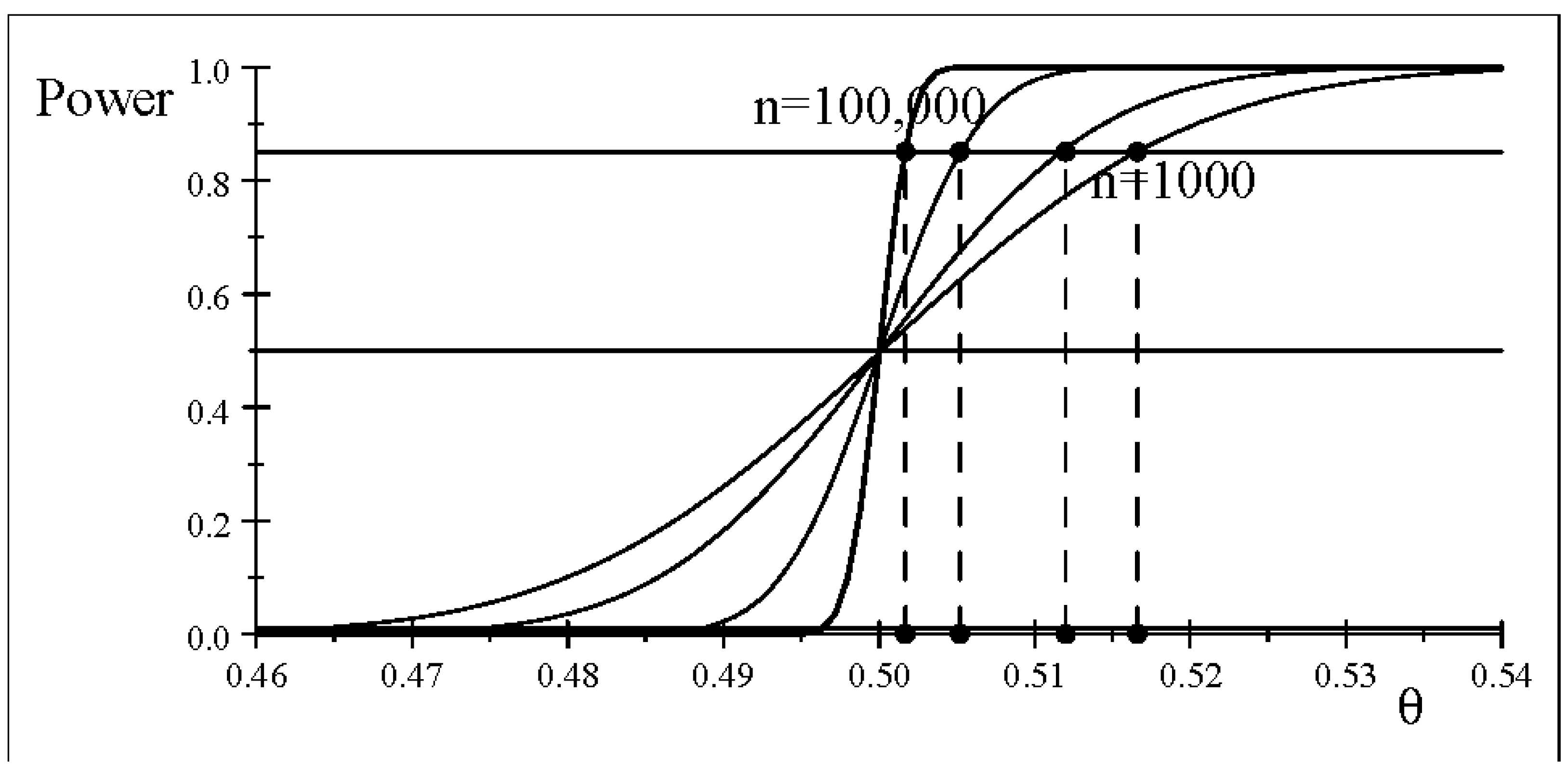

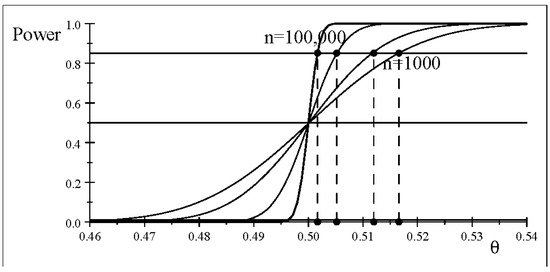

The other side of the large n coin relates to the increase in power for a given as n increases. Figure 4 shows that the power curve becomes steeper and steeper as n increases, reflecting the detection of smaller and smaller discrepancies from with probability . This stems from the fact that the power of the test in (20) is evaluated using the difference between a fixed and , which increases with , based on (24).
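The steepening of the power curve can be sketched in the same way. The function below is an assumption-laden illustration: it uses a Normal approximation for the test statistic under a point alternative theta1, a null value of 0.5, and a significance level of 0.01 chosen only for the example. The name `power` and the grid of sample sizes are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()

def power(theta1, n, theta0=0.5, alpha=0.01):
    """Approximate P(d(X) > c_alpha; theta = theta1) for the one-sided test."""
    c_alpha = Z.inv_cdf(1 - alpha)                 # rejection threshold
    se0 = sqrt(theta0 * (1 - theta0))
    delta1 = sqrt(n) * (theta1 - theta0) / se0     # shift of d(X) under theta1
    scale = sqrt(theta1 * (1 - theta1)) / se0      # rescaling of d(X) under theta1
    return 1 - Z.cdf((c_alpha - delta1) / scale)

theta1 = 0.51                                      # illustrative discrepancy of 0.01
sizes = (1000, 5000, 9869, 20000, 50000)
powers = [power(theta1, n) for n in sizes]

for n, pw in zip(sizes, powers):
    print(f"n = {n:>6}  power at theta1 = {theta1}: {pw:.4f}")
```

At the null value the function returns the significance level, and for any fixed alternative above the null the power rises with n, which is the pattern the power curves display.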

Figure 4.

The power curves for and different n.

The above numerical examples relating to test (20) under scenario 2 suggest that rules of thumb relating to decreasing as n increases, in an attempt to meliorate potentially spurious results, can be useful in tempering the trade-off between the type I and II error probabilities. They do not, however, address the large n problem, since they are ad hoc and their standardized thresholds decrease to zero beyond 100,000.

The post-data severity evaluation (SEV) of the accept/reject results constitutes a principled argument that addresses the large n problem by ensuring that the same n is used in both terms and when the warranted discrepancy is evaluated based on

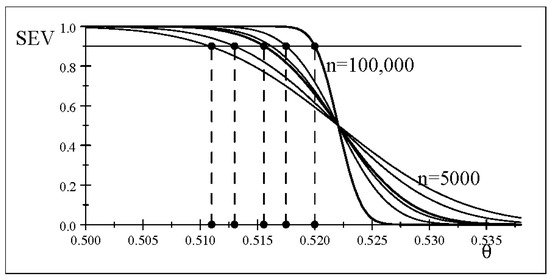

using (24), in contrast to the power, which replaces with in (26). To illustrate this argument, Table 4 reports the SEV evaluations using scenario 2, where is retained and n is allowed to vary below and above the original for values that give rise to reject . The numbers indicate that for a given , as n increases, the warranted discrepancy increases, or equivalently the for increases with

Table 4.

SEV with changing n ( is held constant).

The severity curves for the different n in Table 4 are shown in Figure 5, with the original and in heavy lines. The curves confirm the results in Table 4, and provide additional details, indicating the need to increase the benchmark of how high the probability should be to counter-balance the increase in n; hence the use of for example 2 () and 3 ( 11,633), and () for example 4.
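A rough numerical counterpart to the pattern in Table 4 is sketched below. It holds the sample proportion at the Cyprus value, fixes a hypothetical discrepancy of 0.01 from a null value of 0.5, and evaluates the severity of the claim that the parameter exceeds the null value plus that discrepancy under a Normal approximation. The sample sizes are illustrative and are not the entries of Table 4.

```python
from math import sqrt
from statistics import NormalDist

def sev_at_discrepancy(xbar, n, gamma, theta0=0.5):
    """Approximate SEV(theta > theta0 + gamma) after a rejection (assumption)."""
    theta1 = theta0 + gamma
    return NormalDist().cdf(sqrt(n) * (xbar - theta1) / sqrt(theta1 * (1 - theta1)))

xbar = 5152 / 9869                     # held constant as n varies
gamma = 0.01                           # hypothetical discrepancy of interest
sizes = (5000, 9869, 20000, 50000)     # illustrative values that lead to rejection
sev_values = [sev_at_discrepancy(xbar, n, gamma) for n in sizes]

for n, s in zip(sizes, sev_values):
    print(f"n = {n:>6}  SEV = {s:.5f}")
```

With the sample proportion fixed, the severity attached to the same discrepancy rises with n, which is why a higher benchmark probability is needed as n grows.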

Figure 5.

Severity curves for test and data .

4. Post-Data Severity and the Remedies Proposed by the Replication Literature

4.1. The Alleged Arbitrariness in Framing and

The issue of the framing of and in N-P testing has been widely misconstrued by the replication crisis literature, questioning its coherence and blaming the accept/reject results and the p-value, as providing misleading evidence and often non-replicable results. Contrary to that, in addition to Neyman and Pearson [23] warning against misinterpreting ‘accept ’ as evidence for and ‘reject ’ as evidence for they also put forward two stipulations (1–2) (Section 2.3) relating to the framing of and whose objective is to ensure the effectiveness of N-P testing and the informativeness of the ensuing results. The following example illustrates how a ‘nominally’ optimal test can be transformed into a wild goose chase when (1–2) are ignored.

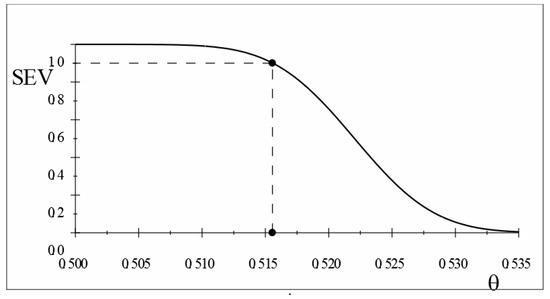

Example 4. In an attempt to demonstrate the ineptitude of the p-value as it compares to Bayesian testing, Berger [38] p. 4, put forward an example of testing:

in the context of the simple Bernoulli model in (18), with , and . Applying the UMP test yields: with a p-value , indicating ‘accept ’. Berger uses this “ridiculous” result to make a case for Bayesian statistics: “A sensible Bayesian analysis suggests that the evidence indeed favors, but only by a factor of roughly 5 to 1.” (p. 4). Viewing this result in the context of the N-P stipulations (1–2) (Section 2.3) reveals that the real source of this absurd result is likely to be the framing in (27) since it flouts both stipulations. Assuming the statistical adequacy of the invoked in (18), gives a broad indication of the potential neighborhood of In contrast, the framing in (27) ensures that the test has no power to detect any discrepancies around , since the implicit power is confirming that is the result of ignoring stipulations (1–2).

How can one avoid such abuses of N-P testing and secure trustworthy evidence? When there is no reliable information about the potential neighborhood around , one should always use the two-sided framing

that accords with the N-P stipulations (1–2). Applying the UMP unbiased test

(Lehmann and Romano [21]), reject with , with for . An even stronger rejection, with , will result by testing the hypotheses

using the UMP test

Applying the SEV evaluation to the result ‘reject ’, with based on (28), it is clear that the sign and magnitude of indicate that the relevant inferential claim is (C-2) implies that the relevant event is : to infer the warranted discrepancy with high probability, say, ():

which yields with the severity curve in Figure 6.

Figure 6.

Severity curve for test and data .

Note: the severity curve for the two-sided test would have been identical to that in Figure 6, since determines the direction of departure, irrespective of

Applying the post-data severity evaluation for discrepancies () gives which is the strongest possible evidence against , repudiating the Bayesian posterior odds of 5 to 1 for .

A potential counter-argument to the above discussion, claiming that the estimate could be the result of a bad draw, is not a well-grounded argument, since misspecification testing of the invoked would reveal whether is atypical, i.e., a bad draw from the sample . Recall that the adequacy of ensures that is a ‘typical’ realization thereof. It is worth mentioning, however, that example 4 with could be vulnerable to the small n problem discussed in Section 2.3.

4.2. The Call for Redefining Statistical Significance

In light of the above discussion on the large n problem, the call by Benjamin et al. [6] “For fields where the threshold for defining statistical significance for new discoveries is , we propose a change to .” (p. 6) seems visceral! It brushes aside the inherent trade-off between the type I and II error probabilities and the implied inverse relationship between the sample size n and the appropriate to avoid the large/small n problems; see Section 2.3. The main argument used by Benjamin et al. [6] is that empirical evidence from large-scale replications indicates that studies with are more likely to be replicable than those based on .

When this claim is viewed in the context of the broader problem of the uninformed and recipe-like implementation of statistical modeling and inference, together with the many different ways it can contribute to the untrustworthiness of empirical evidence, including (a–c), and the fact that replicability is neither necessary nor sufficient for the trustworthiness of empirical evidence, the above argument is unpersuasive. The threshold was never meant to be either arbitrary or fixed for all frequentist tests, and the above discussion of the large n problem shows that using for a large n, say 10,000, will often give rise to spurious significance results. Aware of the loss of power when decreases to , Benjamin et al. [6] call for increasing to ensure a high power of at some arbitrary . The problem with the proposed remedy is twofold. First, increasing n is often impracticable with observational data, and second, securing high power for arbitrary discrepancies is not conducive to learning about .

Another argument for lowering the threshold put forward by Benjamin et al. [6] stems from a misleading comparison between the two-sided p-value for the thresholds and and the corresponding Bayes factor:

where and denote the prior and the posterior distributions. This is an impertinent comparison since the Bayesian perspective on evidence, based on has a meager connection to the p-value as an indicator of discordance between and This is because the presumed comparability (analogy) between the tail areas of that varies over , and revolves around with the ratio in that varies with is ill-thought-out! The uncertainty accounted for by the former has nothing to do with that of the latter, since the posterior distribution accounts for the uncertainty stemming from the prior distribution, weighted by the likelihood function, both of which vary over .

4.3. The Severity Perspective on the p-Value, Observed CIs, and Effect Sizes

The p-value and the accept/reject results. The real problem is their binary nature created by the threshold , which gives rise to counter-intuitive results, such as for one rejects when and accepts when The SEV-based evidential account does away with this binary dimension since its inferential claim and the discrepancy from warranted with high probability are guided by the sign and magnitude of the observed test statistic . The SEV evaluation uses the statistical context in (15), in conjunction with the statistical approximations framed by the relevant sampling distribution of to deal with the binary nature of the results, as examples 2–4 illustrate.

Example 2 (continued). Let us return to the data denoting newborns in Cyprus, 5152 boys and 4717 girls during 1995, where the optimal test with indicates ‘reject ’ with When is viewed from the severity vantage point, it is directly related to evaluating the post-data severity for a zero discrepancy, i.e., since (Mayo and Spanos, [31]):

which suggests that a small p-value indicates the existence of some discrepancy but provides no information about its magnitude warranted by The severity evaluation remedies that by outputting the missing magnitude in terms of the discrepancy warranted by data and test with high probability by taking into account the relevant statistical context in (15). The key problem is that the p-value is evaluated using N, and thus, it contains no information relating to different discrepancies from , unlike the post-data severity evaluation, since it is based on (N.

Observed CIs and effect sizes. The question that arises is why the claim

with probability is unwarranted. As argued in Section 2.4 this stems from the fact that factual reasoning is baseless post-data, and thus, one cannot assign a probability to . This calls into question calls by the reformers in the replication crisis to replace the p-value with the analogous observed CI because the latter is (i) less vulnerable to the large n problem, and (ii) more informative than the p-value since it provides a measure of the ‘effect size’. Cohen’s [39] recommendation is: “routinely report effect sizes in the form of confidence intervals” (p. 1002).

Claim (i) is questionable because a CI is equally as vulnerable to the large n problem as the p-value since the expected length of a consistent CI shrinks to zero as n → ∞. In the case of (7) this takes the form

and thus, as n increases, the width of the observed decreases.
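The shrinking width can be illustrated with a small sketch. Since the interval in (7) is not reproduced here, the example assumes a Wald-type interval for the Bernoulli proportion as a stand-in, with the sample proportion held at the Cyprus value; the function name `wald_ci` and the sample sizes are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def wald_ci(xbar, n, level=0.95):
    """Wald-type observed CI for a Bernoulli proportion (illustrative stand-in)."""
    c = NormalDist().inv_cdf(1 - (1 - level) / 2)
    half = c * sqrt(xbar * (1 - xbar) / n)
    return xbar - half, xbar + half

xbar = 5152 / 9869
for n in (1000, 4000, 16000, 64000):
    lower, upper = wald_ci(xbar, n)
    print(f"n = {n:>6}  CI = ({lower:.5f}, {upper:.5f})  width = {upper - lower:.5f}")
```

Each fourfold increase in n halves the width, so the observed interval narrows toward the point estimate at rate 1/sqrt(n) regardless of how large the underlying scientific effect is.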

This also questions claim (ii), that it provides a reliable measure of the ‘effect size’, since the larger the n the smaller the observed CI. Worse, the concept of the ‘effect size’ was introduced partly to address the large n problem using a measure that is free of n: “…the raw size effect as a measure is that its expected value is independent of the size of the sample used to perform the significance test.” (Abelson [40], p. 46).

Example 2 (continued). The effect size for is known as Cohen’s (Ellis [30]). When evaluated using the Arbuthnot value , , which is rather small, and when evaluated using the Bernoulli value it is even smaller, . How do these values provide a better measure of the ‘scientific effect’? They do not, since Cohen’s g is nothing more than another variant of the unwarranted claim that for a large enough n; see Spanos [7]. On the other hand, the post-data severity evaluation outputs the discrepancy warranted by data and test with probability This implies that the severity-based effect size is which takes into account the relevant error probabilities that calibrate the uncertainty relating to the single realization . In addition, the SEV evaluation gives rise to identical severity curves (Figure 1) and the same evidence for both null values and

Severity and observed CIs. The great advantage of hypothetical reasoning is that it applies both pre-data and post-data, enabling the SEV to shed light on several foundational problems relating to frequentist inference more broadly. In particular, the severity evaluation of ‘reject ’ relating to the inferential claim has a superficial resemblance to in (8)-(b), especially if one were to consider as a relevant discrepancy of interest in the SEV evaluation; see Mayo and Spanos [31]. This resemblance, however, is more apparent than real since:

(i) The relevant sampling distribution for is and that for the SEV evaluation is St, ;

(ii) They are derived under two different forms of reasoning, factual and hypothetical, respectively, which are not interchangeable. Indeed, the presence of for the SEV evaluation renders the two assignments of probabilities very different. Hence, any attempt to relate the SEV evaluation to the illicit assignment is ill-thought-out since (a) it will (implicitly) impose the restriction , since leaves no room for the discrepancy and (b) the assigned faux probability will be unrelated to the coverage probability; see Spanos [29]. Also, the SEV evaluation can be used to shed light on several confounds relating to different attempts to assign probabilities to different values of within an observed CI, including the most recent attempt based on confidence distributions by Schweder and Hjort [20] above.

5. The SEV Evaluation vs. the Law of Likelihood

Royall [11] popularized an alternative approach to converting inference results into evidence using the likelihood ratio anchored on the Maximum Likelihood (ML) estimator . He rephrased Hacking’s [41] Law of Likelihood (LL): Data support hypothesis over hypothesis if and only if The degree to which supports better than is given by the Likelihood Ratio (LR):

by replacing the second sentence with “The likelihood ratio measures the strength of evidence for ”. The term “strength of evidence” is borrowed from Fisher’s [42] questionable claim about the p-value: “The actual value of p … indicates the strength of evidence against the hypothesis” (p. 80).

To avoid the various contradictions arising from allowing or to be composite hypotheses (Spanos, [32,43]), Royall’s account of evidence revolves around simple hypotheses vs. This, however, creates a problem with nuisance parameters that does not arise in the context of N-P testing where all the parameters of the invoked are viewed as an integral part of the inductive premises, irrespective of whether any one parameter is of particular interest.

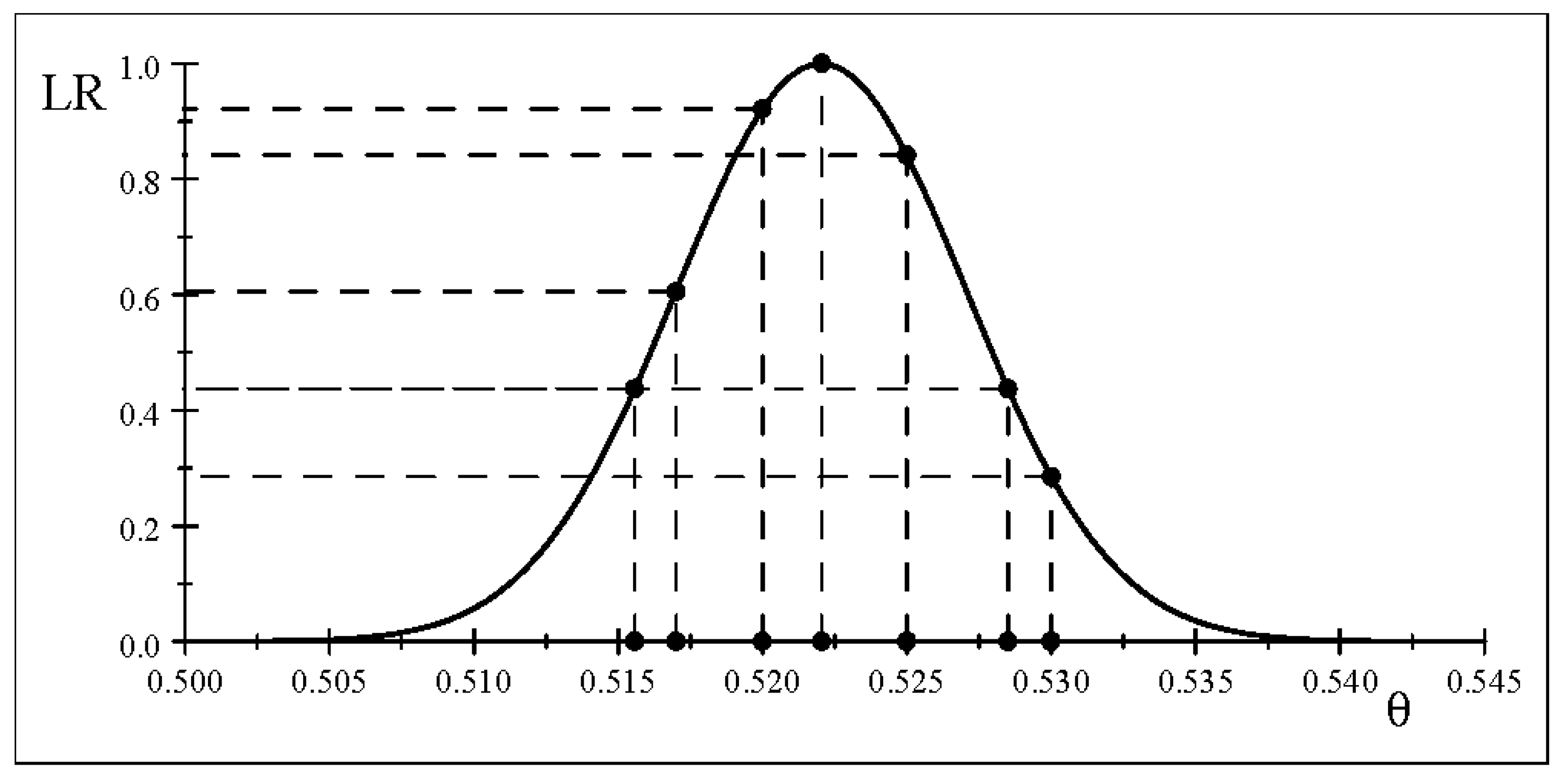

To exemplify the Royall LR (RLR) account of evidence let us return to example 2.

Example 2 (continued). Consider the data 5152 boys and 4717 girls in the context of the simple Bernoulli model in (18), yielding the Maximum Likelihood (ML) estimate of . For the likelihood function takes the form:

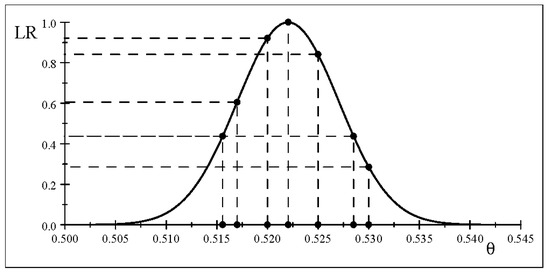

The likelihood function scaled by the ML estimate is (Figure 7):

Consider the hypotheses : vs. : whose likelihood ratio yields:

The first issue that arises is how to interpret in terms of the strength of evidence. Royall [11] proposes three thresholds:

Irrespective of how justified these thresholds are in the proposer’s mind, they can be easily disputed as ad hoc and arbitrary since the likelihood function , as well as the LR depend crucially on the invoked statistical model Hence, when is used to determine the appropriate distance between the two likelihoods, the notion of universal thresholds is dubious. This is because every likelihood function has an in-built natural distance relating to each of its parameters known as the score function: the derivative of the log-likelihood , evaluated at a particular point . Its key role stems from the fact that its mean is zero and its variance is equal to Fisher’s information; see Casella and Berger [19]. The score function indicates the sensitivity of to infinitesimal changes of . The problem is that the score function differs for different statistical models. For instance, for the simple Normal in (2) the score function is linear in , but for the simple Bernoulli model in (18) the score function is highly non-linear in , even though both parameters denote the mean of their underlying distributions. This calls into question the notion of LR universal thresholds, and could explain why so many different such thresholds have been proposed in the literature; see Reid [44].

Figure 7.

The RLR strength of evidence for 0.52204 vs. .

To shed light on Royall’s strength of evidence notion, Table 5 reports for and different values of including the value related to the SEV warranted discrepancy, [] with probability , as shown in Figure 7.

Table 5.

RLR strength of evidence for vs. different values of .

Using the above thresholds, the result indicates that the strength of evidence for vs. is ‘weak’, and the evidence will be equal or weaker for any . If one were to take a firm stand on providing ‘fairly strong’ evidence for the relevant range of values for will be .

What is even more problematic for the RLR approach is that has the same strength of evidence against the two values and , shown in Figure 7. This calls into question the nature of evidence the RLR approach gives rise to since it undermines the primary objective of frequentist inference. Its strength of evidence for is identical against two values on either side of the ML estimate value 0.52204, and as the threshold increases the distance between them increases. This derails any learning from data since it undermines the primary objective of narrowing down the relevant neighborhood for unless the choice is invariably the ML point estimate as it relates to the fallacious claim . This weakness was initially pointed out by an early pioneer of the likelihood ratio approach, Barnard [45], p. 129, in his belated review of Hacking [41]. He argued that for any prespecified value :

“… there always is such a rival hypothesis, viz. that things just had to turn out the way they actually did.”
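The two-sided symmetry of the likelihood ratio around the ML estimate can be checked with a short sketch. It uses the Bernoulli log-likelihood for the Cyprus data and solves, by bisection, for the two parameter values whose likelihood ratio against the ML estimate equals a threshold k. The value k = 8 is an illustrative assumption, and the helper names (`log_lik`, `lr_vs_mle`, `solve_lr`) and the search brackets 0.4 and 0.6 are hypothetical.

```python
from math import exp, log

def log_lik(theta, boys, n):
    """Bernoulli log-likelihood, up to an additive constant."""
    return boys * log(theta) + (n - boys) * log(1 - theta)

def lr_vs_mle(theta, boys, n):
    """Likelihood ratio of the ML estimate against theta."""
    return exp(log_lik(boys / n, boys, n) - log_lik(theta, boys, n))

def solve_lr(k, boys, n, a, b, tol=1e-12):
    """Bisection for theta in [a, b] with LR(ML estimate vs theta) = k.

    Assumes log LR - log k changes sign exactly once on [a, b].
    """
    def f(t):
        return log_lik(boys / n, boys, n) - log_lik(t, boys, n) - log(k)
    fa = f(a)
    while b - a > tol:
        mid = (a + b) / 2
        fm = f(mid)
        if (fm > 0) == (fa > 0):
            a, fa = mid, fm
        else:
            b = mid
    return (a + b) / 2

boys, n = 5152, 9869
mle = boys / n
k = 8.0                                       # illustrative threshold (assumption)
below = solve_lr(k, boys, n, 0.4, mle)        # root to the left of the ML estimate
above = solve_lr(k, boys, n, mle, 0.6)        # root to the right of the ML estimate
print(round(below, 5), round(mle, 5), round(above, 5))
```

Both roots receive the same likelihood ratio against the ML estimate, one on each side of it, so the ratio by itself cannot say in which direction the data point away from the ML estimate.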

As evidenced in Figure 7, the RLR will pinpoint the ML estimate no matter what the other value in happens to be since it is the maximally likely value; see Mayo [46]. No wonder Hacking [47], p. 137, in his review of Edwards’s [48] “Likelihood”, changed his mind and abandoned the LR approach altogether:

“I do not know how Edwards’s favoured concept [the difference of log-likelihoods] will fare. The only great thinker who tried it out was Fisher, and he was ambivalent. Allan Birnbaum and myself are very favourably reported in this book for things we have said about likelihood, but Birnbaum has given it up and I have become pretty dubious.”

Indeed, Hacking [49], p. 141, not only rejected the Law of Likelihood but went a step further by reversing his original viewpoint and wholeheartedly endorsing the N-P testing:

“This paper will show that the Neyman-Pearson theories of testing hypotheses and of confidence interval estimation are sound theories of probable inference.”

It is worth noting that even before the optimal N-P theory of testing was finalized in 1933, Pearson and Neyman [50] confronted the problem of how to construe by emphasizing the crucial role of the relevant error probabilities:

“But if we accept the criterion suggested by the method of the likelihood it is still necessary to determine its sampling distribution in order to control the errors involved in rejecting a true hypothesis, a knowledge of [] alone is not adequate to insure control of the error. … In order to fix a limit between "small" and "large" value of we must know how often such values appear when we deal with a true hypothesis.” (p. 106).

A critical weakness of the RLR approach is that learning from data about using two points at a time becomes untenable since the parameter space is usually uncountable, and thus the point will always belong to a set of measure zero; see Williams [51]. In addition, the ‘maximally likely value’ problem is compounded by the fact that the framing of runs afoul of the two crucial stipulations (1–2) introduced by Neyman and Pearson [23] in Section 2.3.

A likely counter-argument might be that the RLR approach ignores any potentially relevant error probabilities, but asymptotically the likelihood function will pinpoint , by invoking the Strong Law of Large Numbers (SLLN):

This argument is misplaced since it does not address the key problems relating to narrowing down the potential neighborhood of , to give rise to any learning from data. To begin with, as the above quote from Le Cam [27] indicates, such limit theorems are uninformative as to what happens with the particular data. Also, the probabilistic assignment in (29) relies on revolves around , and has nothing to do with and Indeed, for the same reason the Kullback-Leibler (K-L) divergence (Lele, [52] in [53]) defines the difference between and , and provides a comparative account relative to , and not as specified by (29). This implies that the RLR and the K-L divergence invoke (implicitly) a variant of the fallacious claim .

A closer look at the RLR approach to evidence reveals that provides nothing more than a ranking of all the different pairs of values of in relative to , regardless of the ‘true’ value This can be easily demonstrated using simulation with a known to show that, for the RLR search using different replications , and the associated ML estimates , it is unlikely that any one of them will be equal or very close to the true value. To get anything close to one should use all the replications for a sufficiently large N to approximate closely the sampling distribution of , and use the overall average This, however, is based on N data sets of sample size n not just the one, . Hence, the ratio contains no information relating to , beyond being a single observation. In this sense, the RLR is as unduly data-dependent as the point estimate in (16) and the associated observed CI in (17) since it also ignores the uncertainty stemming from being a single observation from
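The simulation argument can be sketched as follows. All settings are hypothetical choices made for illustration: a known true value of 0.5143, a sample size of 1000 per replication, 500 replications, and a fixed seed. The function name `ml_estimates` is likewise an assumption.

```python
import random
from statistics import mean

def ml_estimates(theta_star, n, replications, seed=0):
    """ML estimates (sample proportions) from repeated Bernoulli samples."""
    rng = random.Random(seed)
    return [
        sum(rng.random() < theta_star for _ in range(n)) / n
        for _ in range(replications)
    ]

theta_star = 0.5143            # hypothetical 'true' value, known only in the simulation
estimates = ml_estimates(theta_star, n=1000, replications=500)

spread = max(estimates) - min(estimates)      # variation across single replications
overall = mean(estimates)                     # average over all replications

print("range of single-sample ML estimates:", round(spread, 4))
print("error of the overall average:", round(abs(overall - theta_star), 5))
```

Individual estimates scatter widely around the true value, while only the average over many replications settles close to it, and that average is unavailable when a single data set is all one has.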

More importantly, comparing the results of the SEV evaluation in Table 1 with those based on in Table 5 indicates clearly that the two approaches to evidence are incompatible. The SEV evaluation relating to the ML estimate will always yield , rendering it unwarranted in principle! Worse, the SEV evaluation of the discrepancy associated with is warranted with probability , but the value , that the RLR approach assigns the same ‘strength of evidence’ relative to is warranted with probability Which of the two approaches gives rise to pertinent evidence stemming from constituting a sound inductive generalization of the relevant statistical results, the accept/reject for the SEV, and the ML estimate for the RLR?

One can make a credible case that the ML estimate , would lie in some broad neighborhood of , but narrowing that down sufficiently to learn about cannot be attained using . This stems from the fact that the RLR approach revolves around the ML estimate which is based on an estimation perspective that cannot be deployed post-data since has occurred, and thus has transpired. In contrast, the SEV evaluation is based on a testing perspective, which is equally pertinent for evaluating error probabilities pre-data and post-data.

The most crucial feature of the SEV evaluation is that it converts the accept/reject results into evidence by taking into account the statistical context in (15) including the power of the test. This is important since detecting a particular discrepancy, say , provides stronger evidence for its presence when the power is lower rather than higher; see Spanos [18]. The underlying intuition can be illustrated by imagining two people searching for metallic objects on the same beach, one using a highly sensitive metal detector that can detect small nails, and the other a much less sensitive one. If both detectors begin to buzz simultaneously, the one more likely to have found something substantial is the less sensitive one! The SEV evaluation harnesses this intuition by custom-tailoring the power of the test, replacing the original with the post-data , to establish the discrepancy warranted by data with high enough probability. As argued above, this enables the SEV evidential account to circumvent several foundational problems, including the large n problem. In contrast, the RLR account will yield the same strength of evidence for any two values of , irrespective of the size of n.

6. Summary and Conclusions

The replication crisis has exposed the apparent untrustworthiness of published empirical evidence, but its narrow attribution to certain abuses of frequentist testing can be called into question as ‘missing the forest for the trees’. A stronger case can be made that the real culprit is the much broader problem of the uninformed and recipe-like implementation of statistical methods, which contributes to the untrustworthiness in many different ways, including [a] imposing invalid probabilistic assumptions on one’s data, and [b] conflating unduly data-specific ‘inference results’ with ‘evidence for or against inferential claims about ’, which represent inductive generalizations of such results.

The above discussion makes a case that the post-data severity (SEV) evaluation provides an evidential account of the accept/reject results, in the form of a discrepancy from warranted with high enough probability by data and test . The SEV evaluation is framed in terms of a post-data error probability that accounts for the statistical context in (15), as well as the uncertainty stemming from inference results relying on a single realization of the sample . The SEV evaluation perspective is used to call into question Royall’s [11] LR approach to evidence as another rendering of the fallacious claim for a large enough n.

The SEV evaluation is also shown to elucidate/address several foundational issues confounding frequentist testing since the 1930s, including (i) statistical vs. substantive significance, (ii) the large n problem, and (iii) the alleged arbitrariness of the N-P framing and , used to undermine the coherence of frequentist testing. The SEV also oppugns the proposed alternatives to replace or modify frequentist testing, using statistical results, such as observed CIs, effect sizes, and redefining significance, all of which are equally vulnerable to [a]–[c] undermining the trustworthiness of empirical evidence. In conclusion, it is important to reiterate that unless one has already established the statistical adequacy of the invoked , any discussions relating to reliable inference results and trustworthy evidence based on tail area probabilities are unwarranted.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

All data used are available in published sources.

Conflicts of Interest

The author declares no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| N-P | Neyman–Pearson |
| MDS | Medical diagnostic screening |
| M-S | Misspecification |
| CI | Confidence interval |
| SEV | Post-data severity |

References

- National Academy of Sciences. Statistical Challenges in Assessing and Fostering the Reproducibility of Scientific Results: Summary of a Workshop; NA Press: Washington, DC, USA, 2016. [Google Scholar]

- Wasserstein, R.L.; Lazar, N.A. ASA’s statement on p-values: Context, process, and purpose. Am. Stat. 2016, 70, 129–133. [Google Scholar] [CrossRef]

- Baker, M. Reproducibility crisis. Nature 2016, 533, 353–366. [Google Scholar]

- Hoffler, J.H. Replication and Economics Journal Policies. Am. Econ. Rev. 2017, 107, 52–55. [Google Scholar] [CrossRef]

- Ioannidis, J.P.A. Why most published research findings are false. PLoS Med. 2005, 2, e124. [Google Scholar] [CrossRef] [PubMed]

- Benjamin, D.J.; Berger, J.O.; Johannesson, M.; Nosek, B.A.; Wagenmakers, E.J.; Berk, R.; Bollen, K.A.; Brembs, B.; Brown, L.; Camerer, C.; et al. Redefine statistical significance. Nat. Hum. Behav. 2017, 33, 6–10. [Google Scholar] [CrossRef] [PubMed]

- Spanos, A. Revisiting noncentrality-based confidence intervals, error probabilities and estimation-based effect sizes. J. Mathematical Stat. Psychol. 2021, 104, 102580. [Google Scholar] [CrossRef]

- Spanos, A. Curve-Fitting, the Reliability of Inductive Inference and the Error-Statistical Approach. Philos. Sci. 2007, 74, 1046–1066. [Google Scholar] [CrossRef]

- Leek, J.T.; Peng, R.D. Statistics: P values are just the tip of the iceberg. Nature 2015, 520, 520–612. [Google Scholar] [CrossRef]

- Spanos, A. On theory testing in Econometrics: Modeling with nonexperimental data. J. Econom. 1995, 67, 189–226. [Google Scholar] [CrossRef]

- Royall, R. Statistical Evidence: A Likelihood Paradigm; Chapman & Hall: New York, NY, USA, 1997.

- Fisher, R.A. On the mathematical foundations of theoretical statistics. Philos. Trans. R. Soc. A 1922, 222, 309–368.

- Spanos, A. Mis-Specification Testing in Retrospect. J. Econ. Surv. 2018, 32, 541–577.

- Spanos, A. Where Do Statistical Models Come From? Revisiting the Problem of Specification. In Optimality: The Second Erich L. Lehmann Symposium; Rojo, J., Ed.; Lecture Notes-Monograph Series; Institute of Mathematical Statistics: Beachwood, OH, USA, 2006; Volume 49, pp. 98–119.

- Spanos, A. Probability Theory and Statistical Inference: Empirical Modeling with Observational Data; Cambridge University Press: Cambridge, UK, 2019.

- Spanos, A. Akaike-type Criteria and the Reliability of Inference: Model Selection vs. Statistical Model Specification. J. Econom. 2010, 158, 204–220.

- Spanos, A. Frequentist Model-based Statistical Induction and the Replication crisis. J. Quant. Econ. 2022, 20 (Suppl. 1), 133–159.

- Spanos, A. Severity and Trustworthy Evidence: Foundational Problems versus Misuses of Frequentist Testing. Philos. Sci. 2022, 89, 378–397.

- Casella, G.; Berger, R.L. Statistical Inference, 2nd ed.; Duxbury: Pacific Grove, CA, USA, 2002.

- Schweder, T.; Hjort, N.L. Confidence, Likelihood, Probability: Statistical Inference with Confidence Distributions; Cambridge University Press: Cambridge, UK, 2016.

- Lehmann, E.L.; Romano, J.P. Testing Statistical Hypotheses; Springer: New York, NY, USA, 2005.

- Owen, D.B. Survey of Properties and Applications of the Noncentral t-Distribution. Technometrics 1968, 10, 445–478.

- Neyman, J.; Pearson, E.S. On the problem of the most efficient tests of statistical hypotheses. Philos. Trans. R. Soc. A 1933, 231, 289–337.

- Fisher, R.A. The Design of Experiments; Oliver and Boyd: Edinburgh, UK, 1935.

- Spanos, A. Revisiting the Large n (Sample Size) Problem: How to Avert Spurious Significance Results. Stats 2023, 6, 1323–1338.

- Spanos, A.; McGuirk, A. The Model Specification Problem from a Probabilistic Reduction Perspective. J. Am. Agric. Assoc. 2001, 83, 1168–1176.

- Le Cam, L. Asymptotic Methods in Statistical Decision Theory; Springer: New York, NY, USA, 1986.

- Neyman, J. Note on an article by Sir Ronald Fisher. J. R. Stat. Soc. Ser. B 1956, 18, 288–294.

- Spanos, A. Recurring Controversies about P values and Confidence Intervals Revisited. Ecology 2014, 95, 645–651.

- Ellis, P.D. The Essential Guide to Effect Sizes: Statistical Power, Meta-Analysis, and the Interpretation of Research Results; Cambridge University Press: Cambridge, UK, 2010.

- Mayo, D.G.; Spanos, A. Severe Testing as a Basic Concept in a Neyman-Pearson Philosophy of Induction. Br. J. Philos. Sci. 2006, 57, 323–357.

- Spanos, A. Who Should Be Afraid of the Jeffreys-Lindley Paradox? Philos. Sci. 2013, 80, 73–93.

- Mayo, D.G. Error and the Growth of Experimental Knowledge; The University of Chicago Press: Chicago, IL, USA, 1996.

- Mayo, D.G.; Spanos, A. Error Statistics. In Handbook of Philosophy of Science, Volume 7: Philosophy of Statistics; Gabbay, D., Thagard, P., Woods, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2011; pp. 151–196.

- Arbuthnot, J. An argument for Divine Providence, taken from the constant regularity observed in the birth of both sexes. Philos. Trans. 1710, 27, 186–190.

- Hardy, I.C.W. (Ed.) Sex Ratios: Concepts and Research Methods; Cambridge University Press: Cambridge, UK, 2002.

- Good, I.J. Standardized tail-area probabilities. J. Stat. Comput. Simul. 1982, 16, 65–66.

- Berger, J. Four Types of Frequentism and their Interplay with Bayesianism. N. Engl. J. Stat. Data Sci. 2022, 1–12.

- Cohen, J. The Earth is round (p < 0.05). Am. Psychol. 1994, 49, 997–1003.

- Abelson, R.P. Statistics as Principled Argument; Lawrence Erlbaum: Mahwah, NJ, USA, 1995.

- Hacking, I. Logic of Statistical Inference; Cambridge University Press: Cambridge, UK, 1965.

- Fisher, R.A. Statistical Methods for Research Workers; Oliver and Boyd: Edinburgh, UK, 1925.

- Spanos, A. Revisiting the Likelihoodist Evidential Account. J. Stat. Pract. 2013, 7, 187–195.

- Reid, N. Likelihood. In Statistics in the 21st Century; Raftery, A.E., Tanner, M.A., Wells, M.T., Eds.; Chapman & Hall: London, UK, 2002; pp. 419–430.

- Barnard, G.A. The logic of statistical inference. Br. J. Philos. Sci. 1972, 23, 123–132.

- Mayo, D.G. Statistical Inference as Severe Testing: How to Get Beyond the Statistical Wars; Cambridge University Press: Cambridge, UK, 2018.

- Hacking, I. Review: Likelihood. Br. J. Philos. Sci. 1972, 23, 132–137.

- Edwards, A.W.F. Likelihood; Cambridge University Press: Cambridge, UK, 1972.

- Hacking, I. The Theory of Probable Inference: Neyman, Peirce and Braithwaite. In Science, Belief and Behavior: Essays in Honour of R. B. Braithwaite; Mellor, D., Ed.; Cambridge University Press: Cambridge, UK, 1980; pp. 141–160.

- Pearson, E.S.; Neyman, J. On the problem of two samples. Bull. Acad. Pol. Sci. 1930, 73–96.

- Williams, D. Weighing the Odds: A Course in Probability and Statistics; Cambridge University Press: Cambridge, UK, 2001.

- Lele, S.R. Evidence functions and the optimality of the law of likelihood. In The Nature of Scientific Evidence: Statistical, Philosophical, and Empirical Considerations; Taper, M.L., Lele, S.R., Eds.; University of Chicago Press: Chicago, IL, USA, 2004; pp. 191–216.

- Taper, M.L.; Lele, S.R. The Nature of Scientific Evidence: Statistical, Philosophical, and Empirical Considerations; University of Chicago Press: Chicago, IL, USA, 2004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).