Abstract

Concurrence is a crucial entanglement measure in quantum theory used to describe the degree of entanglement between two or more qubits. Local unitary (LU) invariants can be employed to describe the relevant properties of quantum states. Compared to quantum state tomography, observing LU invariants can save substantial physical resources and reduce errors associated with tomography. In this paper, we use LU invariants as explanatory variables and employ methods such as multiple regression, tree models, and BP neural network models to fit the concurrence of 2-qubit quantum states. For pure states and Werner states, by analyzing the correlation between data, a functional formula for concurrence in terms of LU invariants is obtained. Additionally, for any two-qubit quantum states, the prediction accuracy for concurrence reaches 98.5%.

1. Introduction

The concept of quantum entanglement was first introduced as a peculiar physical phenomenon in the early stages of quantum mechanics, demonstrating a unique connection between two or more quantum systems []. It holds significant importance in the field of quantum cryptography, quantum teleportation, and quantum computing. Because of its importance, detecting and quantifying entanglement have been pivotal tasks in quantum information processing [,,,,]. In [,], the authors have proposed experimentally feasible direct detection of quantum entanglement based on the separability criterion of positive maps and the direct measure of a nonlocal wave function of a bipartite system using modular values, respectively.

Currently, scholars have proposed numerous highly practical measures of entanglement for various scenarios, such as entanglement formation [], negativity [], and the relative entropy of entanglement [], tailored to the nature of the research question. Among these entanglement measures, concurrence is a highly important one in quantum theory, which serves as an effective tool in studying quantum entanglement, enabling the description of quantum phase transition in many-body quantum systems’ interactions [,]. Furthermore, the value of concurrence can be used to estimate the formation of entanglement []. In 1997, Wootters et al. proposed an elegant formula in Ref. [] for calculating the concurrence of any arbitrary two-qubit quantum state as follows:

where are the non-negative square roots, in descending order, of the eigenvalues of the non-Hermitian matrix . And , where is the complex conjugate of matrix and stands for the Pauli-Y matrix.

However, in order to compute the concurrence of a bipartite system, it is necessary to possess complete information about the system’s density matrix, which can be achieved through quantum tomography. Even though the density matrix structure of a two-qubit quantum system is not excessively intricate, it still demands a certain quantum resource allocation and may entail measurement errors during tomography [,,]. Therefore, we aim to describe quantum systems using local unitary (LU) invariants of quantum states to mitigate errors introduced by tomography. In this scenario, prior knowledge of the system’s density matrix is not required. It suffices to determine the system’s Hamiltonian and subject it to specific transformations to compute these LU invariants.

In this paper, we endeavor to use machine learning techniques, including multivariate regression models, tree models, and neural network models, to uncover the mathematical relationship between LU invariants and concurrence within two-qubit systems. Our objective is to establish a model capable of estimating the degree of entanglement solely based on LU invariants, thus facilitating entanglement characterization without prior knowledge of the density matrix.

2. LU Invariants of Two-Qubit States

Before introducing LU invariants, let us first describe quantum states using Bloch representation. The Bloch representation of a two-qubit state can be then expressed as follows:

where represent the Pauli-X, Pauli-Y, and Pauli-Z matrices, respectively, and

In Ref. [], Makhlin proved that, in general, two two-body quantum states are local unitary equivalent if and only if the following 18 invariants (see Table 1 in detail) are equal.

Table 1.

The table shows the local unitary invariants along with their corresponding variable names. Here, stands for the triple scalar product , and is the Levi-Cevita symbol.

Due to concurrence also exhibiting local unitary invariance, we seek to describe each 2-qubit state using this set of LU invariants. Concurrence is a significant measure of entanglement for -dimension bipartite systems. For a bipartite pure state , where () denotes the m (n)-dimensional vector space associated with the subsystem A (B) such that , the concurrence [,] is defined by

with the reduced matrix obtained by tracing over the subsystem B, and denotes the tracing of the density matrix. The concurrence is then extended to mixed states by the convex roof:

where the minimum is taken over all possible ensemble decompositions of , and . The value of concurrence ranges from 0 to , where a concurrence of 0 indicates a separable state, values greater than 0 signify the presence of entanglement within the system, and represents a maximally entangled state. For two-qubit states, estimating its concurrence using machine learning methods can be viewed as a regression problem with values ranging from 0 to 1.

3. Constructing Regression Models

Machine learning is a method for extracting patterns, trends, and correlations from large-scale noisy data. By analyzing the features and patterns within the data, machine learning algorithms can automatically uncover hidden information and learn from it. This learning process enables machines to continuously improve and optimize their performance to better handle unknown data and scenarios. Faced with vast and complex datasets, machine learning becomes a powerful tool for solving real-world problems and making predictions. In this paper, the models we use are the multiple linear regression model, the BP neural network [], and the two tree models, eXtreme Gradient Boosting (XGBoost) [] and Light Gradient Boosting Machine(LGBM) []. The metrics of the assessment model adopted in this paper are and , and the definitions are as follows:

where n is the sample size, is the actual observations, is the model predictions, and is the mean of the observations. The smaller the , the closer the is to 1, the better the model explains the dependent variable.

In the following, we aim to use data analysis methods to input LU invariants as explanatory variables into an algorithmic model and fit the values of concurrence.

Due to the varying structures of density matrices representing quantum states, which reflect different properties and complexities of quantum systems, this experiment will progress from specific to general quantum state structures. Specifically, fitting concurrence will be conducted starting from Werner states, followed by pure states, and then mixed states.

3.1. For Werner States

The Werner states are a family of quantum states with a particular structure, proposed by R. Werner in 1989 []. It is a type of two-qubit quantum state with highly symmetric properties. The density matrix expression for the two-qubit Werner state is as follows:

where , representing the Bell state; I denotes the four-dimensional identity matrix; and p represents a real coefficient with . The value of p describes the evolution of the quantum system from a mixed state to a pure state. The degree of entanglement of the quantum system depends on the value of p: when , the Werner states are separable states; when , the Werner states are entanglement states; and when , the Werner states represent the maximal entangled states, i.e., Bell states.

For two-qubit Werner states, we can construct a family of quantum states by setting different values of p. Due to its specific structure, we can directly derive its concurrence using LU invariants. In Ref. [], Chen et al. proposed that the concurrence of Werner states is dependent on their parameters, and its formula is given by the following:

Accordingly, the LU invariant of the Werner state can be calculated,

Therefore, the formula can be obtained by using LU invariants to express the degree of concurrence

3.2. For Pure States

Pure states refer to quantum systems being in definite and precise states, where the pure states’ system will always collapse to specific measurement outcomes with deterministic probabilities under any measurement. Its density matrix can be represented by a state vector as

According to the definition of pure states, we randomly generate complex vectors in four dimensions where the complex vector consists of a real part and an imaginary part, both of which are randomly drawn from a uniform distribution. Next, dividing it by its L2-norm, we obtain normalized complex vectors . We calculate its concurrence using Equation (1) as the target value for our model. Additionally, we compute 18 LU invariants of the quantum state as explanatory variables. We generate a total of 100,000 quantum states as experimental data.

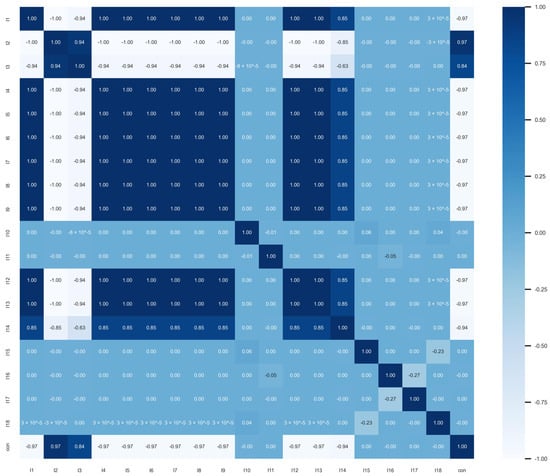

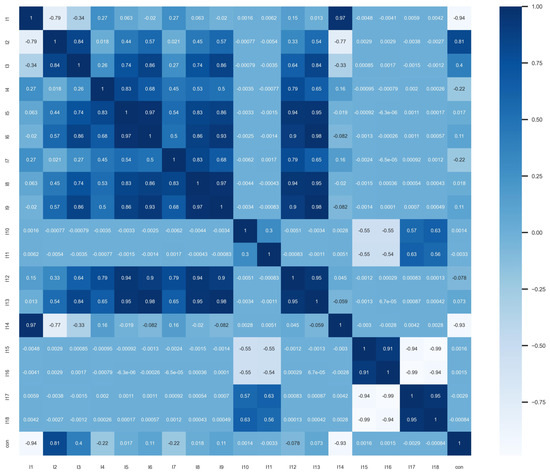

First, we calculate the correlation coefficient to check if there is a linear correlation between the variables. The results are presented in the form of a heatmap, as shown in Figure 1. For two-qubit pure states, there is a strong linear relationship between LU invariants and concurrence, such as between variables , , , and . Additionally, based on the calculation results, there is also a strong auto correlation between the explanatory variables. For example, and , and , and and exhibit a linear correlation of 1. We selected a penalized multivariate regression model, a nonlinear tree model, and a neural network model for model fitting.

Figure 1.

The figure shows a heatmap illustrating the linear correlation between 2−qubit pure state LU invariants and concurrence. In the heatmap, the linear correlation between concurrence and both and is particularly high.

The experimental data are split into training and testing sets in an 8:2 ratio, randomly selecting 80% of the samples for the training set and 20% for the testing set. Next, the models are fitted, and the evaluation results on the testing set are shown in Table 2. All five models demonstrated good performance.

Table 2.

Evaluation results of 2-qubit pure state models.

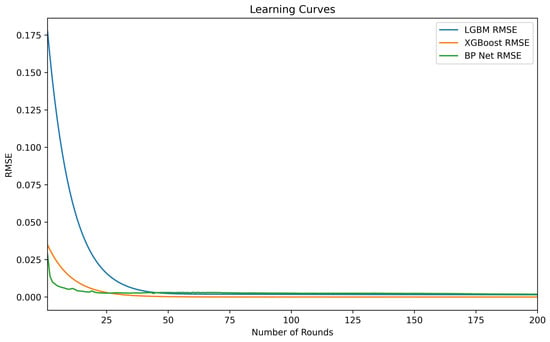

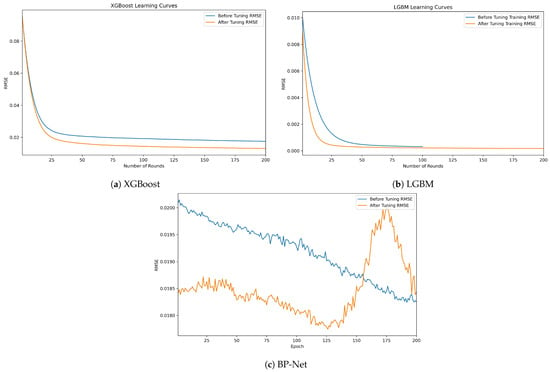

When fitting the model using a linear approach, we employed the penalized Lasso model due to multicollinearity among the variables. Compared to the multivariate linear regression model, the Lasso model reduced the multicollinearity among the independent variables, though at the cost of some accuracy, resulting in an R-squared value of 0.8779. When using nonlinear tree models for fitting, both the XGBoost and LGBM models exhibited better fitting performance than the linear model, with R-squared values reaching 0.9999. As shown in the learning curves of the training models in Figure 2, the XGBoost model converged faster than the LGBM model. Although the BP neural network model has the lowest of , its fitting performance was not as superior as the tree models.

Figure 2.

The learning curves for the 2-qubit pure state models show that the BP-Net converges the fastest, followed by XGBoost.

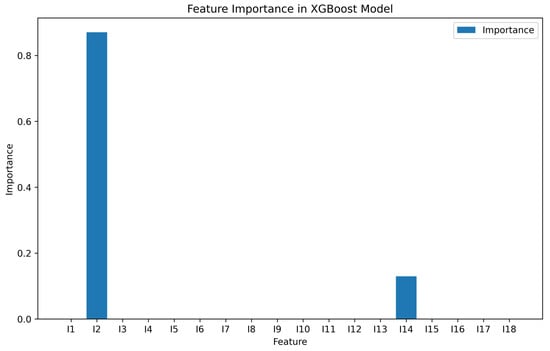

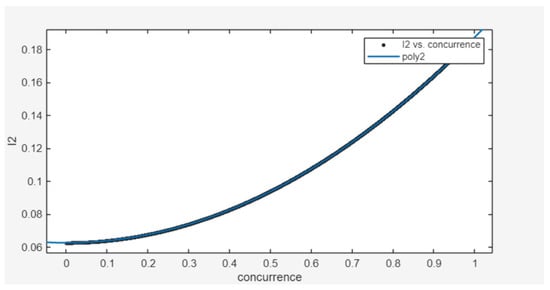

Since XGBoost demonstrated superior performance in the previous fitting, we output the feature importance of the model, as shown in Figure 3. In the XGBoost model, the concurrence of a 2-qubit pure state is related to the variables and . Therefore, we hypothesize that there must be some nonlinear relationship between the concurrence of a 2-qubit pure state and the variables and . After trying both primary and binary quadratic polynomials, it was found that the quadratic polynomial, which is satisfied when is used as the target variable and concurrence is used as the descriptor variable, was the most superior performing fit, as shown in Figure 4.

Figure 3.

The feature importance values from the XGBoost model indicate that concurrence is related to and , as shown in the figure.

Figure 4.

Using Matlab(R2024a)’s Curve Fitting Tool to fit a function between concurrence and the variable , when is the target variable and concurrence is the descriptive variable, it fits a quadratic polynomial. The fitting coefficient is 1, and the is nearly 0.

The functional relationship is as follows:

Further, the functional relationship between and concurrence can be obtained:

For this formula, we hope to achieve a more rigorous mathematical derivation in future research. Next, we will fit the concurrence for the general quantum states’ structure.

3.3. For General Structure States

To generate general two-qubit quantum states, we express the density matrices as follows:

where M is a randomly generated complex matrix where the real and imaginary parts of the elements in M are randomly drawn from a uniform distribution. Based on the above equation, we randomly generate 100,000 complex density matrices and calculate the 18 LU invariants and the concurrence of each density matrix as the experimental data. A boxplot is then drawn to observe the distribution of the variables.

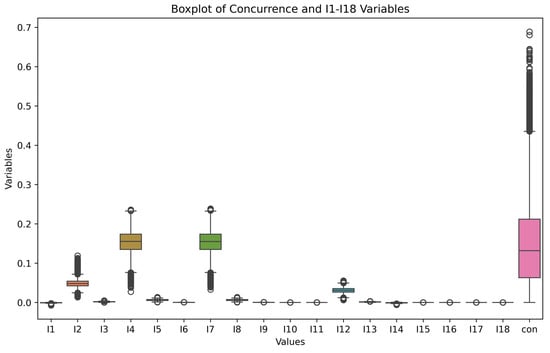

As shown in Figure 5, the concurrence of generated quantum states is mostly concentrated between 0 and 0.45. This is because the generated quantum states are mostly mixed states, and the entanglement of mixed states is weaker compared to pure states. The distributions of the invariants , , , and are more varied; the distributions of , , , , and are less varied; all the values of , , and are 1 because the 2-norms are themselves; and the values of the invariants , , and fluctuate around 0.

Figure 5.

The boxplot shows the numerical distribution of 2-qubit quantum state variables calculated using normalized s, p, and .

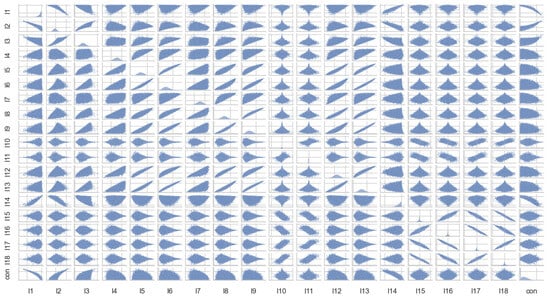

The scatterplot and correlation coefficient are then used to observe the linear correlation between the variables. As shown in Figure 6 and Figure 7, the concurrence of the target variable only has an obvious linear relationship with , , and , and the independent variables all have different degrees of linear correlation.

Figure 6.

Heatmap of correlation coefficients of 2−qubit quantum state variables; there is no obvious linear relationship between the variables and concurrence.

Figure 7.

Scatterplot of 2−qubit quantum state variables.

We substitute data into multiple linear regression models, the Lasso model, XGBoost model, LGBM model, and BP neural network model, for training. The performance results of the models on the test set are shown in Table 3. Due to the complexity of the universal quantum state density matrix structure, compared with the results of the simple pure state and the Werner state with a special structure, the fitting result of the multiple linear regression model is also great. However, due to the possibility of multicollinearity in the model, the model is overfitted. Therefore, the Lasso model is used to reduce the collinearity, and the decision coefficient of the model after fitting is only 0.8302. The tree model with the nonlinear structure XGBoost and LGBM still have the best fitting effect, with decision coefficients of 0.9665 and 0.9717, respectively, followed by the BP neural network model with a decision coefficient of 0.9488.

Table 3.

Evaluation results of 2-qubit generated state models.

In the following, we try to optimize the model.

Since there is no obvious linear relationship between the variables, we no longer consider using a linear model to fit the degree of concurrence.

For the tree model, the grid search algorithm is used to find the optimal parameters of the model, that is, to set the candidate value list of the hyperparameters, we traverse all the hyperparameters to combine them, train and evaluate each group of hyperparameters, and finally select the best performing hyperparameter combination as the hyperparameter of the model. The hyperparameters of the XGBoost model and LGBM model are shown in Table 4. The learning curve after parameter adjustment is shown in Figure 8. The blue one is the initial learning curve, and the orange one is the learning curve after parameter adjustment. The RMSE of the XGBoost model decreases significantly, and the convergence speed of the LGBM model also increases significantly.

Table 4.

Table of tree model parameters.

Figure 8.

Model learning curve after parameter tuning.

For the neural network model, grid search is also used to determine the hyperparameters of the model: the ReLU function is selected as the hidden layer activation function, four fully connected layers are set, Adam is used as the model optimizer, and the learning curve becomes stable when the number of iterations is 200 so as to build the final neural network model.

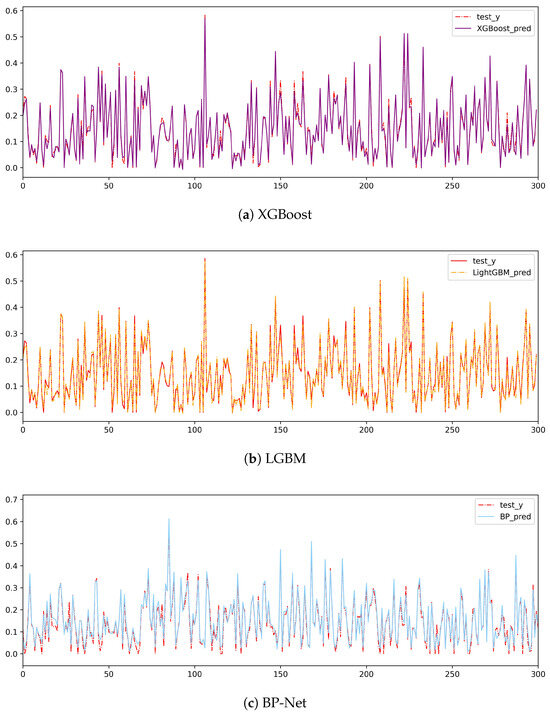

After the test set is substituted into the model to calculate the score, the results of which are shown in Table 5, the decision coefficients of the optimized model are improved to varying degrees. Among them, the tree model has the best fitting effect, followed by the BP neural network model. The comparison curve between the predicted value of the model and the true value is shown in Figure 9. Then, the pure state and Werner state of the special structure are substituted into the model for fitting. In the generated state, LGBM is the best performer, with the lowest and of 0.9855. XGBoost and BP-Net also perform better but slightly less well than LGBM. In the pure state, XGBoost and LGBM are very close to each other, with a very low RMSE and an R² of nearly 1. In the Werner state, all the models have a very low RMSE and an R² of nearly 1, which indicates that the models perform very well in the Werner state.

Table 5.

Evaluation results of 2-qubit quantum state models.

Figure 9.

Boxplot of the distribution of 2-qubit quantum state variables.

4. Conclusions and Discussion

Using classical machine learning to solve quantum information problems is one of the hot issues in current research. Classical machine learning methods provide more perspectives for exploring the structure of quantum states and studying the properties of quantum states. In this chapter, LU invariants are used as descriptor variables to train the model and predict the concurrence of quantum states. Compared with the calculation formula for concurrence, it does not need tomography of every element in the quantum state, saving a lot of physical resources. Moreover, the error of observing LU invariants is much smaller than that of tomography. For quantum states with a general structure, a more accurate prediction value of concurrence is obtained. The strong correlation between the concurrence and , , and and giving a lower bound on the concurrence by using , , and is a worthwhile thing to consider. In fact, has already given some theories on the lower bound on the concurrence degree, while the use of and to analyze the lower bound of concurrence presents a deeper research problem.

Author Contributions

Conceptualization, L.L.; Methodology, M.L., X.Z. and S.S.; Investigation, W.W.; Writing—original draft, X.Z.; Writing—review & editing, M.L. and J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by the Shandong Provincial Natural Science Foundation for Quantum Science No. ZR2021LLZ002 and the Fundamental Research Funds for the Central Universities Nos. 22CX03005A and 24CX03003A.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

No data were used for the research described in this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Horodecki, R.; Horodecki, P.; Horodecki, M. Quantum entanglement. Rev. Mod. Phys. 2009, 81, 865. [Google Scholar] [CrossRef]

- Peres, A. Separability criterion for density matrices. Phys. Rev. Lett. 1996, 77, 1413. [Google Scholar] [CrossRef] [PubMed]

- Audenaert, K.; Verstraete, F.; De Moor, B. Variational characterizations of separability and entanglement of formation. Phys. Rev. A 2001, 64, 052304. [Google Scholar] [CrossRef]

- Eisert, J.; Brandao, F.G.; Audenaert, K.M. Quantitative entanglement witnesses. New J. Phys. 2007, 9, 46. [Google Scholar] [CrossRef]

- Gühne, O.; Tóth, G. Entanglement detection. Phys. Rep. 2009, 474, 1–75. [Google Scholar] [CrossRef]

- Spengler, C.; Huber, M.; Brierley, S.; Adaktylos, T.; Hiesmayr, B.C. Entanglement detection via mutually unbiased bases. Phys. Rev. A 2012, 86, 022311. [Google Scholar] [CrossRef]

- Horodecki, P.; Ekert, A. Method for Direct Detection of Quantum Entanglement. Phys. Rev. Lett. 2002, 89, 127902. [Google Scholar] [CrossRef]

- Pan, W.W.; Xu, X.Y.; Kedem, Y.; Wang, Q.Q.; Chen, Z.; Jan, M.; Sun, K.; Xu, J.S.; Han, Y.J.; Li, C.F.; et al. Direct Measurement of a Nonlocal Entangled Quantum State. Phys. Rev. Lett. 2019, 123, 150402. [Google Scholar] [CrossRef]

- Bennett, C.H.; DiVincenzo, D.P.; Smolin, J.A.; Wootters, W.K. Mixed-state entanglement and quantum error correction. Phys. Rev. A 1996, 54, 3824. [Google Scholar] [CrossRef]

- Życzkowski, K.; Horodecki, P.; Sanpera, A.; Lewenstein, M. Volume of the Set of Separable States. Phys. Rev. A 1998, 58, 883. [Google Scholar] [CrossRef]

- Schumacher, B.; Westmorel, M.D. Relative entropy in quantum information theory. Contemp. Math. 2002, 305, 265–290. [Google Scholar]

- Osterloh, A.; Amico, L.; Falci, G.; Fazio, R. Scaling of entanglement close to a quantum phase transition. Nature 2002, 416, 608–610. [Google Scholar] [CrossRef] [PubMed]

- Vedral, V. Entanglement hits the big time. Nature 2003, 425, 28–29. [Google Scholar] [CrossRef] [PubMed]

- Wootters, W.K. Entanglement of formation and concurrence. Quantum Inf. Comput. 2001, 1, 27–44. [Google Scholar] [CrossRef]

- Wootters, W.K. Entanglement of formation of an arbitrary state of two qubits. Phys. Rev. Lett. 1998, 80, 2245. [Google Scholar] [CrossRef]

- Steffen, M.; Ansmann, M.; Bialczak, R.C.; Katz, N.; Lucero, E.; McDermott, R.; Neeley, M.; Weig, E.M.; Clel, A.N.; Martinis, J.M. Measurement of the entanglement of two superconducting qubits via state tomography. Science 2006, 313, 1423–1425. [Google Scholar] [CrossRef]

- D’Ariano, G.M.; Paris, M.G.A.; Sacchi, M.F. Quantum tomography. Adv. Imaging Electron Phys. 2003, 128, S1076–S5670. [Google Scholar]

- O’Donnell, R.; Wright, J. Efficient quantum tomography. In Proceedings of the Forty-Eighth Annual ACM Symposium on Theory of Computing, Cambridge, MA, USA, 19–21 June 2016; pp. 899–912. [Google Scholar]

- Makhlin, Y. Nonlocal Properties of Two-Qubit Gates and Mixed States, and the Optimization of Quantum Computations. Quantum Inf. Process. 2002, 4, 243–252. [Google Scholar] [CrossRef]

- Hill, S.; Wootters, W.K. Entanglement of a Pair of Quantum Bits. Phys. Rev. Lett. 1997, 78, 5022. [Google Scholar] [CrossRef]

- Zhou, Z.H. Machine Learning; Tsinghua University Press: Beijing, China, 2016. [Google Scholar]

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; pp. 785–794. [Google Scholar]

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A highly efficient gradient boosting decision tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; pp. 3149–3157. [Google Scholar]

- Werner, R.F. Quantum states with Einstein-Podolsky-Rosen correlations admitting a hidden-variable model. Phys. Rev. A 1989, 40, 4277. [Google Scholar] [CrossRef]

- Chen, K.; Albeverio, S.; Fei, S.M. Concurrence-based entanglement measure for Werner states. Rep. Math. Phys. 2006, 58, 325–334. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).