Abstract

In this paper, we consider classes of decision tables with many-valued decisions closed under operations of the removal of columns, the changing of decisions, the permutation of columns, and the duplication of columns. We study relationships among three parameters of these tables: the complexity of a decision table (if we consider the depth of the decision trees, then the complexity of a decision table is the number of columns in it), the minimum complexity of a deterministic decision tree, and the minimum complexity of a nondeterministic decision tree. We consider the rough classification of functions characterizing these relationships and enumerate all seven possible types of relationships.

1. Introduction

In this paper, we consider closed classes of decision tables with many-valued decisions and study the relationships among three parameters of these tables: the complexity of a decision table (if we consider the depth of decision trees, then the complexity of a decision table is the number of columns in it), the minimum complexity of a deterministic decision tree, and the minimum complexity of a nondeterministic decision tree.

A decision table with many-valued decisions is a rectangular table in which columns are labeled with attributes, rows are pairwise different, and each row is labeled with a nonempty, finite set of decisions. Rows are interpreted as tuples of values of the attributes. For a given row, it is required to find a decision from the set of decisions attached to the row. To this end, we can use the following queries: we can choose an attribute and ask what is the value of this attribute in the considered row. We study two types of algorithms based on these queries: deterministic and nondeterministic decision trees. One can interpret nondeterministic decision trees for a decision table as a way to represent an arbitrary system of true decision rules for this table that covers all rows. We consider in some sense arbitrary complexity measures that characterize the time complexity of decision trees. Among them, we distinguish so-called limited complexity measures, for example, the depth of decision trees.

Decision tables with many-valued decisions often appear in data analysis, where they are known as multilabel decision tables [1,2,3]. Moreover, decision tables with many-valued decisions are common in such areas as combinatorial optimization, computational geometry, and fault diagnosis, where they are used to represent and explore problems.

Decision trees [4,5,6,7] and decision rule systems [8,9,10,11,12] are widely used as classifiers, as a means of knowledge representation, and as algorithms for solving various problems in combinatorial optimization, fault diagnosis, etc. Decision trees and rules are among the most interpretable models in data analysis [13].

The depth of deterministic and nondeterministic decision trees for computing Boolean functions (the variables of a function are considered as attributes) has been studied quite intensively [14,15,16]. Note that the minimum depth of a nondeterministic decision tree for a Boolean function is equal to its certificate complexity [17].

We study classes of decision tables with many-valued decisions closed under four operations: the removal of columns, the changing of decisions, the permutation of columns, and the duplication of columns. The most natural examples of such classes are closed classes of decision tables generated by information systems [18]. An information system consists of a set of objects (universe) and a set of attributes (functions) defined on the universe and with values from a finite set. A problem over an information system is specified by a finite number of attributes that divide the universe into nonempty domains in which these attributes have fixed values. A nonempty finite set of decisions is attached to each domain. For a given object from the universe, it is required to find a decision from the set attached to the domain containing this object.

A decision table with many-valued decisions corresponds to this problem in a natural way: the columns of this table are labeled with the considered attributes, and the rows correspond to domains and are labeled with sets of decisions attached to domains. The set of decision tables corresponding to problems over an information system forms a closed class generated by this system. Note that the family of all closed classes is essentially wider than the family of closed classes generated by information systems. In particular, the union of two closed classes generated by two information systems is a closed class. In general, however, there is no information system that generates this class.

Various classes of objects that are closed under different operations have been intensively studied. Among them, in particular, are classes of Boolean functions closed under the operation of superposition [19], minor-closed classes of graphs [20], classes of read-once Boolean functions closed under the removal of variables and the renaming of variables, languages closed under taking factors, etc. Decision tables represent an interesting mathematical object deserving mathematical research, particularly regarding the study of closed classes of decision tables.

This paper continues the study of closed classes of decision tables that began with [21] and was then suspended for many years. In [21], we studied how the minimum depth of deterministic decision trees, and the depth of deterministic decision trees constructed by a greedy algorithm, depend on the number of attributes (columns) for conventional decision tables from classes closed under the operations of the removal of columns and the changing of decisions.

In the present paper, we study so-called t pairs , where is a class of decision tables closed under the considered four operations, and is a complexity measure for this class. The t pair is called limited if is a limited complexity measure. For any decision table , we have three parameters:

- The complexity of the decision table T. This parameter is equal to the complexity of a deterministic decision tree for the table T that sequentially computes the values of all attributes attached to the columns of T.

- The minimum complexity of a deterministic decision tree for the table T.

- The minimum complexity of a nondeterministic decision tree for the table T.

We investigate the relationships between any two such parameters for decision tables from . Let us consider, for example, the parameters and . Let . We study relations of the kind , which are true for any table . The minimum value of u is the most interesting for us. This value (if it exists) is equal to

We also study relations of the kind . In this case, the maximum value of l is the most interesting for us. This value (if it exists) is equal to

The two functions and describe how the behavior of the parameter depends on the behavior of the parameter for tables from .

There are 18 such functions for all ordered pairs of the parameters , , and . Together, these 18 functions describe the relationships among the considered parameters in detail. It would be very interesting to describe the 18-tuples of these functions for all t pairs and all limited t pairs, but this is a very difficult problem.

In this paper, instead of functions, we study types of functions. With any partial function , we associate its type from the set . For example, if the function f has an infinite domain and is bounded from above, then its type is equal to . If the function f has an infinite domain, is not bounded from above, and the inequality holds for only a finite number of , then its type is equal to . Thus, we enumerate the 18-tuples of the types of functions. These tuples are represented in tables called the types of t pairs. We prove that there are only seven realizable types of t pairs and only five realizable types of limited t pairs.

First, we study 9-tuples of the types of functions , . These tuples are represented in tables called upper types of t pairs. We enumerate all the realizable upper types of t pairs and limited t pairs. After that, we extend the results obtained for the upper types of t pairs to the case of the types of t pairs. We also define the notion of a union of two t pairs and study how the upper type of the resulting t pair depends on the upper types of the initial t pairs.

The obtained results allow us to point out cases where the complexity of deterministic and nondeterministic decision trees is essentially less than the complexity of the decision table (see Section 2.3). This finding may prove useful in related applications.

This paper is based on the work of [22], in which similar results were obtained for classes of problems over information systems. We generalize the proofs from [22] to the case of decision tables from closed classes and use some results from [22] to prove the existence of t pairs and limited t pairs with given upper types.

In our previous work [7], we considered functions characterizing the growth in the worst case of the minimum complexity of deterministic and nondeterministic decision trees with the growth of the complexity of the set of attributes attached to columns of the conventional decision table and also obtained preliminary results on the behavior of the function characterizing the relationship between the former two parameters. In the current work, we mainly focus on the rough classification of types.

The paper consists of eight sections. In Section 2, the basic definitions are considered. In Section 3, we provide the main results related to the types of t pairs and limited t pairs. In Sections 4, 5, and 6, we study the upper types of t pairs and limited t pairs. Section 7 contains proofs of the main results, and Section 8 provides short conclusions.

2. Basic Definitions

2.1. Decision Tables and Closed Classes

Let be the set of non-negative integers. For any , let . The set of nonempty finite subsets of the set will be denoted by . Let F be a nonempty set of attributes (really, the names of attributes).

Definition 1.

We now define the set of decision tables . An arbitrary decision table T from this set is a rectangular table with columns labeled with attributes , where any two columns labeled with the same attribute are equal. The rows of this table are pairwise different and are filled in with numbers from . Each row is interpreted as a tuple of values of attributes . For each row in the table, a set from is attached, which is interpreted as a set of decisions for this row.

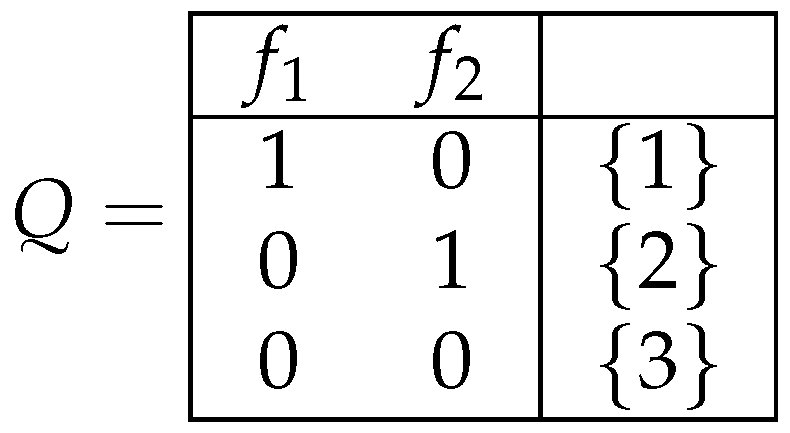

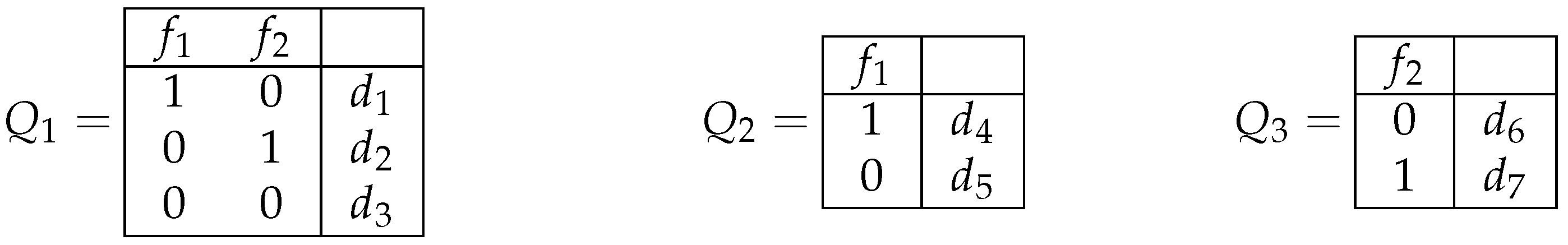

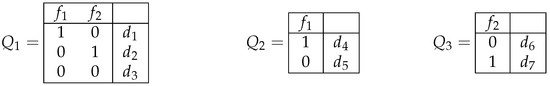

Example 1.

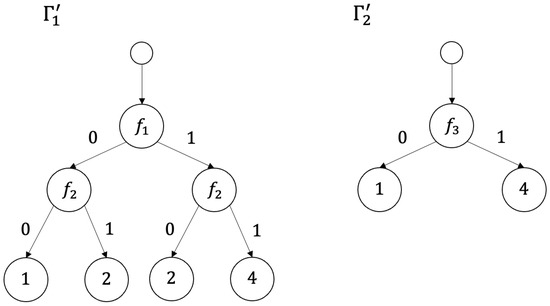

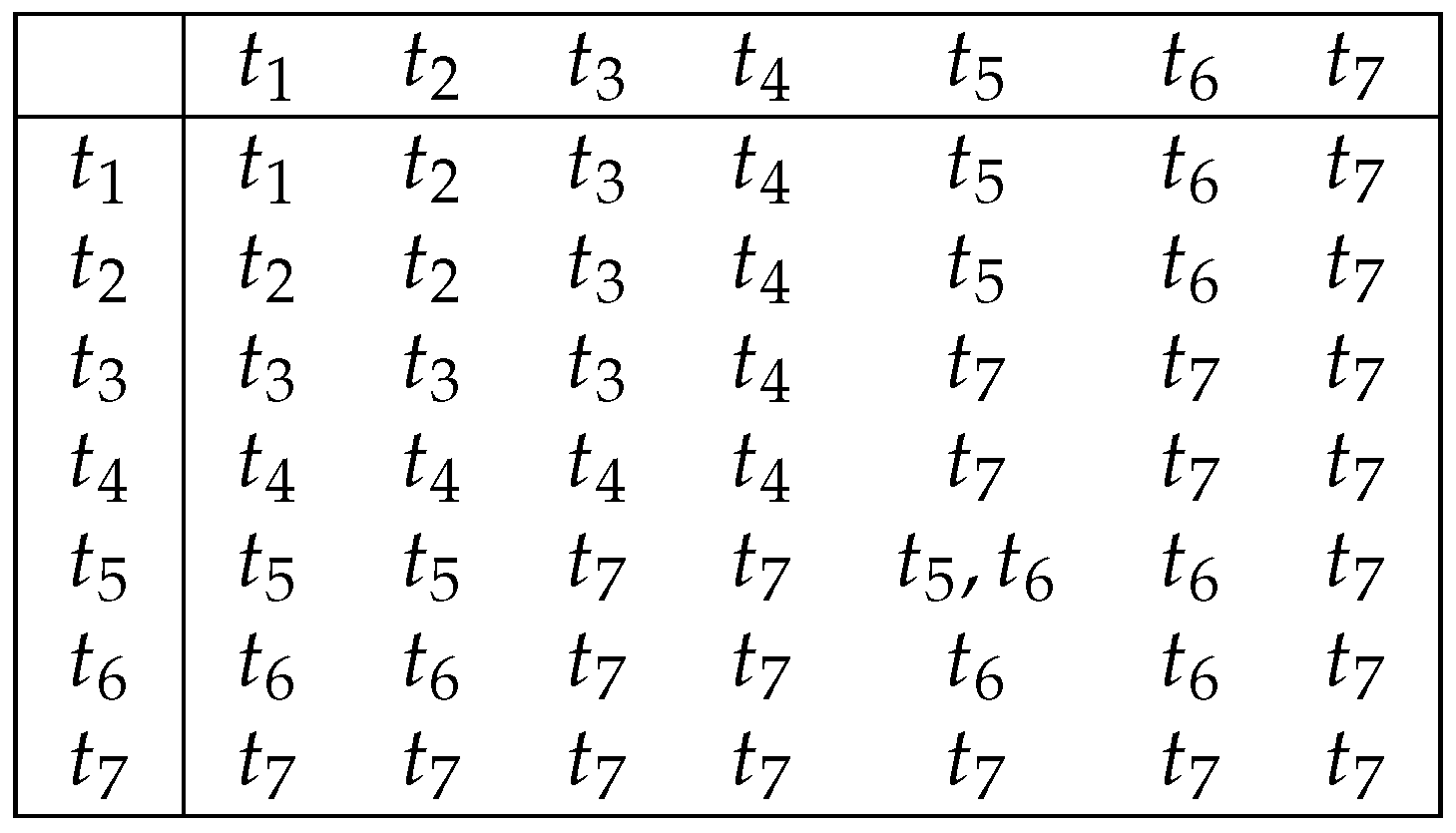

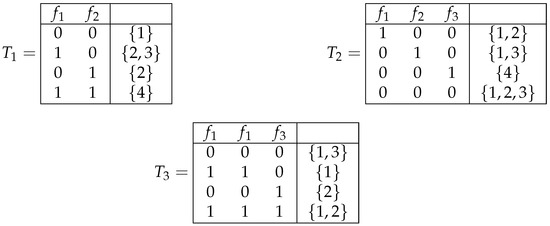

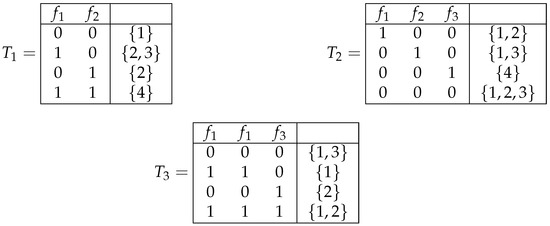

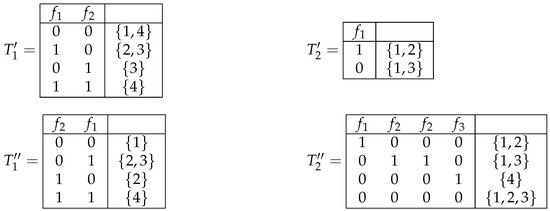

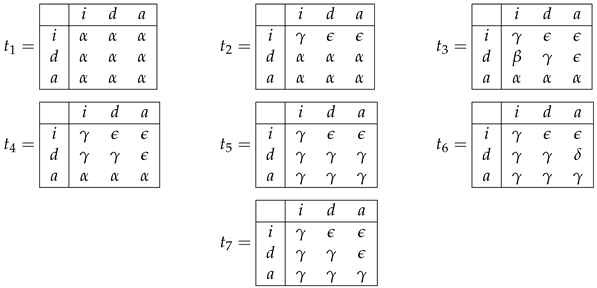

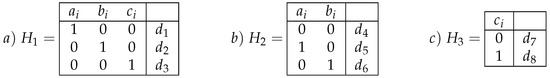

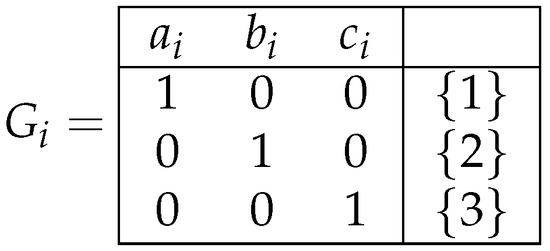

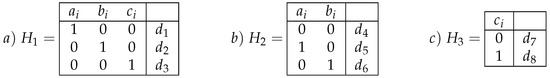

Three decision tables , , and from the set , where , are shown in Figure 1.

Figure 1.

Decision tables , , and .

We associate the following problem with the table T: for a given row of T, we should find a decision from the set of decisions attached to this row. To this end, we can use queries about the values of the attributes for this row.

We denote as the set of attributes attached to the columns of T. denotes the intersection of the sets of decisions attached to the rows of T, and by , we denote the set of rows of the table T. Decisions from are called common decisions for T. The table T will be called degenerate if or if . We denote as the set of degenerate decision tables from .
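The notions introduced above can be illustrated with a minimal Python sketch. The class name `DecisionTable`, its fields, and the convention that the set of common decisions of an empty table is empty are assumptions made only for this illustration. The degeneracy test follows the reading that a table is degenerate if it has no rows or has at least one common decision.

```python
from typing import Dict, FrozenSet, Iterable, List, Tuple

Row = Tuple[int, ...]


class DecisionTable:
    """Illustrative container (hypothetical name): column labels plus a
    map from each row to its nonempty set of decisions."""

    def __init__(self, attributes: List[str], rows: Dict[Row, Iterable[int]]):
        self.attributes = list(attributes)
        self.rows: Dict[Row, FrozenSet[int]] = {}
        for row, decisions in rows.items():
            if len(row) != len(self.attributes):
                raise ValueError("row length must equal the number of columns")
            if not decisions:
                raise ValueError("each row needs a nonempty set of decisions")
            # Dictionary keys make the rows pairwise different by construction.
            self.rows[tuple(row)] = frozenset(decisions)

    def common_decisions(self) -> FrozenSet[int]:
        """Intersection of the decision sets of all rows.
        Assumption: for a table without rows we return the empty set."""
        sets = list(self.rows.values())
        return frozenset.intersection(*sets) if sets else frozenset()

    def is_degenerate(self) -> bool:
        """Degenerate: no rows at all, or at least one common decision."""
        return not self.rows or bool(self.common_decisions())
```

For instance, a table whose two rows carry the decision sets {1, 2} and {2, 3} is degenerate, because 2 is a common decision.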

Example 2.

Two degenerate decision tables, and , are shown in Figure 2.

Figure 2.

Degenerate decision tables and .

Definition 2.

A subtable of the table T is a table obtained from T through the removal of some of its rows. Let and be the set of all finite words in the alphabet , including the empty word λ. Let . We now define a subtable of the table T. If , then . Let . Then, consists of all the rows of T that, in the intersection with columns , have values , respectively.
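The construction of a subtable from a word of attribute-value pairs can be sketched as follows. This is an illustration under assumptions: a table is represented as a list of column labels together with a dictionary from rows to decision sets, and the function name `subtable` is hypothetical. The empty word leaves the table unchanged.

```python
def subtable(attributes, rows, alpha):
    """Return the rows of the table that agree with every pair in alpha.

    attributes: list of column labels
    rows:       dict mapping each row (a tuple) to its set of decisions
    alpha:      list of (attribute, value) pairs; [] plays the role of
                the empty word
    """
    kept = {}
    for row, decisions in rows.items():
        # Columns with the same label are equal, so the first column
        # carrying the label f is enough to read the value of f.
        if all(row[attributes.index(f)] == value for f, value in alpha):
            kept[row] = decisions
    return kept
```

Note that the result may have no rows at all, for example when the word fixes a combination of values that does not occur in the table.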

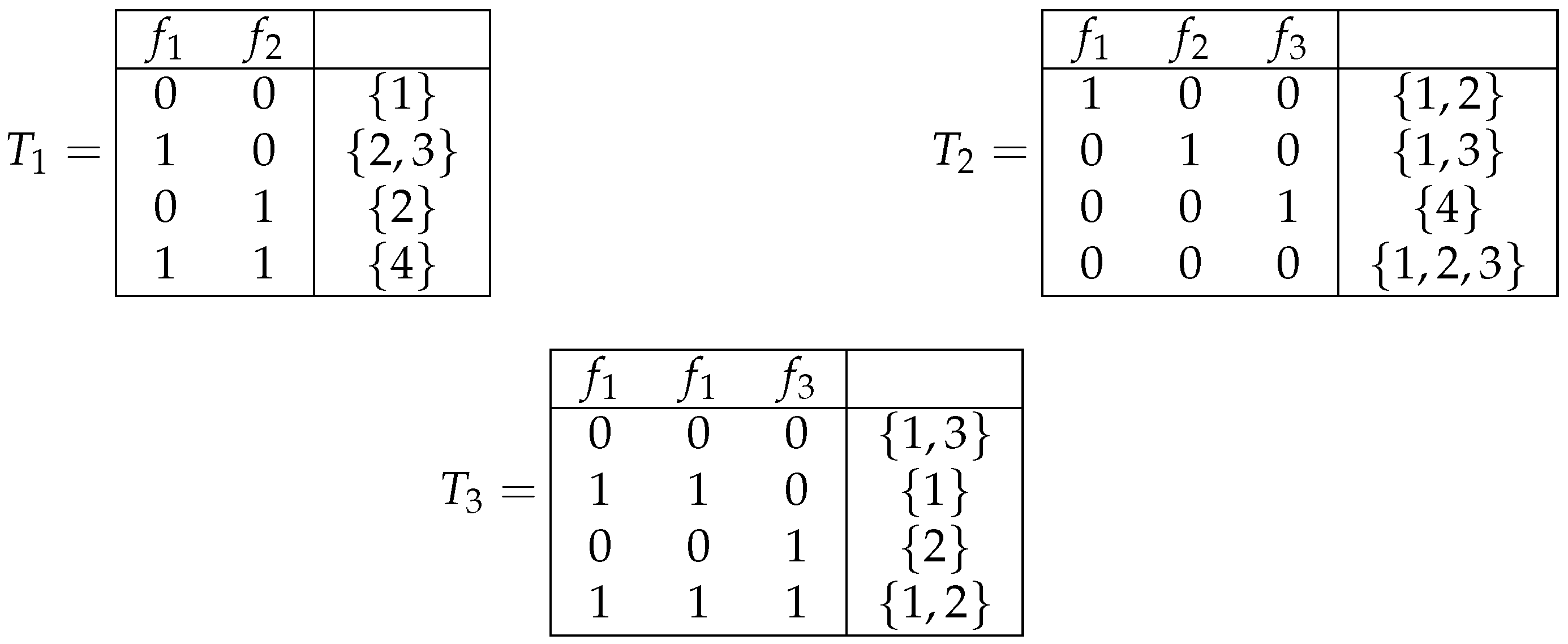

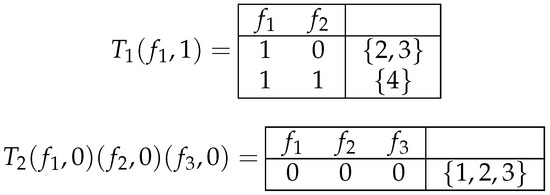

Example 3.

Figure 3.

Subtables and of tables and shown in Figure 1.

We now define four operations on the set of decision tables:

Definition 3.

Removal of columns: We can remove an arbitrary column in a table T with at least two columns. As a result, the obtained table can have groups of equal rows. We keep only the first row in each such group.

Definition 4.

Changing of decisions: In a given table T, we can change in an arbitrary way sets of decisions attached to rows.

Definition 5.

Permutation of columns: We can swap any two columns in a table T, including the attached attribute names.

Definition 6.

Duplication of columns: For any column in a table T, we can add its duplicate next to that column.

Definitions 5 and 6 characterize the two most natural examples of operations applied to information systems. Definitions 3 and 4 allow us to say that we cover important classes of information systems (see Section 2.4).
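The four operations of Definitions 3 to 6 can be sketched in Python as follows. The representation (a list of column labels plus a dictionary from rows to decision sets) and all function names are assumptions made for illustration. The sketch relies on Python dictionaries preserving insertion order, so "the first row in each group" means the first row in the order in which the rows were given.

```python
def remove_column(attrs, rows, j):
    """Definition 3: drop column j; of each group of equal rows keep the first."""
    if len(attrs) < 2:
        raise ValueError("the table must have at least two columns")
    new_rows = {}
    for row, decisions in rows.items():
        short = row[:j] + row[j + 1:]
        new_rows.setdefault(short, decisions)  # later duplicates are ignored
    return attrs[:j] + attrs[j + 1:], new_rows


def change_decisions(attrs, rows, new_decisions):
    """Definition 4: attach arbitrary nonempty decision sets to the same rows."""
    if set(new_decisions) != set(rows) or not all(new_decisions.values()):
        raise ValueError("need one nonempty decision set per existing row")
    return list(attrs), {row: frozenset(new_decisions[row]) for row in rows}


def swap_columns(attrs, rows, i, j):
    """Definition 5: swap columns i and j together with their labels."""
    def swap(seq):
        seq = list(seq)
        seq[i], seq[j] = seq[j], seq[i]
        return seq
    return swap(attrs), {tuple(swap(row)): d for row, d in rows.items()}


def duplicate_column(attrs, rows, j):
    """Definition 6: insert a copy of column j right after it."""
    new_attrs = attrs[:j + 1] + [attrs[j]] + attrs[j + 1:]
    new_rows = {row[:j + 1] + (row[j],) + row[j + 1:]: d for row, d in rows.items()}
    return new_attrs, new_rows
```

Each function returns a new table and leaves its arguments unchanged, so the operations can be composed freely when exploring the closure of a small table.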

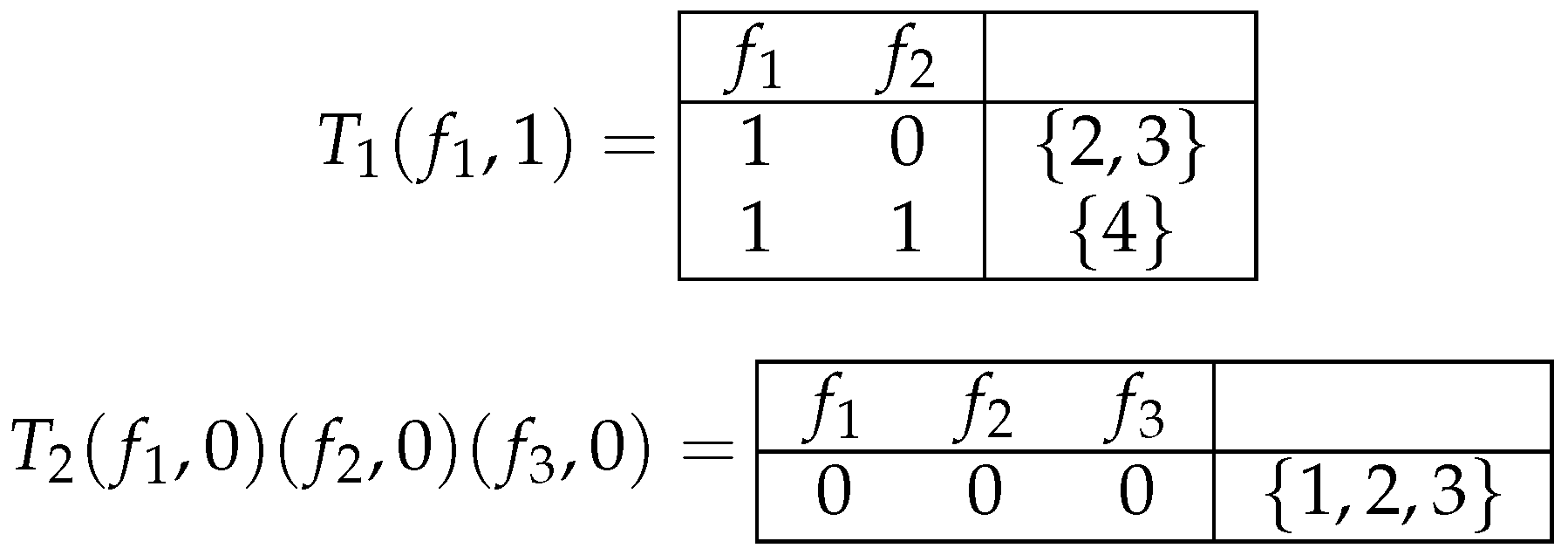

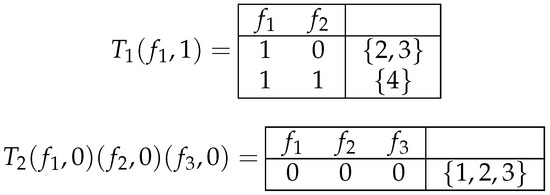

Example 4.

Decision tables ,,, and depicted in Figure 4 are obtained from decision tables and shown in Figure 1 by operations of changing the decisions, removal of columns, permutation of columns, and duplication of columns, respectively.

Figure 4.

Decision tables ,,, and obtained from tables and shown in Figure 1 by operations of changing the decisions, removal of columns, permutation of columns, and duplication of columns, respectively.

Definition 7.

Let . The closure of the table T is the set that contains all the tables that can be obtained from T by the operations of the removal of columns, the changing of decisions, the permutation of columns, and the duplication of columns, and only such tables. We denote the closure of the table T by . It is clear that .

Definition 8.

Let . The closure of the set is defined in the following way: . We say that is a closed class if . In particular, the empty set of tables is a closed class.

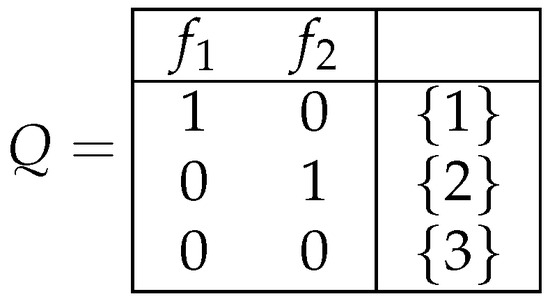

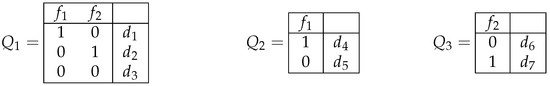

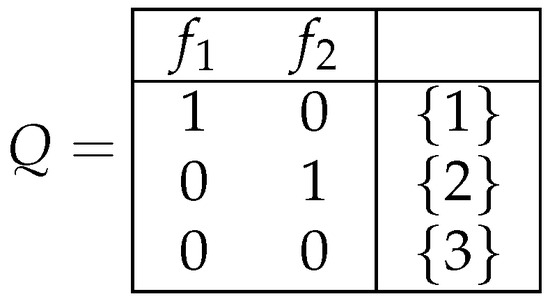

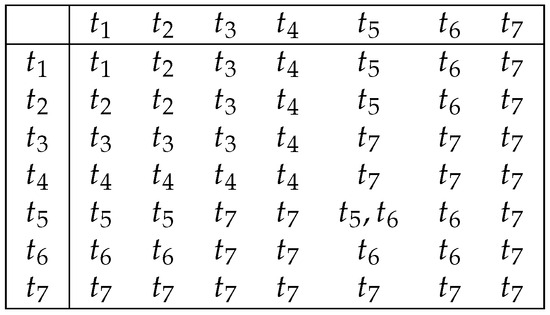

Example 5.

We now consider a closed class of decision tables from the set , which is equal to , where the decision table Q is depicted in Figure 5. The closed class contains all the tables depicted in Figure 6 and all the tables that can be obtained from them by the operations of the duplication of columns and the permutation of columns.

Figure 5.

Decision table Q.

Figure 6.

Decision tables from closed class , where .

If and are closed classes belonging to , then is also a closed class. We can consider closed classes and belonging to different sets of decision tables. Let and . Then, is a closed class, and .

2.2. Deterministic and Nondeterministic Decision Trees

A finite directed tree with the root is a finite directed tree in which exactly one node has no entering edges. This node is called the root. Nodes of the tree that have no outgoing edges are called terminal nodes. Nodes that are neither the root nor terminal nodes are called worker nodes. A complete path in a finite directed tree with the root is any sequence of nodes and edges starting from the root node and ending with a terminal node , where is the edge outgoing from the node and entering the node .

Definition 9.

A decision tree over the set of decision tables is a labeled finite directed tree with the root with at least two nodes (the root and a terminal node) possessing the following properties:

- The root and the edges outgoing from the root are not labeled.

- Each worker node is labeled with an attribute from the set F.

- Each edge outgoing from a worker node is labeled with a number from .

- Each terminal node is labeled with a number from .

We denote as the set of decision trees over the set of decision tables .

Definition 10.

A decision tree from is called deterministic if it satisfies the following conditions:

- Exactly one edge leaves the root.

- The edges outgoing from each worker node are labeled with pairwise different numbers.

Let be a decision tree from . Denote as the set of attributes attached to the worker nodes of . Set . Denote as the set of all finite words in the alphabet , including the empty word . With an arbitrary complete path in , we associate a word . If , then . Let and, for , the node is labeled with an attribute , and the edge is labeled with the number . Then, . We denote as the number attached to the terminal node of the path . We denote as the set of complete paths in the tree .

Definition 11.

Let . A nondeterministic decision tree for the table T is a decision tree Γ over satisfying the following conditions:

- For any row and any complete path , if , then belongs to the set of decisions attached to the row r.
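The following Python sketch checks whether a tree, given by its complete paths, is a nondeterministic decision tree for a table. It rests on two assumptions. First, a tree is represented only by the list of its complete paths, each being a pair of a word of (attribute, value) pairs and the decision at the terminal node. Second, in addition to the condition stated above, every row must be covered by at least one complete path, in line with the description in the Introduction of such trees as systems of true decision rules that cover all rows. All names are hypothetical.

```python
def row_matches(attrs, row, word):
    """True if the row has the prescribed value for every pair in the word."""
    return all(row[attrs.index(f)] == value for f, value in word)


def is_nondeterministic_tree_for(attrs, rows, paths):
    """Check the covering condition (assumed) and the truth condition.

    attrs: list of column labels
    rows:  dict mapping each row to its set of decisions
    paths: list of (word, decision) pairs, one per complete path
    """
    for row, decisions in rows.items():
        reached = [dec for word, dec in paths if row_matches(attrs, row, word)]
        if not reached:
            return False  # no complete path accepts this row
        if any(dec not in decisions for dec in reached):
            return False  # some accepting path ends in a wrong decision
    return True
```

Under this representation, a path whose word is empty accepts every row, so a single such path is a valid tree exactly when its decision is common to all rows.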

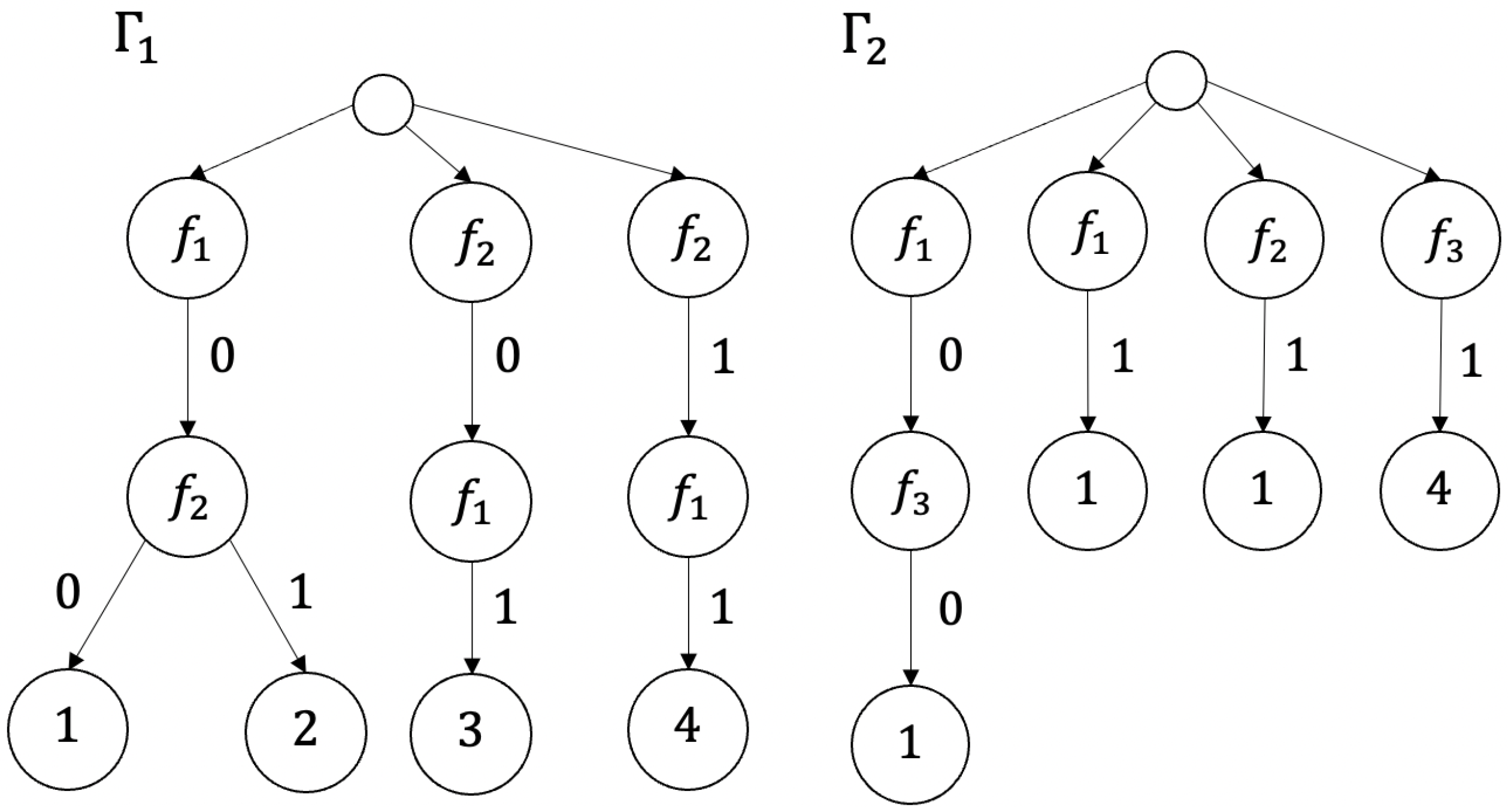

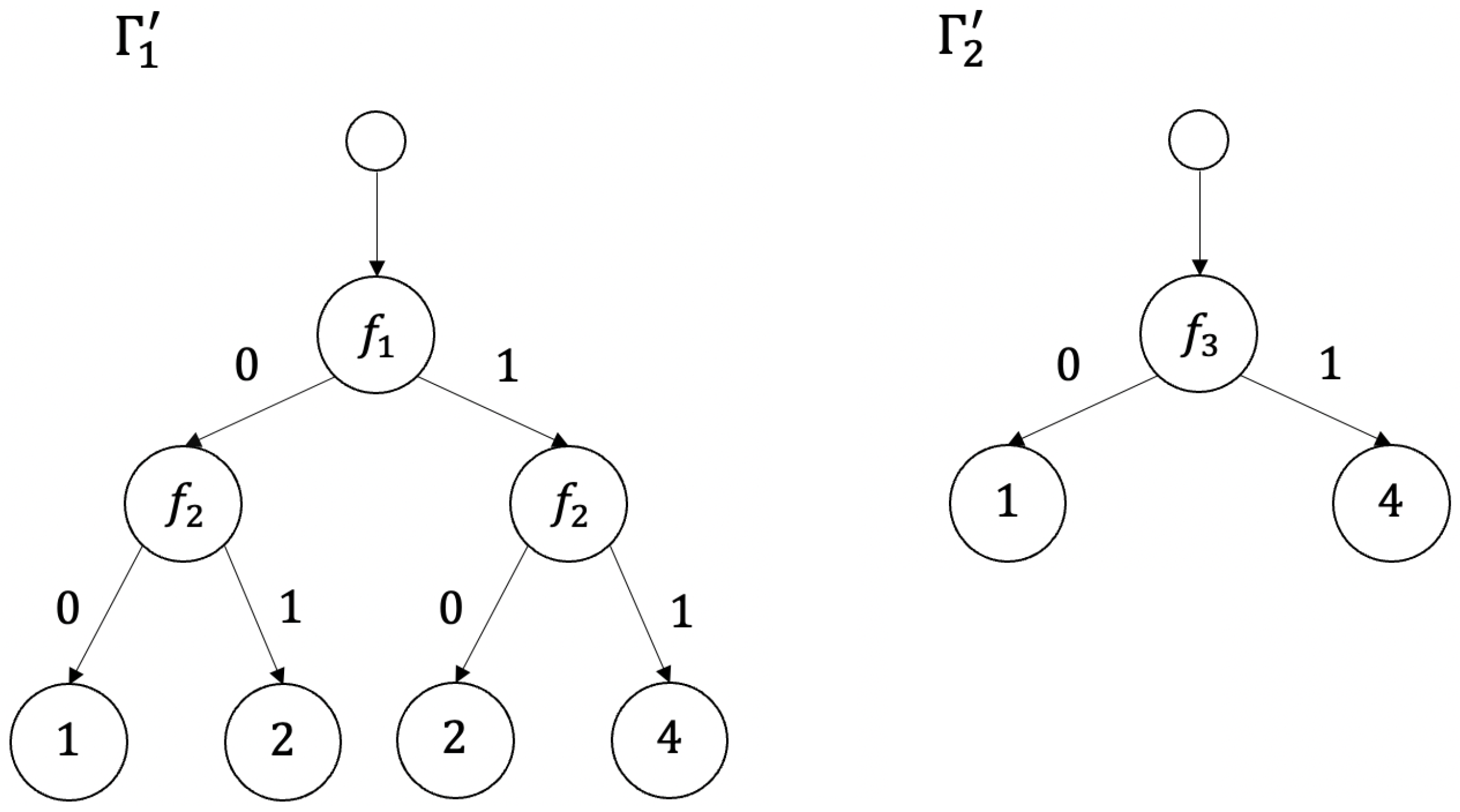

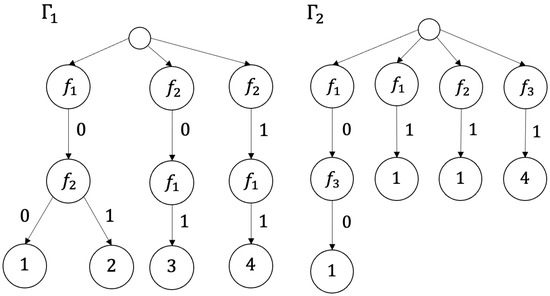

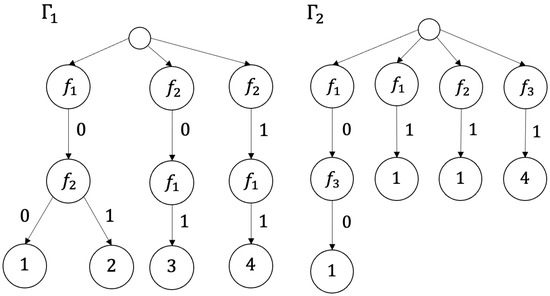

Example 6.

Nondeterministic decision trees and for decision tables and shown in Figure 1 are depicted in Figure 7.

Figure 7.

Nondeterministic decision trees and for decision tables and depicted in Figure 1.

Definition 12.

A deterministic decision tree for the table T is a deterministic decision tree over , which is a nondeterministic decision tree for the table T.

2.3. Complexity Measures

Denote as the set of all finite words over the alphabet F, including the empty word .

Definition 13.

A complexity measure over the set of decision tables is any mapping .

Definition 14.

The complexity measure ψ will be called limited if it possesses the following properties:

- (a) for any .

- (b) for any .

- (c) For any word α over the alphabet F, the inequality ψ(α) ≥ |α| holds, where |α| is the length of α.

We extend an arbitrary complexity measure onto the set in the following way. Let . Then, , where if and if . The value will be called the complexity of the decision tree Γ.

We now consider an example of a complexity measure. Let . We define the function in the following way: if and if . The function is a limited complexity measure over , and it is called a weighted depth. If , then the function is called the depth and is denoted by h.
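A weighted depth and the depth can be sketched in Python as follows. The sketch assumes that every attribute has a positive integer weight, that the weighted depth of a word is the sum of the weights of its letters (with value 0 on the empty word), and that the complexity of a tree is the maximum of the measure over the attribute words of its complete paths. The names `make_weighted_depth`, `depth`, and `tree_complexity` are hypothetical.

```python
def make_weighted_depth(weights):
    """Build a weighted depth from a dict attribute -> positive integer weight."""
    if any(w < 1 for w in weights.values()):
        raise ValueError("weights are assumed to be positive integers")

    def psi(word):
        # word is a sequence of attribute names; the empty word has value 0
        return sum(weights[f] for f in word)

    return psi


def depth(word):
    """The depth h: weighted depth with all weights equal to 1."""
    return len(word)


def tree_complexity(psi, paths):
    """Complexity of a tree given by its complete paths (assumed: maximum
    of psi over the words of attributes read along the paths).

    paths: list of (word, decision), word being a list of (attribute, value)
    """
    return max(psi([f for f, _ in word]) for word, _ in paths)
```

With positive integer weights, the value of the measure on a word is at least the length of the word, which is property (c) of a limited complexity measure.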

Let be a complexity measure over and T be a decision table from , in which columns are labeled with attributes . The value is called the complexity of the decision table T. We denote by the minimum complexity of a deterministic decision tree for the table T. We denote by the minimum complexity of a nondeterministic decision tree for the table T.

2.4. Information Systems

Let A be a nonempty set and F be a nonempty set of functions from A to .

Definition 15.

Functions from F are called attributes, and the pair is called an information system.

Definition 16.

A problem over U is any tuple , where , , and .

The problem z can be interpreted as a problem of searching for at least one number from the set for a given . We denote as the set of problems over the information system U.

With the problem z, we associate a decision table . This table has n columns labeled with attributes . A tuple is a row of the table if and only if the system of equations

has a solution from the set A. This row is labeled with the set of decisions . Let . One can show that the set is a closed class of decision tables.
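For a finite universe, the decision table of a problem can be built by direct enumeration, as in the following Python sketch. The finiteness of the universe, the representation of attributes as (name, function) pairs, and the function name `table_of_problem` are assumptions made for illustration. A tuple of values becomes a row exactly when some object of the universe realizes it.

```python
def table_of_problem(universe, attributes, nu):
    """Decision table of a problem over a finite information system.

    universe:   iterable of objects (assumed finite here)
    attributes: list of (name, function) pairs
    nu:         maps a tuple of attribute values to a nonempty set of decisions
    """
    names = [name for name, _ in attributes]
    rows = {}
    for obj in universe:
        row = tuple(f(obj) for _, f in attributes)
        # The tuple is realized by obj, so the system of equations is solvable.
        rows[row] = frozenset(nu(row))
    return names, rows
```

As a usage example, take the universe {0, ..., 5} with the attributes "parity" and "is at least 3": all four value tuples are realized, whereas over the universe {0, 1} only two of them are.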

Closed classes of decision tables based on information systems are the most natural examples of closed classes. However, the notion of a closed class is essentially wider. In particular, the union , where and are information systems, is a closed class, but generally, we cannot find an information system U such that .

2.5. Types of T Pairs

First, we define the notion of a t pair.

Definition 17.

A pair , where is a closed class of decision tables from , and ψ is a complexity measure over , is called a test pair (or t pair for short). If ψ is a limited complexity measure, then the t pair is called a limited t pair.

Let be a t pair. We have three parameters , and for any decision table . We now define functions that describe the relationships among these parameters. Let .

Definition 18.

We define the partial functions and as

If the value is definite, then it is the unimprovable upper bound on the values for tables satisfying . If the value is definite, then it is the unimprovable lower bound on the values for tables satisfying .

Let g be a partial function from to . We denote as the domain of g. Denote and .

Definition 19.

Now, we define the value as the type of g. Then, we have the following:

- If is an infinite set and g is a function bounded from above, then .

- If is an infinite set, is a finite set, and g is a function unbounded from above, then .

- If both sets and are infinite, then .

- If is an infinite set and is a finite set, then .

- If is a finite set, then .

Example 8.

One can show that , , , , and .

Definition 20.

We now define the table , which is called the type of t pair . This is a table with three rows and three columns, in which the rows from top to bottom and the columns from left to right are labeled with the indices . The pair is in the intersection of the row with index and the column with index .

3. Main Results

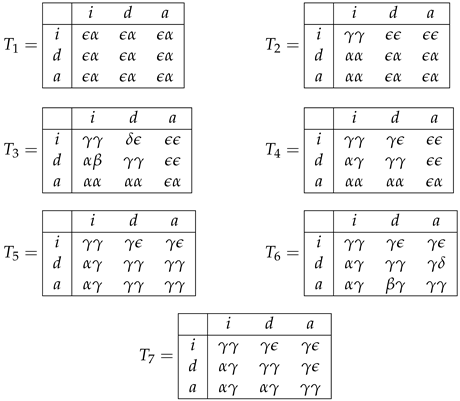

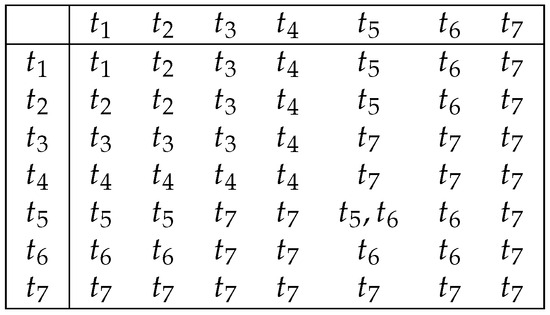

The main problem investigated in this paper is finding all the types of t pairs and limited t pairs. The solution to this problem describes all the possible (in terms of functions and types, ) relationships among the complexity of decision tables, the minimum complexity of the nondeterministic decision trees for them, and the minimum complexity of the deterministic decision trees for these tables. We now define seven tables:

Theorem 1.

For any t pair , the relation holds. For any , there exists a t pair such that .

Theorem 2.

For any limited t pair , the relation holds. For any , there exists a limited t pair such that .

4. Possible Upper Types of T Pairs

We begin our study by considering the upper type of t pair, which is a simpler object than the type of t pair.

Definition 21.

Let be a t pair. We now define the table , which will be called the upper type of the t pair . This is a table with three rows and three columns, in which the rows from top to bottom and the columns from left to right are labeled with the indices . The value is in the intersection of the row with index and the column with index .

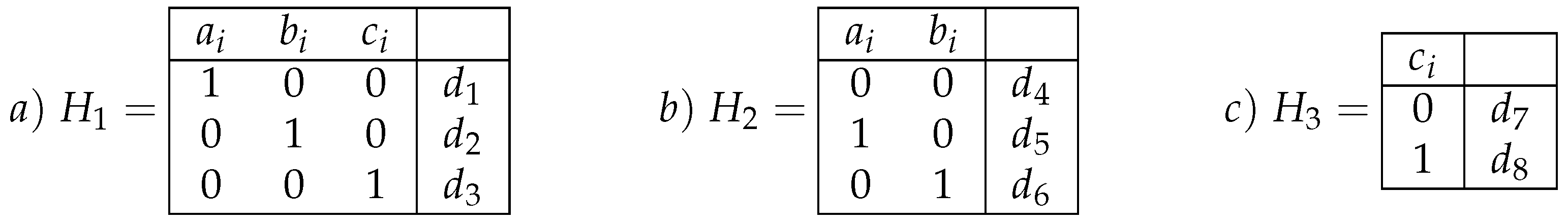

In this section, all possible upper types of t pairs are enumerated. We now define seven tables:

Proposition 1.

For any t pair , the relation holds.

Proposition 2.

For any limited t pair , the relation holds.

We divide the proofs of the propositions into a sequence of lemmas.

Lemma 1.

Let T be a decision table from a set of decision tables , and let ψ be a complexity measure over . Then, the inequalities hold.

Proof.

Let the columns of table T be labeled with the attributes . It is not difficult to construct a deterministic decision tree for table T, which sequentially computes the values of attributes . Evidently, . Therefore, . If a decision tree is a deterministic decision tree for T, then is a nondeterministic decision tree for T. Therefore, . □
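For the depth, the chain of inequalities of Lemma 1 can be checked on small tables by brute force, as in the following Python sketch. It is an illustration under assumptions: the minimum depth of a deterministic tree is computed by exhaustive recursion over the attribute to query first, and the minimum depth of a nondeterministic tree is computed as the maximum, over rows, of the shortest true decision rule covering the row. All function names are hypothetical, and the search is exponential in the number of columns.

```python
from itertools import combinations


def has_common_decision(decision_sets):
    sets = [set(d) for d in decision_sets]
    return bool(sets) and bool(set.intersection(*sets))


def min_det_depth(rows, n):
    """Minimum depth of a deterministic decision tree (exhaustive search)."""
    if not rows or has_common_decision(rows.values()):
        return 0  # degenerate table: the root and one terminal node suffice
    best = n  # querying all n columns always works
    for j in range(n):
        values = {row[j] for row in rows}
        if len(values) < 2:
            continue  # a constant column does not split the rows
        worst = max(
            min_det_depth({r: d for r, d in rows.items() if r[j] == v}, n)
            for v in values
        )
        best = min(best, 1 + worst)
    return best


def min_nondet_depth(rows, n):
    """Maximum over rows of the length of a shortest true rule covering the row."""
    def shortest_rule(row):
        for m in range(n + 1):
            for cols in combinations(range(n), m):
                matching = [d for r, d in rows.items()
                            if all(r[j] == row[j] for j in cols)]
                if has_common_decision(matching):
                    return m
    return max((shortest_rule(row) for row in rows), default=0)
```

On any table with n columns, the three values produced this way satisfy min_nondet_depth ≤ min_det_depth ≤ n, in agreement with the lemma.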

Let be a t pair, , and . The notation means that the set is infinite. The notation means that the set X is empty. Evidently, if , then . It is not difficult to prove the following statement.

Lemma 2.

Let be a t pair, and . Then, we have the following:

(a) If there exists such that , then .

(b) If there is no such that , then , where .

Let be a t pair, and . The notation means that, for any , the following statements hold:

(a) If the value is definite, then either or the value is definite, and the inequality holds.

(b) If , then .

Let ⪯ be a linear order on the set such that .

Lemma 3.

Let be a t pair. Then, and for any .

Proof.

From the definition of the functions and from Lemma 1, it follows that and for any . Using these relations and Lemma 2, we obtain the statement of the lemma. □

Lemma 4.

Let be a t pair, and . Then, we have the following:

(a) if and only if the function is bounded from above on the closed class .

(b) If the function is unbounded from above on , then .

Proof.

The statement (a) is obvious. For (b), let the function be unbounded from above on . One can show that in this case the equality holds for infinitely many . Therefore, . □

Corollary 1.

Let be a t pair, and . Then, .

Lemma 5.

Let be a t pair, and . Then,

Proof.

Using Lemma 4, we conclude that the function is unbounded from above on . Let . Then, there exists a decision table for which the inequality holds. Let us consider a degenerate decision table obtained from T by replacing the sets of decisions attached to the rows by the set . It is clear that . Let be a decision tree that consists of the root, the terminal node labeled with 0, and the edge connecting these two nodes. One can show that is a deterministic decision tree for the table . Therefore, . Taking into account that m is an arbitrary number from , we obtain and . Using Lemma 2, we conclude that . □

Example 9.

Let us consider a t pair , where is a closed class described in Example 5. It is clear that the function is unbounded from above on , and the functions and are bounded from above on . Using Lemma 4, we obtain that for any , and . Using Lemma 5, . Therefore, .

Lemma 6.

Let be a t pair. Then, .

Proof.

Using Lemma 3 and Corollary 1, we obtain . Using Lemma 2, for some . Set . Assume that . Then, there exists such that for any . Let us prove by induction on n that, for any decision table T from , if , then , where . Using Lemma 1, we conclude that the considered statement holds under the condition . Let it hold for some . Let us show that this statement holds for too. Let , , and let the columns of the table T be labeled with the attributes . Since , we obtain . Let be a nondeterministic decision tree for the table T, and . Assume that in , there exists a complete path in which there are no worker nodes. In this case, a decision tree that consists of the root, the terminal node labeled with , and the edge connecting these two nodes is a nondeterministic decision tree for the table T. Therefore, . Assume now that each complete path in the decision tree contains a worker node. Let , and, for , the node is labeled with the attribute , and the edge is labeled with the number . Let the decision table be obtained from the decision table T using the operations of the permutation of columns and the duplication of columns so that its columns are labeled with attributes . We obtain the decision table from by removal of the last k columns. Let us denote as the decision table obtained from by changing the set of decisions corresponding to the row with and for the remaining rows with . It is clear that . Using the inductive hypothesis, we conclude that there exists a nondeterministic decision tree for the table such that . We denote as a tree obtained from by the removal of all the nodes and edges that satisfy the following condition: there is not a complete path in that contains this node or edge and for which . Let . Let us identify the roots of the trees . We denote as G the obtained tree. It is not difficult to show that G is a nondeterministic decision tree for the table T, and . Thus, the considered statement holds. Using Lemma 4, we conclude that . 
The obtained contradiction shows that . □

Let T be a decision table from . We now give the definitions of the parameters and of the table T.

Definition 22.

We denote as the number of rows in the table T.

Definition 23.

Let the columns of table T be labeled with the attributes . We now define the parameter . If table T is degenerate, then . Let T now be a nondegenerate table, and . Then, is the minimum natural m such that there exist attributes for which is a degenerate table. We denote .
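The parameter of Definition 23 can be computed by exhaustive search on small tables, as in the following Python sketch. It assumes that the tuple in the definition ranges over all n-tuples of values from {0, ..., k-1}, whether or not they are rows of the table, and that the final parameter is the maximum of the per-tuple values. The function name `m_parameter` is hypothetical.

```python
from itertools import combinations, product


def is_degenerate(rows):
    """No rows, or a decision common to all rows."""
    sets = [set(d) for d in rows.values()]
    return not sets or bool(set.intersection(*sets))


def m_parameter(rows, n, k):
    """Brute-force value of the parameter for a table with n columns
    and attribute values in range(k)."""
    if is_degenerate(rows):
        return 0

    def for_tuple(delta):
        # Smallest number of columns whose values, fixed as in delta,
        # leave a degenerate subtable. Fixing all n columns leaves at
        # most one row, so the search always succeeds.
        for m in range(1, n + 1):
            for cols in combinations(range(n), m):
                sub = {r: d for r, d in rows.items()
                       if all(r[j] == delta[j] for j in cols)}
                if is_degenerate(sub):
                    return m

    return max(for_tuple(delta) for delta in product(range(k), repeat=n))
```

The number of rows of a table in this representation is simply `len(rows)`.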

The following statement follows immediately from Theorem 3.5 [23].

Lemma 7.

Let T be a nonempty decision table from in which each row is labeled with a set containing only one decision. Then,

Lemma 8.

Let be a limited t pair, and . Then, .

Proof.

Using Lemma 4, we conclude that there exists such that the inequality holds for any table .

Let T be a nonempty table from in which the columns are labeled with the attributes and . We now show that there exist attributes such that the subtable is equal to the subtable , and if is a row of T, and if is not a row of T.

Let be a row of T. Let us change the set of decisions attached to the row with the set and for the remaining rows of T with the set . We denote the obtained table as . It is clear that . Taking into account that and the complexity measure has the property (c), it is not difficult to show that there exist attributes such that , and contains only the row . From here, it follows that .

Let be not a row of T. Let us show that there exist attributes such that , and the subtable is empty. If is empty, then the considered statement holds. Otherwise, there exists such that the subtable is nonempty, but the subtable is empty. We denote as the table obtained from T by the removal of the attributes . It is clear that , and is a row of . According to what has been proven above, there exist attributes such that

and . Using this fact, one can show that is empty and is equal to .

Let . We denote as the decision table obtained from by the removal of all the columns in which all the numbers are equal. Let the columns of be labeled with attributes . We now consider the decision table , which is obtained from by changing the decisions so that the decision set attached to each row of table contains only one decision and, for any two non-equal rows, the corresponding decisions are different. It is clear that . It is not difficult to show that .

We now show that the inequality holds for any attribute . Let us denote as the decision table obtained from by the removal of all the columns except the column labeled with the attribute f. If there is more than one column in , which is labeled with the attribute f, then we keep only one of them. Let the decision table be obtained from by changing the set of decisions for each row with the set of decisions . It is clear that . Let be a nondeterministic decision tree for the table , and . Since the column f contains different numbers, we have . Using the property (b) of the complexity measure , we obtain . Consequently, .

Taking into account that, for any , there exist attributes such that , and contains only the row , it is not difficult to show that

According to what has been proven above, for any , there exist attributes such that , and . Taking into account this equality, one can show that

Using Lemma 7, as well as inequalities (1) and (2), we conclude that there exists a deterministic decision tree for the table with . Taking into account that for any attribute and that the complexity measure has the property (a), we obtain

Consequently, . Taking into account that the complexity measure has the property (c), we obtain . Since is an arbitrary decision table from , we have that is a finite set. Therefore, . Using Lemma 3 and Corollary 1, we obtain . □

Proof of Proposition 1.

Let be a t pair. Using Corollary 1, we conclude that . Using Corollary 1 and Lemma 3, we obtain . From Lemma 6, it follows that . Then, we have the following:

(a) Let . Using Lemmas 3 and 4, we obtain .

(b) Let and . Using Lemmas 3, 4, and 5, we obtain .

(c) Let and . From Lemma 5, it follows that . Using Lemmas 3 and 6, we obtain . From this equality and from Lemma 4, it follows that . Using the equality , Lemma 3, and Corollary 1, we obtain . From the equalities, and from Lemmas 2 and 4, it follows that . Thus, .

(d) Let and . Using Lemma 5, we obtain . From Lemma 4, it follows that . Using Lemma 3 and Corollary 1, we obtain . From this equality, equality , and from Lemmas 2 and 4, it follows that . Thus, .

(e) Let . Using Lemma 5, we conclude that . Using Lemma 3 and Corollary 1, we obtain . Using Lemma 3, we obtain . Therefore, . □

Proof of Proposition 2.

Let be a limited t pair. Taking into account that the complexity measure has the property (c) and using Lemma 4, we obtain . Therefore, . Using Lemma 8, we obtain . From these relations and Proposition 1, it follows that the statement of the proposition holds. □

5. Realizable Upper Types of T Pairs

In this section, all realizable upper types of t pairs are enumerated.

Proposition 3.

For any , there exists a t pair such that

Proposition 4.

For any , there exists a limited t pair such that

The proofs of these propositions are based on the results obtained for information systems [22].

Let be an information system, where the attributes from F have values from , and is a complexity measure over U [22]. Note that is also a complexity measure over the set of decision tables . Let be a problem over U. In [22], three parameters of the problem z were defined: was called the complexity of the problem z description, was called the minimum complexity of a decision tree with attributes from the set that solves the problem z deterministically, and was called the minimum complexity of a decision tree with attributes from the set that solves the problem z nondeterministically.

Let . In [22], the partial function was defined as follows:

The table for the pair was defined in [22] as follows: this is a table with three rows and three columns, in which the rows from top to bottom and the columns from left to right are labeled with the indices . The value is in the intersection of the row with the index and the column with the index .

We now prove the following proposition:

Proposition 5.

Let U be an information system and ψ be a complexity measure over U. Then,

Proof.

Let be a problem over U and be the decision table corresponding to this problem. It is easy to see that . One can show that the set of decision trees solving the problem z nondeterministically and using only the attributes from the set (see corresponding definitions in [22]) is equal to the set of nondeterministic decision trees for the table . From here, it follows that and . Using these equalities, we can show that . □

This proposition allows us to transfer the results obtained for information systems in [22] to the case of closed classes of decision tables. Before each of the following seven lemmas, we define a pair , where U is an information system, and is a complexity measure over U.

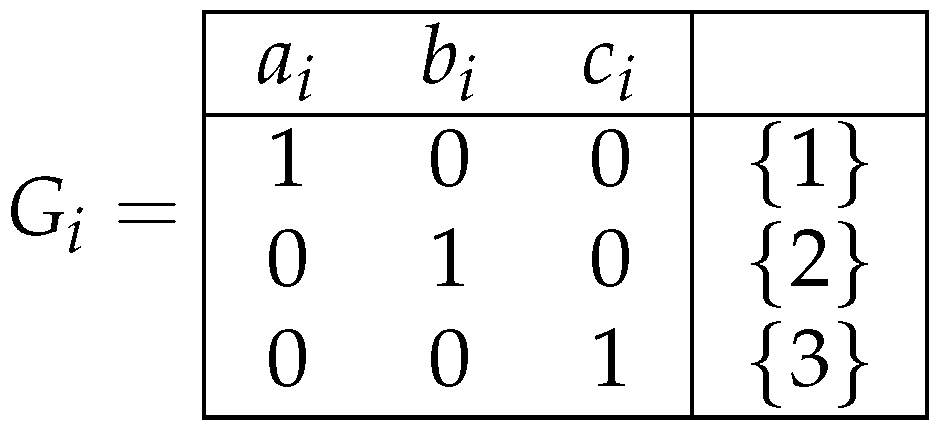

Let us define a pair as follows: , where , , and .

Lemma 9.

.

Proof.

From Lemma 4.1 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where .

Lemma 10.

.

Proof.

From Lemma 4.2 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where and, for any , if , then , and if , then .

Lemma 11.

.

Proof.

From Lemma 4.3 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where if or , and in other cases.

Lemma 12.

.

Proof.

From Lemma 4.4 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where and, for any , if , then , and if , then .

Lemma 13.

.

Proof.

From Lemma 4.5 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where , and, for any , if , then , and if , then .

Lemma 14.

.

Proof.

From Lemma 4.6 [22], it follows that . Using Proposition 5, we obtain . □

Let us define a pair as follows: , where .

Lemma 15.

.

Proof.

From Lemma 4.7 [22], it follows that . Using Proposition 5, we obtain . □

Proof of Proposition 3.

The statement of the proposition follows from Lemmas 9–15. □

Proof of Proposition 4.

The statement of the proposition follows from Lemmas 10, 11, 13, 14, and 15. □

6. Union of T Pairs

In this section, we define a union of two t pairs, which is also a t pair, and study its upper type. Let and be t pairs, where , and . These two t pairs are called compatible if and . We now define a t pair , which is called a union of compatible t pairs and .

Definition 24.

The closed class in τ is defined as follows: . The complexity measure ψ in τ is defined for any word in the following way: if , then ; if , then ; if α contains letters from both and , then can have an arbitrary value from . In particular, if , then with ψ we can use the depth h.
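The construction of the complexity measure in Definition 24 can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: words are modeled as tuples of attribute names, the two attribute alphabets are assumed to be disjoint, and the helper name `union_measure` and its `mixed` argument (the freely chosen value on words that mix both alphabets) are hypothetical. The sketch does not check that the result satisfies the properties required of a complexity measure.

```python
def union_measure(psi1, alphabet1, psi2, alphabet2, mixed):
    """Combine two measures on words over disjoint alphabets (illustrative).

    psi1, psi2 and mixed map a word (a tuple of attribute names) to a
    nonnegative integer. `mixed` supplies the freely chosen value for
    words that contain letters from both alphabets.
    """
    if alphabet1 & alphabet2:
        # Assumption of this sketch: the attribute alphabets do not overlap.
        raise ValueError("attribute alphabets must be disjoint")

    def psi(word):
        letters = set(word)
        if not letters <= (alphabet1 | alphabet2):
            raise ValueError("word uses an unknown attribute")
        if letters <= alphabet1:   # includes the empty word
            return psi1(word)
        if letters <= alphabet2:
            return psi2(word)
        return mixed(word)         # letters from both alphabets

    return psi


# Depth h: the complexity of a word is its length.
h = len
psi = union_measure(h, {"f1", "f2"}, h, {"g1", "g2"}, mixed=h)
print(psi(("f1", "f2", "f1")), psi(("g2",)), psi(("f1", "g1")))
```

When both initial measures are the depth and the mixed case also uses the length of the word, the combined measure is again the depth, which mirrors the remark at the end of the definition.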

We now consider the upper type of t pair . We denote as the function maximum for the linear order .

Theorem 3.

The equality holds for any , except for the case that and . In the last case, .

Proof.

Let and . We now define the value , where , and . Both and have values from the set (see the definitions before Lemma 2). If , then . If one of is equal to ⌀ and another one is equal to a number , then . If , then . If at least one of is equal to ∞, then .

The following equality follows from the definition of the partial function , where , and : . Later in the proof, we will use this equality without special mention. From this equality, we obtain and . We now consider two different cases separately: (1) and (2) . Thus, we have the following:

(1) Let .

(a) Let . Since the functions and are both bounded from above, we obtain that the function is also bounded from above. From this, it follows that .

(b) Let . From the fact that and are both finite, we obtain that is also finite. Similarly, one can show that is unbounded from above on . From here, it follows that .

(c) Let . From here, it follows that the function is unbounded from above on . From Proposition 1, it follows that belongs to the set . Let . Using Lemma 4, we obtain . Let . Using Lemma 3 and the inequalities and , we obtain . The only case left is when . Since there is no for which or , then according to Lemma 2, we obtain that is an infinite set. Therefore, , and hence, . From Proposition 6, it follows that both cases are possible. Thus, we have the following:

(d) Let . From here, it follows that there is no for which or . Using Lemma 2, we conclude that is an infinite set. From the fact that and are both finite, we obtain that is also finite. Therefore, .

(e) Let . Since both and are finite sets, we obtain that is also a finite set. Therefore, .

(2) Let . Denote and . Let . We now consider a number of cases.

(a) Let . From here, it follows that is a finite set. Taking into account this fact, we obtain that is also a finite set. Therefore, . Later, we assume that .

(b) Let . Then, both f and g are nondecreasing functions, f is bounded from above, and g is unbounded from above. From here, it follows that there exists such that for any . Using this fact, we conclude that for . Therefore, . Later, we will assume that . It means we should only consider the pairs .

(c) Let . From here, it follows that are both infinite sets, and are both finite sets. Taking into account that both f and g are nondecreasing functions, we obtain that there exists such that for any . Therefore, .

(d) Let . Then, is an infinite set. Taking into account that is an infinite set and that is a finite set, we obtain that is also an infinite set. Therefore, .

(e) Let . From here, it follows that is an infinite set, and is a finite set. Therefore, . □

The next statement follows immediately from Proposition 1 and Theorem 3.

Corollary 2.

Let and be compatible t pairs, and let τ be a union of these t pairs. Then, the possible values of are in the table shown in Figure 9 in the intersection of the row labeled with and the column labeled with .

Figure 9. Possible upper types of a union of two compatible t pairs.

To finalize the study of unions of t pairs, we prove the following statement:

Proposition 6.

(a) There exist compatible t pairs and and their union such that .

(b) There exist compatible t pairs and and their union such that and .

Proof.

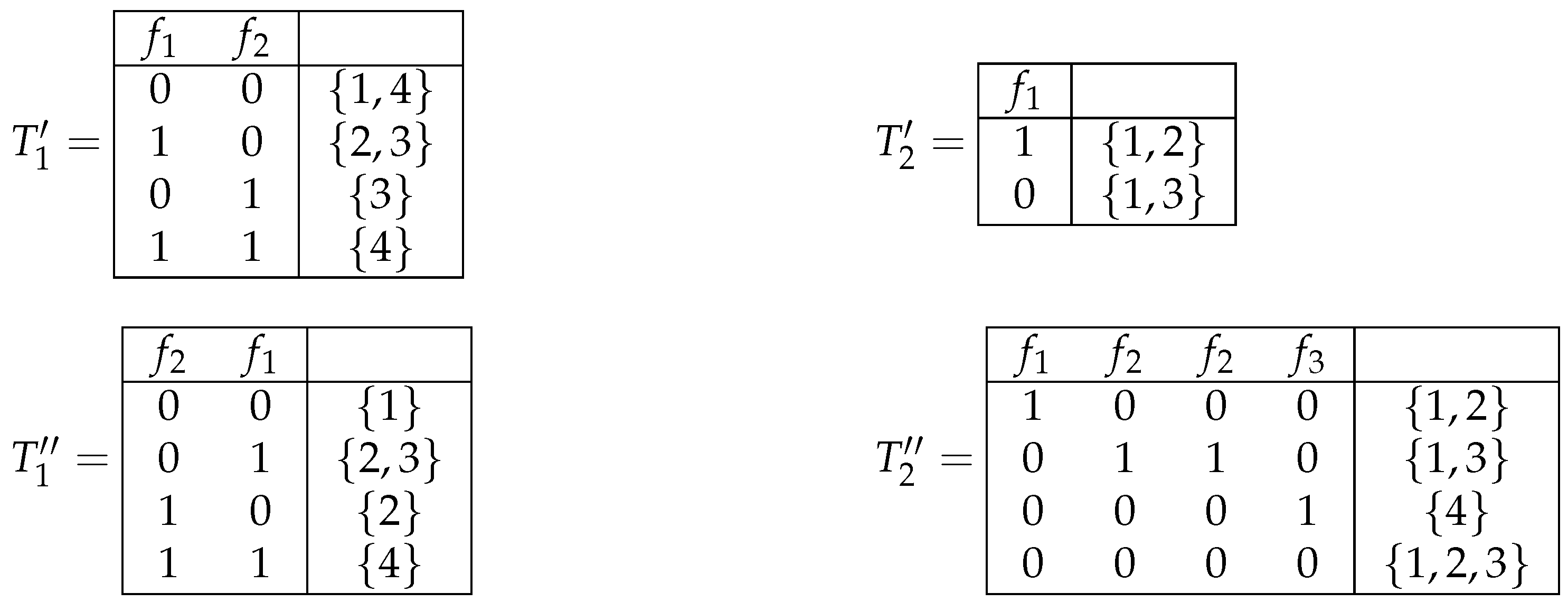

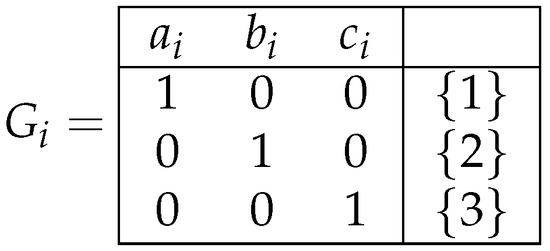

For , we denote , and in the decision table depicted in Figure 10. We study the t pair , where is the closed class of decision tables from , which is equal to , and is a complexity measure over defined in the following way: and if and .

Figure 10. Decision table .

We now study the function . Since the operations of the duplication of columns and the permutation of columns do not change the minimum complexity of the deterministic and nondeterministic decision trees, we only consider the operations of the changing of decisions and the removal of columns.

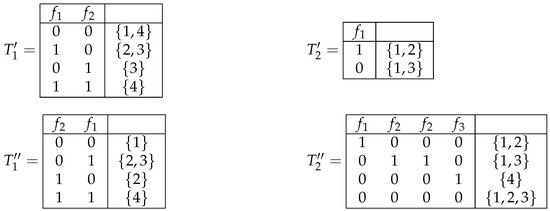

Using these operations, the decision tables from can be obtained from in three ways: (a) only through the changing of decisions, (b) by removing one column and through the changing of decisions, and (c) by removing two columns and through the changing of decisions. Figure 11 demonstrates examples of the decision tables from for each case. Without loss of generality, we can restrict ourselves to considering these three tables: , , and .

Figure 11. Decision tables from closed class , where .

We consequently have the following:

(a) There are three different cases for the table : (i) the sets of decisions are pairwise disjoint, (ii) there are such that and , and (iii) . In the first case, and . In the second case, and . In the third case, and .

(b) There are three different cases for the table : (i) the sets of decisions are pairwise disjoint, (ii) there are such that and , and (iii) . In the first case, , and . In the second case, we have either or depending on the intersecting decision sets. In the third case, , and .

(c) There are two different cases for the table : (i) and (ii) . In the first case, , and . In the second case, , and .
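Case distinctions of this kind can be checked mechanically on small tables. The following Python sketch computes, by brute force, the minimum depth of a deterministic and of a nondeterministic decision tree for a decision table with many-valued decisions. It covers only the depth, not arbitrary complexity measures. The three-row table is a hypothetical example and is not the table from Figure 10; the function names are illustrative.

```python
from itertools import combinations

# A table is a list of (row, decisions): row is a tuple of attribute values,
# decisions is a nonempty set. Rows are assumed to be pairwise different.

def has_common_decision(table):
    """True if some decision belongs to the decision set of every row."""
    sets = [set(decisions) for _, decisions in table]
    return not sets or bool(set.intersection(*sets))

def det_depth(table):
    """Minimum depth of a deterministic decision tree (exhaustive search)."""
    if has_common_decision(table):
        return 0
    best = None
    for f in range(len(table[0][0])):
        values = {row[f] for row, _ in table}
        if len(values) < 2:
            continue  # a constant column does not separate any rows
        worst = max(det_depth([r for r in table if r[0][f] == a]) for a in values)
        best = worst if best is None else min(best, worst)
    if best is None:
        raise ValueError("rows must be pairwise different")
    return 1 + best

def nondet_depth(table):
    """Maximum over rows of the length of a shortest true rule for the row."""
    n_cols = len(table[0][0]) if table else 0

    def shortest_rule(row):
        for size in range(n_cols + 1):
            for cols in combinations(range(n_cols), size):
                matching = [r for r in table
                            if all(r[0][c] == row[c] for c in cols)]
                if has_common_decision(matching):
                    return size
        raise ValueError("every decision set must be nonempty")

    return max((shortest_rule(row) for row, _ in table), default=0)

# Hypothetical rows; only the decision sets differ between the examples.
ROWS = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]

def make_table(*decision_sets):
    return list(zip(ROWS, decision_sets))

disjoint = make_table({1}, {2}, {3})          # pairwise disjoint sets
partial = make_table({1}, {1, 2}, {3})        # two sets intersect
common = make_table({1, 2}, {1, 3}, {1})      # all sets share a decision

for name, t in [("disjoint", disjoint), ("partial", partial), ("common", common)]:
    print(name, det_depth(t), nondet_depth(t))
```

For this particular table, the deterministic depth drops from 2 to 1 to 0 as the decision sets overlap more, while the nondeterministic depth is 1 until all rows share a decision. Both searches are exponential in the number of columns, so the sketch is suitable only for small tables.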

As a result, we obtain that, for any ,

Let K be an infinite subset of the set . Denote and . It is clear that is a closed class of decision tables from . We now define a complexity measure over . Let . If for some , then . If contains letters from both and , and if , then .

Let and for any . We define a function as follows. Let . If , then . Suppose that, for some , we have . Then, . Using (3), one can show that, for any ,

Using this equality, one can prove that if the set is infinite and that if the set is finite.

Denote , and . Denote , , and . One can show that the t pairs and are compatible and that is a union of and . It is easy to prove that . Using Proposition 2, we obtain .

Denote , and . Denote , , and . One can show that the t pairs and are compatible and that is a union of and . It is easy to prove that and . Using Proposition 2, we obtain and . □

7. Proofs of Theorems 1 and 2

First, we consider some auxiliary statements.

Definition 25.

Let us define a function as follows: .

Proposition 7 (Proposition 5.1 [22]).

Let X be a nonempty set , and for any . Then, .

Using Proposition 7, we obtain the following statement:

Proposition 8.

Let be a t pair, and . Then, .

Corollary 3.

Let be a t pair, and . Then, if and only if .

Proof of Theorem 1.

The statement of the theorem follows from Propositions 1 and 3 and from Corollary 3. □

Proof of Theorem 2.

The statement of the theorem follows from Propositions 2 and 4 and from Corollary 3. □

8. Conclusions

This paper is devoted to a comparative analysis of the deterministic and nondeterministic decision tree complexity for decision tables from closed classes. This is qualitative research: we have considered a finite number of types of behavior of the functions characterizing the relationships among different parameters of decision tables. In this paper, we have enumerated all the realizable types of t pairs and limited t pairs. We have also defined the notion of a union of two t pairs and studied how the upper type of the resulting t pair depends on the upper types of the initial t pairs. The obtained results allow us to point out cases where the complexity of deterministic and nondeterministic decision trees is essentially less than the complexity of the decision table. Future publications will be devoted to quantitative research: we will study lower and upper bounds on the considered functions.

Author Contributions

Conceptualization, A.O. and M.M.; methodology, A.O. and M.M.; validation, A.O.; formal analysis, A.O. and M.M.; investigation, A.O.; resources, A.O. and M.M.; writing—original draft preparation, A.O. and M.M.; writing—review and editing, A.O. and M.M.; visualization, A.O.; supervision, M.M.; funding acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

The research was funded by King Abdullah University of Science and Technology.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

The research reported in this publication was supported by King Abdullah University of Science and Technology (KAUST). The authors are grateful to the anonymous reviewers for their useful remarks and suggestions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Boutell, M.R.; Luo, J.; Shen, X.; Brown, C.M. Learning multi-label scene classification. Pattern Recognit. 2004, 37, 1757–1771.

2. Vens, C.; Struyf, J.; Schietgat, L.; Dzeroski, S.; Blockeel, H. Decision trees for hierarchical multi-label classification. Mach. Learn. 2008, 73, 185–214.

3. Zhou, Z.; Zhang, M.; Huang, S.; Li, Y. Multi-instance multi-label learning. Artif. Intell. 2012, 176, 2291–2320.

4. Breiman, L.; Friedman, J.H.; Olshen, R.A.; Stone, C.J. Classification and Regression Trees; Wadsworth and Brooks: Monterey, CA, USA, 1984.

5. Quinlan, J.R. C4.5: Programs for Machine Learning; Morgan Kaufmann: Burlington, MA, USA, 1993.

6. Rokach, L.; Maimon, O. Data Mining with Decision Trees: Theory and Applications; Series in Machine Perception and Artificial Intelligence; World Scientific: Singapore, 2007; Volume 69.

7. Ostonov, A.; Moshkov, M. On Complexity of Deterministic and Nondeterministic Decision Trees for Conventional Decision Tables from Closed Classes. Entropy 2023, 25, 1411.

8. Boros, E.; Hammer, P.L.; Ibaraki, T.; Kogan, A. Logical analysis of numerical data. Math. Program. 1997, 79, 163–190.

9. Boros, E.; Hammer, P.L.; Ibaraki, T.; Kogan, A.; Mayoraz, E.; Muchnik, I.B. An Implementation of Logical Analysis of Data. IEEE Trans. Knowl. Data Eng. 2000, 12, 292–306.

10. Fürnkranz, J.; Gamberger, D.; Lavrac, N. Foundations of Rule Learning; Cognitive Technologies; Springer: Berlin/Heidelberg, Germany, 2012.

11. Pawlak, Z. Rough Sets: Theoretical Aspects of Reasoning about Data; Theory and Decision Library: Series D; Kluwer: Alphen aan den Rijn, The Netherlands, 1991; Volume 9.

12. Pawlak, Z.; Skowron, A. Rudiments of rough sets. Inf. Sci. 2007, 177, 3–27.

13. Molnar, C. Interpretable Machine Learning. A Guide for Making Black Box Models Explainable, 2nd ed.; Independent Publishers: Chicago, IL, USA, 2022; Available online: https://christophm.github.io/interpretable-ml-book/ (accessed on 1 May 2024).

14. Blum, M.; Impagliazzo, R. Generic Oracles and Oracle Classes (Extended Abstract). In Proceedings of the 28th Annual Symposium on Foundations of Computer Science, Los Angeles, CA, USA, 27–29 October 1987; IEEE Computer Society: Washington, DC, USA, 1987; pp. 118–126.

15. Hartmanis, J.; Hemachandra, L.A. One-way functions, robustness, and the non-isomorphism of NP-complete sets. In Proceedings of the Second Annual Conference on Structure in Complexity Theory, Ithaca, NY, USA, 16–19 June 1987; IEEE Computer Society: Washington, DC, USA, 1987.

16. Tardos, G. Query complexity, or why is it difficult to separate NP^A ∩ coNP^A from P^A by random oracles A? Combinatorica 1989, 9, 385–392.

17. Buhrman, H.; de Wolf, R. Complexity measures and decision tree complexity: A survey. Theor. Comput. Sci. 2002, 288, 21–43.

18. Pawlak, Z. Information systems theoretical foundations. Inf. Syst. 1981, 6, 205–218.

19. Post, E. Two-Valued Iterative Systems of Mathematical Logic; Annals of Mathematics Studies; Princeton University Press: Princeton, NJ, USA, 1941; Volume 5.

20. Robertson, N.; Seymour, P.D. Graph Minors. XX. Wagner’s conjecture. J. Comb. Theory, Ser. B 2004, 92, 325–357.

21. Moshkov, M. On depth of conditional tests for tables from closed classes. In Combinatorial-Algebraic and Probabilistic Methods of Discrete Analysis; Markov, A.A., Ed.; Gorky University Press: Gorky, Russia, 1989; pp. 78–86. (In Russian)

22. Moshkov, M. Comparative Analysis of Deterministic and Nondeterministic Decision Tree Complexity. Local Approach. Trans. Rough Sets 2005, 4, 125–143.

23. Moshkov, M. Time Complexity of Decision Trees. Trans. Rough Sets 2005, 3, 244–459.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).