On Superposition Lattice Codes for the K-User Gaussian Interference Channel

Abstract

1. Introduction

Roadmap

- In Section 3.1.1, we show that the HK rate region for a two-user interference channel can be obtained from the intersection of two two-user multiple access channels (MACs).

- In Section 3.1.2, we express the HK rate region for a two-user Gaussian interference channel using lattice Gaussian coding (Section 3.2 for a K-user Gaussian interference channel). To do so, we impose conditions on the flatness factor of the lattices, given by Lemmas 3 and 4 and by Theorem 2.

- Finally, in Section 4.1 (Lemma 9), we apply power constraints to the private and common messages of a two-user weak Gaussian interference channel (Lemma 10 for a K-user weak Gaussian interference channel). These constraints are then used to derive conditions on the channel coefficients (Theorem 3 for a two-user weak Gaussian interference channel and Theorem 4 for a K-user weak Gaussian interference channel), which lead to the constant gap to the optimal rate and to the GDoF of the two-user interference channel obtained in [9].
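For orientation, the GDoF benchmark of [9] mentioned above is, for the symmetric two-user Gaussian interference channel, the per-user "W" curve d(α), with α = log INR / log SNR. The sketch below only evaluates that known piecewise-linear curve; it is not the lattice scheme of this paper, and the function name `gdof_symmetric` is hypothetical. The weak-interference regime studied here corresponds to α ≤ 1.

```python
def gdof_symmetric(alpha: float) -> float:
    """Per-user GDoF d(alpha) of the symmetric two-user Gaussian IC ("W" curve of [9]).

    alpha = log(INR) / log(SNR); alpha <= 1 is the weak-interference regime.
    """
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    if alpha <= 0.5:
        return 1.0 - alpha        # very weak: treating interference as noise attains it
    if alpha <= 2.0 / 3.0:
        return alpha
    if alpha <= 1.0:
        return 1.0 - alpha / 2.0  # end of the weak-interference regime
    if alpha <= 2.0:
        return alpha / 2.0
    return 1.0                    # very strong: interference costs no GDoF


for a in (0.25, 0.5, 2.0 / 3.0, 1.0, 1.5, 3.0):
    print(f"alpha = {a:.3f} -> d = {gdof_symmetric(a):.3f}")
```

The same curve can be written compactly as d(α) = min{1, max(α/2, 1 − α/2), max(α, 1 − α)}, which is a convenient cross-check.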

2. Preliminaries

2.1. Outer and Inner Bounds for the Two-User Weak Gaussian Interference Channel [9]

- (1)

- and . In this case, ([9] [Corollary 1]) the achievable region contains all the rate pairs , satisfying:

- (2)

- and . In this case, the achievable region contains all the rate pairs:

- (3)

- and . In this case, the achievable region is similar to that of the previous case.

- (4)

- and . In this case, the achievable region contains only the following rate pairs:

2.2. Lattice Gaussian Coding
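Lattice Gaussian coding [19] draws codewords from a lattice (discrete) Gaussian distribution D_{Λ,σ,c}, which gives each lattice point x a probability proportional to exp(−‖x − c‖²/(2σ²)). As a minimal sketch, assuming the simplest lattice Λ = Z^n rather than the lattices used in this paper, the sampler below draws each coordinate independently over a truncated support. The 12σ truncation and the function names are assumptions made for illustration.

```python
import math
import random


def sample_discrete_gaussian_z(sigma, center=0.0, tail=12.0, rng=random):
    """One draw from D_{Z,sigma,c}: P(x) is proportional to exp(-(x - c)^2 / (2 sigma^2)).

    The support is truncated to [c - tail*sigma, c + tail*sigma]; for tail = 12
    (an assumed cut-off) the discarded probability mass is negligible.
    """
    lo = math.floor(center - tail * sigma)
    hi = math.ceil(center + tail * sigma)
    support = list(range(lo, hi + 1))
    weights = [math.exp(-((x - center) ** 2) / (2.0 * sigma ** 2)) for x in support]
    return rng.choices(support, weights=weights, k=1)[0]


def sample_lattice_gaussian_zn(n, sigma, center=None, rng=random):
    """One draw from D_{Z^n,sigma,c}; Z^n is a product lattice, so coordinates are independent."""
    if center is None:
        center = [0.0] * n
    return [sample_discrete_gaussian_z(sigma, c, rng=rng) for c in center]


rng = random.Random(2024)
draws = [sample_discrete_gaussian_z(2.0, rng=rng) for _ in range(20000)]
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print(f"empirical mean {mean:.3f}, empirical variance {var:.3f}")
```

For σ = 2 the discrete Gaussian over Z is already very close to a sampled continuous Gaussian, so the empirical variance should land near σ² = 4.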

3. Materials and Methods

3.1. Lattice Gaussian Coding for the Two-User Gaussian Interference Channel

3.1.1. Finding the Han–Kobayashi Rate Region with the Intersection of Two Two-User MACs

3.1.2. Two-User Gaussian Interference Channel Using Lattice Gaussian Coding

- Decoding , then and finally : If we decode the desired common message, , first and treat the remaining messages as noise, we must apply Lemmas 4 and 3. Lemma 4 is applied to , while Lemma 3 is applied to and . Thus, we decode from , where is the new semi-spherical noise. This is valid from Lemma 4 with the flatness factor condition
  and from Lemma 3 with the flatness factor conditions
  and
  Consider now Theorem 2. We have that
  with the condition
  Here, we decode the desired private message using a subset of the flatness factor conditions already defined in the first step. Thus, we decode from , where is the estimated , considering (64) and (66), which are the flatness factor conditions that make and part of the noise. Using Theorem 2, we obtain
  where
  Finally, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (64), and we can apply Theorem 2 to obtain
  where
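If the undecoded signals are treated as Gaussian noise (loosely mirroring how Lemmas 3 and 4 fold them into the semi-spherical noise), the three-step order above (desired common, desired private, interfering common) reduces to standard successive decoding. The sketch assumes a real-valued channel with unit noise, hypothetical received powers, and hypothetical labels x1c, x1p, x2c, x2p for the four superimposed signals at receiver 1; it is not the rate expression of Theorem 2.

```python
import math


def sic_rates(order, powers, noise=1.0):
    """Successive decoding rates (bits per real channel use) at one receiver.

    powers: received power of every superimposed signal, keyed by a label.
    Signals in `order` are decoded one at a time; each is decoded while every
    signal not yet decoded (including those never decoded) is treated as
    Gaussian noise, and it is then subtracted from the received signal.
    """
    remaining = dict(powers)
    rates = {}
    for label in order:
        p = remaining.pop(label)
        interference = noise + sum(remaining.values())
        rates[label] = 0.5 * math.log2(1.0 + p / interference)
    return rates


# Hypothetical received powers at receiver 1 (unit noise power).
powers_rx1 = {"x1c": 40.0, "x1p": 3.0, "x2c": 10.0, "x2p": 1.0}
rates = sic_rates(["x1c", "x1p", "x2c"], powers_rx1)  # x2p is never decoded
for label, r in rates.items():
    print(f"{label}: {r:.3f} bits")
```

The interfering private signal x2p stays in the noise at every step, which matches its role in the text, where its flatness factor condition (64) is reused in all three steps.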

- Decoding , then and finally : If we first decode the interference common message, , and treat the remaining messages as noise, we apply Lemma 3 to and with the flatness factor conditions (65) and (67), and Lemma 4 to with the flatness factor condition (64). Then, using Theorem 2, we obtain
  where
  Here, we decode the desired common message from again, where is the estimated , treating, as previously mentioned, and as noise with the conditions (67) and (64). Using Theorem 2, we obtain
  where
  Finally, once both common messages have been found, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (64), and we can apply Theorem 2 to obtain
  where
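Decoding the interfering common message first changes the individual rates but not their sum: by the chain rule, the total over the decoded signals is ½ log2((N + all powers)/(N + power of the undecoded signals)) whatever the order. The sketch below checks this for both decoding orders of this subsection, assuming a real channel, unit noise N = 1, hypothetical powers and labels, and undecoded signals treated as Gaussian noise.

```python
import math


def successive_rates(order, powers, noise=1.0):
    """Rates when `order` is decoded first-to-last; undecoded signals act as Gaussian noise.

    Chain-rule form: the k-th rate is 0.5*log2(N_{k-1} / N_k), where N_k is the
    noise plus the power of every signal still undecoded after step k.
    """
    level = noise + sum(powers.values())  # N_0: nothing decoded yet
    rates = []
    for label in order:
        next_level = level - powers[label]
        rates.append(0.5 * math.log2(level / next_level))
        level = next_level
    return rates


powers_rx1 = {"x1c": 40.0, "x1p": 3.0, "x2c": 10.0, "x2p": 1.0}  # hypothetical
rates_a = successive_rates(["x1c", "x1p", "x2c"], powers_rx1)  # desired common first
rates_b = successive_rates(["x2c", "x1c", "x1p"], powers_rx1)  # interfering common first
closed_form = 0.5 * math.log2((1.0 + sum(powers_rx1.values())) / (1.0 + powers_rx1["x2p"]))
print([round(r, 3) for r in rates_a], [round(r, 3) for r in rates_b], round(closed_form, 3))
```

Which order is preferable therefore depends on how the total is split between the desired and interfering messages, and on which flatness factor conditions each order requires.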

3.2. Lattice Gaussian Coding for the K-User Interference Channel

K-User Gaussian Interference Channel Using Lattice Gaussian Coding

- At receiver 1, we decode to lattice and then to lattice , where , or to lattice and then to lattice .

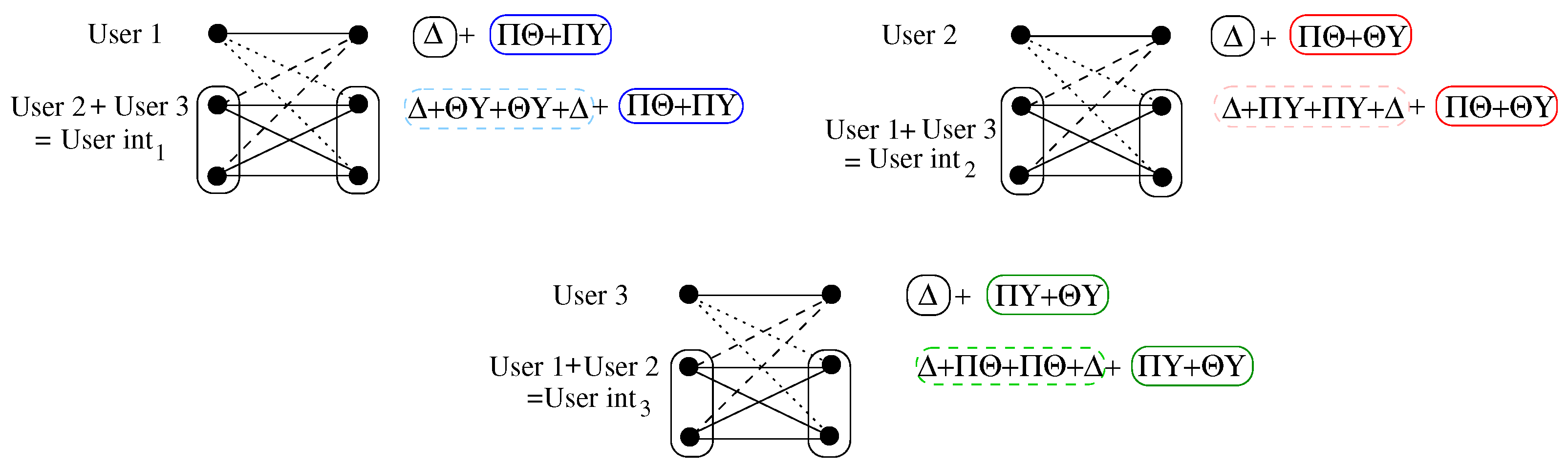

- At receiver , we decode to lattice , where , and then to , or first to and then to . The process is similar for the other users. Thus, for our three-user interference channel example using only common messages, we need seven lattices to decode three users and three interfering users. This can be observed in Figure 4.

- Decoding at receiver i:

- (a) Decoding , then and finally : If we decode the desired common message, , first and treat the remaining messages as noise, we apply Lemma 4 to and Lemma 3 to and . Thus, we decode from , where is the new semi-spherical noise. This is valid from Lemma 4 with the flatness factor condition
  and from Lemma 3 with the flatness factor conditions
  and
  From Theorem 2, we have that
  with the condition
  We now decode the desired private message using a subset of the flatness factor conditions already defined in the first step. Thus, we decode from , where is the estimated , considering (104) and (106), which are the flatness factor conditions that make and part of the noise. Using Theorem 2, we obtain
  where
  Finally, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (104), and we can apply Theorem 2 to obtain
  where
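The flatness factor conditions invoked above bound ε_Λ(σ), which measures how far the Λ-periodic Gaussian density of parameter σ is from uniform. It can be written as ε_Λ(σ) = (γ_Λ(σ)/2π)^{n/2} Θ_Λ(1/(2πσ²)) − 1, with γ_Λ(σ) = V(Λ)^{2/n}/σ² and Θ_Λ the theta series of Λ (see [15]). As an illustration only, assuming the scaled integer lattice aZ^n (not one of the lattices in this paper), the sketch evaluates ε in closed form and cross-checks it against the Poisson-summation form; the function names are hypothetical.

```python
import math


def per_dim_theta_direct(a, sigma, kmax=200):
    """(gamma/(2*pi))^(1/2) * Theta_{aZ}(1/(2*pi*sigma^2)) for the one-dimensional lattice aZ."""
    s = sum(math.exp(-((a * k) ** 2) / (2.0 * sigma ** 2)) for k in range(-kmax, kmax + 1))
    return a / (sigma * math.sqrt(2.0 * math.pi)) * s


def per_dim_theta_dual(a, sigma, kmax=200):
    """The same quantity after Poisson summation, i.e. a sum over the dual lattice (1/a)Z."""
    return sum(math.exp(-2.0 * (math.pi * sigma * k / a) ** 2) for k in range(-kmax, kmax + 1))


def flatness_factor_scaled_zn(n, a, sigma):
    """epsilon_{aZ^n}(sigma) = (per-dimension factor)^n - 1, since aZ^n is a product lattice."""
    return per_dim_theta_dual(a, sigma) ** n - 1.0


for sigma in (0.3, 0.5, 1.0):
    print(f"sigma = {sigma}: epsilon = {flatness_factor_scaled_zn(64, 1.0, sigma):.3e}")
```

The flatness factor drops very quickly once σ exceeds the lattice scale a, and it grows with the dimension n, which is why such conditions are stated per lattice and per noise level.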

- (b) Decoding , then and finally : If we first decode the interference common message, , and treat the remaining messages as noise, we apply Lemma 3 to and with the flatness factor conditions (105) and (108), and Lemma 4 to with the flatness factor condition (104). Then, using Theorem 2, we obtain
  where
  Here, we decode the desired common message from , where is the estimated , treating, as previously mentioned, and as noise with the conditions (108) and (104). Using Theorem 2, we obtain
  where
  Finally, once both common messages have been found, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (104), and we can apply Theorem 2 to obtain
  where

- We now decode at receiver :

- (a) Decoding , then and finally : If we decode the desired common message, , first and treat the remaining messages as noise, we must apply Lemmas 4 and 3. Lemma 4 is applied to , while Lemma 3 is applied to and . Thus, we decode from , where is the new semi-spherical noise. This is valid from Lemma 4 with the flatness factor condition (104) and from Lemma 3 with the flatness factor conditions (109) and (106). Thus, from Theorem 2, we have that
  with the condition
  Here, we decode the desired private message using a subset of the flatness factor conditions already defined in the first step. Thus, we decode from , where is the estimated , considering (109) and (106), which are the flatness factor conditions that make and part of the noise. Using Theorem 2, we obtain
  where
  Finally, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (104), and we can apply Theorem 2 to obtain
  where

- (b) Decoding , then and finally : If we first decode the interference common message, , and treat the remaining messages as noise, we apply Lemma 3 to and with the flatness factor conditions (107) and (109), and Lemma 4 to with the flatness factor condition (104). Then, using Theorem 2, we obtain
  where
  Here, we decode the desired common message from again, where is the estimated , treating, as previously mentioned, and as noise with the conditions (109) and (104). Using Theorem 2, we obtain
  where
  Finally, once both common messages have been found, we can decode using , where and are the estimated and , respectively. Again, using Lemma 4, we can treat as part of the noise, with its flatness factor condition (104), and we can apply Theorem 2 to obtain
  where

4. Results

4.1. The Power Constraints and GDoF of the Two-User Weak Gaussian Interference Channel with Lattice Gaussian Coding
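A useful reference point for power constraints on private and common messages is the split used in [9]: each transmitter gives its private message just enough power to arrive at the unintended receiver at the noise level, and assigns the rest to its common message. The sketch computes that split. Whether it coincides with the constraints of Lemmas 9 and 10 is an assumption, and `cross_gain_sq` (the squared gain toward the other receiver) is a hypothetical parameter name.

```python
def etw_private_power(total_power, cross_gain_sq, noise=1.0):
    """Split power so the private signal reaches the unintended receiver at noise level.

    cross_gain_sq: squared channel gain from this transmitter to the *other* receiver.
    If even full power stays below the noise there (INR <= 1), all power is private.
    Returns (private_power, common_power).
    """
    private = min(total_power, noise / cross_gain_sq)
    return private, total_power - private


for g2 in (0.001, 0.05, 0.5):
    p_priv, p_comm = etw_private_power(100.0, g2)
    print(f"INR = {g2 * 100.0:6.2f}: private = {p_priv:7.2f}, common = {p_comm:6.2f}, "
          f"private interference at the other receiver = {g2 * p_priv:.2f}")
```

When INR ≤ 1 the whole message is private (interference is simply treated as noise); otherwise the private interference seen by the other receiver is pinned at the noise level.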

4.2. The Power Constraints and GDoF of the K-User Weak Gaussian Interference Channel with Lattice Gaussian Coding

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SNR, S | Signal-to-noise ratio |
| DoF | Degrees of freedom |
| HK | Han and Kobayashi |
| GDoF | Generalized degrees of freedom |
| AWGN | Additive white Gaussian noise |
| INR, I | Interference-to-noise ratio |
| MAC | Multiple access channel |
| IC | Interference channel |

Appendix A. Proof of Lemma 7

- The contribution from MAC 1 and the contribution of the private message rate given by MAC 2.

- The contribution from MAC 2 and the contribution of the private message rate given by MAC 1.

- The contribution from the intersection of both MACs, where and .

- Finally, the contribution from the intersection of both MACs, where and , is redundant and is therefore discarded, as shown in [20].

- The contribution of from MAC 1 and the contribution of and from MAC 2:

- The contribution of from MAC 2 and the contribution of and from MAC 1, which is redundant and is therefore discarded, as shown in [20].

- The contribution of from MAC 1 and the contribution of and from MAC 2, which is redundant and is therefore discarded, as shown in [20].

- The contribution of from MAC 2 and the contribution of and from MAC 1:
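The contributions above assemble the HK region from the rate constraints of the two MACs, one per receiver. As a minimal sketch, assuming a real Gaussian channel, Gaussian-approximated signals, and that each receiver decodes its own common and private messages plus the other user's common message (with the other user's private message as noise), the check below tests whether a rate tuple satisfies every subset-sum constraint of both MACs. Labels such as "1c" and all powers are hypothetical.

```python
import itertools
import math


def in_gaussian_mac_region(rates, powers, noise_power):
    """True if `rates` meets every subset-sum constraint of a Gaussian MAC (real channel).

    rates, powers: dicts keyed by the messages this receiver decodes.
    noise_power: noise plus the received power of the signals treated as noise.
    """
    labels = list(rates)
    for size in range(1, len(labels) + 1):
        for subset in itertools.combinations(labels, size):
            bound = 0.5 * math.log2(1.0 + sum(powers[m] for m in subset) / noise_power)
            if sum(rates[m] for m in subset) > bound + 1e-12:
                return False
    return True


def in_mac_intersection(rates, rx1_powers, rx1_noise, rx2_powers, rx2_noise):
    """A rate tuple must lie in the MAC region seen at both receivers."""
    r1 = {m: rates[m] for m in rx1_powers}
    r2 = {m: rates[m] for m in rx2_powers}
    return (in_gaussian_mac_region(r1, rx1_powers, rx1_noise)
            and in_gaussian_mac_region(r2, rx2_powers, rx2_noise))


# Hypothetical symmetric example: private interference of power 1 added to unit noise.
rx1 = {"1c": 40.0, "1p": 3.0, "2c": 10.0}
rx2 = {"2c": 40.0, "2p": 3.0, "1c": 10.0}
rates = {"1c": 0.5, "1p": 0.3, "2c": 0.5, "2p": 0.3}
print(in_mac_intersection(rates, rx1, 2.0, rx2, 2.0))
```

This brute-force check enumerates all 7 subsets per receiver; the derivation above instead keeps only the contributions that are not redundant.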

Appendix B. Proof of Lemma 9

| User 1 | User 2 | | | |
|---|---|---|---|---|

Appendix C. Proof of Theorem 3

Appendix D. Proof of Lemma 10

Appendix E. Proof of Theorem 4

References

- Carleial, A. A case where interference does not reduce capacity (corresp.). IEEE Trans. Inf. Theory 1975, 21, 569–570.

- Cadambe, V.; Jafar, S. Interference alignment and degrees of freedom of the K-user interference channel. IEEE Trans. Inf. Theory 2008, 54, 3425–3441.

- Han, T.; Kobayashi, K. A new achievable rate region for the interference channel. IEEE Trans. Inf. Theory 1981, 27, 49–60.

- Bresler, G.; Parekh, A.; Tse, D. The approximate capacity of the many-to-one and one-to-many Gaussian interference channels. IEEE Trans. Inf. Theory 2010, 56, 4566–4592.

- Cadambe, V.; Jafar, S.; Shamai, S. Interference alignment on the deterministic channel and application to fully connected Gaussian interference networks. IEEE Trans. Inf. Theory 2009, 55, 269–274.

- Jafar, S.A.; Vishwanath, S. Generalized degrees of freedom of the symmetric Gaussian K user interference channel. IEEE Trans. Inf. Theory 2010, 56, 3297–3303.

- Sridharan, S.; Jafarian, A.; Vishwanath, S.; Jafar, S. Capacity of symmetric K-user Gaussian very strong interference channels. In Proceedings of the IEEE GLOBECOM 2008 - 2008 IEEE Global Telecommunications Conference, New Orleans, LA, USA, 30 November–4 December 2008; pp. 1–5.

- Sridharan, S.; Jafarian, A.; Vishwanath, S.; Jafar, S.; Shamai, S. A layered lattice coding scheme for a class of three user Gaussian interference channel. In Proceedings of the 46th Annual Allerton Conference on Communication, Control, and Computing 2008, Monticello, IL, USA, 23–26 September 2008; pp. 531–538.

- Etkin, R.; Tse, D.; Wang, H. Gaussian interference channel capacity to within one bit. IEEE Trans. Inf. Theory 2008, 54, 5534–5562.

- Geng, C.; Naderializadeh, N.; Avestimehr, A.S.; Jafar, S.A. On the optimality of treating interference as noise. IEEE Trans. Inf. Theory 2015, 61, 1753–1767.

- Chen, J. Multi-layer interference alignment and GDoF of the K-user asymmetric interference channel. IEEE Trans. Inf. Theory 2021, 67, 3986–4000.

- Peng, S.; Chen, X.; Lu, W.; Deng, C.; Chen, J. Spatial Interference Alignment with Limited Precoding Matrix Feedback in a Wireless Multi-User Interference Channel for Smart Grids. Energies 2022, 15, 1820.

- Lu, J.; Li, J.; Yu, F.R.; Jiang, W.; Feng, W. UAV-Assisted Heterogeneous Cloud Radio Access Network With Comprehensive Interference Management. IEEE Trans. Veh. Technol. 2024, 73, 843–859.

- Li, J.; Chen, G.; Zhang, T.; Feng, W.; Jiang, W.; Quek, T.Q.S.; Tafazolli, R. UAV-RIS-Aided Space-Air-Ground Integrated Network: Interference Alignment Design and DoF Analysis. IEEE Trans. Wirel. Commun. 2024; early access.

- Ling, C.; Luzzi, L.; Belfiore, J.-C.; Stehlé, D. Semantically Secure Lattice Codes for the Gaussian Wiretap Channel. IEEE Trans. Inf. Theory 2014, 60, 6399–6416.

- Campello, A.; Ling, C.; Belfiore, J.-C. Semantically Secure Lattice Codes for Compound MIMO Channels. IEEE Trans. Inf. Theory 2020, 66, 1572–1584.

- Xie, J.; Ulukus, S. Secure Degrees of Freedom of K-User Gaussian Interference Channels: A Unified View. IEEE Trans. Inf. Theory 2015, 61, 2647–2661.

- Estela, M. Interference Management for Interference Channels: Performance Improvement and Lattice Techniques. Ph.D. Thesis, Imperial College London, London, UK, 2014. Available online: http://hdl.handle.net/10044/1/24678 (accessed on 2 July 2021).

- Ling, C.; Belfiore, J.-C. Achieving AWGN channel capacity with lattice Gaussian coding. IEEE Trans. Inf. Theory 2014, 60, 5918–5929.

- Etkin, R. Spectrum Sharing: Fundamental Limits, Scaling Laws, and Self-Enforcing Protocols. Ph.D. Thesis, University of California at Berkeley, Berkeley, CA, USA, 2006.

- Han, T.; Kobayashi, K. A further consideration of the HK and CMG regions for the interference channel. In Proceedings of the ITA Workshop 2007, San Diego, CA, USA, 29 January–2 February 2007.

- Oggier, F.; Viterbo, E. Algebraic Number Theory and Code Design for Rayleigh Fading Channels; Now Foundations and Trends: Boston, MA, USA, 2004; Volume 1, pp. 333–415.

- Estela, M.; Luzzi, L.; Ling, C.; Belfiore, J. Analysis of lattice codes for the many-to-one interference channel. In Proceedings of the 2012 IEEE Information Theory Workshop, Lausanne, Switzerland, 3–7 September 2012; pp. 417–421.

- Belfiore, J.-C. Lattice codes for the compute-and-forward protocol: The flatness factor. In Proceedings of the 2011 IEEE Information Theory Workshop, Paraty, Brazil, 16–20 October 2011; pp. 1–4.

- Loeliger, H.-A. Averaging bounds for lattices and linear codes. IEEE Trans. Inf. Theory 1997, 43, 1767–1773.

- Zamir, R. Lattice Coding for Signals and Networks; Cambridge University Press: Cambridge, UK, 2014.

| User 1 | |||||||||

| User 2 | |||||||||

| User 3 | |||||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Estela, M.C.; Valencia-Cordero, C. On Superposition Lattice Codes for the K-User Gaussian Interference Channel. Entropy 2024, 26, 575. https://doi.org/10.3390/e26070575

Estela MC, Valencia-Cordero C. On Superposition Lattice Codes for the K-User Gaussian Interference Channel. Entropy. 2024; 26(7):575. https://doi.org/10.3390/e26070575

Estela, María Constanza, and Claudio Valencia-Cordero. 2024. "On Superposition Lattice Codes for the K-User Gaussian Interference Channel" Entropy 26, no. 7: 575. https://doi.org/10.3390/e26070575