Unification of Mind and Matter through Hierarchical Extension of Cognition: A New Framework for Adaptation of Living Systems

Abstract

1. Introduction

2. Overview of This Study

- (1) Previous works suggest that living systems (LSs) are characterized by the following properties: (i) creating an internal organization, (ii) establishing a relationship with external states, (iii) reproduction, (iv) formation of ecosystems, and (v) evolution. Adaptation is the core concept underlying all these properties. In this study, adaptation is defined as the property of a system to change states so as to maintain a particular relationship with its environment and an internal organizational order for self-making (survival) and reproduction. Differential survival and reproduction lead to the evolution of a population of LSs. Natural selection theory explains the spread of adaptive traits rather than adaptation itself, and a comprehensive theory of adaptation has yet to be established [Section 3].

- (2)

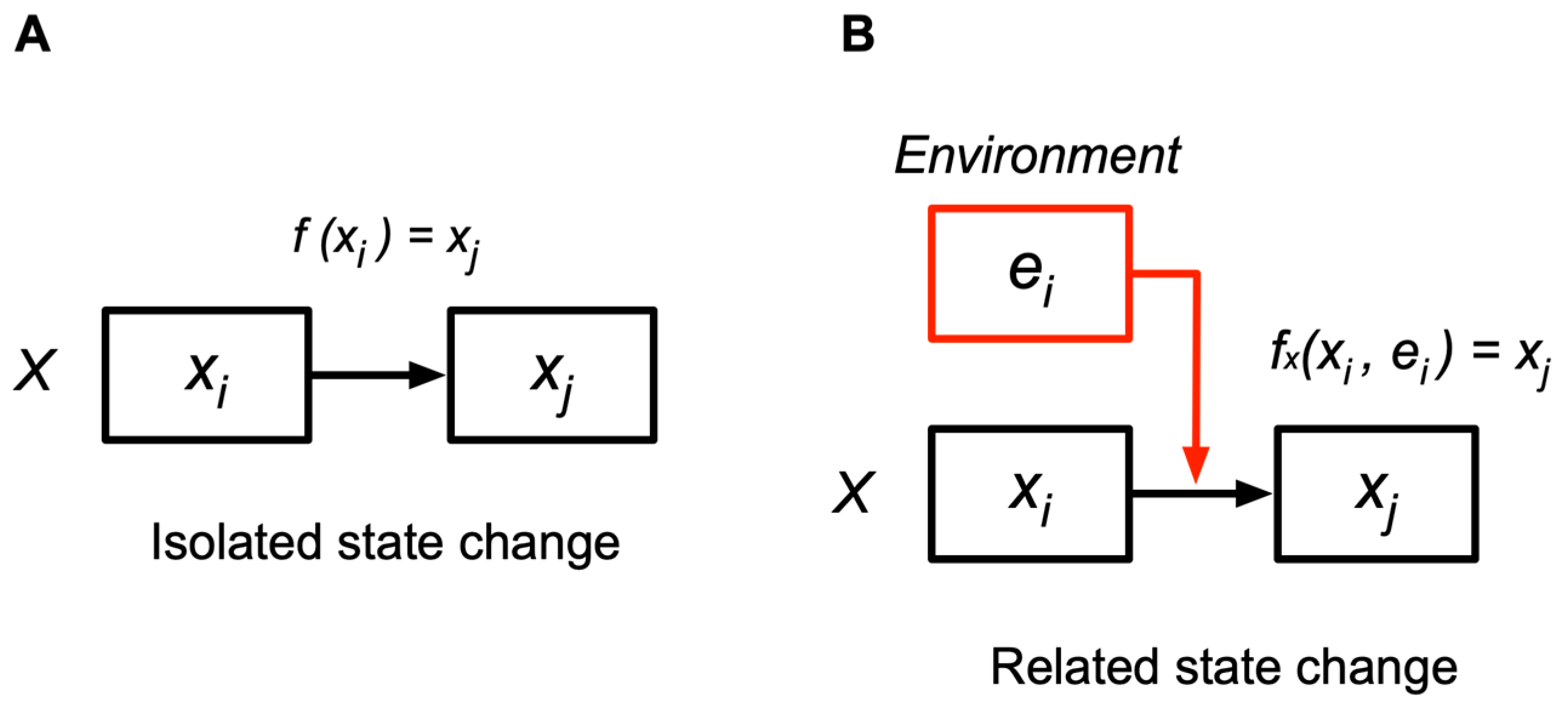

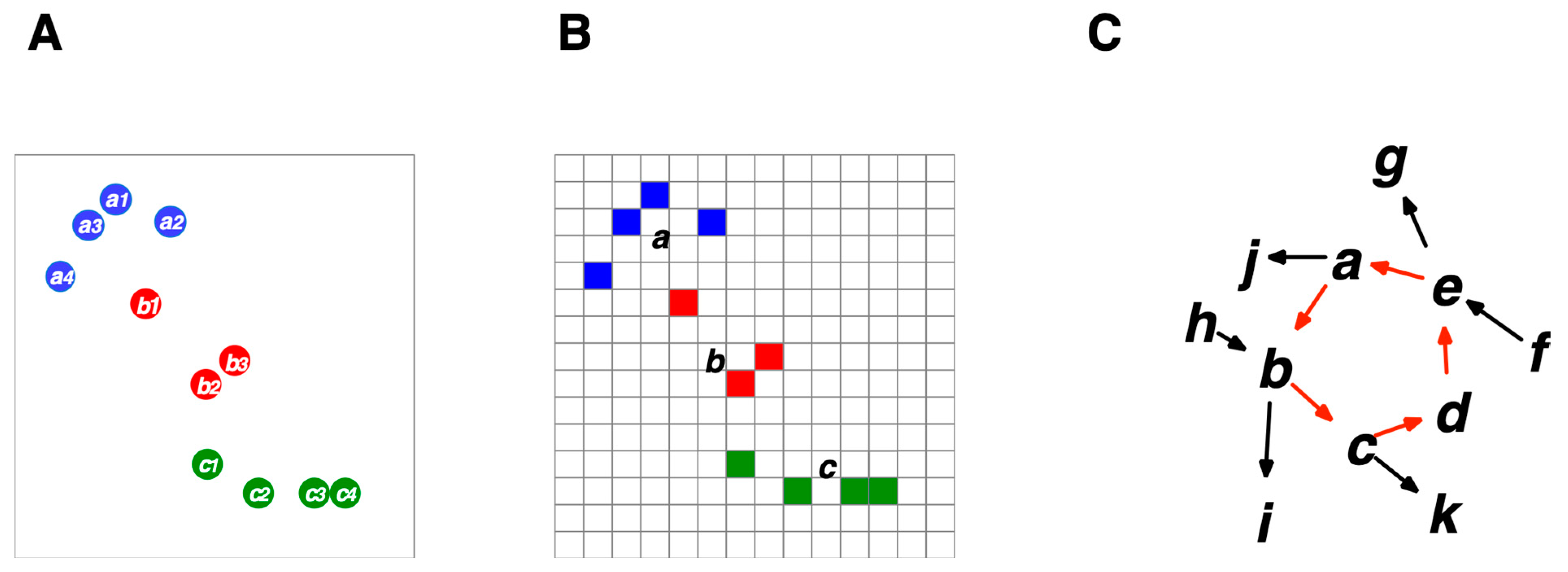

- LSs must solve the serious adaptation problem of detecting external states and relate them appropriately to their surroundings. This problem is a biological version of the philosophical enigma of how the self can know the external world and escape solipsism (Appendix A). To address this problem, the author proposes a hierarchical extension of the cognition concept. According to it, “cognition” is defined as a “related (relational) state change” occurring at three levels of the nested hierarchy: physical, chemical, and semiotic cognitions. Physical and chemical entities can detect their surroundings through related state changes; therefore, their detection capabilities are defined as cognitive capabilities at the physical or chemical level. Cognitions at these levels play an essential role in the adaptation of LSs to their environments. However, cognition at these levels does not imply that physical and chemical entities are aware of their surroundings or conscious; they do not possess this property. Awareness (consciousness) emerges at the semiotic level. Nonetheless, cognitive (i.e., detection) capabilities at the physical or chemical level are essential for making semiotic cognition possible (see (5) and Section 7 in detail). Any entity at any level that cognizes its outside (surroundings) is called a “cognizer”. In other words, cognizer refers to any “entity that changes its state in relation to external states” [Section 4].

- (3) Adaptation enables LSs to reduce the uncertainty of events that may occur after cognition (or action), so that they experience a favorable overall probability distribution throughout their lifetime to survive and reproduce. Probability, entropy, and information are vital concepts in understanding adaptation. However, each carries several meanings under the same name or mathematical representation. A comprehensive adaptation theory must coherently integrate these concepts into a single theoretical framework. To this end, adaptation is explained in terms of the probability of events using simple thought experiments, in which a player draws balls from boxes under various setups. Each box is the environment for a player, and the player experiences events such as the colors and sizes of the balls drawn, as well as events occurring at distant places. Probability admits various interpretations. Internal probability theory clarifies the probabilities of events occurring not to an external observer, but to a system component, such as a player drawing balls. Internal probability includes the certainty of events occurring after a given cognition (or action) and the relative frequency of events after an overall range of actions. The experiments demonstrate how cognitions occurring at the physical, chemical, and semiotic levels affect the probability of events [Section 5].

- (4)

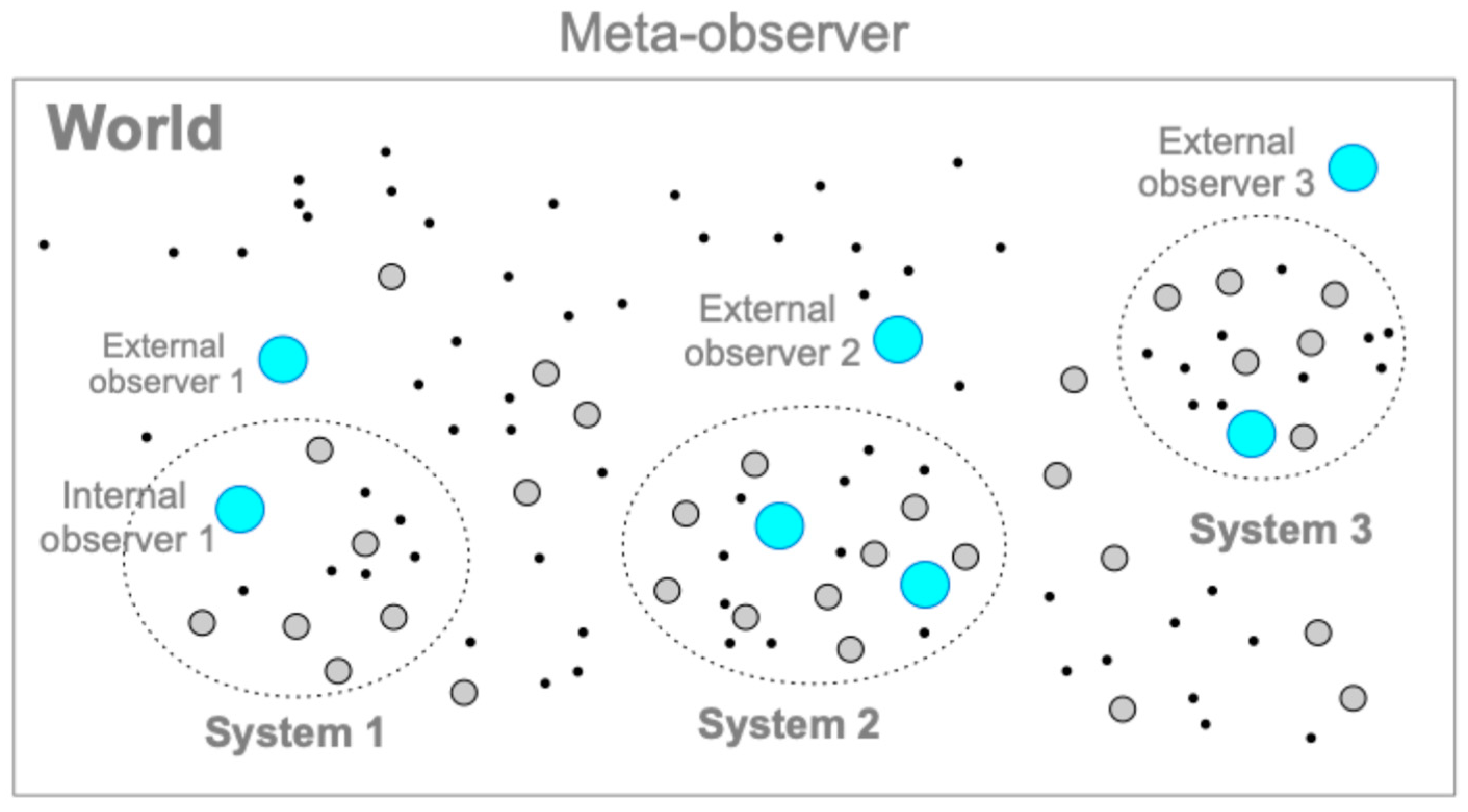

- A mathematical formalism for the cognizers system (the “CS model”) is presented, providing explicit definitions of cognition, cognizer, system, internal/external observers, the world, the causal principle, event/state, and causation as foundations for theorizing adaptation of LSs using the CS model [Section 6].

- (5) For semiotic cognition to operate, LSs must measure external states and produce symbols that signify these external states (local or global), based on which they change their effector states. A semiotic cognition model based on the CS model is presented. It operates on the principle of “inverse causality (IC)”, which is distinct from “reverse causation” or “backward causation”. The IC principle postulates that if a system (or a component of a system) changes from state x to y1 in some cases and from x to y2 in others (where y1 ≠ y2), then distinct external states must exist for x ⟼ y1 and x ⟼ y2, respectively (⟼ denotes a state change). LSs developed a measurement system based on the IC principle, a process called IC operation (or IC measurement), by which a subject LS produces symbols internally (in the form of system component states) that signify past states of the external reality hidden to the subject. By producing such symbols, a system can be aware of the external world [16], which is the core function of consciousness. “Consciousness” (or “awareness”) can thus be defined in a scientifically tractable form as the production of internal states as symbols that signify external states and are processed for actions. According to this definition, bacteria may be conscious (aware), although their IC measurement systems are much simpler than those of humans [Section 7].

- (6) A possible scenario for a primary evolutionary process, namely the origin of semiotic cognition, is presented. In this scenario, chemical autopoietic systems operating with chemical (i.e., non-semiotic) cognition evolve a primitive form of semiotic cognition through IC measurement systems similar to those observed in signal transduction in contemporary bacteria. By acquiring IC operation systems, the autopoietic metabolic system can manage the probability distribution of events to benefit survival and reproduction, thereby overcoming the limitations of a primitive stage where only physical and chemical cognitions operate [Section 8].

- (7) In conclusion, mind and matter can be unified as cognizers in the monistic framework of the CS model. Physical and chemical entities, as cognizers, can generate a higher level of cognition (i.e., semiotic cognition) if they form a specific structure that operates inverse causality, enabling the system to produce symbols that signify hidden external states and to act (physically or behaviorally) to adapt to its surroundings. This operation makes LSs aware of the external world [Section 9].
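The inverse causality principle stated in item (5) can be illustrated with a minimal sketch. It assumes a component whose observed state changes are recorded as `(x, y)` pairs. The function name `infer_external_symbols` and the symbol labels `e0`, `e1`, … are hypothetical and are not part of the CS model's formalism. Whenever the same prior state `x` is followed by different states, each distinct successor is assigned its own symbol standing for a distinct (hidden) external state.

```python
def infer_external_symbols(transitions):
    """Assign a symbol to each distinct successor state of every prior state.

    transitions: iterable of (x, y) pairs, read as the state change x -> y.
    Returns {x: {y: symbol}}. If x has two successors y1 != y2, they receive
    different symbols, mirroring the claim that distinct external states
    must exist for x -> y1 and x -> y2.
    """
    successors = {}
    for x, y in transitions:
        ys = successors.setdefault(x, [])
        if y not in ys:  # keep first-seen order, ignore repeats
            ys.append(y)
    # Hypothetical labels e0, e1, ... stand for the inferred external states.
    return {
        x: {y: f"e{i}" for i, y in enumerate(ys)}
        for x, ys in successors.items()
    }


# Illustrative record of state changes (invented for this sketch).
observed = [("x", "y1"), ("x", "y2"), ("x", "y1"), ("z", "w")]
symbols = infer_external_symbols(observed)
print(symbols)
```

A prior state with only one observed successor (here `z`) yields a single symbol, meaning no distinction between external states can be drawn from it in this toy setting.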

3. What Is Life?

3.1. Five Properties of Living Systems (LSs)

- (i) Making an internal organization: LSs produce system components through metabolism and maintain organizational order in the states of these components. They incorporate material/energy resources from outside and discard wastes. This process forms a unity of self-making (within a generation), separating itself from others with a boundary (i.e., self-organizing). Survival refers to the maintenance of this organization [19,20,21,22,23].

- (ii) Making a relationship with external states: LSs create external relationships beneficial to maintaining internal organization (i.e., survival), including obtaining resources and avoiding natural enemies and physical risks. LSs sense and respond to their environments (biotic and abiotic conditions) to maintain beneficial relationships for self-making with a high degree of certainty.

- (iii) Reproduction: LSs reproduce their self-making organizations, transmitting their order across generations and forming a population of LSs [24].

- (iv) Formation of an ecosystem: The population of LSs exists as a member of an ecosystem, where it can obtain material/energy resources (as mentioned in (i)) by maintaining beneficial relationships with others (as mentioned in (ii)), which characterize specific ecological niches (positions relative to heterospecific LSs) [25].

- (v) Evolution: The population of LSs evolves by generating new types of self-making systems, some of which replace old types through natural selection or drift, while others coexist with the old, creating diversity [24].

3.2. Adaptation

3.3. Natural Selection Theory Does Not Explain Adaptation

3.4. Is “Information” an Answer for the Adaptation Problem?

4. Hierarchical Extension of Cognition

4.1. Conventional Meaning of Cognition

4.2. Cognition as a Related State Change

4.3. Cognition at Three Levels

- (1) Physical cognition

- (2) Chemical cognition

- (3) Semiotic cognition

5. Adaptation as Managing the Probability Distribution of Events Occurring to LSs

5.1. Understanding Adaptation as Managing the Probability of Events

- (i) The degree of certainty of an event occurring following a specific cognition (i.e., a state change) of the environment by a focal cognizer, named “internal Pcog”. More precisely, take a focal state change of a cognizer in a finite length of a system’s temporal state sequence (e.g., “tossing a coin from a height of 1 m”; “moving in the left direction”) as the specific condition for subsequent events to occur. The degree of certainty is then measured by the ratio of the number of occurrences of a focal event type (e.g., heads up; encounter with a car) to the total number of all events (e.g., {heads, tails}; {encounter with a car, encounter with a bike, …}) that followed the same cognition (state change) by the focal cognizer.

- (ii) The relative frequency of an event occurring following an overall range of cognitions (i.e., all types of cognition, or state changes, without specifying any particular one) by a focal cognizer during the system process, named “internal Poverall”. Specifically, take all types of state changes of a focal cognizer occurring over a finite length of a system’s temporal state sequence (e.g., {tossing from heights of 1 m, 2 m, … and 10 m}; {moving left, right, and straight}) as the overall conditions for subsequent events to occur. The relative frequency is measured by the ratio of the number of events of a focal type (e.g., heads; encounter with a car) to the total number of events of all types that occurred in the sequence (e.g., {heads, tails}; {encounter with a car, encounter with a bike, …}).
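The two ratios defined in (i) and (ii) can be made concrete with a small sketch. It assumes the finite state sequence has already been reduced to a list of `(cognition, event)` pairs, one per state change of the focal cognizer and the event that followed it. The function names, the `history` data, and this pairing are illustrative assumptions, not the paper's notation.

```python
def internal_p_cog(history, cognition, event):
    """Internal Pcog: share of `event` among events that followed `cognition`."""
    following = [e for c, e in history if c == cognition]
    if not following:
        raise ValueError(f"cognition {cognition!r} never occurred")
    return following.count(event) / len(following)


def internal_p_overall(history, event):
    """Internal Poverall: share of `event` among all events in the sequence,
    regardless of which cognition preceded them."""
    if not history:
        raise ValueError("empty history")
    return sum(1 for _, e in history if e == event) / len(history)


# Hypothetical record: (state change of the cognizer, event that followed).
history = [
    ("left", "car"), ("left", "car"), ("left", "bike"),
    ("right", "bike"), ("right", "bike"), ("straight", "car"),
]

p_cog = internal_p_cog(history, "left", "car")    # 2 of 3 events after "left"
p_overall = internal_p_overall(history, "car")    # 3 of 6 events overall
print(p_cog, p_overall)
```

In this invented record, moving left is followed by a car more often than the overall frequency of cars would suggest, which shows how the two quantities can differ for the same event type.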

5.2. Thought Experiments for Understanding Semiotic Cognition as Adaptation

5.2.1. Experiment A

5.2.2. Experiment B

5.2.3. Experiment C

5.2.4. Experiment D

5.2.5. Experiment E

5.3. Probability, Entropy, and the Amount of Information

5.4. Cognizers vs. Demons

5.5. Environment and Subject-Dependent Environment

6. Cognizers in the World

6.1. Overview

6.2. Cognizers System (CS) Model

6.3. Selectivity and Discriminability of Cognition

6.4. Causal Principle (The Principle of Causality) and Freedom

6.5. State and Event

6.6. Causation (Cause–Effect Relationship)

6.7. Principle of Local Causation

6.8. Hierarchical Structures of Cognizers Systems

7. Internalist Model of Semiotic Cognition toward Unification of Matter and Mind

7.1. The Problem of Escaping Solipsism for LSs

7.2. Semiotic Cognition by Inverse Causality Operation

7.3. The Measurement System by IC Operation

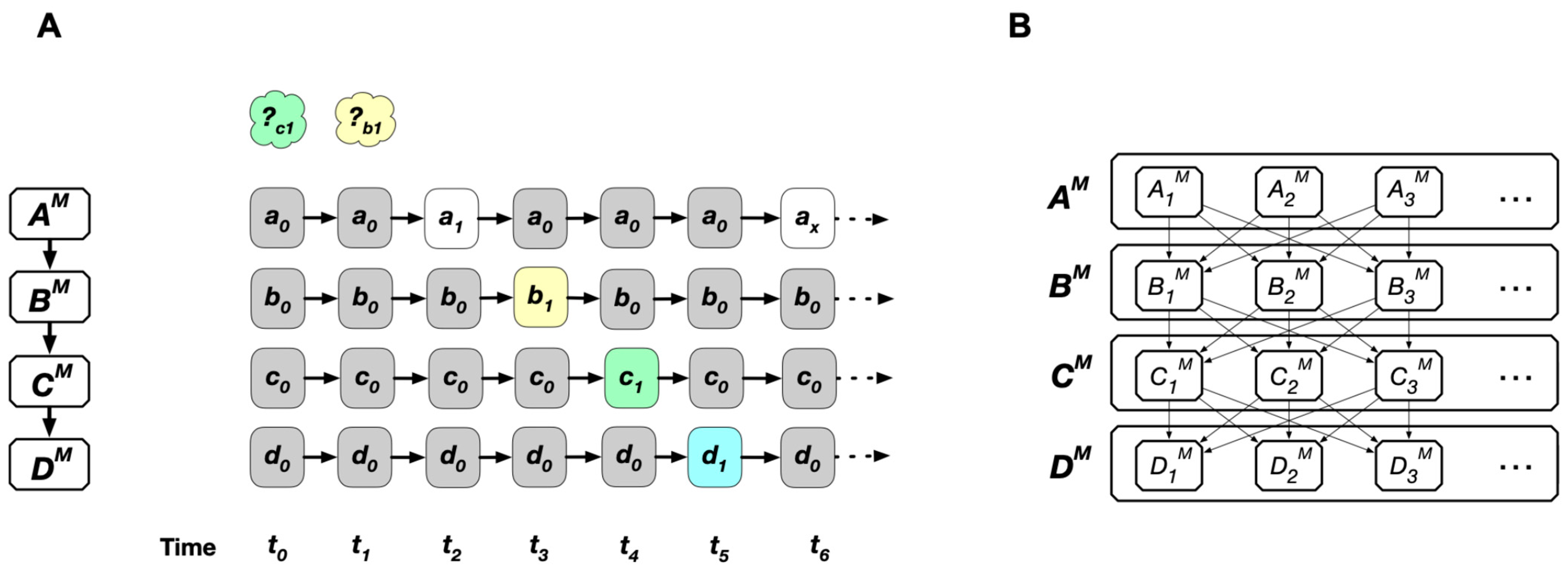

- AM is a sensor whose states are data. Data are given because they are not produced (entailed) by something else in the internalist framework (no external reality is assumed).

- BM measures AM states. The BM is the reader of the data (AM states). BM states are symbols that signify local external states. Examples of BM may be second messengers of signal transduction in cells.

- CM measures BM states. CM states signify non-local external states that cannot be derived by inverse causality in the AM-BM coupling. CM states are not copies of the BM states; rather, CM interprets (decodes) the BM states. CM links the BM to the DM (see below) semiotically, leading to a final action. In some cases, BM states may be linked directly to DM without mediation by CM (see Section 8.3 for examples).

- DM is the effector, a measurer that transforms the symbols produced in BM or CM into structural changes of the subject LS in a particular manner. For example, effectors include the translation of enzyme proteins in gene expression systems (Appendix C) and the locomotor apparatus, including motor neurons and muscle cells.
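The chain of measurers described above can be sketched as a toy pipeline. Everything concrete here is an assumption made for illustration: the `Measurer` class, the lookup tables, and state names such as `bound` or `keep_direction` are invented and do not come from the paper. The sketch only shows the wiring: BM reads AM states, CM optionally decodes BM states, and DM turns the resulting symbol into an action.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Measurer:
    """A component whose state is determined by the state it measures."""
    name: str
    table: Dict[str, str]  # measured state -> this measurer's resulting state

    def measure(self, state: str) -> str:
        return self.table[state]


def ic_chain(am_state: str, bm: Measurer, dm: Measurer,
             cm: Optional[Measurer] = None) -> str:
    """Run AM -> BM -> (CM) -> DM and return the effector's action."""
    bm_state = bm.measure(am_state)          # symbol for a local external state
    symbol = cm.measure(bm_state) if cm is not None else bm_state
    return dm.measure(symbol)                # structural change / action


# Hypothetical tables for a two-state sensor.
bm = Measurer("BM", {"bound": "b1", "unbound": "b0"})
cm = Measurer("CM", {"b1": "resource_ahead", "b0": "no_resource_ahead"})
dm_via_cm = Measurer("DM", {"resource_ahead": "keep_direction",
                            "no_resource_ahead": "change_direction"})
dm_direct = Measurer("DM", {"b1": "keep_direction", "b0": "change_direction"})

action_with_cm = ic_chain("bound", bm, dm_via_cm, cm)
action_direct = ic_chain("unbound", bm, dm_direct)   # BM linked directly to DM
print(action_with_cm, action_direct)
```

The optional `cm` argument mirrors the remark that BM states may in some cases be linked to DM without mediation by CM.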

7.4. Adaptation and the Production of Symbols That Signify External Objects

7.5. Where Is the Mind in a Cognizer?

8. IC Measurement Systems in Unicellular LSs

8.1. Molecular System as Cognizers System

8.2. Chemical Systems Operating with Chemical Cognition

8.3. How Semiotic Cognition Can Emerge in Chemical Systems

9. Conclusions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Solipsism Problem in LSs and Their Evolution

Appendix B

Costs of Dualism and the Need for a Monistic Model

Appendix C

Semiotic Cognition in Gene Expression

Appendix D

References

- Armstrong, D.M. A Materialist Theory of the Mind, Kindle ed.; Taylor & Francis: Abingdon, UK, 2002.

- Schrödinger, E. Mind and matter. In What is Life?: With Mind and Matter and Autobiographical Sketches (Canto Classics); Cambridge University Press: New York, NY, USA, 2000.

- Goff, P. Is Consciousness Everywhere? Kindle ed.; Andrews UK Limited: Luton, UK, 2022.

- Russell, B. The Analysis of Mind; Sanage Publishing House LLP: Mumbai, India, 2023.

- Josephson, B.D.; Rubik, A.R. The Challenge of Consciousness Research. Front. Perspect. 1992, 3, 15–19.

- Josephson, B.D. Beyond quantum theory: A realist psycho-biological interpretation of reality revisited. Biosystems 2002, 64, 43–45.

- Nakajima, T. Biological probability: Cognitive processes of generating probabilities of events in biological systems. J. Theor. Biol. 1999, 200, 77–95.

- Nakajima, T. Probabilities of encounters between objects in biological systems: Meta-observer view. J. Theor. Biol. 2001, 211, 347–363.

- Nakajima, T. Probabilities of Encounters between Objects in Biological Systems 2: Cognizer View. J. Theor. Biol. 2003, 221, 39–51.

- Nakajima, T. Probability in biology: Overview of a comprehensive theory of probability in living systems. Prog. Biophys. Mol. Biol. 2013, 113, 67–79.

- Nakajima, T. Unification of epistemic and ontic concepts of information, probability, and entropy, using cognizers-system model. Entropy 2019, 21, 216.

- Nakajima, T. Is the world deterministic? Mental algorithmic process generating external reality by inverse causality. Int. J. Gen. Syst. 2001, 30, 681–702.

- Nakajima, T. How living systems manage the uncertainty of events: Adaptation by semiotic cognition. In Biosemiotic Research Trends; Barbieri, M., Ed.; Nova Science Publishers, Inc.: New York, NY, USA, 2007; pp. 157–172.

- Nakajima, T. Biologically inspired information theory: Adaptation through construction of external reality models by living systems. Prog. Biophys. Mol. Biol. 2015, 119, 634–648.

- Nakajima, T. Living systems escape solipsism by inverse causality to manage the probability distribution of events. Philosophies 2021, 6, 11.

- Nakajima, T. Computation by inverse causality: A universal principle to produce symbols for the external reality in living systems. BioSystems 2022, 218, 104692.

- Nakajima, T. Synchronic and diachronic hierarchies of living systems. Int. J. Gen. Syst. 2004, 33, 505–526.

- Barbieri, M. The Organic Codes: An Introduction to Semantic Biology, Kindle ed.; Cambridge University Press: New York, NY, USA, 2002.

- Eigen, M.; Schuster, P. The hypercycle: A principle of natural self-organization, part A: The emergence of the hypercycle. Naturwissenschaften 1977, 64, 541–565.

- Maturana, H.R.; Varela, F.J. Autopoiesis and Cognition—The Realization of the Living; Reidel: Dordrecht, The Netherlands, 1980; ISBN 9-0277-1015-5.

- Ganti, T. Biogenesis itself. J. Theor. Biol. 1997, 187, 583–593.

- Prigogine, I. From Being to Becoming: Time and Complexity in the Physical Sciences; W.H. Freeman: San Francisco, CA, USA, 1980.

- Letelier, J.C.; Cárdenas, M.L.; Cornish-Bowden, A. From L’Homme Machine to metabolic closure: Steps towards understanding life. J. Theor. Biol. 2011, 286, 100–113.

- Maynard Smith, J.; Szathmáry, E. The Major Transitions in Evolution; Oxford University Press: Oxford, UK, 1998.

- Nakajima, T. Ecological extension of the theory of evolution by natural selection from a perspective of Western and Eastern holistic philosophy. Prog. Biophys. Mol. Biol. 2017, 131, 298–311.

- Elton, C. Animal Ecology; The Macmillan Company: New York, NY, USA, 1927.

- Hutchinson, G.E. Concluding remarks. Cold Spring Harb. Symp. Quant. Biol. 1957, 22, 415–427.

- Leibold, M.A. The niche concept revisited: Mechanistic models and community context. Ecology 1995, 76, 1371–1382.

- Darwin, C. The Origin of Species, Sixth London ed.; Filibooks: Odense, Denmark, 2015.

- Lewontin, R.C. The unit of selection. Ann. Rev. Ecol. Syst. 1970, 1, 1–18.

- Lewontin, R.C. Adaptation. In The Dialectical Biologist; Levins, R., Lewontin, R.C., Eds.; Harvard University Press: Cambridge, MA, USA, 1985.

- Mayr, E. The Growth of Biological Thought; Harvard University Press: Cambridge, MA, USA, 1982.

- Mayr, E. Toward a New Philosophy of Biology; Harvard University Press: Cambridge, MA, USA, 1988.

- Sober, E. Philosophy of Biology, 2nd ed.; Westview Press: Boulder, CO, USA, 2000.

- Brady, R.H. Natural selection and the criteria by which a theory is judged. System. Zool. 1979, 28, 600–621.

- Rosenberg, A. The Structure of Biological Science; Cambridge University Press: Cambridge, UK, 1985.

- Brandon, R.N. Adaptation and Environment; Princeton University Press: Princeton, NJ, USA, 1990.

- von Weizsäcker, C.F. Matter, Energy, Information. In Carl Friedrich von Weizsäcker: Major Texts in Physics; Drieschner, M., Ed.; Springer: Cham, Switzerland, 2014.

- Stonier, T. Information and the Internal Structure of the Universe: An Exploration into Information Physics; Springer: London, UK, 1990.

- Barreiro, C.; Barreiro, J.M.; Lara, J.A.; Lizcano, D.; Martínez, M.A.; Pazos, J. The Third Construct of the Universe: Information. Found. Sci. 2020, 25, 425–440.

- Available online: https://www.etymonline.com/word/cognition (accessed on 31 July 2024).

- Bayne, T.; Brainard, D.; Byrne, R.W.; Chittka, L.; Clayton, N.; Heyes, C.; Webb, B. What is cognition? Curr. Biol. 2019, 29, R608–R615.

- Healy, S.D. Adaptation and the Brain; Oxford Series in Ecology and Evolution; OUP: Oxford, UK, 2021.

- Shettleworth, S.J. Cognition, Evolution, and Behavior, 2nd ed.; Oxford University Press: Oxford, UK, 2010.

- Young, P. The Nature of Information; Praeger: Connecticut, CT, USA, 1987.

- Bhushan, B. Biomimetics: Lessons from Nature—An overview. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2009, 367, 1445–1486.

- Wagner, G.J.; Wang, E.; Shepherd, R.W. New approaches for studying and exploiting an old protuberance, the plant trichome. Ann. Bot. 2004, 93, 3–11.

- Koch, K.; Bhushan, B.; Barthlott, W. Multifunctional surface structures of plants: An inspiration for biomimetics. Prog. Mater. Sci. 2009, 54, 137–178.

- Nave, G.K.; Hall, N.; Somers, K.; Davis, B.; Gruszewski, H.; Powers, C.; Collver, M.; Schmale, D.G.; Ross, S.D. Wind dispersal of natural and biomimetic maple samaras. Biomimetics 2021, 6, 23.

- Autumn, K.; Liang, Y.A.; Hsieh, S.T.; Zesch, W.; Chan, W.P.; Kenny, T.W.; Fearing, R.; Full, R.J. Adhesive force of a single gecko foot-hair. Nature 2000, 405, 681–685.

- Gorb, S. Attachment Devices of Insect Cuticle; Kluwer Academic: Dordrecht, The Netherlands, 2001.

- Bhushan, B.; Sayer, R.A. Surface characterization and friction of a bio-inspired reversible adhesive tape. Microsyst. Technol. 2007, 13, 71–78.

- Bechert, D.W.; Bruse, M.; Hage, W.; van der Hoeven, J.G.T.; Hoppe, G. Experiments on drag-reducing surfaces and their optimization with an adjustable geometry. J. Fluid Mech. 1997, 338, 59–87.

- Fersht, A.R. The hydrogen bond in molecular recognition. Trends Biochem. Sci. 1987, 12, 301–304.

- Buckingham, A.D. Intermolecular forces. In Principles of Molecular Recognition; Buckingham, A.D., Legon, A.C., Roberts, S.M., Eds.; Chapman & Hall: London, UK, 1993; pp. 1–16.

- Root-Bernstein, R.S.; Dillon, P.F. Molecular complementarity I: The complementarity theory of the origin and evolution of life. J. Theor. Biol. 1997, 188, 447–479.

- Marijuán, P.C.; Navarro, J. From molecular recognition to the “vehicles” of evolutionary complexity: An informational approach. Int. J. Mol. Sci. 2021, 22, 11965.

- Luisi, P.L. The Emergence of Life: From Chemical Origins to Synthetic Biology; Cambridge University Press: New York, NY, USA, 2006.

- Hoffmeyer, J. Semiotic scaffolding of living systems. In Introduction to Biosemiotics; Barbieri, M., Ed.; Springer: Dordrecht, The Netherlands, 2007.

- Online Etymology Dictionary. Available online: https://www.etymonline.com/ (accessed on 31 June 2024).

- Marks, F.; Klingmüller, U.; Müller-Decker, K. Cellular Signal Processing, 2nd ed.; Taylor and Francis: Abingdon, UK, 2017.

- Monod, J. Chance and Necessity; Alfred A. Knopf: New York, NY, USA, 1971.

- Barbieri, M. Codepoiesis—The deep logic of life. Biosemiotics 2012, 5, 297–299.

- Kull, K. Biosemiotics and biophysics—The fundamental approaches to the study of life. In Introduction to Biosemiotics: The New Synthesis; Barbieri, M., Ed.; Springer: Dordrecht, The Netherlands, 2008; Chapter 7.

- Kull, K. Life Is Many, and Sign Is Essentially Plural: On the Methodology of Biosemiotics. In Towards a Semiotic Biology: Life Is the Action of Signs; Kull, K., Emmeche, C., Eds.; Imperial College Press: London, UK, 2011; Chapter 6.

- Kolmogorov, A. Foundations of the Theory of Probability, 2nd ed.; Chelsea Publishing Company: New York, NY, USA, 1933.

- Hacking, I. The Emergence of Probability: A Philosophical Study of Early Ideas about Probability, Induction and Statistical Inference, 2nd ed.; Cambridge University Press: New York, NY, USA, 2006.

- Popper, K.R. The propensity interpretation of probability. Br. J. Philos. Sci. 1959, 10, 25–42.

- Popper, K.R. The Open Universe: An Argument for Indeterminism; Routledge: London, UK, 1982.

- Mills, S.K.; Beatty, J.H. The propensity interpretation of fitness. Philos. Sci. 1979, 46, 263–286.

- Millstein, R.L. Probability in Biology: The Case of Fitness. In The Oxford Handbook of Probability and Philosophy; Oxford University Press: Oxford, UK, 2016.

- Popper, K.R. A World of Propensities; Thoemmes Press: Bristol, UK, 1990.

- Buzsáki, G. The Brain from Inside Out, Kindle ed.; Oxford University Press: Oxford, UK, 2021.

- Gillies, D. Philosophical Theories of Probability; Routledge: New York, NY, USA, 2000.

- Nakajima, T. Internal entropy as a measure of the amount of disorder of the subject-dependent environment. In Proceedings of the FIS2005 3rd Conference on the Foundations of Information Science, Paris, France, 4–7 July 2005; MDPI: Basel, Switzerland, 2005; Available online: https://www.mdpi.org/fis2005/proceedings.html (accessed on 31 June 2024).

- Maxwell, J.C. Theory of Heat; Dover Publications, Inc.: New York, NY, USA, 2001.

- Andrade, E. On Maxwell’s demons and the origin of evolutionary variations. Acta Biotheor. 2004, 52, 17–40.

- von Uexküll, J. Theoretical Biology; Harcourt, Brace: New York, NY, USA, 1926.

- von Uexküll, J. A Foray into the Worlds of Animals and Humans: With A Theory of Meaning, Kindle ed.; University of Minnesota Press: Minneapolis, MN, USA, 2010.

- Sagan, D. Introduction: Umwelt after Uexküll. In Jakob von Uexkull. A Foray into the Worlds of Animals and Humans: With a Theory of Meaning, Kindle ed.; University of Minnesota Press: Minneapolis, MN, USA, 2010.

- Odling-Smee, F.J.; Laland, K.N.; Feldman, M.W. Niche Construction: The Neglected Process in Evolution; Princeton University Press: Princeton, NJ, USA; Oxford University Press: Oxford, UK, 2003.

- Baker, B.W.; Hill, E.P. Beaver (Castor canadensis). In Wild Mammals of North America: Biology, Management, and Conservation, 2nd ed.; Feldhamer, G.A., Thompson, B.C., Chapman, J.A., Eds.; The Johns Hopkins University Press: Baltimore, MD, USA, 2003; pp. 288–310.

- Matsuno, K. Protobiology: Physical Basis of Biology; CRC Press: Boca Raton, FL, USA, 1989.

- Salthe, S.N. Evolving Hierarchical Systems; Columbia University Press: New York, NY, USA, 1985.

- O’Neill, R.V.; DeAngelis, D.L.; Waide, J.B.; Allen, T.F.H. A Hierarchical Concept of Ecosystems; Princeton University Press: Princeton, NJ, USA, 1986.

- Leibniz, G.W. Monadology, Kindle ed.; Latta, R., Translator; Ale’mar Contracting Company: Nablus, Amman, 2020.

- Husserl, E. Ideas Pertaining to a Pure Phenomenology and to a Phenomenological Philosophy, First Book, General Introduction to a Pure Phenomenology; Kersten, F., Translator; Springer: Dordrecht, The Netherlands, 1983.

- Husserl, E. Cartesian Meditations; Springer: Dordrecht, The Netherlands, 1970.

- Kadir, L.A.; Stacey, M.; Barrett-Jolley, R. Emerging roles of the membrane potential: Action beyond the action potential. Front. Physiol. 2018, 9, 1661.

- Hörner, M.; Weber, W. Molecular switches in animal cells. FEBS Lett. 2012, 586, 2084–2096.

- Rosen, R. Fundamentals of Measurement and Representation of Natural Systems; Elsevier North-Holland, Inc.: New York, NY, USA, 1978.

- Wheeler, J.A. Information, physics, quantum: The search for links. In Proceedings of the 3rd International Symposium on Foundations of Quantum Mechanics, Tokyo, Japan, 28–31 August 1989; Hey, A.J.G., Ed.; (Reproduced in Feynman and Computation). Perseus Books: Cambridge, UK, 1999; pp. 309–336, ISBN 0-7382-0057-3.

- Shannon, C.E.; Weaver, W. The Mathematical Theory of Communication; University of Illinois Press: Champaign, IL, USA, 1949.

- Fish, W. Philosophy of Perception: A Contemporary Introduction, 2nd ed., Kindle ed.; Routledge: London, UK, 2021.

- Yuille, A.; Bülthoff, H. Bayesian decision theory and psychophysics. In Perception as Bayesian Inference; Knill, D., Richards, W., Eds.; Cambridge University Press: Cambridge, UK, 1996.

- Geisler, W.S.; Diehl, R.L. A Bayesian approach to the evolution of perceptual and cognitive systems. Cogn. Sci. 2003, 27, 379–402.

- Lehar, S. Gestalt isomorphism and the primacy of subjective conscious experience: A Gestalt Bubble model. Behav. Brain Sci. 2003, 26, 375–444.

- Hoffman, D.D. The interface theory of perception. In Object Categorization: Computer and Human Vision Perspectives; Dickinson, S., Tarr, M., Leonardis, A., Schiele, B., Eds.; Cambridge University Press: Cambridge, UK, 2009.

- Hoffman, D.D.; Singh, M.; Prakash, C. The Interface Theory of Perception. Psychon. Bull. Rev. 2015, 22, 1480–1506.

- Mark, J.T.; Marion, B.B.; Hoffman, D.D. Natural selection and veridical perceptions. J. Theor. Biol. 2010, 266, 504–515.

- Saussure, F. Course in General Linguistics, Kindle ed.; Harris, R., Translator; Bloomsbury Publishing: London, UK, 2013.

- Culler, J. Saussure; Fontana Press: London, UK, 1976.

- Chalmers, D. Facing up to the problem of consciousness. J. Conscious. Stud. 1995, 2, 200–219.

- Chalmers, D. Debunking Arguments for Illusionism. J. Conscious. Stud. 2020, 27, 258–281.

- Nagel, T. What is it like to be a bat? Philos. Rev. 1974, 83, 435–450.

- Smith, E.; Morowitz, H.J. The Origin and Nature of Life on Earth: The Emergence of the Fourth Geosphere; Cambridge University Press: New York, NY, USA, 2016.

- Schrum, J.P.; Zhu, T.F.; Szostak, J.W. The origins of cellular life. Cold Spring Harb. Perspect. Biol. 2010, 2, a002212.

- Matsuno, K. From Quantum Measurement to Biology via Retrocausality. Prog. Biophys. Mol. Biol. 2017, 131, 131–140.

- Dai, S.; Xie, Z.; Wang, B.; Ye, R.; Ou, X.; Wang, C.; Tian, B. An inorganic mineral-based protocell with prebiotic radiation fitness. Nat. Commun. 2023, 14, 7699.

- Loewenstein, W.R. The Touchstone of Life: Molecular Information, Cell Communication, and the Foundations of Life; Oxford University Press: Oxford, UK, 1999.

- Bitbol, M.; Luisi, P.L. Autopoiesis with or without cognition: Defining life at its edge. J. R. Soc. Interface 2004, 1, 99–107.

- Bourgine, P.; Stewart, J. Autopoiesis and cognition. Artif. Life 2004, 10, 327–345.

- Webre, D.J.; Wolanin, P.M.; Stock, J.B. Bacterial chemotaxis. Curr. Biol. 2003, 13, R47–R49.

- Peirce, C. The Essential Peirce, Volume 2 (1893–1913): Selected Philosophical Writings, Kindle ed.; Indiana University Press: Bloomington, IN, USA, 1998.

- Brier, S. How Peircean semiotic philosophy connects Western science with Eastern emptiness ontology. Prog. Biophys. Mol. Biol. 2017, 131, 377–386.

- Champagne, M. Consciousness and the Philosophy of Signs: How Peircean Semiotics Combines Phenomenal Qualia and Practical Effects; Springer: Cham, Switzerland, 2018.

- Wigner, E.P. Remarks on the Mind-Body Question. In The Scientist Speculates: An Anthology of Partly-Baked Ideas; Good, I.J., Ed.; Heinemann: London, UK, 1961.

- Esfeld, M. Wigner’s view of physical reality. Stud. Hist. Philos. Sci. Part B Stud. Hist. Philos. Mod. Phys. 1999, 30, 145–154.

- Zurek, W.H. Decoherence q-c transition. Los Alamos Sci. 2002, 27, 2–25. Available online: https://arxiv.org/ftp/quant-ph/papers/0306/0306072.pdf (accessed on 31 June 2024).

- Carpenter, R.H.S.; Anderson, A.J. The death of Schrodinger’s cat and of consciousness-based quantum wave-function collapse. Ann. Fond. Louis de Broglie 2006, 31, 45–52.

| | Internal (Cognizers within a System) | External (External Observers) |
|---|---|---|
| Probability | Pcog, Poverall | Pcog, Poverall |
| Entropy | Hcog, Hoverall | Hcog, Hoverall |
| Amount of Information | Icog, Ioverall | Icog, Ioverall |

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nakajima, T. Unification of Mind and Matter through Hierarchical Extension of Cognition: A New Framework for Adaptation of Living Systems. Entropy 2024, 26, 660. https://doi.org/10.3390/e26080660