Abstract

A weak second-order numerical method for generating trajectories based on stochastic differential equations (SDE) is developed. The proposed approach bypasses direct noise realization by updating the system’s state using independent Gaussian random variables so as to reproduce the first three cumulants of the state variable at each time step to the second order in the time-step size. The update rule for the state variable is derived based on the system’s Fokker–Planck equation in an arbitrary number of dimensions. The high accuracy of the method as compared to the standard Milstein algorithm is demonstrated on the example of Büttiker’s ratchet. While the method is second-order accurate in the time step, it can be extended to systematically generate higher-order terms of the stochastic Taylor expansion approximating the solution of the SDE.

1. Introduction

Stochastic differential equations (SDEs) are an essential tool for research and modelling across a wide range of disciplines, from physics to biology, climatology, and finance [1,2,3]. The standard schemes to solve a SDE are based on the stochastic Taylor expansion [4,5,6,7,8,9] illustrated here on a one-dimensional Langevin equation (LE):

in which and are known functions of the state variable z, the overdot indicates the time derivative, and the noise is a random function of time. To derive the update rule for the state variable from the current time t to the next time , an integration of Equation (1) is first performed:

over an interval not exceeding the time step intended to be used in the numerical solution of (1). Within the integral (2), the functions and are Taylor-expanded around the initial point to a desired order, and the original Equation (2) is then back-substituted into this expansion. Finally, the state variable in the integral is replaced with its initial value , and the upper integration limit is set to . As a result, one obtains the next-step value in terms of the initial value . In the lowest order, one recovers the Milstein algorithm [10], a standard method of solving SDEs, whose various extensions and refinements exist [8,11,12,13,14,15].

The so-obtained numerical scheme is a strong one, i.e., it reproduces the stochastic trajectory for each specific noise function . For most practical purposes, however, the explicit stochastic trajectory corresponding to a particular noise realization is usually unnecessary. Instead, one typically aims to generate multiple trajectories that are statistically consistent with the SDE, allowing for the computation of meaningful quantities, such as average values and correlation functions of various types. This statistical approach, also known as a weak integration scheme of the SDE (1), is equally valuable as the strong one.

Building upon this perspective, alternative methods were introduced [16,17], in which the system’s state at the next time step is treated as a random variable sampled from the conditional probability density at time given the initial state at time t. Due to the smallness of , efficient approximations for the function can be developed.

As a matter of fact, the full conditional probability density is not necessary for the generation of stochastic trajectories; knowing only its first few moments suffices to develop an effective numerical procedure for trajectory generation [18]. This principle was applied in a recent study [19], where the Langevin Equation (1) driven by Gaussian white noise was analyzed for a one-dimensional system.

The present work generalizes the weak second-order scheme from [19] to the white noise-driven LE in arbitrary dimensionality N. The significance of the method developed here lies in its capacity to systematically generate the terms in the weakly convergent stochastic Taylor expansion. In this respect, it plays a role analogous to the stochastic Taylor expansion formulated by Kloeden and Platen for strongly convergent schemes [4]. Notably, the Milstein algorithm emerges as the first-order truncation of either expansion in : the strong Taylor expansion of Kloeden–Platen or the weak one presented in this work. The formulation developed here can serve as a benchmark framework for assessing the accuracy of integration schemes not explicitly derived from stochastic Taylor expansions, such as midpoint-type algorithms, leapfrog methods, predictor–corrector schemes, or stochastic Runge–Kutta methods.

This paper is structured as follows. In the next section, the main result of this work is formulated, which is a recipe to generate the system’s state at the time given the initial state at the earlier simulation time step t for a multidimensional version of the LE (1) with Gaussian white noises. The subsequent section focuses on the derivation of this update rule. An illustrative example, Büttiker ratchet driven by a spatially periodic temperature profile, is then provided and analyzed using the method developed in this paper and with the help of the classical Milstein scheme. Finally, a possible systematic improvement of the method is briefly discussed and a few concluding remarks are made.

2. Numerical Generation of Stochastic Trajectories

Consider a system whose state, represented by a collection of variables z = , , , evolves in time according to the N-dimensional LE:

in which the functions and are real-valued and differentiable at least three times, the matrix is non-degenerate for all values of the state variable z, the independent noises are unbiased, Gaussian, and white, the noise term is interpreted in the Stratonovich sense, and the summation is implied for repeated indices.

Suppose that the system was in a state at time t and entered a state

at the next time . The central result of this paper is the propagation rule :

where are independent Gaussian random variables drawn from the probability density

and the coefficients are given by

For notational brevity the argument z is suppressed in the right-hand sides, where and

are likewise functions of the initial state z. Partial differentiation is represented by a comma, e.g., , and square brackets around subscripts indicate antisymmetrization, whereas the round brackets will signify symmetrization. Namely, for any object that depends on k indices , its symmetrized and antisymmetrized versions are

where summation is performed over all permutations of the subscripts , and equals 1 or for even and odd permutations, respectively. We, furthermore, adopt the convention that the dummy indices inside the brackets are not to be symmetrized over; hence, placement of the brackets is important. For example,

is, in general, not equal to

unless is symmetric in the first three subscripts.

3. Derivation of the Propagation Rule

The idea of the derivation is to choose the coefficients , , and in Equation (5) so as to correctly reproduce the first three moments , , and of the displacements . The moments can be obtained from the characteristic function

by differentiation, e.g., , etc. To ensure the existence of , the parameters are purely imaginary.

Alternatively, we may focus on the first three cumulants, defined as the derivatives of the characteristic function’s natural logarithm [2],

The first three cumulants happen to coincide with the respective central moments and are expressed in terms of the unknown coefficients in Equation (5) as

where the round brackets indicate symmetrization with respect to the free indices, but not the dummy ones. The derivation details of Equation (14) are provided in Appendix A. The extra symmetrization brackets are placed around the indices i and j in the first term of the last expression in order to emphasize that it is only the symmetric part of that contributes to the third cumulant .

To obtain the cumulants (13) based on the initial state variable z, we resort to the Fokker–Planck equation (FPE) for the transition probability density from the state at time to the state z at time t [2]:

with the initial condition

The formal solution of the FPE reads

Replacing the earlier time with t and the later time with , we obtain the expectation value of an arbitrary state function at given that, at time t, the system was in the state z:

Here, the adjoint Fokker–Planck operator is defined by

and the functions and are introduced in Equation (8).

With , we obtain the characteristic function (12)

Its natural logarithm is written with the help of the Taylor series

Specifically, to the second order in

Here, the terms that multiply and were grouped together, and then the identity

was used together with the “product rule” valid for arbitrary state functions and :

Differentiating Equation (22), we obtain the first three cumulants:

The first line immediately gives an expression for the coefficient from Equation (5) according to the first Equation (14). We now need to solve the remaining two Equations in (14) with the cumulants and from Equation (25) to find the coefficients and . We look for them in the form of expansions in the time step:

Substitution of these expansions into Equation (14) gives the second and the third cumulants in terms of the yet-unknown coefficients , , and :

Comparing the term proportional to in with the second Equation (25), we find that . Since this equality must hold for an arbitrary function , we can identify

Next, we go on to the calculation of based on the third cumulant in Equation (25), which is rewritten as

Here, we used the fact that and .

Due to the symmetrization, we can interchange the indices n and m in Equation (29). Further, we interchange the dummy indices j and k and write

A comparison of Equation (31) with the arithmetic average of Equations (29) and (30) allows one to identify

as stated in the third Equation (7) and Equation (8).

We substitute the expressions for and into the first Equation (27) and compare the terms that multiply with the respective terms in the second Equation (25). Based on the product rule (24), we can write

where the previously obtained expression for is used in the last equality. The first term in the brackets of the second expression (25) is . Hence,

To deal with the difference of the ’s in the second line, we express these coefficients as a sum of symmetric and antisymmetric parts, , and note that . Then, the second line in Equation (34) is just

The symmetrization brackets are placed around the subscripts in the last step to emphasize that the expression obtained is symmetric with respect to the free indices n and m, as is obvious from the right-hand side of Equation (35). Substitution of this result into Equation (34) finally gives

thereby completing the derivation of the coefficients (7) in the propagation rule (5).

4. Case Study: Transport Induced by Periodic Spatial Modulation of Temperature

Ratchet effect refers to transport in a noisy system, whose parameters are periodically modulated around the average values in such a way that transport in the absence of this modulation is impossible [20]. Usually, the parameter modulation occurs in time [20]; however, the above definition may as well be applied to the situations in which the modulation happens in space, as demonstrated by Büttiker [21] in the earliest example of a ratchet effect induced by spatial modulation of temperature.

To evaluate the improvements introduced by the present scheme over the first-order methods, we compare it with the Milstein algorithm, which arises as the leading-order truncation of our formulation. In particular, we wish to examine the role of higher-order contributions—specifically, the terms of order and —that are absent in the Milstein method. As a testing ground, we choose the Büttiker ratchet system, which permits comparison of not only the static properties via the equilibrium probability distribution, but also the dynamical features, captured by the mean particle velocity.

Let us consider the Büttiker’s ratchet model [21] of an overdamped Brownian particle in a periodic potential with periodicity a in a non-uniform temperature field with the same spatial periodicity as the potential. The LE reads

where is the damping coefficient and is the position-dependent temperature. For definiteness, we assume the potential and the temperature to be given by

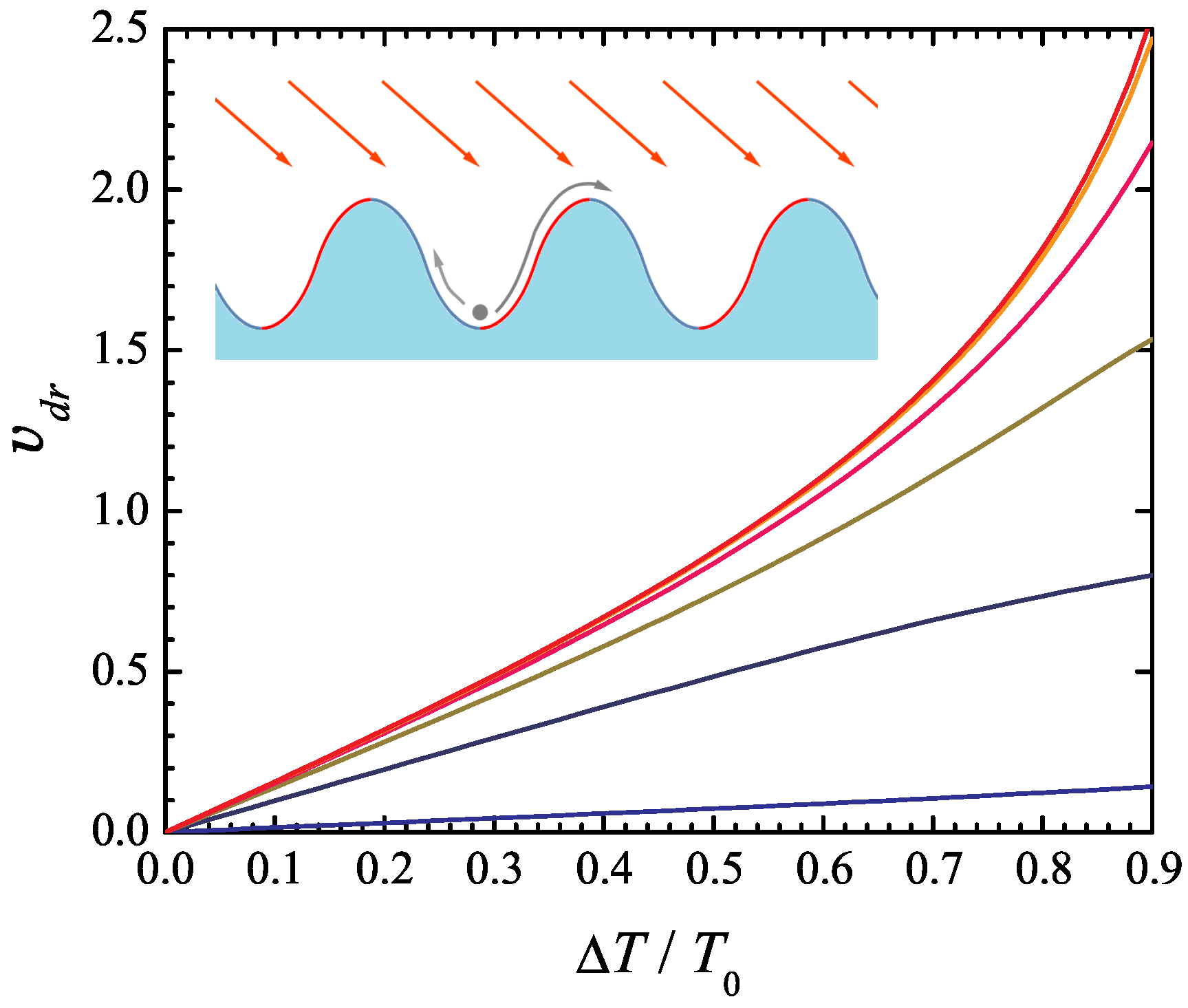

where is the potential corrugation depth, is the average temperature, and is the temperature modulation amplitude. A simple way of thinking about this model is to consider a particle in a gravity field moving in a periodic terrain, see inset in Figure 1. When light is incident on this landscape at an angle, it induces a non-uniform heating effect, with the illuminated regions becoming hotter than the shaded areas.

Figure 1.

Drift velocity of an overdamped Brownian particle in Büttiker’s ratchet model (37), (38) as a function of the temperature modulation amplitude normalized to the average temperature . The model parameters , a, and are set to 1. The six curves represent different values of 20, 5, 2, 1, 0.5, and 0.2 (from top to bottom). The inset illustrates the physical interpretation of the system, where a periodic potential and spatial temperature variations induce directed transport.

In the absence of spatial temperature variations, the model does not exhibit net motion. Likewise, periodic temperature variations alone, without a corresponding periodic potential, do not induce a net drift. However, when both the temperature and potential vary periodically in space and are phase-shifted relative to each other, the probabilities of a particle transitioning from one potential well to an adjacent one—either to the left or right—are generally unequal. Specifically, the probability of transition over the “hotter” side of the potential well is greater than that over the “colder” side. This asymmetry leads to a net transport of the particle. It has recently been suggested [22] that this effect can drive a semiconductor thermoelectric generator.

An analytical expression for the drift velocity can be developed following the treatment of Ref. [21]. Namely, the FPE for the probability density to find the particle near the position x at time t,

has the form of a continuity equation, , where is the probability current. We look for the stationary solution of the FPE (39) that respects the periodic boundary conditions and is normalized to 1 within one period:

The probability current is constant and equals

By solving Equation (41) with the periodicity conditions (38), we first express in terms of the probability current as

Imposing the normalization condition (40) and noting that the probability current is related to the drift velocity of the particle by , we obtain the drift velocity as [21]

In the numerical simulations, the parameters a, , and are set to 1, thereby fixing the units of length, energy, and time. The drift velocity (43) vs. the ratio of the temperature modulation amplitude to the average temperature is shown in Figure 1 for , and 0.2 (from top to bottom). As might be expected, the drift velocity increases with the temperature modulation amplitude, as well as with the average temperature at a fixed ratio . Somewhat less obvious is the fact that the drift velocity vs. curve becomes less sensitive to the average temperature with increasing its value. Indeed, the curves vs. obtained for and differ very little; further increase in above the value 20 does not result in its noticeable change.

The stochastic trajectories of the Brownian particle (38) were simulated according to the algorithm from Section 2, which, in the one-dimensional case, simplifies to

If only the first-order term is kept in the expression for a and only the term of the order of is kept in the expression for b, the scheme (44) becomes identical with the standard Milstein method [10].

The simulations were performed according to the algorithm (44) and following the Milstein scheme at several average temperatures , and 5. For all values of , the temperature amplitude was kept at . To determine the drift velocity from the simulations, the particle trajectory was generated over a long time with the initial condition . The statistical uncertainty of the simulation results was below 1% in all cases.

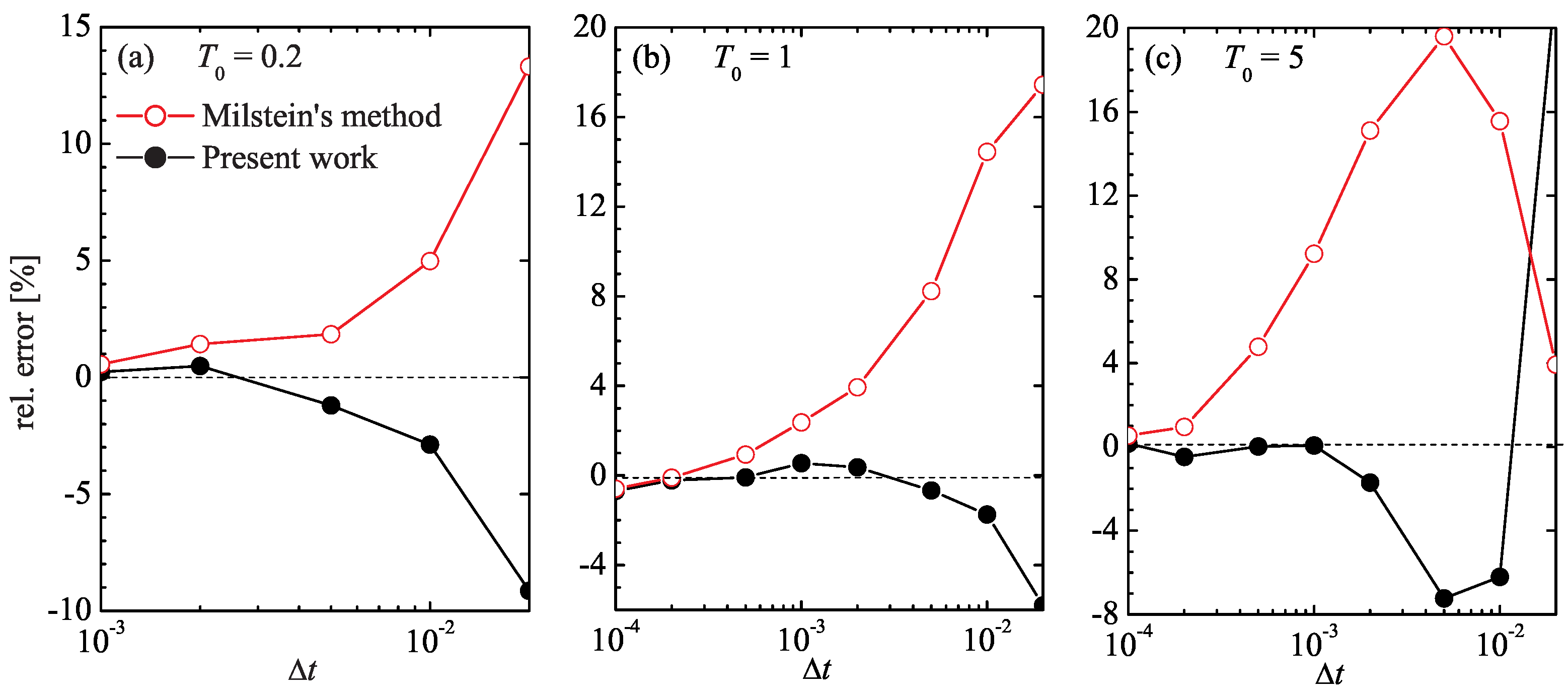

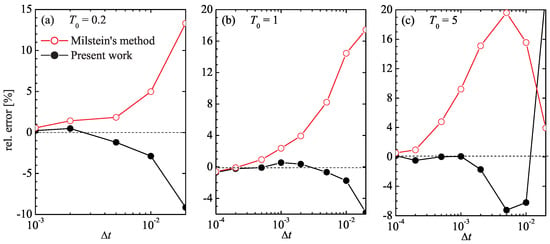

Shown in Figure 2 is the relative deviation of the average velocity from the exact value (43), , for both simulation algorithms at different time-step values . It is seen that, at low average temperature , both schemes exhibit about the same accuracy, even though the Milstein scheme overestimates the drift velocity, while the algorithm (43) underestimates it by a slightly smaller amount; for example, at , the error of the Milstein method is close to 5%, while the scheme (43) has an error of about 3%.

Figure 2.

Relative error, , of the drift velocity obtained from the numerical simulation of Equation (37) using the Milstein method (red open circles) and the method of the present work (black filled circles) for different values of the simulation time step .

The discrepancy of the two methods becomes more evident at higher temperatures. At , the scheme (43) achieves a 1% accuracy at , whereas the Milstein approach requires . Likewise, at , the scheme (43) achieves this accuracy at , whereas the Milstein procedure requires a time step ten times as small.

The similarity in the mean velocity calculation by the two methods at low temperature, Figure 2a, does not necessarily imply that they are equally accurate in this regime. Indeed, in the deterministic limit , our scheme (43) reduces to a second-order Taylor expansion of with velocity and acceleration , whereas the Milstein scheme only contains the velocity term. Nevertheless, although the Milstein scheme is only first-order accurate, it still correctly yields the zero drift velocity at zero temperature . One can expect that at low but finite temperatures, even a rudimentary method can capture the near-zero drift velocity with seemingly good accuracy. To properly differentiate the performance of integration schemes in this regime, one would need to examine a quantity more sensitive than .

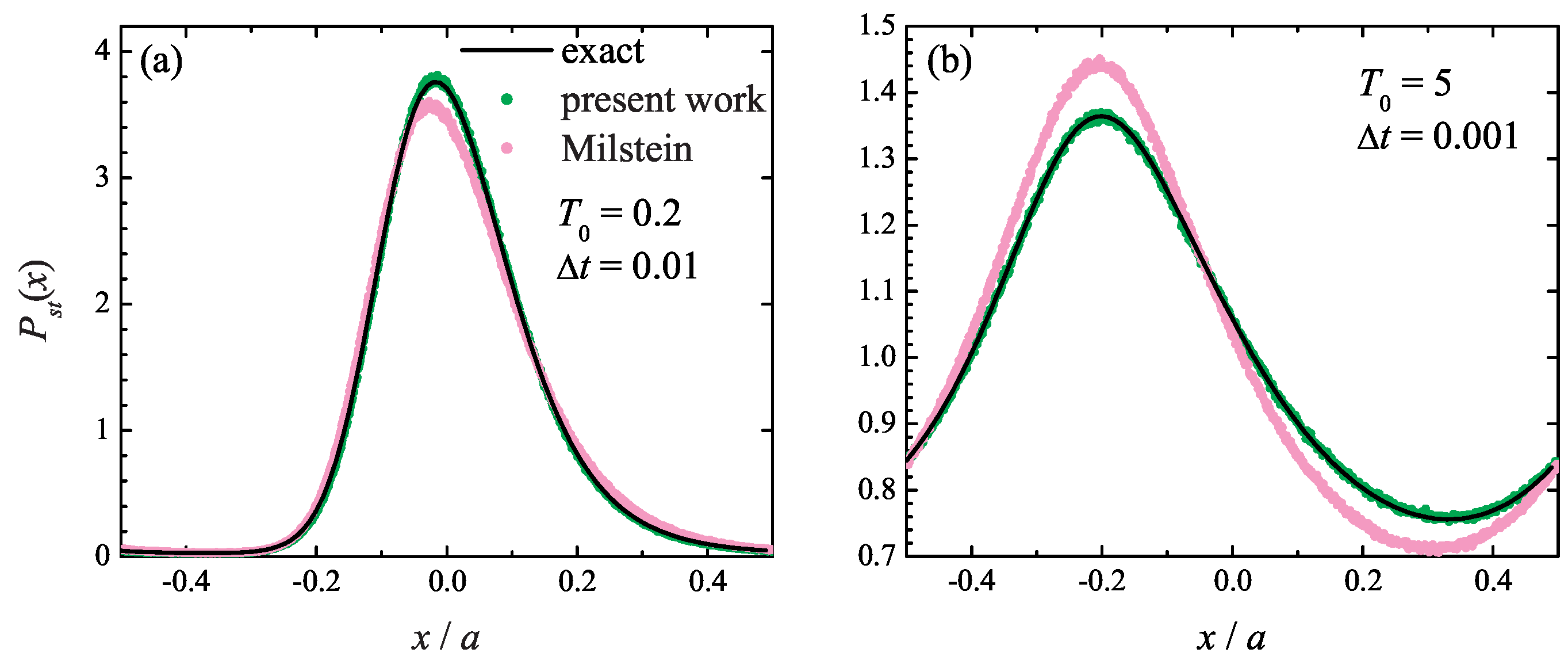

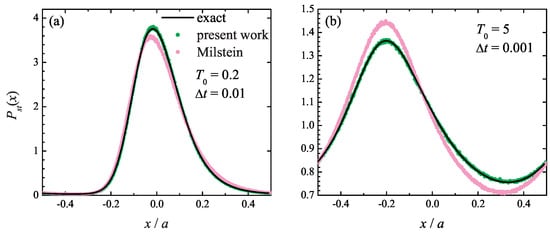

Such a quantity may be the equilibrium probability distribution itself, given by Equation (42). It is shown in Figure 3 at (a) and (b) , where the exact distribution (42) is compared with the one found from the simulations based on the present method (5)–(7) and the Milstein algorithm. It is seen that the Milstein integration method yields quantitatively inaccurate steady-state probability distribution at both temperatures, even though its estimate of the drift velocity is close to the correct value. At the same time, the numerical results obtained with the present method (5)–(7) are in excellent agreement with the theoretical curve, which highlights the importance of the higher-order terms in the stochastic Taylor expansion (5).

Figure 3.

Particle steady-state periodic probability distribution in the Büttiker ratchet model at at (a) and integration time step and (b) and . Solid line: exact curve (44); green symbols: simulation results obtained with the present method; magenta symbols: simulation results using the Milstein scheme.

5. Concluding Remarks

A numerical method is worked out for generating stochastic trajectories that preserve the cumulants of the state variable up to the second order in the time step. The derivation presented here applies to the white noise-driven systems of arbitrary dimensionality N. The accuracy of this approach in computing observables is comparable to the accuracy of the second-order stochastic Taylor expansion-based methods [4,5]. The advantage of the present approach lies in the fact that it reduces computational complexity, because it requires a single set of N-independent Gaussian random variables. In contrast, the stochastic Taylor expansion-based methods require random numbers of the order with specific correlations among them (see, e.g., Equations (10)–(15) of Ref. [8]). Their generation is a non-trivial task, especially at large N.

In terms of the idea used in the derivation, the closest multidimensional algorithm published in the literature is by Cao and Pope (abbreviated as CP; see Section 2.4 of [18]), as both methods provide a second-order weak integration scheme for the Langevin Equation (3), and both are based on matching the average properties of the updated system’s state using the associated Fokker–Planck Equation (15). While the conceptual foundation is similar, there are several important distinctions between the present algorithm and the CP method. First, the CP algorithm is a midpoint scheme: it requires one to evaluate the state variable at time before computing the full time-step update . In contrast, the numerical scheme (5)–(7) performs this time-step propagation directly. Second, the CP scheme uses three N-dimensional sets of uncorrelated Gaussian random variables; the present method requires only one such set. Finally, the CP scheme is formulated for the special case in which the noise-coupling matrix reduces to a scalar function common to all components of the state vector z, whereas the scheme developed here allows for a fully general, position-dependent noise matrix.

To improve the performance speed of the scheme (5), one may be tempted to replace Gaussian random numbers with a different type of random numbers that can be generated more quickly [8,23]. However, there is strong evidence [24] that using non-Gaussian random variables can worsen the accuracy of the method. Indeed, the properties of the Gaussian numbers were explicitly used in deriving the cumulant expressions (14); attempting to replace with non-Gaussian random variables may require a major modification of the derivation of the propagation rule (5), and thus to a major modification of this rule itself.

If the terms of order higher than are neglected in the scheme (5)–(7), it reduces to the general multidimensional form of the Milstein method [10]. For this reason, the update rule (5)–(7) may be regarded as a second-order explicit weak Milstein method. It is logical to focus the future research on exploring the higher-order corrections to the scheme (5), (7). When doing this, adding terms of the higher order in to the coefficient , , and may not necessarily lead to better accuracy of the algorithm. The reason is that increasing the order of the algorithm results in the emergence of the higher-order cumulants, as can be shown based on Equations (20) and (21). In particular, the leading term in the fourth cumulant is of the order of ; hence, if one wishes to extend the order of the scheme (5), (7) to , one would need to impose an extra condition that the fourth cumulant is correctly reproduced by the updated state variable . This, in turn, implies that an extra term should be added to the stochastic Taylor expansion (5) with the unknown parameters . Thus, going to the higher order in will result in higher computational complexity, but may potentially be beneficial for the accuracy of the method.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

I am grateful to ACEnet for providing computational resources.

Conflicts of Interest

The author declares no conflicts of interest.

Appendix A. Properties of Gaussian Random Numbers

To derive Equation (14), Equation (5) was used together with the average value of the product of Gaussian random variables (6):

see, e.g., Section 2.3.3 of Ref. [2]. The last line represents a sum over all different combinations of Kronecker’s deltas; for example, .

More generally, the mean value of the product

is a sum of different products of Kronecker deltas with all combinations of the pairs of indices from , , excluding the ones that contain the deltas found in the brackets, i.e., , …, . This can be proven by induction. Indeed, this statement is obviously true for and arbitrary k, as

Assuming that it holds for some n and arbitrary k, we write the expectation value for as a difference:

By the inductive assumption, the first average represents the sum of products of deltas , , …, , from which the ones that contain , …, are excluded. The second term represents the subset of products of deltas found in the first average that contain . The difference between the two averages is, therefore, the sum of products of all deltas, from which , …, are excluded.

With these properties, we immediately obtain the first cumulant in Equation (14). The second cumulant is

In the last line, can be replaced with just , because . The third cumulant in Equation (14) is derived in a similar manner using the identities

References

- Coffey, W.T.; Kalmykov, Y.P. Langevin Equation: With Applications to Stochastic Problems in Physics, Chemistry, and Electrical Engineering; World Scientific: Singapore, 2012. [Google Scholar] [CrossRef]

- Risken, H. Fokker-Planck Equation; Springer: Berlin/Heidelberg, Germany, 1996. [Google Scholar] [CrossRef]

- Øksendal, B. Stochastic Differential Equations; Springer: Berlin/Heidelberg, Germany, 2003. [Google Scholar] [CrossRef]

- Kloeden, P.; Platen, E. Numerical Solution of Stochastic Differential Equations; Springer: Berlin/Heidelberg, Germany, 1995. [Google Scholar] [CrossRef]

- Milstein, G. Numerical Integration of Stochastic Differential Equations; Springer: Berlin/Heidelberg, Germany, 1995. [Google Scholar] [CrossRef]

- Platen, E. An introduction to numerical methods for stochastic differential equations. Acta Numer. 1999, 8, 197–246. [Google Scholar] [CrossRef]

- Greiner, A.; Strittmatter, W.; Honerkamp, J. Numerical integration of stochastic differential equations. J. Stat. Phys. 1988, 51, 95–108. [Google Scholar] [CrossRef]

- Qiang, J.; Habib, S. Second-order stochastic leapfrog algorithm for multiplicative noise Brownian motion. Phys. Rev. E 2000, 62, 7430–7437. [Google Scholar] [CrossRef] [PubMed]

- Duan, W.L.; Fang, H.; Zeng, C. Second-order algorithm for simulating stochastic differential equations with white noises. Phys. A 2019, 525, 491–497. [Google Scholar] [CrossRef]

- Mil’shtejn, G. Approximate integration of stochastic differential equations. Theory Probab. Its Appl. 1975, 19, 557–562. [Google Scholar] [CrossRef]

- Sotiropoulosa, V.; Kaznessis, Y.N. An adaptive time step scheme for a system of stochastic differential equations with multiple multiplicative noise: Chemical Langevin equation, a proof of concept. J. Chem. Phys. 2008, 128, 014103. [Google Scholar] [CrossRef] [PubMed]

- Yin, Z.; Gan, S. An improved Milstein method for stiff stochastic differential equations. Adv. Differ. Equ. 2015, 369. [Google Scholar] [CrossRef]

- Jentzen, A.; Röckner, M. A Milstein scheme for SPDEs. Found. Comput. Math. 2015, 15, 313–362. [Google Scholar] [CrossRef]

- Tripura, T.; Hazra, B.; Chakraborty, S. Novel Girsanov correction based Milstein schemes for analysis of nonlinear multi-dimensional stochastic dynamical systems. Appl. Math. Model. 2023, 122, 350–372. [Google Scholar] [CrossRef]

- Wu, X.; Yan, Y. Milstein scheme for a stochastic semilinear subdiffusion equation driven by fractionally integrated multiplicative noise. Fractal Fract. 2025, 9, 314. [Google Scholar] [CrossRef]

- Pechenik, L.; Levine, H. Interfacial velocity corrections due to multiplicative noise. Phys. Rev. E 1999, 59, 3893–3900. [Google Scholar] [CrossRef]

- Dornic, I.; Chaté, H.; Muñoz, M.A. Integration of Langevin equations with multiplicative noise and the viability of field theories for absorbing phase transitions. Phys. Rev. Lett. 2005, 94, 100601. [Google Scholar] [CrossRef] [PubMed]

- Cao, R.; Pope, S.B. Numerical integration of stochastic differential equations: Weak second-order mid-point scheme for application in the composition PDF method. J. Comput. Phys. 2003, 185, 194–212. [Google Scholar] [CrossRef]

- Evstigneev, M.; Kacmazer, D. Fast and accurate numerical integration of the Langevin equation with multiplicative Gaussian white noise. Entropy 2025, 26, 879. [Google Scholar] [CrossRef] [PubMed]

- Reimann, P. Brownian motors: Noisy transport far from equilibrium. Phys. Rep. 2002, 361, 57–265. [Google Scholar] [CrossRef]

- Büttiker, M. Transport as a consequence of state-dependent diffusion. Z. Phys. B Condens. Matter 1987, 68, 161–167. [Google Scholar] [CrossRef]

- Kompatscher, A.; Kemerink, M. On the concept of an effective temperature Seebeck ratchet. Appl. Phys. Lett. 2021, 119, 023303. [Google Scholar] [CrossRef]

- Dünweg, B.; Paul, W. Brownian dynamics simulations without Gaussian random numbers. Int. J. Mod. Phys. C 1991, 2, 817–827. [Google Scholar] [CrossRef]

- Grønbech-Jensen, N. On the application of non-Gaussian noise in stochastic Langevin simulations. J. Stat. Phys. 2023, 190, 96. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).