Computer Aided Diagnosis of Melanoma Using Deep Neural Networks and Game Theory: Application on Dermoscopic Images of Skin Lesions

Abstract

1. Introduction

2. Results

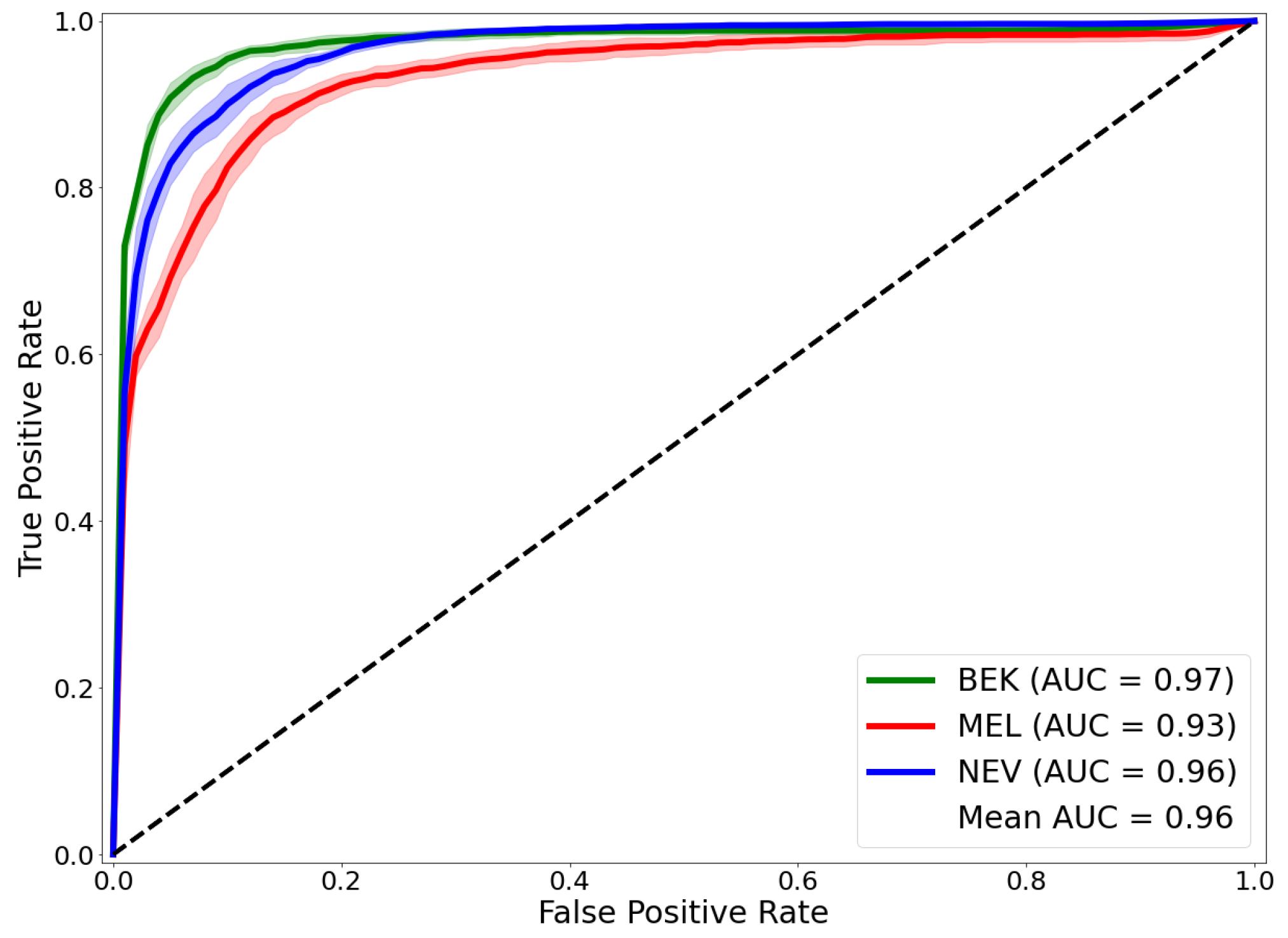

2.1. Results of Our Approach on the Test Dataset

2.2. Comparing Our Approach with Prior Works

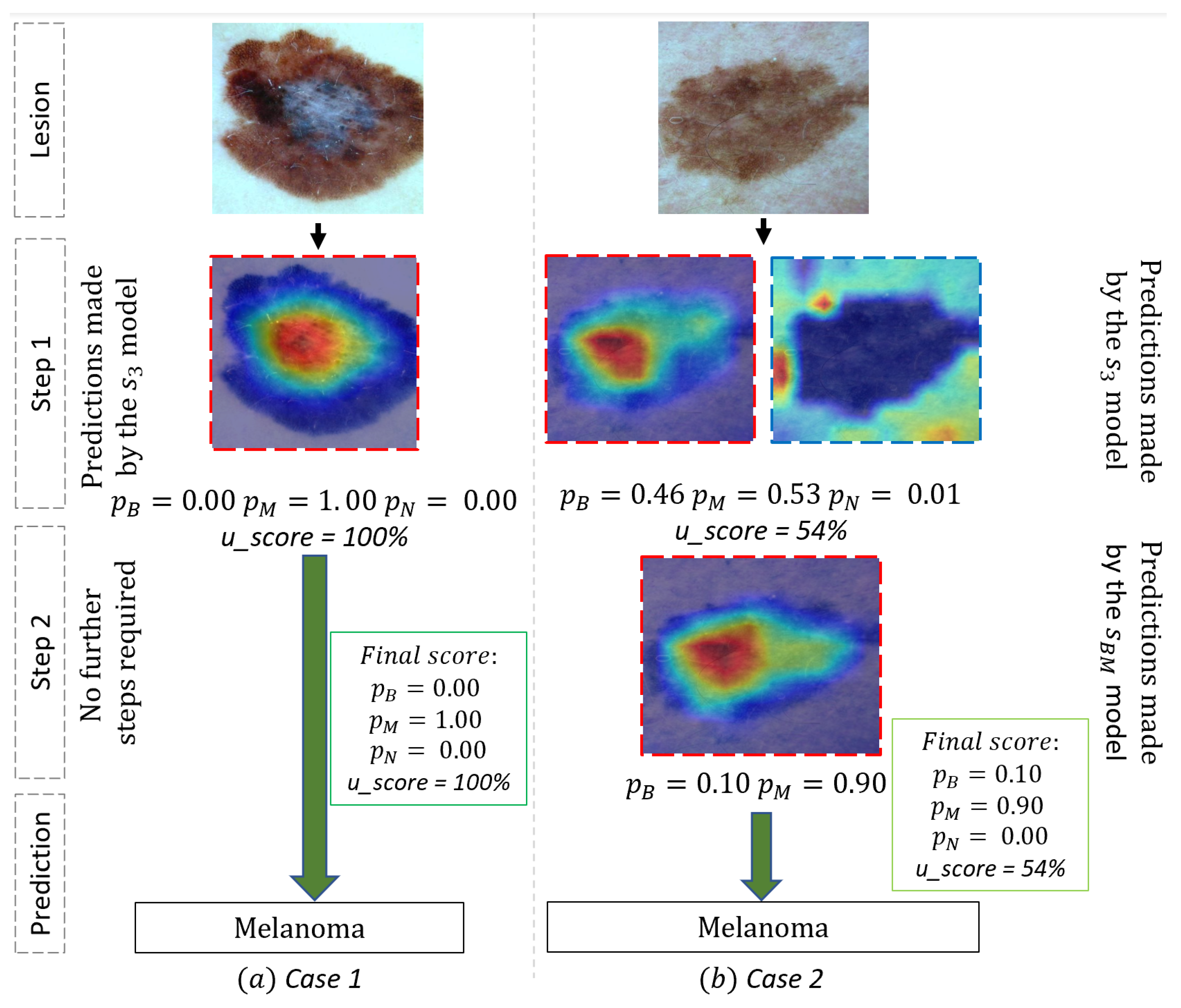

2.3. Use Case and Performance Analysis

2.4. Performance of the Individual Models Used in Our Framework

2.5. Ablation Study: Choice of the Best Hyper-Parameters and

3. Discussion

4. Methods and Materials

4.1. Convolutional Neural Network

4.2. Game Theory

4.3. Class Activation Map

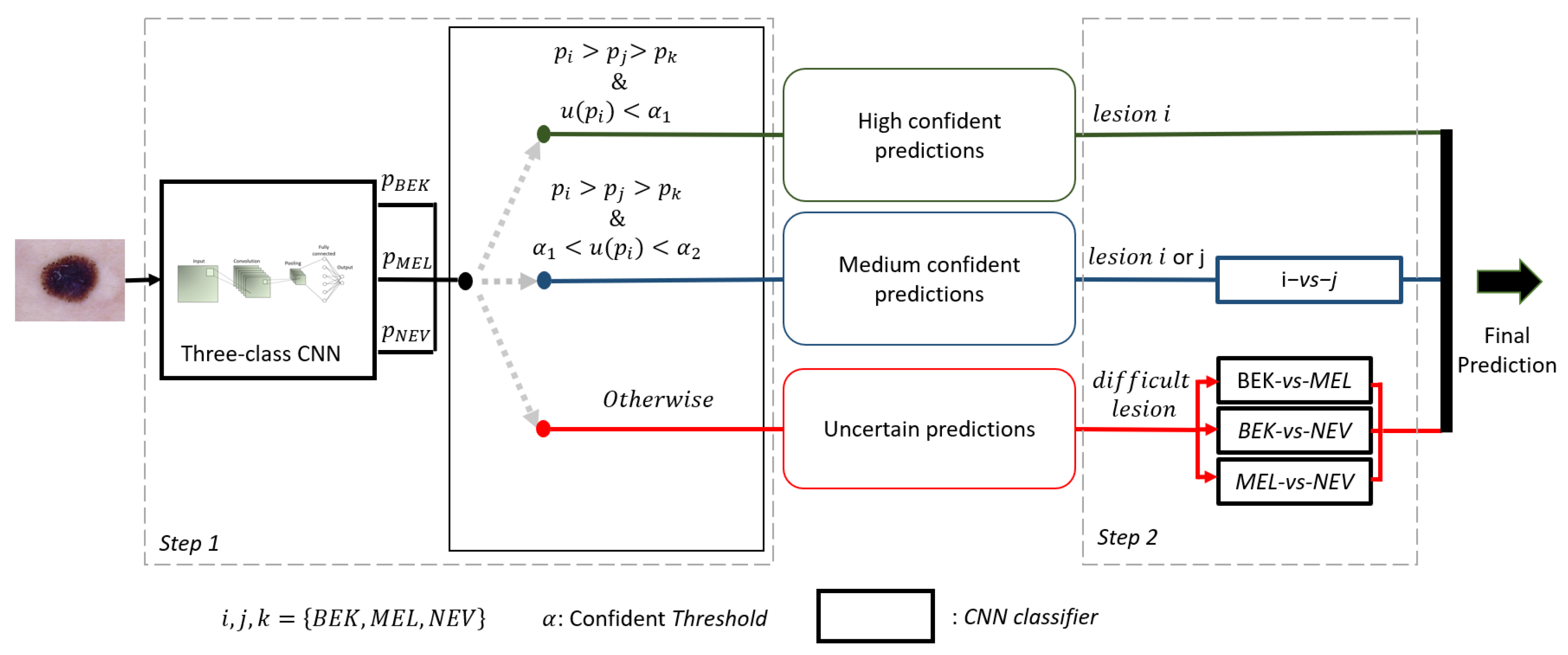

4.4. Description of Our Framework

Algorithm 1. Pseudo-code of our framework.

Require: image x; three one-vs-all CNNs, each specialized to one class; one 3-class CNN; three pairwise CNNs; list of the three classes.

Ensure: predicted class of x.

1. Generate, with the 3-class CNN, the prediction probability of the class i associated with x.
2. Generate, with the model specialized to class i, the probability that x belongs to class i.
3. Estimate the confidence level of the prediction with the framework's confidence equation.
4. If the confidence level satisfies the certainty condition, categorize the prediction as certain: x belongs to class i.
5. Else, if both medium-confidence conditions hold, categorize the prediction as medium confident:
   - generate the prediction probabilities of classes i and j with the corresponding pairwise CNN;
   - if this model favors class i, x belongs to class i; otherwise, x belongs to class j.
6. Otherwise, categorize the prediction as uncertain:
   - generate the prediction probabilities of all three pairwise CNNs;
   - x belongs to the class obtained by applying the Max-Win rule to the three pairwise CNNs.
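Algorithm 1 is a three-tier cascade: accept the 3-class prediction when confidence is high, arbitrate between the top two classes with one pairwise CNN when confidence is medium, and fall back to a vote of all pairwise CNNs otherwise. The confidence equation and the exact threshold conditions did not survive extraction, so the Python sketch below only illustrates the control flow under loudly stated assumptions (none of which come from the paper): models are plain callables; the confidence level is the mean of the 3-class probability and the specialist probability; j is the runner-up class of the 3-class CNN; `t_low`/`t_high` are arbitrary illustrative thresholds; and ties in the vote are broken by summed pairwise probabilities. The vote follows the max-wins scheme of Friedman [52]: each pairwise model casts one vote for the class it prefers. With three classes a three-way tie (each class winning one duel) is possible, hence the tie-breaker. All names (`predict`, `max_win`, `specialists`, ...) are hypothetical.

```python
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical interfaces: each "CNN" is any callable that takes an image x.
ThreeClassCNN = Callable[[object], Sequence[float]]  # one probability per class
SpecialistCNN = Callable[[object], float]            # P(x belongs to its class)
PairwiseCNN = Callable[[object], float]              # key (a, b): P(x is a rather than b)


def max_win(x, pairwise: Dict[Tuple[int, int], PairwiseCNN], n_classes: int) -> int:
    """Max-Win voting: every pairwise model casts one vote for the class it prefers."""
    votes = [0] * n_classes
    support = [0.0] * n_classes  # summed pairwise probabilities, used only to break ties
    for (a, b), model in pairwise.items():
        p_a = model(x)
        support[a] += p_a
        support[b] += 1.0 - p_a
        votes[a if p_a >= 0.5 else b] += 1
    # Most votes wins; ties (e.g. a 3-way cycle) fall back to summed support.
    return max(range(n_classes), key=lambda k: (votes[k], support[k]))


def predict(
    x,
    three_class: ThreeClassCNN,
    specialists: Dict[int, SpecialistCNN],
    pairwise: Dict[Tuple[int, int], PairwiseCNN],
    t_low: float = 0.6,   # illustrative thresholds, not the paper's values
    t_high: float = 0.9,
) -> Tuple[int, str]:
    p3 = list(three_class(x))
    ranking = sorted(range(len(p3)), key=lambda k: p3[k], reverse=True)
    i, j = ranking[0], ranking[1]  # predicted class and runner-up (assumed choice of j)
    p_spec = specialists[i](x)      # model specialized to class i
    # HYPOTHETICAL confidence measure standing in for the paper's equation.
    confidence = (p3[i] + p_spec) / 2.0
    if confidence >= t_high:
        return i, "certain"
    if t_low <= confidence < t_high:
        key = (i, j) if (i, j) in pairwise else (j, i)
        p_first = pairwise[key](x)
        p_i = p_first if key[0] == i else 1.0 - p_first
        p_j = 1.0 - p_i
        return (i if p_i >= p_j else j), "medium"
    return max_win(x, pairwise, len(p3)), "uncertain"


# Usage with stub models (class indices 0 = MEL, 1 = NEV, 2 = BEK, chosen for illustration).
pairwise_stubs = {(0, 1): lambda x: 0.2, (0, 2): lambda x: 0.6, (1, 2): lambda x: 0.7}
specialist_stubs = {k: (lambda x: 0.3) for k in range(3)}
print(predict(None, lambda x: [0.4, 0.35, 0.25], specialist_stubs, pairwise_stubs))
# prints (1, 'uncertain'): confidence 0.35 is low, and Max-Win gives class 1 two votes
```

One consequence of the cascade structure is that the pairwise CNNs are only consulted when the first stage is not confident, so most images can be settled by the 3-class and specialist models alone.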

4.5. Experimental Setup

4.5.1. Dataset Preparation and Preprocessing

4.5.2. Fine-Tuning the Networks

4.5.3. Metrics

5. Conclusions and Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Tschandl, P.; Rinner, C.; Apalla, Z.; Argenziano, G.; Codella, N.; Halpern, A.; Janda, M.; Lallas, A.; Longo, C.; Malvehy, J.; et al. Human–computer collaboration for skin cancer recognition. Nat. Med. 2020, 26, 1229–1234.

2. Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

3. Tschandl, P.; Codella, N.; Akay, B.N.; Argenziano, G.; Braun, R.P.; Cabo, H.; Kittler, H. Comparison of the accuracy of human readers versus machine-learning algorithms for pigmented skin lesion classification: An open, web-based, international, diagnostic study. Lancet Oncol. 2019, 20, 938–947.

4. Haggenmüller, S.; Maron, R.C.; Hekler, A.; Utikal, J.S.; Barata, C.; Barnhill, R.L.; Beltraminelli, H.; Berking, C.; Betz-Stablein, B.; Blum, A.; et al. Skin cancer classification via convolutional neural networks: Systematic review of studies involving human experts. Eur. J. Cancer 2021, 156, 202–216.

5. Nie, Y.; Sommella, P.; Carratù, M.; Ferro, M.; O’Nils, M.; Lundgren, J. Recent Advances in Diagnosis of Skin Lesions using Dermoscopic Images based on Deep Learning. IEEE Access 2022, 10, 95716–95747.

6. Jain, S.; Singhania, U.; Tripathy, B.; Nasr, E.A.; Aboudaif, M.K.; Kamrani, A.K. Deep Learning-Based Transfer Learning for Classification of Skin Cancer. Sensors 2021, 21, 8142.

7. Pérez, E.; Reyes, O.; Ventura, S. Convolutional neural networks for the automatic diagnosis of melanoma: An extensive experimental study. Med. Image Anal. 2021, 67, 101858.

8. Zhao, C.; Shuai, R.; Ma, L.; Liu, W.; Hu, D.; Wu, M. Dermoscopy image classification based on StyleGAN and DenseNet201. IEEE Access 2021, 9, 8659–8679.

9. Maron, R.C.; Haggenmüller, S.; von Kalle, C.; Utikal, J.S.; Meier, F.; Gellrich, F.F.; Hauschild, A.; French, L.E.; Schlaak, M.; Ghoreschi, K.; et al. Robustness of convolutional neural networks in recognition of pigmented skin lesions. Eur. J. Cancer 2021, 145, 81–91.

10. Foahom Gouabou, A.C.; Iguernaissi, R.; Damoiseaux, J.L.; Moudafi, A.; Merad, D. End-to-End Decoupled Training: A Robust Deep Learning Method for Long-Tailed Classification of Dermoscopic Images for Skin Lesion Classification. Electronics 2022, 11, 3275.

11. Mahbod, A.; Schaefer, G.; Wang, C.; Dorffner, G.; Ecker, R.; Ellinger, I. Transfer learning using a multi-scale and multi-network ensemble for skin lesion classification. Comput. Methods Programs Biomed. 2020, 193, 105475.

12. Foahom Gouabou, A.C.; Damoiseaux, J.L.; Monnier, J.; Iguernaissi, R.; Moudafi, A.; Merad, D. Ensemble Method of Convolutional Neural Networks with Directed Acyclic Graph Using Dermoscopic Images: Melanoma Detection Application. Sensors 2021, 21, 3999.

13. Gessert, N.; Nielsen, M.; Shaikh, M.; Werner, R.; Schlaefer, A. Skin lesion classification using ensembles of multi-resolution EfficientNets with meta data. MethodsX 2020, 7, 100864.

14. Gong, A.; Yao, X.; Lin, W. Classification for Dermoscopy Images Using Convolutional Neural Networks Based on the Ensemble of Individual Advantage and Group Decision. IEEE Access 2020, 8, 155337–155351.

15. Kausar, N.; Hameed, A.; Sattar, M.; Ashraf, R.; Imran, A.S.; Abidin, M.Z.u.; Ali, A. Multiclass Skin Cancer Classification Using Ensemble of Fine-Tuned Deep Learning Models. Appl. Sci. 2021, 11, 10593.

16. Raza, R.; Zulfiqar, F.; Tariq, S.; Anwar, G.B.; Sargano, A.B.; Habib, Z. Melanoma classification from dermoscopy images using ensemble of convolutional neural networks. Mathematics 2021, 10, 26.

17. El-Khatib, H.; Popescu, D.; Ichim, L. Deep Learning–Based Methods for Automatic Diagnosis of Skin Lesions. Sensors 2020, 20, 1753.

18. Goyal, M.; Knackstedt, T.; Yan, S.; Hassanpour, S. Artificial intelligence-based image classification methods for diagnosis of skin cancer: Challenges and opportunities. Comput. Biol. Med. 2020, 127, 104065.

19. Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

20. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

21. Van Molle, P.; De Strooper, M.; Verbelen, T.; Vankeirsbilck, B.; Simoens, P.; Dhoedt, B. Visualizing convolutional neural networks to improve decision support for skin lesion classification. In Understanding and Interpreting Machine Learning in Medical Image Computing Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 115–123.

22. Wang, S.; Yin, Y.; Wang, D.; Wang, Y.; Jin, Y. Interpretability-based multimodal convolutional neural networks for skin lesion diagnosis. IEEE Trans. Cybern. 2021, 1–15.

23. Alche, M.N.; Acevedo, D.; Mejail, M. EfficientARL: Improving skin cancer diagnoses by combining lightweight attention on EfficientNet. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3354–3360.

24. Lundberg, S.M.; Lee, S.I. A Unified Approach to Interpreting Model Predictions. In Advances in Neural Information Processing Systems 30; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; pp. 4765–4774.

25. Mohammad, S. An explainable stacked ensemble of deep learning models for improved melanoma skin cancer detection. Multimed. Syst. 2021, 28, 1309–1323.

26. Tschandl, P.; Argenziano, G.; Razmara, M.; Yap, J. Diagnostic accuracy of content-based dermatoscopic image retrieval with deep classification features. Br. J. Dermatol. 2019, 181, 155–165.

27. Allegretti, S.; Bolelli, F.; Pollastri, F.; Longhitano, S.; Pellacani, G.; Grana, C. Supporting skin lesion diagnosis with content-based image retrieval. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 8053–8060.

28. Barhoumi, W.; Khelifa, A. Skin lesion image retrieval using transfer learning-based approach for query-driven distance recommendation. Comput. Biol. Med. 2021, 137, 104825.

29. Barata, C.; Santiago, C. Improving the explainability of skin cancer diagnosis using CBIR. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; pp. 550–559.

30. Codella, N.C.; Lin, C.C.; Halpern, A.; Hind, M.; Feris, R.; Smith, J.R. Collaborative Human-AI (CHAI): Evidence-based interpretable melanoma classification in dermoscopic images. In Understanding and Interpreting Machine Learning in Medical Image Computing Applications; Springer: Berlin/Heidelberg, Germany, 2018; pp. 97–105.

31. Kaymak, S.; Esmaili, P.; Serener, A. Deep Learning for Two-Step Classification of Malignant Pigmented Skin Lesions. In Proceedings of the 2018 14th Symposium on Neural Networks and Applications (NEUREL), Belgrade, Serbia, 20–21 November 2018; pp. 1–6.

32. Barata, C.; Celebi, M.E.; Marques, J.S. Explainable skin lesion diagnosis using taxonomies. Pattern Recognit. 2021, 110, 107413.

33. Argenziano, G.; Soyer, H.P.; Chimenti, S.; Talamini, R.; Corona, R.; Sera, F.; Binder, M.; Cerroni, L.; de Rosa, G.; Ferrara, G.; et al. Dermoscopy of pigmented skin lesions: Results of a consensus meeting via the Internet. J. Am. Acad. Dermatol. 2003, 48, 679–693.

34. Alves, B.; Barata, C.; Marques, J.S. Diagnosis of Skin Cancer Using Hierarchical Neural Networks and Metadata. In Proceedings of the Iberian Conference on Pattern Recognition and Image Analysis, Madrid, Spain, 1–4 July 2022; pp. 69–80.

35. Harangi, B. Skin lesion classification with ensembles of deep convolutional neural networks. J. Biomed. Inform. 2018, 86, 25–32.

36. Bisla, D.; Choromanska, A.; Berman, R.S.; Stein, J.A.; Polsky, D. Towards automated melanoma detection with deep learning: Data purification and augmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–19 June 2019.

37. Barata, C.; Marques, J.S.; Celebi, M.E. Deep attention model for the hierarchical diagnosis of skin lesions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

38. Putra, T.A.; Rufaida, S.I.; Leu, J.S. Enhanced skin condition prediction through machine learning using dynamic training and testing augmentation. IEEE Access 2020, 8, 40536–40546.

39. Chen, Y.; Zhu, Y.; Chang, Y. CycleGAN Based Data Augmentation For Melanoma images Classification. In Proceedings of the 2020 3rd International Conference on Artificial Intelligence and Pattern Recognition, Xiamen, China, 26–28 June 2020; pp. 115–119.

40. Lucieri, A.; Dengel, A.; Ahmed, S. Deep Learning Based Decision Support for Medicine–A Case Study on Skin Cancer Diagnosis. arXiv 2021, arXiv:2103.05112.

41. Singh, A.; Sengupta, S.; Lakshminarayanan, V. Explainable deep learning models in medical image analysis. J. Imaging 2020, 6, 52.

42. Rauber, A.; Trasarti, R.; Giannotti, F. Transparency in algorithmic decision making. ERCIM News 2019, 116, 10–11.

43. Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

44. Fu, Y.; Xiang, L.; Zahid, Y.; Ding, G.; Mei, T.; Shen, Q.; Han, J. Long-tailed visual recognition with deep models: A methodological survey and evaluation. Neurocomputing 2022, 509, 290–309.

45. Foahom Gouabou, A.C.; Iguernaissi, R.; Damoiseaux, J.L.; Moudafi, A.; Merad, D. Rethinking decoupled training with bag of tricks for long-tailed recognition. In Proceedings of the 2022 Digital Image Computing: Techniques and Applications (DICTA), Sydney, NSW, Australia, 30 November–2 December 2022.

46. Kaluarachchi, T.; Reis, A.; Nanayakkara, S. A review of recent deep learning approaches in human-centered machine learning. Sensors 2021, 21, 2514.

47. Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

48. Karki, S.; Kulkarni, P.; Stranieri, A. Melanoma classification using EfficientNets and Ensemble of models with different input resolution. In Proceedings of the 2021 Australasian Computer Science Week Multiconference, Dunedin, New Zealand, 1–5 February 2021; pp. 1–5.

49. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

50. Hazra, T.; Anjaria, K. Applications of game theory in deep learning: A survey. Multimed. Tools Appl. 2022, 81, 8963–8994.

51. Gupta, D.; Bhatt, S.; Bhatt, P.; Gupta, M.; Tosun, A.S. Game Theory Based Privacy Preserving Approach for Collaborative Deep Learning in IoT. In Deep Learning for Security and Privacy Preservation in IoT; Springer: Berlin/Heidelberg, Germany, 2021; pp. 127–149.

52. Friedman, J.H. Another Approach to Polychotomous Classification; Technical Report; Statistics Department, Stanford University: Stanford, CA, USA, 1996.

53. Codella, N.; Rotemberg, V.; Tschandl, P.; Celebi, M.E.; Dusza, S.; Gutman, D.; Helba, B.; Kalloo, A.; Liopyris, K.; Marchetti, M.; et al. Skin lesion analysis toward melanoma detection 2018: A challenge hosted by the international skin imaging collaboration (ISIC). arXiv 2019, arXiv:1902.03368.

54. Tschandl, P.; Rosendahl, C.; Kittler, H. The HAM10000 dataset, a large collection of multi-source dermatoscopic images of common pigmented skin lesions. Sci. Data 2018, 5, 180161.

55. Gijsenij, A.; Gevers, T.; Van De Weijer, J. Computational Color Constancy: Survey and Experiments. IEEE Trans. Image Process. 2011, 20, 2475–2489.

56. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

57. Aurelio, Y.S.; de Almeida, G.M.; de Castro, C.L.; Braga, A.P. Learning from imbalanced data sets with weighted cross-entropy function. Neural Process. Lett. 2019, 50, 1937–1949.

58. Pham, T.C.; Luong, C.M.; Hoang, V.D.; Doucet, A. AI outperformed every dermatologist in dermoscopic melanoma diagnosis, using an optimized deep-CNN architecture with custom mini-batch logic and loss function. Sci. Rep. 2021, 11, 17485.

59. Smith, L.N. Cyclical Learning Rates for Training Neural Networks. In Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), Santa Rosa, CA, USA, 24–31 March 2017; pp. 464–472.

| Works | BACC | Mean-AUROC | MEL-AUROC | BEK-AUROC | NEV-AUROC |
|---|---|---|---|---|---|
| Harangi et al. [35] | - | 0.85 | 0.84 | 0.87 | 0.84 |
| Bisla et al. [36] | - | 0.92 | 0.88 | - | - |
| Barata et al. (2019) [37] | 0.70 | 0.88 | - | - | - |
| Barata et al. (2021) [32] | 0.74 | 0.92 | 0.80 | 0.92 | 0.85 |
| Foahom et al. [12] | 0.77 ± 0.00 | - | 0.87 | 0.93 | 0.88 |
| Proposed framework | 0.86 ± 0.01 | 0.96 ± 0.00 | 0.93 ± 0.01 | 0.97 ± 0.01 | 0.96 ± 0.00 |
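The tables report balanced accuracy (BACC) and AUROC. The text of the Metrics subsection is not reproduced here, so the Python sketch below uses the standard definitions, as an assumption: BACC is the unweighted mean of per-class recalls; a per-class score such as MEL-AUROC is the one-vs-rest AUROC computed from that class's predicted probability; and Mean-AUROC is the unweighted average of the three per-class values. The function names are hypothetical. AUROC is computed from its pairwise (Mann-Whitney) interpretation, which is quadratic in the number of images but needs no third-party library.

```python
from typing import Sequence


def balanced_accuracy(y_true: Sequence[int], y_pred: Sequence[int], n_classes: int) -> float:
    """BACC: unweighted mean of per-class recall, so each class counts equally."""
    recalls = []
    for k in range(n_classes):
        members = [n for n, y in enumerate(y_true) if y == k]
        if members:  # classes absent from y_true are skipped
            hits = sum(1 for n in members if y_pred[n] == k)
            recalls.append(hits / len(members))
    return sum(recalls) / len(recalls)


def binary_auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC = P(random positive scores above random negative); ties count 1/2."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def class_auroc(y_true: Sequence[int], probs: Sequence[Sequence[float]], k: int) -> float:
    """One-vs-rest AUROC for class k (e.g. MEL-AUROC), scored by P(class k)."""
    return binary_auroc([1 if y == k else 0 for y in y_true], [p[k] for p in probs])


def mean_auroc(y_true: Sequence[int], probs: Sequence[Sequence[float]], n_classes: int) -> float:
    """Unweighted average of the per-class one-vs-rest AUROCs (assumed definition)."""
    return sum(class_auroc(y_true, probs, k) for k in range(n_classes)) / n_classes


# Usage: recalls are 1/2, 2/2 and 1/2, so BACC is their mean.
print(round(balanced_accuracy([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], 3), 4))  # 0.6667
```

Unlike plain accuracy, BACC is not dominated by the majority class, which matters here because nevi make up about three quarters of the ISIC 2018 images used.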

| Task | BACC | AUROC |
|---|---|---|
| BEK vs. MEL vs. NEV | 0.84 ± 0.01 | 0.96 ± 0.00 |
| MEL vs. ALL | 0.81 ± 0.02 | 0.94 ± 0.01 |
| NEV vs. ALL | 0.86 ± 0.01 | 0.96 ± 0.00 |
| BEK vs. ALL | 0.90 ± 0.01 | 0.98 ± 0.00 |
| MEL vs. NEV | 0.87 ± 0.01 | 0.95 ± 0.01 |
| MEL vs. BEK | 0.91 ± 0.01 | 0.97 ± 0.00 |
| NEV vs. BEK | 0.94 ± 0.01 | 0.99 ± 0.00 |
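The table lists seven models: one 3-class CNN, three one-vs-all ("X vs. ALL") CNNs and three pairwise CNNs. The Python sketch below shows one way the seven label sets could be derived from a single 3-class labelling. It assumes (this is not stated here) that one-vs-all tasks keep every image while pairwise tasks keep only images of the two classes involved; `build_tasks` and the class order in `CLASSES` are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Tuple

CLASSES = ["MEL", "NEV", "BEK"]  # order chosen for illustration only

Task = Tuple[List[int], List[int]]  # (indices of images used, integer labels)


def build_tasks(y: List[str]) -> Dict[str, Task]:
    every = list(range(len(y)))  # shared, never mutated
    tasks: Dict[str, Task] = {
        # 3-class task: every image, label = index in CLASSES
        "BEK vs. MEL vs. NEV": (every, [CLASSES.index(c) for c in y]),
    }
    for c in CLASSES:
        # one-vs-all: every image, 1 for class c, 0 for the other two classes
        tasks[f"{c} vs. ALL"] = (every, [1 if lab == c else 0 for lab in y])
    for a, b in combinations(CLASSES, 2):
        # pairwise: only images of classes a and b, 1 for a, 0 for b
        idx = [n for n, lab in enumerate(y) if lab in (a, b)]
        tasks[f"{a} vs. {b}"] = (idx, [1 if y[n] == a else 0 for n in idx])
    return tasks


tasks = build_tasks(["MEL", "NEV", "BEK", "NEV"])
print(tasks["NEV vs. BEK"])  # ([1, 2, 3], [1, 0, 1])
```

`itertools.combinations` yields the pairs (MEL, NEV), (MEL, BEK) and (NEV, BEK), matching the three pairwise rows of the table.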

| Hyper-parameter pair | MEL-AUROC |
|---|---|
| (0.3, 0.5) | 0.95 |
| (0.3, 0.4) | 0.95 |
| (0.2, 0.5) | 0.95 |
| (0.2, 0.4) | 0.95 |
| (0.2, 0.3) | 0.95 |
| (0.1, 0.5) | 0.96 |
| (0.1, 0.4) | 0.95 |
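The ablation retains the hyper-parameter pair with the highest MEL-AUROC. Reading the table programmatically makes that selection explicit and shows how narrow the margin is at two-decimal precision. The dictionary below simply copies the table; its name is hypothetical, and the tuple positions follow the table's column order because the parameter symbols are not recoverable here.

```python
# MEL-AUROC per hyper-parameter pair, copied from the ablation table.
MEL_AUROC = {
    (0.3, 0.5): 0.95, (0.3, 0.4): 0.95, (0.2, 0.5): 0.95, (0.2, 0.4): 0.95,
    (0.2, 0.3): 0.95, (0.1, 0.5): 0.96, (0.1, 0.4): 0.95,
}

best_pair = max(MEL_AUROC, key=MEL_AUROC.get)  # unique maximum, so no tie-breaking needed
runner_up = max(v for k, v in MEL_AUROC.items() if k != best_pair)
margin = MEL_AUROC[best_pair] - runner_up

print(best_pair)          # (0.1, 0.5)
print(round(margin, 2))   # 0.01
```

The other six pairs are tied at 0.95, so the table alone cannot rank them.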

|  | Benign Keratosis | Melanoma | Nevi |
|---|---|---|---|
| ISIC 2018 | 1099 | 1113 | 6705 |
| Ratio | 0.12 | 0.12 | 0.75 |
| Training set | 769 | 779 | 4694 |
| Generated data from training set | 1231 | 1221 | 306 |
| Final training set with data generated | 2000 | 2000 | 5000 |
| Validation set | 110 | 111 | 670 |
| Test set | 220 | 223 | 1341 |
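The counts in this table are internally consistent: for each class, the training, validation and test sets add up to the ISIC 2018 total in an approximately 70/10/20 split, and the "Generated data" row equals the per-class target of the final training set minus the original training count. The Python sketch below only checks that bookkeeping; it makes no assumption about how the synthetic images were produced, and the dictionary and function names are hypothetical.

```python
from typing import Dict, Tuple

# Counts copied from the table above (BEK = benign keratosis, MEL = melanoma, NEV = nevi).
TOTAL = {"BEK": 1099, "MEL": 1113, "NEV": 6705}
TRAIN = {"BEK": 769, "MEL": 779, "NEV": 4694}
VAL = {"BEK": 110, "MEL": 111, "NEV": 670}
TEST = {"BEK": 220, "MEL": 223, "NEV": 1341}
TARGET = {"BEK": 2000, "MEL": 2000, "NEV": 5000}  # "Final training set" row


def class_ratios(counts: Dict[str, int]) -> Dict[str, float]:
    """Share of each class in the whole dataset, rounded like the "Ratio" row."""
    total = sum(counts.values())
    return {c: round(n / total, 2) for c, n in counts.items()}


def split_fractions(c: str) -> Tuple[float, float, float]:
    """Train/validation/test fractions of class c; the three sets must partition it."""
    n = TOTAL[c]
    if TRAIN[c] + VAL[c] + TEST[c] != n:
        raise ValueError(f"splits of {c} do not add up to {n}")
    return round(TRAIN[c] / n, 2), round(VAL[c] / n, 2), round(TEST[c] / n, 2)


def samples_to_generate(train: Dict[str, int], target: Dict[str, int]) -> Dict[str, int]:
    """Synthetic images needed per class to reach the target (never negative)."""
    return {c: max(0, target[c] - train[c]) for c in train}


print(class_ratios(TOTAL))                  # {'BEK': 0.12, 'MEL': 0.12, 'NEV': 0.75}
print([split_fractions(c) for c in TOTAL])  # [(0.7, 0.1, 0.2), (0.7, 0.1, 0.2), (0.7, 0.1, 0.2)]
print(samples_to_generate(TRAIN, TARGET))   # {'BEK': 1231, 'MEL': 1221, 'NEV': 306}
```

Note that the targets narrow the class imbalance in the training set from roughly 6:1 (nevi vs. each minority class) to 2.5:1 rather than removing it entirely.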


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Foahom Gouabou, A.C.; Collenne, J.; Monnier, J.; Iguernaissi, R.; Damoiseaux, J.-L.; Moudafi, A.; Merad, D. Computer Aided Diagnosis of Melanoma Using Deep Neural Networks and Game Theory: Application on Dermoscopic Images of Skin Lesions. Int. J. Mol. Sci. 2022, 23, 13838. https://doi.org/10.3390/ijms232213838
