1. Introduction

Accurate recording of color is one of the fundamental tasks in many scientific disciplines, such as chemistry, industry, medicine, or geosciences to name just a few. Color measurement is a crucial aspect in archaeology and specifically in rock art documentation [

1,

2]. The correct measurement of color allows researchers to study, diagnose, and describe rock art specimens and detect chromatic changes or alterations over time. High-precision metric models together with reliable color information data sets provide essential information in modern conservation and preservation works.

The appropriate description of color is not a trivial issue in cultural heritage documentation [

3,

4]. Color is a matter of perception, which largely depends on the subjectivity of the observer. Therefore, correct color registration requires objective colorimetric measurement described in rigorous color spaces. Usually, color spaces defined by the CIE are used as the standard reference framework for colorimetric measurement and management.

To avoid damage into the pigment, direct contact measurements with colorimeters or spectrophotometers on painted rock art panels are not allowed. Instead, indirect and noninvasive methods for color determination are required. Thus, the use of digitization techniques with conventional digital cameras to support rock art documentation is becoming more and more frequent [

5,

6,

7,

8].

The color information obtained from digital images can be easily captured, stored, and processed. The drawback of such color information lays on the response recorded by the sensor, which is not strictly colorimetric. The RGB responses do not satisfy the Luther–Ives condition so that RGB data are not a linear combination of CIE XYZ coordinates [

9]. If the values recorded in the RGB channels were proportional to the input energy, a simple linear relationship between the RGB data acquired by the digital camera and the CIE XYZ tristimulus values would exist. However, in general, the spectral sensitivities of the three RGB channels are not linear combinations of the color-matching functions [

10]. The signals generated by digital cameras are indeed referred to as “device dependent”. Thus, a transformation to convert the input RGB data into device-independent color spaces is necessary.

A widely accepted approach to establish the mathematical relationships between the original RGB data and well-defined independent color spaces is the procedure of digital camera characterization [

10]. Different techniques are used for colorimetric camera characterization. Numerous papers have been written regarding common techniques, such as polynomial transformation with least-squares regressions [

9,

10], interpolation from look-up tables [

11], artificial neural networks [

12], and principal component analysis [

13]. Further studies have focused on optimizing characterization, including the use of pattern search optimization [

14], multiple regression [

15], root-polynomial regression [

16], or spectral reflectance reconstruction [

17,

18,

19].

The colorimetric characterization of digital cameras based on polynomial models is an appropriate starting point; they are widely accepted, mathematically simpler and require smaller training sets and less computing time [

20,

21]. Previous experiments using second-order polynomials applied in rock art paintings gave also good results [

22]. However, they tend to be rigid models and suffer from overestimation or underestimation when many or few data are provided. Furthermore, it is known the lack of reliable generalization of predictions in polynomial models, especially when extrapolating or in the case of modeling wiggly functions [

23]. Therefore, it is desirable to improve the results by means of flexible, robust and more accurate models.

In this work, we introduce a novel approach for documenting rock art paintings based on a Gaussian process (GP) model. GPs are natural, flexible nonparametric models for

N-dimensional functions, with multivariate predictors (input variables) in each dimension [

24,

25]. The defining property of a GP model is that any finite set of values is jointly distributed as a multivariate Gaussian function. A GP is completely defined by its mean and covariance function. The covariance function is the crucial ingredient in a Gaussian process as it encodes correlation structure which characterizes the relationships between function values at different inputs. GP allows not only nonlinear effects and handling implicit interactions between covariates, but also improves generalization of function values for both interpolation and extrapolation purposes. Due to their generality and flexibility, GPs are of broad interest across machine learning and statistics [

25,

26].

GP models are formulated and estimated within a Bayesian framework, and all inference is based on the multivariate posterior distribution. Computing the posterior distribution is often difficult, and for this reason, different computation approaches can be used. The Markov chain Monte Carlo (MCMC) is a sampling method that provides a sample of the joint posterior distribution of the parameters [

27,

28].

The GP model results were compared to the common approach based on polynomial regression models. The main advantage of nonparametric over parametric models is their flexibility [

29,

30]. In a parametric framework, the shape of the functional relationship is a prespecified, either linear or nonlinear, function, limiting the flexibility of the modeling. In a nonparametric framework, the shape of the functional relationship is completely determined by the data, allowing for a higher degree of adaptability.

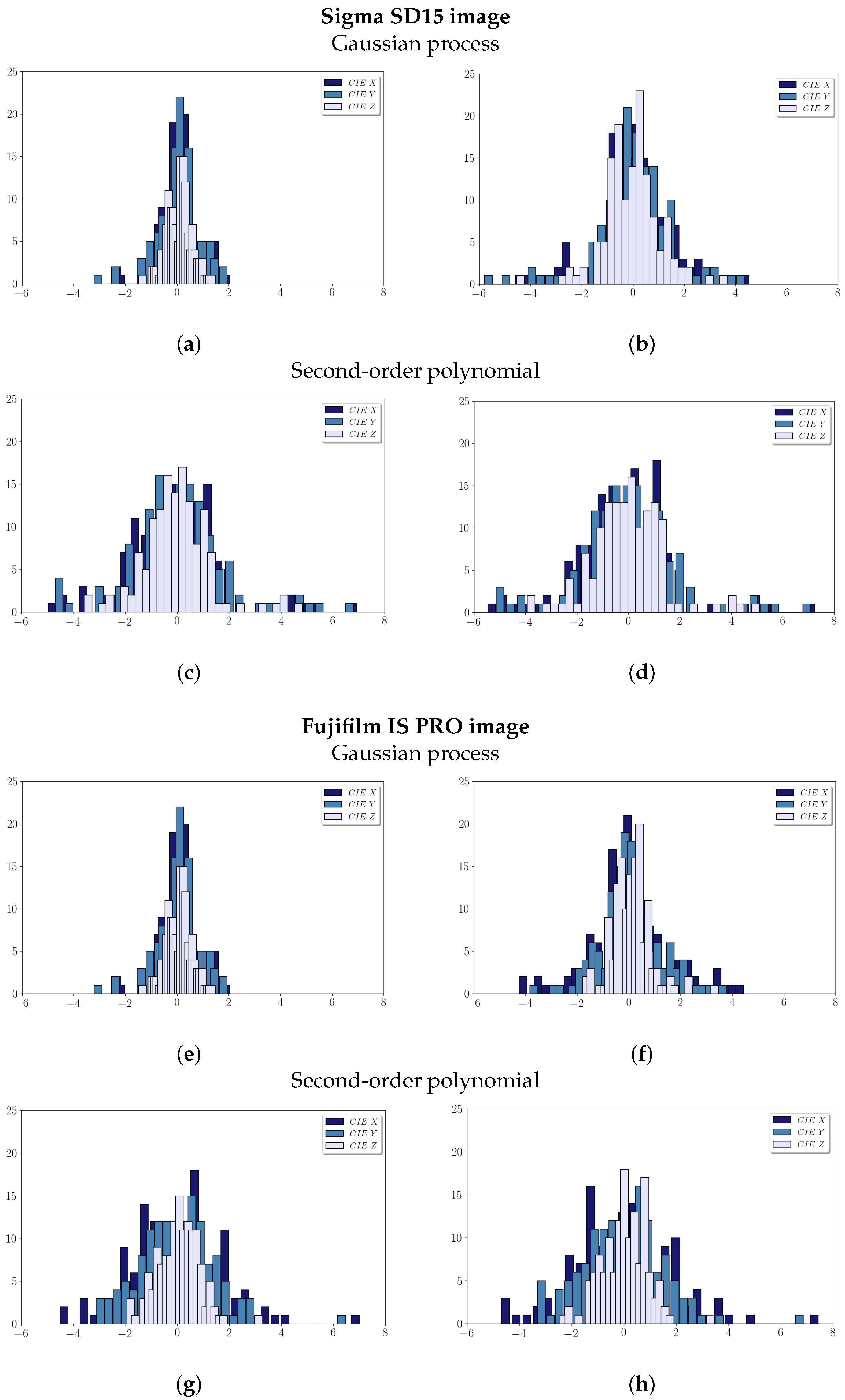

The goodness of fit and predictive performance of the models are assessed by analyzing the adjustment residuals and the leave-one-out cross-validation (LOOCV) residuals. The quality of the characterized image is also evaluated in terms of colorimetric accuracy by means of color differences among observed and fitted colors using the CIE framework. In addition, we evaluate the induced noise into the output image after the characterization which is recognized as a drawback for some image applications such as image matching or pattern recognition. The induced noise is evaluated by computing and comparing the coefficients of variation of digital values between the original and output images.

2. Materials and Methods

2.1. Case Study: Cova dels Cavalls

The working area is a rock art scene located in Shelter II of the Cova dels Cavalls site in the county of Tirig, province of Castelló (Spain). This cave is part of one of the most singular rock art sites of the Mediterranean Basin in the Iberian Peninsula, which is listed by UNESCO as a World Heritage since 1998 [

31].

The images were taken using two different SLR digital cameras, a Sigma SD15 and a Fujifilm IS PRO. The images contain the hunting scene located in the central part of this emblematic archaeological site. Parameters such as focal, exposure time, aperture, and ISO were controlled during the photographic sessions for both cameras. Photographs were taken in the raw format under homogeneous illumination conditions.

The main difference between the Fujifilm IS PRO and the Sigma SD15 cameras is their integrated sensors. The Fujifilm incorporates a 12 megapixels Super CCD imaging sensor, with resolution of 4256 × 2848 pixels and a color filter array (CFA) with a Bayer pattern. The use of CFA implies that the color registered in every individual pixel is not acquired directly but as a result of interpolation between channels. On the other hand, the Sigma carries a three-layer CMOS Foveon

®X3 sensor of 2640 × 1760 pixels, which makes it a true trichromatic digital camera [

32]. The main advantage of this sensor is its ability to capture color without any interpolation.

2.2. Image-Based Camera Characterization Methodology

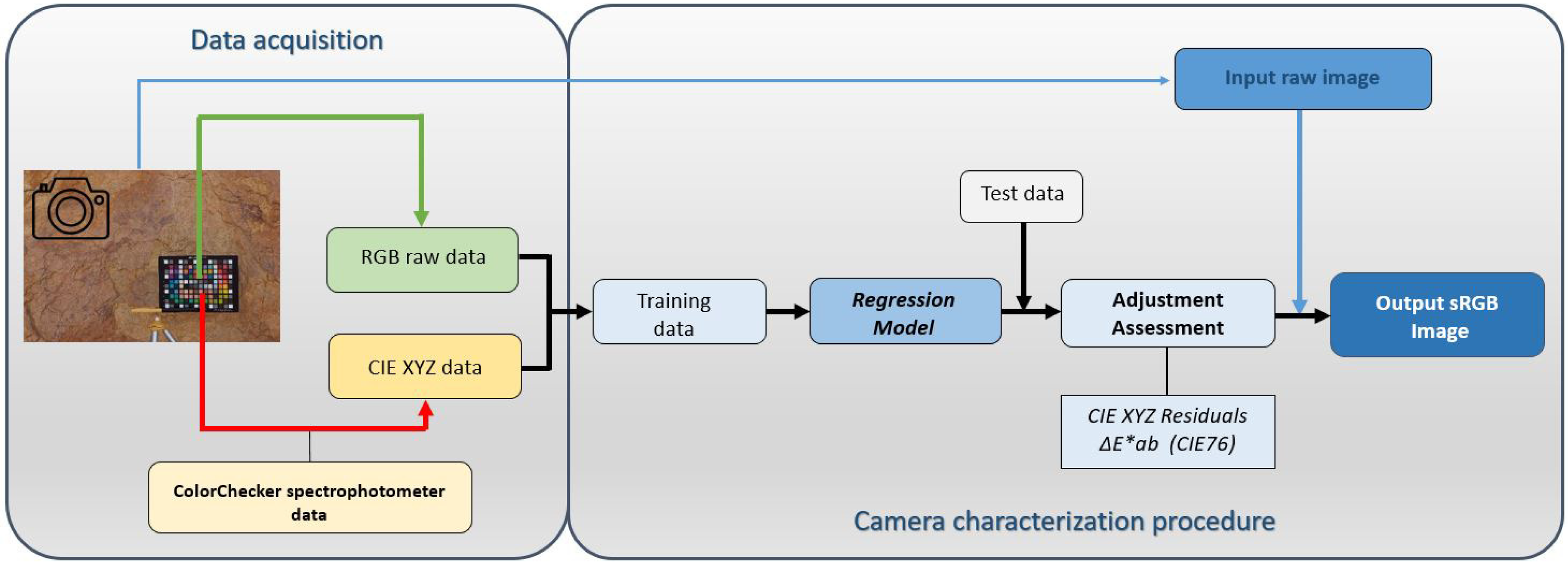

The output RGB digital values registered by the camera depend on three main factors: the sensor architecture, the lighting conditions, and the object being imaged. Even assuming the same object and lighting conditions, other factors can still produce different RGB responses within and across scenes. Some elements such as the internal color filters or user settings (exposure time, aperture, white balance, and so on) can modify the output digital values. As a result, the original RGB data registered by the sensor cannot be used rigorously for the quantitative determination of color, and native RGB camera color spaces are said to be device dependent. A way to transform the signal captured by the camera sensor into a physicaly-based, device-independent color space is by means of camera characterization (See workflow in

Figure 1).

To carry out the characterization, various training and test datasets are required. An important aspect on the camera characterization process is the establishment of the working color spaces. Some of the most common color spaces used are the input RGB data and the output tristimulus coordinates [

10]. In the preliminary stages of the study four different transformations, between color spaces were tested, including RGB–CIE XYZ, RGB-CIELAB, LMS–CIE XYZ, and LMS–CIELAB. The transformation that worked the best was the RGB–CIE XYZ, whose results are reported in the rest of the paper.

On the other hand, the digital RGB values are available after a complex process driven by the built-in software and electronics of the camera [

33]. Usually, a set of preprocessing operations, e.g., demosaicing, white balance, gamut mapping, color enhancement, or compression, are applied automatically to the raw image (

Figure 2). It is thus preferable to work with raw data versus RGB processed or compressed image files.

The raw RGB training and test sample data were extracted from the images using our software pyColourimetry which was developed in previous research. This software was written in the Python programming language following the CIE recommendations. It allows raw RGB data extraction from conventional camera formats and implements other colorimetric functions such as transformation among color spaces, color difference calculation, or spectral data treatment [

34].

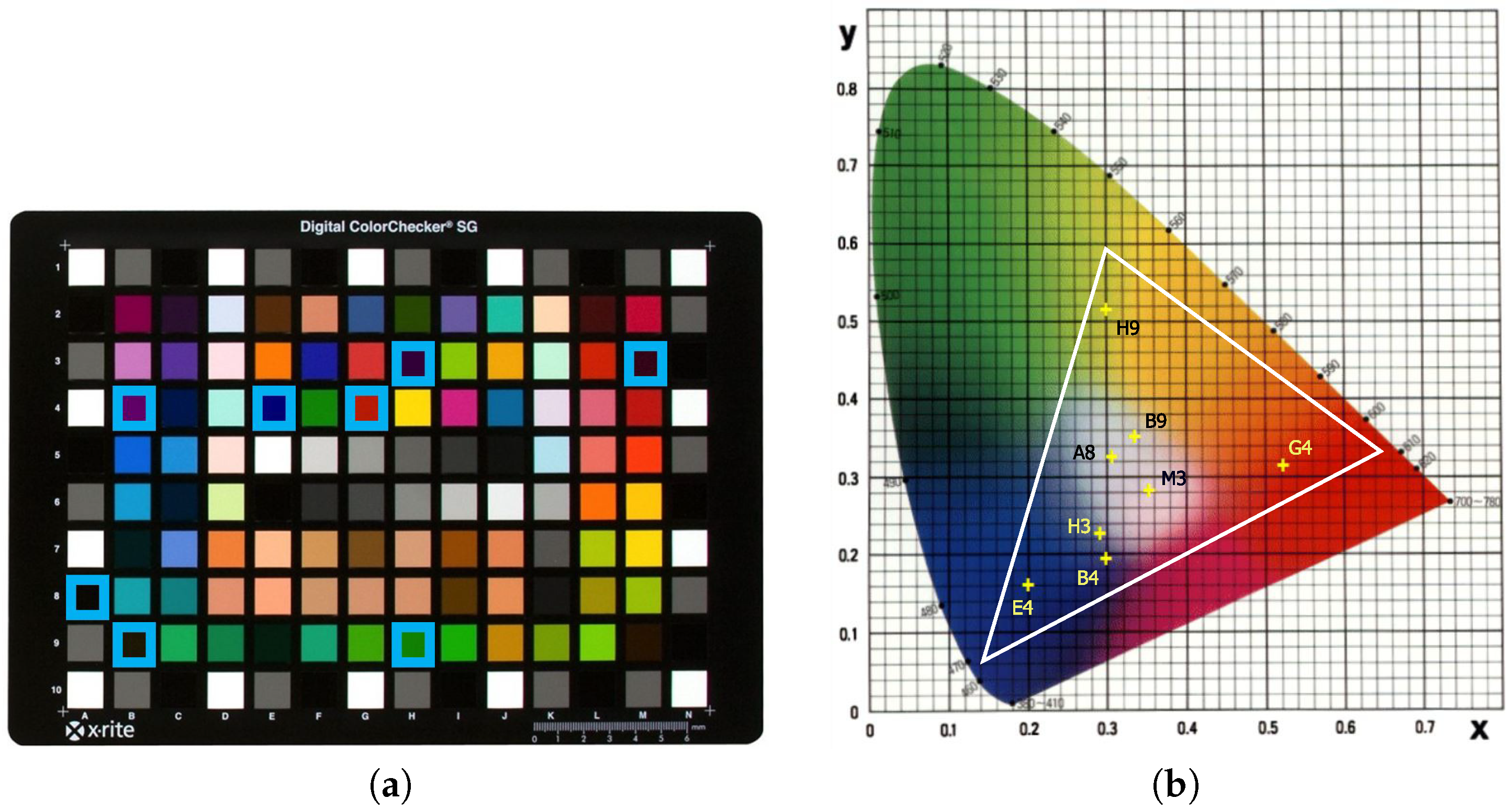

Also, the data acquisition includes the direct measurement of the tristimulus values of the patches present in the color chart and the raw RGB data extraction from the digital image. Thus, a color chart has to be included as a colorimetric reference in the photographic shot to carry out the camera characterization. For this experiment, we used an X-Rite ColorChecker SG Digital Color Chart as a color standard. This chart is routinely used in digital photography for color calibration. It consists of an array of 140 color patches. The number of patches is supposedly enough to cover the color domain present in the scene as well as to provide training and test data sets to analyze the results after the camera characterization.

CIE XYZ values for the ColorChecker patches have to be known prior to undertake the camera characterization. An accepted option is to use those tristimulus values provided by the manufacturer. Nevertheless, it is highly recommended to carry out a new measurement, preferably by means of a colorimeter or spectrophotometer, using the setup of the specific experiment. The spectral reflectance data were acquired using the spectrophotometer Konica Minolta CM-600d, following CIE recommendations (2° standard observer under D65 illuminant). CIE XYZ coordinates can be obtained by transforming the spectral data using well-known CIE formulae [

35].

To visualize the tristimulus coordinates, it is necessary to perform a final transformation of the CIE XYZ values into the sRGB color space, which is compatible with most digital devices. This transformation is carried out based on the technical recommendations published by the International Electrotechnical Commission [

36]. Thus, the final outcome of the characterization consists of an sRGB output image for each regression model.

Once the digital camera is colorimetrically characterized, it can be used for the rigorous measurement of color simulating a colorimeter [

37]. Using a characterized camera, we can obtain accurate color information over complete scenes, which is a very important requirement to properly analyze rock art. The use of conventional cameras for color measurement allows researchers to take pictures with low-cost recording devices, suitable for carrying out heritage documentation tasks using noninvasive methodologies [

22].

2.3. Gaussian Processes for Camera Characterization

The main aim of camera characterization is to find the mapping function between the RGB color values and the CIE XYZ tristimulus coordinates:

Commonly, this multivariate mapping function is divided into independent functions for each each single XYZ tristimulus value. In this paper, we propose a Gaussian process (GP) to estimate these functions, with different model parameters,

,

, and

, for each mapping function:

2.3.1. Gaussian Process Model

A GP is a stochastic process which defines the distribution over a collection of random variables [

24,

25]. The defining property of a GP is that any finite set of random variables is jointly distributed as a multivariate normal distribution. A GP is completely characterized by its mean and covariance functions that control the a priori behavior of the function. GP can be used as prior probability distributions for latent functions in generalized linear models [

38]. However, in this paper, we focus on GP in linear models (a normal outcome), as we can assume that the CIE XYZ color coordinates are normally distributed.

A GP for a normal outcome

, paired with a matrix of

D inputs variables (predictors)

, consists of defining a multivariate Gaussian distribution over

conditioned on X:

where

is a n-vector,

is an

covariance matrix,

is the noise variance, and

I the

diagonal identity matrix. The mean function

can be anything, although it is usually recommended to be a linear model or even zero. The covariance function

must be a positive semidefinite matrix [

25,

26]. In this work, we use the square exponential covariance function, which is the most commonly used function of the Matérn class of isotropic covariance functions [

25]. The squared exponential covariance function for two observed points

i and

j (

) takes the form

where

;

is the marginal variance parameter, which controls the overall scale or magnitude of the range of values of the GP; and

are the lengthscale parameters, which control the smoothness of the function in the direction of the

d-predictor, so that the larger the lengthscale the smoother the function.

2.3.2. Bayesian Inference

Bayesian inference is based on the joint posterior distribution

of parameters given the data, which is proportional to the product of the likelihood and prior distributions,

In the previous equation,

is the likelihood of the model, where the mean function

has been set to zero for the sake of simplicity, and

are the prior distributions of the parameters of the model. These correspond to weakly informative prior distributions based on prior knowledge about the magnitude of the parameters.

Predictive inference for new function values

for a new sequence of input values

can be computed by integrating over the joint posterior distribution

To estimate both the parameter posterior distribution and the posterior predictive distribution for this model, simulation methods and/or distributional approximations methods [

38] must be used. Simulation methods based on MCMC [

27] are general sampling methods to obtain samples from the joint posterior distribution. For quick inferences and large data sets, where iterative simulation algorithms are too slow, modal and distributional approximation methods can be more efficient and approximate alternatives.

2.4. Second-Order Polynomial MOdel

This is the most extended model in practical camera characterization. The

N-dimensional collections of random observations

,

, and

are the CIE color variables, where

,

, and

represent the color coordinates of the

ith order observation

i (

). Each

,

, and

N-dimensional variable is considered to follow a normal distribution depending on an underlying second-order polynomial function

and noise variance

,

where

I is the

identity matrix. The latent second-order polynomials functions

,

, and

take the form

where the vectors

,

and

represent the polynomial coefficients, and

,

, and

are the

N-dimensional variables in the input RGB space.

The likelihood functions of the variables

,

and

(given the coefficients

,

,

), the variance parameters

, and the variables

,

and

, take the form

where the subscript

i represents the

ith observed value.

Bayesian Inference

The joint posterior distributions are proportional to the product of the likelihood and prior distributions:

We set vague prior distributions , , , and for the hyperparameters , , , and , respectively, based on prior knowledge about the magnitude of the parameters.

Predictive inference for new function values

and

for a new sequence of input values

and

can be computed by integrating over the joint posterior distributions

Simulation methods based on MCMC are used for estimating both the parameter posterior distribution and the posterior predictive distribution of these models.

2.5. Model Checking and Comparison

For model assessment, common checking procedures of normality, magnitude and tendencies on the fitted and predicted residuals are used. Fitted residuals can be useful for identifying outliers or misspecified models and give us the goodness of the fit for the sampling patches. Furthermore, the performance of each model was assessed using the LOOCV approach [

39]. The LOOCV procedure has been previously used in color science multiple times [

40,

41,

42,

43], although its origins can be traced back to early practical statistics methods [

39] and is routinely used in modern data science applications [

44].

In our study, the LOOCV consists of setting aside an individual patch and calculating the prediction model. Then, the predicted value is compared to its observed value which gives a measure of the model predictive accuracy. This allows obtaining an average of the predictive accuracy for unobserved patches as well as individual quality indicators for each color patch.

In addition to the residual analysis, it is required the assessment of the models using colorimetry metrics [

1]. Also, a LOOCV procedure was conducted to assess the predictive performance in terms of color differences. In classical colorimetry, color difference metrics are determined using formulas based on the CIELAB color space, such as

, also known as the CIE76 color difference [

35].

The CIE XYZ color space is not uniform, that is, equal distances in this space do not represent equally perceptible differences between color stimuli. Contrarily, CIELAB coordinates are nonlinear functions of CIE XYZ, and more perceptually uniform than the CIE XYZ color space [

35,

45]. The

between the theoretical tristimulus coordinates against the predicted values are computed, which gives a measure of the model adjustment in a well-defined color metric.

Other modern color difference formulas which take

as a reference have been developed by the CIE. An example is the CIEDE2000 formula, which includes corrections for variations in color difference perception due to lightness, chroma, hue, and chroma–hue interaction [

46,

47,

48]. It must be indicated that CIEDE2000 was designed for specialized industry applications [

49]. To use the CIEDE2000 formula, a number of specific requirements have to be fulfilled. Some of these requirements are the sample size (greater than 4 degrees), sample–sensor separation (contact), background field (uniform, neutral gray), and sample homogeneity (textureless). Usually, these conditions cannot be guaranteed in the usual working environments found in rock art documentation. Therefore, it seems more appropriate to use the CIE76 formula herein instead of the CIEDE2000 to determine color differences.

is calculated as the Euclidean distance between two color stimuli in CIELAB coordinates

where

,

, and

are the differences between the

,

, and

coordinates of the two color stimuli.

Numerous guides seek to quantify the maximum value allowed (tolerance) for an acceptable color difference so that it is imperceptible by human vision. This concept is known as ”Just Noticeable Difference” (JND). A good reference is found in the Metamorfoze guideline, which employs the CIE76 color difference formula, and establishes a color accuracy of 4 CIELAB units [

50].

2.6. Induced Noise Analysis

The radiometric response of a digital camera is the outcome of a number of factors, such as electromagnetic radiation, sensor electronics, the optical system, and so forth [

51,

52,

53,

54,

55]. The noise present on a single image is basically composed of two components: the photoresponse noise of every sensor element (pixel) and the spatial nonuniformity or fixed pattern noise of the sensor array [

56,

57].

The nonlinear transformation functions in camera characterization models modify the input data which are themselves affected by noise. In the camera characterization, noise is transferred from the original image to the characterized image and transformed in different ways. In this paper, the analysis of noise is carried out by comparing the coefficients of variation in the original and the characterized images. The noise assessment was conducted on four selected patches of the color checker (C7, D7, C8, and D8).

4. Conclusions

The use of digital images to support cultural heritage documentation techniques has undergone unprecedented advance in the last decades. However, the original RGB data provided by digital cameras cannot be used for rigorous color measurement and communication. To face the lack of colorimetric rigor of the input RGB data recorded by the sensor, it is necessary to conduct a colorimetric camera characterization; alternatively, color profiles can also be used.

In this paper, the experimental assessment of a GP model has been carried out, and compared with a common second-order polynomial model. Although both regression models yielded good results, the use of the GP provides an improvement in colorimetric terms and fits better to the original raw RGB data. The lowest CIE XYZ residual values achieved for the adjustment and color differences supports the use of a GP as a proper model for characterizing digital cameras. However, for practical purposes, the final sRGB characterized images derived from both the GP and the second-order polynomial model can be used with success in cultural heritage documentation and preservation tasks.

Additionally, the GP regression model has been tested on two SLR digital cameras with different built-in sensors to analyze the performance of the model in terms of pixel variability. The noise errors achieved show that similar results were obtained regardless the regression model used. However, the results also reveal that the induced noise highly depends on the camera sensor, which is clearly significant in the Foveon®X3 but not in the Super CCD. Thus, the correct choice of the digital camera is a key factor to be taken into consideration in the camera characterization procedure.

It is observed that the camera characterization procedure allows clear identification of the different pigments used in the scene, a proper separation from the support, the achievement of more accurate digital tracings, and accurate color measurement for monitoring aging effects on pigments. This methodology proves to be highly applicable not only in cultural heritage documentation tasks, but in any scientific and industrial discipline where a correct registration of the color is required.