Joint Angle Estimation of a Tendon-Driven Soft Wearable Robot through a Tension and Stroke Measurement

Abstract

1. Introduction

2. Materials and Methods

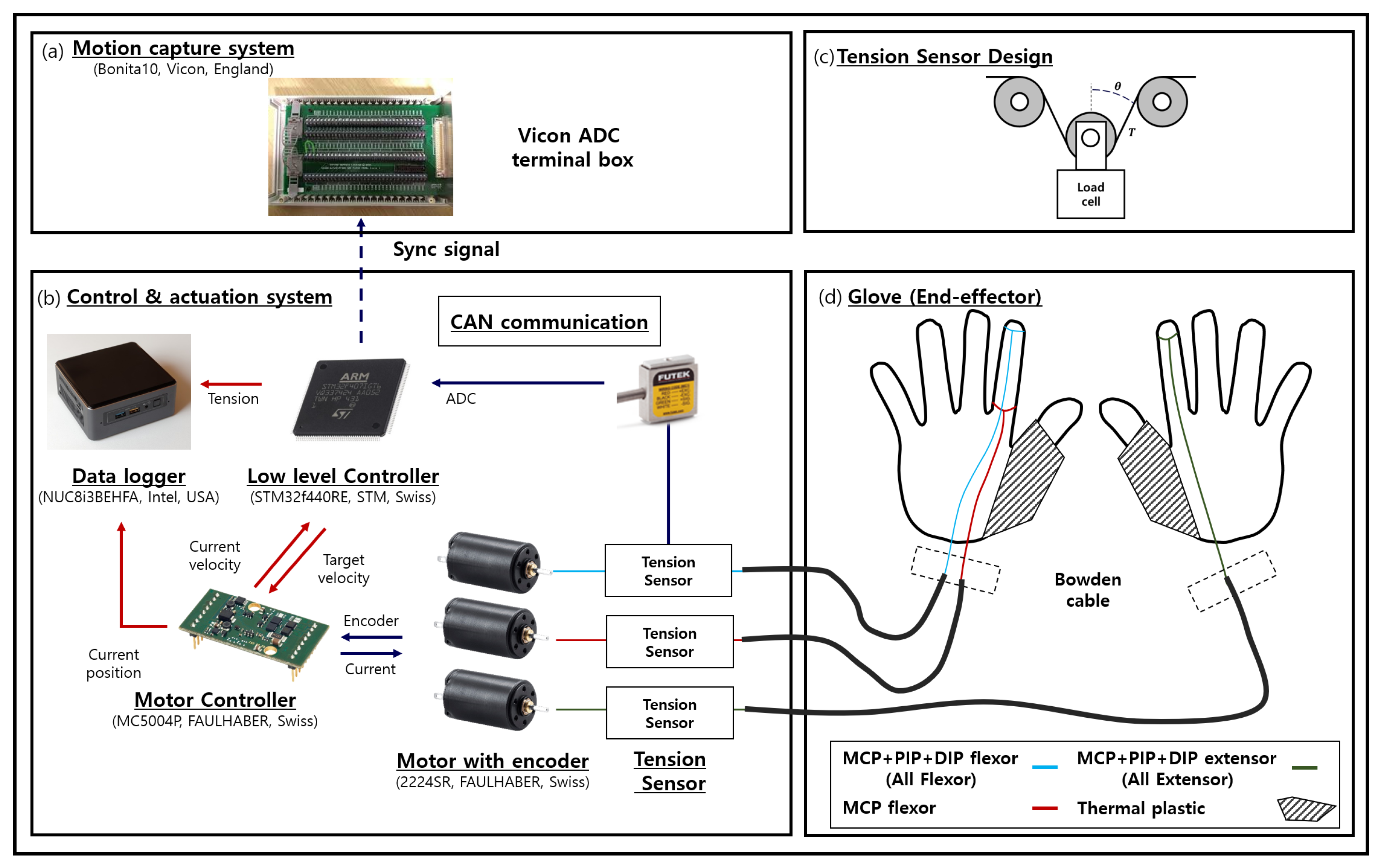

2.1. System Design

2.1.1. Glove Design

2.1.2. Actuation and Control System Design

2.2. Kinematic and Stiffness Parameter Estimation

2.2.1. Kinematic System Identification: The Relationship between the Joint Angle and the Fingertip Position

2.2.2. Stiffness Parameter Estimation: Relationship between Tension and Joint Angle

2.3. Experimental Methodology

3. Results

3.1. Kinematic System Identification: Estimation of the Relationship between Joint Angle and Fingertip Posture

3.2. Stiffness Parameter Estimation: Estimation of the Relationship between Tension and Joint Angle

3.3. Grasp Posture and Range of Motion

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MCP joint | Metacarpophalangeal joint |
| PIP joint | Proximal interphalangeal joint |
| DIP joint | Distal interphalangeal joint |
| PoE | Product of exponentials |
| A.F. | All Flexor |
| A.E. | All Extensor |
| M.F. | MCP Flexor |
| RMSE | Root mean square error |
| DOF | Degree of freedom |
| GPR | Gaussian Process Regression |

Appendix A. Anatomic Expressions of the Human Hand

| Joint | X: G | X: Y | X: RMSE | Y: G | Y: Y | Y: RMSE | Z: G | Z: Y | Z: RMSE | Length (mm) |
|---|---|---|---|---|---|---|---|---|---|---|
| MCP | −6.05 | 48.78 | 9.19 | −16.04 | 23.73 | 5.37 | −0.27 | −18.13 | 5.97 | 42.64 |
| PIP | −2.72 | 33.58 | 0.92 | 1.91 | −1.94 | 1.40 | −0.13 | −20.64 | 0.80 | 20.14 |
| DIP | −0.71 | 20.41 | 0.97 | 0.26 | 2.05 | 1.71 | 0.13 | −19.19 | 0.83 | 18.72 |

| | MCP max (rad) | MCP min (rad) | PIP max (rad) | PIP min (rad) | DIP max (rad) | DIP min (rad) | Workspace (mm²) |
|---|---|---|---|---|---|---|---|
| K.M. | - | - | - | - | - | - | 8712.30 |
| S.M. | 1.34 | −0.35 | 1.56 | 0.08 | 0.92 | 0.07 | 5884.10 |
| A.M. | 32.90 | 21.27 | 0.88 | 3.11 | 4.55 | 1.48 | 3770.80 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, B.; Ryu, J.; Cho, K.-J. Joint Angle Estimation of a Tendon-Driven Soft Wearable Robot through a Tension and Stroke Measurement. Sensors 2020, 20, 2852. https://doi.org/10.3390/s20102852
