YOLO-Tomato: A Robust Algorithm for Tomato Detection Based on YOLOv3

Abstract

1. Introduction

2. Theoretical Background

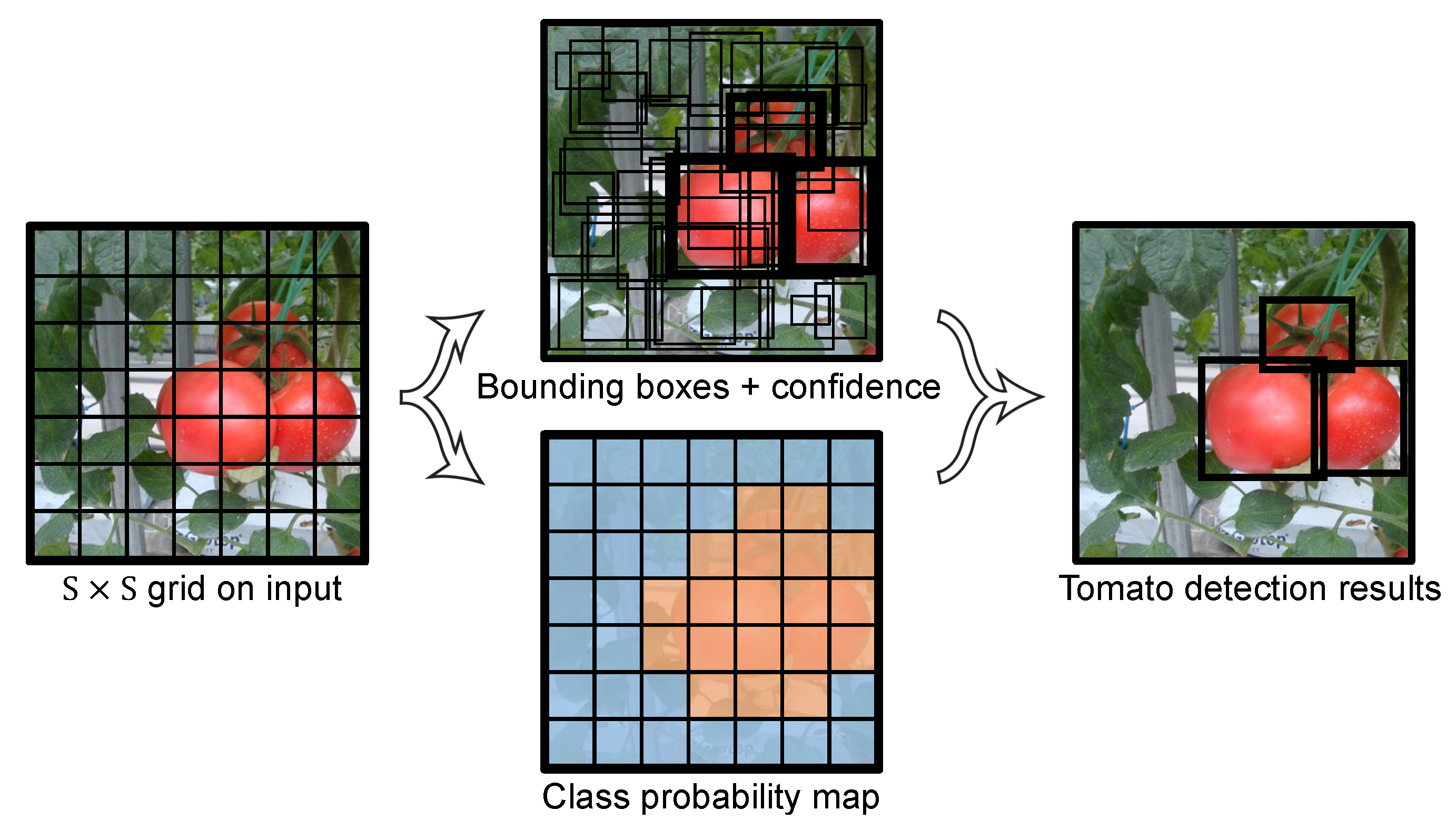

2.1. YOLO Series

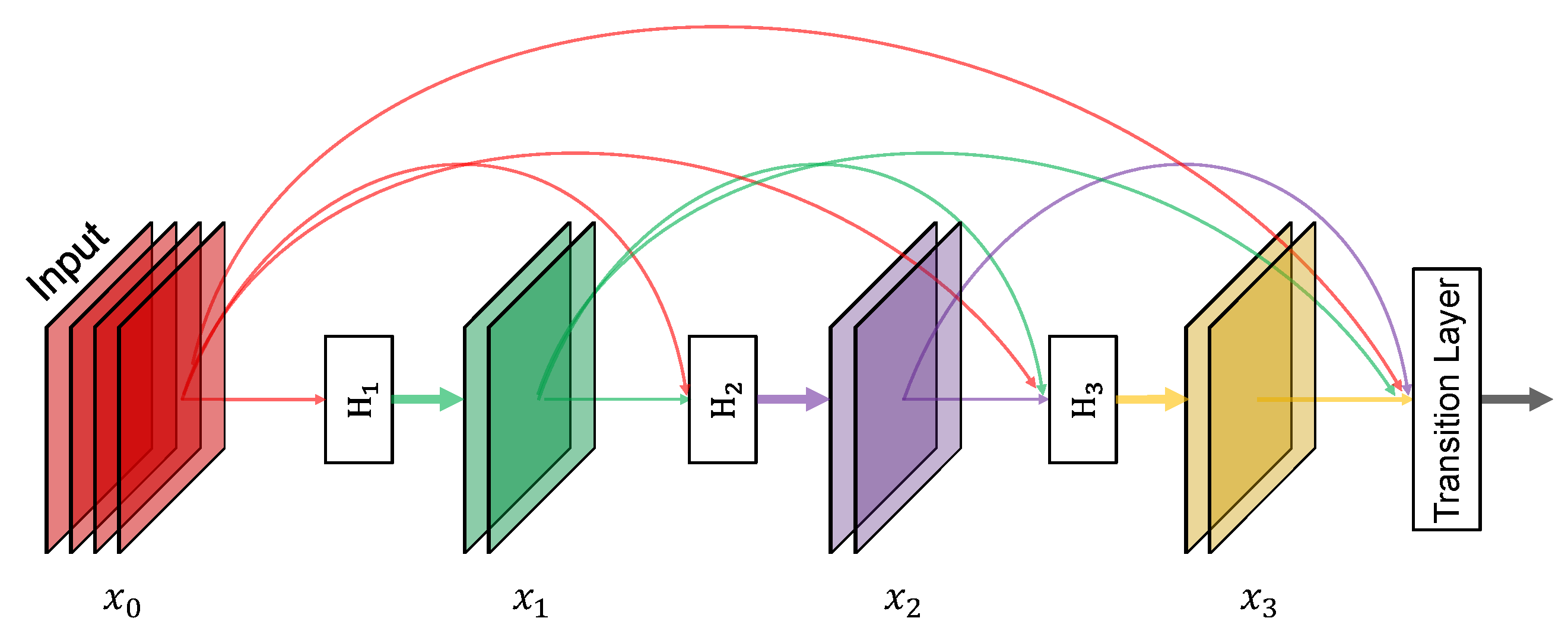

2.2. Densely Connected Block

2.3. The Non-Maximum Suppression for Merging Results

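| Algorithm 1. The pseudo code of NMS method |
|---|
| Input: $\mathcal{B}=\{b_1,\dots,b_N\}$, $\mathcal{S}=\{s_1,\dots,s_N\}$, $N_t$, where $\mathcal{B}$ is the list of initial detection boxes, $\mathcal{S}$ contains the corresponding detection confidences, and $N_t$ is the NMS threshold |
| Output: $\mathcal{D}$, the list of final detection boxes |
| 1: $\mathcal{D} \leftarrow \{\}$ |
| 2: **while** $\mathcal{B} \neq \emptyset$ **do** |
| 3: $m \leftarrow \arg\max \mathcal{S}$ |
| 4: $\mathcal{M} \leftarrow b_m$; $\mathcal{D} \leftarrow \mathcal{D} \cup \mathcal{M}$; $\mathcal{B} \leftarrow \mathcal{B} - \mathcal{M}$ |
| 5: **for** $b_i$ **in** $\mathcal{B}$ **do** |
| 6: **if** $\mathrm{IoU}(\mathcal{M}, b_i) \geq N_t$ **then** |
| 7: $\mathcal{B} \leftarrow \mathcal{B} - b_i$; $\mathcal{S} \leftarrow \mathcal{S} - s_i$ |
| 8: **end if** |
| 9: **end for** |
| 10: **end while** |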
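
Algorithm 1 translates almost line for line into Python. The sketch below is a minimal illustration, not the authors' code: it assumes rectangular boxes in `(x1, y1, x2, y2)` corner format and uses plain rectangular IoU by default. `rect_iou`, `nms`, and the pluggable `iou_fn` parameter are hypothetical names introduced here.

```python
from typing import Any, Callable, List, Sequence, Tuple

# Assumed box format for this sketch: (x1, y1, x2, y2) corners.
Rect = Tuple[float, float, float, float]


def rect_iou(a: Rect, b: Rect) -> float:
    """IoU of two axis-aligned rectangles."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Any], scores: Sequence[float], nt: float,
        iou_fn: Callable[[Any, Any], float] = rect_iou) -> List[int]:
    """Greedy NMS in the spirit of Algorithm 1; returns indices of kept boxes."""
    remaining = list(range(len(boxes)))  # B (S is indexed the same way)
    kept: List[int] = []                 # D
    while remaining:                     # step 2
        # Steps 3-4: take the highest-scoring box M and move it from B to D.
        m = max(remaining, key=lambda i: scores[i])
        kept.append(m)
        remaining.remove(m)
        # Steps 5-9: discard every box whose IoU with M reaches N_t.
        remaining = [i for i in remaining if iou_fn(boxes[m], boxes[i]) < nt]
    return kept


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, nt=0.5))  # [0, 2]
```

The first two boxes overlap with IoU 81/119 ≈ 0.68, so at $N_t = 0.5$ the lower-scoring one is suppressed. Passing a different `iou_fn` (for example one for circular boxes) changes the overlap measure without touching the greedy loop.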

3. Materials and Methods

3.1. Image Acquisition

3.2. Image Augmentation

- the entire original image

- scaling and cropping

3.3. The Proposed YOLO-Tomato Model

3.4. Dense Architecture for Better Feature Reuse

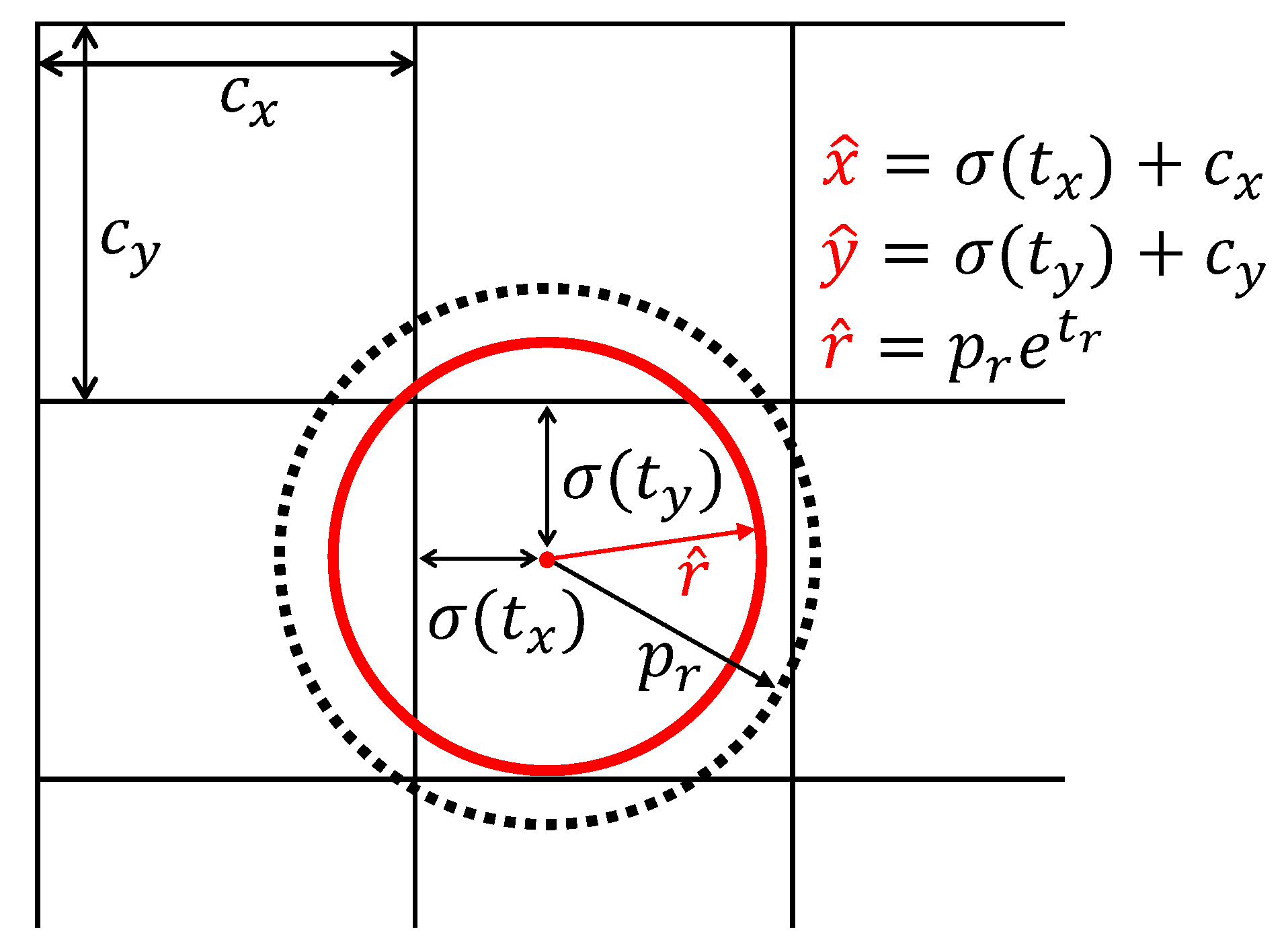

3.5. Circular Bounding Box

3.5.1. IoU of Two C-Bboxes
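
The derivation for this subsection is not reproduced here. As a hedged sketch, assuming each C-Bbox is described by its center $(x, y)$ and radius $r$, the IoU of two circles can be computed from the standard circle-circle intersection (lens) area. `CBox`, `circle_intersection`, and `circle_iou` are hypothetical names, and the closed form used is textbook geometry rather than the authors' own formulation.

```python
import math
from typing import Tuple

# Hypothetical C-Bbox representation for this sketch: (center_x, center_y, radius).
CBox = Tuple[float, float, float]


def circle_intersection(a: CBox, b: CBox) -> float:
    """Area of the overlap (lens) between two circles."""
    (x1, y1, r1), (x2, y2, r2) = a, b
    d = math.hypot(x2 - x1, y2 - y1)
    if d >= r1 + r2:          # disjoint or externally tangent
        return 0.0
    if d <= abs(r1 - r2):     # one circle lies entirely inside the other
        return math.pi * min(r1, r2) ** 2
    # Cosines of the half-angles subtended by the common chord at each center
    # (law of cosines), clamped to [-1, 1] to guard against rounding error.
    c1 = max(-1.0, min(1.0, (d * d + r1 * r1 - r2 * r2) / (2 * d * r1)))
    c2 = max(-1.0, min(1.0, (d * d + r2 * r2 - r1 * r1) / (2 * d * r2)))
    # Area of the kite formed by the two centers and the two intersection points.
    kite = 0.5 * math.sqrt((-d + r1 + r2) * (d + r1 - r2) * (d - r1 + r2) * (d + r1 + r2))
    # Two sectors minus the kite leaves exactly the lens.
    return r1 * r1 * math.acos(c1) + r2 * r2 * math.acos(c2) - kite


def circle_iou(a: CBox, b: CBox) -> float:
    """IoU of two circular boxes: intersection area over union area."""
    inter = circle_intersection(a, b)
    union = math.pi * (a[2] ** 2 + b[2] ** 2) - inter
    return inter / union if union > 0 else 0.0


print(round(circle_iou((0.0, 0.0, 1.0), (1.0, 0.0, 1.0)), 4))
```

Two unit circles whose centers are one radius apart overlap in a lens of area $2\pi/3 - \sqrt{3}/2$, giving an IoU of roughly 0.243.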

3.5.2. C-Bbox Location Prediction and Loss Function

3.6. Experimental Setup

4. Results and Discussion

4.1. Average IoU Comparison of C-Bbox and R-Bbox

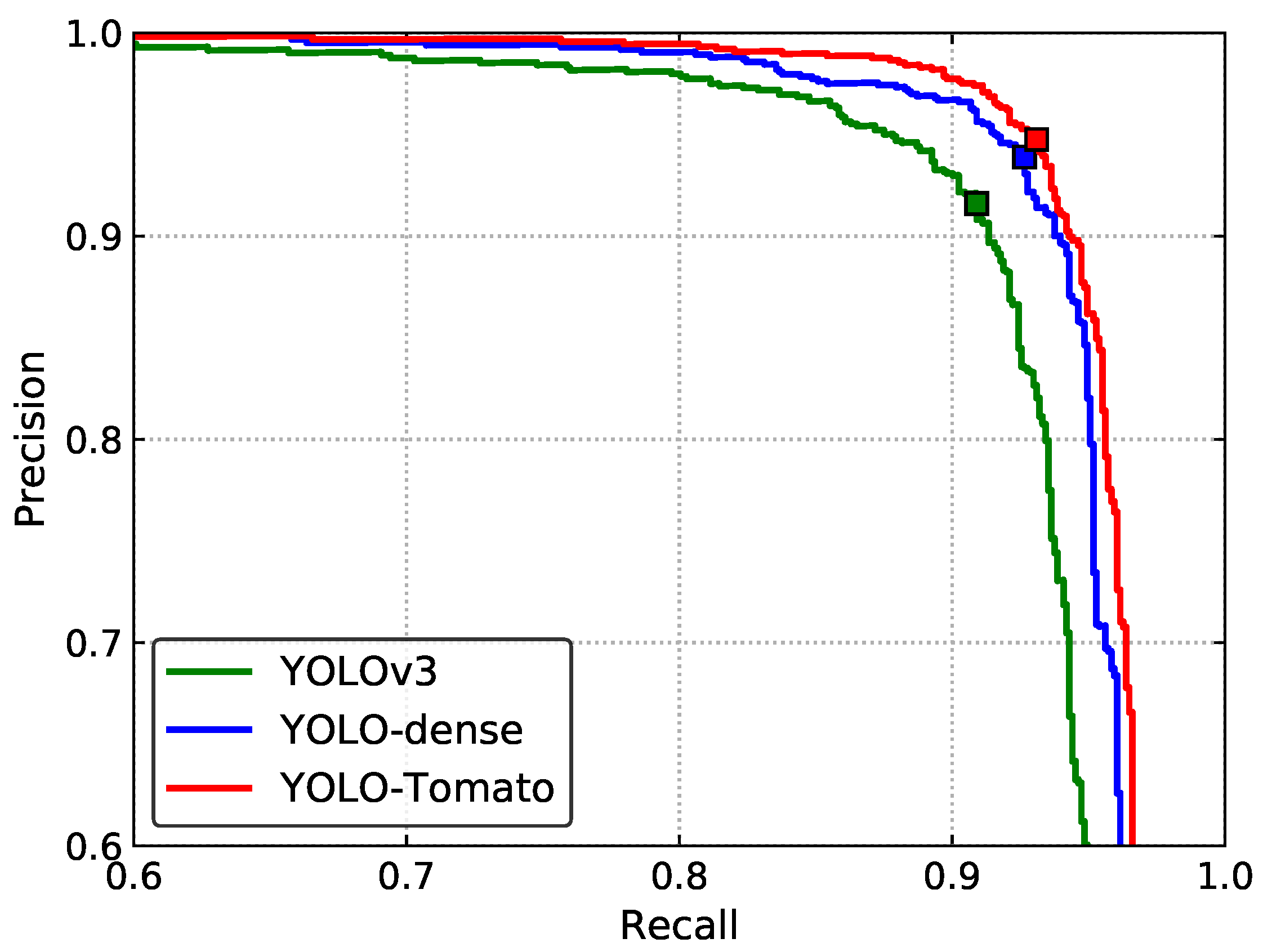

4.2. Ablation Study on Different Modifications

4.3. The Network Visualization

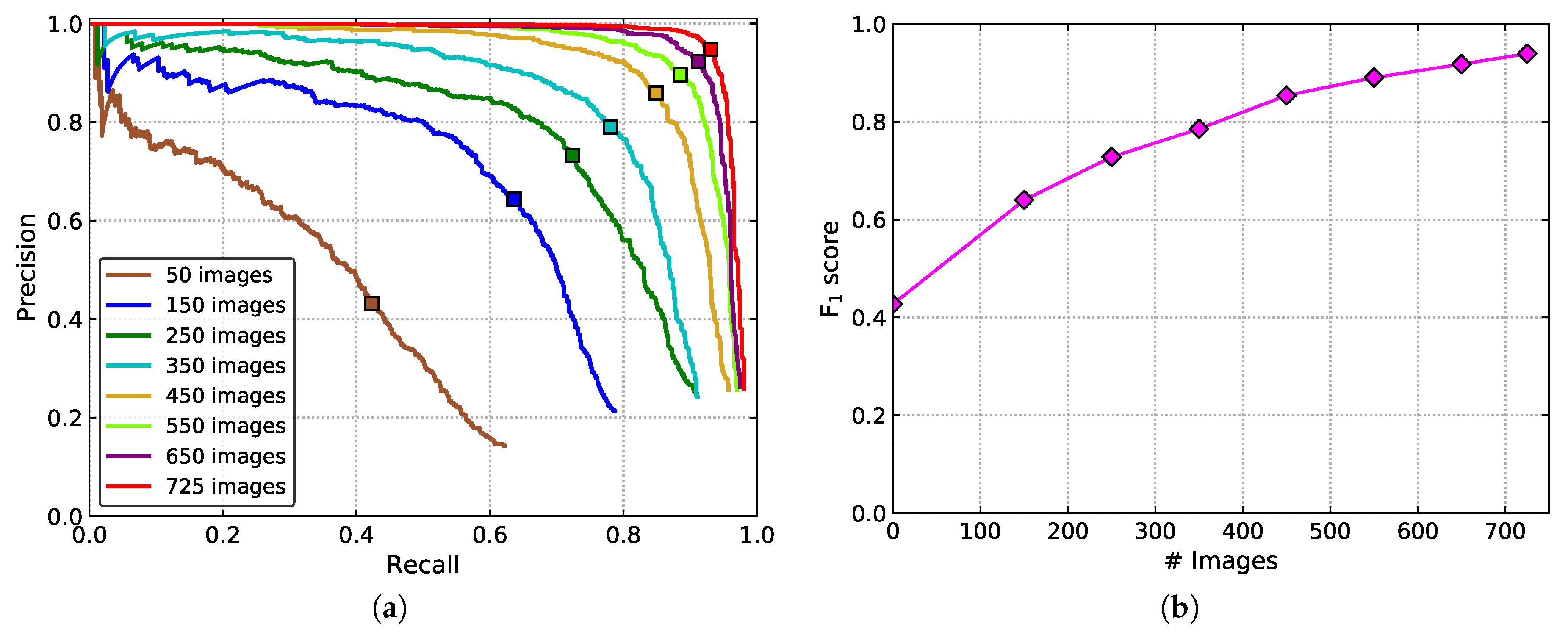

4.4. Impact of Training Dataset Size on Tomato Detection

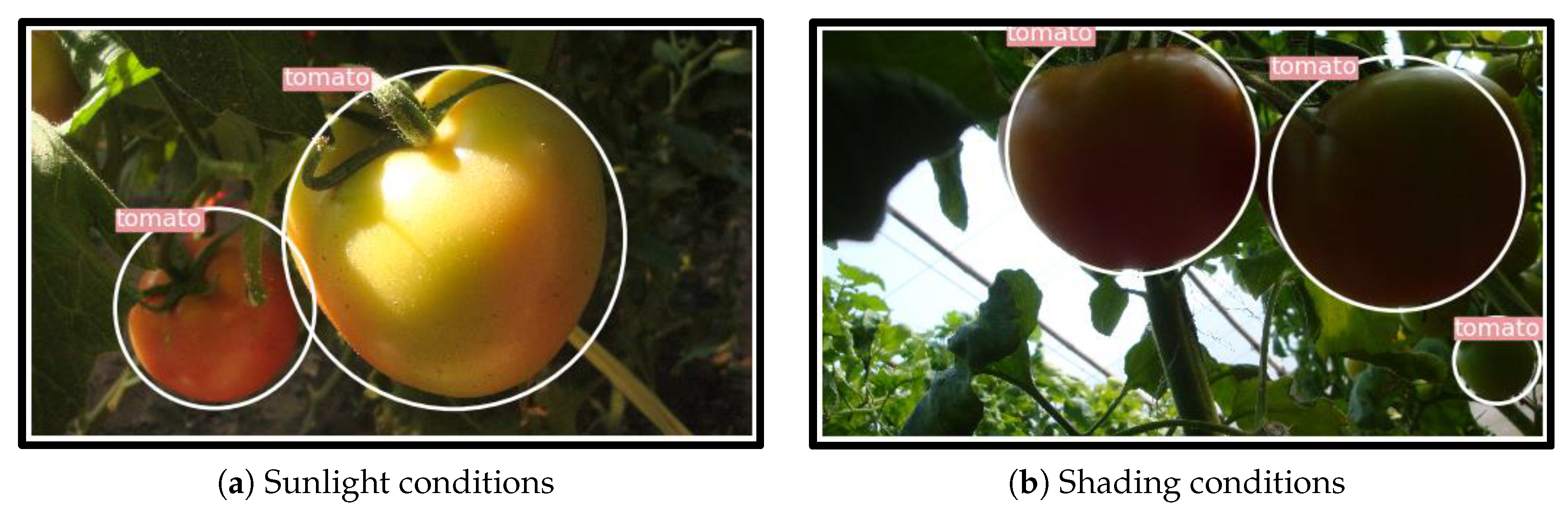

4.5. Performance of the Proposed Model under Different Lighting Conditions

4.6. Performance of the Proposed Model under Different Occlusion Conditions

4.7. Comparison of Different Algorithms

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| R-Bbox | Rectangular Bounding Box |
| C-Bbox | Circular Bounding Box |
| IoU | Intersection-over-Union |
| NMS | Non-Maximum Suppression |
| SVM | Support Vector Machine |
| DCNN | Deep Convolutional Neural Network |
| GT | Ground Truth |
| DenseNet | Dense Convolutional Network |
| TP | True Positive |
| FN | False Negative |
| FP | False Positive |
| P–R curve | Precision–Recall curve |
| AP | Average Precision |

References

1. Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. A review of key techniques of vision-based control for harvesting robot. Comput. Electron. Agric. 2016, 127, 311–323.
2. Gongal, A.; Amatya, S.; Karkee, M.; Zhang, Q.; Lewis, K. Sensors and systems for fruit detection and localization: A review. Comput. Electron. Agric. 2015, 116, 8–19.
3. Linker, R.; Cohen, O.; Naor, A. Determination of the number of green apples in RGB images recorded in orchards. Comput. Electron. Agric. 2012, 81, 45–57.
4. Wei, X.; Jia, K.; Lan, J.; Li, Y.; Zeng, Y.; Wang, C. Automatic method of fruit object extraction under complex agricultural background for vision system of fruit picking robot. Optik 2014, 125, 5684–5689.
5. Kelman, E.E.; Linker, R. Vision-based localisation of mature apples in tree images using convexity. Biosyst. Eng. 2014, 118, 174–185.
6. Payne, A.; Walsh, K.; Subedi, P.; Jarvis, D. Estimating mango crop yield using image analysis using fruit at ‘stone hardening’ stage and night time imaging. Comput. Electron. Agric. 2014, 100, 160–167.
7. Payne, A.B.; Walsh, K.B.; Subedi, P.; Jarvis, D. Estimation of mango crop yield using image analysis–segmentation method. Comput. Electron. Agric. 2013, 91, 57–64.
8. Zhao, Y.; Gong, L.; Huang, Y.; Liu, C. Robust tomato recognition for robotic harvesting using feature images fusion. Sensors 2016, 16, 173.
9. Qiang, L.; Jianrong, C.; Bin, L.; Lie, D.; Yajing, Z. Identification of fruit and branch in natural scenes for citrus harvesting robot using machine vision and support vector machine. Int. J. Agric. Biol. Eng. 2014, 7, 115–121.
10. Kurtulmus, F.; Lee, W.S.; Vardar, A. Immature peach detection in colour images acquired in natural illumination conditions using statistical classifiers and neural network. Precis. Agric. 2014, 15, 57–79.
11. Yamamoto, K.; Guo, W.; Yoshioka, Y.; Ninomiya, S. On plant detection of intact tomato fruits using image analysis and machine learning methods. Sensors 2014, 14, 12191–12206.
12. Zhao, Y.; Gong, L.; Zhou, B.; Huang, Y.; Liu, C. Detecting tomatoes in greenhouse scenes by combining AdaBoost classifier and colour analysis. Biosyst. Eng. 2016, 148, 127–137.
13. Luo, L.; Tang, Y.; Zou, X.; Wang, C.; Zhang, P.; Feng, W. Robust grape cluster detection in a vineyard by combining the AdaBoost framework and multiple color components. Sensors 2016, 16, 2098.
14. Liu, G.; Mao, S.; Kim, J.H. A mature-tomato detection algorithm using machine learning and color analysis. Sensors 2019, 19, 2023.
15. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the International Conference on Neural Information Processing Systems 25, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.
16. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.
17. Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.
18. Sa, I.; Ge, Z.; Dayoub, F.; Upcroft, B.; Perez, T.; McCool, C. DeepFruits: A fruit detection system using deep neural networks. Sensors 2016, 16, 1222.
19. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the International Conference on Neural Information Processing Systems 28, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.
20. Bargoti, S.; Underwood, J. Deep fruit detection in orchards. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 3 June 2017; pp. 3626–3633.
21. Rahnemoonfar, M.; Sheppard, C. Deep Count: Fruit counting based on deep simulated learning. Sensors 2017, 17, 905.
22. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 9 February 2017.
23. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27 June 2016; pp. 779–788.
24. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 7263–7271.
25. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.
26. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7 December 2015; pp. 1440–1448.
27. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 4700–4708.
28. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.
29. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 30 June 2016; pp. 770–778.
30. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 26 July 2017; pp. 2117–2125.
31. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, Ft. Lauderdale, FL, USA, 13 April 2011; pp. 315–323.
32. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.
33. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 1 November 2014; pp. 580–587.
34. Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.
35. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6 September 2014; pp. 740–755.
36. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.
37. Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83.

| Methods | Dense Architecture | C-Bbox | Recall (%) | Precision (%) | F1 (%) | AP (%) |
|---|---|---|---|---|---|---|
| YOLOv3 | | | 90.89 | 91.60 | 91.24 | 94.06 |
| YOLO-dense | ✓ | | 92.65 | 93.88 | 93.26 | 95.56 |
| YOLO-Tomato | ✓ | ✓ | 93.09 | 94.75 | 93.91 | 96.40 |
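
F1 is the harmonic mean of precision and recall, $F_1 = 2PR/(P+R)$. Recomputing it from the precision and recall columns of the ablation table reproduces the reported F1 values to two decimals, which makes a quick consistency check on the table. `f1_score` is a hypothetical helper name used only for this sketch.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both in % or both in [0, 1])."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs copied from the ablation table.
ablation = {
    "YOLOv3": (91.60, 90.89),
    "YOLO-dense": (93.88, 92.65),
    "YOLO-Tomato": (94.75, 93.09),
}
for name, (p, r) in ablation.items():
    print(f"{name}: F1 = {f1_score(p, r):.2f}%")
```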

| Dataset Size | Recall (%) | Precision (%) | F1 (%) | AP (%) |
|---|---|---|---|---|
| 50 | 42.32 | 43.13 | 42.72 | 34.37 |
| 150 | 63.59 | 64.37 | 63.98 | 60.58 |
| 250 | 72.37 | 73.25 | 72.81 | 74.61 |
| 350 | 78.07 | 79.02 | 78.54 | 81.51 |
| 450 | 84.87 | 85.90 | 85.38 | 89.94 |
| 550 | 88.48 | 89.56 | 89.02 | 93.57 |
| 650 | 91.23 | 92.34 | 91.78 | 95.07 |
| 725 | 93.09 | 94.75 | 93.91 | 96.40 |

| Illumination | Tomato Count | Correctly Identified (Amount) | Correctly Identified (Rate, %) | Falsely Identified (Amount) | Falsely Identified (Rate, %) | Missed (Amount) | Missed (Rate, %) |
|---|---|---|---|---|---|---|---|
| Sunlight | 487 | 454 | 93.22 | 25 | 5.22 | 33 | 6.78 |
| Shading | 425 | 395 | 92.94 | 22 | 5.28 | 30 | 7.06 |

| Occlusion Condition | Tomato Count | Correctly Identified (Amount) | Correctly Identified (Rate, %) | Falsely Identified (Amount) | Falsely Identified (Rate, %) | Missed (Amount) | Missed (Rate, %) |
|---|---|---|---|---|---|---|---|
| Slight case | 609 | 576 | 94.58 | 22 | 3.68 | 33 | 5.42 |
| Severe case | 303 | 273 | 90.10 | 25 | 8.39 | 30 | 9.90 |
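
The rates in the illumination and occlusion tables can be recomputed from the counts. Working backwards from the published numbers, the correct and missed rates are divided by the tomato count, while the falsely identified rate matches a division by the number of detections (correct + false). These denominators are inferred from the table values and are not stated in this section. `detection_rates` is a hypothetical helper name.

```python
from typing import Dict, Tuple


def detection_rates(total: int, tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (correct %, falsely identified %, missed %), rounded to 2 decimals.

    Denominators are inferred from the tables: the correct and missed rates use
    the tomato count; the false rate uses the number of detections (tp + fp).
    """
    if tp + fn != total:
        raise ValueError("correct + missed should equal the tomato count")
    correct_rate = 100.0 * tp / total    # equivalent to recall
    false_rate = 100.0 * fp / (tp + fp)  # equivalent to 100 - precision
    missed_rate = 100.0 * fn / total     # equivalent to 100 - recall
    return round(correct_rate, 2), round(false_rate, 2), round(missed_rate, 2)


# (tomato count, correctly identified, falsely identified, missed) from the tables.
conditions: Dict[str, Tuple[int, int, int, int]] = {
    "Sunlight": (487, 454, 25, 33),
    "Shading": (425, 395, 22, 30),
    "Slight occlusion": (609, 576, 22, 33),
    "Severe occlusion": (303, 273, 25, 30),
}
for name, counts in conditions.items():
    print(name, detection_rates(*counts))
```

Both tables partition the same 912 tomatoes (849 correct, 47 false, 63 missed). Pooling either pair of rows gives a correct rate of 93.09% and a false rate of 5.25%, which matches the recall (93.09%) and precision (94.75%) reported for YOLO-Tomato.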

| Methods | Recall (%) | Precision (%) | F1 (%) | AP (%) | Time (ms) |
|---|---|---|---|---|---|
| YOLOv2 [24] | 86.18 | 87.24 | 86.71 | 88.46 | 30 |
| YOLOv3 [25] | 90.89 | 91.60 | 91.24 | 94.06 | 45 |
| Faster R-CNN [19] | 91.78 | 92.89 | 92.33 | 94.37 | 231 |
| YOLO-Tomato | 93.09 | 94.75 | 93.91 | 96.40 | 54 |
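
The AP columns summarize each detector's precision–recall curve. The evaluation protocol is not described in this section, so the sketch below assumes PASCAL VOC-style all-point interpolation, one common definition, and should not be read as the authors' exact procedure. `average_precision` is a hypothetical name.

```python
from typing import List, Sequence


def average_precision(recall: Sequence[float], precision: Sequence[float]) -> float:
    """Area under the interpolated precision-recall curve.

    Assumes VOC-style all-point interpolation; `recall` must be sorted in
    ascending order and precision[i] is the precision measured at recall[i].
    """
    # Pad the curve so it spans recall 0 to 1.
    mrec: List[float] = [0.0] + list(recall) + [1.0]
    mpre: List[float] = [0.0] + list(precision) + [0.0]
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the rectangles under the step curve; zero-width steps add nothing.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

In practice, the recall and precision lists come from sorting all detections by confidence and accumulating TP and FP counts against the ground truth.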

| | YOLOv2 | YOLOv3 | Faster R-CNN | YOLO-Tomato |
|---|---|---|---|---|
| YOLOv2 | – | 0.000 | 0.000 | 0.000 |
| YOLOv3 | 0.000 | – | 0.047 | 0.000 |
| Faster R-CNN | 0.000 | 0.047 | – | 0.000 |
| YOLO-Tomato | 0.000 | 0.000 | 0.000 | – |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, G.; Nouaze, J.C.; Touko Mbouembe, P.L.; Kim, J.H. YOLO-Tomato: A Robust Algorithm for Tomato Detection Based on YOLOv3. Sensors 2020, 20, 2145. https://doi.org/10.3390/s20072145
