Modular Approach for Odometry Localization Method for Vehicles with Increased Maneuverability

Abstract

1. Introduction

1.1. Background

1.2. State of the Art

2. Odometry Localization Method

2.1. Functionality of a State Estimator

2.2. Sensors

2.3. Vehicle Models

2.3.1. Motion Model

2.3.2. Complementary Models

2.4. Design of Odometry Localization Method Using Modular Approach

- Round 1:

- Round 2:

2.4.1. Odometry Basic Version

- Round 1:

- Round 2:

2.4.2. Odometry Version 111

2.4.3. Odometry Version 212

2.4.4. Odometry Version 232

2.4.5. Odometry Version 213

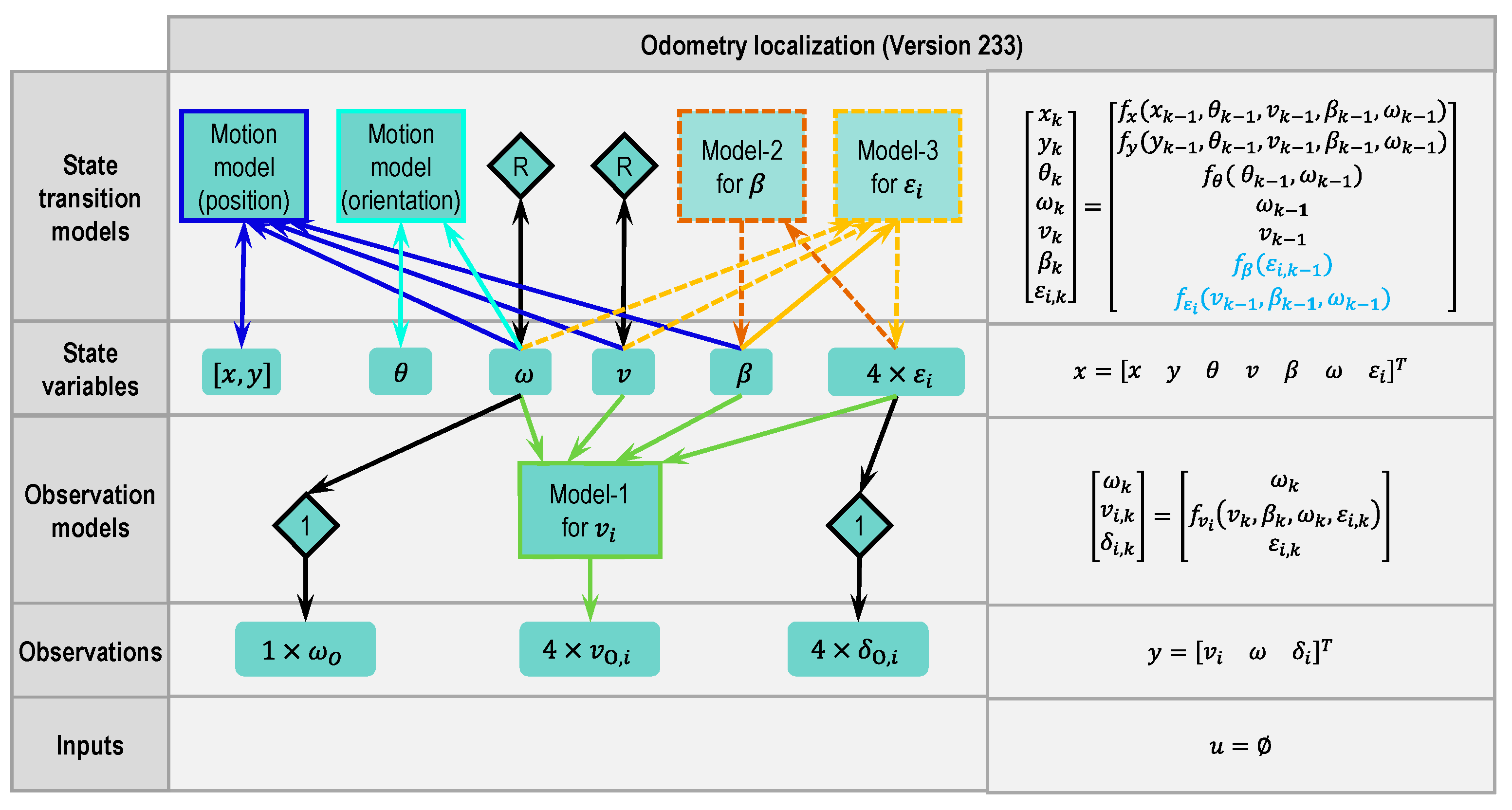

2.4.6. Odometry Version 233

2.4.7. Comparison of Odometry Versions

3. Validation

3.1. Test Vehicle

3.2. Driving Maneuvers

3.3. Validation Environments

3.4. Robustness Analysis

3.5. Evaluation Criteria (EC)

- EC-1: End position and orientation error in global coordinate system (with superscript “E”):

- EC-2: Maximum position and orientation error in global coordinate system while driving:

- EC-3: Average error of position change and orientation change:
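The three criteria can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the exact formulas are not given in this text, so position error is taken here as the Euclidean distance in the global frame, orientation error as the wrapped absolute yaw difference, and EC-3 as the mean error of the per-step pose change. The function and variable names (`evaluation_criteria`, `est`, `ref`) are hypothetical.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def evaluation_criteria(est, ref):
    """Compute (EC-1, EC-2, EC-3) for two trajectories.

    est, ref: equally long lists of (x, y, yaw) poses in the global frame.
    Each criterion is returned as a (position_error, yaw_error) pair.
    """
    if len(est) != len(ref) or len(est) < 2:
        raise ValueError("need two trajectories of equal length >= 2")

    pos_err = [math.hypot(e[0] - r[0], e[1] - r[1]) for e, r in zip(est, ref)]
    yaw_err = [abs(wrap_angle(e[2] - r[2])) for e, r in zip(est, ref)]

    # EC-1: error at the end of the maneuver.
    ec1 = (pos_err[-1], yaw_err[-1])
    # EC-2: largest error observed while driving.
    ec2 = (max(pos_err), max(yaw_err))

    # EC-3 (assumed form): mean error of the pose change between samples.
    d_pos, d_yaw = [], []
    for k in range(1, len(est)):
        dex, dey = est[k][0] - est[k - 1][0], est[k][1] - est[k - 1][1]
        drx, dry = ref[k][0] - ref[k - 1][0], ref[k][1] - ref[k - 1][1]
        d_pos.append(math.hypot(dex - drx, dey - dry))
        d_yaw.append(abs(wrap_angle((est[k][2] - est[k - 1][2])
                                    - (ref[k][2] - ref[k - 1][2]))))
    ec3 = (sum(d_pos) / len(d_pos), sum(d_yaw) / len(d_yaw))
    return ec1, ec2, ec3
```

Under these assumptions, EC-1 and EC-2 penalize accumulated drift, while EC-3 measures how well each individual step is estimated and is therefore insensitive to errors made earlier in the maneuver.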

4. Results and Discussion

4.1. Simulation Results

4.2. Real Driving Results

5. Conclusions

- In theory, the more models that constrain the state variables, the higher the estimation accuracy. In practice, the accuracy also depends on the quality and effective range of each model and on the quality of the sensors;

- Whether model-1 and model-2 take the state variables or the sensor signals as inputs has no effect on the estimation accuracy. If the state variables have the state transition model-3, however, the odometry remains robust when sensors are defective;

- The versions using models 1 and 2, models 1 and 3, and models 1, 2 and 3 have almost the same accuracy. Versions 213 and 233 are the two most robust.

- The modular approach simplifies the design of a state estimator. The elements of a state estimator can be derived directly from the diagram of this method;

- The contribution of a given model to accuracy and robustness can be predicted using this approach;

- New models or new sensors can be integrated easily, and their possible effects can be analyzed;

- It provides a clear overview of all models and sensors used, which helps users manage different estimators.
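The first conclusion, that each additional model constraining a state variable raises accuracy in theory while model quality limits the gain in practice, can be illustrated with a scalar Kalman measurement update. This is a minimal sketch and not the estimator used in the article: the state is a single number, each "model" is reduced to a direct noisy observation of it, and all numeric values are made up for illustration.

```python
def kalman_update(mean, var, z, r):
    """Scalar Kalman measurement update for an observation z = x + noise.

    mean, var: prior estimate and its variance.
    z, r: observed value and the variance of its noise.
    Returns the posterior (mean, variance).
    """
    gain = var / (var + r)
    return mean + gain * (z - mean), (1.0 - gain) * var


prior_mean, prior_var = 0.0, 1.0

# One model constrains the state variable.
m1, v1 = kalman_update(prior_mean, prior_var, z=1.0, r=0.5)

# A second, equally good model constrains it further.
m2, v2 = kalman_update(m1, v1, z=1.2, r=0.5)

# A second model of poor quality (large noise variance) helps very little.
m2_poor, v2_poor = kalman_update(m1, v1, z=1.2, r=50.0)

print(prior_var, v1, v2, v2_poor)
```

The posterior variance never increases with an extra observation, but the reduction shrinks as the noise variance `r` of the added model grows, which is the practical caveat stated in the first bullet.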

6. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Reference Position of Real Driving Tests

Appendix B. Nomenclature

| Symbol | Description |
|---|---|
| | Vehicle position in world coordinate system |
| | Yaw angle of vehicle |
| | Vehicle velocity |
| | Wheel velocity |
| | Wheel velocity along vehicle longitudinal axis |
| | Wheel velocity along vehicle lateral direction |
| | Wheel velocity along vehicle lateral direction |
| | Side slip angle |
| | Yaw rate |
| | Wheel steering angle |
| | Wheel velocity angle |
| | Distance between tire–road contact point and vehicle center of gravity in vehicle longitudinal direction |
| | Distance between tire–road contact point and vehicle center of gravity in vehicle lateral direction |
| | Sample time |

| Subscript | Description |
|---|---|
| | Last time step |
| | Actual time step |
| | Sensor signal used as observation |
| | Sensor signal used as input value |


| Position | Sensor | Price/Unit |
|---|---|---|
| Wheel steering angle sensors | Bosch LWS 5.6.3 | 80 EUR |
| Wheel speed sensors | Integrated Speed Sensor of Traction Motor 1 | - |
| Yaw rate sensor | UM7 IMU | 150 EUR |

| Version | Model-1: input used | Model-2 used? | Model-2: input used | Model-3 used? | Models used |
|---|---|---|---|---|---|
| 111 | | No | - | - | Model 1 |
| 112 | | No | - | No | Model 1 |
| 212 | | No | - | No | Model 1 |
| 121 | | Yes | | - | Model 1 and 2 |
| 122 | | Yes | | No | Model 1 and 2 |
| 132 | | Yes | | No | Model 1 and 2 |
| 222 | | Yes | | No | Model 1 and 2 |
| 232 | | Yes | | No | Model 1 and 2 |
| 113 | | No | - | Yes | Model 1 and 3 |
| 213 | | No | - | Yes | Model 1 and 3 |
| 123 | | Yes | | Yes | Model 1, 2 and 3 |
| 133 | | Yes | | Yes | Model 1, 2 and 3 |
| 223 | | Yes | | Yes | Model 1, 2 and 3 |
| 233 | | Yes | | Yes | Model 1, 2 and 3 |
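The grouping of the version codes by the models they use can be read off the digits. The sketch below encodes only the pattern visible in the rows of the version table: a second digit of 2 or 3 coincides with "Model-2 used? Yes", and a third digit of 3 coincides with "Model-3 used? Yes". That this holds as a general rule is an assumption inferred from the listed rows, and the function name `models_used` is hypothetical.

```python
def models_used(version):
    """Return the model numbers used by a three-digit version code.

    The digit rules are inferred from the listed versions only:
    every version uses model-1, a second digit >= 2 marks model-2,
    and a third digit of 3 marks model-3.
    """
    if len(version) != 3 or not version.isdigit():
        raise ValueError("expected a three-digit version code such as '213'")
    second, third = int(version[1]), int(version[2])
    models = [1]
    if second >= 2:
        models.append(2)
    if third == 3:
        models.append(3)
    return tuple(models)


for code in ("111", "232", "213", "233"):
    print(code, models_used(code))
```

For the four versions shown, this yields models (1), (1, 2), (1, 3) and (1, 2, 3) respectively, matching the table.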

| Case No. | Failed Sensors |
|---|---|
| 1 | No sensor failed |
| 2 | Wheel speed sensor FL |
| 3 | Wheel speed sensor FR |
| 4 | Wheel speed sensor RL |
| 5 | Wheel speed sensor RR |
| 6 | Yaw rate sensor |
| 7 | Wheel steering sensor FL |
| 8 | Wheel steering sensor FR |
| 9 | Wheel steering sensor RL |
| 10 | Wheel steering sensor RR |
| 11 | Wheel speed sensor FL + Wheel steering sensor FL |
| 12 | Wheel speed sensor FR + Wheel steering sensor FR |
| 13 | Wheel speed sensor RL + Wheel steering sensor RL |
| 14 | Wheel speed sensor RR + Wheel steering sensor RR |
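For an automated robustness run, the 14 cases in the table above can be generated rather than typed by hand. The following sketch builds a mapping from case number to the set of failed sensors. The sensor identifiers (`wheel_speed_FL`, `yaw_rate`, and so on) are hypothetical names chosen for this example, and how a failed sensor is represented in the estimator is not covered here.

```python
WHEELS = ("FL", "FR", "RL", "RR")


def failure_cases():
    """Return {case number: frozenset of failed sensor names} for cases 1-14."""
    cases = {1: frozenset()}  # case 1: no sensor failed
    n = 2
    for w in WHEELS:  # cases 2-5: one wheel speed sensor
        cases[n] = frozenset({f"wheel_speed_{w}"})
        n += 1
    cases[n] = frozenset({"yaw_rate"})  # case 6: yaw rate sensor
    n += 1
    for w in WHEELS:  # cases 7-10: one wheel steering sensor
        cases[n] = frozenset({f"wheel_steering_{w}"})
        n += 1
    for w in WHEELS:  # cases 11-14: both sensors of one wheel
        cases[n] = frozenset({f"wheel_speed_{w}", f"wheel_steering_{w}"})
        n += 1
    return cases


for number, failed in failure_cases().items():
    print(number, sorted(failed) or "no sensor failed")
```

Each odometry version can then be evaluated once per case by iterating over this mapping.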


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Han, C.; Frey, M.; Gauterin, F. Modular Approach for Odometry Localization Method for Vehicles with Increased Maneuverability. Sensors 2021, 21, 79. https://doi.org/10.3390/s21010079
