Learning Polynomial-Based Separable Convolution for 3D Point Cloud Analysis

Abstract

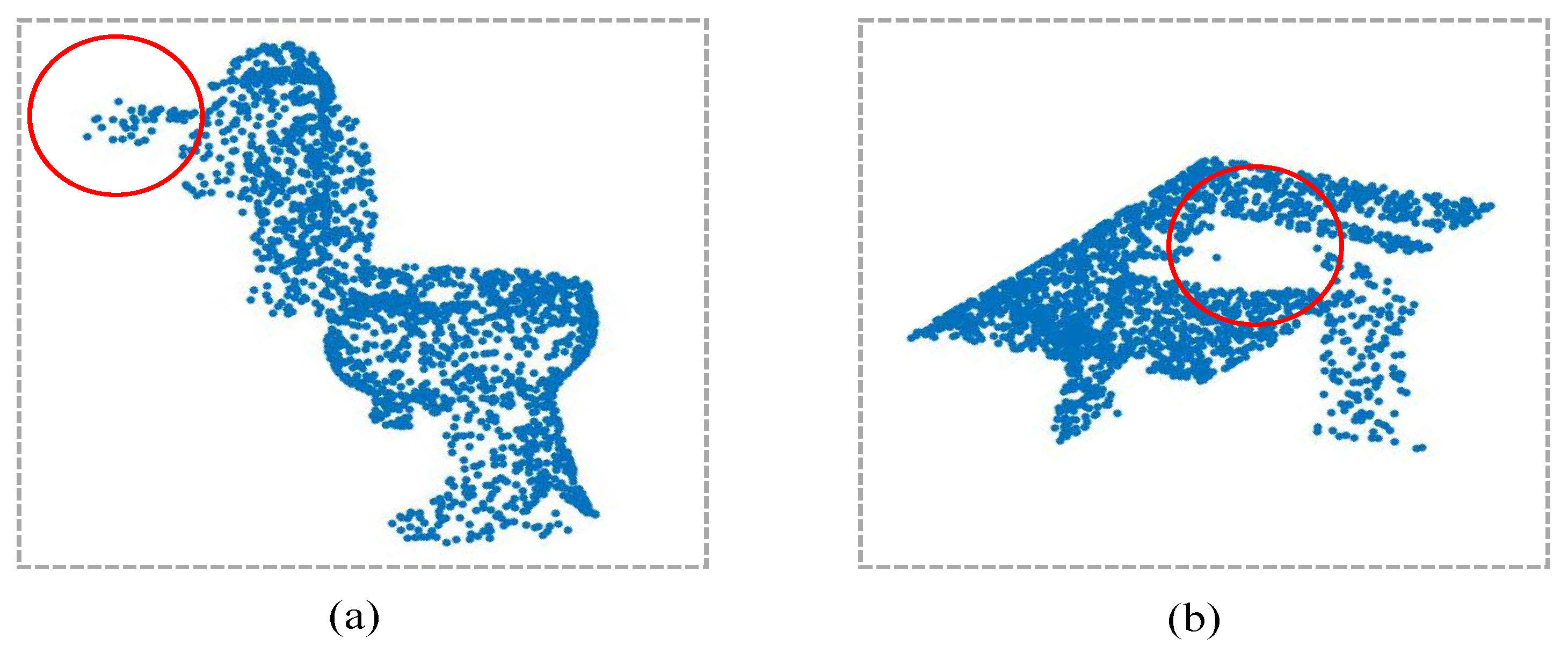

1. Introduction

2. Related Work

2.1. Convolution on 3D Point Cloud

2.2. Separable Convolution

3. Method

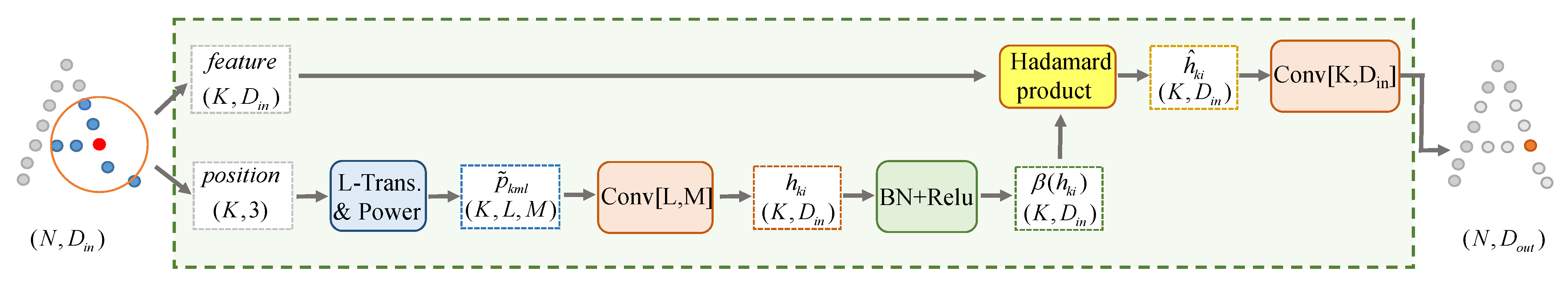

3.1. PSConv

3.2. Separable Formulation of PSConv

3.3. PSNet

4. Results

4.1. Datasets and Evaluation Methods

4.2. Compared Methods

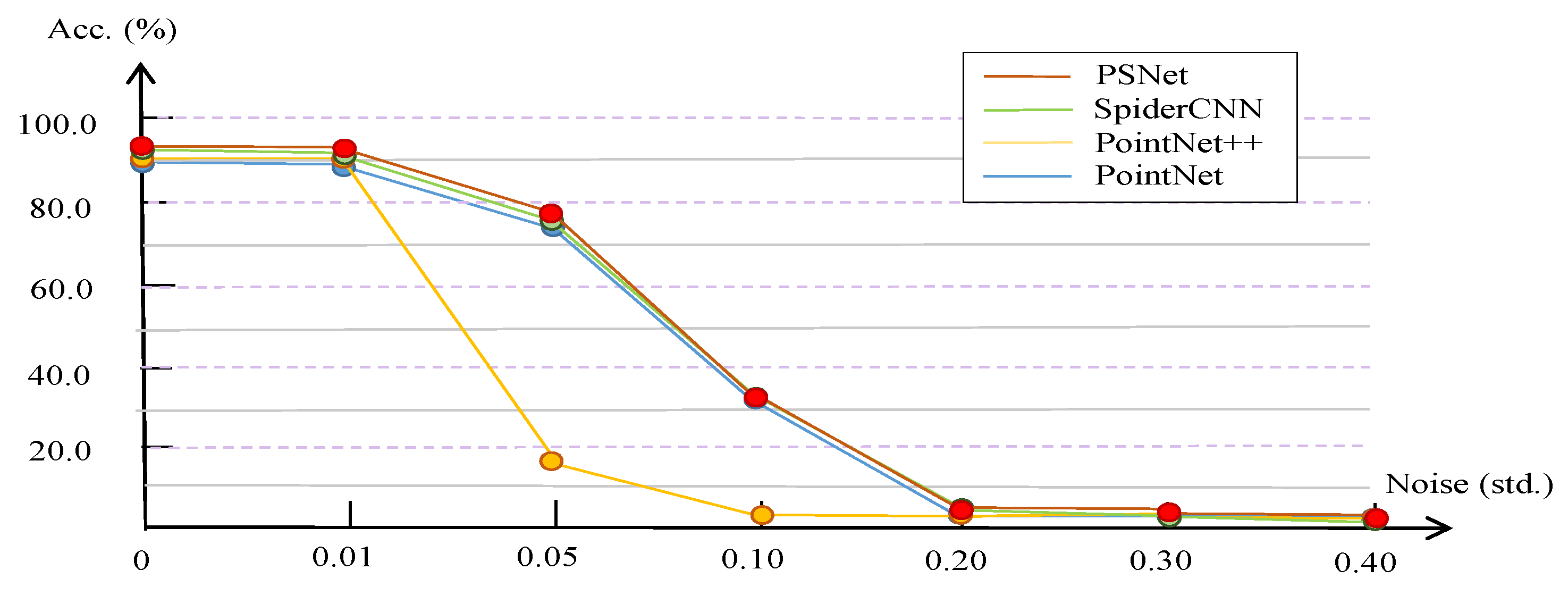

4.3. Shape Classification on ModelNet40

4.4. Shape Classification on ScanObjectNN

4.5. Shape Segmentation on ShapeNet Part

4.6. Ablation Study

4.7. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

| Block | Layers | Parameter Size (Classification) | Parameter Size (Segmentation) |
|---|---|---|---|
| Stage 1 | MLP → Max-pooling → PSConv1 → PSConv2 → PSConv3 → PSConv4 → Concatenate | MLP:(32, 32, 64); PSConv1:(64); PSConv2:(64); PSConv3:(64); PSConv4:(64) | MLP:(32, 32, 64); PSConv1:(64); PSConv2:(64); PSConv3:(64); PSConv4:(64) |
| Stage 2 | MLP → Max-pooling → PSConv1 → PSConv2 → PSConv3 → PSConv4 → Concatenate | MLP:(64, 64, 128); PSConv1:(128); PSConv2:(128); PSConv3:(128); PSConv4:(128) | MLP:(64, 64, 128); PSConv1:(128); PSConv2:(128); PSConv3:(128); PSConv4:(128) |
| MLP* | MLP | MLP:(256, 512, 1024) | MLP:(256, 512, 1024) |
| FP | FI1 → MLP1 → FI2 → MLP2 → FI3 → MLP3 | N/A | MLP1:(256, 256); MLP2:(256, 128); MLP3:(128, 128) |
| MLP** | MLP | MLP:(512, 256, 40) | MLP:(512, 256, 128, 50) |
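The layer widths above can be turned into rough parameter counts. The sketch below uses a hypothetical helper, `shared_mlp_params` (not from the paper), that counts the weights and biases of a shared MLP, i.e. the same fully connected layers applied to every point, and ignores any normalization layers. It also assumes, without support from the table, that the classification head MLP** receives the 1024-d output of MLP* after global pooling. The final widths correspond to the label sets of the benchmarks: 40 classes for ModelNet40 and 50 part labels for ShapeNet Part.

```python
# Hypothetical helper: parameter count of a shared MLP (fully connected layers
# with bias, applied identically to every point), given its input width and
# the layer widths listed in the table, e.g. MLP:(512, 256, 40).
# Normalization layers, if any, are not counted.
def shared_mlp_params(in_dim, widths):
    total = 0
    prev = in_dim
    for w in widths:
        total += prev * w + w  # weight matrix (prev x w) plus bias vector (w)
        prev = w
    return total


# Assumption (not stated in the table): MLP** in the classification branch
# takes a 1024-d global feature, the output width of MLP*:(256, 512, 1024).
cls_head = shared_mlp_params(1024, (512, 256, 40))
print(cls_head)  # 666408
```

Changing `in_dim` is all that is needed to try a different assumption about what feeds each block.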

Appendix A.2

| Method | mACC | Bag | Bin | Box | Cabinet | Chair | Desk | Display | Door | Shelf | Table | Bed | Pillow | Sink | Sofa | Toilet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet [4] | 74.4 | 47.1 | 80.0 | 35.7 | 80.0 | 93.6 | 86.7 | 73.8 | 97.6 | 81.6 | 88.9 | 59.1 | 76.2 | 54.2 | 85.7 | 76.5 |
| PointNet++ [5] | 82.1 | 70.6 | 90.0 | 35.7 | 84.0 | 96.2 | 83.3 | 81.0 | 88.1 | 83.7 | 87.0 | 86.4 | 85.7 | 79.2 | 92.9 | 88.2 |
| SpiderCNN [6] | 77.4 | 52.9 | 80.0 | 35.7 | 64.0 | 96.2 | 73.3 | 83.3 | 97.6 | 81.6 | 83.3 | 77.3 | 90.5 | 75.0 | 88.1 | 82.4 |
| PointCNN [7] | 83.3 | 64.7 | 90.0 | 46.4 | 82.7 | 98.7 | 83.3 | 85.7 | 88.1 | 85.7 | 88.9 | 86.4 | 90.5 | 70.8 | 92.9 | 94.1 |
| DGCNN [14] | 84.0 | 76.5 | 90.0 | 64.3 | 80.0 | 98.7 | 80.0 | 85.7 | 88.1 | 91.8 | 87.0 | 90.9 | 90.5 | 70.8 | 95.2 | 70.6 |
| 3DmFV [25] | 68.9 | 41.2 | 82.5 | 32.1 | 66.7 | 98.7 | 53.3 | 71.4 | 90.5 | 73.5 | 75.9 | 59.1 | 81.0 | 58.3 | 90.5 | 58.8 |
| Proposed | 84.3 | 52.9 | 85.0 | 75.0 | 85.3 | 100.0 | 80.0 | 85.7 | 95.2 | 77.6 | 88.9 | 86.4 | 85.7 | 79.2 | 95.2 | 91.7 |

| Method | mACC | Bag | Bin | Box | Cabinet | Chair | Desk | Display | Door | Shelf | Table | Bed | Pillow | Sink | Sofa | Toilet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet [4] | 69.4 | 47.1 | 65.0 | 25.0 | 65.3 | 93.6 | 50.0 | 76.2 | 95.2 | 87.8 | 72.2 | 77.3 | 76.2 | 62.5 | 83.3 | 64.7 |
| PointNet++ [5] | 79.9 | 52.9 | 90.0 | 39.3 | 80.0 | 96.2 | 80.0 | 78.6 | 90.5 | 83.7 | 74.1 | 90.9 | 85.7 | 87.5 | 92.9 | 76.5 |
| SpiderCNN [6] | 72.4 | 41.2 | 80.0 | 25.0 | 82.7 | 97.4 | 56.7 | 78.6 | 92.9 | 73.5 | 75.9 | 81.8 | 81.0 | 70.8 | 83.3 | 64.7 |
| PointCNN [7] | 83.3 | 58.8 | 95.0 | 50.0 | 82.7 | 100.0 | 73.3 | 83.3 | 90.5 | 89.8 | 83.3 | 90.9 | 90.5 | 79.2 | 100.0 | 82.4 |
| DGCNN [14] | 78.8 | 52.9 | 90.0 | 50.0 | 85.3 | 96.2 | 73.3 | 85.7 | 92.9 | 85.7 | 81.5 | 72.7 | 81.0 | 79.2 | 85.7 | 70.6 |
| 3DmFV [25] | 61.6 | 58.8 | 65.0 | 17.9 | 69.3 | 96.2 | 23.3 | 83.3 | 88.1 | 65.3 | 72.2 | 45.5 | 52.4 | 54.2 | 85.7 | 47.1 |
| Proposed | 83.4 | 35.3 | 87.5 | 71.4 | 88.0 | 94.9 | 83.3 | 88.1 | 95.2 | 91.8 | 83.3 | 86.4 | 90.5 | 79.2 | 92.9 | 83.3 |

| Method | mACC | Bag | Bin | Box | Cabinet | Chair | Desk | Display | Door | Shelf | Table | Bed | Pillow | Sink | Sofa | Toilet |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet [4] | 63.4 | 36.1 | 69.8 | 10.5 | 62.6 | 89.0 | 50.0 | 73.0 | 93.8 | 72.6 | 67.8 | 61.8 | 67.6 | 64.2 | 76.7 | 55.3 |
| PointNet++ [5] | 75.4 | 49.4 | 84.4 | 31.6 | 77.4 | 91.3 | 74.0 | 79.4 | 85.2 | 72.6 | 72.6 | 75.5 | 81.0 | 80.8 | 90.5 | 85.9 |
| SpiderCNN [6] | 69.8 | 43.4 | 75.9 | 12.8 | 74.2 | 89.0 | 65.3 | 74.5 | 91.4 | 78.0 | 65.9 | 69.1 | 80.0 | 65.8 | 90.5 | 70.6 |
| PointCNN [7] | 75.1 | 57.8 | 82.9 | 33.1 | 83.6 | 92.6 | 65.3 | 78.4 | 84.8 | 84.2 | 67.4 | 80.0 | 80.0 | 72.5 | 91.9 | 85.9 |
| BAG-PN++ [8] | 77.5 | 54.2 | 85.9 | 39.8 | 81.7 | 90.8 | 76.0 | 84.3 | 87.6 | 78.4 | 74.4 | 73.6 | 80.0 | 77.5 | 91.9 | 85.9 |
| BAG-DGCNN [8] | 75.7 | 48.2 | 81.9 | 30.1 | 84.4 | 92.6 | 77.3 | 80.4 | 92.4 | 80.5 | 74.1 | 72.7 | 78.1 | 79.2 | 91.0 | 72.9 |
| DGCNN [14] | 73.6 | 49.4 | 82.4 | 33.1 | 83.9 | 91.8 | 63.3 | 77.0 | 89.0 | 79.3 | 77.4 | 64.5 | 77.1 | 75.0 | 91.4 | 69.4 |
| 3DmFV [25] | 58.1 | 39.8 | 62.8 | 15.0 | 65.1 | 84.4 | 36.0 | 62.3 | 85.2 | 60.6 | 66.7 | 51.8 | 61.9 | 46.7 | 72.4 | 61.2 |
| Proposed | 78.6 | 25.3 | 69.8 | 63.2 | 79.0 | 95.1 | 84.7 | 87.7 | 95.7 | 85.5 | 77.8 | 91.8 | 86.7 | 77.5 | 93.3 | 66.3 |
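Assuming mACC follows its usual definition, the unweighted mean of the per-class accuracies, each mACC entry in these tables can be checked against its 15 class columns. The minimal sketch below uses a hypothetical helper, `mean_class_accuracy`, and the per-class values of the Proposed row in the first of the three tables.

```python
# mACC under the usual definition: the unweighted mean of per-class
# accuracies (in %), so every class counts equally regardless of its size.
def mean_class_accuracy(per_class):
    return sum(per_class) / len(per_class)


# Per-class accuracies of the "Proposed" row in the first table above,
# in column order Bag, Bin, Box, ..., Sofa, Toilet (15 classes).
proposed = [52.9, 85.0, 75.0, 85.3, 100.0, 80.0, 85.7, 95.2,
            77.6, 88.9, 86.4, 85.7, 79.2, 95.2, 91.7]
print(round(mean_class_accuracy(proposed), 1))  # 84.3
```

Because every class is weighted equally, a weak class such as Bag lowers mACC more than it lowers overall accuracy (OA), which is averaged over samples instead.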

References

1. Varley, J.; Weisz, J.; Weiss, J.; Allen, P. Generating Multi-Fingered Robotic Grasps via Deep Learning. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 4415–4420.

2. Liang, H.; Ma, X.; Li, S.; Gorner, M.; Tang, S.; Fang, B.; Sun, F.; Zhang, J. PointNetGPD: Detecting Grasp Configurations from Point Sets. In Proceedings of the International Conference on Robotics and Automation, Montreal, QC, Canada, 20–24 May 2019; pp. 3629–3635.

3. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection from RGB-D Data. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

4. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

5. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5100–5109.

6. Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep Learning on Point Sets with Parameterized Convolutional Filters. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 90–105.

7. Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution on X-Transformed Points. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 2–8 December 2018; pp. 820–830.

8. Uy, M.A.; Pham, Q.-H.; Hua, B.-S.; Nguyen, T.; Yeung, S.-K. Revisiting Point Cloud Classification: A New Benchmark Dataset and Classification Model on Real-World Data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 1588–1597.

9. Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A Deep Representation for Volumetric Shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: Boston, MA, USA, 2015; pp. 1912–1920.

10. Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for Real-Time Object Recognition. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Hamburg, Germany, 28 September–2 October 2015; pp. 922–928.

11. Su, H.; Maji, S.; Kalogerakis, E.; Learned-Miller, E. Multi-View Convolutional Neural Networks for 3D Shape Recognition. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 945–953.

12. Wei, X.; Yu, R.; Sun, J. View-GCN: View-Based Graph Convolutional Network for 3D Shape Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1847–1856.

13. Liu, Y.; Fan, B.; Xiang, S.; Pan, C. Relation-Shape Convolutional Neural Network for Point Cloud Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8887–8896.

14. Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph. 2019, 38, 1–12.

15. Yang, B.; Luo, W.; Urtasun, R. PIXOR: Real-Time 3D Object Detection from Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660.

16. Su, H.; Jampani, V.; Sun, D.; Maji, S.; Kalogerakis, E.; Yang, M.-H.; Kautz, J. SPLATNet: Sparse Lattice Networks for Point Cloud Processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2530–2539.

17. Tatarchenko, M.; Park, J.; Koltun, V.; Zhou, Q.-Y. Tangent Convolutions for Dense Prediction in 3D. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3887–3896.

18. Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L. KPConv: Flexible and Deformable Convolution for Point Clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 6410–6419.

19. Mao, J.; Wang, X.; Li, H. Interpolated Convolutional Networks for 3D Point Cloud Understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 1578–1587.

20. Zhao, H.; Jiang, L.; Fu, C.-W.; Jia, J. PointWeb: Enhancing Local Neighborhood Features for Point Cloud Processing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5560–5568.

21. Wu, W.; Qi, Z.; Fuxin, L. PointConv: Deep Convolutional Networks on 3D Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9613–9622.

22. Yi, L.; Kim, V.G.; Ceylan, D.; Shen, I.-C.; Yan, M.; Su, H.; Lu, C.; Huang, Q.; Sheffer, A.; Guibas, L. A Scalable Active Framework for Region Annotation in 3D Shape Collections. ACM Trans. Graph. 2016, 35, 1–12.

23. Le, T.; Duan, Y. PointGrid: A Deep Network for 3D Shape Understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9204–9214.

24. Komarichev, A.; Zhong, Z.; Hua, J. A-CNN: Annularly Convolutional Neural Networks on Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7413–7422.

25. Ben-Shabat, Y.; Lindenbaum, M.; Fischer, A. 3DmFV: Three-Dimensional Point Cloud Classification in Real-Time Using Convolutional Neural Networks. IEEE Robot. Autom. Lett. 2018, 3, 3145–3152.

26. Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

27. Howard, A.; Sandler, M.; Chen, B.; Wang, W.; Chen, L.-C.; Tan, M.; Chu, G.; Vasudevan, V.; Zhu, Y.; Pang, R.; et al. Searching for MobileNetV3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–3 November 2019; pp. 1314–1324.

28. Daquan, Z.; Hou, Q.; Chen, Y.; Feng, J.; Yan, S. Rethinking Bottleneck Structure for Efficient Mobile Network Design. arXiv 2020, arXiv:2007.02269.

29. Olshausen, B.A.; Field, D.J. Sparse Coding with an Overcomplete Basis Set: A Strategy Employed by V1? Vis. Res. 1997, 37, 3311–3325.

30. Sifre, L. Rigid-Motion Scattering for Image Classification. Ph.D. Thesis, École Polytechnique, Palaiseau, France, 2014.

31. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

32. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

33. Lei, H.; Akhtar, N.; Mian, A. SegGCN: Efficient 3D Point Cloud Segmentation With Fuzzy Spherical Kernel. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11608–11617.

34. de Branges, L. The Stone-Weierstrass Theorem. Proc. Am. Math. Soc. 1959, 10, 822.

35. Klokov, R.; Lempitsky, V. Escape from Cells: Deep Kd-Networks for the Recognition of 3D Point Cloud Models. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 863–872.

36. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning (Adaptive Computation and Machine Learning); The MIT Press: Cambridge, MA, USA, 2016; ISBN 978-0-262-03561-3.

37. Wu, Z.; Shen, C.; van den Hengel, A. Wider or Deeper: Revisiting the ResNet Model for Visual Recognition. Pattern Recognit. 2019, 90, 119–133.

38. Iandola, F.; Moskewicz, M.; Karayev, S.; Girshick, R.; Darrell, T.; Keutzer, K. DenseNet: Implementing Efficient ConvNet Descriptor Pyramids. arXiv 2014, arXiv:1404.1869.

| Method | # Input Points | OA | mACC |
|---|---|---|---|
| PointNet [4] | 1024 | 89.1 | 86.2 |
| PointNet++ [5] | 1024 | 90.7 | – |
| PointCNN [7] | 1024 | 92.5 | 88.1 |
| RSCNN [13] | 1024 | 91.7 | – |
| DGCNN [14] | 1024 | 92.2 | 90.2 |
| InterpConv [19] | 1024 | 93.0 | – |
| PointWeb [20] | 1024 | 92.3 | 89.4 |
| PointConv [21] | 1024 | 92.5 | – |
| PointGrid [23] | 1024 | 92.0 | 88.9 |
| A-CNN [24] | 1024 | 92.6 | 90.3 |
| 3DmFV [25] | 1024 | 91.4 | 86.3 |
| PointNet++ [5] | 5000 | 91.9 | – |
| SpiderCNN [6] | 5000 | 92.4 | 86.8 |
| KD-Net [35] | 5000 | 91.8 | 88.5 |
| KPConv-rigid [18] | 6800 | 92.7 | – |
| KPConv-deform [18] | 6800 | 92.9 | – |
| Proposed | 1024 | 93.1 | 90.4 |

| Measure | Dataset | PointNet [4] | PointNet++ [5] | SpiderCNN [6] | PointCNN [7] | DGCNN [14] | 3DmFV [25] | Ours |
|---|---|---|---|---|---|---|---|---|
| OA | SV | 79.2 | 84.3 | 79.5 | 85.5 | 86.2 | 73.8 | 86.6 |
| OA | SB | 73.3 | 82.3 | 77.1 | 86.4 | 82.8 | 68.2 | 86.6 |
| OA | SP | 68.2 | 77.9 | 73.7 | 78.5 | 78.1 | 63.0 | 82.2 |
| mACC | SV | 74.4 | 82.1 | 77.4 | 83.3 | 84.0 | 68.9 | 84.3 |
| mACC | SB | 69.4 | 79.9 | 72.4 | 83.3 | 78.8 | 58.8 | 83.4 |
| mACC | SP | 63.4 | 75.4 | 69.8 | 75.1 | 73.6 | 58.1 | 78.6 |

| Method | Mean | Aero | Bag | Cap | Car | Chair | Earph. | Guitar | Knife | Lamp | Laptop | Motor | Mug | Pistol | Rocket | Skate | Table |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet [4] | 83.7 | 83.4 | 78.7 | 82.5 | 74.9 | 89.6 | 73.0 | 91.5 | 85.9 | 80.8 | 95.3 | 65.2 | 93.0 | 81.2 | 57.9 | 72.8 | 80.6 |
| PointNet++ [5] | 85.1 | 82.4 | 79.0 | 87.7 | 77.3 | 90.8 | 71.8 | 91.0 | 85.9 | 83.7 | 95.3 | 71.6 | 94.1 | 81.3 | 58.7 | 76.4 | 82.6 |
| SpiderCNN [6] | 85.3 | 83.5 | 81.0 | 87.2 | 77.5 | 90.7 | 76.8 | 91.1 | 87.3 | 83.3 | 95.8 | 70.2 | 93.5 | 82.7 | 59.7 | 75.8 | 82.8 |
| PointCNN [7] | 86.1 | 84.1 | 86.5 | 86.0 | 80.8 | 90.6 | 79.7 | 92.3 | 88.4 | 85.3 | 96.1 | 77.2 | 95.3 | 84.2 | 64.2 | 80.0 | 83.0 |
| RSCNN [13] | 86.2 | 83.5 | 84.8 | 88.8 | 79.6 | 91.2 | 81.1 | 91.6 | 88.4 | 86.0 | 96.0 | 73.7 | 94.1 | 83.4 | 60.5 | 77.7 | 83.6 |
| DGCNN [14] | 85.1 | 84.2 | 83.7 | 84.4 | 77.1 | 90.9 | 78.5 | 91.5 | 87.3 | 82.9 | 96.0 | 67.8 | 93.3 | 82.6 | 59.7 | 75.5 | 82.0 |
| SPLATNet3D [16] | 84.6 | 81.9 | 83.9 | 88.6 | 79.5 | 90.1 | 73.5 | 91.3 | 84.7 | 84.5 | 96.3 | 69.7 | 95.0 | 81.7 | 59.2 | 70.4 | 81.3 |
| SPLATNet2D-3D [16] | 85.4 | 83.2 | 84.3 | 89.1 | 80.3 | 90.7 | 75.5 | 92.1 | 87.1 | 83.9 | 96.3 | 75.6 | 95.8 | 83.8 | 64.0 | 75.5 | 81.8 |
| KPConv-rigid [18] | 86.2 | 83.8 | 86.1 | 88.2 | 81.6 | 91.0 | 80.1 | 92.1 | 87.8 | 82.2 | 96.2 | 77.9 | 95.7 | 86.8 | 65.3 | 81.7 | 83.6 |
| KPConv-deform [18] | 86.4 | 84.6 | 86.3 | 87.2 | 81.1 | 91.1 | 77.8 | 92.6 | 88.4 | 82.7 | 96.2 | 78.1 | 95.8 | 85.4 | 69.0 | 82.0 | 83.6 |
| PointConv [21] | 85.7 | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – | – |
| KD-Net [35] | 82.3 | 80.1 | 74.6 | 74.3 | 70.3 | 88.6 | 73.5 | 90.2 | 87.2 | 81.0 | 94.9 | 57.4 | 86.7 | 78.1 | 51.8 | 69.9 | 80.3 |
| Proposed | 86.2 | 83.5 | 85.4 | 86.5 | 79.8 | 91.3 | 78.0 | 91.4 | 88.6 | 84.5 | 96.1 | 72.2 | 95.0 | 83.6 | 69.0 | 75.7 | 83.8 |

| Method | L-Trans. | Power | Acc. |
|---|---|---|---|
| PSNet-noL-trans | × | √ | 92.4 |
| PSNet-noPower | √ | × | 92.3 |
| PSNet | √ | √ | 92.8 |

| L-Trans. | ReLU | Sig. | Tanh | L-ReLU | Exp | FC | Ours |
|---|---|---|---|---|---|---|---|
| 92.32 | 92.45 | 92.51 | 92.47 | 92.53 | 92.34 | 92.34 | 92.79 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, R.; Sun, J. Learning Polynomial-Based Separable Convolution for 3D Point Cloud Analysis. Sensors 2021, 21, 4211. https://doi.org/10.3390/s21124211


Chicago/Turabian Style: Yu, Ruixuan, and Jian Sun. 2021. "Learning Polynomial-Based Separable Convolution for 3D Point Cloud Analysis." Sensors 21, no. 12: 4211. https://doi.org/10.3390/s21124211
