HARP: Hierarchical Attention Oriented Region-Based Processing for High-Performance Computation in Vision Sensor

Abstract

1. Introduction

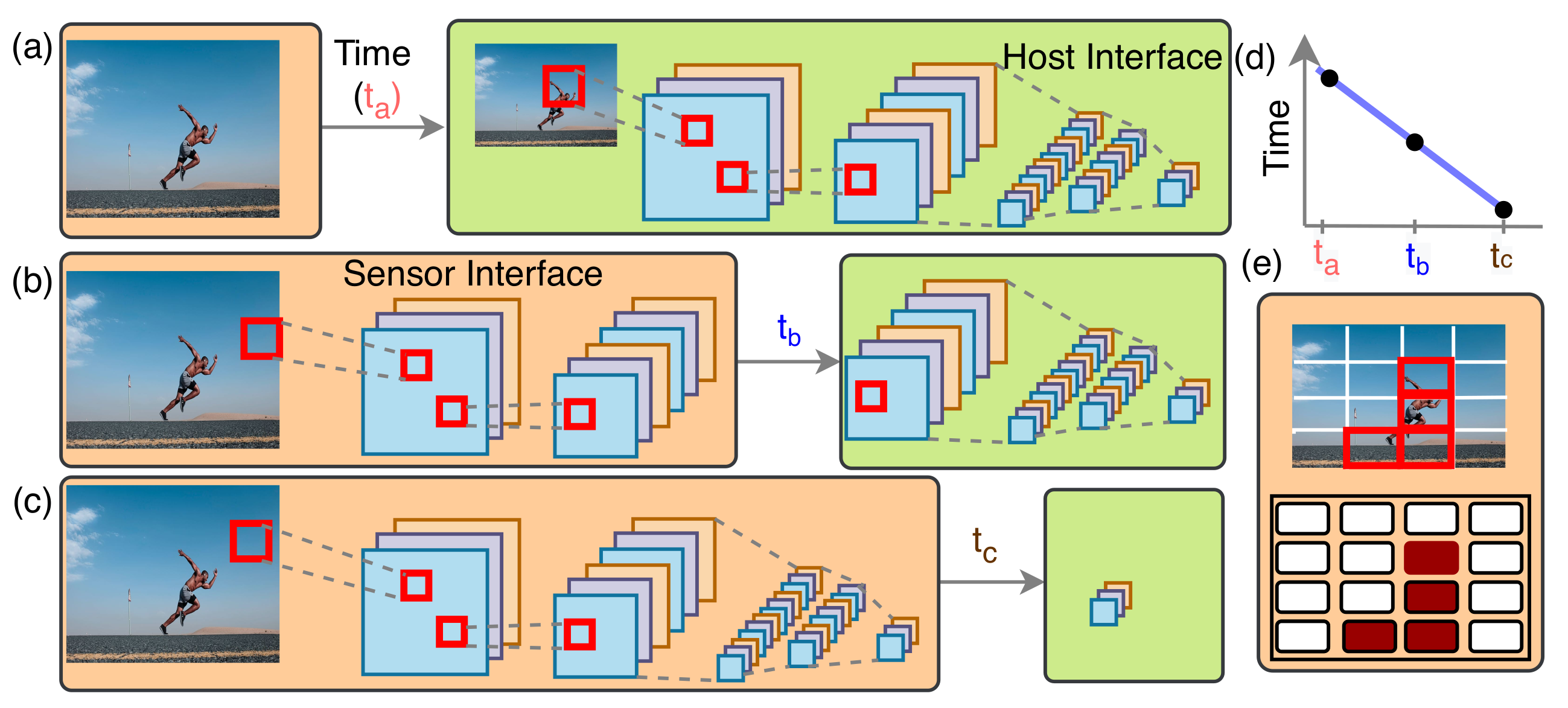

- We propose the HARP architecture, which performs both low-level image processing and high-level inference computation at the image sensor, so that information is created at the sensor level.

- HARP introduces attention-oriented processing in the circuit to prune redundant spatiotemporal information, so that inference is computed on a reduced set of relevant data.

- Our model departs from conventional sequential image processing and applies massive parallelism, exploiting the large bandwidth available at the image source to achieve high throughput.

- The proposed method reduces the on-chip memory requirement and the data movement between layers. Furthermore, attention-oriented processing lowers dynamic power consumption and latency.

- We analyze FPGA and ASIC prototypes of the architecture under different scenarios to evaluate the achievable power savings and speedup.

2. Related Work

3. Proposed Architecture

3.1. Attention-Based Preprocessing Layer (APL)

3.1.1. Image Acquisition

3.1.2. Lightweight Method of Relevant Region Detection

3.2. Inference Computation Layer (ICL)

3.2.1. Region Inference Engine (RIE) in the ICL

- (a) Convolution Computation Process in each RIE

- (b) Architecture of the FCL and SCL

- (c) Configurable Datapath between the FCL and SCL

3.2.2. Fully Connected Neural Network (FcNN)

4. Results

4.1. Evaluation Infrastructure

4.2. Evaluation Metric

4.3. Implementation Details

4.3.1. Silicon Footprint Analysis

4.3.2. Analysis on Energy and Latency

4.3.3. Impact of Irrelevant Regions on Power and Execution Time

4.3.4. Pipeline Processing for Faster Operation

4.3.5. Memory Optimization

4.3.6. Maximum Frequency

4.4. Case Study on Classifier

4.5. Performance Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AM | Attention Module |
| APL | Attention-Based Preprocessing Layer |
| FcNN | Fully Connected Neural Network |
| FCL | First Convolutional Layer |
| HARP | Hierarchical Attention Oriented Region-Based Processing |
| ICL | Inference Computation Layer |
| IOB | Intermediate Output Buffer |
| PPU | Pixel Processing Unit |
| RIE | Region Inference Engine |
| RPU | Region Processing Unit |
| ROB | Region Output Buffer |
| ROIC | Readout Integrated Chip |
| RSS | Regional Spatial Saliency |
| RTS | Regional Temporal Saliency |
| SCL | Second Convolutional Layer |

References

- Kleinfelder, S.; Lim, S.; Liu, X.; El Gamal, A. A 10000 frames/s CMOS digital pixel sensor. IEEE J. Solid-State Circuits 2001, 36, 2049–2059.

- Sakakibara, M.; Ogawa, K.; Sakai, S.; Tochigi, Y.; Honda, K.; Kikuchi, H.; Wada, T.; Kamikubo, Y.; Miura, T.; Nakamizo, M.; et al. A back-illuminated global-shutter CMOS image sensor with pixel-parallel 14b subthreshold ADC. In Proceedings of the 2018 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 11–15 February 2018; IEEE: New York, NY, USA, 2018; pp. 80–82.

- Goto, M.; Hagiwara, K.; Iguchi, Y.; Ohtake, H.; Saraya, T.; Kobayashi, M.; Higurashi, E.; Toshiyoshi, H.; Hiramoto, T. Pixel-parallel 3-D integrated CMOS image sensors with pulse frequency modulation A/D converters developed by direct bonding of SOI layers. IEEE Trans. Electron Devices 2015, 62, 3530–3535.

- Debrunner, T.; Saeedi, S.; Bose, L.; Davison, A.J.; Kelly, P.H. Camera Tracking on Focal-Plane Sensor-Processor Arrays. In Proceedings of the Workshop on Programmability and Architectures for Heterogeneous Multicores (MULTIPROG), Vancouver, BC, Canada, 15 November 2019.

- Bose, L.; Chen, J.; Carey, S.J.; Dudek, P.; Mayol-Cuevas, W. A Camera That CNNs: Towards Embedded Neural Networks on Pixel Processor Arrays. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Korea, 27–28 October 2019; IEEE: New York, NY, USA, 2019; pp. 1335–1344.

- Bobda, C.; Velipasalar, S. Distributed Embedded Smart Cameras: Architectures, Design and Applications, 1st ed.; Springer: New York, NY, USA, 2014.

- Eklund, J.E.; Svensson, C.; Astrom, A. VLSI implementation of a focal plane image processor-a realization of the near-sensor image processing concept. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 1996, 4, 322–335.

- Garcia Lopez, P.; Montresor, A.; Epema, D.; Datta, A.; Higashino, T.; Iamnitchi, A.; Barcellos, M.; Felber, P.; Riviere, E. Edge-centric computing: Vision and challenges. ACM SIGCOMM Comput. Commun. Rev. 2015.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Schwartz, E.L.; Greve, D.N.; Bonmassar, G. Space-variant active vision: Definition, overview and examples. Neural Netw. 1995, 8, 1297–1308.

- Bhowmik, P.; Pantho, M.J.H.; Bobda, C. Bio-inspired smart vision sensor: Toward a reconfigurable hardware modeling of the hierarchical processing in the brain. J. Real Time Image Proc. 2021, 18, 157–174.

- Itti, L.; Koch, C. Feature combination strategies for saliency-based visual attention systems. J. Electron. Imaging 2001, 10, 161–169.

- Lee, M.; Mudassar, B.A.; Na, T.; Mukhopadhyay, S. A Spatiotemporal Pre-processing Network for Activity Recognition under Rain. In Proceedings of the BMVC, Cardiff, UK, 9–12 September 2019; p. 178.

- Bhowmik, P.; Pantho, M.J.H.; Bobda, C. Visual cortex inspired pixel-level re-configurable processors for smart image sensors. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–2.

- Strigl, D.; Kofler, K.; Podlipnig, S. Performance and scalability of GPU-based convolutional neural networks. In Proceedings of the 2010 18th Euromicro Conference on Parallel, Distributed and Network-based Processing, Pisa, Italy, 17–19 February 2010; IEEE: New York, NY, USA, 2010; pp. 317–324.

- Li, Z.; Wang, L.; Guo, S.; Deng, Y.; Dou, Q.; Zhou, H.; Lu, W. Laius: An 8-bit fixed-point CNN hardware inference engine. In Proceedings of the 2017 IEEE International Symposium on Parallel and Distributed Processing with Applications and 2017 IEEE International Conference on Ubiquitous Computing and Communications (ISPA/IUCC), Guangzhou, China, 12–15 December 2017; IEEE: New York, NY, USA, 2017; pp. 143–150.

- Solovyev, R.A.; Kalinin, A.A.; Kustov, A.G.; Telpukhov, D.V.; Ruhlov, V.S. FPGA implementation of convolutional neural networks with fixed-point calculations. arXiv 2018, arXiv:1808.09945.

- Feng, G.; Hu, Z.; Chen, S.; Wu, F. Energy-efficient and high-throughput FPGA-based accelerator for Convolutional Neural Networks. In Proceedings of the 2016 13th IEEE International Conference on Solid-State and Integrated Circuit Technology (ICSICT), Hangzhou, China, 25–28 October 2016; IEEE: New York, NY, USA, 2016; pp. 624–626.

- Spagnolo, F.; Perri, S.; Frustaci, F.; Corsonello, P. Energy-Efficient Architecture for CNNs Inference on Heterogeneous FPGA. J. Low Power Electron. Appl. 2020, 10, 1.

- Zhang, C.; Sun, G.; Fang, Z.; Zhou, P.; Pan, P.; Cong, J. Caffeine: Toward uniformed representation and acceleration for deep convolutional neural networks. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2018, 38, 2072–2085.

- Ma, Y.; Cao, Y.; Vrudhula, S.; Seo, J.S. Optimizing the convolution operation to accelerate deep neural networks on FPGA. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 26, 1354–1367.

- Jouppi, N.P.; Young, C.; Patil, N.; Patterson, D.; Agrawal, G.; Bajwa, R.; Bates, S.; Bhatia, S.; Boden, N.; Borchers, A.; et al. In-Datacenter Performance Analysis of a Tensor Processing Unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture, Toronto, ON, Canada, 24–28 June 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–12.

- Davies, M.; Srinivasa, N.; Lin, T.H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A neuromorphic manycore processor with on-chip learning. IEEE Micro 2018, 38, 82–99.

- Possa, P.D.C.; Harb, N.; Dokládalová, E.; Valderrama, C. P2IP: A novel low-latency Programmable Pipeline Image Processor. Microprocess. Microsyst. 2015, 39, 529–540.

- Chen, J.; Carey, S.J.; Dudek, P. Scamp5d Vision System and Development Framework. In Proceedings of the 12th International Conference on Distributed Smart Cameras, Eindhoven, The Netherlands, 3–4 September 2018; Association for Computing Machinery: New York, NY, USA, 2018.

- Du, Z.; Fasthuber, R.; Chen, T.; Ienne, P.; Li, L.; Luo, T.; Feng, X.; Chen, Y.; Temam, O. ShiDianNao: Shifting Vision Processing Closer to the Sensor. In Proceedings of the 42nd Annual International Symposium on Computer Architecture, Portland, OR, USA, 13–17 June 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 92–104.

- Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. arXiv 2019, arXiv:1904.08405.

- Boluda, J.A.; Zuccarello, P.; Pardo, F.; Vegara, F. Selective change driven imaging: A biomimetic visual sensing strategy. Sensors 2011, 11, 1000.

- Delbruck, T. Frame-free dynamic digital vision. In Proceedings of the International Symposium on Secure-Life Electronics, Advanced Electronics for Quality Life and Society, Tokyo, Japan, 6–7 March 2008; Citeseer: State College, PA, USA, 2008; Volume 1, pp. 21–26.

- Samal, K.; Wolf, M.; Mukhopadhyay, S. Attention-Based Activation Pruning to Reduce Data Movement in Real-Time AI: A Case-Study on Local Motion Planning in Autonomous Vehicles. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 306–319.

- Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. MOTChallenge 2015: Towards a benchmark for multi-target tracking. arXiv 2015, arXiv:1504.01942.

- Ghani, A.; See, C.H.; Sudhakaran, V.; Ahmad, J.; Abd-Alhameed, R. Accelerating retinal fundus image classification using artificial neural networks (ANNs) and reconfigurable hardware (FPGA). Electronics 2019, 8, 1522.

- Murmann, B. ADC Performance Survey 1997–2020. Available online: http://web.stanford.edu/~murmann/adcsurvey.html (accessed on 2 August 2020).

- Karimi, N.; Chakrabarty, K. Detection, diagnosis, and recovery from clock-domain crossing failures in multiclock SoCs. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2013, 32, 1395–1408.

- Xilinx. UltraScale Architecture Configurable Logic Block. Available online: https://www.xilinx.com/support/documentation/user_guides/ug574-ultrascale-clb.pdf (accessed on 20 September 2020).

- Aungmaneeporn, M.; Patil, K.; Chumchu, P. Image Dataset of Aedes and Culex Mosquito Species. IEEE Dataport 2020.

- Williams, S.; Waterman, A.; Patterson, D. Roofline: An insightful visual performance model for multicore architectures. Commun. ACM 2009, 52, 65–76.

- Wang, Y.; Xu, J.; Han, Y.; Li, H.; Li, X. DeepBurning: Automatic generation of FPGA-based learning accelerators for the neural network family. In Proceedings of the 2016 53rd ACM/EDAC/IEEE Design Automation Conference (DAC), Austin, TX, USA, 5–9 June 2016; IEEE: New York, NY, USA, 2016.

- Ko, J.H.; Na, T.; Amir, M.F.; Mukhopadhyay, S. Edge-host partitioning of deep neural networks with feature space encoding for resource-constrained internet-of-things platforms. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; IEEE: New York, NY, USA, 2018; pp. 1–6.

- Bose, L.; Chen, J.; Carey, S.J.; Dudek, P.; Mayol-Cuevas, W. Fully Embedding Fast Convolutional Networks on Pixel Processor Arrays. In Proceedings of the 16th European Conference on Computer Vision, ECCV 2020, Glasgow, UK, 23–28 August 2020.

| RTS | RSS | Region Processor (Next Layer) | Output from the Region (Next Layer) |
|---|---|---|---|
| 1 | 1 | Active | Driven by current state |
| 0 | 1 | Inactive | Driven by previous state |
| 1 | 0 | Inactive | Forced to zero |
| 0 | 0 | Inactive | Forced to zero |
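The gating rules in this table can be written as a small decision function. The sketch below is a minimal Python model, assuming RTS and RSS arrive as per-region boolean flags (1 = salient); the names `RegionState`, `gate_region` and `region_output` are hypothetical and are not taken from the HARP design. The region computation is passed in as a callable so that, as in the table, an inactive region processor does no work.

```python
from enum import Enum
from typing import Callable, List


class RegionState(Enum):
    ACTIVE = "active"  # processor runs; output driven by the current state
    HOLD = "hold"      # processor idle; output driven by the previous state
    ZERO = "zero"      # processor idle; output forced to zero


def gate_region(rts: bool, rss: bool) -> RegionState:
    """Map the (RTS, RSS) flags of one region to its processor state."""
    if not rss:
        # No spatial saliency: the region is dropped regardless of RTS.
        return RegionState.ZERO
    # Spatially salient: recompute only if the region also changed in time.
    return RegionState.ACTIVE if rts else RegionState.HOLD


def region_output(rts: bool, rss: bool,
                  compute: Callable[[], List[int]],
                  previous: List[int]) -> List[int]:
    """Return the region's output, calling `compute` only when active.

    Assumes the output width equals the width of the previous output.
    """
    state = gate_region(rts, rss)
    if state is RegionState.ACTIVE:
        return compute()
    if state is RegionState.HOLD:
        return list(previous)
    return [0] * len(previous)


if __name__ == "__main__":
    prev = [3, 1, 4]
    for rts in (True, False):
        for rss in (True, False):
            out = region_output(rts, rss, lambda: [9, 9, 9], prev)
            print(int(rts), int(rss), gate_region(rts, rss).name, out)
```

Holding the previous output for temporally static but spatially salient regions is what lets downstream layers keep a valid result without recomputing it.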

| Threshold Value | ≤5 | ≤10 | ≤15 |
|---|---|---|---|
| Avg. Irrelevant Regions (MNIST) | 50% | 62.5% | 68.7% |
| Avg. Irrelevant Regions (Fashion MNIST) | 21.8% | 29.3% | 35.6% |
| Accuracy Drop (MNIST) | 0% | 0.9% | 5.8% |
| Accuracy Drop (Fashion MNIST) | 0% | 0% | 1% |
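The table shows a trade-off: a higher threshold marks more regions as irrelevant, at some cost in accuracy. This section does not define the saliency score that is being thresholded, so the Python sketch below uses a placeholder score, the intensity range (max minus min) inside each non-overlapping region, and treats a region as irrelevant when its score is at or below the threshold. The score, the region size and the helper names `region_scores` and `irrelevant_fraction` are assumptions for illustration, not the RSS/RTS computation used in HARP.

```python
from typing import List

Image = List[List[int]]


def region_scores(image: Image, region: int) -> List[int]:
    """Placeholder saliency: intensity range inside each non-overlapping tile.

    Tiles are visited row by row; rows/columns that do not fill a whole
    tile are ignored.
    """
    h, w = len(image), len(image[0])
    scores = []
    for r0 in range(0, h - h % region, region):
        for c0 in range(0, w - w % region, region):
            block = [image[r][c]
                     for r in range(r0, r0 + region)
                     for c in range(c0, c0 + region)]
            scores.append(max(block) - min(block))
    return scores


def irrelevant_fraction(image: Image, region: int, threshold: int) -> float:
    """Fraction of tiles whose placeholder score is <= threshold."""
    scores = region_scores(image, region)
    return sum(s <= threshold for s in scores) / len(scores)


if __name__ == "__main__":
    # Synthetic 8x8 frame: flat background, one strong and one weak feature.
    img = [[0] * 8 for _ in range(8)]
    img[1][1] = 200  # strong edge in the top-left tile
    img[5][6] = 8    # weak texture in the bottom-right tile
    for t in (5, 10, 15):
        print(t, irrelevant_fraction(img, region=4, threshold=t))
```

Sweeping the threshold this way reproduces the shape of the trade-off only; the percentages in the table depend on the datasets and on the actual saliency measure.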

| Parameter/Module | Dimension | Parameter/Module | Dimension |
|---|---|---|---|
| Image Size (M × N) | 224 × 224 | No. of Regions (M) | 196 |
| Region Size with neighboring pixels | 18 × 18 | Pixels in a region (N) | 256 |
| RPU Size | 16 × 16 | No. of RIE | 4 |
| FCL Size | 16 × 16 | Kernel size (p × q) | 3 × 3 |
| SCL Size | 14 × 14 | PEs in FcNN (P) | 100 |
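The sizes in this table fit together if each region is processed by stride-1, unpadded ("valid") 3 × 3 convolutions and carries a one-pixel halo of neighboring pixels. That reading is our assumption rather than something stated in this section; the short Python check below derives the region count, the 18 × 18 haloed region, and the FCL and SCL sizes from it.

```python
IMAGE_SIDE = 224  # image is 224 x 224 pixels
RPU_SIDE = 16     # each region processing unit covers 16 x 16 pixels
KERNEL = 3        # 3 x 3 convolution kernel (p x q)


def valid_conv_side(side: int, kernel: int) -> int:
    """Output side length of a stride-1 convolution without padding."""
    return side - kernel + 1


regions_per_side = IMAGE_SIDE // RPU_SIDE  # regions along one axis
num_regions = regions_per_side ** 2        # regions per frame
pixels_per_region = RPU_SIDE * RPU_SIDE
halo = KERNEL // 2                         # neighboring pixels borrowed per side
region_with_halo = RPU_SIDE + 2 * halo
fcl_side = valid_conv_side(region_with_halo, KERNEL)  # first conv layer
scl_side = valid_conv_side(fcl_side, KERNEL)          # second conv layer

if __name__ == "__main__":
    print(num_regions, pixels_per_region, region_with_halo, fcl_side, scl_side)
```

Under this reading, the halo lets the FCL produce a full 16 × 16 output per region, while the SCL output shrinks to 14 × 14 because the second convolution receives no additional border.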

| Module | LUT | FF | DSP | BRAM | Power (W) | Fmax (MHz) |
|---|---|---|---|---|---|---|
| APL | 154,404 | 210,744 | 0 | - | 2.044 | 320 |
| RIE | 318,016 | 450,896 | 2,048 | - | 7.876 | 320 |
| FcNN | 3,982 | 3,632 | 104 | - | 0.47 | 320 |
| Overall | 469,288 | 663,488 | 2,100 | 27 | 10.68 | 320 |
| Utilization (%) | 39.68 | 28.06 | 30.70 | 1.25 | - | - |
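The utilization row can be reproduced by dividing the "Overall" counts by the capacity of the target device. The comparison table names the Virtex XCVU9P; the capacities used below (1,182,240 LUTs, 2,364,480 flip-flops, 6,840 DSP slices, 2,160 block RAMs of 36 Kb) come from Xilinx's published UltraScale+ figures and are an assumption to double-check against the exact part used.

```python
# Capacities of the Virtex UltraScale+ XCVU9P (assumed; verify against the
# Xilinx UltraScale+ product tables for the exact part).
XCVU9P = {"LUT": 1_182_240, "FF": 2_364_480, "DSP": 6_840, "BRAM": 2_160}

# "Overall" row of the FPGA resource table.
HARP_OVERALL = {"LUT": 469_288, "FF": 663_488, "DSP": 2_100, "BRAM": 27}


def utilization_pct(used: dict, capacity: dict) -> dict:
    """Percentage of each resource type consumed by the design."""
    return {k: 100.0 * used[k] / capacity[k] for k in used}


if __name__ == "__main__":
    for name, pct in utilization_pct(HARP_OVERALL, XCVU9P).items():
        print(f"{name}: {pct:.2f} %")
```

This yields 39.69 %, 28.06 %, 30.70 % and 1.25 %, matching the utilization row to within 0.01 percentage point.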

| Module | Area (µm²) | Internal Power (mW) | Switching Power (mW) | Leakage Power (mW) | Total Power (mW) | Delay (µs) | Wire Length (µm) | Density (%) | Fmax (MHz) |
|---|---|---|---|---|---|---|---|---|---|
| APL | 950,989 | 314.6 | 51.56 | 6.48 | 372.68 | 0.71 | 3,125,754 | 71.2 | 430 |
| RIE | 1,830,866 | 408.1 | 142.14 | 10.33 | 581.4 | 2.47 | 6,773,609 | 68.05 | 430 |
| FCL | 996,853 | 214.5 | 81.07 | 7.14 | 302.7 | 1.53 | 3,466,860 | 69.11 | 430 |
| SCL | 697,797 | 160.5 | 63.19 | 5.31 | 229.05 | 1.12 | 2,600,145 | 68.8 | 430 |
| FcNN | 70,885.6 | 20.84 | 3.70 | 0.52 | 25.06 | 0.01 | 149,958 | 69.43 | 430 |
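A rough die-area roll-up can be computed from this table. The sketch assumes one APL, the four RIEs listed in the dimension table, and one FcNN, and treats the FCL and SCL rows as sub-blocks of each RIE (they sit under the RIE in Section 3.2.1, and their combined area is smaller than the RIE's), so they are not counted again. These instance counts are our assumption, not a floorplan from the paper.

```python
# Module areas in µm² from the ASIC implementation table.
AREA_UM2 = {"APL": 950_989, "RIE": 1_830_866, "FcNN": 70_885.6}

# Assumed number of instances of each module on the chip.
INSTANCES = {"APL": 1, "RIE": 4, "FcNN": 1}

UM2_PER_MM2 = 1_000_000  # 1 mm² = 10^6 µm²


def total_area_mm2(areas: dict, counts: dict) -> float:
    """Sum of module areas weighted by instance count, in mm²."""
    return sum(areas[m] * n for m, n in counts.items()) / UM2_PER_MM2


if __name__ == "__main__":
    print(f"{total_area_mm2(AREA_UM2, INSTANCES):.2f} mm²")
```

This gives about 8.35 mm², below the 9.2 mm² listed for the 90 nm ASIC in the comparison table; this section does not break down the difference.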

| | [9] | [19] | [21] | [38] | [20] | [40] | [39] | HARP (FPGA) | HARP (ASIC) |
|---|---|---|---|---|---|---|---|---|---|
| Medium | GPU | Xilinx FPGA | Intel FPGA | Xilinx FPGA | Xilinx FPGA | Custom Hardware | ASIC | Xilinx FPGA | ASIC |
| Device | GTX Titan Black | Zynq XC7Z045 | Arria 10 GX1150 | Zynq XC7Z045 | Kintex KU060 | Image Sensor | 28 nm Tech. | Virtex XCVU9P | 90 nm Tech. |
| Model | VGG-16 | VGG-16 | VGG-16 | VGG-16 | VGG-16 | LeNet5 | VGG-16 | VGG-16 | VGG-16 |
| Precision | 32-bit | 8-bit | 16-bit | 16-bit | 16-bit | 13-bit | 16-bit | 14-bit | 14-bit |
| Logic/Area | ∼ | 30 K | 138 K | 218 K | 433 K | ∼ | 1.079 mm² | 469 K | 9.2 mm² |
| Fmax | ∼ | 167 MHz | 200 MHz | 100 MHz | 200 MHz | ∼ | ∼ | 320 MHz | 430 MHz |
| Latency | 128.62 ms | 84.75 ms | 43.2 ms | 95.48 ms | ∼ | ∼ | ∼ | 47.19 ms | 34.78 ms |
| Throughput (GOP/s) | ∼ | 135 | 30.95 | 57.31 | 45.07 | ∼ | ∼ | 49.92 | 58.28 |
| FPS | 7.8 | 11.8 | 23.14 | 10.47 | ∼ | 3000 | ∼ | 21.18 | 28.75 |
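For every design that reports both a latency and a frame rate, the FPS row agrees with 1000 divided by the latency in milliseconds to within about 0.03 frames/s, i.e., with one frame completing per latency period. The Python check below makes that relation explicit; the dictionary only copies values from the table, and `fps_from_latency` is a hypothetical helper name.

```python
# (latency in ms, reported FPS) for the columns that list both values.
REPORTED = {
    "[9]": (128.62, 7.8),
    "[19]": (84.75, 11.8),
    "[21]": (43.2, 23.14),
    "[38]": (95.48, 10.47),
    "HARP (FPGA)": (47.19, 21.18),
    "HARP (ASIC)": (34.78, 28.75),
}


def fps_from_latency(latency_ms: float) -> float:
    """Frame rate when exactly one frame is processed per latency period."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for design, (lat, fps) in REPORTED.items():
        print(f"{design:12s} computed {fps_from_latency(lat):6.2f}  reported {fps}")
```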

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Bhowmik, P.; Pantho, M.J.H.; Bobda, C. HARP: Hierarchical Attention Oriented Region-Based Processing for High-Performance Computation in Vision Sensor. Sensors 2021, 21, 1757. https://doi.org/10.3390/s21051757
