Relation3DMOT: Exploiting Deep Affinity for 3D Multi-Object Tracking from View Aggregation

Abstract

1. Introduction

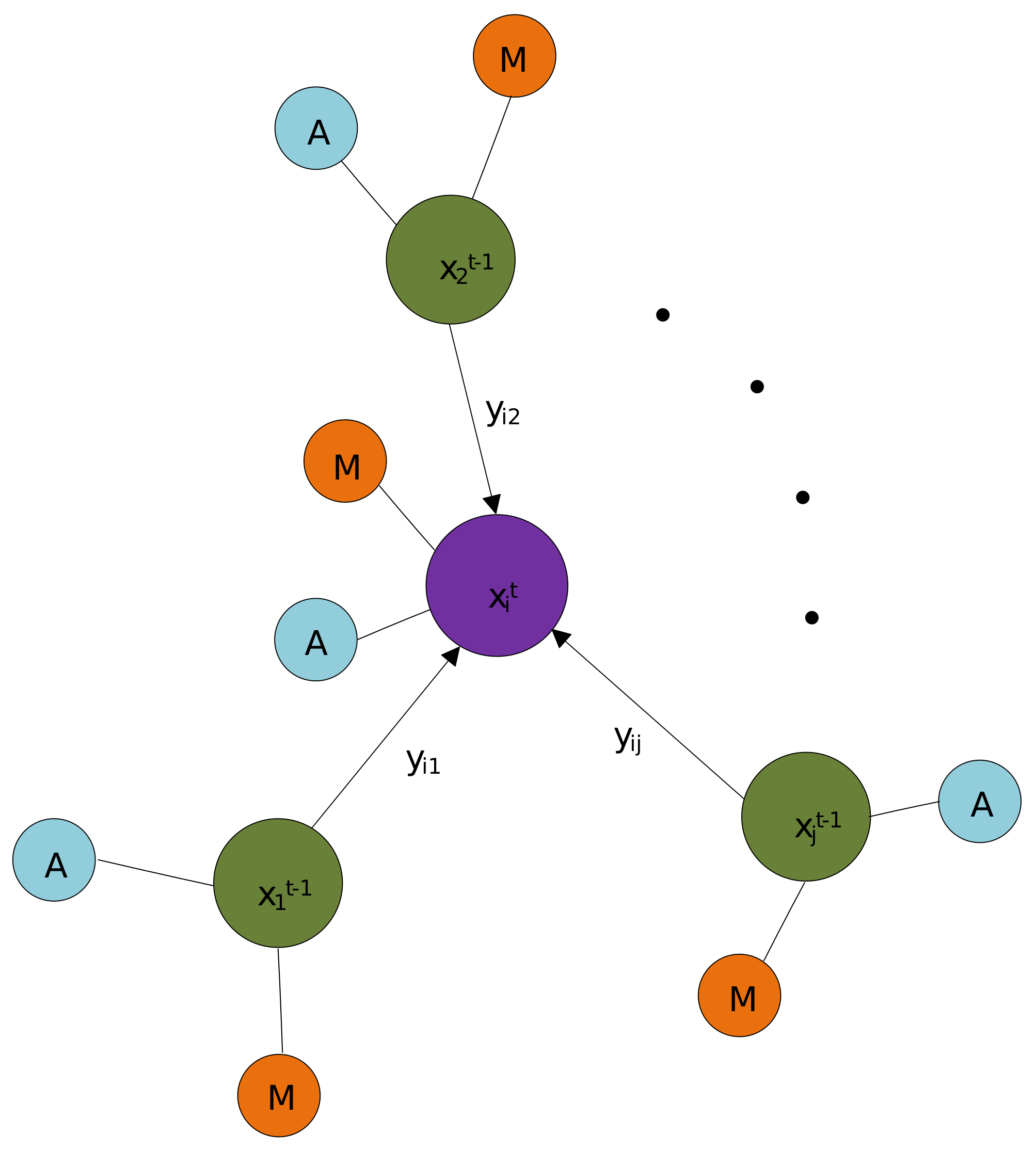

- We represent the detected objects as nodes in a directed acyclic graph and propose a graph neural network (GNN) that exploits the discriminative features of objects in adjacent frames for 3D multi-object tracking (MOT). Specifically, the graph is constructed by treating each object feature as a node, so that the GNN can update each node feature by combining it with the features of other nodes.

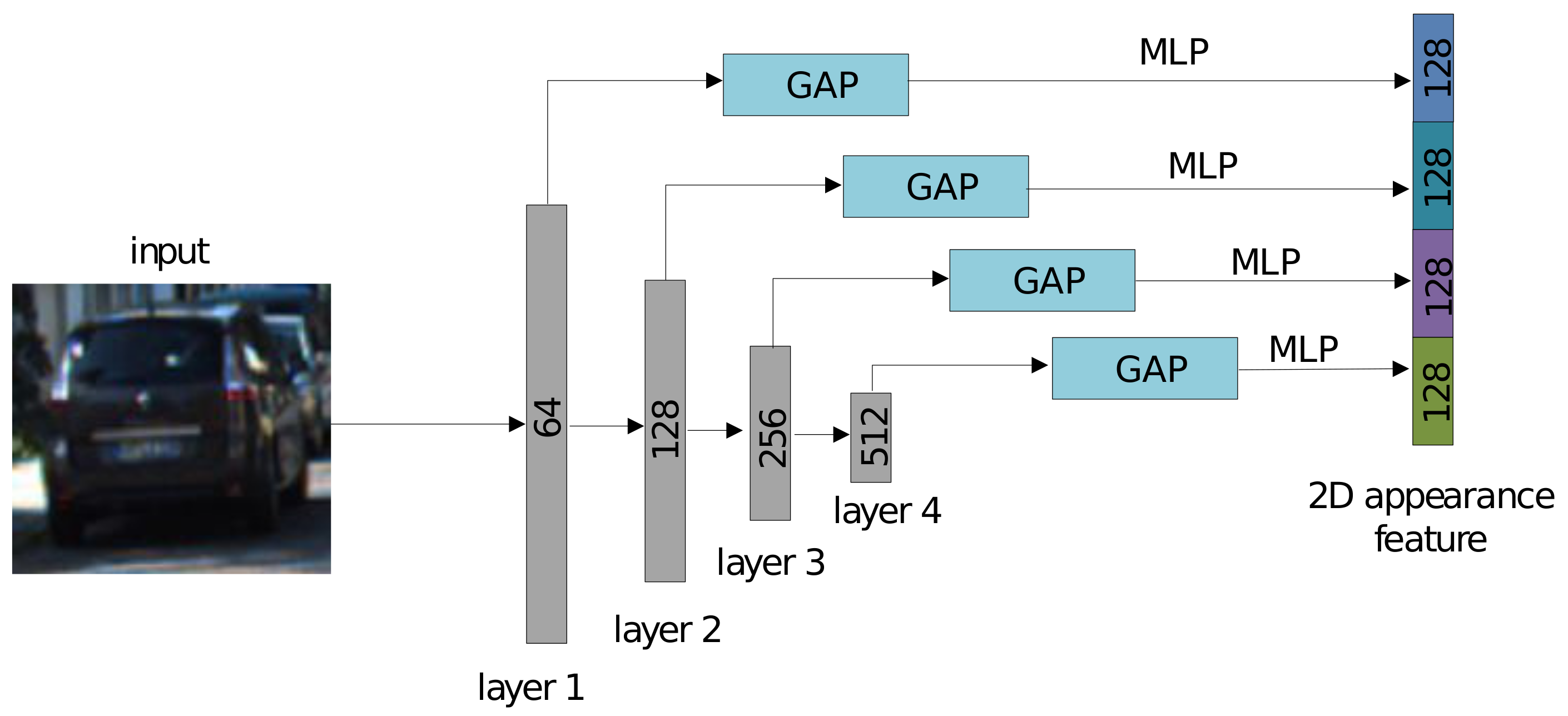

- We propose a novel joint feature extractor that learns both 2D/3D appearance features and 2D motion features from the images and point clouds in the sequence. In particular, the 2D and 3D appearance features are learned from the image and the point cloud, respectively, by dedicated feature extractors. The motion model uses the parameters of the 2D bounding box as motion cues to capture the motion features, which are finally aggregated with the 2D/3D appearance features to obtain the joint feature.

- We propose the RelationConv operation to efficiently learn the correlation between each pair of objects for the affinity matrix. The operation is similar to a Convolutional Neural Network (CNN) kernel applied to standard grid data, but it is more flexible and can also be applied to irregular data (e.g., graphs).
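The idea of scoring every pair of objects with one shared, learnable operation can be illustrated with a minimal sketch. This is not the authors' RelationConv: the edge feature (a node feature concatenated with its difference to the other node), the two-layer shared MLP, and all names such as `relation_affinity`, `w_hidden`, and `w_out` are assumptions made purely for illustration, and the weights are random rather than trained.

```python
import math
import random


def relation_affinity(prev_feats, curr_feats, w_hidden, w_out):
    """Score every (previous-frame, current-frame) object pair with one shared MLP.

    Returns a len(prev_feats) x len(curr_feats) matrix of scores in (0, 1).
    """
    affinity = []
    for x_i in prev_feats:
        row = []
        for x_j in curr_feats:
            # Assumed edge feature: the node itself plus its offset to the other node.
            edge = list(x_i) + [b - a for a, b in zip(x_i, x_j)]
            # The same weights are reused for every pair, like a kernel sliding over a grid.
            hidden = [max(0.0, sum(w * e for w, e in zip(w_row, edge)))  # ReLU
                      for w_row in w_hidden]
            logit = sum(w * h for w, h in zip(w_out, hidden))
            row.append(1.0 / (1.0 + math.exp(-logit)))  # squash to (0, 1)
        affinity.append(row)
    return affinity


# Toy usage: 3 objects in the previous frame, 2 in the current frame.
rng = random.Random(0)
dim, hidden_dim = 4, 8
prev_feats = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(3)]
curr_feats = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(2)]
w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(2 * dim)] for _ in range(hidden_dim)]
w_out = [rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)]
affinity = relation_affinity(prev_feats, curr_feats, w_hidden, w_out)
```

Because the weights are shared across pairs, the score of a pair depends only on the two node features involved, which is what lets such an operation run over an irregular set of detections whose size changes from frame to frame.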

2. Related Work

2.1. 2D Multi-Object Tracking

2.2. 3D Multi-Object Tracking

2.3. Joint Multi-Object Detection and Tracking

2.4. Data Association in MOT

3. Model Structure

3.1. Problem Statement

3.2. 2D Detector

3.3. Joint Feature Extractor

3.3.1. 2D Appearance Feature Extraction

3.3.2. 3D Appearance Feature Extraction

3.3.3. Motion Feature

3.3.4. Features Aggregation and Fusion

3.4. Feature Interaction Module

3.4.1. Graph Construction

3.4.2. Relation Convolution Operator

3.4.3. Feature Interaction

3.4.4. Confidence Estimator

3.5. Data Association

3.5.1. Affinity Matrix Learning

3.5.2. Linear Programming

4. Experiments

4.1. Dataset

4.2. Evaluation Metrics

4.3. Training Settings

4.4. Results

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Luiten, J.; Fischer, T.; Leibe, B. Track to reconstruct and reconstruct to track. IEEE Robot. Autom. Lett. 2020, 5, 1803–1810.

2. Zhang, W.; Zhou, H.; Sun, S.; Wang, Z.; Shi, J.; Loy, C.C. Robust multi-modality multi-object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 2365–2374.

3. Hu, H.N.; Cai, Q.Z.; Wang, D.; Lin, J.; Sun, M.; Krahenbuhl, P.; Darrell, T.; Yu, F. Joint monocular 3D vehicle detection and tracking. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5390–5399.

4. Weng, X.; Wang, J.; Held, D.; Kitani, K. 3D Multi-Object Tracking: A Baseline and New Evaluation Metrics. arXiv 2020, arXiv:1907.03961.

5. Bergmann, P.; Meinhardt, T.; Leal-Taixe, L. Tracking without bells and whistles. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 941–951.

6. Zhou, X.; Koltun, V.; Krähenbühl, P. Tracking Objects as Points. arXiv 2020, arXiv:2004.01177.

7. Sun, S.; Akhtar, N.; Song, H.; Mian, A.S.; Shah, M. Deep affinity network for multiple object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 104–119.

8. Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards real-time multi-object tracking. arXiv 2019, arXiv:1909.12605.

9. Milan, A.; Rezatofighi, S.H.; Dick, A.; Reid, I.; Schindler, K. Online multi-target tracking using recurrent neural networks. arXiv 2016, arXiv:1604.03635.

10. Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

11. Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in neural information processing systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108.

12. Kalman, R.E. A new approach to linear filtering and prediction problems. J. Basic Eng. 1960, 82, 35–45.

13. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

14. Weng, X.; Wang, Y.; Man, Y.; Kitani, K. GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking with Multi-Feature Learning. arXiv 2020, arXiv:2006.07327.

15. Shenoi, A.; Patel, M.; Gwak, J.; Goebel, P.; Sadeghian, A.; Rezatofighi, H.; Martin-Martin, R.; Savarese, S. JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset. arXiv 2020, arXiv:2002.08397.

16. Fortmann, T.; Bar-Shalom, Y.; Scheffe, M. Sonar tracking of multiple targets using joint probabilistic data association. IEEE J. Ocean. Eng. 1983, 8, 173–184.

17. Ma, C.; Huang, J.B.; Yang, X.; Yang, M.H. Hierarchical convolutional features for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3074–3082.

18. Wang, L.; Ouyang, W.; Wang, X.; Lu, H. Visual tracking with fully convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3119–3127.

19. Bhat, G.; Johnander, J.; Danelljan, M.; Shahbaz Khan, F.; Felsberg, M. Unveiling the power of deep tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 483–498.

20. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. Eco: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

21. Danelljan, M.; Robinson, A.; Khan, F.S.; Felsberg, M. Beyond correlation filters: Learning continuous convolution operators for visual tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 472–488.

22. Choi, J.; Jin Chang, H.; Yun, S.; Fischer, T.; Demiris, Y.; Young Choi, J. Attentional correlation filter network for adaptive visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4807–4816.

23. Kiani Galoogahi, H.; Fagg, A.; Lucey, S. Learning background-aware correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1135–1143.

24. Mueller, M.; Smith, N.; Ghanem, B. Context-aware correlation filter tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1396–1404.

25. Yun, S.; Choi, J.; Yoo, Y.; Yun, K.; Young Choi, J. Action-decision networks for visual tracking with deep reinforcement learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2711–2720.

26. Zhang, L.; Li, Y.; Nevatia, R. Global data association for multi-object tracking using network flows. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

27. Schulter, S.; Vernaza, P.; Choi, W.; Chandraker, M. Deep network flow for multi-object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6951–6960.

28. Weng, X.; Kitani, K. A baseline for 3d multi-object tracking. arXiv 2019, arXiv:1907.03961.

29. Osep, A.; Mehner, W.; Mathias, M.; Leibe, B. Combined image-and world-space tracking in traffic scenes. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1988–1995.

30. Scheidegger, S.; Benjaminsson, J.; Rosenberg, E.; Krishnan, A.; Granström, K. Mono-camera 3d multi-object tracking using deep learning detections and pmbm filtering. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 433–440.

31. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

32. Yin, T.; Zhou, X.; Krähenbühl, P. Center-based 3d object detection and tracking. arXiv 2020, arXiv:2006.11275.

33. Luo, W.; Yang, B.; Urtasun, R. Fast and furious: Real time end-to-end 3d detection, tracking and motion forecasting with a single convolutional net. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3569–3577.

34. Butt, A.A.; Collins, R.T. Multi-target tracking by lagrangian relaxation to min-cost network flow. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 1846–1853.

35. Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

36. Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

37. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927.

38. Ren, J.; Chen, X.; Liu, J.; Sun, W.; Pang, J.; Yan, Q.; Tai, Y.W.; Xu, L. Accurate single stage detector using recurrent rolling convolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5420–5428.

39. Bell, S.; Lawrence Zitnick, C.; Bala, K.; Girshick, R. Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2874–2883.

40. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

41. Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. Pointcnn: Convolution on x-transformed points. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 820–830.

42. Xu, B.; Wang, N.; Chen, T.; Li, M. Empirical evaluation of rectified activations in convolutional network. arXiv 2015, arXiv:1505.00853.

43. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

44. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving. In Proceedings of the CVPR, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

45. Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 2008, 1–10.

46. Li, Y.; Huang, C.; Nevatia, R. Learning to associate: Hybridboosted multi-target tracker for crowded scene. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2953–2960.

47. Karunasekera, H.; Wang, H.; Zhang, H. Multiple object tracking with attention to appearance, structure, motion and size. IEEE Access 2019, 7, 104423–104434.

48. Sharma, S.; Ansari, J.A.; Murthy, J.K.; Krishna, K.M. Beyond pixels: Leveraging geometry and shape cues for online multi-object tracking. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 3508–3515.

49. Gündüz, G.; Acarman, T. A lightweight online multiple object vehicle tracking method. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 427–432.

50. Tian, W.; Lauer, M.; Chen, L. Online multi-object tracking using joint domain information in traffic scenarios. IEEE Trans. Intell. Transp. Syst. 2019, 21, 374–384.

51. Xiang, Y.; Alahi, A.; Savarese, S. Learning to track: Online multi-object tracking by decision making. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4705–4713.

52. Frossard, D.; Urtasun, R. End-to-end learning of multi-sensor 3d tracking by detection. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 635–642.

53. Baser, E.; Balasubramanian, V.; Bhattacharyya, P.; Czarnecki, K. Fantrack: 3d multi-object tracking with feature association network. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 1426–1433.

| Methods | Input | MOTA (%) | MOTP (%) | ID-SW | Frag | MT (%) | ML (%) |
|---|---|---|---|---|---|---|---|
| BeyondPixels [48] | 2D | 84.24 | 85.73 | 468 | 944 | 73.23 | 2.77 |
| MASS [47] | 2D | 85.04 | 85.53 | 301 | 744 | 74.31 | 2.77 |
| extraCK [49] | 2D | 79.99 | 82.46 | 343 | 938 | 62.15 | 5.54 |
| JCSTD [50] | 2D | 80.57 | 81.81 | 61 | 643 | 56.77 | 7.38 |
| IMMDP [51] | 2D | 83.04 | 82.74 | 172 | 365 | 60.62 | 11.38 |
| AB3DMOT [4] | 3D | 83.84 | 85.24 | 9 | 224 | 66.92 | 11.38 |
| PMBM [30] | 3D | 80.39 | 81.26 | 121 | 613 | 62.77 | 6.15 |
| mono3DT [3] | 3D | 84.52 | 85.64 | 377 | 847 | 73.38 | 2.77 |
| DSM [52] | 2D + 3D | 76.15 | 83.42 | 296 | 868 | 60.00 | 8.31 |
| FANTrack [53] | 2D + 3D | 77.72 | 82.32 | 150 | 812 | 62.61 | 8.76 |
| mmMOT [2] | 2D + 3D | 84.43 | 85.21 | 400 | 859 | 73.23 | 2.77 |
| GNN3DMOT [14] | 2D + 3D | 80.40 | 85.05 | 113 | 265 | 70.77 | 11.08 |
| OURS | 2D + 3D | 84.78 | 85.21 | 281 | 757 | 73.23 | 2.77 |

| Fusion Method | MOTA (%) | MOTP (%) | ID-SW | Frag | MT (%) | ML (%) |
|---|---|---|---|---|---|---|
| add | 91.72 | 90.35 | 106 | 210 | 90.28 | 0.9 |
| concatenate | 92.33 | 90.35 | 38 | 143 | 90.28 | 0.9 |
| weighted sum | 91.94 | 90.35 | 82 | 187 | 90.28 | 0.9 |

| Edge Feature | MOTA (%) | MOTP (%) | ID-SW | Frag | MT (%) | ML (%) |
|---|---|---|---|---|---|---|
|  | 90.94 | 90.35 | 193 | 290 | 90.28 | 0.9 |
|  | 92.33 | 90.35 | 38 | 143 | 90.28 | 0.9 |
|  | 91.85 | 90.35 | 92 | 194 | 90.28 | 0.9 |
|  | 91.96 | 90.35 | 80 | 181 | 90.28 | 0.9 |

| Feature | MOTA (%) | MOTP (%) | ID-SW | Frag | MT (%) | ML (%) |
|---|---|---|---|---|---|---|
| A + MLP | 91.74 | 90.35 | 104 | 202 | 90.28 | 0.9 |
| A + M + MLP | 92.09 | 90.35 | 65 | 172 | 90.28 | 0.9 |
| A + RelationConv | 91.99 | 90.35 | 76 | 178 | 90.28 | 0.9 |
| A + M + RelationConv | 92.33 | 90.35 | 38 | 143 | 90.28 | 0.9 |

| Activation Function | MOTA (%) | MOTP (%) | ID-SW | Frag | MT (%) | ML (%) |
|---|---|---|---|---|---|---|
| ReLU | 92.33 | 90.35 | 38 | 143 | 90.28 | 0.9 |
| Leaky ReLU | 91.92 | 90.35 | 84 | 190 | 90.28 | 0.9 |
| Sigmoid | 91.33 | 90.35 | 110 | 197 | 90.28 | 0.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, C.; Zanotti Fragonara, L.; Tsourdos, A. Relation3DMOT: Exploiting Deep Affinity for 3D Multi-Object Tracking from View Aggregation. Sensors 2021, 21, 2113. https://doi.org/10.3390/s21062113