Behaviour Classification on Giraffes (Giraffa camelopardalis) Using Machine Learning Algorithms on Triaxial Acceleration Data of Two Commonly Used GPS Devices and Its Possible Application for Their Management and Conservation

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area and Animals

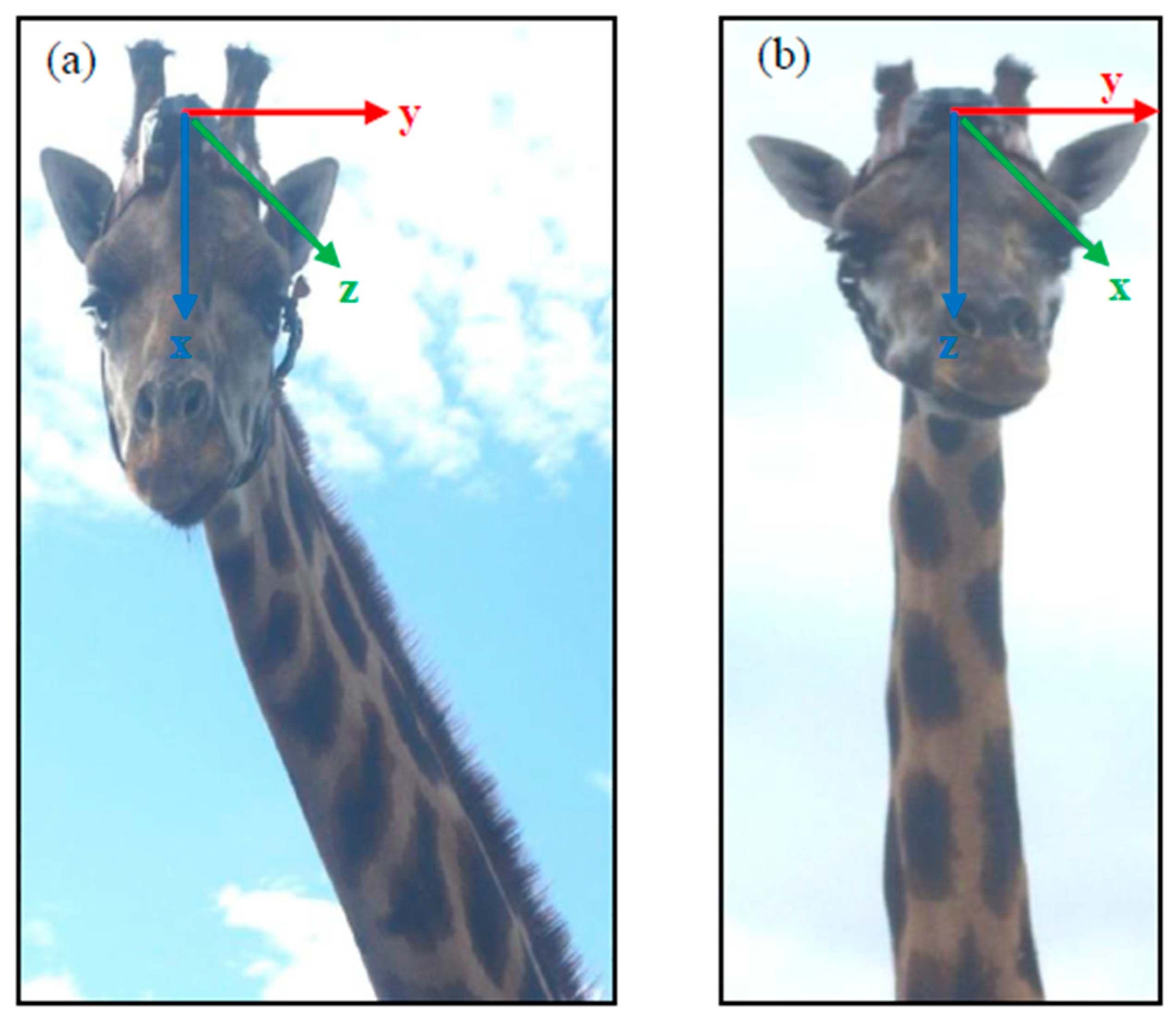

2.2. Accelerometers (e-obs and Africa Wildlife Tracking) and Collaring

2.3. Behavioral Observations

2.4. Data Processing

2.5. Data Analysis

3. Results

3.1. Application of the e-obs and AWT Accelerometer

3.1.1. Battery and Storage Capacity

3.1.2. Data Transmission

3.2. Prediction Accuracy of Behavior Categories with Individual Analyses

3.3. Prediction Accuracy of Behavior Categories with Cross-Validations

3.4. Comparison of Prediction Accuracies of Behavior Categories with the e-obs and AWT Accelerometer

4. Discussion

4.1. Random Forests Machine Learning Algorithm for Automatic Behavior Classification

4.2. Performance Depending on Input Data (Individual vs. Cross-Validation Analyses)

4.3. Prediction Accuracy of and Confusions between Behavior Categories

4.4. Comparison of e-obs and AWT Analyses and Handling

4.5. Future Accelerometers for Studies on Giraffes

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Behavior Category (Code) | Description | Farrah, e-obs (n bursts) | Farrah, AWT (n bursts) | Jaffa, e-obs (n bursts) | Jaffa, AWT (n bursts) | Max, e-obs (n bursts) |
|---|---|---|---|---|---|---|
| standing (STA) | standing without any further movement or with movement of head and/or neck on different levels | 288 | 268 | 165 | 138 | 386 |
| lying (LIE) | lying down without further movement or with movement of head and/or neck on different levels, without further activity or while ruminating | 36 | 30 | 0 | 0 | 57 |
| feeding above eye level (FEA) | feeding on leaves, chewing, or transition from feeding to chewing or vice versa, all with head stretched upwards | 73 | 140 | 9 | 16 | 142 |
| feeding at eye to middle level (FTM) | feeding on leaves/hay or nibbling (e.g., on wood), chewing, or transition from feeding to chewing or vice versa, all with head and neck at eye or middle level | 1515 | 1113 | 808 | 659 | 1733 |
| feeding at deep level (FED) | feeding on leaves or nibbling (e.g., on wood), chewing, or transition from feeding to chewing or vice versa, all with head and neck at deep level | 46 | 49 | 46 | 41 | 220 |
| feeding at ground level (FEG) | feeding at ground level, front legs splayed out laterally | 169 | 427 | 60 | 112 | 39 |
| rumination (RUM) | ruminating while standing without any further movement or with movement of head and/or neck on different levels | 478 | 137 | 1001 | 322 | 1617 |
| drinking (DRI) | drinking from water source on/below ground level, front legs splayed out laterally | 31 | 30 | 7 | 7 | 16 |
| walking (WAL) | slow- to medium-speed locomotion, head and neck on different levels | 1076 | 666 | 600 | 350 | 731 |
| running (RUN) | high-speed locomotion, neck strongly swinging back and forth | 3 | 3 | 8 | 8 | 0 |
| grooming (GRO) | neck bent towards hind legs, nibbling/licking on own body, or standing above a pole, rubbing belly sideways | 7 | 15 | 3 | 6 | 27 |
| socio-positive behavior (SOC) | rubbing neck up and down on other individual, neck starting on different levels | 43 | 49 | 23 | 22 | 17 |
| socio-negative behavior/fight (FIG) | necking (swinging head and neck towards other individual), pushing other individual with head/neck at deep level or with body while head slightly to strongly stretched upwards | 0 | 0 | 0 | 0 | 309 |
| sleep (SLE) | lying with head and neck at eye to middle level and eyes closed, or with neck bent towards hind legs, head put down on hip and eyes closed (REM/paradoxical sleep) | 0 | 0 | 0 | 0 | 208 |
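
The behavior sets that appear in the confusion matrices that follow match the rows of this table reaching at least 30 sample bursts for the individual and device concerned. For example, Max's grooming (27 bursts) is absent, while Farrah's drinking with AWT (30 bursts) is present. This cut-off is an inference from the counts, not a rule stated in this text. The Python sketch below applies it to the counts above; the threshold `MIN_BURSTS = 30` and the function name `usable_behaviors` are hypothetical, chosen only for illustration.

```python
"""Which behaviors reach a minimum number of sample bursts (minimal sketch).

MIN_BURSTS = 30 is an assumption inferred from the sample sizes and the
behavior sets in the confusion matrices; it is not stated in the text.
"""

# (individual, device) columns of the table above, in order.
COLUMNS = [("Farrah", "e-obs"), ("Farrah", "AWT"), ("Jaffa", "e-obs"),
           ("Jaffa", "AWT"), ("Max", "e-obs")]

# Number of sample bursts per behavior, one value per column above.
COUNTS = {
    "STA": (288, 268, 165, 138, 386),
    "LIE": (36, 30, 0, 0, 57),
    "FEA": (73, 140, 9, 16, 142),
    "FTM": (1515, 1113, 808, 659, 1733),
    "FED": (46, 49, 46, 41, 220),
    "FEG": (169, 427, 60, 112, 39),
    "RUM": (478, 137, 1001, 322, 1617),
    "DRI": (31, 30, 7, 7, 16),
    "WAL": (1076, 666, 600, 350, 731),
    "RUN": (3, 3, 8, 8, 0),
    "GRO": (7, 15, 3, 6, 27),
    "SOC": (43, 49, 23, 22, 17),
    "FIG": (0, 0, 0, 0, 309),
    "SLE": (0, 0, 0, 0, 208),
}

MIN_BURSTS = 30  # hypothetical cut-off, inferred rather than documented


def usable_behaviors(column, min_bursts=MIN_BURSTS):
    """Behaviors, in table order, with at least `min_bursts` bursts for `column`."""
    idx = COLUMNS.index(column)
    return [behavior for behavior, counts in COUNTS.items()
            if counts[idx] >= min_bursts]


if __name__ == "__main__":
    for column in COLUMNS:
        print(column, usable_behaviors(column))
```

For Max (e-obs) this yields STA, LIE, FEA, FTM, FED, FEG, RUM, WAL, FIG and SLE, the same ten classes as the first confusion matrix. Because no count falls between 27 and 30, any cut-off from 28 to 30 bursts gives identical behavior sets.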

| Goal / Prediction by RF | STA | LIE | FEA | FTM | FED | FEG | RUM | WAL | FIG | SLE |
|---|---|---|---|---|---|---|---|---|---|---|
| STA | 17 | 0 | 0 | 1 | 5 | 1 | 3 | 0 | 1 | 2 |
| LIE | 0 | 28 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 |
| FEA | 0 | 0 | 28 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| FTM | 0 | 0 | 6 | 19 | 1 | 0 | 1 | 2 | 1 | 0 |
| FED | 2 | 0 | 0 | 1 | 22 | 2 | 1 | 2 | 0 | 0 |
| FEG | 0 | 0 | 0 | 0 | 0 | 29 | 0 | 0 | 1 | 0 |
| RUM | 3 | 0 | 0 | 0 | 2 | 0 | 24 | 0 | 1 | 0 |
| WAL | 0 | 0 | 0 | 2 | 1 | 0 | 0 | 26 | 1 | 0 |
| FIG | 3 | 0 | 1 | 0 | 0 | 1 | 0 | 1 | 24 | 0 |
| SLE | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 26 |

| Goal / Prediction by RF | STA | LIE | FEA | FTM | FED | FEG | RUM | DRI | WAL | SOC |
|---|---|---|---|---|---|---|---|---|---|---|
| STA | 15 | 4 | 0 | 1 | 3 | 0 | 2 | 1 | 1 | 3 |
| LIE | 3 | 20 | 0 | 0 | 1 | 1 | 5 | 0 | 0 | 0 |
| FEA | 0 | 0 | 28 | 1 | 0 | 0 | 0 | 0 | 0 | 1 |
| FTM | 0 | 1 | 1 | 17 | 1 | 0 | 6 | 0 | 1 | 3 |
| FED | 0 | 0 | 0 | 1 | 22 | 4 | 0 | 1 | 1 | 1 |
| FEG | 0 | 0 | 0 | 0 | 1 | 27 | 0 | 2 | 0 | 0 |
| RUM | 3 | 5 | 0 | 7 | 1 | 0 | 14 | 0 | 0 | 0 |
| DRI | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 26 | 0 | 0 |
| WAL | 0 | 0 | 0 | 1 | 3 | 0 | 0 | 0 | 25 | 1 |
| SOC | 2 | 0 | 2 | 1 | 6 | 2 | 2 | 1 | 2 | 12 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 36 | 3 | 1 | 0 | 0 | 0 |
| FTM | 1 | 33 | 3 | 1 | 2 | 0 |
| FED | 0 | 1 | 30 | 8 | 1 | 0 |
| FEG | 0 | 0 | 6 | 34 | 0 | 0 |
| RUM | 3 | 3 | 0 | 0 | 34 | 0 |
| WAL | 0 | 0 | 0 | 0 | 0 | 40 |

| Goal / Prediction by RF | STA | LIE | FEA | FTM | FED | FEG | RUM | DRI | WAL | SOC |
|---|---|---|---|---|---|---|---|---|---|---|
| STA | 4 | 8 | 0 | 4 | 6 | 1 | 7 | 0 | 0 | 0 |
| LIE | 4 | 16 | 0 | 2 | 0 | 1 | 6 | 0 | 1 | 0 |
| FEA | 0 | 0 | 30 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| FTM | 1 | 2 | 0 | 16 | 2 | 0 | 4 | 0 | 2 | 3 |
| FED | 1 | 0 | 0 | 1 | 19 | 0 | 0 | 2 | 2 | 5 |
| FEG | 0 | 0 | 0 | 0 | 1 | 28 | 0 | 1 | 0 | 0 |
| RUM | 8 | 5 | 0 | 4 | 1 | 0 | 11 | 0 | 1 | 0 |
| DRI | 0 | 0 | 0 | 0 | 1 | 4 | 0 | 25 | 0 | 0 |
| WAL | 0 | 0 | 0 | 1 | 4 | 0 | 0 | 0 | 25 | 0 |
| SOC | 0 | 0 | 1 | 1 | 6 | 1 | 0 | 0 | 4 | 17 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 27 | 2 | 5 | 1 | 5 | 0 |
| FTM | 1 | 32 | 2 | 0 | 1 | 4 |
| FED | 0 | 1 | 33 | 5 | 0 | 1 |
| FEG | 0 | 0 | 5 | 35 | 0 | 0 |
| RUM | 2 | 3 | 0 | 0 | 35 | 0 |
| WAL | 0 | 3 | 2 | 0 | 0 | 35 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 22 | 2 | 4 | 0 | 1 | 1 |
| FTM | 4 | 16 | 1 | 0 | 1 | 8 |
| FED | 3 | 0 | 20 | 0 | 0 | 7 |
| FEG | 0 | 0 | 8 | 14 | 0 | 8 |
| RUM | 5 | 4 | 2 | 0 | 19 | 0 |
| WAL | 1 | 1 | 0 | 0 | 0 | 28 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 19 | 4 | 1 | 1 | 2 | 3 |
| FTM | 0 | 20 | 1 | 0 | 9 | 0 |
| FED | 0 | 4 | 21 | 4 | 1 | 0 |
| FEG | 0 | 0 | 5 | 25 | 0 | 0 |
| RUM | 3 | 7 | 0 | 0 | 20 | 0 |
| WAL | 0 | 10 | 2 | 0 | 0 | 18 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 25 | 0 | 2 | 0 | 3 | 0 |
| FTM | 0 | 17 | 6 | 1 | 4 | 2 |
| FED | 0 | 0 | 16 | 11 | 0 | 3 |
| FEG | 0 | 0 | 0 | 30 | 0 | 0 |
| RUM | 1 | 4 | 0 | 0 | 25 | 0 |
| WAL | 0 | 0 | 1 | 0 | 0 | 29 |

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 8 | 8 | 6 | 1 | 6 | 1 |
| FTM | 1 | 20 | 2 | 0 | 4 | 3 |
| FED | 0 | 1 | 29 | 0 | 0 | 0 |
| FEG | 0 | 0 | 3 | 27 | 0 | 0 |
| RUM | 3 | 24 | 0 | 0 | 2 | 1 |
| WAL | 0 | 4 | 8 | 0 | 0 | 18 |
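
In these matrices, rows give the observed behavior ("Goal") and columns the behavior predicted by the random forest (RF); every row of the matrix above sums to 30 bursts. Per-behavior scores follow from counting true and false positives and negatives in one-vs-rest fashion. The Python sketch below computes sensitivity (recall), specificity, precision, plain accuracy and balanced accuracy (the mean of sensitivity and specificity) for the matrix above, plus an unweighted macro average. This text does not state which definition underlies the accuracy, precision and recall values in the summary tables, so treating balanced accuracy as the per-behavior measure is an assumption; the function names are illustrative.

```python
"""Per-behavior scores from a confusion matrix (minimal sketch).

Rows are the observed behavior ("Goal"), columns the behavior predicted by
the random forest. Balanced accuracy as the per-behavior measure is an
assumption for illustration, not a definition taken from the text.
"""

LABELS = ["STA", "FTM", "FED", "FEG", "RUM", "WAL"]

# Counts copied from the confusion matrix above.
MATRIX = [
    [8, 8, 6, 1, 6, 1],    # STA
    [1, 20, 2, 0, 4, 3],   # FTM
    [0, 1, 29, 0, 0, 0],   # FED
    [0, 0, 3, 27, 0, 0],   # FEG
    [3, 24, 0, 0, 2, 1],   # RUM
    [0, 4, 8, 0, 0, 18],   # WAL
]


def per_class_scores(matrix, labels):
    """One-vs-rest scores for every behavior in `labels`."""
    total = sum(sum(row) for row in matrix)
    scores = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                 # observed as label, predicted otherwise
        fp = sum(row[i] for row in matrix) - tp  # predicted as label, observed otherwise
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn)             # also called recall
        specificity = tn / (tn + fp)
        precision = tp / (tp + fp) if tp + fp else float("nan")
        scores[label] = {
            "sensitivity": sensitivity,
            "specificity": specificity,
            "precision": precision,
            "accuracy": (tp + tn) / total,       # plain one-vs-rest accuracy
            "balanced_accuracy": (sensitivity + specificity) / 2,
        }
    return scores


def macro_average(scores, key):
    """Unweighted mean of one score over all behaviors."""
    return sum(s[key] for s in scores.values()) / len(scores)


if __name__ == "__main__":
    for label, s in per_class_scores(MATRIX, LABELS).items():
        print(f"{label}: balanced accuracy {s['balanced_accuracy']:.3f}, "
              f"precision {s['precision']:.3f}")
```

For RUM in this matrix, only 2 of 30 bursts are recognised (24 are predicted as FTM): sensitivity is about 0.067 and specificity about 0.933, giving a balanced accuracy of 0.500. Plain one-vs-rest accuracy would be 142/180 ≈ 0.789, because the many correctly rejected non-RUM bursts dominate, which is why a class-balanced measure is the more informative choice here.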

| Goal / Prediction by RF | STA | FTM | FED | FEG | RUM | WAL |
|---|---|---|---|---|---|---|
| STA | 24 | 1 | 4 | 1 | 0 | 0 |
| FTM | 6 | 13 | 7 | 0 | 2 | 2 |
| FED | 4 | 0 | 15 | 9 | 0 | 2 |
| FEG | 1 | 0 | 0 | 29 | 0 | 0 |
| RUM | 24 | 4 | 0 | 0 | 2 | 0 |
| WAL | 0 | 2 | 2 | 0 | 0 | 26 |


| Behavior Category / Accuracy | e-obs: Max | e-obs: Farrah | e-obs: Jaffa | Mean Accuracy per Behavior (e-obs) | AWT: Farrah | AWT: Jaffa | Mean Accuracy per Behavior (AWT) |
|---|---|---|---|---|---|---|---|
| STA | 0.879 | 0.877 | 0.965 | 0.907 | 0.617 | 0.916 | 0.767 |
| LIE | 0.993 | 0.913 | --- | 0.953 | 0.856 | --- | 0.856 |
| FEA | 0.969 | 0.983 | --- | 0.976 | 0.997 | --- | 0.997 |
| FTM | 0.922 | 0.879 | 0.936 | 0.912 | 0.865 | 0.921 | 0.893 |
| FED | 0.930 | 0.904 | 0.904 | 0.913 | 0.861 | 0.906 | 0.884 |
| FEG | 0.983 | 0.951 | 0.933 | 0.956 | 0.969 | 0.951 | 0.960 |
| RUM | 0.957 | 0.833 | 0.959 | 0.916 | 0.779 | 0.951 | 0.865 |
| DRI | --- | 0.967 | --- | 0.967 | 0.970 | --- | 0.970 |
| WAL | 0.967 | 0.962 | 1.000 | 0.976 | 0.944 | 0.955 | 0.950 |
| SOC | --- | 0.835 | --- | 0.835 | 0.897 | --- | 0.897 |
| FIG | 0.957 | --- | --- | 0.957 | --- | --- | --- |
| SLE | 0.971 | --- | --- | 0.971 | --- | --- | --- |
| mean | 0.953 | 0.910 | 0.949 | 0.937 | 0.875 | 0.933 | 0.904 |
| precision | 0.973 | 0.946 | 0.968 | 0.962 | 0.915 | 0.959 | 0.937 |
| recall | 0.972 | 0.942 | 0.968 | 0.961 | 0.905 | 0.957 | 0.931 |
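
The "Mean Accuracy per Behavior" columns and the "mean" row are consistent with plain arithmetic means taken only over the cells that hold a value. For STA with e-obs, (0.879 + 0.877 + 0.965) / 3 = 0.907; for LIE, where Jaffa has no value, (0.993 + 0.913) / 2 = 0.953. The Python sketch below recomputes these means for the e-obs columns of the table above; the function names are illustrative, and because the inputs are the rounded three-decimal values, the last digit can occasionally differ from the published means.

```python
"""Recompute the mean columns and the "mean" row of the e-obs accuracy table.

None stands for "---" (behavior not analysed for that individual). Inputs are
the rounded three-decimal values, so the last digit can differ from the table.
"""

# Column order: Max, Farrah, Jaffa (e-obs, individual analyses).
EOBS_ACCURACY = {
    "STA": (0.879, 0.877, 0.965),
    "LIE": (0.993, 0.913, None),
    "FEA": (0.969, 0.983, None),
    "FTM": (0.922, 0.879, 0.936),
    "FED": (0.930, 0.904, 0.904),
    "FEG": (0.983, 0.951, 0.933),
    "RUM": (0.957, 0.833, 0.959),
    "DRI": (None, 0.967, None),
    "WAL": (0.967, 0.962, 1.000),
    "SOC": (None, 0.835, None),
    "FIG": (0.957, None, None),
    "SLE": (0.971, None, None),
}


def mean_present(values):
    """Arithmetic mean of the non-missing values, or None if all are missing."""
    present = [v for v in values if v is not None]
    return sum(present) / len(present) if present else None


def row_means(table):
    """Mean accuracy per behavior across the individuals that have it."""
    return {behavior: mean_present(row) for behavior, row in table.items()}


def column_means(table, n_cols):
    """Mean accuracy per individual across the behaviors analysed for it."""
    return [mean_present([row[j] for row in table.values()]) for j in range(n_cols)]


if __name__ == "__main__":
    for behavior, value in row_means(EOBS_ACCURACY).items():
        print(behavior, round(value, 3))
    print("mean per individual:",
          [round(m, 3) for m in column_means(EOBS_ACCURACY, 3)])
```

Averaging only over available cells means that behaviors recorded for a single individual (DRI, SOC, FIG, SLE) carry that individual's value unchanged into the mean column.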

| Behavior Category / Accuracy | e-obs: Max (test) | e-obs: Farrah (test) | e-obs: Jaffa (test) | Mean Accuracy per Behavior (e-obs) | AWT: Farrah (test) | AWT: Jaffa (test) | Mean Accuracy per Behavior (AWT) |
|---|---|---|---|---|---|---|---|
| STA | 0.867 | 0.899 | 0.963 | 0.910 | 0.727 | 0.777 | 0.752 |
| FTM | 0.838 | 0.781 | 0.870 | 0.830 | 0.723 | 0.796 | 0.760 |
| FED | 0.836 | 0.881 | 0.825 | 0.847 | 0.895 | 0.785 | 0.840 |
| FEG | 0.862 | 0.939 | 0.937 | 0.913 | 0.976 | 0.940 | 0.958 |
| RUM | 0.906 | 0.853 | 0.928 | 0.896 | 0.500 | 0.569 | 0.535 |
| WAL | 0.864 | 0.888 | 0.967 | 0.906 | 0.875 | 0.952 | 0.914 |
| mean | 0.862 | 0.874 | 0.915 | 0.884 | 0.827 | 0.803 | 0.815 |
| precision | 0.919 | 0.918 | 0.948 | 0.928 | 0.783 | 0.878 | 0.831 |
| recall | 0.898 | 0.913 | 0.939 | 0.917 | 0.779 | 0.801 | 0.790 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Brandes, S.; Sicks, F.; Berger, A. Behaviour Classification on Giraffes (Giraffa camelopardalis) Using Machine Learning Algorithms on Triaxial Acceleration Data of Two Commonly Used GPS Devices and Its Possible Application for Their Management and Conservation. Sensors 2021, 21, 2229. https://doi.org/10.3390/s21062229
