1. Introduction

Transportation infrastructure plays a vital role in our society, as it enables people to engage in activities that produce private, public, and social benefits [1]. Among large structural assets, bridge structures are built to connect people, shorten travel time, cross obstacles, improve traffic flow at complex crossroads, and allow access to regions otherwise inaccessible. In this sense, the socioeconomic impact of an inoperative bridge, as well as the life-threatening consequences of a damaged and unattended bridge network, can be incalculable. Therefore, the ability to evaluate the structural safety and the serviceability condition of existing bridge structures within the road infrastructure is one of the main tasks of engineers and a great desire of bridge owners. Additionally, many countries enforce the maintenance of their infrastructure assets with strict laws and regulations [2], such as the German model building code [3], the German civil code [4], and EU regulations for construction products [5].

A bridge is considered safe if the probability of failure during its service does not exceed a nominal value. The same is true for the serviceability, in which the likelihood that service limits (e.g., vibration or deflection) are exceeded must be small [6]. This concept has been widely adopted for decades in design codes throughout the world, and it is strictly observed during the design and construction phases of new bridges and other wide-span structures. However, to deem an existing bridge structure as safe or unsafe to operate is not as easy a task as it may seem.

On the one hand, the safety and serviceability requirements undergo constant changes regarding actions and resistance models, as well as the understanding of structural behavior and failure modes. As a result, generations of bridges that were designed using superseded codes may be unsafe to operate, even if they are undamaged [7,8]. On the other hand, deterioration processes and changes in environmental conditions severely affect bridge structures’ safety and serviceability. Some examples are the reduction of resistance due to deterioration of concrete decks, corrosion or mechanical damage, the increasing traffic volume and vehicle weight [9], and the exposure to natural hazards due to climate change. Additionally, concrete material deterioration plays an essential role in the long-term damage accumulation and strength reduction in concrete structures [10]. For example, corrosion causes stiffness reduction [11], while cracking may increase the stress amplitude in the cross-section [12]. A comprehensive review of degradation models and stiffness degradation can be found in [11,13].

To cope with the challenges of managing existing bridge structures, the concept of bridge management systems (BMS) emerged to support engineers and bridge managers in making cost-effective decisions for the planning of maintenance, rehabilitation, and replacement (MRR) [14]. A BMS is defined as the rational and systematic approach to organizing and carrying out all activities related to managing individual bridges within an infrastructure network [15]. It became well established in 1993 when the US government issued legislation outlining the obligatory requirements for a BMS model, as summarized by Tran (2018) [14]:

Database (inventory, inspection data, maintenance data).

Condition rating model (field evaluation of bridge condition).

Deterioration model (prediction of condition of bridge components).

Cost model (identification of costs and benefit).

Optimization model (search of optimal MRR strategies).

Risk model (risk ranking and risk assessment).

The different BMS practices among countries and companies have periodic visual inspection as the fundamental source of information [16]. The observed changes in the structure are recorded in a database and used as a qualitative condition rating. Although visual inspections, when carried out regularly by qualified personnel, are cost-effective and provide thresholds for the decision-making process, they provide little information about the inspected bridge’s actual structural safety and serviceability state without subsequent analysis and structural assessment. Moreover, the visual inspection procedures focus on visible physical damage, disregarding the safety and serviceability constraints adopted during the design phase. Hence, costly in-depth investigations and maintenance actions are often unnecessarily prompted based only on the visual perception of safety. At the same time, genuinely threatening events can occur between inspection appointments or go unnoticed because they are not visible or accessible, such as the failure of prestressed tendons under certain conditions and risks from faulty execution. Only in rare cases are the bridge maintenance actions triggered by a failed safety and serviceability check [6]. Therefore, visual inspections are indispensable as the primary source of information, but insufficient to satisfy the current needs of modern bridge maintenance programs [2,17,18] and societal expectations.

In this sense, the inclusion of non-destructive testing (NDT) and structural health monitoring (SHM) techniques into the bridge management process has become increasingly sought [19,20]. The possibility of estimating the actual load level and occurrence and detecting structural deterioration and damage events using SHM systems could bring the BMS to the next level of sophistication. With the information provided by the sensor network, more realistic numerical models can be achieved through structural identification [21] and model updating techniques [22], and then be used, e.g., to simulate the real structural behavior and estimate the expected service life of the structure. Moreover, a suitable number and placement of sensors and robust data acquisition software enable the real-life detection of damage events and the online triggering of alarms.

The potential of SHM to optimize the management of bridge structures has motivated its research in the structural engineering field since the 1970s [23], with more than 17,000 papers published between 2008 and 2017. Nonetheless, the transfer rate of research to industrial practice is disappointing [24].

Cawley [24] notes that the scientific community should acknowledge the need to perform SHM research on real practical cases, rather than on simple beam and plate specimens with idealized failure modes, controlled environmental constraints, and without consideration of false calls. The deployment of full SHM systems, including data handling and decision-making, must be carefully designed. It is crucial to consider, among others, the environmental conditions in which the system operates, the characteristics of the damage that the system should detect and its probability of occurrence, how the data is collected and transmitted, how the resulting data will be analyzed and translated into reliable performance indicators, and what actions are to be taken from the results and who will be responsible for making them [25]. Such considerations are difficult, if not unfeasible, to investigate in simple laboratory tests.

Moreover, the sensor network and its operating system must allow the detection of local damage. Global monitoring, such as the exclusive use of vibration or shock sensors, has an excellent sensitivity to changes in the boundary conditions and mass distribution. Still, it may not be sensitive enough to detect local damage on large structures, depending on the setup and the system’s behavior. Cawley [26] reported that a crack with a depth of 1% of the cross-section height at the root of a cantilever beam would reduce its natural frequency by less than 0.1%. Even if 10% of the cross-section were removed, the natural frequency would be reduced by less than 1%. Hence, the detection of local damage on bridge structures requires a high sensor-count network with an appropriate area coverage and signal-to-noise ratio to prevent false calls. Therefore, the system must be carefully designed to ensure its cost-effective deployment and meaningful operation.

An important aspect during the design of real-size SHM systems is the optimum sensor placement (OSP), which involves defining the minimum number of sensing points and the sensor layout [27]. For simple reduced-scale models, the number of degrees of freedom (DOF) usually allows the placement of as many sensors as necessary to extract structural parameters correctly. However, in real-sized applications, structures may have many thousands of DOFs, while sensors can be placed only at a finite number of locations [28], leaving a gap between the experimental SHM results and the real structural response [29]. While OSP algorithms based on modal analysis are well established [30], few researchers discuss OSP based on static parameters such as stress, strain, cracking, and long-term deformation [31,32]. Furthermore, different structure types require different OSP approaches. For example, in a plane truss, the correct estimation of the nodal displacements seems to be a reasonable OSP approach, which can be accomplished by measuring the axial deformation on selected truss elements [27,31]. On the other hand, in prestressed concrete bridges, the rupture of prestressed tendons at random locations may alter the structure’s static response, thus requiring a new sensor layout to identify structural damage correctly. In the latter case, SHM systems that prioritize distributed or quasi-distributed sensing are more appropriate.

Another issue of real-size SHM, often disregarded in laboratory tests, is the translation of the measured data to reliable information, i.e., the interpretation and handling of a massive amount of data [33]. A reasonable strain data collection on an average two-lane bridge concerning prestressed steel failure may result in hundreds of GB of data per month, corresponding to millions of spreadsheet lines. With the increasing availability of remote communication systems, the decreasing cost of sensors, and longer battery life and energy harvesting in remote locations, more and more structures are being monitored in some way. Still, the capacity to transfer a large amount of data into meaningful information has only marginally increased [34]. Due to the growing number of sensors, the data stream can become unmanageable; many SHM managers report that they do not know what information to keep, ending up with piles of hard disks to store TBs of measured data no one ever looks at in detail [35]. While in small deployments the engineer may individually analyze the data from installed sensors, a high sensor-count system must automatically highlight the anomalous signals for which the assessment of the existence, location, and severity of damage, followed by prognostics, is to be performed [36,37]. Moreover, the initial structural state is generally unknown outside of laboratory environments. The influence of temperature and other external disturbances, which can significantly affect the measured values, is a further drawback. Consequently, it is often necessary to manually check and interpret the data, requiring, on the one hand, the permanent availability of personnel and, on the other hand, leading to late detection or false alarms, which can compromise the monitoring system’s reliability.

Up to now, the detection of structural changes in SHM systems falls into two main philosophies: model-based and data-driven methods. The first, also known as model updating or the inverse approach, usually combines measured structural responses with finite element (FE) model predictions and supports long-term decision-making such as lifetime estimation, repair, and evaluation [38,39,40,41,42,43,44]. However, FE modeling and updating are time-consuming and come with a high computational cost. Given the many uncertainties, a calibrated model may not be correct even if its predictions match the measured observations [45]. For the data-driven methods, nonparametric approaches, such as the moving principal component analysis (MPCA) and robust regression analysis, can be applied to the data measurement history for damage detection and have been demonstrated in many laboratory tests and real-case applications [46,47,48,49]. Still, their real-time capability to detect structural changes is only feasible to a limited extent. The reasons lie in the unknown actual structural reaction due to local changes, such as prestressed steel rupture and crack formation, and the discrepancies between the real and theoretical properties related to the structure’s materials and geometry. Other methods independent of baseline references delivered promising results for real-life damage detection in bridge structures. They rely on statistical learning methods (e.g., neural networks and clustering) to extract intrinsic features without requiring prior knowledge of the structure’s health, and can be used on multiple sensing platforms, such as imaging techniques, modal parameters, and static parameters (inclination, displacement, strain) [50,51,52,53,54].

This work presents a novel algorithm for the real-time analysis and alarm triggering of a high sensor-count monitoring system deployed on a structure that is subjected to environmental and random dynamic loading. The SHM system is based on a long-gauge fiber Bragg grating (FBG) (LGFBG) sensor network and was installed on a real-life prestressed concrete national highway bridge in Neckarsulm, Germany. Statistical and quantitative parameters are continuously updated from the strain and temperature data stream using a real-time computing (RTC) algorithm that performs, amongst others, a correlation analysis between adjacent sensors and allows the automatic detection of unexpected local structure changes by the minute. The algorithm is built based on redundancy to enhance its reliability and prevent false calls. A three-step check is performed to handle outliers, noise, and other random and unpredictable events. One important novelty of the proposed algorithm is that it was implemented inside the data acquisition software and is executed during runtime. In other words, the damage detection algorithm runs parallel to the measurements, and the analysis is carried out before data storage and data transmission take place.

Additionally, a post-processing method based on the principal components analysis (PCA) is applied to demonstrate the correlation coefficient analysis’s reliability to detect behavior changes in a high sensor-count system. Although the PCA post-processing results are compared with the real-time analysis results, they are two independent matters and should not be confused. The novel real-time damage detection algorithm does not depend on any post-processing evaluation. The PCA results are used to mathematically support the findings around the proposed algorithm.

The novel algorithm for real-time analysis and the post-processing method are part of a pilot monitoring system developed to assess the structural health of prestressed concrete bridge structures by providing robust, yet meaningful information to support bridge managers and bridge inspectors in the decision-making process of bridge maintenance. The system has a comprehensive data management design to handle the large volume of data produced from four different post-processing approaches and the real-time analysis algorithm.

The paper is organized as follows:

Section 2 describes the monitored bridge and the installed monitoring system.

Section 3 presents the data management system.

Section 4 describes the novel real-time analysis algorithm and the post-processing method based on the MPCA.

Section 5 contains the results of the real-time analysis and the MPCA post-processing method.

Section 6 closes the work with the conclusions.

3. Data Management

The monitoring system’s data acquisition is performed using the commercial software Catman AP developed and distributed by Hottinger Brüel and Kjaer (HBK), Darmstadt, Hesse, Germany. Beyond the required characteristics of a robust acquisition software, Catman also allows the online processing of computational channels and auxiliary channels that can be used to perform user-defined tasks via scripting.

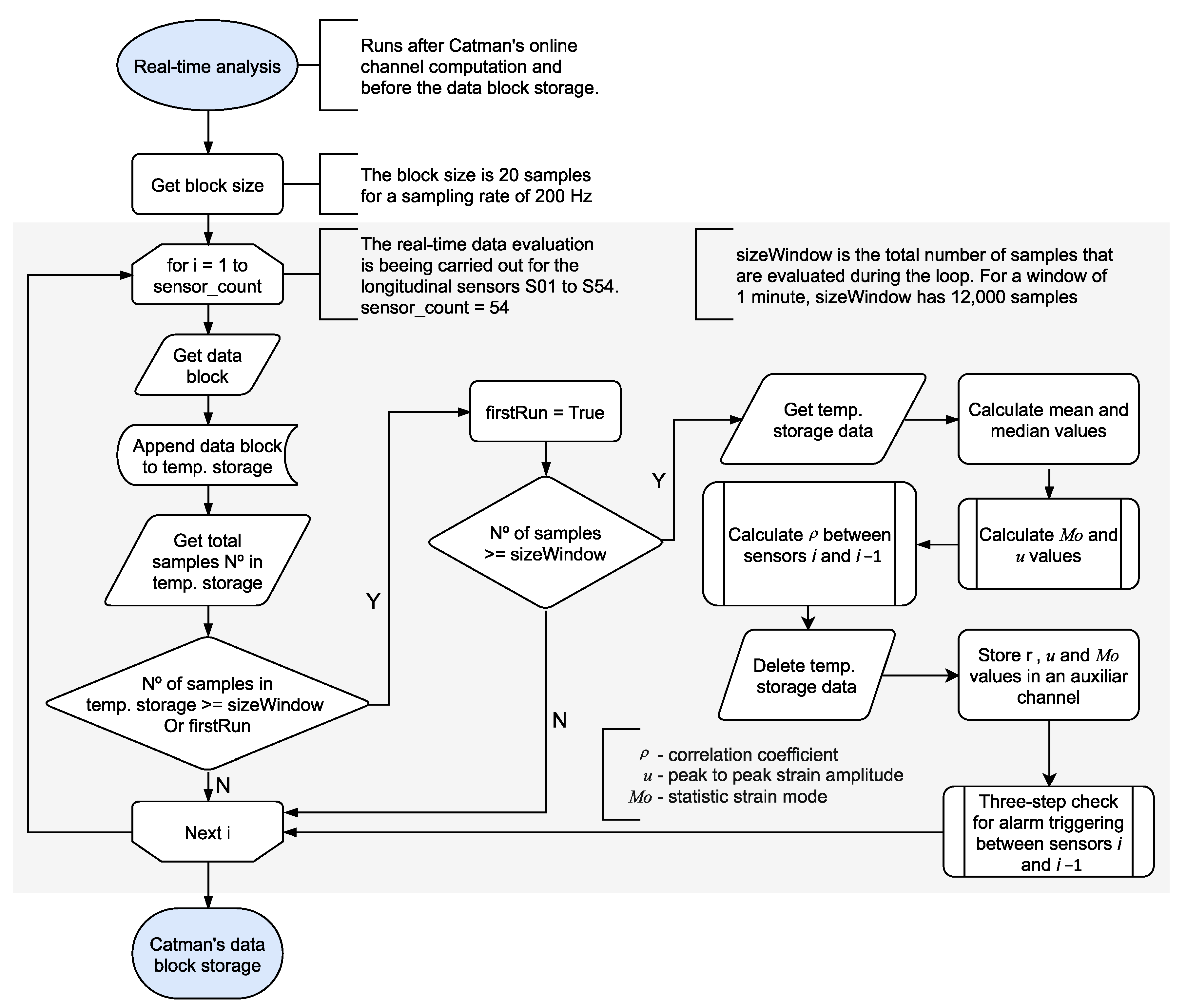

The data acquisition (DAQ) process in Catman (DAQ job) can be divided into three main stages, namely the job preparation, the data transfer cycle, and the job finalization. During each stage, a series of closed tasks is executed in the background without the user’s control. The basic workflow of a DAQ job is shown in Figure 5. However, using Catman’s scripting functionality, it is possible to “intercept” a data block using a scripted procedure to carry out user-defined tasks. The system automatically sets the size of a data block according to the data sampling rate. For a sampling rate of 200 Hz, a data block has 20 measurement points for each of the 184 sensors, giving a total of 10 data transfer cycles per second per sensor (185 cycles every 100 ms). The data block cycle allows the implementation of RTC to process the data as it comes in, which is the core of the novel real-time analysis presented in this work.
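The relation between sampling rate, block size, and transfer cycles can be illustrated with a short sketch. It is written in Python for illustration only and does not use Catman’s scripting interface; the names `block_timing` and `WindowBuffer` are hypothetical, and the one-minute window length is taken from the analysis described in Section 5.

```python
def block_timing(sampling_rate_hz, samples_per_block):
    """Return (blocks per second, block duration in ms) for one sensor."""
    blocks_per_second = sampling_rate_hz / samples_per_block
    block_duration_ms = 1000.0 / blocks_per_second
    return blocks_per_second, block_duration_ms


class WindowBuffer:
    """Hypothetical helper: collects data blocks until one window is full."""

    def __init__(self, samples_per_window):
        self.samples_per_window = samples_per_window
        self._samples = []

    def push(self, block):
        """Append one block; return a complete window, or None if not yet full."""
        self._samples.extend(block)
        if len(self._samples) < self.samples_per_window:
            return None
        window = self._samples[:self.samples_per_window]
        self._samples = self._samples[self.samples_per_window:]
        return window


# Values from the text: 200 Hz sampling, 20 samples per block.
blocks_per_second, block_duration_ms = block_timing(200.0, 20)
samples_per_minute = int(200.0 * 60)  # samples in a one-minute window

# Feed 600 dummy blocks (60 s of data for one sensor) into the buffer.
buffer = WindowBuffer(samples_per_minute)
completed = [buffer.push([0.0] * 20) for _ in range(600)]
```

Under these assumptions, a one-minute window for a single sensor is complete after 600 blocks, which is the point at which window statistics could be evaluated before the data is stored.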

Moreover, the Catman software allows parallel data recorders configuration, where selected sensors with different saving configurations can be simultaneously stored into permanent files. For the monitoring system in Neckarsulm, four separate recorders were created and associated with specific events, namely the dynamic continuously event, the dynamic triggered event, the statistic journal, and the real-time analysis data. However, only the real-time analysis data belongs to the scope of this paper.

Given the high sensor-count and the dynamic measuring characteristics, the amount of data generated is enormous; thus, it was necessary to create a robust data management for both the data storing and the post-processing. The chosen solution was to integrate MathWorks MATLAB [61], Natick, MA, USA, and the MySQL [62], Austin, TX, USA, database using scripts written specifically for this application.

MATLAB is a powerful analysis software known for its wide range of built-in mathematical and statistical methods for data analysis and numerical computation. Among its many features, MATLAB allows connecting to an SQL database and executing queries from within the MATLAB workspace. Data in MATLAB can be stored in an SQL database, and data from an SQL database can be loaded into MATLAB for analysis. The SQL database can save millions of data entries and provides fast access to a specific set of data within the database, optimizing the data saving and the data query for analysis.

Figure 6 shows the data storing process, where the received monitoring data is saved into a MySQL database through MATLAB processing. The monitoring system generates about 600 GB of raw data every month. Only 2 GB are effectively stored in the SQL database after executing the pre-processing scripts developed exclusively for this project. Nevertheless, all raw data generated during this SHM project is being stored in an external hard drive disk (HDD) for scientific purposes.

The SQL database was structured to allow easy management and query for post-processing and data visualization. The database schema has four groups of relational tables divided by the type of recorder, as shown in Figure 6, and a single table to store the sensors’ information and calibration coefficients. Additionally, a rainflow analysis result from the dynamic continuously event is saved separately as a MATLAB structure after its pre-processing.

Figure 7 shows an overview of the SQL schema and its table groups. Each group has a parent file table that records the files’ information and gives them a unique file-id number, followed by a child entry table that indexes all the entries in each file with a unique entry-id number. Finally, the records are organized in separate result tables, where each row corresponds to a unique entry-id. The fields enclosed by curly brackets {} in the result tables refer to an array of fields, usually one for each sensor, and are represented this way for simplification.

The tables within each group are linked together by foreign keys, where the entry-id in the result tables refers to the entry table, which directs its file-id to the files table. The SQL relational structure optimizes the data selection from the database without the loss of referential integrity and facilitates database management. The JOIN clause, e.g., permits the rows and columns from two or more result tables to be combined based on their related entry-id, allowing the selection of results from the desired period of a specific sensor without the need to load the entire dataset. Moreover, if a file-id must be removed from the file table, all the rows in the entry table related to that file are automatically deleted. Likewise, all the rows in the results table linked to the removed rows in the entry table are erased.
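The file/entry/result relationship, the JOIN-based selection, and the cascading delete can be sketched as follows. This is a minimal illustration that uses Python’s built-in `sqlite3` module in place of MySQL and MATLAB; all table names, column names, and data values are hypothetical and do not reproduce the project’s actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is enabled.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE files (
    file_id   INTEGER PRIMARY KEY,
    file_name TEXT NOT NULL
);
CREATE TABLE entries (
    entry_id    INTEGER PRIMARY KEY,
    file_id     INTEGER NOT NULL REFERENCES files(file_id) ON DELETE CASCADE,
    recorded_at TEXT NOT NULL
);
CREATE TABLE result_corr (
    entry_id   INTEGER PRIMARY KEY REFERENCES entries(entry_id) ON DELETE CASCADE,
    cf_s02_s03 REAL
);
CREATE TABLE result_amp (
    entry_id INTEGER PRIMARY KEY REFERENCES entries(entry_id) ON DELETE CASCADE,
    u_s03    REAL
);
""")

# Illustrative rows only (not measured data).
conn.executemany("INSERT INTO files VALUES (?, ?)", [(1, "a.bin"), (2, "b.bin")])
conn.executemany("INSERT INTO entries VALUES (?, ?, ?)", [
    (1, 1, "2020-07-14 10:00"),
    (2, 1, "2020-07-14 10:01"),
    (3, 2, "2020-07-15 10:00"),
])
conn.executemany("INSERT INTO result_corr VALUES (?, ?)",
                 [(1, 0.98), (2, 0.20), (3, 0.97)])
conn.executemany("INSERT INTO result_amp VALUES (?, ?)",
                 [(1, 12.0), (2, 640.0), (3, 15.0)])

# JOIN two result tables on entry_id and select one day only.
rows = conn.execute("""
    SELECT e.recorded_at, c.cf_s02_s03, a.u_s03
    FROM entries AS e
    JOIN result_corr AS c ON c.entry_id = e.entry_id
    JOIN result_amp  AS a ON a.entry_id = e.entry_id
    WHERE e.recorded_at >= '2020-07-14' AND e.recorded_at < '2020-07-15'
    ORDER BY e.entry_id
""").fetchall()

# Removing a file cascades to its entries and to their result rows.
conn.execute("DELETE FROM files WHERE file_id = 1")
entries_left = conn.execute("SELECT COUNT(*) FROM entries").fetchone()[0]
results_left = conn.execute("SELECT COUNT(*) FROM result_corr").fetchone()[0]
```

The cascade behavior here comes from the `ON DELETE CASCADE` clause on each foreign key; whether the original database uses this clause or another mechanism is not stated in the text.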

5. Results from the Real-Size SHM

In this section, the detailed results from the novel real-time analysis for the sensors S02, S03, and S04 are shown first, followed by the general study of sensors S01–S27, located in one of the longitudinal quasi-distributed measuring lines. The real-time evaluation algorithm is based on a three-step validation process. Three statistical parameters are continuously calculated in parallel with the reception of measurement data for all longitudinal direction sensors (S01 to S54). Each step will be referred to as a filter, as summarized in Table 1. As described in Section 4, the strain correlation coefficient ρ between neighboring sensors, the maximal peak-to-peak amplitude u, and the statistical strain mode Mo are determined for every one-minute time window. Lastly, the PCA results are used to evaluate the correlation between the sensors and their contribution to the structural system’s behavior.
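The three window parameters can be illustrated with a small sketch in plain Python. It is not the implementation used in the acquisition software: the function names are hypothetical, the Pearson coefficient is assumed as the correlation measure, and the strain mode is approximated by rounding to a bin width (`bin_width`, an assumed parameter) because a mode of continuous values needs some form of binning.

```python
import math
from collections import Counter


def correlation(x, y):
    """Pearson correlation coefficient between two equally long signals."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)  # undefined for a constant signal


def peak_to_peak(x):
    """Maximal peak-to-peak amplitude u within the window."""
    return max(x) - min(x)


def strain_mode(x, bin_width=1.0):
    """Most frequent strain value after rounding to bin_width (assumption)."""
    bins = Counter(round(v / bin_width) for v in x)
    return bins.most_common(1)[0][0] * bin_width


# Synthetic window: constant offset of 100 µm/m with one short "vehicle crossing".
s_a = [100.0] * 1000
s_a[100:110] = [140.0] * 10
# Neighboring sensor responding linearly to the same load (illustrative).
s_b = [0.5 * v + 3.0 for v in s_a]

rho = correlation(s_a, s_b)
u = peak_to_peak(s_a)
mo = strain_mode(s_a)
```

In this synthetic window the mode returns the offset of the unloaded state, because the crossing occupies only a small share of the samples.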

5.1. Real-Time Analysis Algorithm

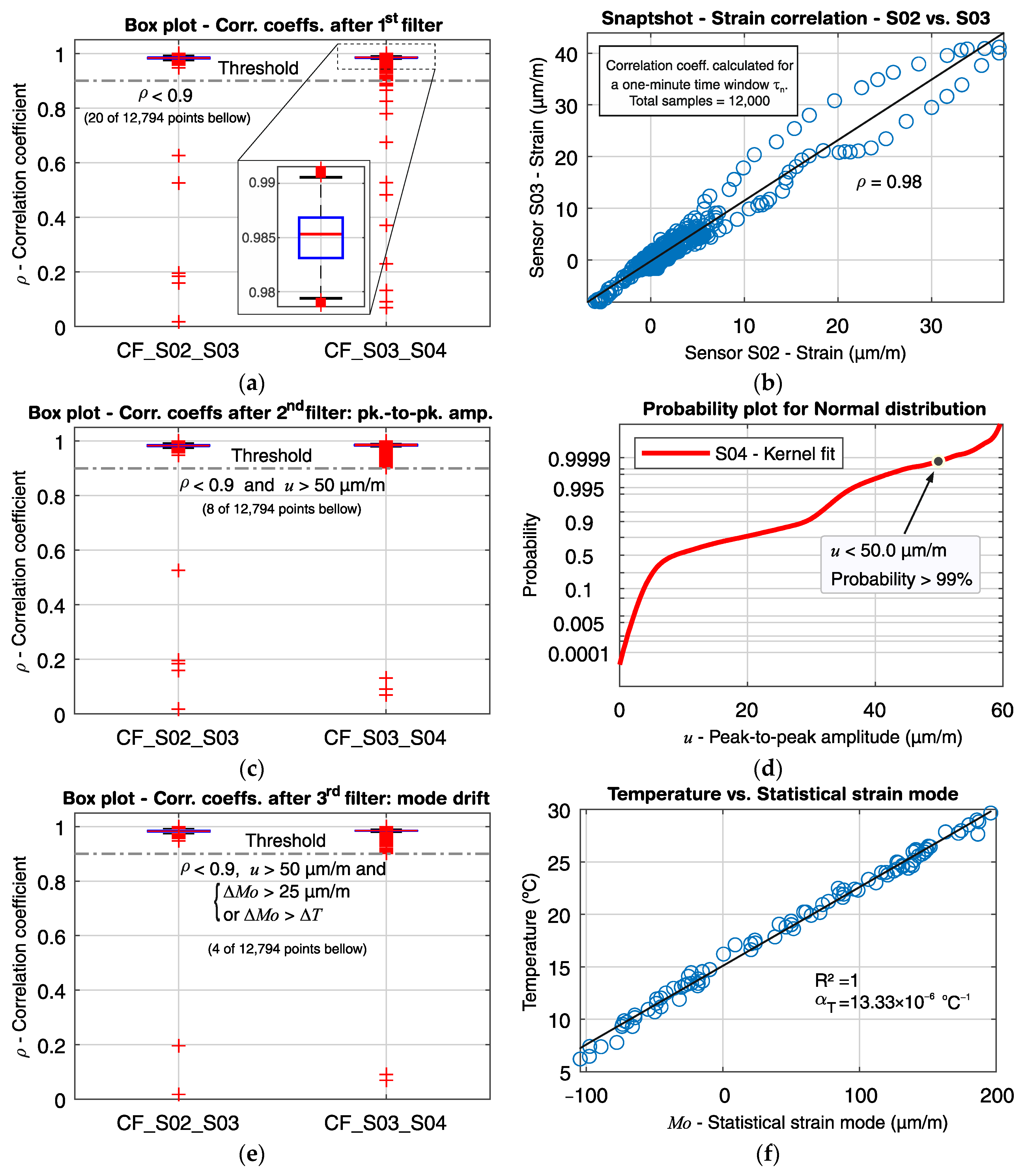

Figure 12a shows the box plot for the strain correlation coefficients ρ between sensors S02 and S03 (CF_S02_S03), and sensors S03 and S04 (CF_S03_S04), where a total of 12,794 moving time windows were recorded from 14 July to 6 November 2020 (116 days). It can be noticed that both pairs of sensors have high correlation magnitudes, both with medians of approximately 0.98, and narrow interquartile and score ranges. The number of outliers is about 10% of the total cases, which is expected in large sample sets. A magnified box plot for CF_S03_S04 is given in Figure 12c, where the median, interquartile range and extreme limits are depicted in detail. Figure 12b shows an example of the dependency between the strain signals from sensors S02 and S03 during a time window, with the calculated correlation coefficient ρ = 0.98.

When the first filter is applied, a total of 20 outliers representing 0.156% of the total measured points remain below the first filter’s threshold (ρ < 0.9). In other words, the correlation coefficients smaller than 0.9 are treated as potentially problematic, as they indicate a loss of linearity behavior between two neighboring sensors that ought to be otherwise linearly correlated and should thus be further analyzed. If only the first filter were used for the real-time analysis, the bridge managers would have received 20 alarm calls—around one call per week—for just two pairs of sensors. They would not have had additional information to judge whether the alarms were related to unexpected structural integrity changes or false calls (e.g., noise).

Next, the second filter is applied, where the peak-to-peak strain amplitudes u of each sensor are checked at the time intervals corresponding to the low correlation coefficients that went through the first filter. The peak-to-peak strain amplitude of a short moving time window is closely related to the traffic load; hence, values within the normal range of traffic operation can be disregarded during the real-time analysis, and only those with values above a specified limit should be treated further. In this example, a peak-to-peak amplitude limit of u > 50 µm/m is applied for demonstration purposes (during operation, the limit is set at u > 60 µm/m, given that, based on the entire measurement history, there is a less than 1% probability that the peak-to-peak amplitude will exceed 60 µm/m). Figure 12a shows the box plots for the correlation coefficients after removing the points that passed the first filter (ρ < 0.9) but did not meet the second filter’s condition u > 50 µm/m. Of the 20 points below the first filter’s threshold, only eight remained after the second filter. In Figure 12d, the cumulative distribution function of the peak-to-peak strain amplitude is depicted. It can be noted that the adopted limit for u is within the service traffic load for the measurement history, where there is a probability of less than 1% that u will exceed 50 µm/m.

Even though the number of distinguished points was considerably reduced after the second filter, there is still insufficient information to call the remaining points problematic. A peak-to-peak strain amplitude above the average traffic operation does not necessarily mean that structural damages took place. The safety design checks require that the ultimate loads be higher than expected service loads.

Finally, the third and last filter is applied. In this stage, the strain mode Mo at the time window of each remaining point i is compared with the strain modes of the previous and subsequent time windows. Given that each strain sensor has its own temperature sensor for temperature compensation, the drift in the strain signal offset over time can be related to the structure’s deformation due to temperature variation. Thus, the signal offset of each sensor for a given time window can be determined from the statistical strain mode. Since the traffic load is intermittent, and the crossing of a vehicle takes a few seconds, the statistical mode for a short time window should represent the signal offset for an “unloaded state”. The correlation between the strain mode (signal offset) and the temperature at sensor S02 is shown in Figure 12f. A linear correlation can be observed, with R² ≈ 1. Therefore, when analyzing two consecutive short time periods, the strain mode drift should not be higher than the expected deformation due to the temperature variation for that same period. Thus, if the strain mode variation ΔMo is higher than 25 µm/m (which is equivalent to a crack opening with a width of 0.0125 mm) and also exceeds the deformation expected from the temperature variation over the same period (Mo in µm/m and temperature in °C), the point is flagged as problematic, and the system sends an alarm to the bridge managers.
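The three-step check described above can be summarized in a short sketch. It is an illustration under stated assumptions, not the authors’ implementation: the names `WindowStats` and `three_step_check` are hypothetical, the operational limits (ρ < 0.9, u > 60 µm/m, ΔMo > 25 µm/m) are taken from the text, and the temperature-dependent limit is assumed to be a linear slope times the temperature variation. The slope value is not given in this section, so it is a required argument, and the value used in the example is purely a placeholder.

```python
from dataclasses import dataclass


@dataclass
class WindowStats:
    rho: float         # correlation coefficient with the neighboring sensor
    u: float           # peak-to-peak strain amplitude in µm/m
    delta_mo: float    # strain mode variation to the adjacent window in µm/m
    delta_temp: float  # mean temperature variation to the adjacent window in °C


def three_step_check(stats, thermal_slope, rho_min=0.9, u_limit=60.0,
                     delta_mo_limit=25.0):
    """Return the number of filters passed (0 to 3); 3 means an alarm.

    thermal_slope (µm/m per °C) is an assumed linear strain-temperature slope.
    """
    if stats.rho >= rho_min:
        return 0  # sensors remain linearly correlated
    if stats.u <= u_limit:
        return 1  # amplitude within normal traffic operation
    thermal_drift = abs(thermal_slope * stats.delta_temp)
    if abs(stats.delta_mo) > delta_mo_limit and abs(stats.delta_mo) > thermal_drift:
        return 3  # offset jump not explained by temperature: alarm
    return 2


ASSUMED_SLOPE = 10.0  # µm/m per °C, placeholder value only

normal = three_step_check(WindowStats(0.98, 20.0, 0.5, 0.01), ASSUMED_SLOPE)
low_corr_only = three_step_check(WindowStats(0.85, 30.0, 0.5, 0.01), ASSUMED_SLOPE)
no_offset_jump = three_step_check(WindowStats(0.196, 640.0, 1.1, 0.015), ASSUMED_SLOPE)
offset_jump = three_step_check(WindowStats(0.196, 640.0, 222.0, 0.021), ASSUMED_SLOPE)
```

The last two calls use the values reported later in this section for the demonstration event on sensor S03: only the comparison that contains the 222 µm/m mode drift reaches the alarm stage.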

Figure 12e shows the remaining points after applying the third filter, where four points remain after the application of the three-level filtering. These four points resulted from a demonstration of the real-time analysis operation during a visit to the bridge. A small weight was hung on the gauge-length of sensor S03, causing a rapid perturbation in its measurement signal. Since the neighboring sensors S02 and S04 were not affected, a loss of linearity between sensor S03 and its neighbors was detected by the correlation coefficients CF_S02_S03 (ρ = 0.196) and CF_S03_S04 (ρ = 0.10), thus triggering the first filter (ρ < 0.9). The slight change in the gauge-length curvature due to the added weight also produced an immediate peak-to-peak amplitude of 640 µm/m, which triggered the second filter (u > 60 µm/m). Finally, after the initial perturbation caused by the hanging of the weight, the system went back to equilibrium, and the strain mode from sensor S03 suffered a drift of 222 µm/m (equivalent to a crack opening of w ≈ 0.1 mm) due to the gauge-length elongation caused by the change in its curvature. Hence, the third and final filter was triggered, and the algorithm sent an alarm about this unexpected event. The sudden event of an unusual peak-to-peak amplitude associated with the loss of linearity could indicate structural damage, such as the rupture of a prestressed tendon caused by, e.g., the passing of an overweight truck or corrosion in the tendons. The follow-up mode drift suggests that the gauge-length abruptly changed, which is a good indicator that a crack opening or an unusual relative displacement between the sensor’s anchoring points occurred.
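The equivalence between strain drift and crack opening can be checked with a few lines of arithmetic. The sketch assumes that the whole strain change is concentrated in a single crack within the gauge length; the gauge length itself is not stated in this section and is inferred here from the pair 25 µm/m and 0.0125 mm given for the third filter. The function names are hypothetical.

```python
def implied_gauge_length_mm(strain_um_per_m, crack_width_mm):
    """Gauge length for which the given strain equals the given crack width."""
    return crack_width_mm / (strain_um_per_m * 1e-6)


def equivalent_crack_width_mm(strain_um_per_m, gauge_length_mm):
    """Crack width if the whole strain change is lumped into one crack."""
    return strain_um_per_m * 1e-6 * gauge_length_mm


# Third-filter threshold from the text: 25 µm/m corresponds to 0.0125 mm.
gauge_length = implied_gauge_length_mm(25.0, 0.0125)

# Mode drift observed in the demonstration: 222 µm/m.
crack_width = equivalent_crack_width_mm(222.0, gauge_length)
```

With the inferred gauge length of about 500 mm, the 222 µm/m drift corresponds to roughly 0.11 mm, consistent with the rounded value w ≈ 0.1 mm.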

To better understand the unexpected event depicted in Figure 12e, the strain data from sensors S02 and S03 for the three consecutive one-minute time windows (timestamps and duration in Table 2) are shown in Figure 13. For each time window, the statistical strain mode Mo and the mean temperature for sensor S03 are shown, as well as the correlation coefficient ρ between sensors S02 and S03 (CF_S02_S03). The statistical parameters are summarized in Table 3. The strain signals are depicted after removing the strain offsets, which is done by subtracting the strain mode from the raw strain signal.

During the time window containing the event, the unexpected event in the sensor S03 signal begins at timestamp 10:33:27 h. It can be observed that the sensor S03 strain signal displays unusual behavior, which deviates from its neighbor sensor S02. The correlation coefficient identifies the disagreement between sensors S02 and S03, with ρ = 0.196 in this window, therefore triggering the first filter (ρ < 0.9) of the three-step real-time analysis. Next, the second filter examines the peak-to-peak strain amplitude u during the same window. A maximum u of 640 µm/m is recorded, thus triggering the second filter (u > 60 µm/m). Finally, the last filter comes into action, where the sensor S03 strain mode variation ΔMo and mean temperature variation between the previous and the subsequent time windows are verified. It can be observed that the strain mode and temperature variations between the previous time window and the event window were ΔMo = 1.1 µm/m and 0.015 °C, respectively, which is not sufficient to trigger the third filter (ΔMo > 25 µm/m and ΔMo above the temperature-dependent limit). However, after the strain perturbation in sensor S03 takes place, the strain mode variation in the subsequent time window detects the new signal offset for sensor S03, caused by the elongation of its gauge-length. The strain mode and temperature variations between the event window and the subsequent time window were ΔMo = 222 µm/m and 0.021 °C, finally passing the third and last filter and triggering the alarm call.

In Figure 14, Figure 15 and Figure 16, the correlation coefficients for every pair of neighboring sensors from S01 to S27 are represented in box plots for the period from 14 July to 6 November 2020 (116 days). In Figure 14, the first filter is displayed as a threshold line at ρ = 0.9. From a total of over 184,000 measured time windows, 11,246 points were below the threshold. Although about 94% of the measuring points did not pass the first filter, using only the first filter would still trigger about 97 alarms per day.

Figure 15 shows in detail the correlation coefficient between sensors S14 and S15, where the distribution density, as well as the enlarged box plot, are depicted. It can be noticed that the mean and median values are close to one for well-correlated sensors, and the data dispersion is small. When the second filter is applied (ρ < 0.9 and u > 60 µm/m), as shown in Figure 16, 36 points remain below the threshold. Nonetheless, there would be an alarm triggered every three days, considering just the first and the second filters. Finally, when the third filter level is engaged, only six points remain (Figure 16). Four out of the six remaining points are related to the demonstration described earlier, where the small weight was hung on the gauge-length of sensor S03, provoking a perturbation in its strain signal and thus generating two alarms between sensors S02 and S03, and two alarms between sensors S03 and S04. The other two outliers are associated with the correlation coefficients CF_S12_S13 and CF_S13_S14.
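The alarm rates quoted for each filter level follow directly from the reported counts, as the short check below shows. The total of 184,000 windows is used as a round figure because the text only states “over 184,000”, so the resulting share is approximate; the variable names are illustrative.

```python
days = 116                    # 14 July to 6 November 2020
total_windows = 184_000       # "over 184,000" in the text, used as a round figure
after_first_filter = 11_246   # points with rho < 0.9
after_second_filter = 36      # additionally u > 60 µm/m
after_third_filter = 6        # additionally the strain mode check

share_not_flagged = 1 - after_first_filter / total_windows
alarms_per_day_first = after_first_filter / days
days_per_alarm_second = days / after_second_filter
days_per_alarm_third = days / after_third_filter
```

With all three filters engaged, the six remaining points correspond to roughly one alarm every 19 days over the observation period, four of which stem from the on-site demonstration.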

From Figure 14, it is seen that the sensors located along the spans (namely sensors S01–S06, S10–S18, and S22–S27) are linearly correlated with their neighbor sensors, having medians and narrow interquartile ranges above 0.9 for the correlation coefficient, and a small number of outliers. Similar behavior can be observed between sensors S07–S09 and S19–S21, located on the massive cross-section at the intermediate supports, although the first quartile of the correlation coefficients CF_S08_S09 and CF_S19_S20 is smaller than 0.9. In contrast, the correlation coefficients for the sensors located at the transition between the section with hollow cores and the massive cross-sections (Figure 2) have a low correlation level. The coefficient CF_S09_S10, e.g., has a median of 0.44 and dispersed data, which can be seen from the wide interquartile range from 0.27 to 0.68. Low correlation levels at locations where the structure's flexural rigidity changes abruptly are expected in statically indeterminate systems, since the distributions of internal loads and deformations are a function of the structural rigidity.

It can also be observed from Figure 14 that the correlation coefficients CF_S12_S13 and CF_S13_S14 have a large number of outliers when compared with the other sensors located at the spans, with 4,137 outliers out of 14,740 points (28%), and 3,590 outliers out of 15,187 points (24%), respectively. Not only is the number of outliers high, but a large share of them are smaller than 0.9. While the correlation coefficients for sensors S02–S04, e.g., had only 0.156% of their outliers smaller than 0.9 (Figure 12a), the same rate goes up to 5.163% for the correlation coefficients CF_S12_S13 and CF_S13_S14. Even though the number of outliers with values smaller than 0.9 is high, both correlation coefficients still have a median close to one and narrow interquartile ranges, suggesting a continuous structural dynamic behavior along sensors S12–S14. Moreover, the poorly correlated points were always related to the strain signal from sensor S13, either due to a sudden drift in its offset signal or to a low-frequency vibration event after a vehicle's crossing. This behavior could indicate that the segment covered by sensor S13 has a higher level of cumulative degradation than the segments covered by its neighbor sensors. Nonetheless, only two points related to sensor S13 triggered the three-step real-time analysis, as shown in Figure 16.
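Box-plot statistics of the kind discussed above can be computed as in the following sketch. It assumes the common convention that outliers lie more than 1.5 interquartile ranges beyond the quartiles; the convention actually used for the figures is not stated, so the whisker factor is an assumption, and the function name `boxplot_summary` is hypothetical.

```python
import numpy as np


def boxplot_summary(rho, threshold=0.9, whisker=1.5):
    """Summarize correlation coefficients of one sensor pair.

    whisker=1.5 is the usual box-plot convention (an assumption here).
    """
    rho = np.asarray(rho, dtype=float)
    q1, median, q3 = np.percentile(rho, [25, 50, 75])
    iqr = q3 - q1
    low, high = q1 - whisker * iqr, q3 + whisker * iqr

    # Outliers are the points outside the whiskers.
    outliers = rho[(rho < low) | (rho > high)]
    return {
        "median": float(median),
        "q1": float(q1),
        "q3": float(q3),
        "n_outliers": int(outliers.size),
        # Outliers that would also trip the first filter (rho < threshold).
        "n_outliers_below": int(np.sum(outliers < threshold)),
    }
```

A pair such as CF_S12_S13 would then show a median close to one and a narrow interquartile range together with a large `n_outliers_below` count, which is the pattern described for sensor S13.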

5.2. PCA Post-Processing

The principal component analysis (PCA) is performed to evaluate each sensor's contribution to the system's behavior and how the sensors intercorrelate during the crossing of vehicles. A single vehicle's crossing is analyzed to demonstrate how the PCA works and how the results are interpreted. The example event took place on 10 December 2019 and comprises a time window of 5 s (1000 samples per sensor) of measurement data during a heavy vehicle crossing in the northern direction. The longitudinal sensors are divided into two groups for analysis according to the driving lane in which they are located. Group A includes sensors S01–S27, and group B contains sensors S28–S54 (Figure 3). The results will be demonstrated only for the group A strain lines, as shown in Figure 17.

First, the data set must be organized into a single matrix for the analyzed time window, where each column corresponds to a sensor Si and each row to an observation tn, where i is the sensor's number and n the data sample, as follows:

After normalizing the data matrix, the covariance matrix is constructed, and its eigenvalues and eigenvectors are obtained. There are as many eigenvectors as there are variables, and each eigenvector has as many elements as there are variables. Hence, the eigenvectors form a 27 × 27 matrix, given that group A has 27 sensors.

The principal components (PC) matrix is obtained by sorting the eigenvectors by their eigenvalues in decreasing order, where the first few principal components explain most of the data variance. Figure 18 shows a scree plot with the decreasing rate at which the PCs explain the variance. The first four PCs explain 95.14% of the total variance, the first and the second being responsible for 82.87%.
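The sequence of steps described above (normalize, build the covariance matrix, extract and sort the eigenpairs, compute the explained variance) can be sketched with NumPy as follows. The normalization is assumed here to be a z-score of each sensor column, which is not stated explicitly, and the function name `pca_from_covariance` is hypothetical.

```python
import numpy as np


def pca_from_covariance(data):
    """PCA of a (n_samples, n_sensors) matrix; columns are sensors.

    Returns eigenvalues, eigenvectors (one PC per column) and the
    explained-variance ratio, all sorted by decreasing eigenvalue.
    """
    data = np.asarray(data, dtype=float)
    # Assumed normalization: zero mean and unit sample variance per sensor.
    z = (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)

    cov = np.cov(z, rowvar=False)            # (n_sensors, n_sensors)
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigh: covariance is symmetric

    order = np.argsort(eigvals)[::-1]        # decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]

    explained = eigvals / eigvals.sum()
    return eigvals, eigvecs, explained
```

For a 1000 × 27 input, `eigvecs` is the 27 × 27 PC matrix, and `np.cumsum(explained)` gives the cumulative curve from which figures such as "the first four PCs explain 95.14%" are read.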

Figure 19 shows line plots of the first four PCs' elements for each sensor in the analyzed group A (S01 to S27). From now on, the elements of a PC will be designated as loadings. The loadings calculated from the SHM data are plotted with circle markers and solid lines, while the loadings estimated from a calibrated FE model are plotted with x-markers and dashed lines. The normalized root mean square error (NRMSE) between the FE model results and the SHM measured data is 1.92% for the first PC, 2.46% for the second PC, 2.75% for the third PC, and 3.13% for the fourth PC.
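A comparison of this kind can be sketched as below. The normalization used for the NRMSE is not specified, so the sketch assumes normalization by the range of the measured values; the helper `align_sign` is added because an eigenvector is only defined up to its sign, so two loading vectors may need to be flipped before they are compared. Both function names are hypothetical.

```python
import numpy as np


def align_sign(reference, vector):
    """Flip `vector` if it points opposite to `reference` (PC sign ambiguity)."""
    reference = np.asarray(reference, dtype=float)
    vector = np.asarray(vector, dtype=float)
    return -vector if np.dot(reference, vector) < 0 else vector


def nrmse(measured, predicted):
    """RMSE normalized by the range of the measured values (assumed convention)."""
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    rmse = np.sqrt(np.mean((predicted - measured) ** 2))
    return rmse / (measured.max() - measured.min())


# Usage: compare one PC's measured loadings with model loadings.
# error = nrmse(measured_loadings, align_sign(measured_loadings, model_loadings))
```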

It can be noted from Figure 19a that sensors S10–S18, located in the mid-span, have positive loadings for the first PC, while all sensors located in one of the side spans or in the columns' region have negative loadings for the first PC. In addition, the line plot for the first PC loadings is symmetric with respect to a vertical axis at sensor S14. Loadings with the same sign mean that their variables are directly correlated, while variables that have loadings with opposite signs are inversely correlated. Likewise, variables with higher absolute loadings have a larger variance than variables with smaller absolute loadings.

For example, the first PC loadings for sensors S03 and S13 are −0.17 and 0.24, respectively. Since the original data set is composed of the longitudinal strain measurements during a vehicle's crossing, and we analyze the first PC, it is likely that the behavior of the strain lines from S03 and S13 is inversely correlated. In fact, it can be seen from Figure 17 that the strain lines S03 and S13 always have opposite signs and opposite slopes. Moreover, the absolute loading value for sensor S13 is larger than for sensor S03, reflecting the larger variance of sensor S13.

The second PC (Figure 19b) is most likely related to the shear deformation during the vehicle's crossing. The second PC's loadings have absolute maximal values at the intermediate columns (≈0.30) and close to the southern and northern abutments (≈0.25), where the shear force is usually larger. Unlike the first PC, the second PC's line plot is point-symmetric about the origin defined by the horizontal zero line and a vertical line at sensor S14. When the vehicle approaches sensors S08 and S09 at the first column, for example, the shear force reaches its maximal absolute value at that location. In contrast, the shear force at the second column (sensors S19 and S20) is close to zero at the same timestep (since the first column absorbs the shear load while the vehicle is standing on it). Likewise, as the vehicle approaches sensors S19 and S20 at the second column, the shear force at the second column reaches its maximal value, while the shear force at the first column tends to zero at the same moment. The second PC's loadings at the two described regions have approximately the same absolute value of 0.30, but with opposite signs. Furthermore, the summation of the second PC's loadings is zero, just as the summation of the shear energy for a moving load crossing the structure should be.

Although the third and fourth PCs (Figure 19c,d) together explain only 12.27% of the system's variance, their loadings are consistently distributed and resemble the bending moment diagram (third PC) and the shear force diagram (fourth PC) for a static uniformly distributed load.

Figure 20 shows a bi-plot, where the loadings of the 27 sensors for the first two PCs are plotted as vectors in the new coordinate system formed by the first and second PC axes. The direction and length of the vectors indicate how each variable contributes to the two PCs. The variables' vectors are systematically distributed in the bi-plot's quadrants. Sensors S01 to S06, located at the bridge's first span, are in the second quadrant; sensors S22 to S27, located at the third span, are in the third quadrant; and sensors S10 to S18, located at the mid-span, are symmetrically distributed about the first PC axis in the first and fourth quadrants. Sensors S07 to S09 and S19 to S21, located at the massive section at the columns, are in the third and second quadrants, respectively.

Figure 20 also shows each observation's scores for the first and second PCs as red dots. The scores are a linear combination of the variables at each observation, weighted by their respective loadings, and then scaled with respect to the maximum vector length. Since there are 1000 observations (1000 samples per sensor in the original dataset), there are 1000 pairs of scores with scaled coordinates on the first and second PC axes. The scores' path is related to the vehicle's movement and indicates the direction of most variance after each observation. As can be seen, the first score is located close to the origin. As the vehicle enters the bridge, the scores' path enters the second quadrant, moving in the same direction as the vectors for sensors S01–S06. At some point, the scores' path changes its direction towards the origin as the vehicle approaches the first column. After the vehicle enters the mid-span, the scores move inside the fourth quadrant, where sensors S10–S13 are located. When the vehicle reaches the bridge's midpoint at sensor S14, the scores' path once again changes its direction towards the origin inside the first quadrant, where sensors S15–S18 are. Lastly, the scores' path moves in the same direction as the vectors for sensors S22–S27 in the third quadrant, and changes its direction towards the origin as the vehicle moves to the end of the bridge.
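The score computation described above can be sketched as follows. The exact scaling used for the figure is not given; the sketch assumes one common bi-plot convention, in which the scores are divided by their largest absolute value and multiplied by the length of the longest loading vector. The function name `biplot_scores` and its arguments are hypothetical.

```python
import numpy as np


def biplot_scores(z, loadings, n_pc=2):
    """Project normalized data onto the first `n_pc` PCs.

    z        : (n_samples, n_sensors) normalized data matrix
    loadings : (n_sensors, n_sensors) PC matrix, one PC per column,
               sorted by decreasing eigenvalue
    Returns (scores, scaled_scores), each of shape (n_samples, n_pc).
    """
    coefs = loadings[:, :n_pc]
    # Each score is a linear combination of the variables, weighted by the loadings.
    scores = z @ coefs

    # Assumed bi-plot scaling: fit the score cloud to the longest loading vector.
    max_len = np.sqrt((coefs ** 2).sum(axis=1)).max()
    scaled = scores / np.abs(scores).max() * max_len
    return scores, scaled
```

Plotting `scaled[:, 0]` against `scaled[:, 1]` in observation order traces the scores' path, so the trajectory of the vehicle across the quadrants can be followed sample by sample.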

Finally, the same correlation coefficients calculated by the novel real-time analysis algorithm (as explained and demonstrated in Section 4.1 and Section 5.1) can be extracted from the covariance matrix of the data matrix defined in Equation (5). Thus, the correlation coefficient between two neighboring sensors i and i + 1 is given by the element in row i and column i + 1 of the covariance matrix of the data matrix, with i = 1, …, N − 1, where N is the number of sensors (Equation (4)). The correlation coefficient CF_S02_S03 shown in Figure 12a, for example, is equivalent to the covariance element in row 2 and column 3.
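This equivalence can be checked numerically. When each sensor column is normalized to zero mean and unit sample variance (the normalization assumed in this sketch), the covariance matrix of the normalized data equals the Pearson correlation matrix, so its first off-diagonal holds the neighbor correlation coefficients. The function name `neighbor_correlations` is hypothetical.

```python
import numpy as np


def neighbor_correlations(data):
    """Correlation of each sensor with its next neighbor.

    data : (n_samples, n_sensors) matrix; columns are sensors in array order.
    Returns an array of length n_sensors - 1.
    """
    data = np.asarray(data, dtype=float)
    # Assumed normalization: z-score per sensor column.
    z = (data - data.mean(axis=0)) / data.std(axis=0, ddof=1)
    cov = np.cov(z, rowvar=False)

    # Elements (i, i + 1) of the covariance matrix, i = 0 .. N - 2 (0-based).
    i = np.arange(cov.shape[0] - 1)
    return cov[i, i + 1]
```

With 0-based indexing, the value for CF_S02_S03 sits at position 1 of the returned array, and it matches `np.corrcoef` applied directly to the two raw strain signals.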

Figure 21 shows the correlation coefficients calculated by the novel real-time analysis algorithm for the heavy vehicle's crossing defined in Figure 17. Likewise, the corresponding covariance elements are calculated using the calibrated FE model and plotted for comparison. The correlation coefficients calculated from the SHM measurement data are plotted with circle markers and solid lines, while the values estimated from the calibrated FE model are plotted with x-markers and dashed lines. The normalized root mean square error (NRMSE) between the FE model results and the SHM measured data is 1.92%.

7. Conclusions

In this work, the authors presented a novel real-time analysis algorithm for detecting unexpected events on a real-sized prestressed concrete bridge subjected to random dynamic loads and temperature variation, together with a post-processing method based on principal component analysis (PCA). The methods were demonstrated on a high sensor-count SHM system based on long-gauge FBG sensors installed on a real-sized prestressed bridge in Neckarsulm, Germany. Additionally, the authors described the data management system developed to enable data condensation and translate the measurement data into reliable and meaningful information.

The efficacy of the proposed real-time analysis was demonstrated by analyzing the history of the three-step validation parameters extracted from the measured data from 14 July to 6 November 2020. First, the three-step validation filtering was described using the data from sensor S03 as an example. The steps were (1) filtering the correlation coefficients between sensor S03 and its neighbors, (2) filtering relevant peak-to-peak amplitudes, and (3) filtering the strain mode variation, leaving at the end one alarm call related to an unexpected event provoked on sensor S03 during a visit to the bridge. Next, the unexpected event at sensor S03 was shown, where the strain signals from sensors S02 and S03 were plotted for the one-minute time window that triggered the event, explaining in detail the flow of the three-step validation process. Finally, the overall results for all sensors in the quasi-distributed line S01–S27 during the three-step validation process were presented.

Additionally, the strain signals from sensors S01 to S27 were analyzed using the PCA during a heavy vehicle crossing. The first four principal components were used to evaluate the dependency between the strain sensors’ measurements and highlight the correlation coefficient analysis’s reliability to detect behavior changes.

From the results presented in this work, the following conclusions are drawn:

A robust data management system is essential to handle the raw data of a high sensor-count monitoring system in a real-case SHM application. The data management solution must be able to pre-process the raw data, store it in a reliable database, and allow efficient data selection for post-processing and visualization. Automated scripts should carry out both pre- and post-processing to optimize the speed and reliability of the data handling. The monitoring system in Neckarsulm generates over 70 thousand measurement points per second, leading to about 600 GB of raw data per month, of which less than 3 GB of meaningful information is permanently stored in the database and available for post-processing. It would be impractical to manage such a volume of data manually or using traditional spreadsheet software.

The area coverage and sensor-count are essential aspects to be considered during the development and deployment of a real-size SHM system. A large area of the structure must be measured to correctly depict its behavior and allow the detection of local damage, such as cracks and ruptures in prestressed tendons. The adopted solution with long-gauge FBG sensors offers comprehensive area coverage. Local damage can be detected within every two-meter segment along the two quasi-distributed sensor arrays in the longitudinal direction, and within every 1.35-m segment of the five quasi-distributed sensing arrays in the transverse direction. Moreover, the quasi-distributed sensing arrays can be used to analyze the cross-correlation between the sensors to assess how the structure's segments interact with one another. This analysis allows unexpected structural behavior to be flagged and the structural integrity to be evaluated over the long term by checking for deviations in the cross-correlation relationships.

One important contribution of the novel damage detection algorithm is its implementation inside the data acquisition software, enabling the execution of robust analysis during runtime. The data evaluation is performed in parallel with the measurements, before data storage and data transfer take place. Therefore, large amounts of data can be analyzed to detect anomalous behavior as soon as the sensors measure it, without the need and the computational effort to store and transfer thousands of measurement lines for later processing.

The principal component analysis is a powerful method to reduce large datasets into smaller ones that still hold essential information about the original data. For example, it was possible to reduce a 1000 × 27 matrix of strain measurement points, generated during the crossing of a heavy vehicle, into a 27 × 27 principal components matrix. The first four principal components explain over 95% of the strain data's variance and allow an assessment of how each variable contributes to the overall behavior and how the variables interact with one another. The normalized root-mean-square error (NRMSE) between the PCA of the measured data and the estimated results from the calibrated FE model showed that the monitoring system itself is consistent. The strain measurements are closely and regularly correlated, which endorses the use of correlation coefficients as the critical parameter in the proposed real-time analysis algorithm.

The proposed real-time analysis algorithm is able to address many known limitations of a real-case SHM deployment. First of all, the algorithm runs automatically in real time during the acquisition software's runtime, without the need for human intervention, and can detect unexpected changes minute by minute with a low false-call rate. Secondly, the algorithm can locate the unexpected changes with a resolution as small as the sensors' gauge-lengths, thanks to the high sensor-count and the quasi-distributed sensing arrays. Moreover, the three-step validation process for alarm triggering is not tied to pre-defined failure modes, absolute limit values, or other known switches. On the contrary, it responds to random and dynamic loads, and it is free from environmental influences, such as temperature variation.

Another significant point is that the real-time analysis results can be stored and used later for post-processing. The results’ series can then be used to evaluate long-term structural changes. Finally, the proposed algorithm delivers a reliable notification system that allows bridge managers to track unexpected events with valuable information for decision-making.