Underwater Acoustic Signal Detection Using Calibrated Hidden Markov Model with Multiple Measurements

Abstract

1. Introduction

2. Problem Description

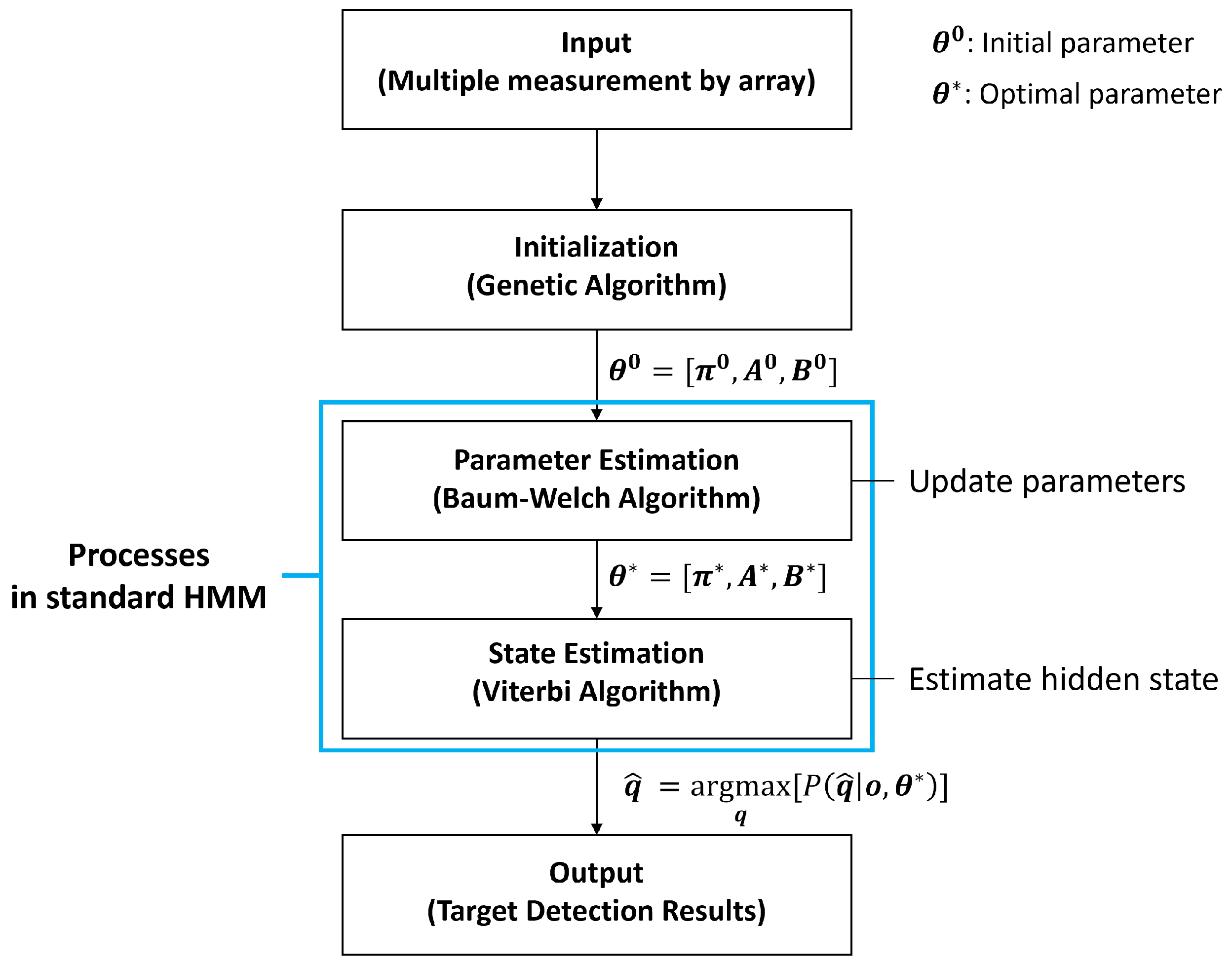

3. HMM Calibration and Parameter Adjustment Using Multiple Measurements

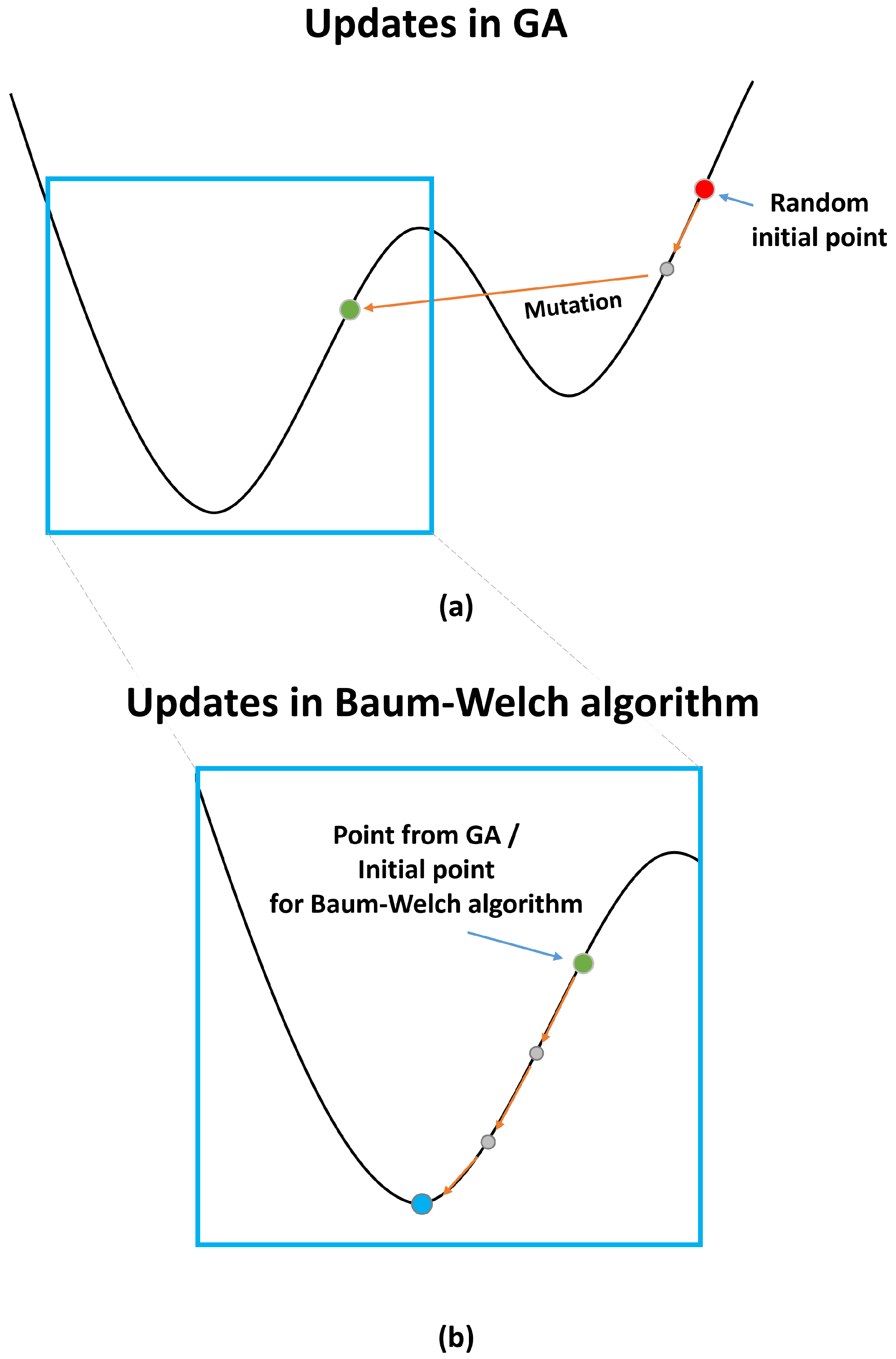

3.1. Initialization: Calibrating HMM

3.2. Parameter Adjustment Using Baum–Welch Algorithm with Multiple Measurements

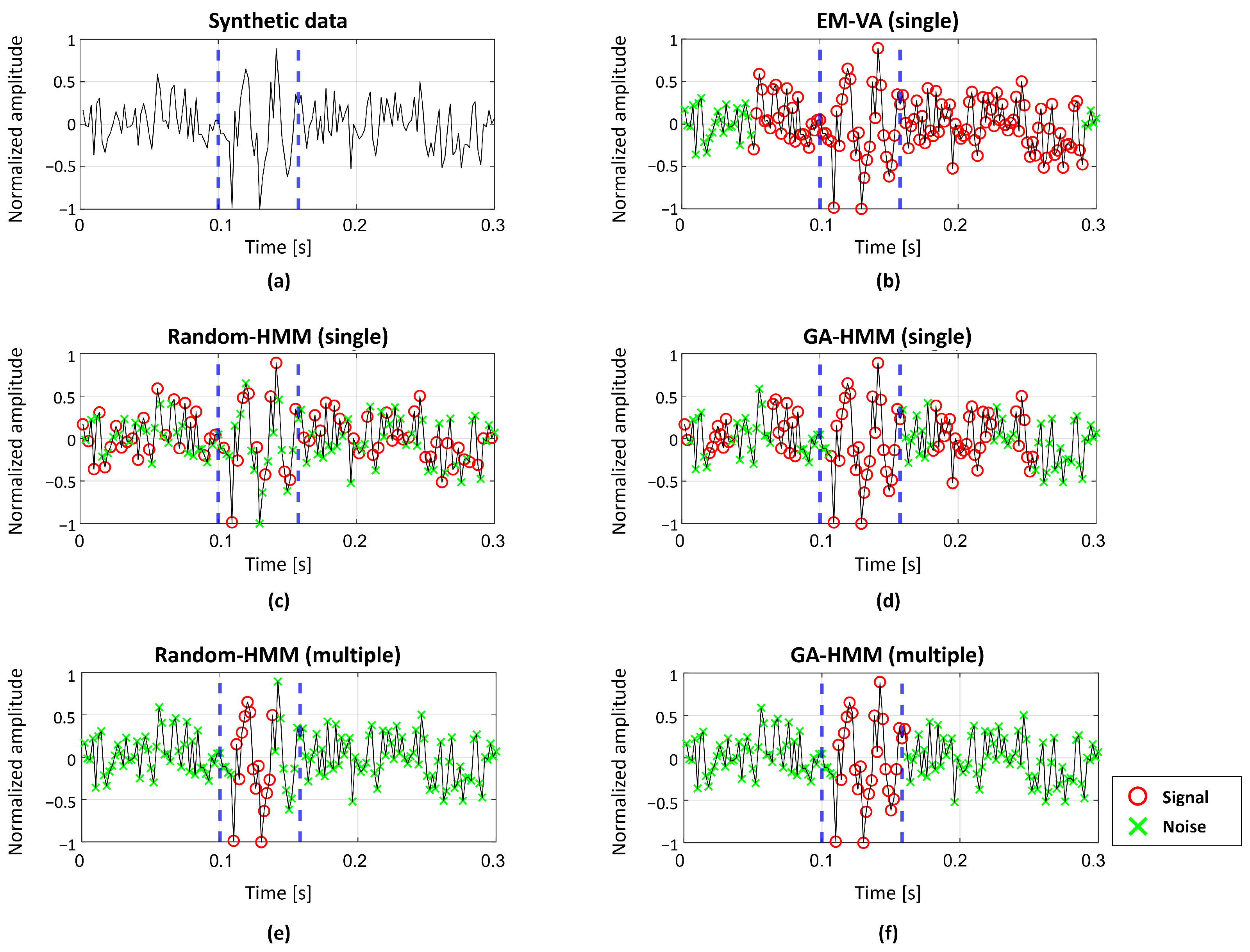

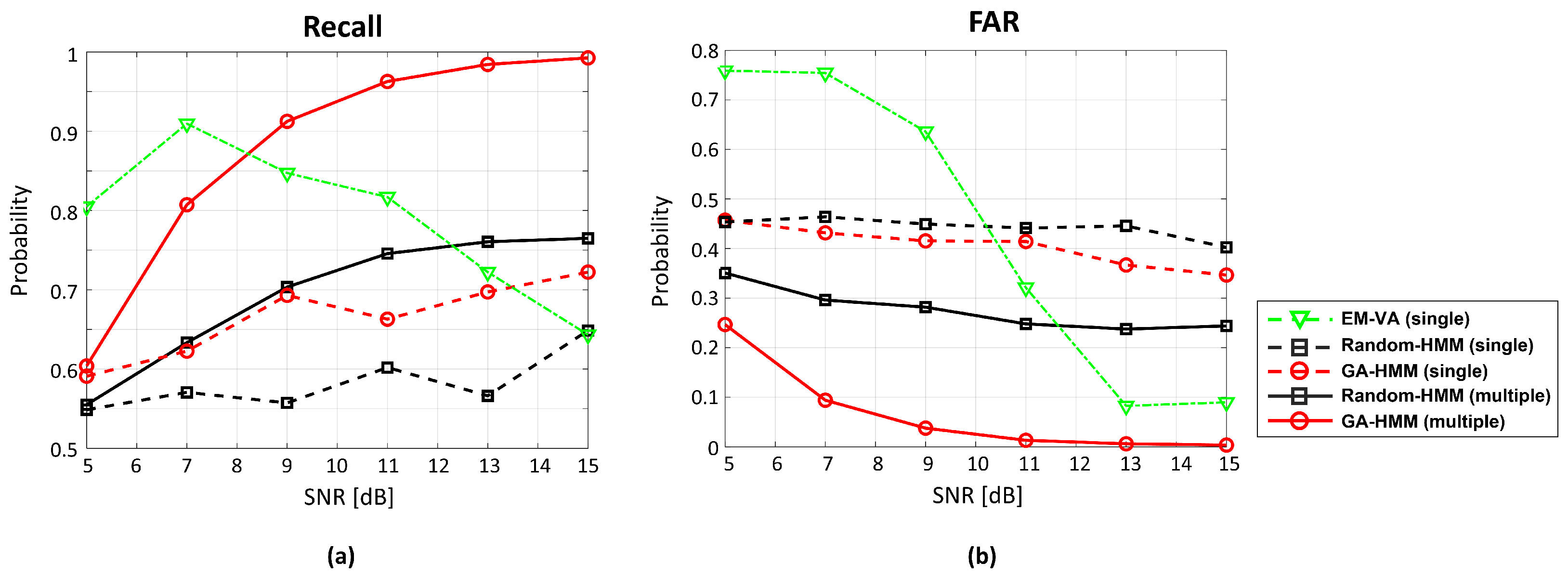
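Section 3.2 refines the HMM parameters with the Baum–Welch algorithm using several measurements at once. Below is a minimal sketch of one common way to do this. The expected state, transition and emission counts from every measurement are pooled before re-estimation, which is the multiple-observation form of Baum–Welch described in Rabiner's tutorial. The sketch makes several assumptions that are not taken from the paper:

- emissions are discrete symbols, for example quantized received-energy levels;
- all measurements carry equal weight;
- a fixed initial guess stands in for the calibration step of Section 3.1.

The function names (`forward_backward`, `baum_welch_multi`, `sample_sequence`) and the two-state toy model are hypothetical. This is not the authors' implementation.

```python
import math
import random


def forward_backward(obs, pi, A, B):
    """Scaled forward-backward pass for one sequence of discrete symbols.

    Returns state posteriors gamma[t][i], transition posteriors xi[t][i][j]
    and the log-likelihood log P(obs | pi, A, B).
    """
    N, T = len(pi), len(obs)
    alpha = [[0.0] * N for _ in range(T)]
    scale = [0.0] * T
    for t in range(T):
        for j in range(N):
            prior = pi[j] if t == 0 else sum(alpha[t - 1][i] * A[i][j] for i in range(N))
            alpha[t][j] = prior * B[j][obs[t]]
        scale[t] = sum(alpha[t])  # normalize each step to avoid underflow
        alpha[t] = [a / scale[t] for a in alpha[t]]
    beta = [[1.0] * N for _ in range(T)]
    for t in range(T - 2, -1, -1):
        for i in range(N):
            beta[t][i] = sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j]
                             for j in range(N)) / scale[t + 1]
    gamma = [[alpha[t][i] * beta[t][i] for i in range(N)] for t in range(T)]
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / scale[t + 1]
            for j in range(N)] for i in range(N)] for t in range(T - 1)]
    return gamma, xi, sum(math.log(c) for c in scale)


def baum_welch_multi(sequences, pi, A, B, n_iter=20):
    """Baum-Welch re-estimation with expected counts pooled over all sequences."""
    N, M = len(pi), len(B[0])
    history = []  # total log-likelihood of all sequences before each update
    for _ in range(n_iter):
        pi_num = [0.0] * N
        A_num = [[0.0] * N for _ in range(N)]
        A_den = [0.0] * N
        B_num = [[0.0] * M for _ in range(N)]
        B_den = [0.0] * N
        total_ll = 0.0
        for obs in sequences:  # E-step, one measurement at a time
            gamma, xi, ll = forward_backward(obs, pi, A, B)
            total_ll += ll
            for i in range(N):
                pi_num[i] += gamma[0][i]
                for t, symbol in enumerate(obs):
                    B_num[i][symbol] += gamma[t][i]
                    B_den[i] += gamma[t][i]
                for t in range(len(obs) - 1):
                    A_den[i] += gamma[t][i]
                    for j in range(N):
                        A_num[i][j] += xi[t][i][j]
        history.append(total_ll)
        # M-step: normalize the pooled counts
        pi = [p / len(sequences) for p in pi_num]
        A = [[A_num[i][j] / A_den[i] for j in range(N)] for i in range(N)]
        B = [[B_num[i][k] / B_den[i] for k in range(M)] for i in range(N)]
    return pi, A, B, history


def sample_sequence(pi, A, B, T, rng):
    """Draw one synthetic symbol sequence from a known HMM."""
    def draw(p):
        return rng.choices(range(len(p)), weights=p)[0]
    state, obs = draw(pi), []
    for _ in range(T):
        obs.append(draw(B[state]))
        state = draw(A[state])
    return obs


# Hypothetical toy model (not from the paper): state 0 = noise only,
# state 1 = signal present; symbols 0/1/2 = low/medium/high quantized energy.
rng = random.Random(0)
true_pi, true_A = [0.8, 0.2], [[0.9, 0.1], [0.2, 0.8]]
true_B = [[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]]
records = [sample_sequence(true_pi, true_A, true_B, 200, rng) for _ in range(5)]

# Fixed initial guess standing in for the calibration step (assumption).
pi0, A0 = [0.5, 0.5], [[0.6, 0.4], [0.4, 0.6]]
B0 = [[0.4, 0.3, 0.3], [0.3, 0.3, 0.4]]
pi, A, B, history = baum_welch_multi(records, pi0, A0, B0, n_iter=30)
print("log-likelihood every 10 iterations:", [round(h, 1) for h in history[::10]])
print("estimated emission matrix:", [[round(b, 2) for b in row] for row in B])
```

The counts are pooled over all records, so each iteration is an ordinary EM step on their joint likelihood. The recorded `history` should therefore never decrease, which gives a quick sanity check on the update.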

4. Analysis of GA-HMM Using Synthetic Data

4.1. Numerical Environment

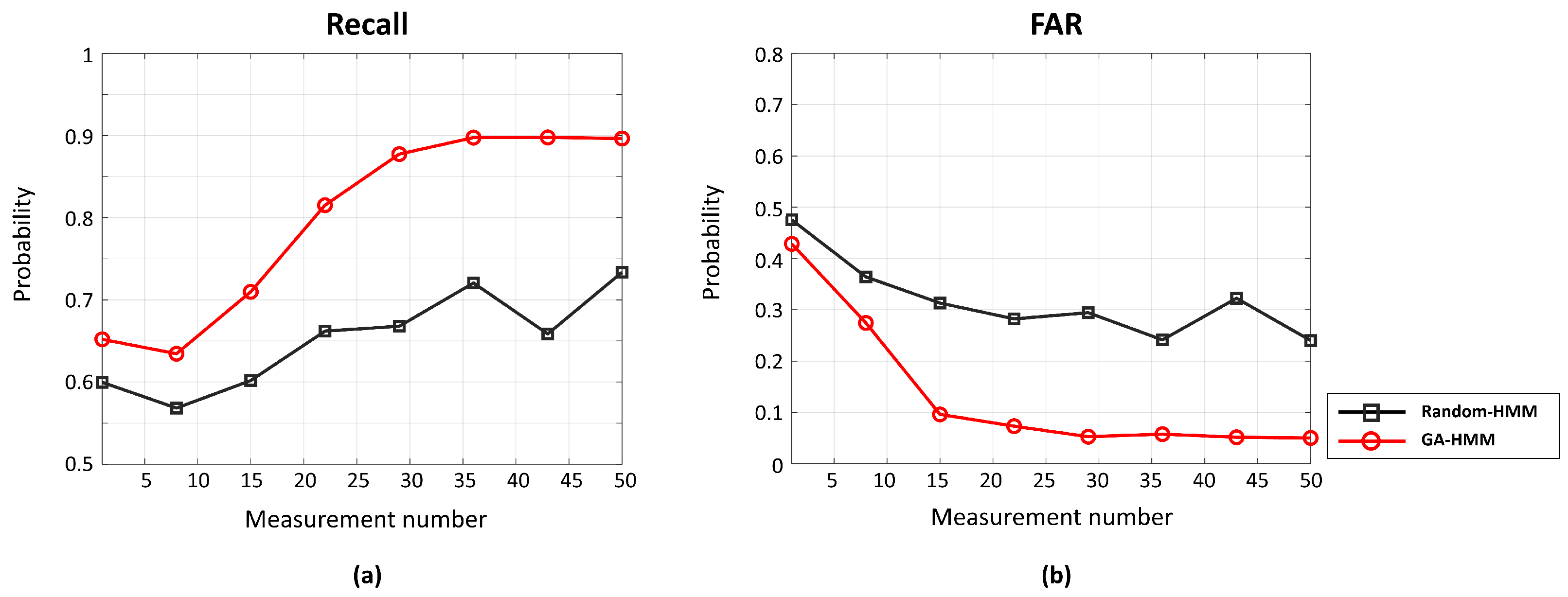

4.2. Detection Performance Analysis of GA-HMM

5. Application of GA-HMM to Measured Acoustic Data

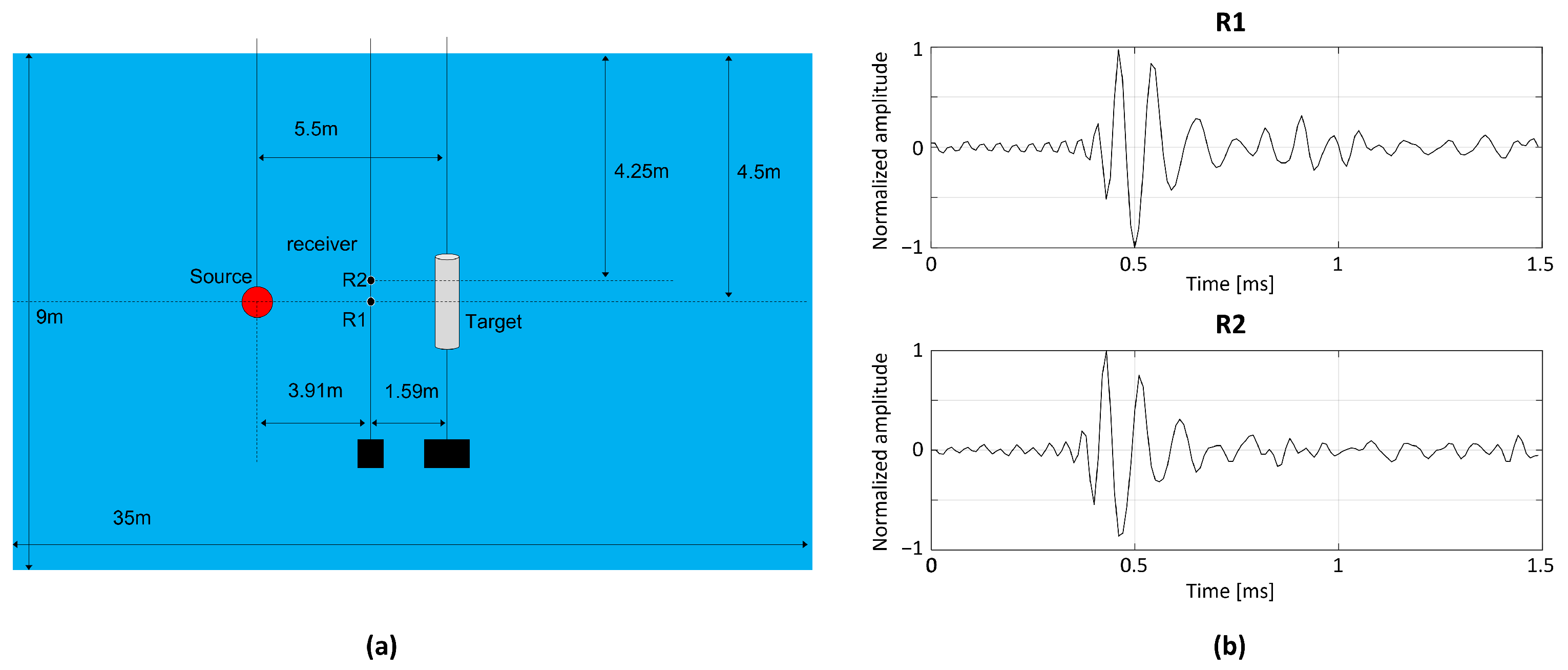

5.1. Experimental Environment

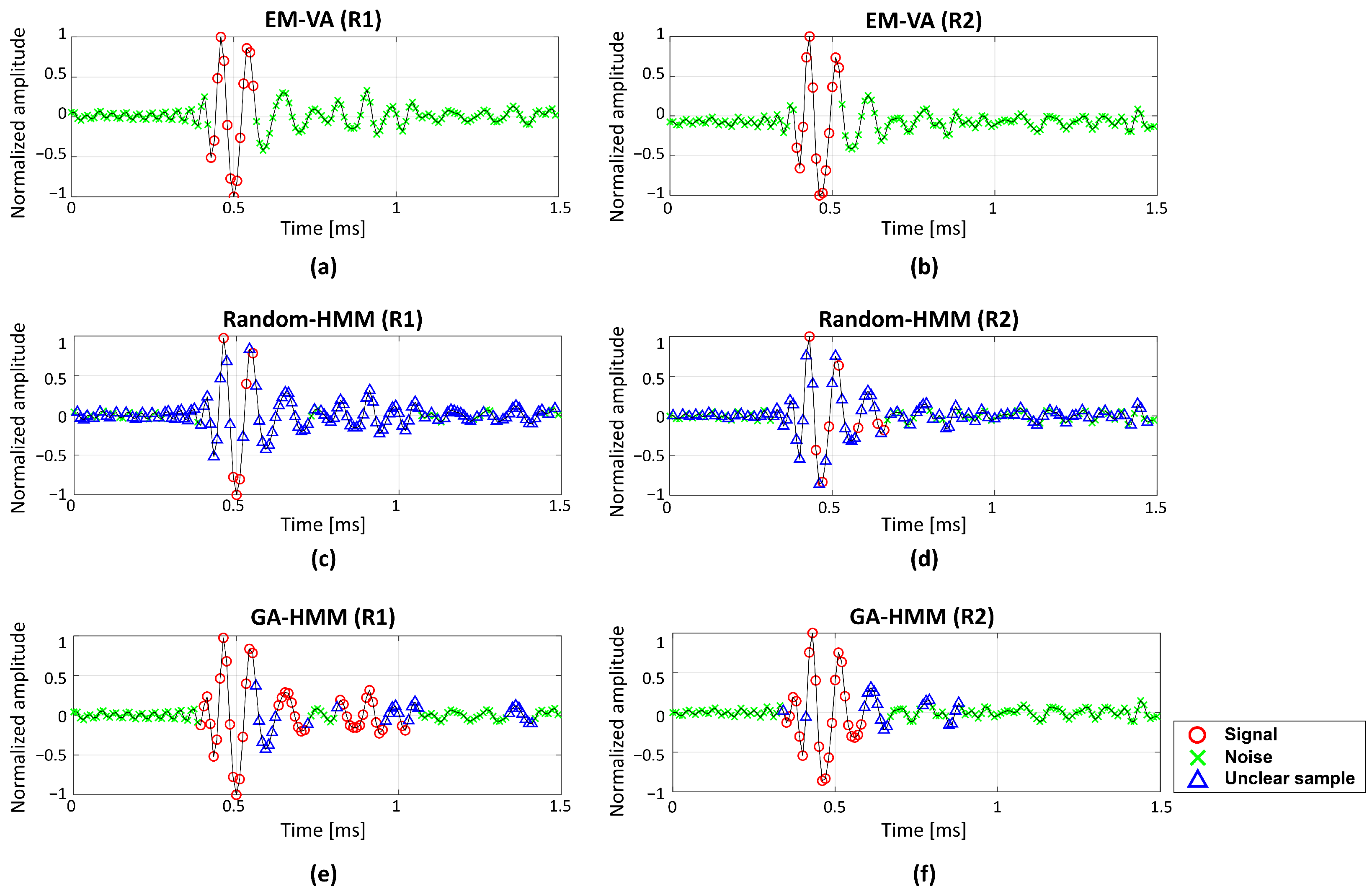

5.2. Detection Results of GA-HMM for Measured Acoustic Signals

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Scheme | Recall | False Alarm Rate | Computation Time (s) |
|---|---|---|---|
| EM-VA (single) | 0.86 | 0.63 | 0.83 |
| Random-HMM (single) | 0.60 | 0.48 | 0.08 |
| GA-HMM (single) | 0.65 | 0.43 | 6.76 |
| Random-HMM (multiple) | 0.69 | 0.27 | 11.02 |
| GA-HMM (multiple) | 0.88 | 0.06 | 12.10 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


You, Heewon; Byun, Sung-Hoon; Choo, Youngmin. Underwater Acoustic Signal Detection Using Calibrated Hidden Markov Model with Multiple Measurements. Sensors 2022, 22(14), 5088. https://doi.org/10.3390/s22145088