Bridging 3D Slicer and ROS2 for Image-Guided Robotic Interventions

Abstract

1. Introduction

2. Methods

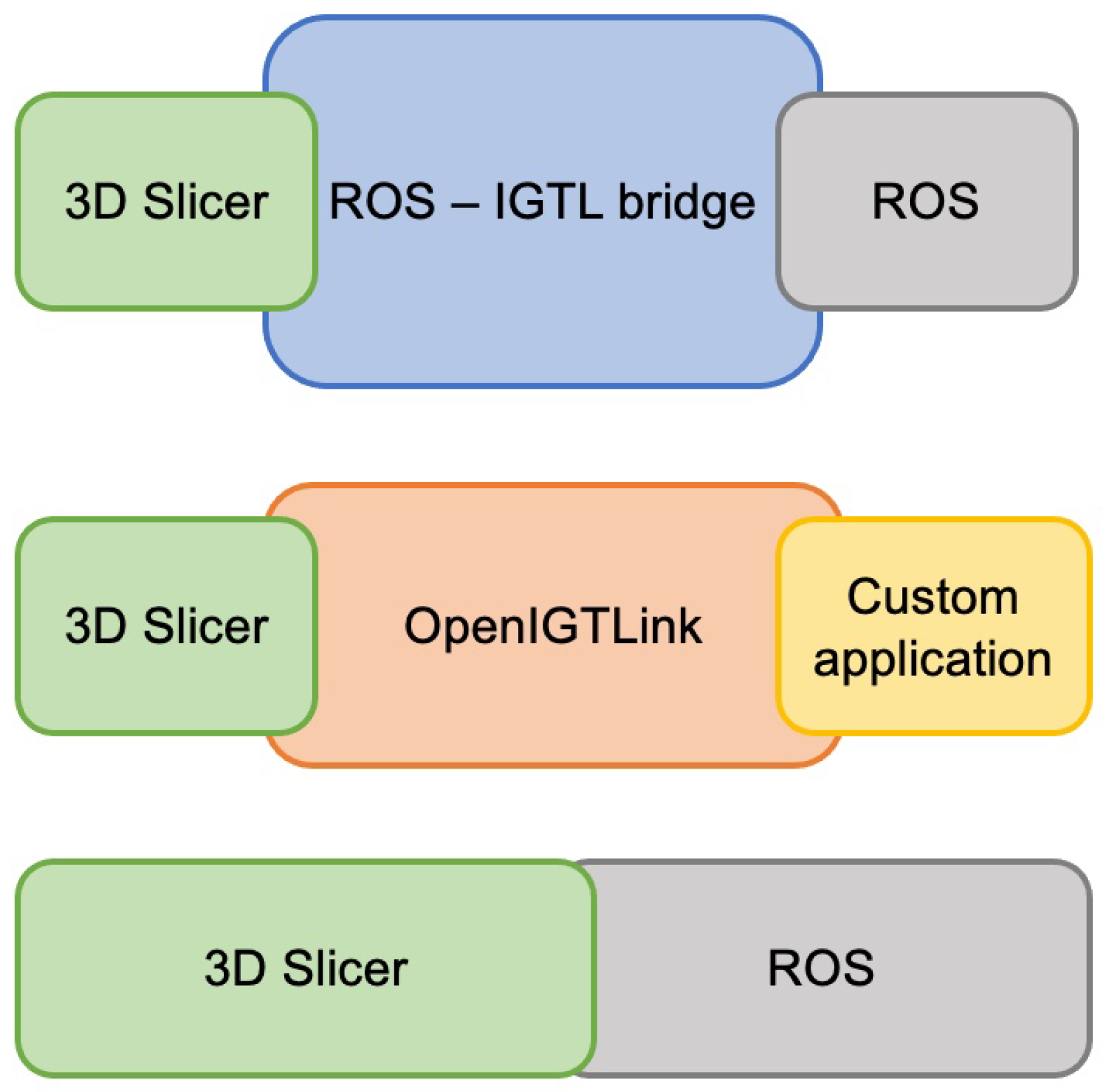

2.1. Comparison to Previous Implementations

2.2. Requirements

2.3. Dependencies

2.4. Robot Description

2.5. Robot State

2.6. Synchronization

3. Experiments

3.1. Generalizability

3.2. Latency Evaluation

3.3. Surgical Use Case

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Connolly, L.; Deguet, A.; Leonard, S.; Tokuda, J.; Ungi, T.; Krieger, A.; Kazanzides, P.; Mousavi, P.; Fichtinger, G.; Taylor, R.H. Bridging 3D Slicer and ROS2 for Image-Guided Robotic Interventions. Sensors 2022, 22, 5336. https://doi.org/10.3390/s22145336
