Matched Filter Interpretation of CNN Classifiers with Application to HAR

Abstract

1. Introduction

- Providing a clear interpretation of CNN classifiers as MFs and presenting an experimental proof of concept to support this interpretation.

- Presenting a superlight, highly accurate CNN classifier model for time-series applications that suits both cloud and edge inference approaches.

- Applying the developed model to the well-known HAR problem and achieving outstanding results compared with state-of-the-art models.

- Testing and benchmarking the MF CNN classifier on an edge device and developing an Android HAR application.

2. Literature Review

2.1. CNN Interpretation Background

2.2. Human Activity Recognition Related Work

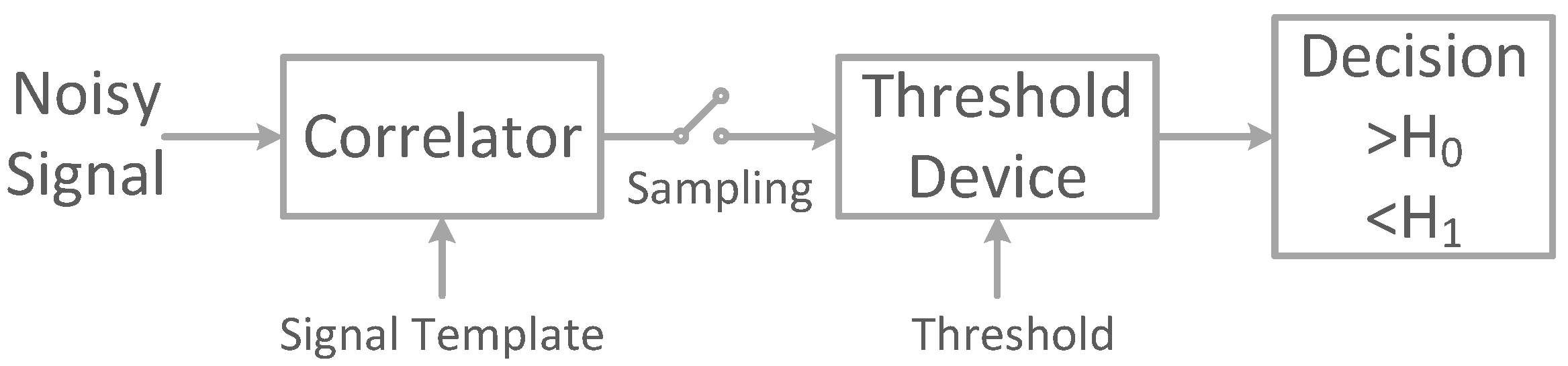

3. Matched Filter Interpretation of Convolutional Neural Network
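The matched-filter view of a convolutional classifier can be illustrated with a minimal NumPy sketch. It rests on assumptions of our own: a single-channel input, a Conv1D kernel equal to a known template, "valid" padding, no bias or activation, and a global maximum over the filter output taken as the detection statistic. The three templates, the 64-sample template length, the noise level, and the helper name `matched_filter_scores` are hypothetical and are not taken from the paper. The sketch shows that the peak cross-correlation with each template, which is the quantity a matched-filter detector thresholds, is largest for the template actually embedded in the noisy input.

```python
import numpy as np


def matched_filter_scores(x, templates):
    """Peak cross-correlation of input x with each template (hypothetical helper).

    For a single-channel input this equals the output of a Conv1D filter whose
    kernel is the template ('valid' padding, no bias, no activation) followed
    by a global max pooling over time.
    """
    scores = []
    for h in templates:
        # np.correlate slides h over x without flipping it, which is what
        # deep-learning "convolution" layers actually compute.
        y = np.correlate(x, h, mode="valid")
        scores.append(y.max())
    return np.array(scores)


L = 64                                              # assumed template length (= kernel size)
t = np.arange(L)
raw_templates = [
    np.sin(2 * np.pi * 3 * t / L),                  # hypothetical class-0 template
    np.sign(np.sin(2 * np.pi * 5 * t / L)),         # hypothetical class-1 template
    np.hanning(L) * np.cos(2 * np.pi * 8 * t / L),  # hypothetical class-2 template
]
# Unit-energy templates keep the scores comparable across classes.
templates = [h / np.linalg.norm(h) for h in raw_templates]

rng = np.random.default_rng(0)
true_class, offset = 1, 40
x = 0.2 * rng.standard_normal(128)                  # 128-sample input window of noise
x[offset:offset + L] += 3.0 * templates[true_class] # embed the class-1 template

scores = matched_filter_scores(x, templates)
predicted = int(np.argmax(scores))                  # argmax stands in for the dense/softmax decision
print(scores.round(2), predicted)
```

Because `np.correlate` does not flip the kernel, it matches what Conv1D layers in common deep-learning frameworks compute; a textbook matched filter is usually written as convolution with the time-reversed template, which is the same operation.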

3.1. Experimental Proof of Concept

3.1.1. Synthetic Dataset

3.1.2. Experimental Setup and Tools

3.1.3. Results and Visualizations

3.1.4. Analysis and Discussion

4. Human Activity Recognition Using the Matched Filter CNN Classifier

4.1. Datasets

4.2. Multivariate MF CNN Classifier

4.3. Methods and Tools

5. Results and Discussion

Comparison with Related Work

6. Conclusions and Future Work

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BN | Batch Normalization |
| CAM | Class Activation Map |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| DL | Deep Learning |
| FC | Fully Connected |
| GAP | Global Average Pooling |
| GMP | Global Max Pooling |
| GRU | Gated Recurrent Unit |
| HAR | Human Activity Recognition |
| LSTM | Long Short-Term Memory |
| MF | Matched Filter |
| ML | Machine Learning |
| RNN | Recurrent Neural Network |

References

1. Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

2. LeCun, Y.; Boser, B.; Denker, J.; Henderson, D.; Howard, R.; Hubbard, W.; Jackel, L. Handwritten digit recognition with a back-propagation network. Adv. Neural Inf. Process. Syst. 1989, 2, 396–404.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

4. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

5. Zeiler, M.D.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

6. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

8. Kiranyaz, S.; Avci, O.; Abdeljaber, O.; Ince, T.; Gabbouj, M.; Inman, D.J. 1D convolutional neural networks and applications: A survey. Mech. Syst. Signal Process. 2021, 151, 107398.

9. Fan, F.L.; Xiong, J.; Li, M.; Wang, G. On interpretability of artificial neural networks: A survey. IEEE Trans. Radiat. Plasma Med. Sci. 2021, 5, 741–760.

10. Ziemer, R.E.; Tranter, W.H. Principles of Communications; John Wiley & Sons: Hoboken, NJ, USA, 2014.

11. Molnar, C. Interpretable Machine Learning. 2020. Available online: https://bookdown.org/home/about/ (accessed on 1 October 2022).

12. Montavon, G.; Samek, W.; Müller, K.R. Methods for interpreting and understanding deep neural networks. Digit. Signal Process. 2018, 73, 1–15.

13. Srinivasamurthy, R.S. Understanding 1D Convolutional Neural Networks Using Multiclass Time-Varying Signals. Ph.D. Thesis, Clemson University, Clemson, SC, USA, 2018.

14. Pan, Q.; Zhang, L.; Jia, M.; Pan, J.; Gong, Q.; Lu, Y.; Zhang, Z.; Ge, H.; Fang, L. An interpretable 1D convolutional neural network for detecting patient-ventilator asynchrony in mechanical ventilation. Comput. Methods Programs Biomed. 2021, 204, 106057.

15. Wang, Z.; Yan, W.; Oates, T. Time series classification from scratch with deep neural networks: A strong baseline. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 1578–1585.

16. Stankovic, L.; Mandic, D. Convolutional Neural Networks Demystified: A Matched Filtering Perspective Based Tutorial. arXiv 2021, arXiv:2108.11663.

17. Farag, M.M. A Self-Contained STFT CNN for ECG Classification and Arrhythmia Detection at the Edge. IEEE Access 2022, 10, 94469–94486.

18. Farag, M.M. A Matched Filter-Based Convolutional Neural Network (CNN) for Inter-Patient ECG Classification and Arrhythmia Detection at the Edge. 2022. Available online: https://ssrn.com/abstract=4070665 (accessed on 1 October 2022).

19. WHO. Disability and Health. 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/disability-and-health (accessed on 13 October 2022).

20. Kwapisz, J.R.; Weiss, G.M.; Moore, S.A. Activity recognition using cell phone accelerometers. ACM SIGKDD Explor. Newsl. 2011, 12, 74–82.

21. Anguita, D.; Ghio, A.; Oneto, L.; Parra Perez, X.; Reyes Ortiz, J.L. A public domain dataset for human activity recognition using smartphones. In Proceedings of the 21st International European Symposium on Artificial Neural Networks, Computational Intelligence and Machine Learning, Bruges, Belgium, 24–26 April 2013; pp. 437–442.

22. Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. 2018, 62, 915–922.

23. Xia, K.; Huang, J.; Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 2020, 8, 56855–56866.

24. Nafea, O.; Abdul, W.; Muhammad, G.; Alsulaiman, M. Sensor-based human activity recognition with spatio-temporal deep learning. Sensors 2021, 21, 2141.

25. Yin, X.; Liu, Z.; Liu, D.; Ren, X. A Novel CNN-based Bi-LSTM parallel model with attention mechanism for human activity recognition with noisy data. Sci. Rep. 2022, 12, 7878.

26. Tan, T.H.; Wu, J.Y.; Liu, S.H.; Gochoo, M. Human activity recognition using an ensemble learning algorithm with smartphone sensor data. Electronics 2022, 11, 322.

27. Pushpalatha, S.; Math, S. Hybrid deep learning framework for human activity recognition. Int. J. Nonlinear Anal. Appl. 2022, 13, 1225–1237.

28. Sikder, N.; Chowdhury, M.S.; Arif, A.S.M.; Nahid, A.A. Human activity recognition using multichannel convolutional neural network. In Proceedings of the 2019 5th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 26 September 2019; pp. 560–565.

29. Luwe, Y.J.; Lee, C.P.; Lim, K.M. Wearable Sensor-Based Human Activity Recognition with Hybrid Deep Learning Model. Informatics 2022, 9, 56.

30. Ronald, M.; Poulose, A.; Han, D.S. iSPLInception: An inception-ResNet deep learning architecture for human activity recognition. IEEE Access 2021, 9, 68985–69001.

31. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

32. Ek, S.; Portet, F.; Lalanda, P. Lightweight Transformers for Human Activity Recognition on Mobile Devices. arXiv 2022, arXiv:2209.11750.

33. Tang, C.I.; Perez-Pozuelo, I.; Spathis, D.; Brage, S.; Wareham, N.; Mascolo, C. SelfHAR: Improving human activity recognition through self-training with unlabeled data. arXiv 2021, arXiv:2102.06073.

34. Rahimi Taghanaki, S.; Rainbow, M.J.; Etemad, A. Self-supervised Human Activity Recognition by Learning to Predict Cross-Dimensional Motion. In Proceedings of the 2021 International Symposium on Wearable Computers, Virtual, 21–26 September 2021; pp. 23–27.

35. Taghanaki, S.R.; Rainbow, M.; Etemad, A. Self-Supervised Human Activity Recognition with Localized Time-Frequency Contrastive Representation Learning. arXiv 2022, arXiv:2209.00990.

36. Géron, A. Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow: Concepts, Tools, and Techniques to Build Intelligent Systems; O’Reilly Media: Sebastopol, CA, USA, 2019.

37. Malekzadeh, M.; Clegg, R.G.; Cavallaro, A.; Haddadi, H. Mobile Sensor Data Anonymization. In Proceedings of the International Conference on Internet of Things Design and Implementation, Montreal, QC, Canada, 15–18 April 2019; ACM: New York, NY, USA, 2019; pp. 49–58.

38. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

39. TensorFlow. Quantization Aware Training with TensorFlow Model Optimization Toolkit—Performance with Accuracy. 2020. Available online: https://blog.tensorflow.org/2020/04/quantization-aware-training-with-tensorflow-model-optimization-toolkit.html (accessed on 20 June 2022).

40. TensorFlow. TensorFlow Lite: ML for Mobile and Edge Devices. 2022. Available online: https://www.tensorflow.org/lite/ (accessed on 20 June 2022).

| Work | Used Methods | Limitations |
|---|---|---|
| Ignatov [22], 2018 | CNN + Statistical Features | Statistical feature extraction requires additional computational cost |
| Xia et al. [23], 2020 | CNN + LSTM | The model depth and layer diversity increase the model complexity |
| Nafea et al. [24], 2021 | CNN + BiLSTM | |
| Yin et al. [25], 2022 | CNN + BiLSTM + Attention | LSTM and GRU RNNs suffer from increased computation time, limiting their applicability to edge inference |
| Tan et al. [26], 2022 | Conv1D + GRU + Ensemble learning | |
| Pushpalatha and Math [27], 2022 | CNN + GRU + FC | Models tested on a single dataset do not establish the model's generalization capabilities |
| Sikder et al. [28], 2019 | CNN | Using such a DNN increases the computational cost of the model |
| Luwe et al. [29], 2022 | CNN + BiLSTM | Using a DNN model with hybrid layers increases the model complexity and computational cost of the proposed classifier |
| Ronald et al. [30], 2021 | CNN + BiLSTM + Inception + ResNet | Such a deep model is not the best fit for edge inference, which requires smaller models with reduced computational cost |
| Ek et al. [32], 2022 | CNN + Transformer | The number of parameters exceeds 1 million |
| Tang et al. [33], 2021 | Teacher-Student CNN | |
| Rahimi Taghanaki et al. [34], 2021 | CNN + FC + Transfer Learning | Results achieved by self-supervised and semi-supervised models fall behind their supervised learning counterparts by a considerable margin |
| Taghanaki et al. [35], 2022 | CNN + STFT + Transfer Learning | |

| Category | Symbol | Definition | Range of Values |
|---|---|---|---|
| Dataset | N | Number of template signals | 2–5 |
| | | Number of examples per ith template | 100, 1000, 10,000 |
| | P | Noise-to-signal ratio (%) | 0, 50, 100 |
| | | Number of classes | 2–5 |
| | | Dataset size | |
| | Balanced | Whether the dataset is balanced | Yes, No |
| Model | | Number of Conv1D layer filters | 64, 128 |
| | | Conv1D kernel size | 64, 128 |
| | | Model input size | 128 |
| | Learnable | Whether the layer weights are learned | True, False |
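The dataset parameters in the table above can be turned into a small data generator. The following is a hypothetical sketch, not the paper's generator: the template shapes (moving-average-smoothed random noise), the 64-sample template length, the random placement of each template within the 128-sample window, the reading of the noise-to-signal percentage P as noise RMS relative to template RMS, and the `examples_per_template // (i + 1)` class-size rule used for the unbalanced case are all assumptions, as is the function name `make_synthetic_dataset`.

```python
import numpy as np


def make_synthetic_dataset(n_templates=3, examples_per_template=100,
                           noise_pct=50, input_size=128, template_len=64,
                           balanced=True, seed=0):
    """Hypothetical synthetic template-detection dataset.

    Assumptions (not from the paper): templates are smoothed random waveforms
    with unit RMS, each example holds one template at a random offset inside
    an input_size window, and noise RMS = noise_pct % of the template RMS.
    In the unbalanced case, class i gets examples_per_template // (i + 1) examples.
    """
    rng = np.random.default_rng(seed)
    kernel = np.ones(8) / 8                          # 8-tap moving-average smoother
    templates = []
    for _ in range(n_templates):
        # 'valid' convolution of template_len + 7 samples with 8 taps -> template_len samples
        h = np.convolve(rng.standard_normal(template_len + 7), kernel, mode="valid")
        templates.append(h / np.sqrt(np.mean(h ** 2)))   # normalize to unit RMS

    X, y = [], []
    for label, h in enumerate(templates):            # one class per template
        if balanced:
            count = examples_per_template
        else:
            count = max(1, examples_per_template // (label + 1))
        for _ in range(count):
            x = np.zeros(input_size)
            offset = rng.integers(0, input_size - template_len + 1)
            x[offset:offset + template_len] = h
            x += (noise_pct / 100) * rng.standard_normal(input_size)  # additive white noise
            X.append(x)
            y.append(label)
    X = np.stack(X)[..., np.newaxis]                 # (examples, input_size, 1) for a Conv1D input
    return X, np.array(y), np.stack(templates)


X, y, T = make_synthetic_dataset(n_templates=4, examples_per_template=100, noise_pct=100)
print(X.shape, np.bincount(y))
```

With 4 templates and 100 examples per template, the balanced call yields 400 windows of shape (128, 1); setting `balanced=False` under the assumed rule gives class sizes 100, 50, 33, and 25. Sweeping `n_templates`, `examples_per_template`, and `noise_pct` over the ranges in the table reproduces the shape of the experiment grid, not its exact data.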

| Dataset | Training Acc % | Training F1 % | Training Time (s) | Validation Acc % | Validation F1 % | Testing Acc % | Testing F1 % | Number of Params | Android Average Inference Time (μs, Float32) | Android Memory Footprint (MB, Float32) | Android File Size (KB, Float32) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| UCI-HAR | 98.98 | 97.32 | 523.63 | 97.82 | 97.69 | 97.32 | 97.35 | 37,566 | 672.60 | 2.98 | 149.75 |
| MotionSense | 99.88 | 99.88 | 1199.88 | 98.73 | 98.51 | 98.03 | 97.88 | 25,044 | 517.28 | 2.92 | 101.65 |
| WISDM-AR | 99.95 | 99.53 | 151.62 | 97.60 | 96.86 | 97.67 | 96.34 | 22,383 | 294.63 | 2.96 | 89.46 |

| Dataset | ID | Work | Used Methods | Acc % | F1 % | Number of Parameters | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| UCI-HAR | 1 | Proposed MF CNN | CNN | 97.32 | 97.35 | 37,566 | 0.67 |
| | 2 | Nafea et al. [24], 2021 | CNN + BiLSTM | 97.04 | 97.00 | – | – |
| | 3 | Yin et al. [25], 2022 | CNN + BiLSTM + Attention | 96.71 | – | – | 14.71 |
| | 4 | Ignatov [22], 2018 | CNN + Statistical Features | 97.62 | 97.63 | – | – |
| | 5 | Tan et al. [26], 2022 | Conv1D + GRU + Ensemble learning | 96.70 | 96.80 | – | 1.68 |
| | 6 | Pushpalatha and Math [27], 2022 | CNN + GRU + FC | 96.79 | 97.82 | – | – |
| | 7 | Sikder et al. [28], 2019 | CNN | 95.25 | 95.24 | – | – |
| | 8 | Xia et al. [23], 2020 | CNN + LSTM | 95.80 | 95.78 | 49,606 | – |
| | 9 | Tang et al. [33], 2021 | Teacher-Student CNN | – | 91.35 | – | – |
| | 10 | Ronald et al. [30], 2021 | CNN + BiLSTM + Inception + ResNet | 95.09 | 95.00 | 1,327,754 | – |
| | 11 | Ek et al. [32], 2022 | CNN + Transformer | – | 97.67 | 1,275,702 | 6.40 |
| | 12 | Rahimi Taghanaki et al. [34], 2021 | CNN + FC + Transfer Learning | 90.80 | 91.00 | – | – |
| | 13 | Luwe et al. [29], 2022 | CNN + BiLSTM | 95.48 | 95.45 | – | – |
| MotionSense | 1 | Proposed MF CNN | CNN | 98.03 | 97.88 | 25,044 | 0.52 |
| | 2 | Tang et al. [33], 2021 | Teacher-Student CNN | – | 96.31 | – | – |
| | 3 | Ek et al. [32], 2022 | CNN + Transformer | – | 98.32 | 1,275,702 | 6.40 |
| | 4 | Rahimi Taghanaki et al. [34], 2021 | CNN + FC + Transfer Learning | 93.30 | 91.8 | – | – |
| | 5 | Taghanaki et al. [35], 2022 | CNN + STFT + Transfer Learning | – | 94.30 | – | – |
| | 6 | Luwe et al. [29], 2022 | CNN + BiLSTM | 94.17 | 91.89 | – | – |
| WISDM-AR | 1 | Proposed MF CNN | CNN | 97.67 | 96.34 | 22,383 | 0.29 |
| | 2 | Nafea et al. [24], 2021 | CNN + BiLSTM | 98.53 | 97.16 | – | – |
| | 3 | Yin et al. [25], 2022 | CNN + BiLSTM + Attention | 95.86 | – | – | 12.11 |
| | 4 | Ignatov [22], 2018 | CNN + Statistical Features | 90.42 | – | – | – |
| | 5 | Xia et al. [23], 2020 | CNN + LSTM | 95.75 | 95.85 | 49,606 | – |
| | 6 | Tang et al. [33], 2021 | Teacher-Student CNN | – | 90.81 | – | – |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farag, M.M. Matched Filter Interpretation of CNN Classifiers with Application to HAR. Sensors 2022, 22, 8060. https://doi.org/10.3390/s22208060
