Individual Pig Identification Using Back Surface Point Clouds in 3D Vision

Abstract

1. Introduction

2. Materials and Methods

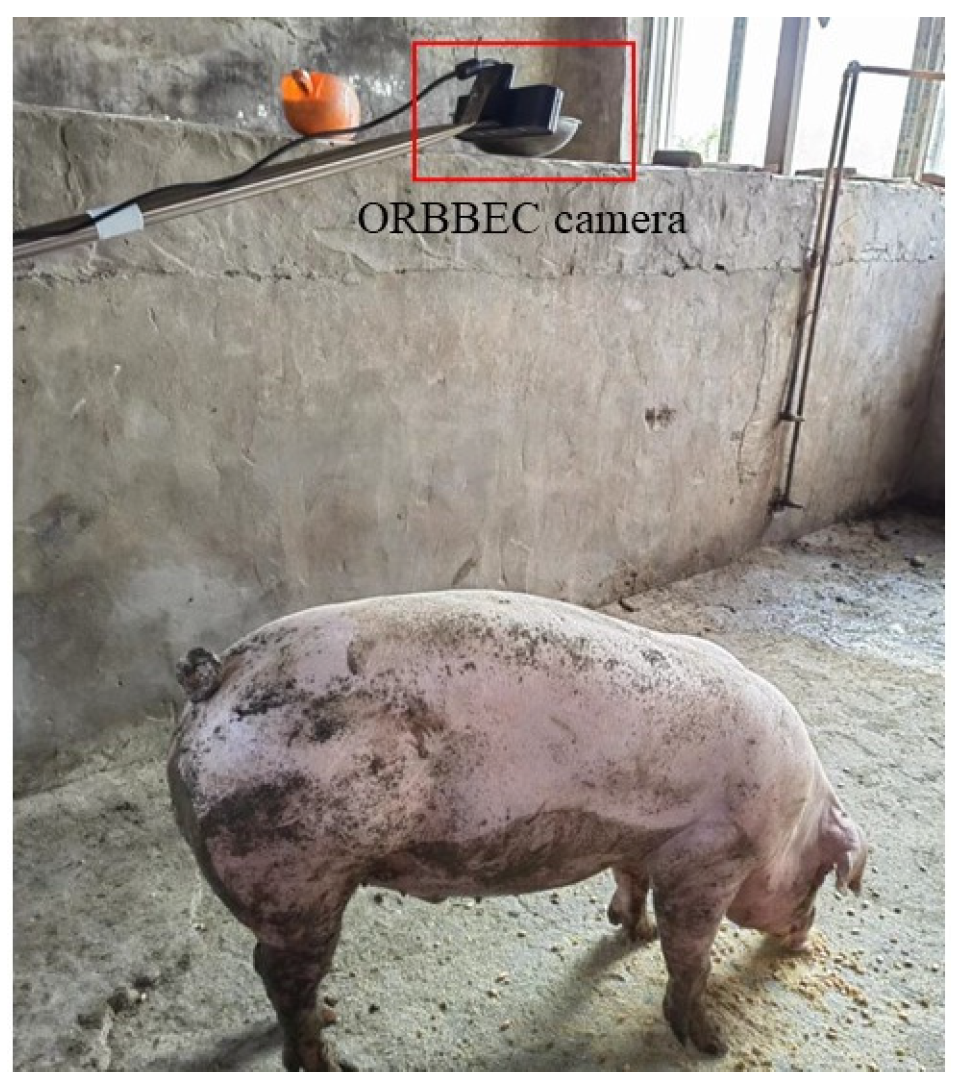

2.1. Pigs and Housing

2.2. Data Collection

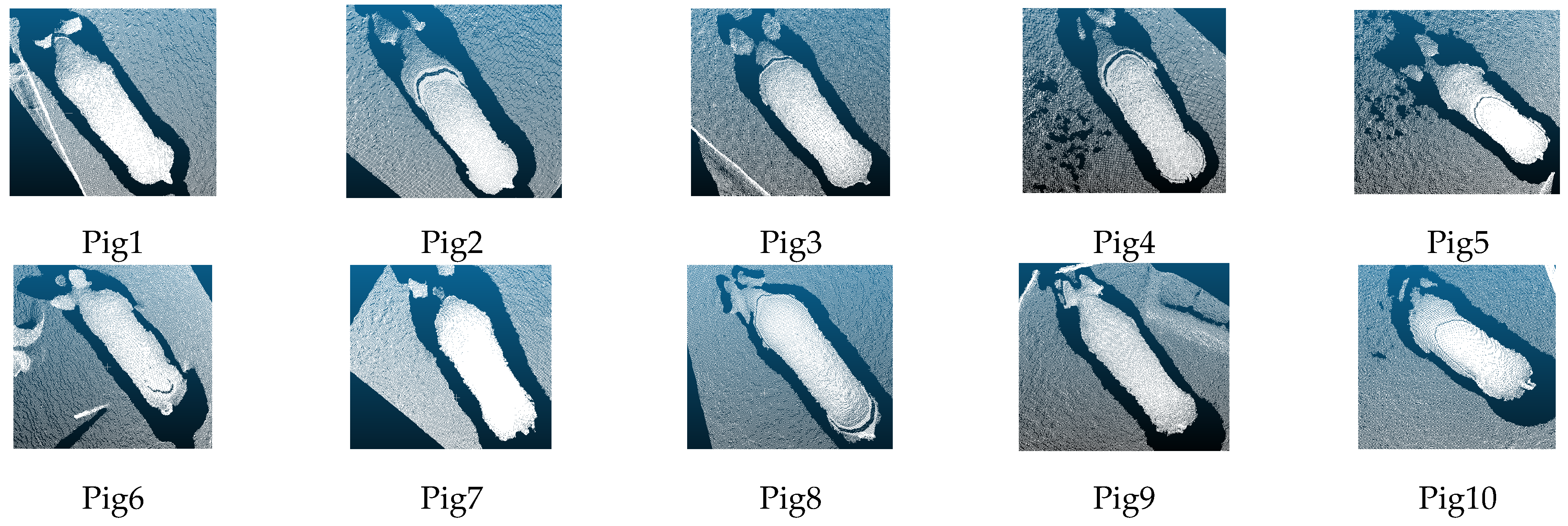

2.3. Dataset Description and Processing

- The image contained the complete pig body;

- The pig was standing with its body straight.

2.4. Segmentation and Identification Methods

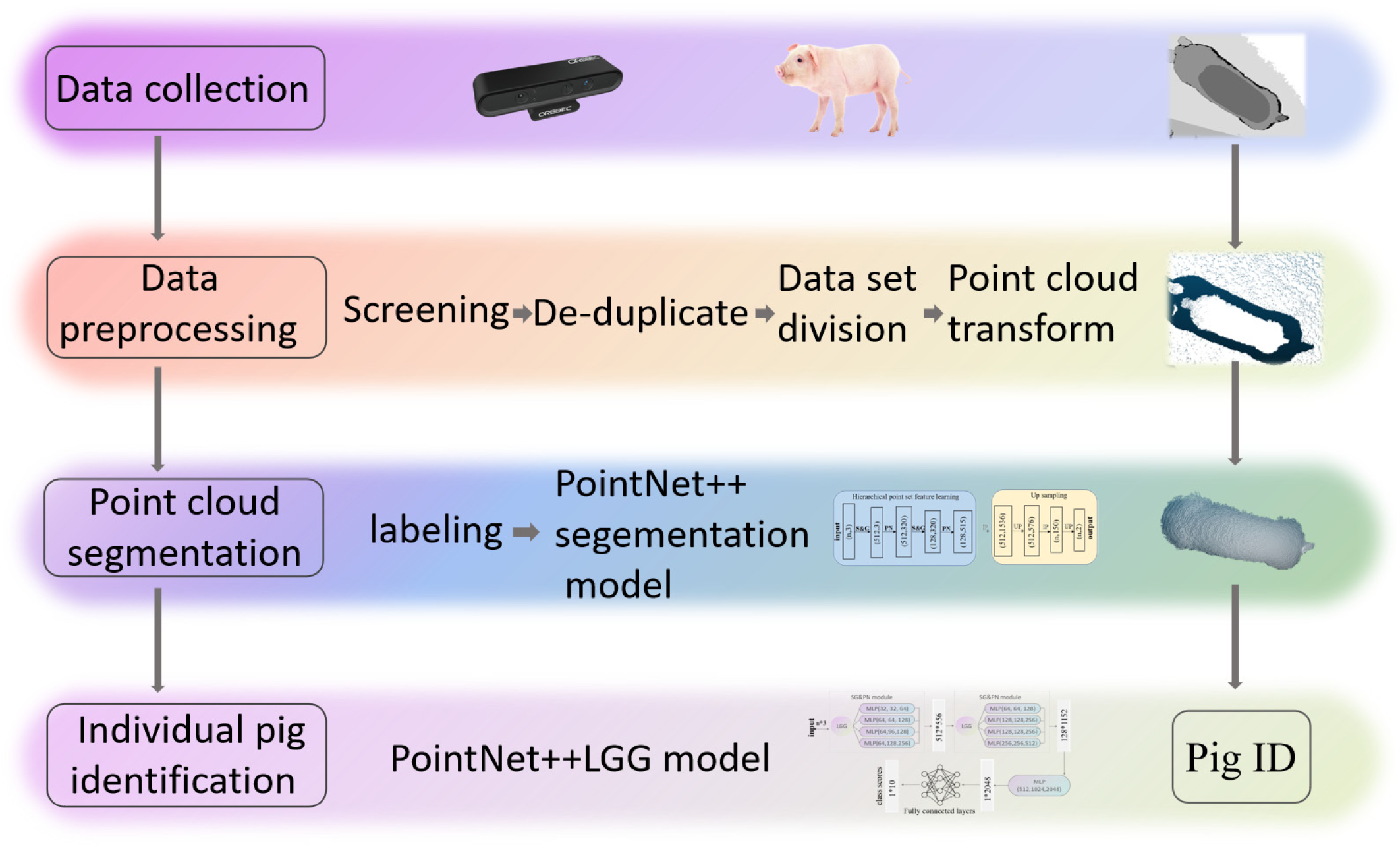

2.4.1. Overall Flow

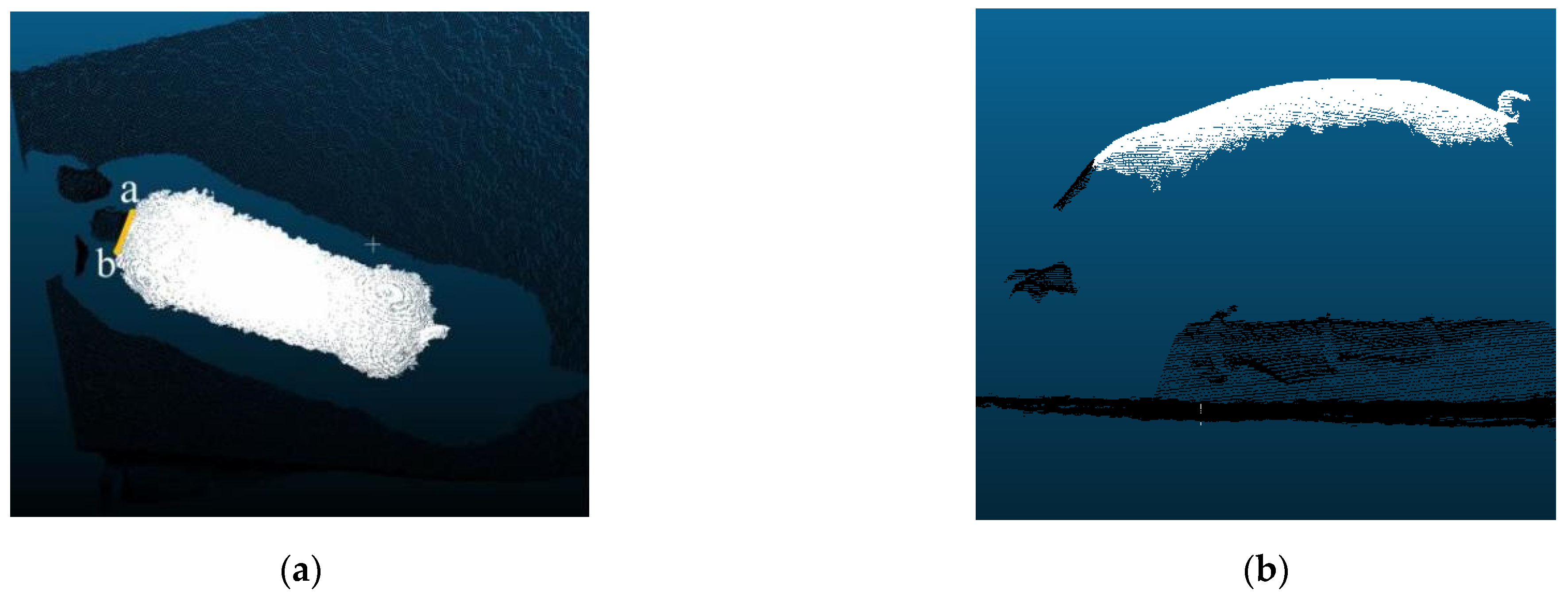

2.4.2. Pig Body Segmentation Methods

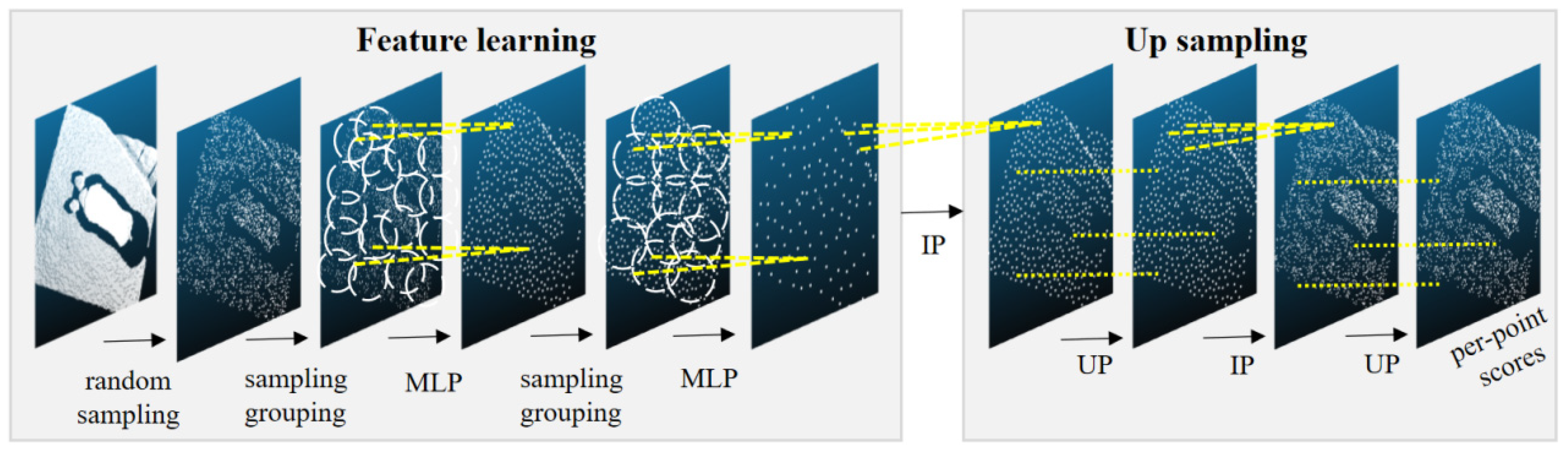

- Point cloud labeling

- Building a PointNet++ segmentation model

2.4.3. Individual Pig Recognition Methods

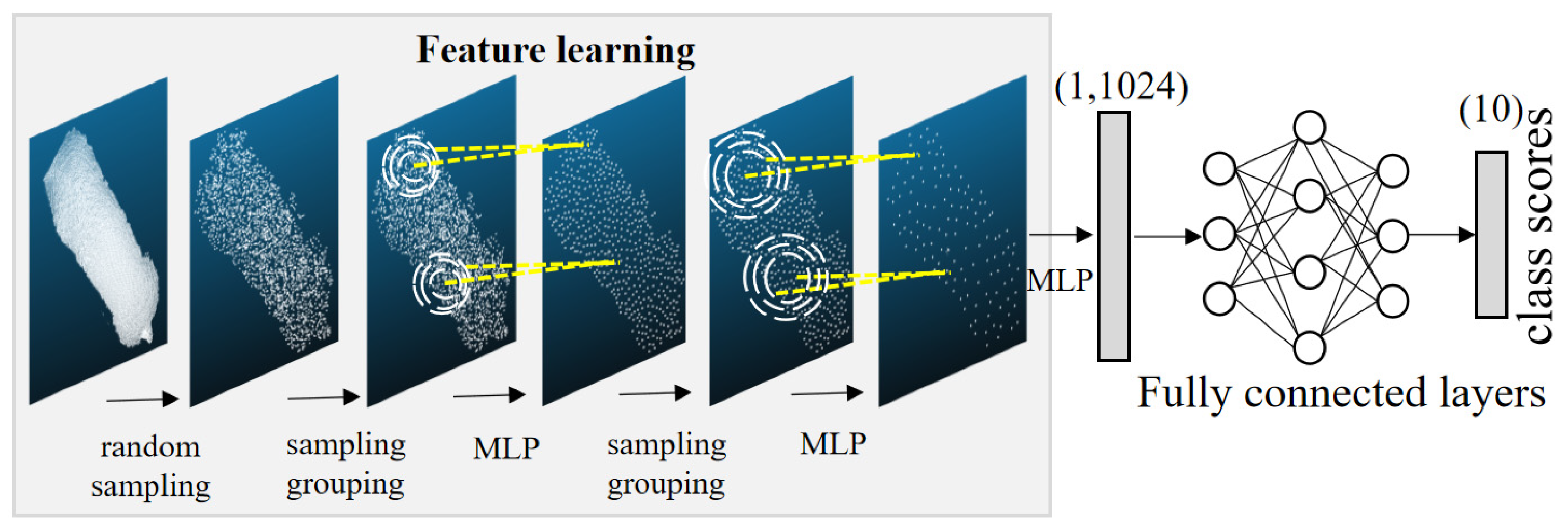

- Individual pig identification model based on PointNet++ classification algorithm

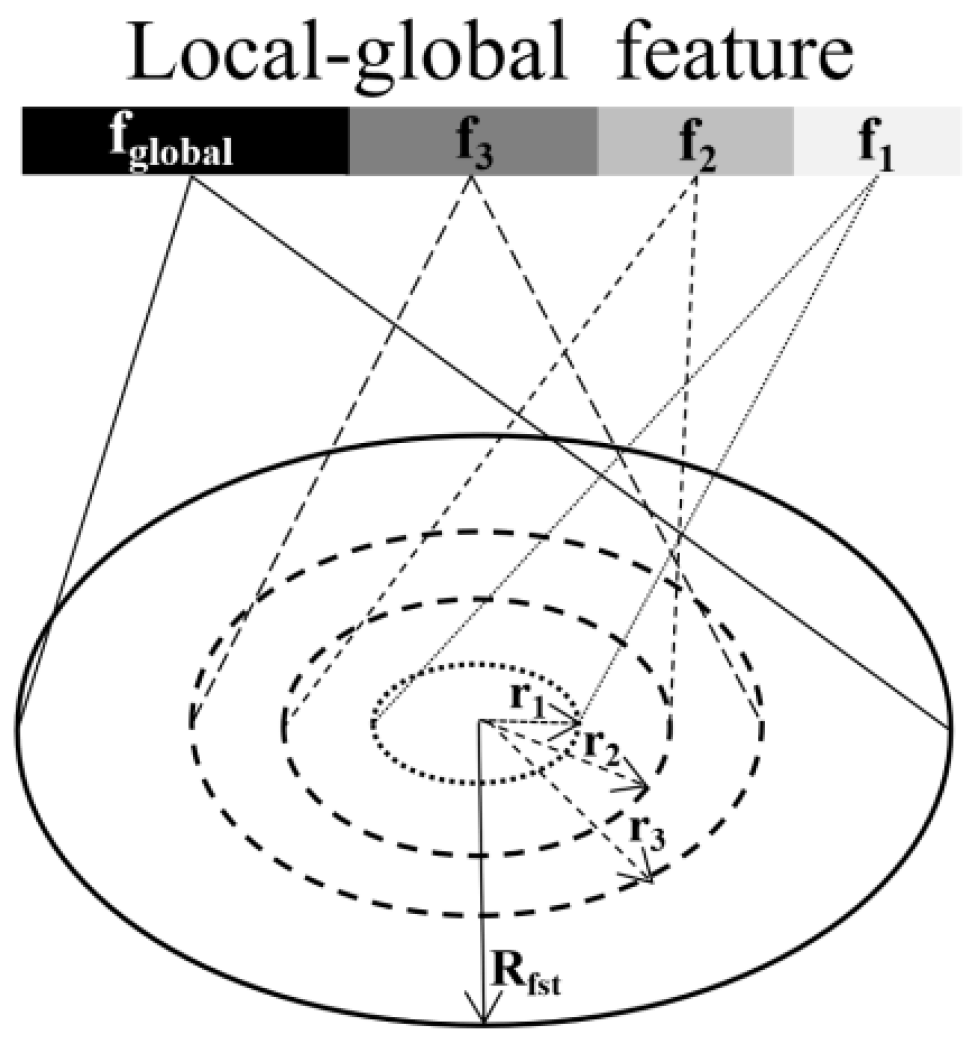

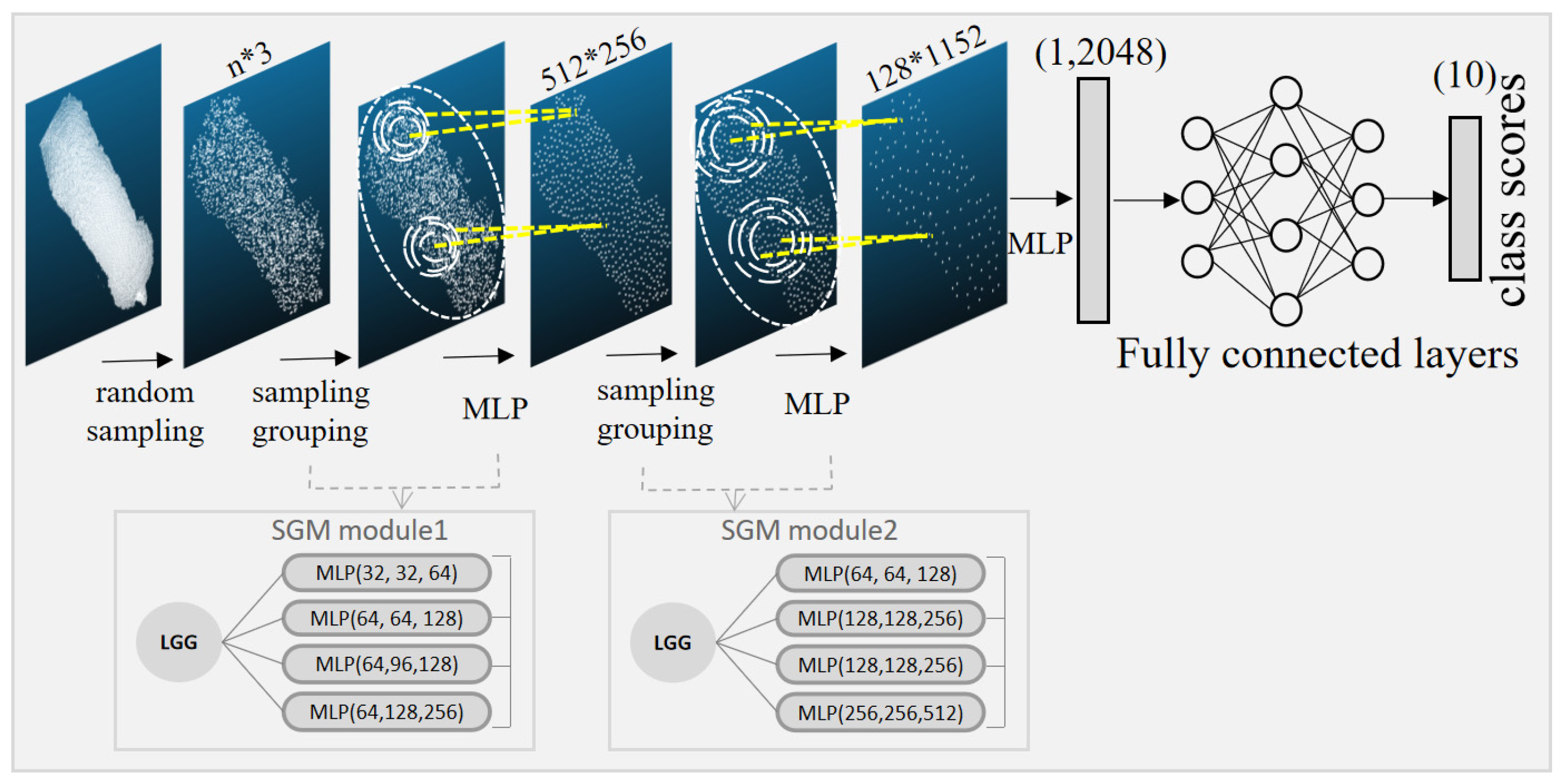

- Improved pig individual recognition model based on the PointNet++ LGG classification algorithm

2.5. Experiment and Parameter Setting

2.6. Evaluation Metrics

3. Results and Discussion

3.1. Pig Body Segmentation

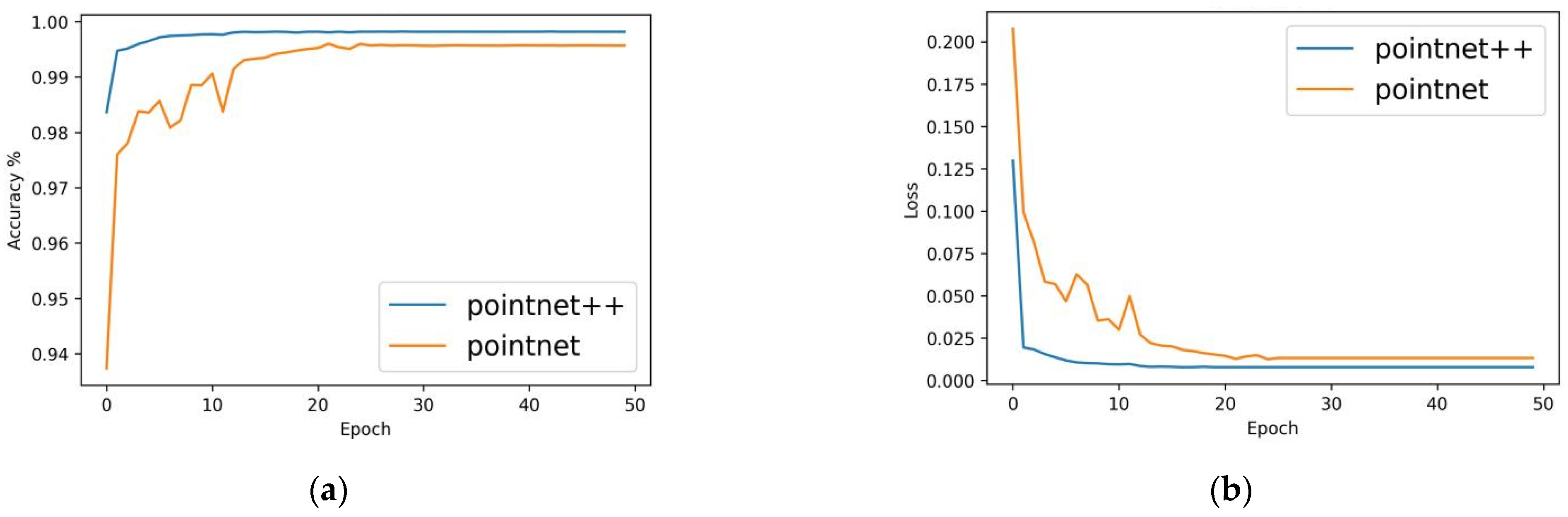

3.1.1. Model Training Results

3.1.2. Model Test Results

3.2. Individual Pig Identification

3.2.1. Model Training Results

3.2.2. Model Test Results

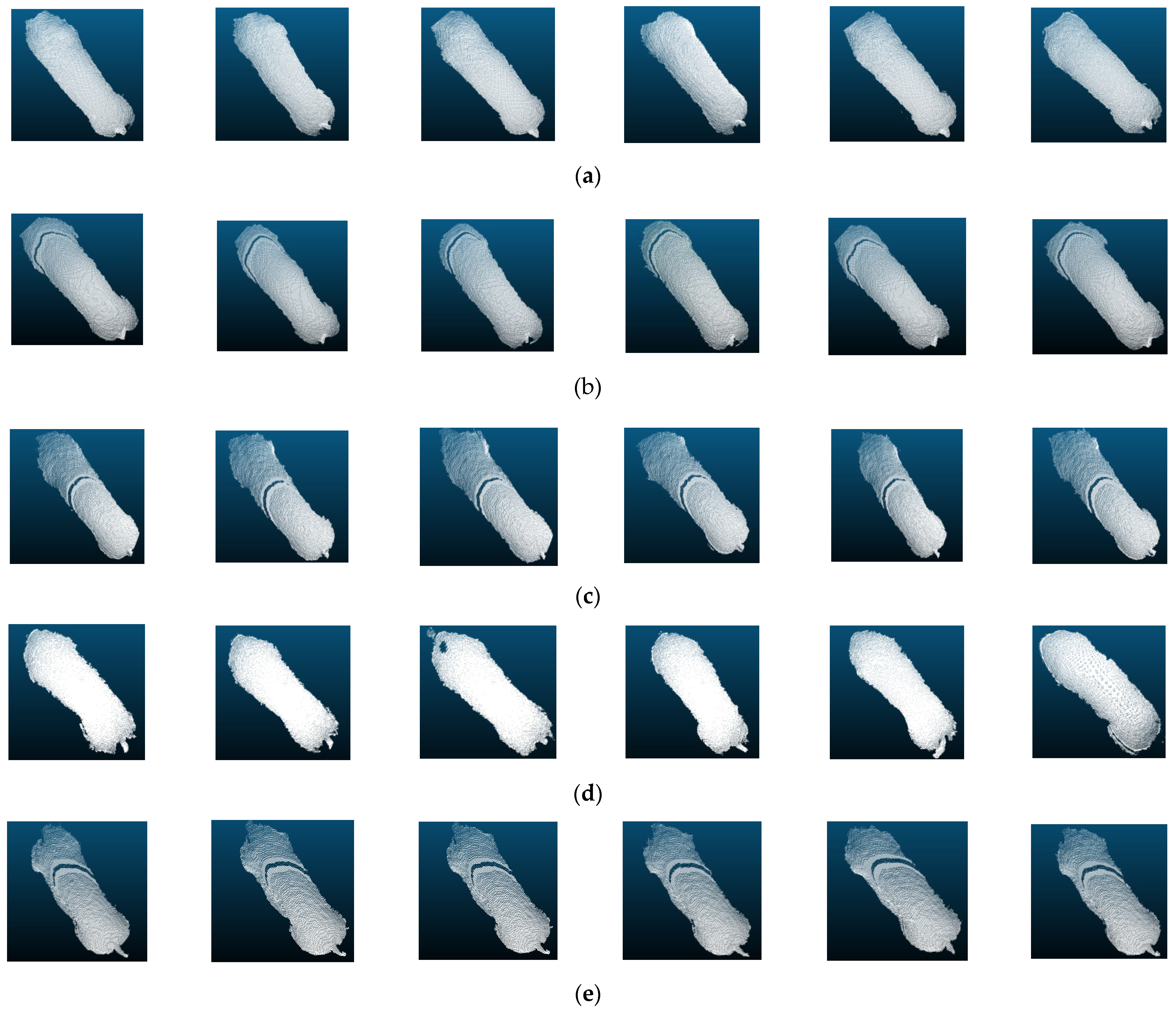

3.2.3. Visual Analysis of Samples for Classification

3.3. Discussion

4. Conclusions

- A fully automated, non-contact, two-stage method for identifying individual pigs from 3D point clouds of the pig back was proposed. A PointNet++ segmentation model was built to separate the pig back point cloud from the background, and it reached a segmentation accuracy of 99.80%.

- A PointNet++LGG algorithm with an improved grouping strategy was proposed. Its accuracy, precision, recall, and F1 score for individual recognition reached 95.26%, 95.51%, 95.53%, and 95.52%, respectively. Compared with the PointNet, PointNet++SSG, and PointNet++MSG algorithms, it achieved higher recognition accuracy, distinguished similar individuals better, performed more uniformly across individuals, and generalized better.

- The proposed method of individual pig recognition from 3D point cloud images of the back is a new exploration in the field of animal individual recognition. It avoids the stress reaction that radio frequency identification (RFID) causes in animals and the difficulty of obtaining pig face samples for pig face recognition, and it offers a new approach for the individual recognition of other animals.
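The accuracy, precision, recall, and F1 score quoted above can all be derived from a confusion matrix. The following is a minimal sketch, assuming macro averaging over classes (one class per pig); the averaging scheme, the function name `macro_metrics`, and the toy three-class matrix are illustrative assumptions and are not taken from the paper.

```python
def macro_metrics(confusion):
    """Return (accuracy, precision, recall, f1) from a square confusion matrix.

    confusion[i][j] is the number of samples of true class i predicted as
    class j. Precision and recall are macro-averaged (an assumption here).
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))

    precisions, recalls = [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precisions.append(tp / predicted_k if predicted_k else 0.0)
        recalls.append(tp / actual_k if actual_k else 0.0)

    accuracy = correct / total
    precision = sum(precisions) / n
    recall = sum(recalls) / n
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1


# Hypothetical 3-class example (not data from the study).
toy = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 1, 9],
]
accuracy, precision, recall, f1 = macro_metrics(toy)
print(f"accuracy={accuracy:.4f} precision={precision:.4f} "
      f"recall={recall:.4f} f1={f1:.4f}")
```

With ten pigs the same function would take a 10 x 10 matrix; per-class precision and recall can be read from the intermediate lists if the per-pig breakdown is needed.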

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Body Size | Pig1 | Pig2 | Pig3 | Pig4 | Pig5 | Pig6 | Pig7 | Pig8 | Pig9 | Pig10 |
|---|---|---|---|---|---|---|---|---|---|---|
| BW (kg) | 89.0 | 87.5 | 75.0 | 76.5 | 77.5 | 88.0 | 89.5 | 63.5 | 69.0 | 60.5 |
| CW (cm) | 30.6 | 32.1 | 30.3 | 27.7 | 29.6 | 31.5 | 30.56 | 25.9 | 27.6 | 25.9 |
| HW (cm) | 29.0 | 30.1 | 25.5 | 28.1 | 26.0 | 28.5 | 31.28 | 23.8 | 23.6 | 28.0 |
| CH (cm) | 67.5 | 66.4 | 56.6 | 57.5 | 57.8 | 63.6 | 60.9 | 53.2 | 54.4 | 52.1 |
| HH (cm) | 68.1 | 68.3 | 56.9 | 57.9 | 58.9 | 65.6 | 62.7 | 55.3 | 55.7 | 56.2 |
| BL (cm) | 97.7 | 95.4 | 82.8 | 86.8 | 86.6 | 96.7 | 90.6 | 85.9 | 89.2 | 77.4 |

| Dataset | Pig1 | Pig2 | Pig3 | Pig4 | Pig5 | Pig6 | Pig7 | Pig8 | Pig9 | Pig10 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Training set | 631 | 779 | 384 | 735 | 727 | 621 | 544 | 520 | 668 | 741 | 6350 |
| Validation set | 209 | 256 | 128 | 245 | 242 | 207 | 182 | 173 | 223 | 247 | 2112 |
| Test set | 209 | 256 | 128 | 245 | 242 | 207 | 182 | 173 | 223 | 247 | 2112 |
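
The per-pig counts in the dataset table above can be checked against the stated totals, and they correspond to a split of roughly 60/20/20. The short sketch below recomputes the totals and shares; the variable names are illustrative, and the numbers are copied directly from the table.

```python
# Per-pig sample counts copied from the dataset table (Pig1..Pig10).
splits = {
    "training": [631, 779, 384, 735, 727, 621, 544, 520, 668, 741],
    "validation": [209, 256, 128, 245, 242, 207, 182, 173, 223, 247],
    "test": [209, 256, 128, 245, 242, 207, 182, 173, 223, 247],
}

# Row totals, grand total, and each split's share of all samples in percent.
totals = {name: sum(counts) for name, counts in splits.items()}
grand_total = sum(totals.values())
shares = {name: round(100 * n / grand_total, 1) for name, n in totals.items()}

for name in splits:
    print(f"{name:<10} {totals[name]:>5} samples  {shares[name]:>5.1f}%")
```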

| Models | Evaluation Metrics | Total | Pig1 | Pig2 | Pig3 | Pig4 | Pig5 | Pig6 | Pig7 | Pig8 | Pig9 | Pig10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PointNet | OA (%) | 99.53 | | | | | | | | | | |
| PointNet | Precision (%) | 97.92 | 98.56 | 99.59 | 94.78 | 96.97 | 99.04 | 98.70 | 99.73 | 92.47 | 99.59 | 96.73 |
| PointNet | Recall (%) | 99.73 | 99.93 | 99.95 | 99.93 | 99.97 | 99.99 | 99.94 | 99.09 | 99.48 | 99.79 | 99.73 |
| PointNet | F1 score (%) | 98.82 | 99.24 | 99.77 | 97.29 | 98.45 | 99.51 | 99.31 | 99.41 | 95.85 | 99.69 | 98.21 |
| PointNet | mIoU (%) | 98.55 | 99.03 | 99.70 | 96.88 | 98.11 | 99.41 | 99.17 | 99.12 | 95.25 | 99.64 | 97.89 |
| PointNet++ | OA (%) | 99.80 | | | | | | | | | | |
| PointNet++ | Precision (%) | 99.17 | 99.12 | 99.68 | 98.00 | 98.03 | 99.49 | 99.26 | 99.58 | 99.20 | 99.67 | 98.98 |
| PointNet++ | Recall (%) | 99.98 | 99.90 | 99.96 | 99.76 | 99.94 | 99.97 | 99.95 | 99.72 | 99.28 | 99.72 | 99.71 |
| PointNet++ | F1 score (%) | 99.49 | 99.51 | 99.82 | 98.87 | 98.97 | 99.73 | 99.60 | 99.65 | 99.24 | 99.70 | 99.34 |
| PointNet++ | mIoU (%) | 99.36 | 99.37 | 99.76 | 98.68 | 98.75 | 99.67 | 99.52 | 99.48 | 99.11 | 99.65 | 99.22 |

| Models | Accuracy (%) | Precision (%) | Recall (%) | F1 score (%) |
|---|---|---|---|---|
| PointNet | 78.07 | 83.22 | 76.16 | 79.53 |
| PointNet++SSG | 78.50 | 85.31 | 78.61 | 81.82 |
| PointNet++MSG | 93.08 | 93.77 | 93.34 | 93.55 |
| PointNet++LGG (Improved model) | 95.26 | 95.51 | 95.53 | 95.52 |
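
As a consistency check on the model comparison table above, the reported F1 scores agree to two decimals with the harmonic mean of the reported precision and recall, F1 = 2PR / (P + R). The sketch below assumes that definition; the helper name `f1_from` is illustrative, and how the authors averaged the metrics across pigs is not stated in this excerpt.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) copied from the comparison table.
reported = {
    "PointNet": (83.22, 76.16, 79.53),
    "PointNet++SSG": (85.31, 78.61, 81.82),
    "PointNet++MSG": (93.77, 93.34, 93.55),
    "PointNet++LGG": (95.51, 95.53, 95.52),
}

# Recompute F1 per model and collect any model whose reported value differs.
recomputed = {
    name: round(f1_from(p, r), 2) for name, (p, r, _) in reported.items()
}
mismatches = [
    name for name, (_, _, f1) in reported.items() if recomputed[name] != f1
]

for name, value in recomputed.items():
    print(f"{name:<15} recomputed F1 = {value:.2f}")
```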

| Models (accuracy, %) | Pig1 | Pig2 | Pig3 | Pig4 | Pig5 | Pig6 | Pig7 | Pig8 | Pig9 | Pig10 |
|---|---|---|---|---|---|---|---|---|---|---|
| PointNet | 44.71 | 93.75 | 96.09 | 90.41 | 97.08 | 87.50 | 83.52 | 83.33 | 88.42 | 85.00 |
| PointNet++(SSG) | 68.26 | 95.31 | 88.28 | 77.50 | 87.50 | 93.00 | 100.00 | 50.00 | 71.75 | 52.50 |
| PointNet++(MSG) | 96.63 | 100.00 | 98.33 | 97.00 | 92.12 | 97.50 | 99.43 | 92.85 | 92.59 | 78.33 |
| PointNet++(LGG) (Improved model) | 90.86 | 100.00 | 94.53 | 89.58 | 99.16 | 99.00 | 99.43 | 97.61 | 85.64 | 90.00 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, H.; Li, Q.; Xie, Q. Individual Pig Identification Using Back Surface Point Clouds in 3D Vision. Sensors 2023, 23, 5156. https://doi.org/10.3390/s23115156
