Utilising Emotion Monitoring for Developing Music Interventions for People with Dementia: A State-of-the-Art Review

Abstract

1. Introduction

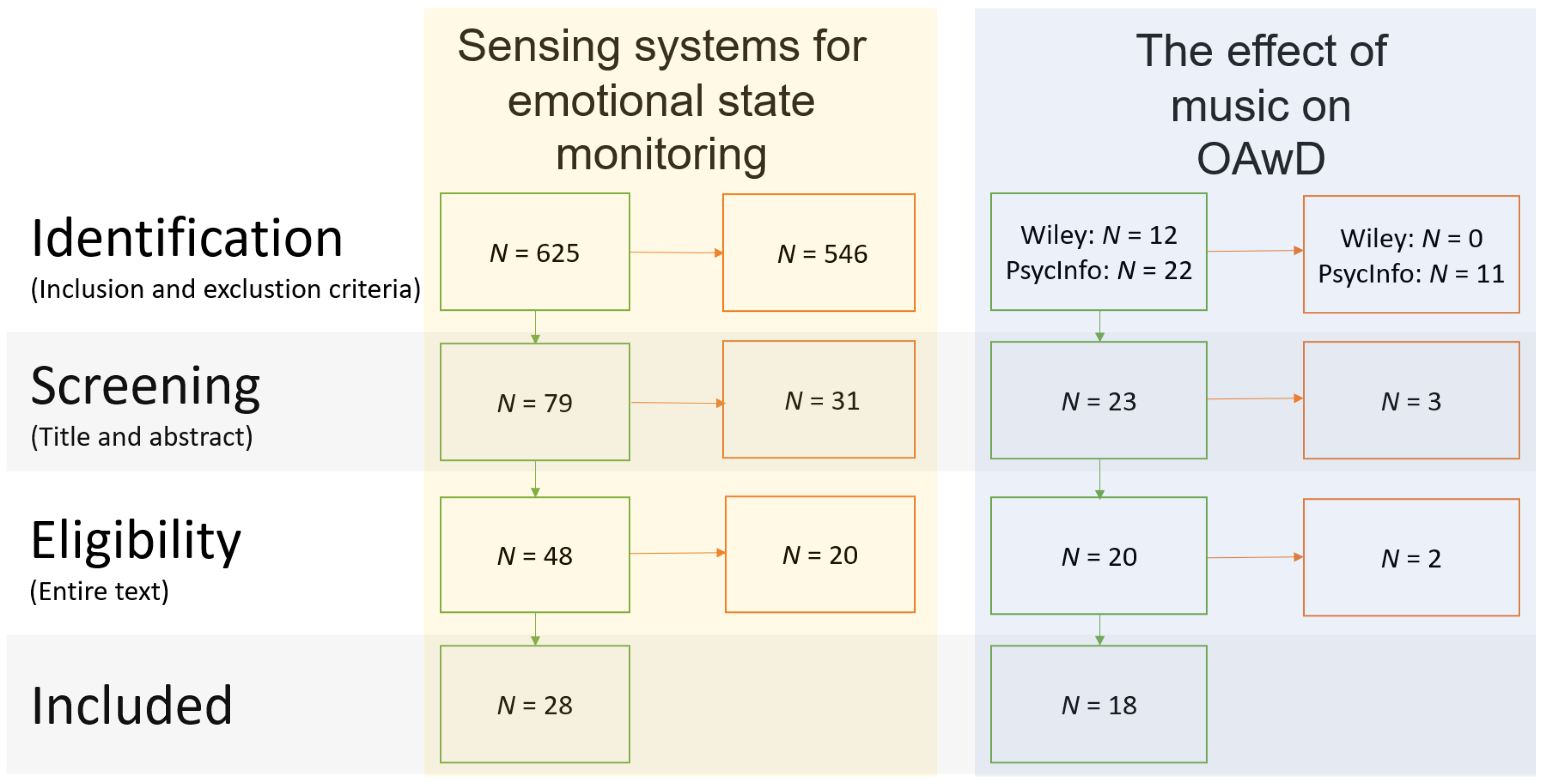

2. Methodology

Data Extraction

3. Results

3.1. Sensing Technologies for Emotion Recognition

3.1.1. Self-Reporting for Emotion Recognition

3.1.2. On-Body Sensors for Emotion Recognition

3.1.3. Device-Free Sensing for Emotion Recognition

3.2. Data Processing Methods for Emotion Recognition

3.3. Music and Dementia

3.3.1. Music and the Brain

3.3.2. Music Therapy

3.3.3. Emotional Models

3.4. Ethical Considerations

4. Discussion

4.1. Emotion Detection

4.2. Music and Dementia

4.3. Integrating Sensing Systems and Music

5. Conclusions and Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AD | Alzheimer’s disease |
| ADL | Activities of Daily Living |
| AI | artificial intelligence |
| AmI | ambient intelligence |
| ANOVA | analysis of variance |
| ANS | autonomic nervous system |
| BAN | body area network |
| BP | blood pressure |
| BPSD | behavioural and psychological symptoms of dementia |
| BSN | body sensor network |
| CBF | content-based filtering |
| CDR | Clinical Dementia Rating |
| CF | collaborative filtering |
| CL | contrastive learning |
| CNN | convolutional neural network |
| CPU | central processing unit |
| DNN | deep neural network |
| ECG | electrocardiography |
| EDA | electrodermal activity |
| EDR | electrodermal response |
| EEG | electroencephalography |
| EMG | electromyography |
| GDP | gross domestic product |
| GSR | galvanic skin response |
| HR | heart rate |
| HRV | heart rate variability |
| IoT | Internet of Things |
| IR | information retrieval |
| kNN | k-nearest neighbours |
| LDA | latent Dirichlet allocation |
| LSTM | long short-term memory |
| MADM | multiple-attribute decision making |
| MAP | mean average precision |
| MEC | mobile edge computing |
| MLP | multi-layer perceptron |
| MMSE | Mini-Mental State Examination |
| MPR | mean percentile rank |
| MRR | mean reciprocal rank |
| MRS | music recommender system |
| NDCG | normalised discounted cumulative gain |
| NFC | near field communication |
| NLP | natural language processing |
| PLSA | probabilistic latent semantic analysis |
| PPG | photoplethysmography |
| PwD | people with dementia |
| RMSE | root mean square error |
| RNN | recurrent neural network |
| RS | recommender system |
| RF | random forest |
| SCL | skin conductance level |
| SCR | skin conductance response |
| SMB | smart music box |
| SNS | sympathetic nervous system |
| SVD | singular value decomposition |
| SVM | support vector machine |
| WHO | World Health Organisation |
| XIM | eXperience Induction Machine |

Appendix A. Methods of Systematic Literature Review in Detail

Appendix A.1. Identification of Articles

| Topic | Search Words | Location |
|---|---|---|
| The effect of music on PwD | Music | Title |
| | Dementia | Title |
| | Emotion | Abstract |
| Sensing systems for emotional state monitoring | Sensing or sensors | |
| | Pervasive or unobtrusive or device-free or ubiquitous | N/A |

| PsycInfo | Scopus |
|---|---|
| **Search limitations for inclusion** | |
| Papers that use related words or equivalent subjects | In final publication stage |
| Peer-reviewed | Peer-reviewed |
| Publication type: journal | |
| Status: fully published | |
| **Search limitations for exclusion** | |
| The paper is not published in English | The paper does not contain one of the keywords in the list |

Appendix A.2. Selection of Relevant Studies

Appendix B. Overview of the Literature on Music Interventions for PwD

| Abbreviation | Meaning |
|---|---|
| f | female |
| m | male |
| mean age | mean age of participants (years) |
| G | group (intervention) |
| I | individual (intervention) |
| A | active (music intervention) |
| P | passive (music intervention) |
| N/D | no data |
| N/A | not applicable |

| Study and Year | N, Age, Gender (as Available per Work) | Study Setup | Dementia Severity | Active or Passive | Type of Music | Time Span | Results |
|---|---|---|---|---|---|---|---|
| [6] 2015 | 2 studies (14 and 48 participants); no age or gender given | G | Moderate to severe | A or P | Familiar (French) songs | Twice a week for 4 weeks, either 1 or 2 h | Non-pharmacological interventions can improve emotional and behavioural functioning and reduce caregiver distress, but the added benefit of music is questioned. |
| [12] 2019 | 1 participant (f: 1), age 77 | I | Severe | A and P | Familiar music | Retrospective study; musical activities from daily to a few times per week, over years | The study suggests that music could be a vital tool in coping with symptoms of severe dementia. |
| [13] 2009 | 10 studies, 16–60 participants | G and I | Mild to severe | A and P | Hymns, familiar songs, big band, classical, individualised, improvisation | 4–30 sessions, or weekly sessions for 2 years | The review reveals an increase in various positive behaviours and a reduction in negative behaviours, but also notes incorrect use of the term “music therapy” and a lack of methodological rigour. |
| [14] 2012 | 30–100 participants | G and I | Mild to severe | A and P | Diverse | Diverse | Short-term studies incorporating music activities have shown positive effects, but the lack of a proper methodological foundation and of long-term studies makes these conclusions uncertain and less generalisable. |
| [67] 2005 | 6 participants | I | Severe | A | Well-known songs | 20 daily sessions of 20–30 min | Results show that individual music therapy is suitable for reducing secondary dementia symptoms and meets the psychosocial needs of patients. |
| [68] 2013 | 19 studies, 10–55 participants | G and I | Mild to severe | A and P | Varying types of music per paper: classical, popular, selected music, live or recorded, etc. | 2 weeks–16, 53 weeks | Music interventions could improve quality of life (QoL) for PwD, but poor methodological rigour limits confident interpretation of the results. |
| [63] 2011 | 100 participants (f: 53, m: 47), mean age 81.8 | G | Mostly moderate | A and P | Rhythmical, slow-tempo, instrumental, personalised, glockenspiel | Two 30-min sessions per week for 6 weeks | The experimental group showed better performance (a reduction in negative behaviours) after the intervention. |
| [70] 2021 | 21 studies, 9–74 participants, mean age 68.9–87.9 | G and I | Mild or moderate | A | Diverse | Varying frequencies, durations, and lengths, from daily to weekly, for weeks to months | Music has a significant effect on cognitive function scores in older adults with mild cognitive impairment or dementia and has shown positive effects on mood and quality of life. |
| [66] 2011 | 43 participants (f: 31, m: 12), mean age 78.2 | G | Moderate | A | Tailored, live or recorded | Once a week, 6 h intervention for 8 weeks | Results suggest that weekly music therapy and activities can alleviate behavioural and depressive symptoms in PwD. |
| [75] 2001 | 9 participants (f: 7, m: 2), mean age 81 | I | Severe | A and P | Familiar, preferred music | 6–22 min, 3 sessions p.p.; the average observation period per patient was 13 days (range 3–49 days) | The use of music (by the caregiver or as background music) improves communication between caregiver and patient, amplifying positive emotions while decreasing aggressiveness. |
| [77] 2015 | 9 participants (f: 6, m: 3), mean age 81 | I | Moderate to severe | A and P | Diverse | Weekly sessions of 23–39 min, at least 20 individual sessions over a period of 6 months per participant | Individual music therapy has a significant positive effect on communication, well-being, and (positive) emotional expression in people with dementia. |
| [81] 2016 | 89 caregivers, 84/74 PwD | G and I | Mild to moderate | A and P | Familiar songs | Weekly sessions of 1.5 h for 10 weeks | Singing and listening can each target different depression symptoms. |
| [82] 2014 | 16 participants (f: 15, m: 1), mean age 87.5 | G | Mild to severe | A and P | Patients’ preferences | 12 weekly sessions | The intervention does not show a significant change in quality of life, but does show a significant increase in emotional well-being and a significant negative change in interpersonal relations. |
| [72] 2012 | 54 participants (f: 30, m: 15) | G and I | Mild to severe | A and P | Intimate live music, genre preference of audience | 45 min; 17 performances divided over a selection of nursing homes | Live music positively affected human contact, care relationships, and positive and negative emotions. |
| [59] 2009 | 23 participants (f: 15, m: 8), mean age 73 | I | Mild | P | Novel instrumental clips from the film genre | 1 session with 3 tasks | Patients with Alzheimer’s disease show well-preserved emotional recognition of music. |
| [61] 2015 | 26 participants (f: 6, m: 20), mean age 64 | I | Frontotemporal dementia | P | Based on four-note arpeggio chords and wave files of human nonverbal emotional vocalisations | 1 session with multiple trials per condition | The research suggests that music can delineate neural mechanisms of altered emotion processing in people with dementia. |
| [108] 2012 | N/D | G and I | N/D | A and P | N/D | N/A | The literature reflection shows that music has a positive effect on people with dementia, but also questions how flexibly methods can accommodate changes in participants with dementia. |
| [106] 2012 | N/D | G | N/D | N/D | N/D | N/D | The article points to multiple studies that support the power of music, but also reveals challenges in research with dementia patients, such as recruitment, narrow time windows, burden on participants and carers, and tailored research. |

Appendix C. Overview of Literature on Emotion Sensing

| Abbreviation | Meaning |
|---|---|
| f | female |
| m | male |
| mean age | mean age of participants (years) |
| fr | frequency |
| d | duration |
| AI | Artificial Intelligence |
| AIC | Akaike Information Criterion |
| ANN | Artificial Neural Network |
| ANOVA | Analysis of Variance |
| ARM | Association Rule Mining |
| CMIM | Conditional Mutual Information Maximisation |
| CNN | Convolutional Neural Network |
| CSI | Channel State Information |
| cvx-EDA | Convex (optimisation) Electrodermal Activity |
| DISR | Double Input Symmetrical Relevance |
| DNN | Deep Neural Network |
| DT | Decision Tree |
| ECG | Electrocardiogram |
| EDA | Electrodermal Activity |
| EDR | Electrodermal Response |
| EEG | Electroencephalogram |
| ELM | Extreme Learning Machine |
| EMG | Electromyography |
| FMCW | Frequency-Modulated Continuous Wave |
| FMF | Fuzzy Membership Functions |
| fMRI | Functional Magnetic Resonance Imaging |
| fNIRS | Functional Near-Infrared Spectroscopy |
| GDA | Gaussian Discriminant Analysis |
| GP | Genetic Programming |
| GPS | Global Positioning System |
| GBC | Gradient Boosting Classifier |
| GLM | Generalised Linear Model |
| GSR | Galvanic Skin Response |
| HMD | Head-Mounted Display |
| HR(V) | Heart Rate (Variability) |
| IMU | Inertial Measurement Unit |
| JMI | Joint Mutual Information |
| KBCS | Kernel-based Class Separability |
| kNN | k-Nearest Neighbours |
| LDA | Linear Discriminant Analysis |
| LDC | Linear Discriminant Classifier |
| LMM | Linear Mixed Models |
| LR | Logistic or Linear Regression |
| LSTM | Long Short-Term Memory |
| MANOVA | Multivariate Analysis of Variance |
| ML | Machine Learning |
| MLP | Multi-Layer Perceptron |
| NB | Naive Bayes |
| NN | Neural Network |
| PCA | Principal Component Analysis |
| PNN | Probabilistic Neural Network |
| PPG | Photoplethysmogram |
| PSD | Position-Sensitive Device |
| PTT | Pulse Transit Time |
| RBF | Radial Basis Functions |
| RF | Random Forest |
| RFE | Recursive Feature Elimination |
| RNN | Recurrent Neural Network |
| RR | Respiration Rate |
| RSS | Received Signal Strength |
| RVM | Relevance Vector Machine |
| QDA | Quadratic Discriminant Analysis |
| SD | Standard Deviation |
| SDK | Software Development Kit |
| SFFS | Sequential Forward Floating Selection |
| (L-)SVM | (Linear) Support Vector Machines |
| WiFi | Wireless Fidelity |

| Study and Year | Aim | Features | Measuring | Sensors Used | Study Environment | n | Algorithm(s) | Results |
|---|---|---|---|---|---|---|---|---|
| [18] 2010 | Propose a framework (PEACH) and describe its functions in drug addiction treatment scenarios for roaming patients. | Sensor proxy, pulse, blood pressure, breathing, body temperature | Response to drugs/drug overdose avoidance | Laptop-like wearable and biomedical sensors | Test-bed setting, simulations | 1 patient, 20 responders | Data aggregation and decision: user profile; local rescuer selection: multiple-attribute decision making algorithm | A (drug overdose) case study showed promising results in monitoring psychophysiological conditions, aggregating these data, and prompting assistance. PEACH is viable in terms of responsiveness, battery consumption, and memory use. |
| [21] 2020 | Explore whether perception clustering can reduce the computational requirements of personalised approaches to automated mood recognition. | Heart rate, pulse rate, pulse-wave transit time, skin temperature, and movements | Excited, happy, calm, tired, bored, sad, stressed, angry | ECG, PPG, and accelerometer | Work | n = 9 | k-means/hierarchical clustering, RFE with LR, classifiers (BE-DT, DT, kNN, LR, L-SVM, SVM-RBF), regression (GP) | Perception clustering is a compromise that reduces the computational cost of personalised models while performing as well as or better than generalised models. |
| [24] 2021 | Investigate the effect of emotional autobiographical memory recall on a user’s physiological state in VR using EDA and pupil tracking. | Pupil physiological signals and skin conductance features | Emotional autobiographical memory recall (positive, negative, and neutral) in VR | EDA (Shimmer3 GSR+ sensor) + eye tracker (Vive Eye Tracking SDK, Vive Pro Eye HMD) | Lab | n = 6 (f: 2, m: 4, mean age 26.8) | Statistics (Friedman tests, Nemenyi post hoc tests, Wilcoxon signed-rank tests, Shapiro–Wilk test) | EDA and pupil diameter can distinguish autobiographical memory (AM) recall from non-AM recall, but no effect was found for emotional AM recall (positive, negative, neutral). |
| [27] 2021 | Validate a self-developed algorithm that recognises emotions using a glasses-type wearable with EDA and PPG. | Facial images and biosignals (heart rate features and skin conductance) | Arousal and valence, or categorical: amusement, disgust, depressed, and calm | Glasses-type wearable with EDA, camera, and PPG | Shielded room | n = 24 (mean age 26.7) | PCA, LDA, and SVM with RBF kernel, or binary RBF SVM model | The glasses-type wearable can accurately estimate the wearer’s emotional state. |
| [28] 2021 | Present a VR headset with a multimodal approach to provide information about a person’s emotional and affective state, addressing low-latency detection of valence. | Facial muscle activation, pulse measurements, and head and upper-body motion | Affective state and context (i.e., HRV, arousal, valence, and expressions) | f-EMG, PPG, and IMU | VR | Not mentioned (demo) | AI and ML | Not available (yet) |
| [29] 2021 | Reduce computational complexity while increasing performance in estimating tourists’ emotions and satisfaction. | Eye, head, and body movements, eye gaze, facial and vocal expressions, weather information | Tourist emotion and satisfaction | Smartphone (camera), Pupil Labs eye tracker, SenStick multi-sensor board | Sightseeing in Ulm (Germany) and Nara and Kyoto (Japan) | n = 22 + 24 | PCA, SVM, RBF | The newly suggested model outperforms the authors’ previous method. Additionally, including weather conditions improves model accuracy. |
| [30] 2021 | Design a framework for stress detection and emotion recognition that unobtrusively helps ageing workers maintain productivity and workability while balancing work and private life. | Stress: statistical properties of biosignals (ECG and HRV signals) and normal-to-normal differences or 2D ECG spectrograms. Emotions: facial images | Stress and emotion (positive, negative, and neutral) | ECG, EDA, mobile phone camera | Office, lab setting | Stress: 25 and 15 subjects. Emotion: 28,000 images | Stress: CNN and different classifiers (SVM, kNN, RBF, RF, ANN). Emotion: CNN | The presented framework outperforms previous work on recognising stressful conditions, mainly in the office environment, using EDA and ECG biosignals or facial expression patterns. |
| [31] 2018 | Validate and experiment with ORCATECH [40] and determine what is feasible. | Bed(room) activity, sleep (d), computer use (fr + d), motor speed and typing speed, medication-taking behaviour, walking velocity, visitors (fr + d), social activities on the internet (d), time out of the home (d), phone calls (fr), driving, body composition, pulse, temperature, and | Sleep, computer use, medication adherence, movement patterns and walking, social engagement | IR sensor, magnetic contact sensors, PC; optional: medication trackers, phone monitors, wireless scales, pulse, temperature, and air quality sensors, and undisclosed driving sensors | In real homes | n = 480 homes | Update on [40], surveying multiple algorithms | An update on [40]: ORCATECH provides continuous unobtrusive monitoring that seems to be more widely supported in elderly homes. |
| [32] 2018 | Investigate the use of smartphones/smartwatches to measure behaviour continuously and objectively and to provide personalised support in rehabilitation. | Location, activity, step count, battery status, screen on/off | Behaviour analysis for rehabilitation | Smartphones and smartwatches | Real-life setting; participants required to live with a caregiver | n = 6 PwD (m: 4, f: 2), ages 68–78 | Location: density-based clustering; activity duration: filtering and grouping | The authors claim this is the first evidence describing the role of personal devices in dementia rehabilitation. Data gathered in real life are adequate for revealing behaviour patterns, and sensor-based measurement shows potential advantages over self-reported behaviour. |
| [33] 2020 | Reflect on two studies: (1) the link between cardiovascular markers of inflammation and anger while commuting and (2) the effect on heart rate. | IBI, PI, driving features, HR(V), PTT | Anger monitoring in commuter driving | Smartphone, Shimmer3 accelerometer, two Shimmer3 sensors (ECG and PPG) | Actual driving | (1) 14 (f: 7), ages 25–57; (2) 8 (f: 6), ages 28–57 | Independent evaluation of driving and physiology features, then an ensemble classifier using LDA, decision tree, and kNN classifiers; study 2 uses statistical analysis | Accuracy of >70% in anger detection using physiology and driving features. Mean HR, high-frequency HRV power, and PTT were sensitive to the subjective experience of anger. |
| [34] 2014 | Propose a hybrid approach that creates a space with the advantages of a laboratory within a real-life setting (eXperience Induction Machine, XIM). | HR(V), skin conductance, acceleration, body orientation | Arousal and response to environment | Multi-modal tracking system, pressure sensors, microphones, sensing glove and shirt (EDR, ECG, respiration, gestures) | eXperience Induction Machine (real-life setting replicated with laboratory advantages) | Study 1: 7 (f: 4, m: 3), age 29.7 ± 3.9 (SD); Study 2: 11 (f: 7, m: 4), age 27 ± 4.51 (SD) | Linear discriminant classifier (LDC) | The authors claim it is possible to induce human-event-elicited emotional states in their space and measure them through psychophysiological signals under ecological conditions. |
| [35] 2015 | Propose and evaluate low-power textile biofeedback wearables for emotional management that transform negative emotional states into positive ones. | ECG and respiratory rate, for HRV and RR (patterns) | Negative emotions | Self-designed textile wearable with ECG and respiration sensor | University | n = 15, ages 20–28 | Statistical analysis (ANOVA) | The authors developed a low-power, low-complexity textile biofeedback system that measures HRV in real time, connects wirelessly to laptops/phones, and is statistically effective in cases of negative emotion. |
| [36] 2019 | Validation and experimental results (continuation of previous research) of a wearable ring that monitors the ANS through EDA, heart rate, skin temperature, and locomotion | Electrodermal activity/galvanic skin resistance, heart rate, motor activity, temperature | Stress levels, measured in an experiment with students | Ring with HR sensor and pulse oximeter, accelerometer, temperature sensor, skin conductance electrodes, wireless microprocessor | - | n = 43 (f: 20, m: 23), ages 19–26 | Decision tree, support vector machine, extreme gradient boosting | Stress levels monitored with 83.5% accuracy using SVM (the number of classes/stress levels is not reported). |
| [37] 2017 | Introduce a wearable device for detecting calm/distress conditions using EDA, with classification accuracies and validation | Electrodermal activity (EDA) (temporal, morphological, and frequency features) | Calm or distress | 10 mm silver electrodes on the fingers (palm sides of the index and middle fingers) | Not mentioned, but likely a lab given the prototype | n = 45 (f: 20, m: 25), age 23.54 ± 2.64 (50 recruited, 5 invalid) | Statistical analysis (ANOVA), decision trees | 89% accuracy in differentiating between calm and distress using combined features; accuracy with individual features was (much) lower. |
| [38] 2018 | Estimate and evaluate the emotional status/satisfaction of tourists using unconscious and natural actions. | Location, vocal and facial expressions, eye gaze, pupil features, head tilt, and body movement (footsteps) | Positive: excited, pleased, calm; neutral: neutral; negative: tired, bored, disappointed, distressed, alarmed | Smartphone for video and audio, eye-tracking headset, SenStick sensor board with accelerometer, gyroscope, GPS | Real-world experiments | n = 22 (f: 17, m: 5), ages 22–31, mean age 24.3 | RNN-LSTM with RMSProp optimiser | Unweighted average recall (UAR) of 0.484 for emotion and mean absolute error (MAE) of 1.110 for satisfaction, based on the three emotion groups (rather than all nine emotions). |
| [39] 2017 | Propose an emotion recognition system for affective state mining (arousal) using EMG, EDA, and ECG. | Arousal and valence (skin conductance level, frequency bands of parasympathetic and sympathetic signals, and impulses of the zygomaticus muscle) | Happy, relaxed, disgust, sad, neutral | Biomedical sensors (EDA, ECG, facial EMG) | DEAP [109] | DEAP (n = 32 total) | Data fusion followed by a convolutional neural network | 87.5% using the proposed CNN, at the time the best state-of-the-art result |
| [41] 2013 | Develop a novel wearable system that uses ANS biosignals for mood recognition in bipolar patients | Inter-beat interval series, heart rate, and respiratory dynamics; ECG, RSP, body activity | Remission euthymia (ES), mild depression (MD), severe depression (SD), mild mixed state (MS) | PSYCHE platform sensorised t-shirt, textile ECG electrodes, piezoresistive sensor, accelerometer | Followed for 90 days, real-life scenario | n = 3, ages 37–55, with bipolar disorder (I or II) | PCA, linear and quadratic discriminant classifiers, mixture of Gaussians, kNN, Kohonen self-organising map, MLP, probabilistic neural network | Good classification accuracy (confusion-matrix diagonals). The system is reported to distinguish euthymia from severe states better than from mild states, as mild states resemble euthymia more closely. |
| [42] 2009 | Explore the relationship between physiological and psychological variables measured at home, manually or automatically | Activity, weight, steps, bed occupancy, HR, RR, blood pressure, room illumination, temperature; (self-assessed) stress, mood, exercise, sleep | Stress levels | Activity monitor, HR monitor, mobile phone, step counter, blood pressure monitor, personal weight scale, movement sensor in bed, temperature and light sensors | Rehabilitation centre | n = 17 (f: 14, m: 3), age 54.5 ± 5.4 (SD) | Statistical analysis (Spearman correlations) | Unobtrusive sensors measure significant variables, but their correlation with self-assessed stress level is modest overall when data from all participants are combined. Strong correlations exist, but these may conflict at the individual level. |
| [43] 2021 | Evaluate VEmotion, which aims to predict driver emotions unobtrusively in the wild using contextual data. | Facial expression, speech, vehicle speed and acceleration, weather conditions, traffic flow, road specifications, time, age, initial emotion | Driver emotions (neutral, happy, surprise, angry, disgust) | Smartphone: GPS, camera, microphone | In-car | n = 12 (m: 8, f: 2) | Random forest ensemble learning based on 10-fold grid-search cross-validation (using SVM, kNN, DT, AdaBoost, and RF from scikit-learn with default parameters) | Context variables can be captured in real time at low cost using GPS, optionally accompanied by a camera monitoring the driver “in the wild”, specifically in-vehicle. |
| [44] 2021 | Measure audience feedback/engagement with entertainment content using ultrasound echoes of the face and hand gestures. | (Echoes of) facial expressions | User engagement: six basic emotions combined with hand gestures | SonicFace (speakers + microphones, ultrasound) | Home environment (in front of a screen) | n = 12 (f: 2, m: 10) | FMCW-based absolute tracking, beamforming, multi-view CNN ensemble classifier | SonicFace reaches an accuracy of almost 80% for six expressions with four hand gestures and has shown robustness and generalisability in evaluations with different configurations. |
| [45] 2016 | The authors propose a method that uses facial expressions and gestures to detect emotions from video data with self-implemented software. | Facial expressions according to the CANDIDE face model | Happiness, relaxed, sadness, anger | Microsoft Kinect (Emotion Recognition Module) | Likely lab, but undisclosed | n = 2 | Genetic programming, voting, and multiple selected classifiers | Average accuracy of around 68% on the test set (across normal/evolved voting and combined/uncombined valence/arousal evolved subtrees) |
| [46] 2021 | Propose a domain-independent generative adversarial network for WiFi CSI-based activity recognition. | Raw CSI | Clap, sweep, push/pull, slide | WiFi CSI | Classroom, hall, and office | n = 17 (Widar3.0 database) | Adversarial domain adaptation network (CNN-based feature extraction and simplified CSI data pre-processing) | The ADA pipeline outperformed current WiFi-CSI activity recognition models in robustness against domain change and recognition accuracy, with reduced model complexity. |
| [47] 2020 | Establish a mental health database and propose a multimodal psychological computing technology for universal environments | Facial expression (gaze), speech (emotion detection) | Short-term basic emotions, long-term complex emotions, and suspected mental disorders (uni- and bipolar DD, schizophrenia) | Camera, microphone | Mental health centre | n = 2600 (f: 405) over 12 scenarios | Multimodal deep learning for feature extraction, followed by an LSTM with attention mechanism | State-of-the-art performance in emotion recognition; continuous symptoms of three mental disorders identified and quantitatively described by a newly introduced model; relationship between complex and basic emotions established. |
| [48] 2020 | Explore new combinations of parameters to assess people’s emotions with ubiquitous computing | Speech (variability of pitch frequency, intensity, energy) and ECG (heart rate variability) | Arousal–valence model, the six basic emotions | ECG belt, multifunction headset with microphone | 50% in an office environment, 50% in a living room environment | n = 40 (f: 20, m: 20), age 27.8 ± 7.6 (SD), range 18–49 | Statistical analysis with MANOVA (Wilks’ lambda) and ANOVA (Huynh–Feldt) | The aforementioned speech parameters combined with HRV provide a robust, reliable, and unobtrusive method for reflecting a user’s affective state. |

| Study and Year | Aim | Features | Type of Emotions Recognised | Sensors Used | Study Environment | n | Algorithm(s) Used | Results |
|---|---|---|---|---|---|---|---|---|
| [22] 2021 | Present an elaborate overview of approaches used in emotion recognition and discuss ethics and biases. | Facial expressions, speech (pitch, jitter, energy, rate, length, number of pauses), activity, heart rate (variability), galvanic skin response, eye gaze and dwell time, user’s location, time, weather and temperature, social media | Stress, depressive symptoms, user experience/user engagement, mental health, anger, anxiety, and more | Camera, IMU, EDA, ECG, PPG, EEG, microphone, fNIRS, accelerometer, gyroscope, magnetometer, compass | N/A | N/A | Regression analysis, SVM, predictive and descriptive models, DT, clustering, kNN, naive Bayes, RF, NN | A design space for emotion recognition is created; based on the literature, the choice of approach depends on domain-specific user requirements, drawbacks, and benefits. |
| [23] 2022 | Comprehensively review state-of-the-art unobtrusive sensing of humans’ physical and emotional parameters. | Natural signals (e.g., heat, breathing, sound, speech, body image (facial expression, posture)) and artificial signals (i.e., signal reflection and signal interference by the body) | Activity, vital signs, and emotional states | Geophone, finger oximeter, camera, smartphones, earphone, microphone, PPG, infrared, thermography, (FMCW) radio wave antennas, RSS, PSD, CSI | Diverse (lab, hospital, ambulance) | N/A | N/D | The paper provides a taxonomy for human sensing. Remaining challenges include, amongst others, noise reduction, multi-person monitoring, emotional state detection, privacy, multimodality and data fusion, standardisation, and open datasets. |
| [25] 2022 | Thoroughly review IEEE research articles from the last 5 years that study affective computing using ECG and/or EDA. | Heart rate and skin conductance features | Stress, fear, valence, arousal, emotions | ECG and EDA | Diverse | n = 27 papers, 18–61 people per included study | cvxEDA, DT, PCA, kNN, (Gaussian) Naive Bayes, (L)SVM (with RBF), LDA, (M)ANOVA, LMM, SFFS-KBCS-based algorithm, GDA and LS-SVM, R-Square and AIC, RVM, ANN, CNN, RNN, JMI, CMIM, DISR, ELM | The authors argue that EDA and ECG will become a vital part of affective computing research and human lives, since the data can be collected comfortably using wearables and costs are (expected to be) decreasing, but the current literature on the topic is limited compared with EEG. |
| [26] 2022 | Provide a comprehensive review of signal processing techniques for depression and bipolar disorder detection. | Heart activity, brain activity, typing content, phone use (e.g., typing metrics, number of communications, communication timings and length, screen on/off or lock data), speech, movement, location, posture, eye movement, social engagement | Depressive moods and manic behaviours | Clinical sensors (fNIRS, fMRI), ubiquitous sensors (electrodes (EEG/ECG), software, accelerometer, microphone, camera, GPS, WiFi) | Clinical setting | Depression: 13–5319 people per included study; bipolar: 2–221 | SVM, CNN, RF, Logistic and linear regression, Gradient Boosting Classifier, kNN, RNN, LSTM, MLP, Fuzzy Membership Functions, AdaBoost, GLM, Naive Bayes, ANN, ARM, DNN, Semi-Supervised Fuzzy C-Means, QDA | Two areas of improvement remain: dataset imbalance and the need to move towards regression analysis. Four challenges in disorder detection remain: clinical implementation, privacy concerns, lack of studies on bipolar disorder, and lack of long-term longitudinal studies. |

References

- WHO. Dementia. 2022. Available online: https://www.who.int/news-room/fact-sheets/detail/dementia (accessed on 1 November 2022).

- Alzheimer’s Society. Risk Factors for Dementia. Available online: https://www.alzheimers.org.uk/sites/default/files/pdf/factsheet_risk_factors_for_dementia.pdf (accessed on 4 June 2022).

- Kar, N. Behavioral and psychological symptoms of dementia and their management. Indian J. Psychiatry 2009, 51 (Suppl. S1), 77–86.

- Alzheimer’s Disease International. Dementia Statistics. Available online: https://www.alzint.org/about/dementia-facts-figures/dementia-statistics/ (accessed on 1 November 2022).

- Alzheimers.org. The Psychological and Emotional Impact of Dementia. 2022. Available online: https://www.alzheimers.org.uk/get-support/help-dementia-care/understanding-supporting-person-dementia-psychological-emotional-impact (accessed on 23 November 2022).

- Samson, S.; Clément, S.; Narme, P.; Schiaratura, L.; Ehrlé, N. Efficacy of musical interventions in dementia: Methodological requirements of nonpharmacological trials. Ann. N. Y. Acad. Sci. 2015, 1337, 249–255.

- Baird, A.; Samson, S. Memory for music in Alzheimer’s disease: Unforgettable? Neuropsychol. Rev. 2009, 19, 85–101.

- Samson, S.; Dellacherie, D.; Platel, H. Emotional power of music in patients with memory disorders: Clinical implications of cognitive neuroscience. Ann. N. Y. Acad. Sci. 2009, 1169, 245–255.

- Takahashi, T.; Matsushita, H. Long-term effects of music therapy on elderly with moderate/severe dementia. J. Music Ther. 2006, 43, 317–333.

- El Haj, M.; Fasotti, L.; Allain, P. The involuntary nature of music-evoked autobiographical memories in Alzheimer’s disease. Conscious. Cogn. 2012, 21, 238–246.

- Cuddy, L.L.; Duffin, J. Music, memory, and Alzheimer’s disease: Is music recognition spared in dementia, and how can it be assessed? Med. Hypotheses 2005, 64, 229–235.

- Baird, A.; Thompson, W.F. When music compensates language: A case study of severe aphasia in dementia and the use of music by a spousal caregiver. Aphasiology 2019, 33, 449–465.

- Raglio, A.; Gianelli, M.V. Music Therapy for Individuals with Dementia: Areas of Interventions and Research Perspectives. Curr. Alzheimer Res. 2009, 6, 293–301.

- Särkämö, T.; Laitinen, S.; Tervaniemi, M.; Numminen, A.; Kurki, M.; Rantanen, P. Music, Emotion, and Dementia: Insight From Neuroscientific and Clinical Research. Music Med. 2012, 4, 153–162.

- Mattap, S.M.; Mohan, D.; McGrattan, A.M.; Allotey, P.; Stephan, B.C.; Reidpath, D.D.; Siervo, M.; Robinson, L.; Chaiyakunapruk, N. The economic burden of dementia in low- and middle-income countries (LMICs): A systematic review. BMJ Glob. Health 2022, 7, e007409.

- Esch, J. A Survey on ambient intelligence in healthcare. Proc. IEEE 2013, 101, 2467–2469.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, 89.

- Taleb, T.; Bottazzi, D.; Nasser, N. A novel middleware solution to improve ubiquitous healthcare systems aided by affective information. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 335–349.

- Saha, D.; Mukherjee, A. Pervasive computing: A paradigm for the 21st century. Computer 2003, 36, 25–31.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Khan, A.; Zenonos, A.; Kalogridis, G.; Wang, Y.; Vatsikas, S.; Sooriyabandara, M. Perception Clusters: Automated Mood Recognition Using a Novel Cluster-Driven Modelling System. ACM Trans. Comput. Healthc. 2021, 2, 1–16.

- Genaro Motti, V. Towards a Design Space for Emotion Recognition. In Proceedings of the Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp ’21) and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Online, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 243–247.

- Fernandes, J.M.; Silva, J.S.; Rodrigues, A.; Boavida, F. A Survey of Approaches to Unobtrusive Sensing of Humans. ACM Comput. Surv. 2022, 55, 1–28.

- Gupta, K.; Chan, S.W.T.; Pai, Y.S.; Sumich, A.; Nanayakkara, S.; Billinghurst, M. Towards Understanding Physiological Responses to Emotional Autobiographical Memory Recall in Mobile VR Scenarios. In Proceedings of the Adjunct Publication of the 23rd International Conference on Mobile Human–Computer Interaction (MobileHCI ’21 Adjunct), Online, 27 September–1 October 2021; Association for Computing Machinery: New York, NY, USA, 2021.

- Assabumrungrat, R.; Sangnark, S.; Charoenpattarawut, T.; Polpakdee, W.; Sudhawiyangkul, T.; Boonchieng, E.; Wilaiprasitporn, T. Ubiquitous Affective Computing: A Review. IEEE Sens. J. 2022, 22, 1867–1881.

- Highland, D.; Zhou, G. A review of detection techniques for depression and bipolar disorder. Smart Health 2022, 24, 100282.

- Kwon, J.; Ha, J.; Kim, D.H.; Choi, J.W.; Kim, L. Emotion Recognition Using a Glasses-Type Wearable Device via Multi-Channel Facial Responses. IEEE Access 2021, 9, 146392–146403.

- Gjoreski, H.; Mavridou, I.; Fatoorechi, M.; Kiprijanovska, I.; Gjoreski, M.; Cox, G.; Nduka, C. EmteqPRO: Face-Mounted Mask for Emotion Recognition and Affective Computing. In Proceedings of the Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp ’21) and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Online, 21–26 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 23–25.

- Hayashi, R.; Matsuda, Y.; Fujimoto, M.; Suwa, H.; Yasumoto, K. Multimodal Tourists’ Emotion and Satisfaction Estimation Considering Weather Conditions and Analysis of Feature Importance. In Proceedings of the 2021 Thirteenth International Conference on Mobile Computing and Ubiquitous Network (ICMU), Tokyo, Japan, 17–19 November 2021; pp. 1–6.

- Liakopoulos, L.; Stagakis, N.; Zacharaki, E.I.; Moustakas, K. CNN-based stress and emotion recognition in ambulatory settings. In Proceedings of the 2021 12th International Conference on Information, Intelligence, Systems & Applications (IISA), Chania, Crete, Greece, 12–14 July 2021; pp. 1–8.

- Kaye, J.; Reynolds, C.; Bowman, M.; Sharma, N.; Riley, T.; Golonka, O.; Lee, J.; Quinn, C.; Beattie, Z.; Austin, J.; et al. Methodology for establishing a community-wide life laboratory for capturing unobtrusive and continuous remote activity and health data. J. Vis. Exp. 2018, 137, e56942.

- Thorpe, J.; Forchhammer, B.H.; Maier, A.M. Adapting mobile and wearable technology to provide support and monitoring in rehabilitation for dementia: Feasibility case series. JMIR Form. Res. 2019, 3, e12346.

- Fairclough, S.H.; Dobbins, C. Personal informatics and negative emotions during commuter driving: Effects of data visualization on cardiovascular reactivity & mood. Int. J. Hum. Comput. Stud. 2020, 144, 1–13.

- Betella, A.; Zucca, R.; Cetnarski, R.; Greco, A.; Lanatà, A.; Mazzei, D.; Tognetti, A.; Arsiwalla, X.D.; Omedas, P.; De Rossi, D.; et al. Inference of human affective states from psychophysiological measurements extracted under ecologically valid conditions. Front. Neurosci. 2014, 8, 286.

- Wu, W.; Zhang, H.; Pirbhulal, S.; Mukhopadhyay, S.C.; Zhang, Y.T. Assessment of Biofeedback Training for Emotion Management Through Wearable Textile Physiological Monitoring System. IEEE Sens. J. 2015, 15, 7087–7095.

- Mahmud, M.S.; Fang, H.; Wang, H. An Integrated Wearable Sensor for Unobtrusive Continuous Measurement of Autonomic Nervous System. IEEE Internet Things J. 2019, 6, 1104–1113.

- Zangróniz, R.; Martínez-Rodrigo, A.; Pastor, J.M.; López, M.T.; Fernández-Caballero, A. Electrodermal activity sensor for classification of calm/distress condition. Sensors 2017, 17, 2324.

- Matsuda, Y.; Fedotov, D.; Takahashi, Y.; Arakawa, Y.; Yasumoto, K.; Minker, W. EmoTour: Estimating emotion and satisfaction of users based on behavioral cues and audiovisual data. Sensors 2018, 18, 3978.

- Alam, M.G.R.; Abedin, S.F.; Moon, S.I.; Talukder, A.; Hong, C.S. Healthcare IoT-Based Affective State Mining Using a Deep Convolutional Neural Network. IEEE Access 2019, 7, 75189–75202.

- Lyons, B.E.; Austin, D.; Seelye, A.; Petersen, J.; Yeargers, J.; Riley, T.; Sharma, N.; Mattek, N.; Wild, K.; Dodge, H.; et al. Pervasive computing technologies to continuously assess Alzheimer’s disease progression and intervention efficacy. Front. Aging Neurosci. 2015, 7, 102.

- Valenza, G.; Gentili, C.; Lanatà, A.; Scilingo, E.P. Mood recognition in bipolar patients through the PSYCHE platform: Preliminary evaluations and perspectives. Artif. Intell. Med. 2013, 57, 49–58.

- Pärkkä, J.; Merilahti, J.; Mattila, E.M.; Malm, E.; Antila, K.; Tuomisto, M.T.; Viljam Saarinen, A.; van Gils, M.; Korhonen, I. Relationship of psychological and physiological variables in long-term self-monitored data during work ability rehabilitation program. IEEE Trans. Inf. Technol. Biomed. 2009, 13, 141–151.

- Bethge, D.; Kosch, T.; Grosse-Puppendahl, T.; Chuang, L.L.; Kari, M.; Jagaciak, A.; Schmidt, A. VEmotion: Using Driving Context for Indirect Emotion Prediction in Real-Time. In Proceedings of the 34th Annual ACM Symposium on User Interface Software and Technology (UIST ’21), Online, 10–14 October 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 638–651.

- Gao, Y.; Jin, Y.; Choi, S.; Li, J.; Pan, J.; Shu, L.; Zhou, C.; Jin, Z. SonicFace: Tracking Facial Expressions Using a Commodity Microphone Array. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 5, 156.

- Yusuf, R.; Sharma, D.G.; Tanev, I.; Shimohara, K. Evolving an emotion recognition module for an intelligent agent using genetic programming and a genetic algorithm. Artif. Life Robot. 2016, 21, 85–90.

- Zinys, A.; van Berlo, B.; Meratnia, N. A Domain-Independent Generative Adversarial Network for Activity Recognition Using WiFi CSI Data. Sensors 2021, 21, 7852.

- Sun, X.; Song, Y.; Wang, M. Toward Sensing Emotions With Deep Visual Analysis: A Long-Term Psychological Modeling Approach. IEEE MultiMedia 2020, 27, 18–27.

- Van Den Broek, E.L. Ubiquitous emotion-aware computing. Pers. Ubiquitous Comput. 2013, 17, 53–67.

- Kanjo, E.; Al-Husain, L.; Chamberlain, A. Emotions in context: Examining pervasive affective sensing systems, applications, and analyses. Pers. Ubiquitous Comput. 2015, 19, 1197–1212.

- Zangerle, E.; Chen, C.; Tsai, M.F.; Yang, Y.H. Leveraging Affective Hashtags for Ranking Music Recommendations. IEEE Trans. Affect. Comput. 2021, 12, 78–91.

- Lisetti, C.L.; Nasoz, F. Using noninvasive wearable computers to recognize human emotions from physiological signals. EURASIP J. Appl. Signal Process. 2004, 2004, 929414.

- Calvo, R.A.; D’Mello, S. Affect detection: An interdisciplinary review of models, methods, and their applications. IEEE Trans. Affect. Comput. 2010, 1, 18–37.

- Global Social Media Statistics Research Summary [2021 Information]. Available online: https://www.smartinsights.com/social-media-marketing/social-media-strategy/new-global-social-media-research/ (accessed on 19 June 2023).

- Maki, H.; Ogawa, H.; Tsukamoto, S.; Yonezawa, Y.; Caldwell, W.M. A system for monitoring cardiac vibration, respiration, and body movement in bed using an infrared. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Milan, Italy, 25–29 August 2010; pp. 5197–5200.

- Yousefian Jazi, S.; Kaedi, M.; Fatemi, A. An emotion-aware music recommender system: Bridging the user’s interaction and music recommendation. Multimed. Tools Appl. 2021, 80, 13559–13574.

- Raja, M.; Sigg, S. Applicability of RF-based methods for emotion recognition: A survey. In Proceedings of the 2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops 2016), Sydney, NSW, Australia, 14–18 March 2016; pp. 1–6.

- Knappmeyer, M.; Kiani, S.L.; Reetz, E.S.; Baker, N.; Tonjes, R. Survey of context provisioning middleware. IEEE Commun. Surv. Tutor. 2013, 15, 1492–1519.

- Gagnon, L.; Peretz, I.; Fülöp, T. Musical structural determinants of emotional judgments in dementia of the Alzheimer type. Psychol. Pop. Media Cult. 2011, 1, 96–107.

- Drapeau, J.; Gosselin, N.; Gagnon, L.; Peretz, I.; Lorrain, D. Emotional recognition from face, voice, and music in dementia of the Alzheimer type: Implications for music therapy. Ann. N. Y. Acad. Sci. 2009, 1169, 342–345.

- Jacobsen, J.H.; Stelzer, J.; Fritz, T.H.; Chételat, G.; La Joie, R.; Turner, R. Why musical memory can be preserved in advanced Alzheimer’s disease. Brain 2015, 138, 2438–2450.

- Agustus, J.L.; Mahoney, C.J.; Downey, L.E.; Omar, R.; Cohen, M.; White, M.J.; Scott, S.K.; Mancini, L.; Warren, J.D. Functional MRI of music emotion processing in frontotemporal dementia. Ann. N. Y. Acad. Sci. 2015, 1337, 232–240.

- Slattery, C.F.; Agustus, J.L.; Paterson, R.W.; McCallion, O.; Foulkes, A.J.; Macpherson, K.; Carton, A.M.; Harding, E.; Golden, H.L.; Jaisin, K.; et al. The functional neuroanatomy of musical memory in Alzheimer’s disease. Cortex 2019, 115, 357–370.

- Lin, Y.; Chu, H.; Yang, C.Y.; Chen, C.H.; Chen, S.G.; Chang, H.J.; Hsieh, C.J.; Chou, K.R. Effectiveness of group music intervention against agitated behavior in elderly persons with dementia. Int. J. Geriatr. Psychiatry 2011, 26, 670–678.

- Zatorre, R.J.; Salimpoor, V.N. From perception to pleasure: Music and its neural substrates. Proc. Natl. Acad. Sci. USA 2013, 110, 10430–10437.

- Koelsch, S. Brain correlates of music-evoked emotions. Nat. Rev. Neurosci. 2014, 15, 170–180.

- Han, P.; Kwan, M.; Chen, D.; Yusoff, S.; Chionh, H.; Goh, J.; Yap, P. A controlled naturalistic study on a weekly music therapy and activity program on disruptive and depressive behaviors in dementia. Dement. Geriatr. Cogn. Disord. 2011, 30, 540–546.

- Ridder, H.M.; Aldridge, D. Individual music therapy with persons with frontotemporal dementia: Singing dialogue. Nord. J. Music Ther. 2005, 14, 91–106.

- Vasionyte, I.; Madison, G. Musical intervention for patients with dementia: A meta-analysis. J. Clin. Nurs. 2013, 22, 1203–1216.

- Polk, M.; Kertesz, A. Music and language in degenerative disease of the brain. Brain Cogn. 1993, 22, 98–117.

- Dorris, J.L.; Neely, S.; Terhorst, L.; VonVille, H.M.; Rodakowski, J. Effects of music participation for mild cognitive impairment and dementia: A systematic review and meta-analysis. J. Am. Geriatr. Soc. 2021, 69, 1–9.

- Lesta, B.; Petocz, P. Familiar Group Singing: Addressing Mood and Social Behaviour of Residents with Dementia Displaying Sundowning. Aust. J. Music Ther. 2006, 17, 2–17.

- Van der Vleuten, M.; Visser, A.; Meeuwesen, L. The contribution of intimate live music performances to the quality of life for persons with dementia. Patient Educ. Couns. 2012, 89, 484–488.

- Ziv, N.; Granot, A.; Hai, S.; Dassa, A.; Haimov, I. The effect of background stimulative music on behavior in Alzheimer’s patients. J. Music Ther. 2007, 44, 329–343.

- Ragneskog, H.; Asplund, K.; Kihlgren, M.; Norberg, A. Individualized music played for agitated patients with dementia: Analysis of video-recorded sessions. Int. J. Nurs. Pract. 2001, 7, 146–155.

- Götell, E.; Brown, S.; Ekman, S.L. The influence of caregiver singing and background music on vocally expressed emotions and moods in dementia care. Int. J. Nurs. Stud. 2009, 46, 422–430.

- Gerdner, L.A. Individualized music for dementia: Evolution and application of evidence-based protocol. World J. Psychiatry 2012, 2, 26–32.

- Schall, A.; Haberstroh, J.; Pantel, J. Time series analysis of individual music therapy in dementia: Effects on communication behavior and emotional well-being. GeroPsych J. Gerontopsychol. Geriatr. Psychiatry 2015, 28, 113–122.

- Hanson, N.; Gfeller, K.; Woodworth, G.; Swanson, E.A.; Garand, L. A Comparison of the Effectiveness of Differing Types and Difficulty of Music Activities in Programming for Older Adults with Alzheimer’s Disease and Related Disorders. J. Music Ther. 1996, 33, 93–123.

- Mathews, R.M.; Clair, A.A.; Kosloski, K. Keeping the beat: Use of rhythmic music during exercise activities for the elderly with dementia. Am. J. Alzheimer Dis. Other Dement. 2001, 16, 377–380.

- Cuddy, L.L.; Sikka, R.; Silveira, K.; Bai, S.; Vanstone, A. Music-evoked autobiographical memories (MEAMs) in Alzheimer disease: Evidence for a positivity effect. Cogent Psychol. 2017, 4, 1–20.

- Särkämö, T.; Laitinen, S.; Numminen, A.; Kurki, M.; Johnson, J.K.; Rantanen, P. Pattern of emotional benefits induced by regular singing and music listening in dementia. J. Am. Geriatr. Soc. 2016, 64, 439–440.

- Solé, C.; Mercadal-Brotons, M.; Galati, A.; De Castro, M. Effects of group music therapy on quality of life, affect, and participation in people with varying levels of dementia. J. Music Ther. 2014, 51, 103–125.

- Särkämö, T.; Tervaniemi, M.; Laitinen, S.; Numminen, A.; Kurki, M.; Johnson, J.K.; Rantanen, P. Cognitive, emotional, and social benefits of regular musical activities in early dementia: Randomized controlled study. Gerontologist 2014, 54, 634–650.

- Olazarán, J.; Reisberg, B.; Clare, L.; Cruz, I.; Peña-Casanova, J.; Del Ser, T.; Woods, B.; Beck, C.; Auer, S.; Lai, C.; et al. Nonpharmacological therapies in Alzheimer’s disease: A systematic review of efficacy. Dement. Geriatr. Cogn. Disord. 2010, 30, 161–178.

- Ekman, P.; Sorenson, E.R.; Friesen, W.V. Pan-cultural elements in facial displays of emotion. Science 1969, 164, 86–88.

- Plutchik, R. Emotion: Theory, Research and Experience. Psychol. Med. 1981, 1, 3–33.

- Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.

- Posner, J.; Russell, J.A.; Peterson, B.S. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005, 17, 715.

- Peter, C.; Herbon, A. Emotion representation and physiology assignments in digital systems. Interact. Comput. 2006, 18, 139–170.

- Baccour, E.; Mhaisen, N.; Abdellatif, A.A.; Erbad, A.; Mohamed, A.; Hamdi, M.; Guizani, M. Pervasive AI for IoT Applications: Resource-efficient Distributed Artificial Intelligence. arXiv 2021, arXiv:2105.01798.

- Saxena, N.; Choi, B.J.; Grijalva, S. Secure and privacy-preserving concentration of metering data in AMI networks. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017.

- Langheinrich, M. Privacy by Design—Principles of Privacy-Aware Ubiquitous Systems. In Proceedings of the Ubiquitous Computing: International Conference (Ubicomp 2001), Atlanta, GA, USA, 30 September–2 October 2001.

- McNeill, A.; Briggs, P.; Pywell, J.; Coventry, L. Functional privacy concerns of older adults about pervasive health-monitoring systems. In Proceedings of the 10th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 21–23 June 2017; ACM: New York, NY, USA, 2017.

- Chakravorty, A.; Wlodarczyk, T.; Chunming, R. Privacy Preserving Data Analytics for Smart Homes. In Proceedings of the 2013 IEEE Security and Privacy Workshops, San Francisco, CA, USA, 23–24 May 2013.

- Wac, K.; Tsiourti, C. Ambulatory assessment of affect: Survey of sensor systems for monitoring of autonomic nervous systems activation in emotion. IEEE Trans. Affect. Comput. 2014, 5, 251–272.

- Sedgwick, P.; Greenwood, N. Understanding the Hawthorne effect. BMJ 2015, 351, h4672.

- Bottazzi, D.; Corradi, A.; Montanari, R. Context-aware middleware solutions for anytime and anywhere emergency assistance to elderly people. IEEE Commun. Mag. 2006, 44, 82–90.

- Taleb, T.; Bottazzi, D.; Guizani, M.; Nait-Charif, H. Angelah: A framework for assisting elders at home. IEEE J. Sel. Areas Commun. 2009, 27, 480–494.

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126.

- United Consumers. Smartphonegebruik Ouderen Stijgt [Smartphone Use among Older People Is Rising]. 2020. Available online: https://www.unitedconsumers.com/mobiel/nieuws/2020/02/20/smartphonegebruik-ouderen-stijgt.jsp (accessed on 1 November 2022). (In Dutch).

- Rashmi, K.A.; Kalpana, B. A Mood-Based Recommender System for Indian Music Using K-Prototype Clustering. In Intelligence in Big Data Technologies—Beyond the Hype, Advances in Intelligent Systems and Computing; Peter, J., Fernandes, J., Alavi, A., Eds.; Springer: Singapore, 2021; Volume 1167, pp. 413–418.

- Vink, A.C.; Bruinsma, M.S.; Scholten, R.J. Music therapy for people with dementia. In Cochrane Database of Systematic Reviews; John Wiley & Sons Ltd.: Hoboken, NJ, USA, 2003.

- Raglio, A.; Bellelli, G.; Mazzola, P.; Bellandi, D.; Giovagnoli, A.R.; Farina, E.; Stramba-Badiale, M.; Gentile, S.; Gianelli, M.V.; Ubezio, M.C.; et al. Music, music therapy and dementia: A review of literature and the recommendations of the Italian Psychogeriatric Association. Maturitas 2012, 72, 305–310.

- Ueda, T.; Suzukamo, Y.; Sato, M.; Izumi, S.I. Effects of music therapy on behavioral and psychological symptoms of dementia: A systematic review and meta-analysis. Ageing Res. Rev. 2013, 12, 628–641.

- McDermott, O.; Crellin, N.; Ridder, H.M.; Orrell, M. Music therapy in dementia: A narrative synthesis systematic review. Int. J. Geriatr. Psychiatry 2013, 28, 781–794.

- Halpern, A.R.; Peretz, I.; Cuddy, L.L. Introduction to special issue: Dementia and music. Music Percept. 2012, 29, 465.

- WHO. Radiation and Health. Available online: https://www.who.int/teams/environment-climate-change-and-health/radiation-and-health/bstations-wirelesstech (accessed on 1 November 2022).

- Halpern, A.R. Dementia and music: Challenges and future directions. Music Percept. Interdiscip. J. 2012, 29, 543–545.

- Koelstra, S.; Mühl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

| Emotion Sensing Method | References |
|---|---|
| On-body sensors | [18,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42] |
| Device-free | [22,23,26,31,34,40,42,43,44,45,46,47,48] |
| Self-reporting | [31,32,33,34,37,38,39,40,42] |

| Method | References |
|---|---|
| Machine learning | [18,21,22,24,25,26,27,28,29,30,33,34,35,36,37,40,41,42,43,45,48,49] |
| Deep learning | [22,25,26,30,38,39,41,44,45,46,47] |

| Study Environment | References |
|---|---|
| Lab setting | [18,23,24,26,27,30,34,39] |
| Office environment | [21,30,46,48] |
| In home (incl. healthcare homes) | [31,32,40,42,44,47,48] |
| In car | [33,43] |
| Outdoor | [29,38] |
| VR | [28] |
| Other | [23,25,46] |

| Research Area | Challenge |
|---|---|
| Emotion detection | Limited generalisability due to the specific application design and limited diversity in current studies. |
| | Sensor limitations (storage, battery consumption, and occasional loss of connection). |
| | Computation and responsiveness challenges. |
| | Scalability. |
| | Limited research on (side-)effects of radiation. |
| | A lack of standardisation and clear requirements in research. |
| | Research is mostly performed in the lab, with limited results in natural environments. |
| | Adoption (privacy considerations and ethical concerns). |
| | Limited certainty of the findings due to the use of small sample sizes in research. |
| Music and dementia | A lack of the methodological rigour needed for standardisation. |
| | Limited research on long-term effects. |
| | A focus on Western cultures, with limited knowledge of other cultures. |
| | No heuristics for choosing the best intervention; many intervention design choices and trade-offs. |
| | Adaptability to the limitations of persons with dementia in general and to the progressive nature of dementia. |
| | Strong results obtained by using music, but uncertainty about its added benefit compared to other pleasurable events. |
| | Research often puts an additional burden on caregivers. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vuijk, J.G.J.; Klein Brinke, J.; Sharma, N. Utilising Emotion Monitoring for Developing Music Interventions for People with Dementia: A State-of-the-Art Review. Sensors 2023, 23, 5834. https://doi.org/10.3390/s23135834

Vuijk JGJ, Klein Brinke J, Sharma N. Utilising Emotion Monitoring for Developing Music Interventions for People with Dementia: A State-of-the-Art Review. Sensors. 2023; 23(13):5834. https://doi.org/10.3390/s23135834

Chicago/Turabian Style: Vuijk, Jessica G. J., Jeroen Klein Brinke, and Nikita Sharma. 2023. "Utilising Emotion Monitoring for Developing Music Interventions for People with Dementia: A State-of-the-Art Review" Sensors 23, no. 13: 5834. https://doi.org/10.3390/s23135834

APA Style: Vuijk, J. G. J., Klein Brinke, J., & Sharma, N. (2023). Utilising Emotion Monitoring for Developing Music Interventions for People with Dementia: A State-of-the-Art Review. Sensors, 23(13), 5834. https://doi.org/10.3390/s23135834