Traversable Region Detection and Tracking for a Sparse 3D Laser Scanner for Off-Road Environments Using Range Images

Abstract

1. Introduction

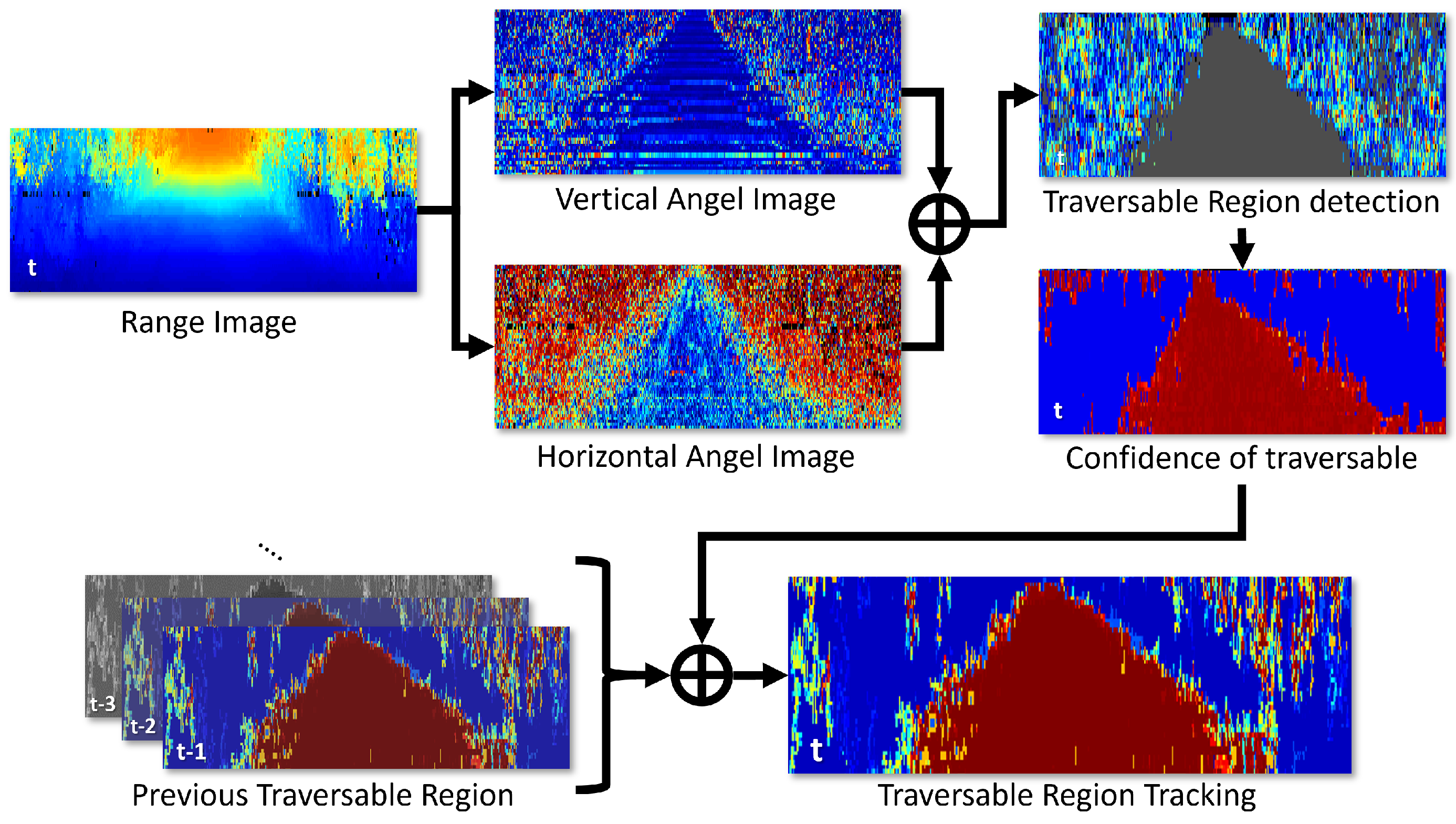

- An effective traversable-region-detection method using a 3D laser scanner is proposed. To handle the large volume of 3D point-cloud data, we use range images, in which each pixel stores the range measured in a specific direction (one beam elevation at one azimuth). Each pixel and its adjacent pixels are then searched based on the vertical and horizontal inclination angles of the ground;

- A traversable-region-tracking algorithm is developed that integrates previous detection results, preventing detrimental effects caused by unexpected vehicle poses. By modeling the range data of each pixel as a probability value, the traversability of corresponding pixels in the previous and current detection results is fused using Bayesian fusion.
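The detection step in the first contribution can be illustrated with a minimal Python/NumPy sketch. It is not the author's implementation: the sensor model (16 beams evenly spaced from -15° to +15°, 1° azimuth bins), the slope definition atan2(|Δz|, horizontal displacement) between adjacent pixels, the 10° thresholds, seeding from the lowest beam, and the helper names `make_range_image`, `inclination_images` and `detect_traversable` are all hypothetical choices made for illustration.

```python
from collections import deque

import numpy as np


def make_range_image(points, num_rows=16, num_cols=360,
                     fov_down_deg=-15.0, fov_up_deg=15.0):
    """Project an (N, 3) point cloud onto a num_rows x num_cols range image.

    Row 0 is the lowest beam; column k covers azimuths [k, k+1) * 360/num_cols
    degrees. Returns the range image (0 = no return) and an image of the XYZ
    point kept for each pixel (the nearest one if several fall into it).
    """
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    horiz = np.hypot(x, y)
    rng = np.hypot(horiz, z)
    elev = np.degrees(np.arctan2(z, horiz))
    azim = np.degrees(np.arctan2(y, x)) % 360.0
    # Assumption: beams are evenly spaced from fov_down_deg to fov_up_deg.
    rows = np.round((elev - fov_down_deg) / (fov_up_deg - fov_down_deg)
                    * (num_rows - 1)).astype(int)
    cols = (azim / 360.0 * num_cols).astype(int) % num_cols
    keep = (rows >= 0) & (rows < num_rows) & (rng > 0)
    flat = rows[keep] * num_cols + cols[keep]
    rng_k, pts_k = rng[keep], points[keep]
    # Sort by pixel, then by range: the first entry of each pixel is the nearest.
    order = np.lexsort((rng_k, flat))
    _, first = np.unique(flat[order], return_index=True)
    nearest = order[first]
    range_img = np.zeros(num_rows * num_cols)
    xyz_img = np.zeros((num_rows * num_cols, 3))
    range_img[flat[nearest]] = rng_k[nearest]
    xyz_img[flat[nearest]] = pts_k[nearest]
    return (range_img.reshape(num_rows, num_cols),
            xyz_img.reshape(num_rows, num_cols, 3))


def _slope_deg(p, q, ok):
    """Inclination angle (degrees) of the segment between points p and q."""
    dz = np.abs(p[..., 2] - q[..., 2])
    dxy = np.hypot(p[..., 0] - q[..., 0], p[..., 1] - q[..., 1])
    return np.where(ok, np.degrees(np.arctan2(dz, dxy)), np.nan)


def inclination_images(xyz_img, range_img):
    """Vertical angle: pixel vs. the pixel one beam below (NaN in row 0).
    Horizontal angle: pixel vs. its left neighbour (columns wrap around)."""
    valid = range_img > 0
    v_ang = np.full(range_img.shape, np.nan)
    v_ang[1:] = _slope_deg(xyz_img[1:], xyz_img[:-1], valid[1:] & valid[:-1])
    h_ang = _slope_deg(xyz_img, np.roll(xyz_img, 1, axis=1),
                       valid & np.roll(valid, 1, axis=1))
    return v_ang, h_ang


def detect_traversable(xyz_img, range_img, v_thresh=10.0, h_thresh=10.0):
    """Grow a traversable region from the lowest beam across gentle slopes."""
    rows, cols = range_img.shape
    v_ang, h_ang = inclination_images(xyz_img, range_img)
    trav = np.zeros((rows, cols), dtype=bool)
    # Seeds: all returns of the lowest beam (assumed to hit ground near the vehicle).
    queue = deque((0, c) for c in range(cols) if range_img[0, c] > 0)
    for _, c in queue:
        trav[0, c] = True
    while queue:
        r, c = queue.popleft()
        # (neighbour row, neighbour col, angle of the step, threshold for the step)
        steps = [(r, (c + 1) % cols, h_ang[r, (c + 1) % cols], h_thresh),
                 (r, (c - 1) % cols, h_ang[r, c], h_thresh)]
        if r + 1 < rows:
            steps.append((r + 1, c, v_ang[r + 1, c], v_thresh))
        if r > 0:
            steps.append((r - 1, c, v_ang[r, c], v_thresh))
        for nr, nc, step_ang, thresh in steps:
            # NaN angles (missing returns) fail every comparison, so such
            # pixels are rejected; this is a deliberately conservative choice.
            own_ok = nr == 0 or v_ang[nr, nc] < v_thresh
            if not trav[nr, nc] and step_ang < thresh and own_ok:
                trav[nr, nc] = True
                queue.append((nr, nc))
    return trav
```

Region growing is only one plausible reading of "each pixel and the adjacent pixels are searched". In this sketch a pixel is accepted only if both the step into it and its own vertical angle are gentle, which stops the region at the foot of steep obstacles such as walls.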

2. Related Work

3. Proposed Method

3.1. Range-Image-Based Traversable Region Detection

3.1.1. Range Image

3.1.2. Traversable Region Detection

3.2. Probabilistic Traversable Region Tracking

3.2.1. Confidence of Traversability

3.2.2. Bayesian Fusion in a Sequence

| Algorithm 1 Traversable Region Detection and Tracking |
|---|
| Input: 3D point cloud and previous traversable region |
| Output: traversable region probability |
| for every frame t do |
| 01: ← Make Range Image |
| 02: ← Make Vertical Angle Image |
| 03: ← Make Horizontal Angle Image |
| 04: ← Traversable Region Detection |
| 05: ← Traversable Confidence |
| 06: ← Tracking Traversable Region |
| end for |
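Steps 05 and 06 of Algorithm 1 (traversable confidence and tracking) can be sketched as a per-pixel binary Bayes filter. The following are assumptions, not details taken from the paper: the confidence model (confidence decaying exponentially with range, with hypothetical parameters `range_scale` = 30 m and `max_conf` = 0.9), the log-odds form of the Bayesian update with a 0.5 prior, the ±4 log-odds clamp, and that the previous probability image has already been registered to the current frame (ego-motion compensation is omitted). The function names are hypothetical.

```python
import numpy as np


def traversable_confidence(range_img, trav_mask, range_scale=30.0, max_conf=0.9):
    """Hypothetical measurement model: nearer returns are trusted more."""
    conf = max_conf * np.exp(-range_img / range_scale)
    prob = np.where(trav_mask, 0.5 + 0.5 * conf, 0.5 - 0.5 * conf)
    # Pixels without a laser return carry no information: probability 0.5.
    return np.where(range_img > 0, prob, 0.5)


def logit(p):
    return np.log(p / (1.0 - p))


def bayes_fuse(prev_prob, meas_prob, l_max=4.0):
    """Binary Bayes update in log-odds form with a uniform (0.5) prior,
    so the prior log-odds term that is normally subtracted is zero."""
    l = logit(prev_prob) + logit(meas_prob)
    # Clamp so the map can still adapt when the terrain or detection changes.
    l = np.clip(l, -l_max, l_max)
    return 1.0 / (1.0 + np.exp(-l))


# Usage: the same detection observed in three consecutive frames.
range_img = np.array([[10.0, 10.0, 0.0]])
trav_mask = np.array([[True, False, True]])
prob = np.full(range_img.shape, 0.5)  # no history yet
for _ in range(3):
    prob = bayes_fuse(prob, traversable_confidence(range_img, trav_mask))
```

Because the update adds log-odds, a pixel detected as traversable in several consecutive frames converges toward the clamp value, while a single spurious detection (for example, one caused by an unexpected vehicle pose) shifts the fused probability only partially.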

4. Experiment

4.1. Experiment Environment

4.2. Data Annotation

4.3. Evaluation Metrics

4.4. Quantitative Result

4.5. Computation Time

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Homm, F.; Kaempchen, N.; Burschka, D. Fusion of laserscannner and video based lanemarking detection for robust lateral vehicle control and lane change maneuvers. In Proceedings of the IEEE Intelligent Vehicles Symposium, Baden-Baden, Germany, 5–9 June 2011; pp. 969–974.

2. Guan, H.; Li, J.; Yu, Y.; Wang, C.; Chapman, M.; Yang, B. Using mobile laser scanning data for automated extraction of road markings. ISPRS J. Photogramm. Remote Sens. 2014, 87, 93–107.

3. Kumar, P.; McElhinney, C.P.; Lewis, P.; McCarthy, T. Automated road markings extraction from mobile laser scanning data. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 125–137.

4. Hata, A.Y.; Osorio, F.S.; Wolf, D.F. Robust curb detection and vehicle localization in urban environments. In Proceedings of the IEEE Intelligent Vehicles Symposium, Dearborn, MI, USA, 8–11 June 2014; pp. 1257–1262.

5. Guan, H.; Li, J.; Yu, Y.; Chapman, M.; Wang, C. Automated Road Information Extraction From Mobile Laser Scanning Data. IEEE Trans. Intell. Transp. Syst. 2015, 16, 194–205.

6. Zhang, Y.; Wang, J.; Wang, X.; Li, C.; Wang, L. 3D LIDAR-Based Intersection Recognition and Road Boundary Detection Method for Unmanned Ground Vehicle. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015.

7. Han, J.; Kim, D.; Lee, M.; Sunwoo, M. Enhanced Road Boundary and Obstacle Detection Using a Downward-Looking LIDAR Sensor. IEEE Trans. Veh. Technol. 2012, 61, 971–985.

8. Moosmann, F.; Pink, O.; Stiller, C. Segmentation of 3D lidar data in non-flat urban environments using a local convexity criterion. In Proceedings of the IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 215–220.

9. Himmelsbach, M.; von Hundelshausen, F.; Wuensche, H. Fast segmentation of 3D point clouds for ground vehicles. In Proceedings of the IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 560–565.

10. Choe, Y.; Ahn, S.; Chung, M.J. Fast point cloud segmentation for an intelligent vehicle using sweeping 2D laser scanners. In Proceedings of the International Conference on Ubiquitous Robots and Ambient Intelligence, Daejeon, Republic of Korea, 26–29 November 2012; pp. 38–43.

11. Chen, T.; Dai, B.; Wang, R.; Liu, D. Gaussian-Process-Based Real-Time Ground Segmentation for Autonomous Land Vehicles. J. Intell. Robot. Syst. 2014, 76, 563–582.

12. Bogoslavskyi, I.; Stachniss, C. Fast Range Image-Based Segmentation of Sparse 3D Laser Scans for Online Operation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Daejeon, Republic of Korea, 9–14 October 2016.

13. Bogoslavskyi, I.; Stachniss, C. Efficient Online Segmentation for Sparse 3D Laser Scans. J. Photogramm. Remote Sens. Geoinf. Sci. 2017, 85, 41–52.

14. Caltagirone, L.; Scheidegger, S.; Svensson, L.; Wahde, M. Fast LIDAR-based road detection using fully convolutional neural networks. In Proceedings of the IEEE Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017; pp. 1019–1024.

15. Babahajiani, P.; Fan, L.; Kämäräinen, J.K.; Gabbouj, M. Urban 3D segmentation and modelling from street view images and LiDAR point clouds. Mach. Vis. Appl. 2017, 28, 679–694.

16. Zermas, D.; Izzat, I.; Papanikolopoulos, N. Fast segmentation of 3D point clouds: A paradigm on LiDAR data for autonomous vehicle applications. In Proceedings of the IEEE International Conference on Robotics and Automation, Singapore, 29 May–3 June 2017; pp. 5067–5073.

17. Shin, M.O.; Oh, G.M.; Kim, S.W.; Seo, S.W. Real-time and accurate segmentation of 3-D point clouds based on gaussian process regression. IEEE Trans. Intell. Transp. Syst. 2017, 18, 3363–3377.

18. Weiss, T.; Schiele, B.; Dietmayer, K. Robust Driving Path Detection in Urban and Highway Scenarios Using a Laser Scanner and Online Occupancy Grids. In Proceedings of the IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 184–189.

19. Homm, F.; Kaempchen, N.; Ota, J.; Burschka, D. Efficient occupancy grid computation on the GPU with lidar and radar for road boundary detection. In Proceedings of the IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 1006–1013.

20. An, J.; Choi, B.; Sim, K.B.; Kim, E. Novel Intersection Type Recognition for Autonomous Vehicles Using a Multi-Layer Laser Scanner. Sensors 2016, 16, 1123.

21. Jungnickel, R.; Michael, K.; Korf, F. Efficient Automotive Grid Maps using a Sensor Ray based Refinement Process. In Proceedings of the IEEE Intelligent Vehicles Symposium, Gothenburg, Sweden, 19–22 June 2016.

22. Eraqi, H.M.; Honer, J.; Zuther, S. Static Free Space Detection with Laser Scanner using Occupancy Grid Maps. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018.

23. Wang, C.C.; Thorpe, C.; Thrun, S. Online simultaneous localization and mapping with detection and tracking of moving objects: Theory and results from a ground vehicle in crowded urban areas. In Proceedings of the IEEE International Conference on Robotics and Automation, Taipei, Taiwan, 14–19 September 2003; Volume 1, pp. 842–849.

24. Wang, C.C.; Thorpe, C.; Thrun, S.; Durrant-Whyte, H. Simultaneous Localization, Mapping and Moving Object Tracking. Int. J. Robot. Res. 2007, 26, 889–916.

25. Vu, T.D.; Aycard, O.; Appenrodt, N. Online Localization and Mapping with Moving Object Tracking in Dynamic Outdoor Environments. In Proceedings of the IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 190–195.

26. Vu, T.D.; Burlet, J.; Aycard, O. Grid-based localization and local mapping with moving object detection and tracking. Inf. Fusion 2011, 12, 58–69.

27. Steyer, S.; Tanzmeister, G.; Wollherr, D. Object tracking based on evidential dynamic occupancy grids in urban environments. In Proceedings of the IEEE Intelligent Vehicles Symposium, Los Angeles, CA, USA, 11–14 June 2017; pp. 1064–1070.

28. Hoermann, S.; Bach, M.; Dietmayer, K. Dynamic Occupancy Grid Prediction for Urban Autonomous Driving: A Deep Learning Approach with Fully Automatic Labeling. arXiv 2017, arXiv:1705.08781v2.

29. Gies, F.; Danzer, A.; Dietmayer, K. Environment Perception Framework Fusing Multi-Object Tracking, Dynamic Occupancy Grid Maps and Digital Maps. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Maui, HI, USA, 4–7 November 2018; pp. 3859–3865.

30. Hoermann, S.; Henzler, P.; Bach, M.; Dietmayer, K. Object Detection on Dynamic Occupancy Grid Maps Using Deep Learning and Automatic Label Generation. In Proceedings of the IEEE Intelligent Vehicles Symposium, Changshu, China, 26–30 June 2018; pp. 190–195.

31. Papadakis, P. Terrain traversability analysis methods for unmanned ground vehicles: A survey. Eng. Appl. Artif. Intell. 2013, 26, 1373–1385.

32. Yang, S.; Xiang, Z.; Wu, J.; Wang, X.; Sun, H.; Xin, J.; Zheng, N. Efficient Rectangle Fitting of Sparse Laser Data for Robust On-Road Object Detection. In Proceedings of the IEEE Intelligent Vehicles Symposium, Suzhou, China, 26–30 June 2018.

33. Jiang, P.; Osteen, P.; Wigness, M.; Saripalli, S. RELLIS-3D Dataset: Data, Benchmarks and Analysis. arXiv 2020, arXiv:2011.12954.

34. Viswanath, K.; Singh, K.; Jiang, P.; Sujit, P.B.; Saripalli, S. OFFSEG: A Semantic Segmentation Framework For Off-Road Driving. arXiv 2021, arXiv:2103.12417.

35. Palazzo, S.; Guastella, D.C.; Cantelli, L.; Spadaro, P.; Rundo, F.; Muscato, G.; Giordano, D.; Spampinato, C. Domain adaptation for outdoor robot traversability estimation from RGB data with safety-preserving loss. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2020; pp. 10014–10021.

36. Hosseinpoor, S.; Torresen, J.; Mantelli, M.; Pitto, D.; Kolberg, M.; Maffei, R.; Prestes, E. Traversability Analysis by Semantic Terrain Segmentation for Mobile Robots. In Proceedings of the IEEE International Conference on Automation Science and Engineering, Lyon, France, 23–27 August 2021; IEEE Computer Society: Washington, DC, USA, 2021.

37. Leung, T.H.Y.; Ignatyev, D.; Zolotas, A. Hybrid Terrain Traversability Analysis in Off-road Environments. In Proceedings of the IEEE International Conference on Robotics and Automation, Philadelphia, PA, USA, 23–27 May 2022; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2022; pp. 50–56.

38. Sock, J.; Kim, J.; Min, J.; Kwak, K. Probabilistic traversability map generation using 3D-LIDAR and camera. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2016.

39. Ahtiainen, J.; Stoyanov, T.; Saarinen, J. Normal Distributions Transform Traversability Maps: LIDAR-Only Approach for Traversability Mapping in Outdoor Environments. J. Field Robot. 2017, 34, 600–621.

40. Martínez, J.L.; Morales, J.; Sánchez, M.; Morán, M.; Reina, A.J.; Fernández-Lozano, J.J. Reactive navigation on natural environments by continuous classification of ground traversability. Sensors 2020, 20, 6423.

41. An, J. Traversable Region Detection Method in rough terrain using 3D Laser Scanner. J. Korean Inst. Next Gener. Comput. 2022, 18, 147–158.

42. Lee, H.; Hong, S.; Kim, E. Probabilistic background subtraction in a video-based recognition system. KSII Trans. Internet Inf. Syst. 2011, 5, 782–804.

43. Kim, G.; Kim, A. Scan Context: Egocentric Spatial Descriptor for Place Recognition Within 3D Point Cloud Map. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; IEEE: New York, NY, USA, 2018; pp. 4802–4809.

44. Thrun, S. The Graph SLAM Algorithm with Applications to Large-Scale Mapping of Urban Structures. Int. J. Robot. Res. 2006, 25, 403–429.

45. Douillard, B.; Underwood, J.; Kuntz, N.; Vlaskine, V.; Quadros, A.; Morton, P.; Frenkel, A. On the segmentation of 3D lidar point clouds. In Proceedings of the IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2798–2805.

46. Lai, K.; Fox, D. Object recognition in 3D point clouds using web data and domain adaptation. Int. J. Robot. Res. 2010, 29, 1019–1037.

47. Hoover, A.; Jean-Baptiste, G.; Jiang, X.; Flynn, P.J.; Bunke, H.; Goldgof, D.B.; Bowyer, K.; Eggert, D.W.; Fitzgibbon, A.; Fisher, R.B. An Experimental Comparison of Range Image Segmentation Algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 673–689.

48. Leonard, J.; How, J.; Teller, S.; Berger, M.; Campbell, S.; Fiore, G.; Fletcher, L.; Frazzoli, E.; Huang, A.; Karaman, S.; et al. A perception-driven autonomous urban vehicle. J. Field Robot. 2009, 56, 163–230.

49. Savitzky, A.; Golay, M.J. Smoothing and Differentiation of Data by Simplified Least Squares Procedures. Anal. Chem. 1964, 36, 1627–1639.

| Method | Route A IoU | Route A Dice | Route B IoU | Route B Dice | Route C IoU | Route C Dice |
|---|---|---|---|---|---|---|
| Elevation Map [38] | 0.5090 | 0.6726 | 0.2004 | 0.3291 | 0.1610 | 0.2765 |
| Range Image [13] | 0.5617 | 0.7165 | 0.2069 | 0.3399 | 0.2997 | 0.4563 |
| Detection only [41] | 0.6509 | 0.7870 | 0.2773 | 0.4259 | 0.4816 | 0.6461 |
| Proposed method | 0.6701 | 0.7971 | 0.2871 | 0.4269 | 0.4826 | 0.6471 |
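The IoU and Dice scores in the table can be computed from a predicted and a ground-truth traversability mask. The sketch below uses the standard definitions, IoU = |P ∩ G| / |P ∪ G| and Dice = 2|P ∩ G| / (|P| + |G|). Whether the paper evaluates them over range-image pixels or another representation is not stated in this excerpt, and the function name `iou_dice` and the empty-mask convention are assumptions.

```python
import numpy as np


def iou_dice(pred, gt):
    """IoU and Dice between two boolean traversability masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    inter = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    total = pred.sum() + gt.sum()
    # Convention (assumption): two empty masks count as a perfect match.
    iou = inter / union if union else 1.0
    dice = 2.0 * inter / total if total else 1.0
    return float(iou), float(dice)


iou, dice = iou_dice([1, 1, 0, 0], [1, 0, 1, 0])
# iou is 1/3 and dice is 0.5, which matches 2 * iou / (1 + iou)
```

For a single mask pair, Dice = 2·IoU / (1 + IoU), so both scores rank methods identically on that pair; values aggregated over many frames need not satisfy this identity exactly.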

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


An, J. Traversable Region Detection and Tracking for a Sparse 3D Laser Scanner for Off-Road Environments Using Range Images. Sensors 2023, 23, 5898. https://doi.org/10.3390/s23135898


Chicago/Turabian Style: An, Jhonghyun. 2023. "Traversable Region Detection and Tracking for a Sparse 3D Laser Scanner for Off-Road Environments Using Range Images" Sensors 23, no. 13: 5898. https://doi.org/10.3390/s23135898