A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT

Abstract

1. Introduction

- We present a novel multimodal biometric recognition model, AMBR. By fusing face and voice features, AMBR collects and condenses the crucial information from each modality and shares it with the other modality, which improves person recognition performance.

- We develop novel attention-based feature extraction approaches for each modality. They not only improve unimodal recognition accuracy but also extract effective features from each modality for the multimodal fusion stage.

- Trained with federated learning (FL), our model addresses the issue of data interoperability when biometric data are collected from different edge devices, and promotes data communication and collaboration while ensuring a higher level of privacy in the IoT.
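The contributions above describe fusing face and voice features so that each modality's most informative content shapes the joint representation. This excerpt does not give AMBR's fusion equations, so the following is only a generic sketch of modality-level attention, not the authors' network: a hypothetical learned scoring vector `w_att` scores each modality's embedding, a softmax turns the scores into weights, and the fused vector is their weighted sum. It assumes both embeddings have already been projected to the same dimension d.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def attention_fuse(face_emb: np.ndarray, voice_emb: np.ndarray, w_att: np.ndarray):
    """Fuse two d-dimensional embeddings with softmax attention over modalities.

    w_att is a hypothetical learned scoring vector of shape (d,); in a trained
    model it would be a parameter, here it is passed in explicitly.
    """
    stacked = np.stack([face_emb, voice_emb])  # (2, d): one row per modality
    scores = stacked @ w_att                   # (2,): relevance score per modality
    weights = softmax(scores)                  # (2,): non-negative, sums to 1
    fused = weights @ stacked                  # (d,): attention-weighted sum
    return fused, weights


face = np.array([1.0, 0.0, 0.5])
voice = np.array([0.0, 1.0, 0.5])

# An uninformative scorer (all zeros) gives both modalities equal weight.
fused, weights = attention_fuse(face, voice, w_att=np.zeros(3))
print(weights, fused)  # [0.5 0.5] [0.5 0.5 0.5]

# A scorer that favours the first feature shifts almost all weight to the face.
fused_f, weights_f = attention_fuse(face, voice, w_att=np.array([10.0, 0.0, 0.0]))
```

Modality-level weighting is the simplest form of attention fusion; richer variants (for example cross-attention between per-frame or per-region features) follow the same score, normalize, and weight pattern.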

2. Related Works

2.1. Multimodal Person Recognition

2.2. Attention Mechanism

2.3. Federated Learning

3. Proposed Model

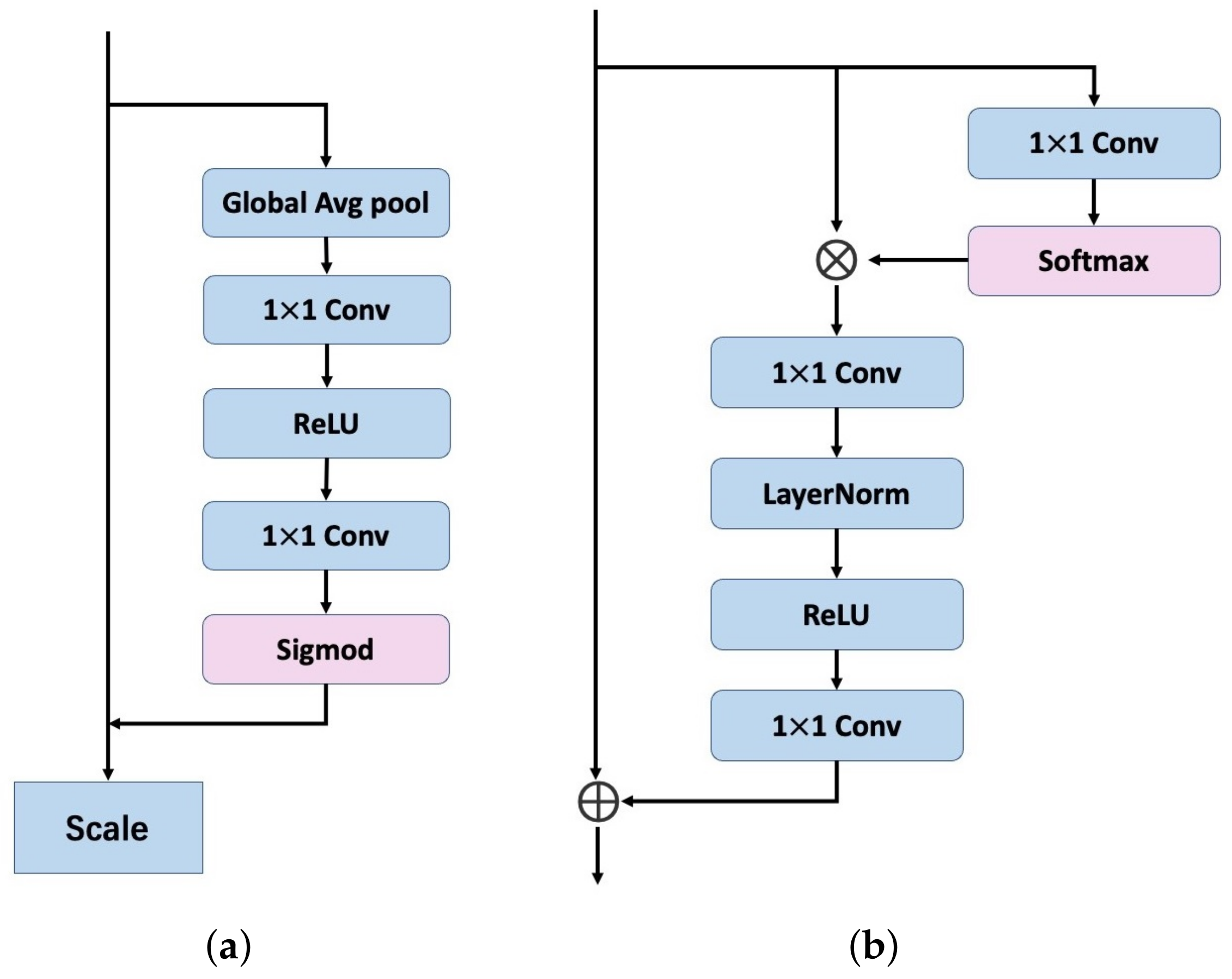

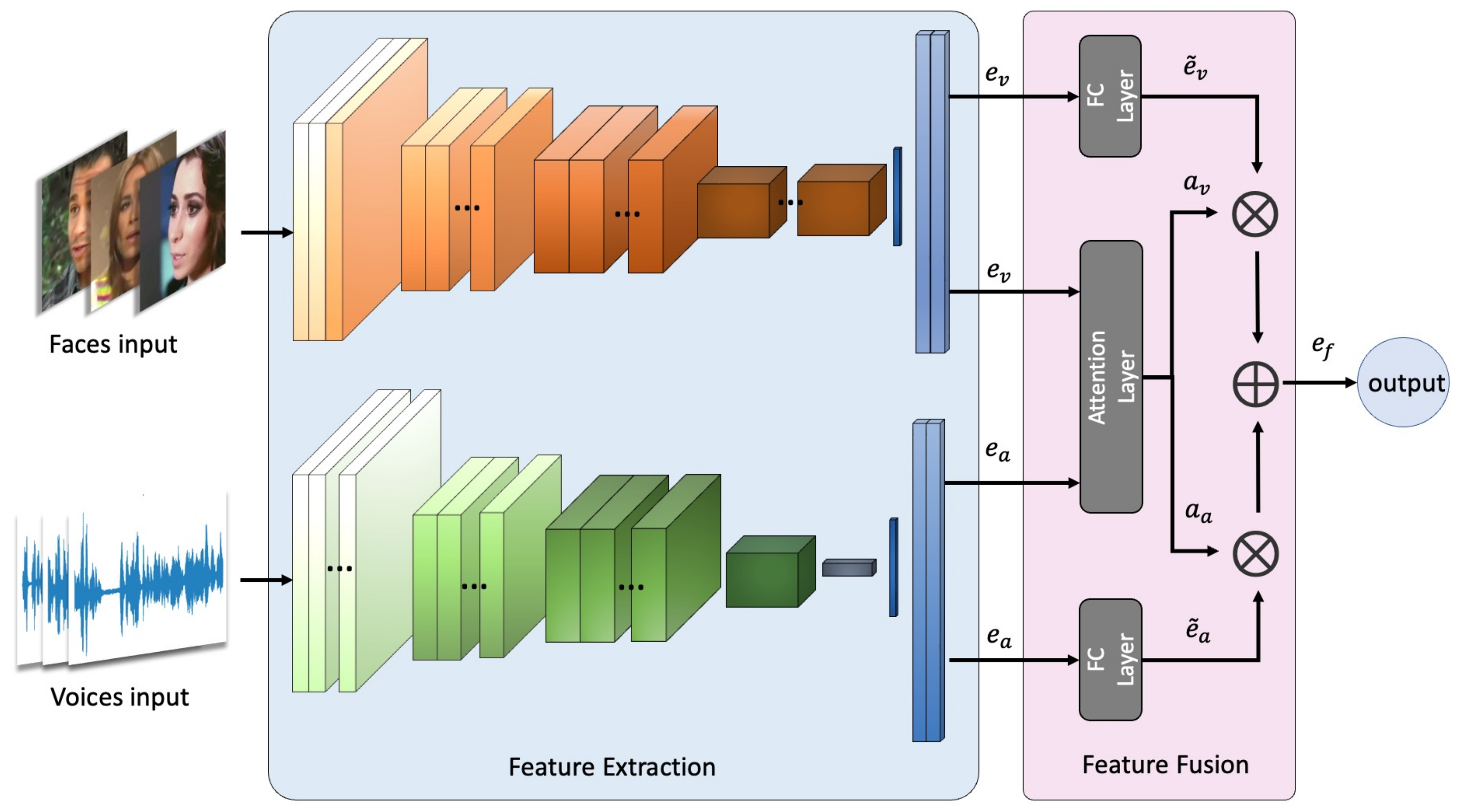

3.1. Feature Extraction Network

3.2. Biometric Modalities Fusion Network

3.3. The Federated AMBR Method

Algorithm 1. The federated AMBR method. The K clients are indexed by k, rounds are indexed by r, n is the number of samples, each client owns an individual local dataset, E is the number of local epochs, B is the local batch size, and w denotes the model parameters.

Server executes:
- initialize w
- for each round r do
  - K ← (random subset of M clients)
  - for each client k ∈ K in parallel do
    - updated parameters of client k ← ClientUpdate(k)
  - end for
- end for

ClientUpdate(k): // run on client k
- get parameters w from the FL server
- for each local epoch i from 1 to E do
  - for each local batch of size B do
    - extract audio embeddings and visual embeddings from the local dataset
    - fuse the audio and visual embeddings through the fusion network
    - fine-tune and update w with the loss function
  - end for
- end for
- return w to the server
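Algorithm 1 follows the client/server pattern of federated averaging [6], but the extracted listing does not show how the server combines the parameters returned by the clients. The sketch below assumes FedAvg-style aggregation, weighting each client's parameters by its sample count, normalized over the clients sampled in that round. To stay self-contained it replaces AMBR's embedding extraction and fusion network with a toy linear model trained by minibatch SGD; `client_update`, `federated_training`, and all hyperparameter values are hypothetical.

```python
import numpy as np


def client_update(w, X, y, epochs, batch_size, lr, rng):
    """ClientUpdate on one client: E local epochs of minibatch SGD.

    Stand-in model: linear least squares instead of AMBR's embedding + fusion network.
    """
    w = w.copy()  # start from the global parameters received from the server
    n = len(X)
    for _ in range(epochs):                      # for each local epoch i = 1..E
        idx = rng.permutation(n)
        for start in range(0, n, batch_size):    # for each local batch of size B
            b = idx[start:start + batch_size]
            grad = 2 * X[b].T @ (X[b] @ w - y[b]) / len(b)  # MSE gradient
            w -= lr * grad
    return w


def federated_training(clients, rounds, k_per_round, epochs, batch_size, lr, seed=0):
    """Server loop: sample K of M clients per round, then average their updates."""
    rng = np.random.default_rng(seed)
    w = np.zeros(clients[0][0].shape[1])  # server initializes w
    for _ in range(rounds):
        chosen = rng.choice(len(clients), size=k_per_round, replace=False)
        updates, sizes = [], []
        for k in chosen:  # sequential here; run in parallel on real devices
            X, y = clients[k]
            updates.append(client_update(w, X, y, epochs, batch_size, lr, rng))
            sizes.append(len(X))
        # Assumed FedAvg aggregation: weight each update by its client's sample count.
        w = np.average(np.stack(updates), axis=0, weights=np.asarray(sizes, dtype=float))
    return w


# Synthetic example: M = 5 clients of different sizes share one underlying model.
data_rng = np.random.default_rng(42)
true_w = np.array([2.0, -1.0])
clients = []
for n in [40, 60, 30, 50, 80]:
    X = data_rng.normal(size=(n, 2))
    y = X @ true_w + 0.01 * data_rng.normal(size=n)
    clients.append((X, y))

w = federated_training(clients, rounds=20, k_per_round=3, epochs=2, batch_size=8, lr=0.05)
print(np.round(w, 2))  # should be close to true_w
```

Only parameters leave each client; the raw samples in `clients[k]` are never sent to the server, which is the privacy property the FL setting relies on.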

4. Experiments

4.1. Datasets and Training Details

4.2. Unimodal Biometric Recognition

4.3. Multimodal Biometric Recognition

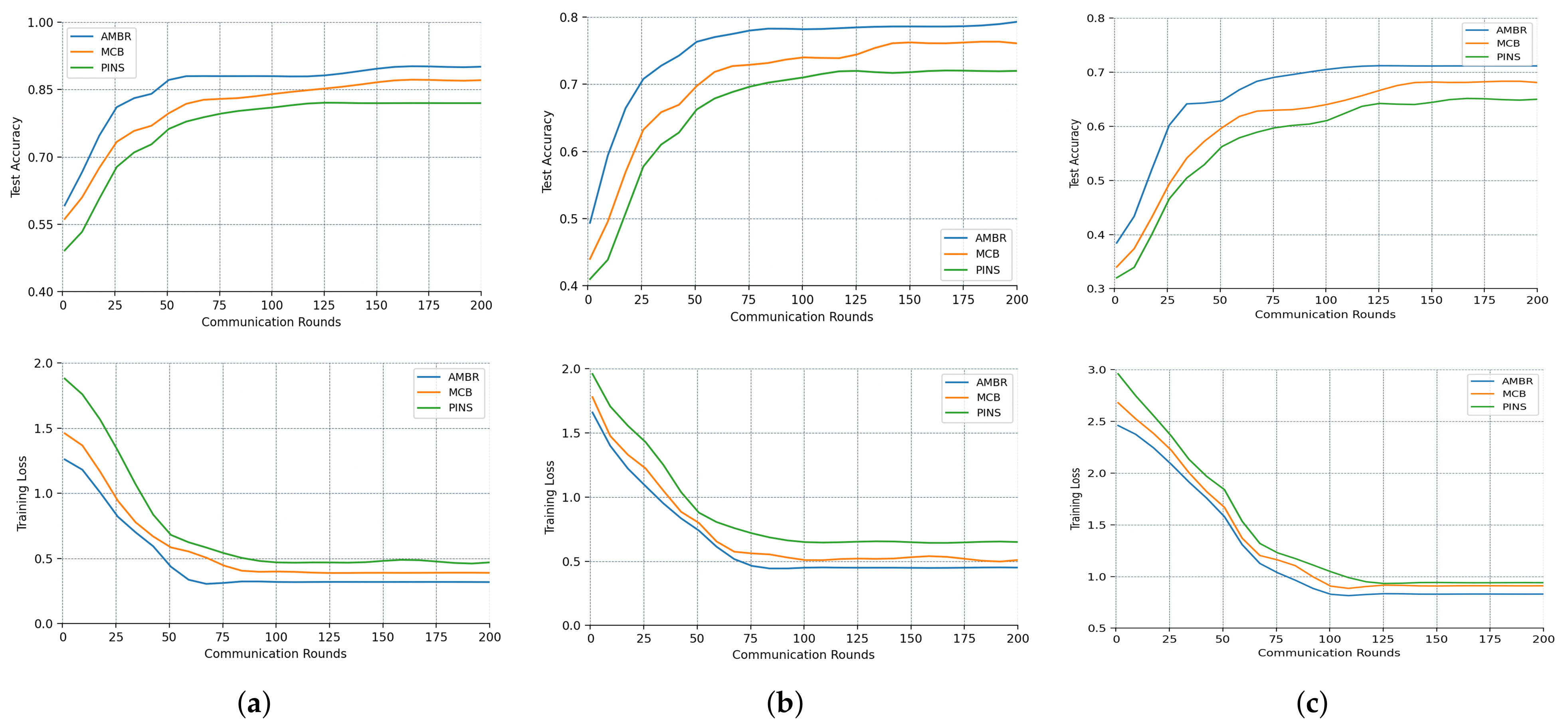

4.4. The Experimental Results in FL Settings

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Obaidat, M.S.; Rana, S.P.; Maitra, T.; Giri, D.; Dutta, S. Biometric security and internet of things (IoT). In Biometric-Based Physical and Cybersecurity Systems; Springer: Berlin/Heidelberg, Germany, 2019; pp. 477–509.

2. Minaee, S.; Abdolrashidi, A.; Su, H.; Bennamoun, M.; Zhang, D. Biometrics recognition using deep learning: A survey. Artif. Intell. Rev. 2023, 56, 8647–8695.

3. Schuiki, J.; Linortner, M.; Wimmer, G.; Uhl, A. Attack detection for finger and palm vein biometrics by fusion of multiple recognition algorithms. IEEE Trans. Biom. Behav. Identity Sci. 2022, 4, 544–555.

4. Shon, S.; Oh, T.H.; Glass, J. Noise-tolerant audio-visual online person verification using an attention-based neural network fusion. In Proceedings of the 44th IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 3995–3999.

5. Alay, N.; Al-Baity, H. A multimodal biometric system for personal verification based on different level fusion of iris and face traits. Biosci. Biotechnol. Res. Commun. 2019, 12, 565–576.

6. McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics (AISTATS), Fort Lauderdale, FL, USA, 20–22 April 2017; pp. 1273–1282.

7. Qin, Z.; Zhao, P.; Zhuang, T.; Deng, F.; Ding, Y.; Chen, D. A survey of identity recognition via data fusion and feature learning. Inf. Fusion 2023, 91, 694–712.

8. Luo, D.; Zou, Y.; Huang, D. Investigation on joint representation learning for robust feature extraction in speech emotion recognition. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 152–156.

9. Micucci, M.; Iula, A. Recognition performance analysis of a multimodal biometric system based on the fusion of 3D ultrasound hand-geometry and palmprint. Sensors 2023, 23, 3653.

10. Sell, G.; Duh, K.; Snyder, D.; Etter, D.; Garcia-Romero, D. Audio-visual person recognition in multimedia data from the IARPA Janus program. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 3031–3035.

11. Nagrani, A.; Albanie, S.; Zisserman, A. Learnable PINs: Cross-modal embeddings for person identity. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 71–88.

12. Fang, Z.; Liu, Z.; Hung, C.C.; Sekhavat, Y.A.; Liu, T.; Wang, X. Learning coordinated emotion representation between voice and face. Appl. Intell. 2023, 53, 14470–14492.

13. Harizi, R.; Walha, R.; Drira, F.; Zaied, M. Convolutional neural network with joint stepwise character/word modeling based system for scene text recognition. Multimed. Tools Appl. 2022, 81, 3091–3106.

14. Ye, L.; Rochan, M.; Liu, Z.; Zhang, X.; Wang, Y. Referring segmentation in images and videos with cross-modal self-attention network. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 3719–3732.

15. Liu, W.; Zhou, L.; Chen, J. Face recognition based on lightweight convolutional neural networks. Information 2021, 12, 191.

16. Tan, Z.; Yang, Y.; Wan, J.; Hang, H.; Guo, G.; Li, S.Z. Attention-based pedestrian attribute analysis. IEEE Trans. Image Process. 2019, 28, 6126–6140.

17. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

18. Savazzi, S.; Nicoli, M.; Rampa, V. Federated learning with cooperating devices: A consensus approach for massive IoT networks. IEEE Internet Things J. 2020, 7, 4641–4654.

19. Tikkinen-Piri, C.; Rohunen, A.; Markkula, J. EU General Data Protection Regulation: Changes and implications for personal data collecting companies. Comput. Law Secur. Rev. 2018, 34, 134–153.

20. Bonawitz, K.; Eichner, H.; Grieskamp, W.; Huba, D.; Ingerman, A.; Ivanov, V.; Kiddon, C.; Konečný, J.; Mazzocchi, S.; McMahan, B.; et al. Towards federated learning at scale: System design. Proc. Mach. Learn. Syst. 2019, 1, 374–388.

21. Li, B.; Ma, S.; Deng, R.; Choo, K.K.R.; Yang, J. Federated anomaly detection on system logs for the internet of things: A customizable and communication-efficient approach. IEEE Trans. Netw. Serv. Manag. 2022, 19, 1705–1716.

22. Li, B.; Jiang, Y.; Pei, Q.; Li, T.; Liu, L.; Lu, R. FEEL: Federated end-to-end learning with non-IID data for vehicular ad hoc networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16728–16740.

23. Zhao, Y.; Zhao, J.; Jiang, L.; Tan, R.; Niyato, D.; Li, Z.; Lyu, L.; Liu, Y. Privacy-preserving blockchain-based federated learning for IoT devices. IEEE Internet Things J. 2020, 8, 1817–1829.

24. Li, B.; Wu, Y.; Song, J.; Lu, R.; Li, T.; Zhao, L. DeepFed: Federated deep learning for intrusion detection in industrial cyber-physical systems. IEEE Trans. Ind. Inform. 2020, 17, 5615–5624.

25. Wu, Q.; He, K.; Chen, X. Personalized federated learning for intelligent IoT applications: A cloud-edge based framework. IEEE Open J. Comput. Soc. 2020, 1, 35–44.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 29th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

27. Okabe, K.; Koshinaka, T.; Shinoda, K. Attentive statistics pooling for deep speaker embedding. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 2252–2256.

28. Heigold, G.; Moreno, I.; Bengio, S.; Shazeer, N. End-to-end text-dependent speaker verification. In Proceedings of the 41st IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 5115–5119.

29. Desplanques, B.; Thienpondt, J.; Demuynck, K. ECAPA-TDNN: Emphasized channel attention, propagation and aggregation in TDNN based speaker verification. In Proceedings of the 21st Annual Conference of the International Speech Communication Association (INTERSPEECH), Shanghai, China, 25–29 October 2020; pp. 3830–3834.

30. Cao, Y.; Xu, J.; Lin, S.; Wei, F.; Hu, H. GCNet: Non-local networks meet squeeze-excitation networks and beyond. In Proceedings of the 17th IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Seoul, Republic of Korea, 27–28 October 2019; pp. 1971–1980.

31. Nagrani, A.; Chung, J.S.; Zisserman, A. VoxCeleb: A large-scale speaker identification dataset. In Proceedings of the 18th Annual Conference of the International Speech Communication Association (INTERSPEECH), Stockholm, Sweden, 20–24 August 2017; pp. 2616–2620.

32. Chung, J.S.; Nagrani, A.; Zisserman, A. VoxCeleb2: Deep speaker recognition. In Proceedings of the 19th Annual Conference of the International Speech Communication Association (INTERSPEECH), Hyderabad, India, 2–6 September 2018; pp. 1086–1090.

33. Snyder, D.; Chen, G.; Povey, D. MUSAN: A music, speech, and noise corpus. arXiv 2015, arXiv:1510.08484.

34. Deng, J.; Guo, J.; Yang, J.; Xue, N.; Kotsia, I.; Zafeiriou, S. ArcFace: Additive angular margin loss for deep face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 5962–5979.

35. Chung, J.S.; Huh, J.; Mun, S.; Lee, M.; Heo, H.S.; Choe, S.; Ham, C.; Jung, S.; Lee, B.J.; Han, I. In defence of metric learning for speaker recognition. In Proceedings of the 21st Annual Conference of the International Speech Communication Association (INTERSPEECH), Shanghai, China, 25–29 October 2020; pp. 2977–2981.

36. Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. arXiv 2014, arXiv:1405.3531.

37. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

38. Sarı, L.; Singh, K.; Zhou, J.; Torresani, L.; Singhal, N.; Saraf, Y. A multi-view approach to audio-visual speaker verification. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 6194–6198.

39. Fukui, A.; Park, D.H.; Yang, D.; Rohrbach, A.; Darrell, T.; Rohrbach, M. Multimodal compact bilinear pooling for visual question answering and visual grounding. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing (EMNLP), Austin, TX, USA, 1 November 2016; pp. 457–468.

| Model | Architecture | Features | Fusion Strategy |
|---|---|---|---|
| Luo et al. [8] | CNN + RNN | voice and text | Fuse the audio features and handcrafted low-level descriptors through simple vector concatenation. |
| Micucci et al. [9] | CNN | palmprint and hand geometry | Score-level fusion: sum the weighted scores from each modality. |
| Sell et al. [10] | DNN + CNN | face and voice | Convert the output scores of the unimodal verification systems into log-likelihood ratios. |
| PINS [11] | VGG-M | face and voice | Establish a joint embedding between faces and voices. |
| EmoRL-Net [12] | ResNet-18 | face and voice | Project the representations from two fully connected layers into a spherical space. |
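The table lists score-level fusion for Micucci et al. [9], which sums weighted per-modality scores. A minimal sketch of that idea follows. The min-max normalization step and the example weights are assumptions added for illustration (raw scores from different matchers usually live on different scales); neither is taken from [9].

```python
import numpy as np


def min_max_normalize(scores):
    """Rescale one modality's scores to [0, 1] (assumed normalization choice)."""
    scores = np.asarray(scores, dtype=float)
    lo, hi = scores.min(), scores.max()
    if hi == lo:  # constant scores carry no ranking information
        return np.zeros_like(scores)
    return (scores - lo) / (hi - lo)


def weighted_sum_fusion(score_lists, weights):
    """Score-level fusion: normalize each modality's scores, then take a weighted sum."""
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # make the modality weights sum to 1
    normalized = np.stack([min_max_normalize(s) for s in score_lists])  # (modalities, trials)
    return weights @ normalized        # (trials,): one fused score per trial


# Hypothetical matcher outputs for three verification trials.
palmprint = [0.0, 5.0, 10.0]
hand_geometry = [3.0, 2.0, 1.0]
fused = weighted_sum_fusion([palmprint, hand_geometry], weights=[3, 1])
print(fused)  # fused scores for the three trials
```

Because fusion happens after each matcher has produced a score, the matchers can be trained independently; the trade-off is that cross-modal correlations at the feature level are never exploited, which is what feature-level approaches such as PINS [11] target.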

| Test Modality | Method | Vox1_O EER (%) | Vox1_E EER (%) | Vox1_H EER (%) |
|---|---|---|---|---|
| Audio | ECAPA-TDNN | 1.86 | 1.98 | 3.16 |
| Audio | ECAPA-TDNN * | 1.82 | 1.93 | 3.02 |
| Audio | AMBR | 1.72 | 1.76 | 2.80 |
| Audio | AMBR * | 1.65 | 1.73 | 2.71 |
| Visual | ResNet-34 | 1.61 | 1.45 | 2.01 |
| Visual | AMBR | 1.47 | 1.34 | 1.68 |
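The result tables report the equal error rate (EER): the operating point at which the false acceptance rate (FAR) equals the false rejection rate (FRR), given in percent on the VoxCeleb1 trial lists Vox1_O, Vox1_E, and Vox1_H. The excerpt does not say how the authors computed it, so the sketch below uses a common threshold-sweep approximation, assuming higher scores mean "same person". Multiply the result by 100 to compare with the tables.

```python
import numpy as np


def equal_error_rate(genuine, impostor):
    """Approximate EER by sweeping the decision threshold over all observed scores.

    genuine:  scores of same-identity trials (should be accepted)
    impostor: scores of different-identity trials (should be rejected)
    """
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    thresholds = np.sort(np.concatenate([genuine, impostor]))
    best_gap, eer = np.inf, None
    for t in thresholds:
        far = np.mean(impostor >= t)  # fraction of impostors wrongly accepted
        frr = np.mean(genuine < t)    # fraction of genuine trials wrongly rejected
        gap = abs(far - frr)
        if gap < best_gap:            # keep the threshold where FAR and FRR are closest
            best_gap, eer = gap, (far + frr) / 2
    return eer


genuine_scores = [0.9, 0.8, 0.7, 0.4]
impostor_scores = [0.1, 0.2, 0.3, 0.6]
print(100 * equal_error_rate(genuine_scores, impostor_scores))  # 25.0 (percent)
```

With one genuine score (0.4) below one impostor score (0.6), no threshold separates the two sets, and at t = 0.6 one of four impostors is accepted and one of four genuine trials is rejected, giving an EER of 25%. Production toolkits usually interpolate along the ROC curve instead of picking the nearest observed threshold.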

| Test Modality | Method | Vox1_O EER (%) | Vox1_E EER (%) | Vox1_H EER (%) |
|---|---|---|---|---|
| Audio | ResNet-18 | 2.35 | 2.42 | 3.67 |
| Audio | ResNet-34 | 2.01 | 2.10 | 3.24 |
| Audio | VGG-M | 1.96 | 2.04 | 3.26 |
| Audio | AMBR | 1.65 | 1.73 | 2.71 |
| Visual | ResNet-18 | 1.74 | 1.66 | 2.08 |
| Visual | VGG-16 | 1.80 | 1.71 | 2.15 |
| Visual | AMBR | 1.47 | 1.34 | 1.68 |

| Test Modality | Method | Vox1_O EER (%) | Vox1_E EER (%) | Vox1_H EER (%) |
|---|---|---|---|---|
| Audio | AMBR | 1.65 | 1.73 | 2.71 |
| Visual | AMBR | 1.47 | 1.34 | 1.68 |
| Visual + Audio | Sarı et al. [38] | 0.90 | - | - |
| Visual + Audio | MCB [39] | 0.75 | 0.68 | 1.13 |
| Visual + Audio | PINS [11] | 0.79 | 0.50 | 0.91 |
| Visual + Audio | AMBR with SFF | 0.93 | 0.59 | 1.04 |
| Visual + Audio | AMBR with AF | 0.68 | 0.47 | 0.80 |
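To put the multimodal gain in proportion, the relative EER reduction of AMBR with AF over the stronger unimodal AMBR branch (visual) can be computed directly from the EER values in the table, as 100 × (baseline − new) / baseline. The helper name `relative_reduction` is hypothetical; the numbers are copied from the table.

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Relative EER reduction in percent: 100 * (baseline - new) / baseline."""
    return 100.0 * (baseline - new) / baseline


# EER (%) on Vox1_O, Vox1_E, Vox1_H, copied from the multimodal results table.
splits = ["Vox1_O", "Vox1_E", "Vox1_H"]
visual_ambr = [1.47, 1.34, 1.68]
ambr_af = [0.68, 0.47, 0.80]

for split, base, new in zip(splits, visual_ambr, ambr_af):
    print(f"{split}: {relative_reduction(base, new):.1f}%")
# Vox1_O: 53.7%
# Vox1_E: 64.9%
# Vox1_H: 52.4%
```

The same helper applied to AMBR with SFF (0.93) versus AMBR with AF (0.68) on Vox1_O gives about 26.9%, which isolates the contribution of the fusion strategy rather than of adding a second modality.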


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, L.; Zhao, Y.; Meng, J.; Zhao, Q. A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT. Sensors 2023, 23, 6006. https://doi.org/10.3390/s23136006

Lin L, Zhao Y, Meng J, Zhao Q. A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT. Sensors. 2023; 23(13):6006. https://doi.org/10.3390/s23136006

Chicago/Turabian Style: Lin, Leyu, Yue Zhao, Jintao Meng, and Qi Zhao. 2023. "A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT." Sensors 23, no. 13: 6006. https://doi.org/10.3390/s23136006

APA Style: Lin, L., Zhao, Y., Meng, J., & Zhao, Q. (2023). A Federated Attention-Based Multimodal Biometric Recognition Approach in IoT. Sensors, 23(13), 6006. https://doi.org/10.3390/s23136006